Automating Backups with Cron Jobs in Linux

Learn how to automate Linux backups using cron jobs with clear, beginner-friendly steps and sample scripts. Schedule, test and secure backups to protect your systems and data.

Automating backups saves time and reduces the chance of data loss by ensuring copies are created regularly without manual intervention. This guide walks you through writing a simple backup script, scheduling it with cron, keeping backups tidy with retention rules, and verifying restores — all with practical examples.

Why automate backups?

Manual backups are error-prone and easy to forget. Automation with cron ensures consistency and lets you focus on other tasks while the system keeps regular snapshots of important data. Automated jobs can also be scripted to include logging, notifications, and retention policies.

Example: quickly create a one-off tar backup to see what automation would do later.

# create a timestamped tarball of /home and save to /backups

sudo tar -czf /backups/home-$(date +%F_%H%M).tar.gz /home

ls -lh /backups

Writing a simple backup script

Start with a small, readable shell script that uses absolute paths, logs output, and returns nonzero on failure. Here is a compact example using rsync for file-level backups (fast and efficient for repeated runs):

#!/bin/bash

set -euo pipefail

# Config

SRC="/home/"

DEST="/backups/home"

LOG="/var/log/backup-home.log"

DATE=$(date +%F_%H%M)

mkdir -p "$DEST"

echo "[$(date +'%F %T')] Starting backup" >> "$LOG"

# Rsync options: archive mode, delete files removed from the source, show overall progress

rsync -a --delete --info=progress2 "$SRC" "$DEST" >> "$LOG" 2>&1

echo "[$(date +'%F %T')] Backup completed: $DEST ($DATE)" >> "$LOG"

Save as /usr/local/bin/backup-home.sh and make executable:

sudo chmod +x /usr/local/bin/backup-home.sh

Key tips:

- Use absolute paths in scripts and cron entries.

- Redirect stdout/stderr to a log for troubleshooting.

- Use set -euo pipefail to fail early on errors.
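
To see why the last tip matters, here is a small sketch of what pipefail and set -e change. The helper names pipeline_status and continues_after_failure are made up for this illustration and are not part of the backup script.

```shell
#!/bin/bash
# Hypothetical helper: print the exit status of `false | true`
# with pipefail switched "on" or "off".
pipeline_status() {
  if [ "$1" = "on" ]; then
    bash -c 'set -o pipefail; false | true; echo "$?"'
  else
    bash -c 'false | true; echo "$?"'
  fi
}

# Hypothetical helper: show whether a script keeps running after a failed command.
# $1 is a prelude to run first: "set -e" or ":" (a no-op).
continues_after_failure() {
  bash -c "$1; false; echo continued" || true
}

pipeline_status off             # prints 0: only the last command in the pipe counts
pipeline_status on              # prints 1: the failing command is reported
continues_after_failure ':'     # prints "continued"
continues_after_failure 'set -e' # prints nothing: the script stopped at `false`
```

Without these options a failed rsync in the middle of a script could go unnoticed and the script would still log "Backup completed".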

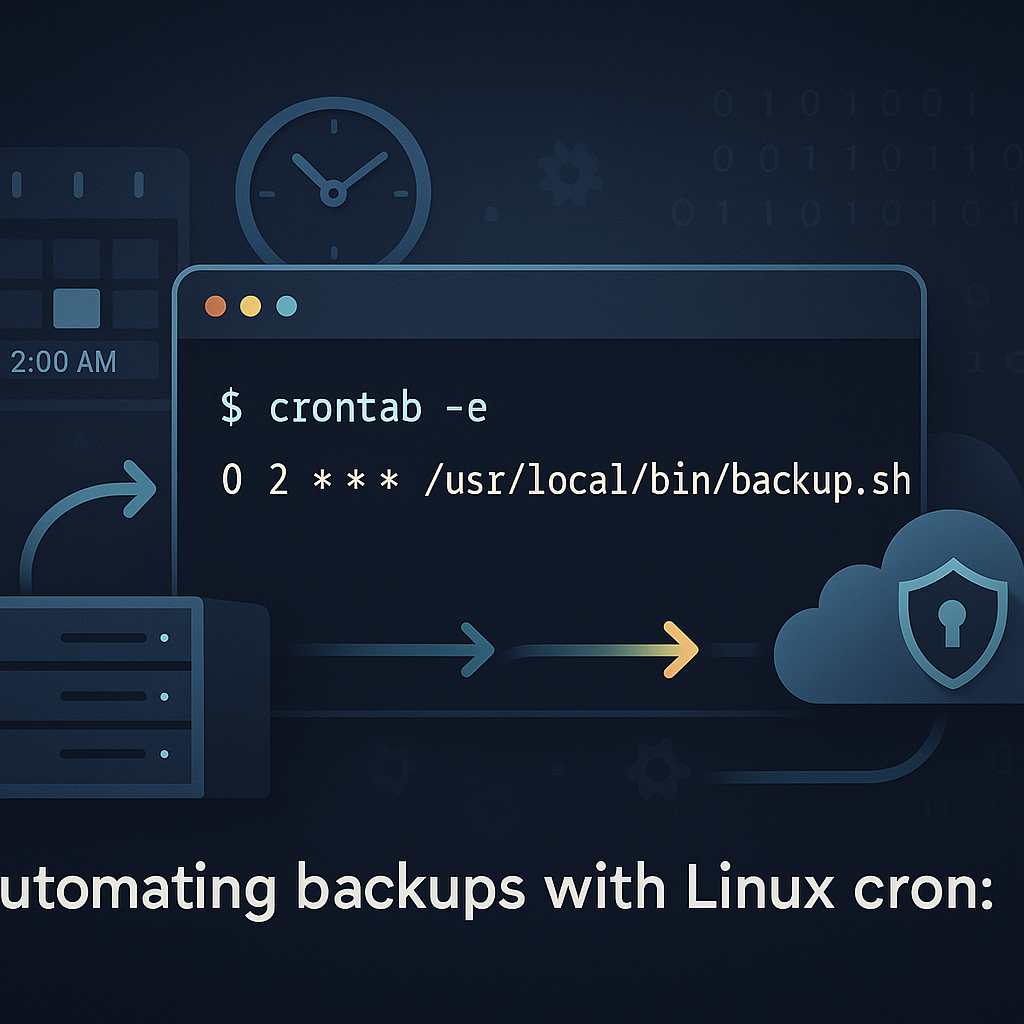

Scheduling with cron

Cron runs commands on a schedule. Edit the crontab for the user that should execute backups (often root for system-wide backups). Example: run the backup script every day at 2:30 AM.

Open the crontab:

sudo crontab -e

Add this line:

30 2 * * * /usr/local/bin/backup-home.sh
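
If the five schedule fields are hard to read at a glance, a small helper can label them. This is only an illustrative sketch: describe_cron_line is a hypothetical function, and it handles plain five-field lines only (no @daily shortcuts, no system crontab user column).

```shell
#!/bin/bash
# Hypothetical helper: label the fields of a five-field crontab line.
describe_cron_line() {
  local minute hour dom month dow command
  # read splits on whitespace; the last variable receives the rest of the line.
  read -r minute hour dom month dow command <<EOF
$1
EOF
  printf 'minute=%s hour=%s day-of-month=%s month=%s day-of-week=%s\n' \
    "$minute" "$hour" "$dom" "$month" "$dow"
  printf 'command=%s\n' "$command"
}

describe_cron_line '30 2 * * * /usr/local/bin/backup-home.sh'
# minute=30 hour=2 day-of-month=* month=* day-of-week=*
# command=/usr/local/bin/backup-home.sh
```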

Quick breakdown of fields: minute, hour, day of month, month, day of week, then the command. Two practical notes follow.

Prevent overlapping runs using flock:

30 2 * * * /usr/bin/flock -n /var/lock/backup-home.lock /usr/local/bin/backup-home.sh
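
The same locking idea can also live inside a script. The sketch below assumes the flock utility from util-linux is installed. run_locked is a hypothetical wrapper, and exit code 75 for "already running" is an arbitrary choice made for this example.

```shell
#!/bin/bash
# Hypothetical wrapper: run a command only if nobody else holds the lock file.
run_locked() {
  local lockfile="$1"
  shift
  (
    # Take an exclusive, non-blocking lock on file descriptor 9.
    flock -n 9 || { echo "another run holds $lockfile, skipping" >&2; exit 75; }
    "$@"
  ) 9>"$lockfile"
}

# Example (not executed here):
# run_locked /var/lock/backup-home.lock /usr/local/bin/backup-home.sh
```

The lock is released automatically when the subshell exits, so a crashed backup does not leave a stale lock behind.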

Cron runs with a minimal PATH; use full paths or set PATH at top of crontab, e.g.:

PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin

30 2 * * * /usr/local/bin/backup-home.sh
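
Before trusting a new cron entry, it can help to run the command in an environment that resembles cron's. The sketch below is an approximation: run_like_cron is a hypothetical helper, and the PATH of /usr/bin:/bin is an assumption about typical cron defaults that may differ on your system.

```shell
#!/bin/bash
# Hypothetical helper: run a command string with a stripped-down environment,
# roughly like cron would (assumed defaults: sh as the shell, a short PATH).
run_like_cron() {
  env -i HOME="${HOME:-/}" SHELL=/bin/sh PATH=/usr/bin:/bin /bin/sh -c "$1"
}

# Variables exported in your interactive shell are not visible to the command.
run_like_cron 'echo "PATH is $PATH"'
```

If the backup script works when started as run_like_cron '/usr/local/bin/backup-home.sh', a missing PATH entry or environment variable is unlikely to be what breaks it under cron.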

Check cron logs to verify execution:

# Debian/Ubuntu

sudo grep CRON /var/log/syslog | tail -n 20

# systemd-based systems may log to the journal instead (the unit is cron on Debian/Ubuntu, crond on RHEL/Fedora)

sudo journalctl -u cron --since "2 days ago"

Managing retention and rotation

Backups can fill a disk quickly. Prune old backups automatically with a simple find command, or use a tool such as restic or duplicity that has retention built in. Example: keep roughly the last 30 days of tarball backups in /backups/tars by deleting anything older:

#!/bin/bash

BACKUP_DIR="/backups/tars"

find "$BACKUP_DIR" -type f -name "*.tar.gz" -mtime +30 -print -delete
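
Because -delete is irreversible, it is worth previewing what would be removed first. The following sketch wraps the same find expression in two hypothetical functions, list_older_than for a dry run and prune_older_than for the real deletion. It assumes GNU find and adds -maxdepth 1, which the snippet above does not use, so only the top level of the directory is touched.

```shell
#!/bin/bash
# Hypothetical helper: dry run, only list the tarballs that would be removed.
list_older_than() {
  local dir="$1" days="$2"
  find "$dir" -maxdepth 1 -type f -name '*.tar.gz' -mtime "+$days" -print
}

# Hypothetical helper: delete the same set of files, printing each one.
prune_older_than() {
  local dir="$1" days="$2"
  find "$dir" -maxdepth 1 -type f -name '*.tar.gz' -mtime "+$days" -print -delete
}

# Example (not executed here):
# list_older_than /backups/tars 30
# prune_older_than /backups/tars 30
```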

If you use timestamped directories, you can rotate by keeping a fixed number of backups:

# Keep the 7 newest entries by mtime (GNU head and xargs; -d '\n' keeps names with spaces intact)

cd /backups/daily || exit 1

ls -1tr | head -n -7 | xargs -r -d '\n' rm -rf --
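
Parsing ls output is fragile, so here is an alternative sketch that passes NUL-separated names through the whole pipeline. It assumes GNU findutils and a reasonably recent GNU coreutils (for the -z options). keep_newest is a hypothetical function name.

```shell
#!/bin/bash
# Hypothetical helper: keep the N most recently modified subdirectories of a
# directory and remove the rest.
keep_newest() {
  local dir="$1" keep="$2"
  # Emit "mtime path" records separated by NUL, sort oldest first,
  # drop the newest N from the list, strip the mtime, delete what is left.
  find "$dir" -mindepth 1 -maxdepth 1 -type d -printf '%T@ %p\0' |
    LC_ALL=C sort -z -n |
    head -z -n "-$keep" |
    cut -z -d ' ' -f 2- |
    xargs -0 -r rm -rf --
}

# Example (not executed here):
# keep_newest /backups/daily 7
```

Only directories are considered, so stray files in the backup directory are left alone.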

For more advanced retention policies and encryption consider using a backup tool:

- restic: deduplicated, encrypted, supports remote storage.

- borgbackup: efficient compression and encryption.

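Example: initialize a local restic repository, take a backup, and apply a retention policy:

export RESTIC_REPOSITORY=/backups/restic

# Placeholder password; for real use, prefer RESTIC_PASSWORD_FILE pointing at a file only root can read

export RESTIC_PASSWORD=strongpassword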

restic init

restic backup /home

restic forget --keep-daily 7 --keep-weekly 4 --prune

Testing and monitoring backups

Automation is only useful if you can restore. Regularly test restores and monitor logs/notifications.

Example: restore a single file from a tarball:

# list files in a tarball

tar -tzf /backups/home-2025-10-01_0230.tar.gz | head

# extract a single file

tar -xzf /backups/home-2025-10-01_0230.tar.gz -C /tmp path/to/file.txt

ls -l /tmp/path/to/file.txt
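
A restore test can also be scripted. The sketch below extracts an archive into a temporary directory and compares it with the live source using diff -r. restore_drill is a hypothetical helper, and it assumes the archive holds a single top-level directory named like the source (tar strips the leading slash, so an archive of /home restores as home/). Files that changed since the backup will show up as differences, so this suits data that is mostly static.

```shell
#!/bin/bash
# Hypothetical helper: extract an archive to a scratch directory and compare
# it with the original source tree. Returns nonzero on any difference.
restore_drill() {
  local archive="$1" src="$2"
  local tmp rc=0
  tmp=$(mktemp -d)
  tar -xzf "$archive" -C "$tmp" || rc=$?
  if [ "$rc" -eq 0 ]; then
    diff -r "$src" "$tmp/$(basename "$src")" >/dev/null || rc=$?
  fi
  rm -rf -- "$tmp"
  return "$rc"
}

# Example (not executed here):
# restore_drill /backups/home-2025-10-01_0230.tar.gz /home
```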

Automated verification ideas:

Record checksums for the backup archives, then verify them later to detect corruption:

sha256sum /backups/home-*.tar.gz > /backups/checksums.sha256

sha256sum -c /backups/checksums.sha256
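
One possible refinement is to store the checksum list with relative file names, so it stays valid if the backup directory is moved or mounted elsewhere. The function names write_checksums and verify_checksums are hypothetical. The sketch assumes GNU sha256sum and at least one .tar.gz file in the directory.

```shell
#!/bin/bash
# Hypothetical helper: record checksums of all tarballs in a directory,
# using names relative to that directory.
write_checksums() {
  ( cd "$1" && sha256sum -- *.tar.gz > checksums.sha256 )
}

# Hypothetical helper: verify the recorded checksums.
# --quiet suppresses the per-file "OK" lines; failures are still reported.
verify_checksums() {
  ( cd "$1" && sha256sum --check --quiet checksums.sha256 )
}

# Example (not executed here):
# write_checksums /backups && verify_checksums /backups
```

verify_checksums returns nonzero if any archive is missing or has changed, which makes it easy to call from a cron job that alerts on failure.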

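Run the backup from a wrapper that checks its exit code and sends an alert on failure (testing the command directly is more reliable than inspecting $? afterwards):

if ! /usr/local/bin/backup-home.sh; then

echo "Backup failed" | mail -s "Backup FAILED" admin@example.com

fi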
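
The same pattern can be generalized so every scheduled job reports failures the same way. In this sketch both run_with_alert and notify are hypothetical names. notify only prints to stderr here and stands in for whatever channel you actually use, such as the mail command above.

```shell
#!/bin/bash
# Placeholder notifier: prints to stderr. Swap in mail, a webhook call, etc.
notify() {
  printf 'ALERT: %s\n' "$1" >&2
}

# Hypothetical wrapper: run a command, alert if it fails, and pass its
# exit code through to the caller.
run_with_alert() {
  local name="$1" rc=0
  shift
  "$@" || rc=$?
  if [ "$rc" -ne 0 ]; then
    notify "$name failed with exit code $rc"
  fi
  return "$rc"
}

# Example (not executed here):
# run_with_alert "home backup" /usr/local/bin/backup-home.sh
```

Passing the exit code through means cron, flock, or a monitoring agent wrapped around the job still sees the failure.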

Monitor health:

- Configure email alerts in cron (MAILTO=you@example.com at the top of the crontab; this needs a working mail setup on the host) or integrate with a monitoring system (Prometheus, Nagios).

Tail the backup log:

sudo tail -n 50 /var/log/backup-home.log

Common Pitfalls

- Not testing restores (you have backups, but they might be corrupted or missing important files). Periodically perform a manual restore to verify.

- Not handling concurrency: a long-running backup can overlap the next scheduled run.

# Use flock to avoid overlapping runs:

/usr/bin/flock -n /var/lock/backup-home.lock /usr/local/bin/backup-home.sh

- Using relative paths in scripts or cron entries, which leads to "command not found" errors or files written to the wrong location.

# Bad: relative path

./backup-home.sh

# Good: absolute path

/usr/local/bin/backup-home.sh

Next Steps

- Schedule a weekly restore drill to verify backups are restorable.

- Replace plain scripts with a dedicated backup tool (restic or borg) for encryption and deduplication.

- Add alerting (email or monitoring) so failures are noticed quickly.
