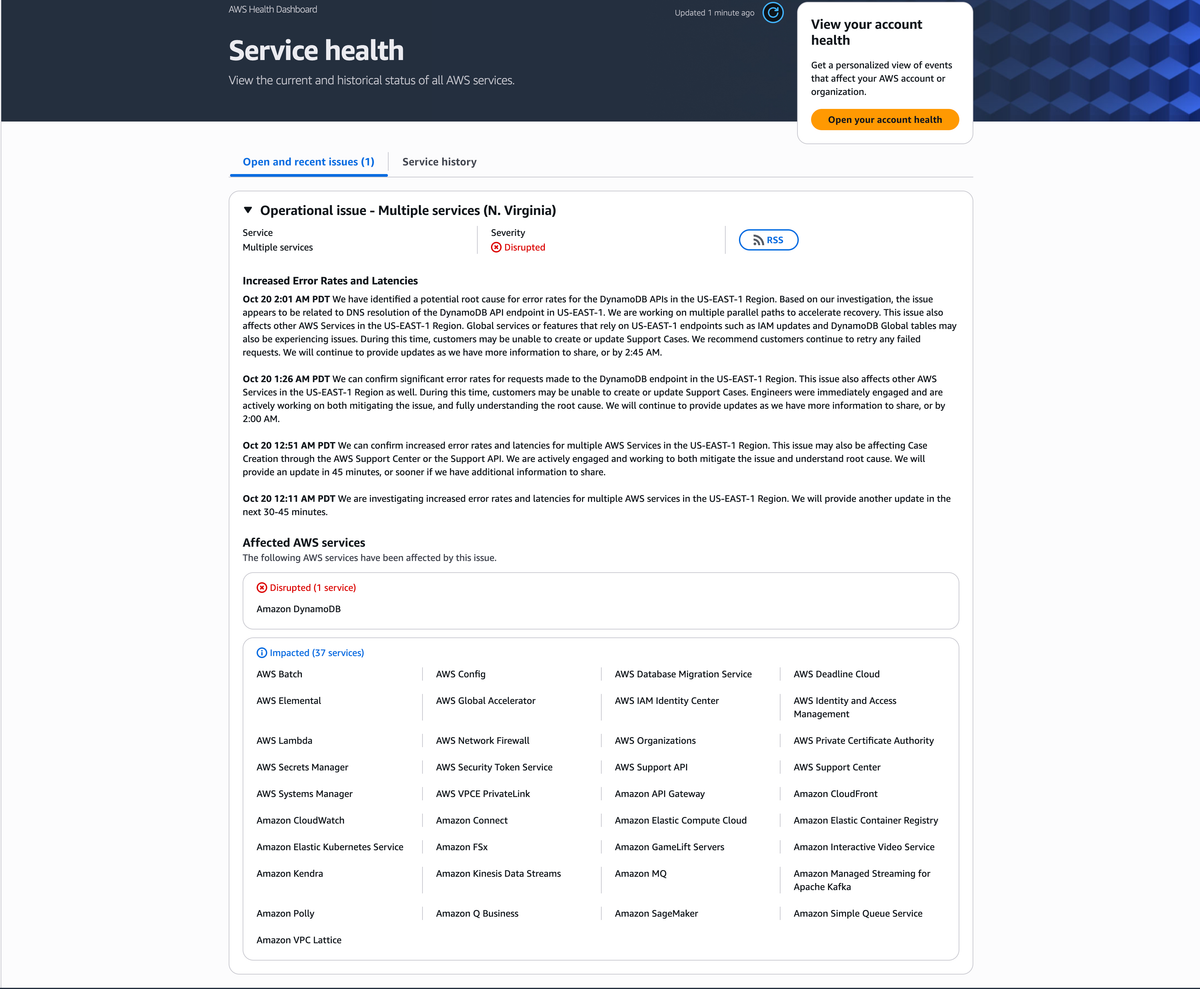

⚠️ AWS US-EAST-1 Outage: Potential Root Cause Identified – DynamoDB Disruption Linked to DNS Resolution Issue

AWS has identified a DNS resolution issue as the likely root cause of the US-EAST-1 (N. Virginia) outage affecting DynamoDB and 37 other AWS services. Learn what’s happening, what’s affected, and how to stay resilient.

Date: October 20, 2025

Region Affected: N. Virginia (US-EAST-1)

Status: 🔴 Disrupted

Amazon Web Services (AWS) has confirmed a major service disruption in the US-EAST-1 (N. Virginia) region affecting Amazon DynamoDB and 37 other AWS services.

As of 2:01 AM PDT, AWS engineers have identified a potential root cause related to DNS resolution for DynamoDB API endpoints.

🧩 Latest Update – October 20, 2025 – 2:01 AM PDT

“We have identified a potential root cause for error rates for the DynamoDB APIs in the US-EAST-1 Region. Based on our investigation, the issue appears to be related to DNS resolution of the DynamoDB API endpoint in US-EAST-1. We are working on multiple parallel paths to accelerate recovery.

This issue also affects other AWS Services in the US-EAST-1 Region.

Global services or features that rely on US-EAST-1 endpoints such as IAM updates and DynamoDB Global tables may also be impacted.”

AWS engineers continue to recommend that customers retry any failed requests and monitor the AWS Health Dashboard for real-time status changes.

Next update expected by 2:45 AM PDT.

⚙️ Affected AWS Services

🔴 Disrupted:

- Amazon DynamoDB

🟡 Impacted (37 services):

- AWS Batch
- AWS Config
- AWS Database Migration Service
- AWS Deadline Cloud
- AWS Elemental
- AWS Global Accelerator
- AWS IAM Identity Center
- AWS Identity and Access Management
- AWS Lambda
- AWS Network Firewall
- AWS Organizations
- AWS Private Certificate Authority
- AWS Secrets Manager
- AWS Security Token Service
- AWS Support API
- AWS Support Center
- AWS Systems Manager
- AWS VPCE PrivateLink
- Amazon API Gateway
- Amazon CloudFront
- Amazon CloudWatch
- Amazon Elastic Compute Cloud (EC2)
- Amazon Elastic Container Registry
- Amazon Elastic Kubernetes Service (EKS)
- Amazon FSx
- Amazon GameLift Servers
- Amazon Interactive Video Service
- Amazon Kinesis Data Streams
- Amazon Managed Streaming for Apache Kafka
- Amazon MQ
- Amazon Polly
- Amazon Q Business
- Amazon SageMaker
- Amazon Simple Queue Service (SQS)
- Amazon VPC Lattice
- Amazon Kendra

🧠 Root Cause: DNS Resolution Issue

This latest update marks the first acknowledgment from AWS of a possible root cause.

According to the report, the outage appears to stem from DNS resolution problems affecting the DynamoDB API endpoint in the N. Virginia region.

DNS resolution issues can trigger widespread instability because:

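- Clients cannot resolve the endpoint's hostname to an IP address, so API requests fail before they ever reach the service.

- Dependent AWS services (such as IAM, CloudWatch, and Lambda) can no longer reach the endpoints they rely on, so their own requests fail or time out.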

- Global services relying on US-EAST-1 (e.g., DynamoDB Global Tables, IAM propagation) may experience cross-region latency or timeouts.
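The cascade described above can be pictured as a dependency graph walked in reverse from the failed component: everything that depends on it, directly or indirectly, is in the blast radius. The sketch below is a minimal illustration under stated assumptions. The service names and edges are hypothetical placeholders, not AWS's actual internal dependency graph, and `blast_radius` is an illustrative helper rather than any AWS tool.

```python
from __future__ import annotations

from collections import deque

# Hypothetical dependency map: service -> services it calls.
# These names and edges are illustrative only; they do NOT describe AWS internals.
DEPENDS_ON: dict[str, set[str]] = {
    "kv-store": {"dns"},
    "auth": {"kv-store"},
    "metrics": {"kv-store"},
    "functions": {"auth", "metrics"},
    "static-site": set(),
}


def blast_radius(failed: str, depends_on: dict[str, set[str]]) -> set[str]:
    """Return every service that transitively depends on `failed`."""
    # Invert the graph so we can walk from a dependency to its dependents.
    dependents: dict[str, set[str]] = {}
    for service, deps in depends_on.items():
        for dep in deps:
            dependents.setdefault(dep, set()).add(service)

    # Breadth-first search outward from the failed component.
    affected: set[str] = set()
    queue = deque([failed])
    while queue:
        current = queue.popleft()
        for svc in dependents.get(current, set()):
            if svc not in affected:
                affected.add(svc)
                queue.append(svc)
    return affected


if __name__ == "__main__":
    # prints ['auth', 'functions', 'kv-store', 'metrics']
    print(sorted(blast_radius("dns", DEPENDS_ON)))
```

In this toy graph only `static-site`, which has no path to `dns`, survives the failure, which is the same shape as a regional DNS fault spreading to services that never call DNS-dependent endpoints directly.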

AWS says it is working on multiple parallel paths to accelerate recovery and restore stability as quickly as possible.

🚨 Timeline of the Outage

| Time (PDT) | Update | Status |
|---|---|---|
| 12:11 AM | AWS begins investigating increased error rates in US-EAST-1 | Investigating |
| 12:26 AM | Error rates confirmed for DynamoDB; status escalated to Disrupted | Disrupted |
| 12:46 AM | 37 services officially listed as impacted | Ongoing |
| 2:01 AM | Potential root cause identified: DNS resolution of the DynamoDB API endpoint | Recovery in progress |

🧭 Recommended Actions for AWS Users

✅ Monitor official updates:

Keep track via the AWS Health Dashboard.

✅ Retry failed requests:

AWS suggests retrying DynamoDB or IAM API calls as services recover gradually.
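How you retry matters as much as whether you retry: immediate, synchronized retries from many clients can pile load onto a service that is only just recovering. Below is a minimal sketch of capped exponential backoff with "full jitter", assuming the wrapped call raises an exception on failure. The name `retry_with_backoff`, its default delays, and the choice of `ConnectionError`/`TimeoutError` as retryable are illustrative assumptions, not values published by AWS. The AWS SDKs also ship their own configurable retry behavior, which is usually the first thing to tune.

```python
from __future__ import annotations

import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def retry_with_backoff(
    call: Callable[[], T],
    max_attempts: int = 5,
    base_delay: float = 0.1,
    max_delay: float = 5.0,
    retryable: tuple[type[BaseException], ...] = (ConnectionError, TimeoutError),
    sleep: Callable[[float], None] = time.sleep,
    rng: random.Random | None = None,
) -> T:
    """Call `call()` until it succeeds or `max_attempts` attempts have failed.

    Between attempts, wait a random time in [0, min(max_delay, base_delay * 2**n)]
    ("full jitter") so that many clients do not retry in lockstep.
    """
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    rng = rng or random.Random()
    for attempt in range(max_attempts):
        try:
            return call()
        except retryable:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the last error
            cap = min(max_delay, base_delay * (2 ** attempt))
            sleep(rng.uniform(0, cap))
    raise AssertionError("unreachable")


if __name__ == "__main__":
    calls = {"n": 0}

    def flaky_operation() -> str:
        # Hypothetical stand-in for a DynamoDB or IAM call that fails twice.
        calls["n"] += 1
        if calls["n"] < 3:
            raise ConnectionError("temporary failure")
        return "ok"

    print(retry_with_backoff(flaky_operation))  # prints "ok" after two retries
```

Non-retryable exceptions propagate immediately, and the `sleep` and `rng` parameters exist so the backoff schedule can be tested without real waiting.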

✅ Avoid new deployments:

Hold off on changes in us-east-1 until the incident is fully resolved.

✅ Review failover mechanisms:

Ensure your applications can reroute requests to secondary regions like us-west-2 or eu-west-1.
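One common shape for this is an ordered list of regions tried in turn, moving to the next region only when the current one is unavailable. A minimal sketch, assuming your data is already replicated to the secondary regions and that `send` is a placeholder for whatever performs the real request. `call_with_failover`, `RegionUnavailable`, and `AllRegionsFailed` are hypothetical names for illustration; in practice, failing over writes also needs clear rules about which region accepts them.

```python
from __future__ import annotations

from typing import Callable, Sequence, TypeVar

T = TypeVar("T")


class RegionUnavailable(Exception):
    """Hypothetical error type signalling that a region should be skipped."""


class AllRegionsFailed(Exception):
    """Raised when every candidate region was unavailable."""

    def __init__(self, errors: dict[str, Exception]) -> None:
        super().__init__(f"all regions failed: {sorted(errors)}")
        self.errors = errors


def call_with_failover(
    send: Callable[[str], T],
    regions: Sequence[str] = ("us-east-1", "us-west-2", "eu-west-1"),
) -> tuple[str, T]:
    """Try `send(region)` in order and return (region, result) from the first success."""
    errors: dict[str, Exception] = {}
    for region in regions:
        try:
            return region, send(region)
        except RegionUnavailable as exc:
            errors[region] = exc  # record the failure and fall through to the next region
    raise AllRegionsFailed(errors)


if __name__ == "__main__":
    def fake_send(region: str) -> str:
        # Simulate the primary region being unreachable.
        if region == "us-east-1":
            raise RegionUnavailable("endpoint did not resolve")
        return f"served from {region}"

    print(call_with_failover(fake_send))  # prints ('us-west-2', 'served from us-west-2')
```

Only `RegionUnavailable` triggers failover; any other exception propagates, so genuine application errors are not silently retried in another region.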

✅ Implement redundancy in DNS:

For critical services, configure fallback endpoints and caching strategies to handle DNS-related issues gracefully.
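One way to implement a DNS caching strategy is a "last-known-good" cache: remember the most recent successful resolution for each hostname and, if a fresh lookup fails, fall back to that answer instead of failing outright. A minimal sketch using Python's standard `socket.getaddrinfo`. The `LastKnownGoodResolver` class and its `max_stale_seconds` limit are hypothetical, and serving stale addresses is a trade-off (they may point at hosts that have since moved), so the staleness window should be short.

```python
from __future__ import annotations

import socket
import time
from typing import Callable

Resolver = Callable[..., list]


class LastKnownGoodResolver:
    """Resolve hostnames, falling back to the last successful answer on DNS failure."""

    def __init__(
        self,
        resolver: Resolver = socket.getaddrinfo,
        max_stale_seconds: float = 3600.0,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._resolver = resolver
        self._max_stale = max_stale_seconds
        self._clock = clock
        # (host, port) -> (time of last successful lookup, addresses)
        self._cache: dict[tuple[str, int], tuple[float, list[str]]] = {}

    def resolve(self, host: str, port: int = 443) -> list[str]:
        key = (host, port)
        try:
            infos = self._resolver(host, port, type=socket.SOCK_STREAM)
            # Each getaddrinfo entry is (family, type, proto, canonname, sockaddr);
            # sockaddr[0] is the IP address string. Deduplicate, keeping order.
            addrs = list(dict.fromkeys(info[4][0] for info in infos))
            self._cache[key] = (self._clock(), addrs)
            return addrs
        except socket.gaierror:
            cached = self._cache.get(key)
            if cached and self._clock() - cached[0] <= self._max_stale:
                return cached[1]  # serve the stale-but-recent answer
            raise  # nothing usable cached: surface the DNS error
```

The injectable `resolver` and `clock` parameters exist mainly so the fallback path can be exercised in tests without waiting for a real DNS outage.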

🌍 Why US-EAST-1 Outages Matter

US-EAST-1 is AWS’s largest and oldest region, hosting vast numbers of applications, APIs, and backend systems.

Because key global services and features (especially IAM, DynamoDB Global Tables, and CloudWatch) rely on US-EAST-1 endpoints, even a regional failure can cause global slowdowns or API delays.

This incident reinforces the need for:

- Multi-region design patterns

- Cross-region data replication

- Infrastructure resilience testing
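Resilience testing can start small: wrap a dependency so that it fails on purpose, then check that the code around it degrades the way you expect. A minimal sketch of DNS fault injection, assuming your code accepts its resolver as a parameter. `with_dns_faults` is a hypothetical helper, and the seeded `random.Random` makes the injected failures reproducible across test runs.

```python
from __future__ import annotations

import random
import socket
from typing import Callable, TypeVar

R = TypeVar("R")


def with_dns_faults(
    resolver: Callable[..., R],
    failure_rate: float,
    rng: random.Random,
) -> Callable[..., R]:
    """Wrap `resolver` so each call raises socket.gaierror with probability `failure_rate`."""
    if not 0.0 <= failure_rate <= 1.0:
        raise ValueError("failure_rate must be between 0 and 1")

    def faulty(*args, **kwargs) -> R:
        # rng.random() is in [0, 1), so a rate of 1.0 always fails and 0.0 never does.
        if rng.random() < failure_rate:
            raise socket.gaierror("injected DNS failure")
        return resolver(*args, **kwargs)

    return faulty


if __name__ == "__main__":
    # Stand-in resolver so the experiment needs no network access.
    def fake_resolver(host: str) -> str:
        return "192.0.2.1"

    flaky = with_dns_faults(fake_resolver, failure_rate=0.3, rng=random.Random(42))
    failures = 0
    for _ in range(1000):
        try:
            flaky("db.example.com")
        except socket.gaierror:
            failures += 1
    print(f"{failures} of 1000 lookups failed")
```

Passing the wrapped resolver into the code under test lets you assert on its behavior (retries, fallback answers, failover) while DNS "fails" on a schedule you control.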

🧭 Conclusion

As AWS works to resolve the DNS-related outage, developers and organizations are reminded of one key truth:

No single cloud region should be a single point of failure.

Multi-region architecture, proactive monitoring, and automated failover are the best defenses against unexpected cloud disruptions.

AWS continues to provide updates every 30–45 minutes. The next official communication is expected by 2:45 AM PDT.

📍 Source: AWS Health Dashboard – US-EAST-1 Operational Issue

📘 Detailed analysis: Dargslan Publishing AWS Outage Report

🔗 Learn More

If you want to understand cloud resilience, AWS infrastructure, and DevOps best practices, visit 👉 dargslan.com, your hub for professional IT learning, technical books, and in-depth tutorials.