Continuous Integration vs Continuous Deployment Explained


Modern software development demands speed, reliability, and precision. Teams face mounting pressure to deliver features faster while maintaining quality standards that users expect. The gap between writing code and delivering value to customers has become a critical battleground for competitive advantage, where delays translate directly into lost opportunities and market share erosion.

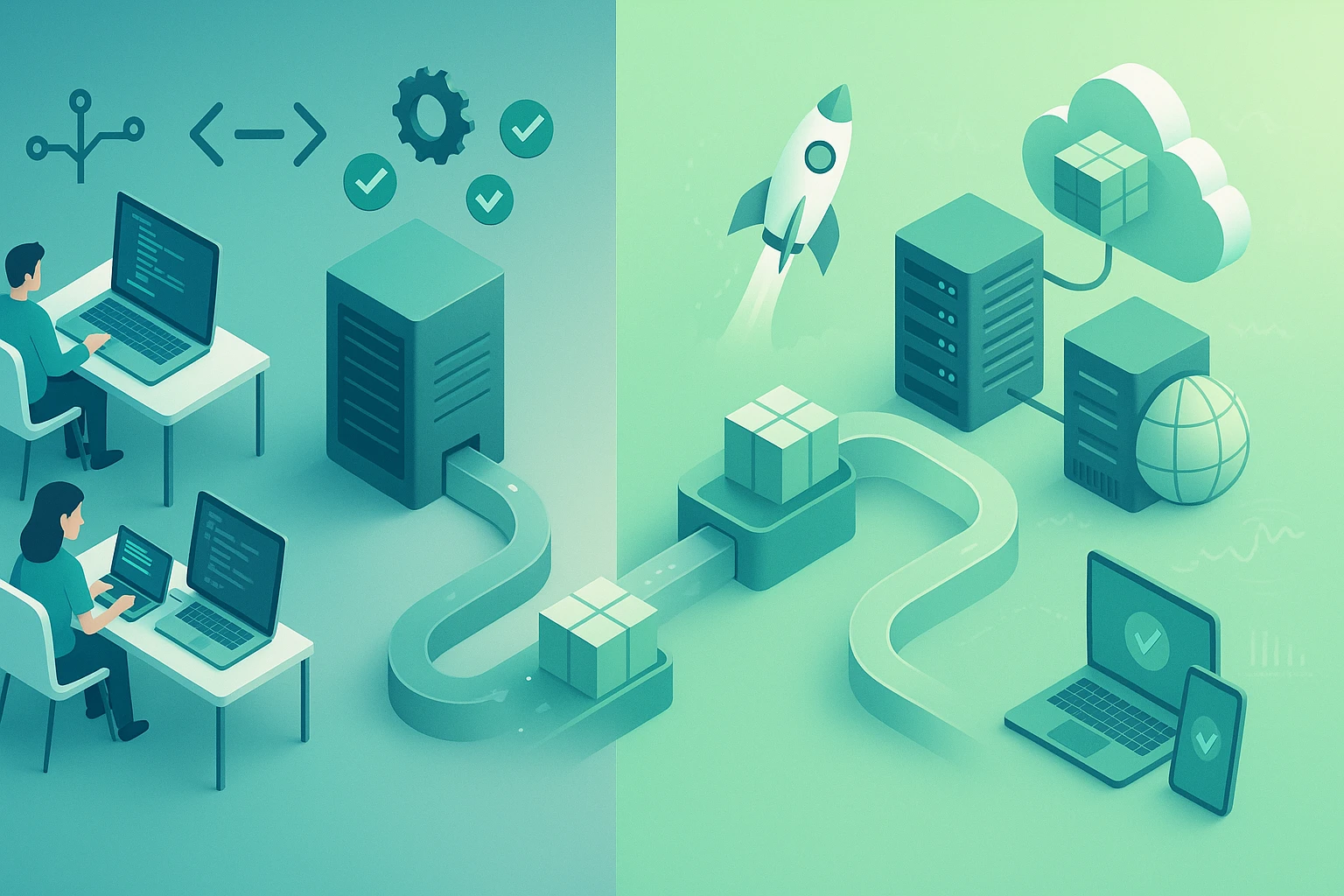

Continuous Integration and Continuous Deployment represent two interconnected yet distinct practices that fundamentally transform how software moves from developer keyboards to production environments. While often mentioned together, each serves unique purposes in the software delivery pipeline, addressing different challenges and requiring specific technical capabilities. Understanding their individual roles and collective impact enables organizations to build robust deployment strategies aligned with business objectives.

This article covers the mechanics, benefits, and practical implementation strategies for both approaches. You'll see how the two practices differ in scope and execution, what infrastructure each one requires, and which organizational contexts favor one over the other. With detailed comparisons and real-world considerations, you'll be able to evaluate and implement the right delivery strategy for your development environment.

Understanding the Foundation of Modern Delivery Practices

The evolution of software delivery has progressed through distinct phases, each addressing limitations of previous approaches. Traditional development cycles involved long periods of isolated work followed by painful integration phases where conflicts emerged and quality degraded. This waterfall-inspired approach created bottlenecks, delayed feedback, and increased risk with every release cycle.

Continuous practices emerged as responses to these fundamental challenges. They represent philosophical shifts in how teams approach code integration, testing, and deployment. Rather than treating these activities as discrete project phases, continuous methodologies embed them into the daily workflow, transforming occasional events into routine operations that happen multiple times per day.

The Integration Challenge and Its Solution

Continuous Integration addresses the fundamental problem of code integration. When multiple developers work simultaneously on different features, their changes eventually need to merge into a unified codebase. The longer these separate development streams remain isolated, the more complex and risky integration becomes. Dependencies shift, interfaces change, and assumptions become invalid, creating integration nightmares that consume days or weeks of developer time.

The practice requires developers to commit code changes to a shared repository frequently—typically multiple times daily. Each commit triggers an automated build process that compiles the code, runs comprehensive test suites, and reports results immediately. This rapid feedback loop identifies integration problems within hours rather than weeks, when context remains fresh and fixes require minimal effort.
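The commit-triggered loop described above can be sketched in a few lines. This is a minimal illustration, not how any particular CI server works: `run_pipeline`, `StageResult`, and the stage functions are hypothetical names, and real stages would shell out to a build tool and a test runner.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class StageResult:
    name: str
    passed: bool
    detail: str = ""


def run_pipeline(stages: List[Tuple[str, Callable[[], None]]]) -> List[StageResult]:
    """Run stages in order and stop at the first failure (fail fast)."""
    results: List[StageResult] = []
    for name, step in stages:
        try:
            step()
            results.append(StageResult(name, True))
        except Exception as exc:  # any exception marks the stage as failed
            results.append(StageResult(name, False, str(exc)))
            break
    return results


def is_green(results: List[StageResult]) -> bool:
    """The build is green only if at least one stage ran and all of them passed."""
    return bool(results) and all(r.passed for r in results)


# Hypothetical stand-ins for real build and test commands.
def build_step() -> None:
    pass


def failing_tests() -> None:
    raise AssertionError("2 tests failed")


results = run_pipeline(
    [("build", build_step), ("test", failing_tests), ("package", build_step)]
)
for r in results:
    print(r.name, "ok" if r.passed else f"FAILED: {r.detail}")
```

Stopping at the first failed stage keeps feedback fast: the later `package` stage never runs, so the report points at the stage that needs attention.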

"The cost of fixing integration issues grows exponentially with time. What takes ten minutes to resolve on the day code is written might consume days of debugging effort three weeks later when context has faded and other changes have layered on top."

Successful implementation demands more than just automation tools. Teams must cultivate disciplines around commit frequency, test coverage, and build maintenance. The shared build environment becomes a source of truth, reflecting the true state of the codebase at any moment. When builds break, fixing them becomes the team's highest priority, preventing the accumulation of technical debt that undermines the entire practice.

Deployment as a Continuous Process

Continuous Deployment extends integration practices to their logical conclusion by automatically releasing every validated change to production environments. This approach eliminates human gatekeeping from the deployment process, relying instead on comprehensive automated testing and monitoring to ensure quality. Every commit that passes the full test suite becomes immediately available to end users without manual intervention.

This practice represents a radical departure from traditional release management, where deployments occurred on fixed schedules after extensive manual validation. The frequency shift, from quarterly or monthly releases to dozens or hundreds of deployments daily, fundamentally changes the risk profile of each individual deployment. Smaller, incremental changes prove easier to validate, debug, and roll back if issues emerge.

Implementation requires exceptional automation maturity and organizational confidence in testing practices. The deployment pipeline must handle building, testing, security scanning, and production release without human involvement. Monitoring systems must detect anomalies immediately and trigger automated rollbacks when metrics deviate from expected patterns. This level of automation demands significant upfront investment but delivers substantial long-term benefits through reduced cycle times and increased deployment confidence.

Distinguishing Characteristics and Operational Differences

While both practices share common foundations in automation and continuous feedback, their scope and implementation requirements differ substantially. Understanding these distinctions helps organizations choose appropriate approaches for their specific contexts and maturity levels.

| Aspect | Continuous Integration | Continuous Deployment |
|---|---|---|
| Primary Focus | Code integration and validation | Automated production release |
| Automation Scope | Build, compile, unit testing, integration testing | Complete pipeline including deployment, monitoring, rollback |
| Human Involvement | Developers review results and fix issues | Minimal to none; automated decision-making |
| Deployment Frequency | Not directly addressed; focuses on integration | Multiple deployments daily, potentially hundreds |
| Risk Management | Early detection of integration problems | Small batch sizes, automated rollback, comprehensive monitoring |
| Testing Requirements | Comprehensive automated test suite | Extremely robust testing including production-like environments |
| Organizational Maturity | Intermediate; requires discipline and tooling | Advanced; demands cultural transformation and exceptional automation |
| Feedback Loop | Minutes to hours for build and test results | Immediate production feedback through monitoring |

Scope and Boundaries

Continuous Integration operates within the development environment, focusing on the health of the codebase itself. Its boundary ends at producing validated, deployable artifacts. Whether those artifacts actually deploy to production remains outside its concern. Teams practicing integration without deployment maintain control over release timing, allowing business considerations to dictate when features become available to users.

Deployment practices extend this pipeline through staging environments and into production systems. The scope encompasses infrastructure provisioning, configuration management, database migrations, and all technical activities required to make code operational for end users. This broader scope introduces additional complexity around environment management, security controls, and operational concerns that integration alone doesn't address.

Decision Points and Control

A critical distinction lies in where human judgment enters the process. Integration practices maintain human oversight at the deployment decision point. Product managers, business stakeholders, or release managers decide when validated code moves to production based on market timing, feature completeness, or strategic considerations. This control point allows coordination with marketing campaigns, customer communications, or other business activities.

"Removing humans from deployment decisions requires absolute confidence in automated quality gates. That confidence comes from extensive testing, gradual rollout capabilities, and monitoring systems that detect issues faster than humans could respond."

Deployment automation eliminates this decision point, treating every successful build as production-ready by definition. This approach demands different thinking about feature readiness. Incomplete features must hide behind feature flags or exist as harmless additions until fully developed. The system assumes that anything merged to the main branch should be live, shifting quality conversations earlier in the development process.
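A feature flag can be as simple as a named switch checked at the point where new behavior begins. The sketch below assumes an in-memory flag store; the `FeatureFlags` class, the `new_discount_engine` flag, and the 10% discount are hypothetical and only illustrate how unfinished code can ship without being active.

```python
from typing import Iterable, List, Optional


class FeatureFlags:
    """In-memory flag store; real systems usually load flags from config or a service."""

    def __init__(self, enabled: Optional[Iterable[str]] = None) -> None:
        self._enabled = set(enabled or [])

    def is_enabled(self, name: str) -> bool:
        return name in self._enabled


def checkout_total(prices: List[float], flags: FeatureFlags) -> float:
    subtotal = sum(prices)
    if flags.is_enabled("new_discount_engine"):
        # New code path: deployed to production, but dormant until the flag is on.
        return round(subtotal * 0.9, 2)
    # Existing behavior stays the default.
    return subtotal


print(checkout_total([10.0, 20.0], FeatureFlags()))
print(checkout_total([10.0, 20.0], FeatureFlags(["new_discount_engine"])))
```

Because the default path is unchanged, merging the new branch to main is safe even while the feature is incomplete; turning it on becomes a configuration change rather than a deployment.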

Infrastructure and Tooling Requirements

Integration implementations require build servers, test automation frameworks, and artifact repositories. Popular tools include Jenkins, GitLab CI, CircleCI, and GitHub Actions for orchestrating builds. Test frameworks vary by language and application type, but the principle remains consistent: automated validation of every code change. Version control systems like Git provide the foundation, tracking changes and triggering automation workflows.

Deployment pipelines demand significantly more sophisticated infrastructure. Container orchestration platforms like Kubernetes manage application deployment and scaling. Infrastructure-as-code tools such as Terraform or CloudFormation provision and configure environments consistently. Service mesh technologies handle traffic routing for gradual rollouts. Monitoring systems like Prometheus, Datadog, or New Relic provide visibility into application health and user experience. The tooling complexity reflects the broader scope and higher automation requirements.

Practical Implementation Strategies and Challenges

Successfully adopting these practices requires more than installing tools and writing automation scripts. Organizations must address cultural, technical, and process dimensions simultaneously. The journey typically progresses through stages, with each building on capabilities developed previously.

Starting with Integration Fundamentals

Most organizations begin their continuous delivery journey by establishing solid integration practices. This foundation proves essential regardless of ultimate deployment goals. The initial focus centers on getting developers to commit code frequently, ideally multiple times per day. This behavioral change often meets resistance from developers accustomed to working in isolation until features reach completion.

✨ Establish a single source of truth by designating a primary branch where all integration occurs. Teams must agree on branching strategies that support frequent integration while managing feature development and bug fixes effectively.

✨ Build automation infrastructure that triggers immediately when code commits occur. The build process should complete quickly—ideally within ten minutes—to provide rapid feedback without disrupting developer flow.

✨ Develop comprehensive test suites that validate functionality without requiring manual intervention. Unit tests verify individual components, integration tests confirm components work together, and end-to-end tests validate complete user workflows.

✨ Create visibility into build status through dashboards, notifications, or physical indicators that make the codebase health obvious to everyone. When builds break, the entire team should know immediately and prioritize fixes.

✨ Cultivate a culture of collective ownership where any team member can and should fix build issues regardless of who introduced them. This shared responsibility prevents bottlenecks and reinforces the importance of maintaining integration health.

"The hardest part of continuous integration isn't the tooling—it's convincing developers that committing incomplete work is acceptable as long as it doesn't break the build. This mindset shift from 'perfect before sharing' to 'share early and often' requires trust and psychological safety."

Advancing Toward Automated Deployment

Organizations with mature integration practices can consider automated deployment, though not all should. The decision depends on application characteristics, regulatory requirements, organizational risk tolerance, and technical capabilities. Consumer-facing web applications with sophisticated monitoring often prove ideal candidates, while systems with strict compliance requirements or limited rollback capabilities may require human oversight.

The transition requires expanding test coverage substantially. A team practicing integration alone might be comfortable with 70-80% code coverage, but deployment automation typically demands more, often 90% or higher, and the percentage alone is not enough: tests must also exercise edge cases, error conditions, and performance characteristics. They must run in production-like environments that mirror real-world conditions, including network latency, database load, and concurrent user behavior.

Infrastructure capabilities become critical. Deployment automation requires blue-green deployment capabilities, canary release patterns, or feature flag systems that allow gradual rollout and quick rollback. These patterns enable releasing changes to small user segments initially, monitoring for issues, and expanding reach only after validation. If problems emerge, rollback occurs automatically within seconds rather than requiring manual intervention.
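The canary pattern described above can be illustrated with two small functions: one that decides whether a given user sees the new version, and one that decides the next rollout step. This is a sketch under stated assumptions; the function names, the hash-bucket approach, and the 1/10/50/100 step schedule are illustrative choices, not a standard.

```python
import hashlib
from typing import Sequence


def in_canary(user_id: str, percent: int) -> bool:
    """Place a user in the canary group deterministically via a hash bucket (0-99)."""
    digest = hashlib.sha256(user_id.encode("utf-8")).hexdigest()
    bucket = int(digest, 16) % 100
    return bucket < percent


def next_rollout_percent(
    current: int, healthy: bool, steps: Sequence[int] = (1, 10, 50, 100)
) -> int:
    """Advance to the next step while healthy; drop to 0 (rollback) otherwise."""
    if not healthy:
        return 0
    for step in steps:
        if step > current:
            return step
    return current  # already at full rollout


percent = 0
for healthy in (True, True, False):
    percent = next_rollout_percent(percent, healthy)
    print("rollout at", percent, "percent")
```

Hashing the user ID keeps each user's experience stable between requests, and because a user's bucket never changes, anyone included at 10% is still included at 50%.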

Monitoring and Observability Requirements

Automated deployment extends quality validation beyond pre-release testing into post-release monitoring. Organizations must instrument applications extensively to detect issues as soon as they occur. Metrics such as error rates, response times, conversion rates, and user behavior provide the signals that automated systems use to judge deployment success or trigger rollbacks.

Effective monitoring requires defining clear success criteria for each deployment. What metrics indicate the change is working correctly? What thresholds, when crossed, suggest problems requiring rollback? These definitions must be specific, measurable, and automated. Vague notions of "working well" don't support automated decision-making.
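Making success criteria "specific, measurable, and automated" might look like the following sketch. The threshold values, the `Thresholds` type, and the choice of error rate plus p95 latency are assumptions for illustration; real criteria depend on the service.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Thresholds:
    max_error_rate: float       # fraction of failed requests, e.g. 0.01 for 1%
    max_p95_latency_ms: float   # 95th percentile response time in milliseconds


def should_rollback(
    errors: int, requests: int, p95_latency_ms: float, limits: Thresholds
) -> bool:
    """Return True when any observed metric crosses its threshold."""
    if requests == 0:
        # Assumption: with no traffic there is no evidence yet, so keep waiting.
        return False
    error_rate = errors / requests
    return (
        error_rate > limits.max_error_rate
        or p95_latency_ms > limits.max_p95_latency_ms
    )


limits = Thresholds(max_error_rate=0.01, max_p95_latency_ms=500.0)
print(should_rollback(errors=5, requests=1000, p95_latency_ms=300.0, limits=limits))
print(should_rollback(errors=20, requests=1000, p95_latency_ms=300.0, limits=limits))
```

Writing the criteria as data forces the team to agree on numbers before the deployment, instead of debating what "working well" means during an incident.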

Observability extends beyond simple metrics to include distributed tracing, log aggregation, and user session replay capabilities. When issues occur, teams need to diagnose root causes quickly. The faster problems are understood, the faster fixes can be developed and deployed. This rapid iteration on fixes becomes a competitive advantage, turning potential disasters into minor hiccups resolved before most users notice.

| Implementation Phase | Key Activities | Success Indicators |
|---|---|---|
| Foundation | Version control adoption, basic build automation, initial test development | All code in version control, builds run automatically, some automated tests exist |
| Integration Maturity | Frequent commits, comprehensive testing, fast build times, broken build protocols | Multiple commits daily per developer, 70%+ test coverage, builds under 10 minutes |
| Deployment Preparation | Infrastructure automation, deployment scripting, staging environments, monitoring setup | One-click deployments to staging, infrastructure defined as code, basic monitoring |
| Automated Deployment | Production automation, gradual rollout patterns, automated rollback, comprehensive monitoring | Multiple production deployments daily, automated rollback on issues, detailed observability |

Transforming Team Dynamics and Business Outcomes

These technical practices catalyze profound organizational changes that extend far beyond engineering teams. The way companies develop, release, and maintain software influences product strategy, customer relationships, and competitive positioning. Understanding these broader impacts helps justify the investment required for implementation.

Accelerating Feedback Cycles

Traditional development approaches create extended delays between writing code and receiving user feedback. Features might take months to reach production, by which time market conditions have shifted, user needs have evolved, or competitive offerings have emerged. This lag prevents rapid learning and course correction, forcing organizations to make large bets based on assumptions rather than evidence.

Continuous practices compress these feedback cycles dramatically. Integration provides immediate technical feedback about code quality and integration issues. Deployment automation extends this rapid feedback to include user behavior, feature adoption, and business metric impact. Product teams can experiment with different approaches, measure results, and iterate within days rather than quarters.

This acceleration enables evidence-based product development where hypotheses are tested quickly with real users. Features that resonate can be expanded; those that don't can be modified or removed before significant resources are invested. The ability to learn quickly becomes a strategic advantage, allowing organizations to adapt to market changes and user needs more effectively than competitors locked into slower release cycles.

Reducing Risk Through Smaller Changes

Counterintuitively, deploying more frequently reduces rather than increases risk. Large releases containing weeks or months of changes introduce substantial uncertainty. The sheer volume of modifications makes predicting behavior difficult and isolating problems challenging. When issues occur, determining which specific change caused the problem requires extensive investigation.

"Every deployment is a risk event. The question isn't whether to take risks, but how to size them appropriately. Deploying one change is inherently less risky than deploying a hundred changes simultaneously."

Frequent deployment of small changes creates manageable risk profiles. Each deployment contains minimal modifications, making behavior more predictable and problems easier to identify. If issues emerge, the limited scope means rollback affects fewer users and fewer features. The blast radius of any single deployment failure remains small, preventing catastrophic outages that plague big-bang releases.

This risk reduction extends to the human element. Teams deploying frequently develop muscle memory around the process, making it routine rather than stressful. Deployment becomes a non-event rather than an all-hands-on-deck crisis requiring late nights and weekend work. This normalization reduces errors caused by stress, fatigue, or unfamiliarity with deployment procedures.

Enabling Organizational Agility

Business agility requires technical agility. When deployment requires weeks of planning and coordination, responding quickly to market opportunities or competitive threats becomes impossible. Organizations find themselves constrained by technical limitations, unable to execute strategies that business conditions demand.

Continuous deployment removes technical constraints from business decision-making. Product managers can respond to customer feedback immediately, marketing teams can launch campaigns knowing supporting features will be ready, and executives can make strategic pivots without waiting for the next release window. The technology organization transforms from a bottleneck into an enabler, supporting rather than constraining business agility.

This capability proves particularly valuable during crises or unexpected opportunities. Security vulnerabilities can be patched within hours rather than waiting for scheduled maintenance windows. Competitive threats can be countered quickly with feature releases or pricing changes. Market opportunities can be seized while they exist rather than after they've passed. The ability to act quickly when circumstances demand creates substantial competitive advantages.

Improving Developer Satisfaction and Productivity

Developer experience improves substantially under continuous practices. The frustration of lengthy integration phases disappears when integration occurs continuously. The anxiety of large deployments evaporates when deployments become routine. The satisfaction of seeing work reach users quickly replaces the disappointment of features languishing in staging environments for months.

Productivity gains emerge from multiple sources. Developers spend less time debugging integration issues because problems are caught immediately. They waste less effort on features that users don't want because feedback arrives quickly. They experience fewer context switches because the time between writing code and seeing it in production shrinks dramatically. These efficiency improvements compound over time, significantly increasing the value delivered per developer.

"There's something deeply satisfying about pushing code in the morning and seeing users benefit from it by afternoon. That tight feedback loop keeps developers engaged and motivated in ways that quarterly release cycles never could."

The practices also support better work-life balance. When deployments are automated and routine, they don't require weekend work or late-night deployment windows. The reduced stress around releases means developers can maintain a sustainable pace rather than experiencing the boom-bust cycle of crunch time before releases followed by recovery periods afterward.

Overcoming Implementation Obstacles and Resistance

Despite clear benefits, organizations encounter substantial challenges when adopting these practices. Understanding common obstacles and mitigation strategies helps teams navigate the transformation more effectively.

Cultural Resistance and Change Management

Technical changes prove easier than cultural transformations. Developers accustomed to working in isolation resist frequent commits of incomplete work. Operations teams comfortable with controlled, infrequent deployments fear the chaos of continuous releases. Management trained to think in terms of quarterly roadmaps struggles with the uncertainty of emergent feature development.

Addressing these concerns requires education, patience, and demonstrating value incrementally. Starting with pilot projects that prove the approach works builds confidence without threatening existing processes. Celebrating early wins and sharing success stories helps overcome skepticism. Providing training and support helps individuals develop new skills and comfort with new workflows.

Leadership support proves essential. When executives understand and champion the transformation, it signals organizational commitment and provides air cover for teams trying new approaches. Without this support, continuous improvement efforts often stall when they encounter resistance or when short-term pressures tempt teams to revert to familiar patterns.

Technical Debt and Legacy Systems

Existing codebases often lack the characteristics that enable continuous practices. Monolithic architectures tightly couple components, making independent deployment difficult. Missing test coverage means automation can't validate changes reliably. Manual deployment procedures embedded in institutional knowledge resist automation. These technical obstacles can seem insurmountable.

Progress requires incremental improvement rather than wholesale rewrites. Teams can begin by adding tests to new code and gradually increasing coverage of existing code. Deployment automation can start with simple scripts that codify manual procedures, then evolve toward sophisticated orchestration. Architectural improvements can happen gradually through strategic refactoring rather than risky big-bang migrations.

The key is accepting that transformation takes time. Organizations shouldn't expect to move from quarterly releases to dozens of daily deployments overnight. Setting realistic intermediate goals—perhaps monthly releases, then bi-weekly, then weekly—allows teams to build capabilities progressively while delivering value throughout the journey.

Regulatory and Compliance Constraints

Certain industries face regulatory requirements that seem incompatible with continuous practices. Financial services, healthcare, and other regulated sectors often have audit requirements, change approval processes, or documentation mandates that appear to demand human oversight of every production change.

However, compliance doesn't necessarily require manual processes. Many regulatory requirements can be satisfied through automated controls, comprehensive audit logging, and automated testing that validates compliance criteria. The key is understanding what regulators actually require versus what organizations assume they require based on traditional practices.

Engaging with compliance teams early in the transformation process helps identify creative solutions. Automated testing can validate regulatory requirements more consistently than manual reviews. Comprehensive logging provides better audit trails than manual documentation. Gradual rollout patterns can serve as de facto approval processes where changes are validated in production with limited exposure before full deployment.

Tooling Complexity and Maintenance

The automation infrastructure supporting continuous practices introduces its own complexity and maintenance burden. Build pipelines break, test suites become flaky, deployment scripts fail in unexpected ways. Teams can find themselves spending more time maintaining automation than they save through its use.

Managing this complexity requires treating automation infrastructure as a first-class product deserving engineering attention. Flaky tests must be fixed or removed rather than ignored. Build scripts need refactoring when they become unwieldy. Monitoring must extend to the automation infrastructure itself so that issues are detected and resolved quickly.
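One way to make flaky tests visible is to look for tests that both passed and failed on the same commit, since the code did not change between those runs. The sketch below assumes run history is available as simple tuples; `find_flaky_tests` and the record format are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Set, Tuple

# Each record: (test name, commit SHA, whether the test passed)
TestRun = Tuple[str, str, bool]


def find_flaky_tests(runs: Iterable[TestRun]) -> List[str]:
    """Flag tests that produced both a pass and a fail for the same commit."""
    outcomes: Dict[Tuple[str, str], Set[bool]] = defaultdict(set)
    for test_name, commit, passed in runs:
        outcomes[(test_name, commit)].add(passed)
    flaky = {name for (name, _commit), seen in outcomes.items() if len(seen) == 2}
    return sorted(flaky)


history = [
    ("test_login", "abc123", True),
    ("test_login", "abc123", False),   # same commit, different result: flaky
    ("test_cart", "abc123", False),
    ("test_cart", "abc123", False),    # consistently failing, not flaky
    ("test_search", "abc123", True),
    ("test_search", "def456", False),  # different commits: likely a real regression
]
print(find_flaky_tests(history))
```

A report like this turns "the build is unreliable" into a concrete list of tests to fix or remove.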

Investing in developer experience pays dividends. When automation works reliably and provides clear feedback, developers embrace it. When it's unreliable or confusing, they work around it, undermining the entire practice. Dedicating resources to maintaining and improving automation infrastructure prevents the gradual degradation that leads to abandonment.

Making Strategic Decisions About Adoption and Implementation

Not every organization should adopt both practices, and even those that do should consider context when determining implementation approaches. Strategic thinking about which practices fit which situations enables more effective adoption.

When Integration Without Deployment Makes Sense

Many organizations benefit from continuous integration while maintaining manual deployment control. This approach suits situations where business timing matters more than technical capability to deploy. Product launches coordinated with marketing campaigns, features tied to specific dates or events, or changes requiring customer communication may warrant human oversight of release timing.

Regulated industries might choose this middle ground, using automation to ensure quality while maintaining human approval for production changes to satisfy compliance requirements. The automated validation provides confidence while preserving audit trails and approval processes that regulators expect.

Organizations with limited monitoring capabilities should also consider stopping short of full deployment automation. Without comprehensive observability, the risks of automated deployment outweigh the benefits. Building monitoring capabilities first creates the foundation for later automation.

Evaluating Readiness for Deployment Automation

Several factors indicate readiness for automated deployment. High test coverage—typically above 80%—provides confidence that automated validation catches most issues. Comprehensive monitoring gives visibility into production behavior and enables quick problem detection. Architectural patterns supporting gradual rollout and quick rollback reduce risk.

Organizational factors matter equally. Teams must trust their testing and monitoring enough to remove human gatekeeping. Product owners must accept that features will reach production as soon as they're merged rather than when business decides. Operations teams must be comfortable with the increased deployment frequency and the shift from preventing problems to detecting and resolving them quickly.

Cultural readiness often proves the limiting factor. Technical capabilities can be built, but organizational trust and comfort with uncertainty develop more slowly. Assessing cultural readiness honestly prevents premature adoption that leads to failure and reinforces skepticism about continuous practices.

Hybrid Approaches and Middle Grounds

Organizations need not choose between extremes. Hybrid approaches combine elements of both practices to suit specific contexts. Automated deployment might apply to non-customer-facing services while customer-facing applications maintain manual release control. Different teams within an organization might operate at different maturity levels based on their specific constraints and capabilities.

Feature flags enable a form of continuous deployment where code reaches production automatically but features remain hidden until explicitly enabled. This approach provides deployment automation benefits while preserving business control over feature availability. It represents a pragmatic middle ground that many organizations find valuable.

Scheduled automation—where deployments occur automatically but only during specific time windows—offers another compromise. This approach automates the deployment process while controlling timing to avoid problematic periods or ensure staff availability for monitoring. It provides many automation benefits while addressing organizational concerns about deployment timing.
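A deployment window check is a small gate placed in front of the automated release step. The sketch below assumes weekday business hours in the pipeline's local time; the specific window (Monday to Friday, 09:00 to 16:00) and the function name are illustrative.

```python
from datetime import datetime, time
from typing import FrozenSet


def in_deploy_window(
    now: datetime,
    start: time = time(9, 0),
    end: time = time(16, 0),
    weekdays: FrozenSet[int] = frozenset(range(0, 5)),  # Monday=0 .. Friday=4
) -> bool:
    """Allow deployment only on permitted weekdays, from start (inclusive) to end (exclusive)."""
    return now.weekday() in weekdays and start <= now.time() < end


# 2024-01-03 is a Wednesday; 2024-01-06 is a Saturday.
print(in_deploy_window(datetime(2024, 1, 3, 10, 30)))
print(in_deploy_window(datetime(2024, 1, 6, 10, 30)))
```

Changes that pass validation outside the window simply wait in the queue, so the pipeline stays automated while staff availability is respected.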

Metrics and Indicators of Effective Implementation

Measuring the impact of continuous practices helps justify investment, identify improvement opportunities, and demonstrate value to stakeholders. Effective metrics focus on outcomes rather than activities, capturing the business value these practices deliver.

Deployment Frequency and Lead Time

Deployment frequency measures how often code reaches production. This metric directly reflects the organization's ability to deliver value to users. Increasing frequency from monthly to weekly to daily indicates growing capability and confidence. However, frequency alone doesn't indicate success—quality and stability matter equally.

Lead time measures the duration between committing code and deploying it to production. Shorter lead times enable faster feedback and more responsive product development. Tracking lead time helps identify bottlenecks in the delivery pipeline, whether in testing, review processes, or deployment procedures. Improvements in lead time indicate increasing efficiency in the delivery process.
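Both metrics can be computed from timestamps that most pipelines already record. The following is a minimal sketch with made-up sample data; the function names and the choice of the median as the summary statistic are assumptions.

```python
from datetime import datetime
from statistics import median
from typing import List, Tuple

# Each change: (time the commit was made, time it reached production)
Change = Tuple[datetime, datetime]


def lead_times_hours(changes: List[Change]) -> List[float]:
    """Lead time per change, in hours, from commit to production deployment."""
    return [
        (deployed - committed).total_seconds() / 3600
        for committed, deployed in changes
    ]


def deployments_per_day(changes: List[Change], period_days: int) -> float:
    """Average number of production deployments per day over the period."""
    return len(changes) / period_days


# Illustrative sample data, not real measurements.
changes = [
    (datetime(2024, 1, 1, 9), datetime(2024, 1, 1, 11)),
    (datetime(2024, 1, 1, 10), datetime(2024, 1, 1, 16)),
    (datetime(2024, 1, 2, 9), datetime(2024, 1, 2, 13)),
]
print("median lead time (h):", median(lead_times_hours(changes)))
print("deployments per day:", deployments_per_day(changes, period_days=2))
```

The median is used here because a single change that sat in review for a week would otherwise dominate the average.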

Change Failure Rate and Recovery Time

Change failure rate tracks the percentage of deployments that cause production problems requiring remediation. This metric balances deployment frequency, preventing teams from optimizing speed at the expense of stability. Acceptable failure rates vary by context, but the goal is maintaining or reducing failure rates even as deployment frequency increases.

Mean time to recovery measures how quickly teams restore service when problems occur. Fast recovery matters more than never failing. Organizations deploying frequently with quick recovery often deliver better overall availability than those deploying rarely but taking days to recover from problems. Tracking recovery time encourages investment in monitoring, rollback capabilities, and incident response processes.
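The two stability metrics reduce to simple arithmetic over deployment and incident records. The sketch below uses hypothetical function names and sample numbers to show the calculation.

```python
from datetime import datetime
from typing import List, Tuple

# Each incident: (time the problem was detected, time service was restored)
Incident = Tuple[datetime, datetime]


def change_failure_rate(total_deployments: int, failed_deployments: int) -> float:
    """Fraction of deployments that caused a production problem."""
    if total_deployments == 0:
        return 0.0
    return failed_deployments / total_deployments


def mean_time_to_recovery_minutes(incidents: List[Incident]) -> float:
    """Average minutes between detection and restoration across incidents."""
    if not incidents:
        return 0.0
    total_seconds = sum(
        (restored - detected).total_seconds() for detected, restored in incidents
    )
    return total_seconds / len(incidents) / 60


# Illustrative sample data, not real measurements.
incidents = [
    (datetime(2024, 1, 1, 10, 0), datetime(2024, 1, 1, 10, 30)),
    (datetime(2024, 1, 2, 14, 0), datetime(2024, 1, 2, 14, 10)),
]
print("change failure rate:", change_failure_rate(40, 2))
print("MTTR (min):", mean_time_to_recovery_minutes(incidents))
```

Tracking both numbers together shows whether higher deployment frequency is being bought at the cost of stability.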

Developer Productivity and Satisfaction

Developer productivity is difficult to measure directly, but proxy metrics provide insight. Time spent on integration issues, deployment activities, or firefighting incidents is waste that continuous practices aim to eliminate. Tracking these proxies over time helps quantify productivity improvements.

Developer satisfaction surveys capture qualitative benefits that numbers miss. Questions about deployment confidence, ability to deliver features quickly, and work-life balance reveal whether practices are achieving their intended effects. Regular surveys track trends over time, showing whether satisfaction improves as practices mature.

Business Impact Metrics

Ultimately, technical practices should deliver business value. Metrics around feature adoption, customer satisfaction, and revenue impact demonstrate this connection. Tracking how quickly features reach users and how quickly the organization can respond to feedback shows business agility improvements.

Customer satisfaction scores, support ticket volumes, and defect rates indicate whether quality is maintained or improved despite increased deployment frequency. These metrics help counter concerns that moving faster means sacrificing quality, showing that well-implemented continuous practices can improve speed and quality together.

Emerging Trends and Future Directions

Continuous practices continue evolving as new technologies and approaches emerge. Understanding these trends helps organizations prepare for future developments and make strategic decisions about current investments.

AI-Assisted Testing and Deployment

Artificial intelligence and machine learning are beginning to enhance continuous practices. AI-powered test generation can propose additional test cases automatically, which may help close the coverage gaps that limit adoption of deployment automation. Monitoring systems based on learned baselines can, in some settings, catch subtle issues that fixed, human-defined thresholds miss while producing fewer false positives.

Predictive analytics help forecast deployment risks based on change characteristics, code complexity, and historical patterns. These predictions inform rollout strategies, suggesting more cautious approaches for higher-risk changes while enabling faster deployment of low-risk modifications. As these capabilities mature, they'll further reduce the human judgment required in deployment processes.

Progressive Delivery and Advanced Rollout Patterns

Deployment strategies are becoming more sophisticated, moving beyond simple blue-green or canary patterns. Progressive delivery encompasses techniques like ring-based deployment where changes roll out to increasingly broad user segments based on automated quality signals. User segmentation allows testing changes with specific customer cohorts before general availability.

These advanced patterns enable more nuanced risk management and faster feedback collection. Organizations can validate changes with internal users first, then beta customers, and finally all users, with each promotion triggered automatically when success criteria are met. This combines deployment automation with controlled rollout and captures the benefits of both.
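Automated progression through rings can be sketched as a loop that promotes a release only while a quality signal stays within bounds. The ring names, the 1% error-rate threshold, and the `error_rate_for` callback are assumptions made for this example; a real system would read the signal from its monitoring stack.

```python
from typing import Callable, List, Sequence

RINGS = ["internal", "beta", "general"]
MAX_ERROR_RATE = 0.01  # assumed success criterion: at most 1% errors


def progressive_rollout(
    rings: Sequence[str],
    error_rate_for: Callable[[str], float],
    max_error_rate: float = MAX_ERROR_RATE,
) -> List[str]:
    """Promote ring by ring; stop at the first ring that misses the criterion.

    Returns the rings that passed, in order.
    """
    promoted: List[str] = []
    for ring in rings:
        if error_rate_for(ring) > max_error_rate:
            break  # halt the rollout; wider rings never receive the change
        promoted.append(ring)
    return promoted
```

If the beta ring reports an elevated error rate, the function returns only `["internal"]` and general availability is never reached.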

Platform Engineering and Developer Experience

Platform engineering has emerged as a discipline focused on building internal platforms that make continuous practices easier for development teams. Rather than each team building its own deployment pipelines and tooling, platform teams create shared capabilities that abstract complexity and enforce best practices.

This trend recognizes that automation infrastructure is complex enough to warrant dedicated engineering attention. Platform teams build self-service capabilities that development teams consume, reducing the expertise required to implement continuous practices. This democratization enables broader adoption while maintaining consistency and reliability across the organization.

Frequently Asked Questions

What's the minimum team size needed to benefit from continuous integration practices?

Even solo developers benefit from continuous integration through automated testing and build processes that catch issues early. However, the collaborative benefits become more apparent with teams of three or more developers where integration challenges naturally arise. The practice scales effectively from small teams to organizations with hundreds of developers working on the same codebase.

Can continuous deployment work for mobile applications given app store review processes?

Traditional continuous deployment faces challenges with mobile apps due to app store approval delays. However, organizations can achieve similar benefits through continuous delivery to beta testing channels, combined with over-the-air update mechanisms for content and configuration changes. Some teams maintain web-based components that deploy continuously while native app updates follow store review processes.

How do feature branches fit with continuous integration practices?

Long-lived feature branches conflict with continuous integration principles by delaying integration until features are complete. Short-lived branches, lasting hours or days rather than weeks, align better with the practice. Trunk-based development, where developers commit directly to the main branch and use feature flags to hide incomplete work, represents the most integrated approach. The key is ensuring all code integrates frequently regardless of branching strategy.
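A feature flag can be as simple as a conditional around the new code path. In this minimal sketch the flag store is an in-process dictionary and the flag name `new_checkout` is hypothetical; real systems usually load flags from configuration or a dedicated service.

```python
# Hypothetical in-process flag store, off by default.
FLAGS = {"new_checkout": False}


def is_enabled(flag: str, flags: dict = FLAGS) -> bool:
    """Unknown flags are treated as disabled."""
    return flags.get(flag, False)


def checkout(cart_total: float, flags: dict = FLAGS) -> str:
    # Incomplete work is merged to main but stays dark until the flag flips.
    if is_enabled("new_checkout", flags):
        return f"new checkout: {cart_total:.2f}"
    return f"legacy checkout: {cart_total:.2f}"
```

The unfinished path integrates with the main branch on every commit, yet users keep seeing the legacy behavior until the flag is turned on.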

What happens when automated deployments fail in production?

Robust continuous deployment implementations include automated rollback triggered by monitoring alerts when deployments degrade key metrics. The system automatically reverts to the previous version within seconds or minutes, often before users notice issues. Teams then investigate the failure, fix the problem, and deploy the correction through the same automated process. This rapid iteration on fixes often resolves issues faster than manual processes could.
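The rollback decision itself can be expressed as a comparison between a baseline metric and the value observed after the deployment. The sketch below assumes a single error-rate metric, a hypothetical tolerance of two percentage points, and an `observe` callback standing in for a monitoring query.

```python
from typing import Callable


def should_roll_back(
    baseline_error_rate: float,
    current_error_rate: float,
    tolerance: float = 0.02,  # assumed acceptable degradation
) -> bool:
    """True when the new version degrades the metric beyond the tolerance."""
    return current_error_rate > baseline_error_rate + tolerance


def deploy_with_rollback(
    previous_version: str,
    new_version: str,
    baseline_error_rate: float,
    observe: Callable[[str], float],
) -> str:
    """Return the version left running after the post-deploy check."""
    current = observe(new_version)  # stand-in for a monitoring query
    if should_roll_back(baseline_error_rate, current):
        return previous_version  # automated revert
    return new_version
```

Production systems typically watch several metrics over an observation window rather than a single reading, but the control flow is the same: deploy, observe, and revert without waiting for a human.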

How do database schema changes work with continuous deployment?

Database changes require careful coordination with application changes to avoid breaking production systems. Techniques like backward-compatible migrations, expand-contract patterns, and feature flags enable deploying schema changes safely. The key is ensuring each deployment can work with both old and new schema versions, allowing gradual migration rather than requiring synchronized changes across all components simultaneously.
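The expand-contract pattern can be illustrated with an in-memory SQLite database. The table and column names below are invented for the example, and the final contract step is described only in a comment because it belongs to a later deployment.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
conn.execute("INSERT INTO users (name) VALUES ('Ada Lovelace')")

# Expand: add the new column as nullable so the old application version,
# which only knows about `name`, keeps working unchanged.
conn.execute("ALTER TABLE users ADD COLUMN display_name TEXT")

# Transition: the new application version writes both columns...
conn.execute(
    "INSERT INTO users (name, display_name) VALUES (?, ?)",
    ("Grace Hopper", "Grace Hopper"),
)
# ...and a backfill copies existing rows into the new column.
conn.execute("UPDATE users SET display_name = name WHERE display_name IS NULL")

# Both application versions can now read consistent data.
old_reader = [r[0] for r in conn.execute("SELECT name FROM users ORDER BY id")]
new_reader = [
    r[0] for r in conn.execute("SELECT display_name FROM users ORDER BY id")
]

# Contract (a later deployment, not run here): remove `name` once no
# running version reads or writes it.
```

At every point in this sequence both the old and the new application code find the columns they expect, which is what allows each step to ship as its own deployment.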

Should every commit automatically deploy to production?

In pure continuous deployment, yes—every commit that passes automated quality gates deploys automatically. However, many organizations implement variations where commits deploy to production but features remain hidden behind flags, or where deployment occurs automatically but only during specific time windows. The right approach depends on organizational context, risk tolerance, and technical capabilities.

How long does it typically take to implement these practices?

Implementation timelines vary dramatically based on starting conditions and target maturity. Establishing basic continuous integration might take weeks for teams with good test coverage and modern tooling, or months for teams with legacy codebases requiring significant refactoring. Full continuous deployment often takes 6 to 18 months of progressive capability building, including infrastructure automation, monitoring implementation, and cultural change alongside the technical work.

What's the biggest mistake organizations make when adopting these practices?

Focusing exclusively on tooling while neglecting culture and process represents the most common mistake. Organizations install automation tools expecting transformation to follow automatically, only to find teams working around the automation or achieving only superficial adoption. Successful implementation requires equal attention to technical capabilities, team behaviors, and organizational culture. The technology enables the practices, but people and process determine whether they succeed.