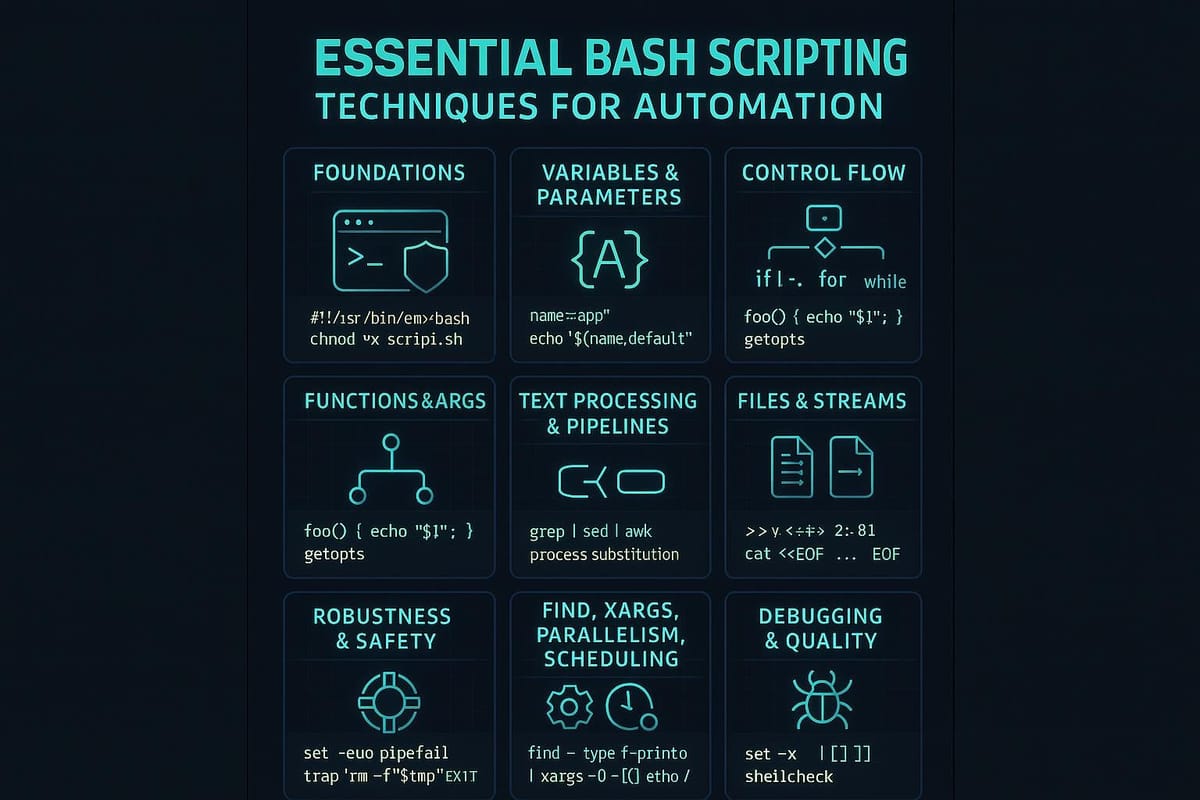

Essential Bash Scripting Techniques for Automation

Learn essential Bash scripting techniques to automate tasks on Linux with clear examples and best practices. Perfect for Linux and DevOps beginners looking to boost efficiency.

Short introduction:

Bash is the glue of many automation tasks on Unix-like systems — lightweight, powerful, and available almost everywhere. This tutorial gives practical, beginner-friendly techniques you can use right away to write safer, more maintainable Bash scripts for automation.

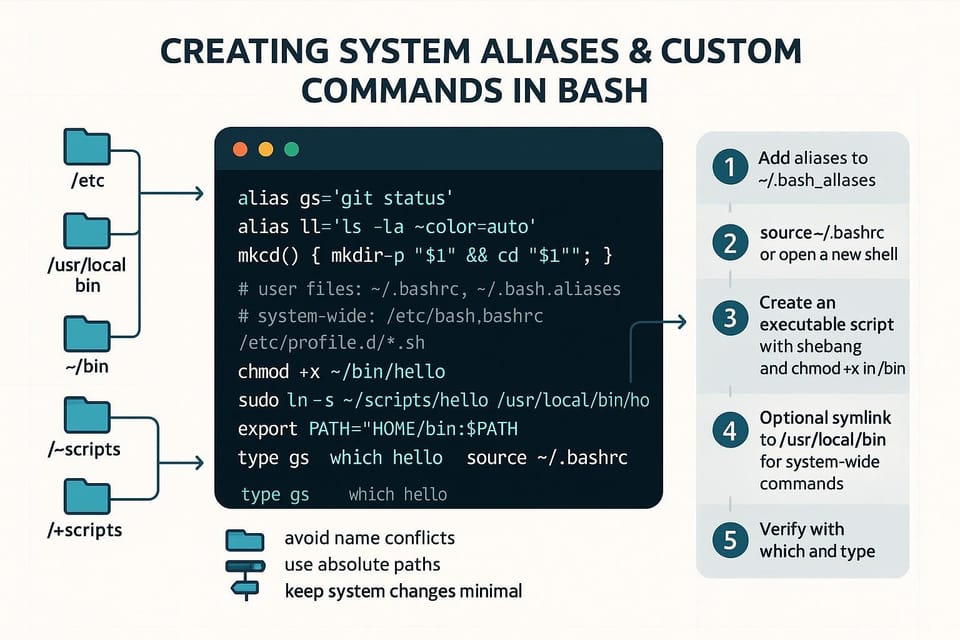

Getting started: script skeleton, shebang, and execution

Every Bash script should start with a shebang so the kernel knows which interpreter to use. Then make the file executable and run it.

Example script (save as hello.sh):

#!/usr/bin/env bash

# Simple script that greets the user

name="${1:-World}" # positional arg or default

echo "Hello, $name!"

Make it executable and run:

chmod +x hello.sh

./hello.sh Alice

# Output: Hello, Alice!

./hello.sh

# Output: Hello, World!

Why this matters:

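- Using #!/usr/bin/env bash makes the script more portable: env looks up bash on the PATH, so the script also works on systems where bash is not installed at /bin/bash.

- The file needs execute permission (chmod +x) before you can run it as ./hello.sh; without it, run the script explicitly with bash hello.sh.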

- Prefer readable, short scripts and delegate heavy logic to functions (see later).
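The advice above can be applied to hello.sh as a small skeleton. Functions are defined first, and a single call to main at the bottom runs them. The names greet and main, and the guard that skips main when the file is sourced, are conventions assumed for this sketch rather than anything Bash requires.

```bash
#!/usr/bin/env bash
# Skeleton: define functions first, then call main once at the bottom.
# "greet" and "main" are hypothetical names chosen for this sketch.

greet() {
  local name="${1:-World}"   # first argument, or "World" if none was given
  printf 'Hello, %s!\n' "$name"
}

main() {
  greet "$@"
}

# Call main only when the file is executed, not when another script sources it.
if [[ "${BASH_SOURCE[0]}" == "$0" ]]; then
  main "$@"
fi
```

Running ./hello.sh Alice still prints Hello, Alice!. Another script can also source the file to reuse greet without triggering main.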

Variables, quoting, and command substitution

Variables are simple in Bash, but quoting rules are crucial for avoiding word-splitting and globbing bugs.

Assignments and usage:

greeting="Hello"

name="Alice"

echo "$greeting, $name" # use double quotes to preserve spaces

count=$(wc -l < file.txt) # command substitution; prefer $(...) over backticks

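shopt -s nullglob # unmatched globs expand to nothing instead of the literal '*.txt' (stays on for the rest of the script)

files=( *.txt ) # array with glob expansion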

echo "Found ${#files[@]} txt files"

Quoting rules in brief:

- Double quotes ("") prevent word splitting and globbing but still allow variable and command substitution.

- Single quotes ('') prevent substitutions entirely.

- Unquoted variable expansions can split into multiple words or be interpreted as globs — usually undesirable.
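To see word splitting in action, the sketch below passes the same value quoted and unquoted to a small helper that prints how many arguments it received. The helper name count_args is hypothetical, and the sketch assumes the default IFS.

```bash
#!/usr/bin/env bash
# count_args is a hypothetical helper: it prints how many arguments it received.
count_args() { printf '%s\n' "$#"; }

file="my report.txt"     # a value that contains a space

count_args $file         # unquoted: split into "my" and "report.txt", prints 2
count_args "$file"       # double-quoted: stays one word, prints 1

# Single quotes keep $HOME literal; double quotes expand it.
printf '%s\n' 'Home is $HOME' "Home is $HOME"
```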

Safe default for undefined variables:

set -u # treat unset variables as an error

name="${1:-default}" # provide fallback to avoid errors
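The fallback form has a companion, ${var:?message}, for values that are truly required. If the variable is unset or empty, the script stops and prints the message. Below is a minimal sketch; require_dest is a hypothetical helper.

```bash
#!/usr/bin/env bash
set -u   # referencing an unset variable is now an error

# ${var:-default}: use a fallback when the variable is unset or empty.
greeting="${GREETING:-Hello}"
printf '%s\n' "$greeting"

# ${var:?message}: abort with the message when the variable is unset or empty.
# require_dest is a hypothetical helper for this sketch.
require_dest() {
  local dest="${1:?destination argument is required}"
  printf 'dest=%s\n' "$dest"
}

require_dest /tmp/out    # prints dest=/tmp/out
```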

Control flow, loops, and functions

Control structures let you express logic cleanly. Break repeated behavior into functions.

If/elif/else:

if [[ -f "$1" ]]; then

echo "$1 exists and is a file"

elif [[ -d "$1" ]]; then

echo "$1 exists and is a directory"

else

echo "$1 does not exist"

fi
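The else branch above reports "does not exist" for anything that is neither a regular file nor a directory. That is not quite right for device files, FIFOs, or sockets. The sketch below adds an -e check and wraps the chain in a function so it can be reused. The name describe_path is hypothetical.

```bash
#!/usr/bin/env bash
# describe_path is a hypothetical helper; it prints one word describing its argument.
describe_path() {
  local p="$1"
  if [[ -f "$p" ]]; then
    echo "file"
  elif [[ -d "$p" ]]; then
    echo "directory"
  elif [[ -e "$p" ]]; then
    echo "other"      # exists but is neither a regular file nor a directory
  else
    echo "missing"
  fi
}

describe_path /           # prints directory
describe_path /dev/null   # prints other (a character device)
```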

Loops:

# For each file passed as args

for f in "$@"; do

echo "Processing: $f"

done

# Read lines safely (IFS= keeps leading/trailing whitespace, -r keeps backslashes)

while IFS= read -r line; do

echo "Line: $line"

done < input.txt
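The read loop above skips a final line that has no trailing newline. This happens because read returns non-zero at end of input, even though it has already filled the variable. The sketch below shows a common fix inside a hypothetical count_nonempty function that reads standard input.

```bash
#!/usr/bin/env bash
# count_nonempty is a hypothetical helper: it prints the number of non-empty lines on stdin.
count_nonempty() {
  local line n=0
  # "|| [[ -n $line ]]" also handles a last line without a trailing newline:
  # read returns non-zero at end of input but still stores the partial line.
  while IFS= read -r line || [[ -n "$line" ]]; do
    [[ -n "$line" ]] && n=$((n + 1))
  done
  printf '%s\n' "$n"
}

printf 'alpha\n\n  beta gamma\ndelta' | count_nonempty   # prints 3
```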

Functions and exit codes:

log() { printf '%s\n' "$*"; }

backup_file() {

local src="$1" dst="$2"

cp -- "$src" "$dst" || return 1 # return non-zero on failure

log "Backed up $src to $dst"

}

if backup_file "a.conf" "a.conf.bak"; then

log "Success"

else

log "Backup failed"

fi

Tips:

- Use local variables inside functions: local foo=...

- Rely on exit codes and use || and && to control flow concisely.
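Functions hand back data on standard output and report success or failure through their exit status. The sketch below shows both, with && and || choosing what runs next. The helpers is_even and to_upper are hypothetical.

```bash
#!/usr/bin/env bash
# is_even and to_upper are hypothetical helpers for this sketch.

is_even() {
  local n="$1"
  (( n % 2 == 0 ))   # arithmetic test: exit status 0 when true, 1 when false
}

to_upper() {
  printf '%s\n' "$1" | tr '[:lower:]' '[:upper:]'   # data goes to stdout
}

is_even 4 && echo "4 is even"   # runs because is_even returned 0
is_even 7 || echo "7 is odd"    # runs because is_even returned 1

result=$(to_upper "done")       # capture a function's output
echo "$result"                  # prints DONE
```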

Essential commands

Below is a handy table of commonly used Bash/Unix commands for automation, with quick examples.

| Command | What it does | Example |
|---|---|---|
| grep | Search text using patterns | grep -i "error" logfile |
| awk | Field processing and reporting | awk -F: '{print $1}' /etc/passwd |
| sed | Stream editing (substitute) | sed 's/foo/bar/g' file |
| xargs | Build and run commands from stdin | find . -name '*.tmp' -print0 \| xargs -0 rm |
| find | Find files recursively | find /var/log -name '*.log' -mtime +7 |
| tee | Copy stdin to a file and to stdout | somecmd \| tee output.log |
| jq | Parse JSON (if installed) | curl ... \| jq '.' |
| cut | Extract columns | cut -d',' -f2 file.csv |

Examples that combine commands:

# Delete .tmp files older than 7 days (preview first)

find . -type f -name '*.tmp' -mtime +7 -print # preview

find . -type f -name '*.tmp' -mtime +7 -print0 | xargs -0 rm -v

Why use these:

- Pipes and small tools let you build powerful one-liners and readable scripts.

- Prefer composability: one tool per job (grep to filter, awk to transform, sed to edit).
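As an example of that composability, the sketch below counts log lines per level. grep filters the lines, awk extracts a field, and sort with uniq -c does the counting. The log format (a level word at the start of each line) and the function name count_levels are assumptions.

```bash
#!/usr/bin/env bash
# count_levels is a hypothetical helper; it reads log lines on stdin.
# Assumed log format: "<LEVEL> <message>", one entry per line.
count_levels() {
  grep -E '^(ERROR|WARN) ' |   # keep only ERROR and WARN lines
    awk '{print $1}' |         # extract the level field
    sort | uniq -c |           # count identical levels (uniq needs sorted input)
    sort -rn                   # most frequent level first
}

printf '%s\n' \
  'INFO service started' \
  'ERROR disk full' \
  'WARN slow response' \
  'ERROR disk full' | count_levels
```

This prints a count of 2 for ERROR and then 1 for WARN. Note that uniq -c pads the counts with leading spaces.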

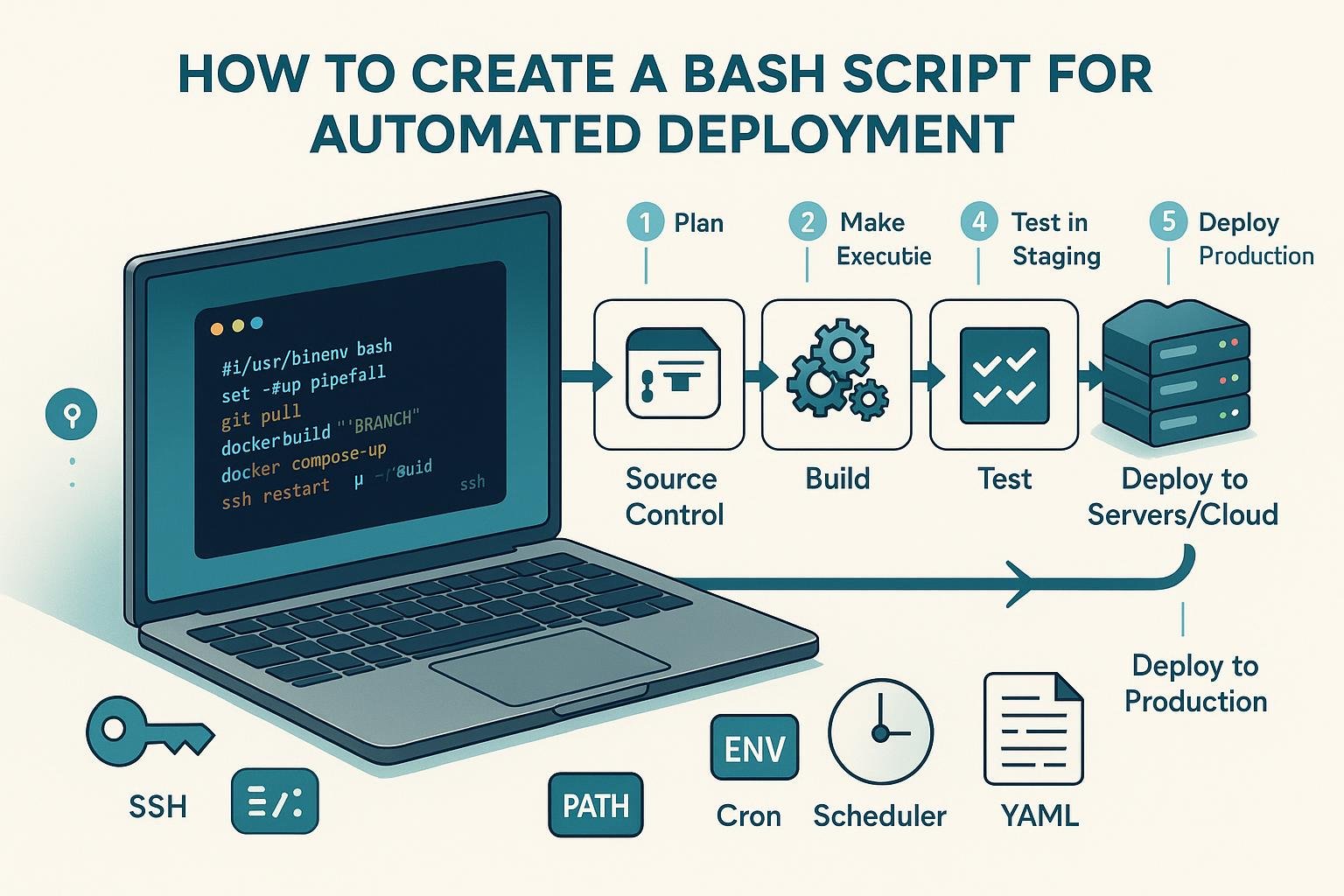

Automation patterns and safe scripting

When automating, safety and observability matter: use strict settings, logging, argument parsing, and scheduled jobs.

Shell safety flags:

#!/usr/bin/env bash

set -euo pipefail

IFS=$'\n\t' # safer default IFS

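- set -e: exit as soon as a command fails. Failures in if/while conditions and in && / || lists (except the last command) do not trigger an exit. Commands that return non-zero on purpose, such as grep finding no match, need a guard like || true.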

- set -u: error on unset variables.

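- set -o pipefail: a pipeline fails if any command in it fails, and its status is that of the rightmost failing command. Without pipefail, only the last command's status counts.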
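The effect of pipefail is easy to see with false | true, which stands in for a failing command piped into one that succeeds. The last lines show the usual guard for a command that fails on purpose under set -e.

```bash
#!/usr/bin/env bash
set +o pipefail
false | true
echo "without pipefail: $?"   # prints 0: only the last command (true) counts

set -o pipefail
false | true
echo "with pipefail: $?"      # prints 1: the failure of false is reported

set -e
# grep -c prints 0 and exits 1 when nothing matches; "|| true" keeps set -e from aborting.
matches=$(printf 'a\nb\n' | grep -c 'z' || true)
echo "matches: $matches"      # prints matches: 0
```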

Argument parsing with getopts:

usage() { echo "Usage: $0 -s source -d dest" >&2; exit 2; }

while getopts ":s:d:h" opt; do

case "$opt" in

s) src="$OPTARG" ;;

d) dst="$OPTARG" ;;

h) usage ;;

*) usage ;;

esac

done

shift $((OPTIND-1))

[ -n "${src:-}" ] || usage

[ -n "${dst:-}" ] || usage
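Wrapping the getopts loop in a function makes it easy to try with different arguments. The sketch below also handles the two special cases that the leading colon in the option string enables: ':' for an option missing its argument, and '?' for an unknown option. The name parse_args is hypothetical, and -h is left out to keep the sketch short.

```bash
#!/usr/bin/env bash
# parse_args is a hypothetical helper; it sets the globals src and dst.
parse_args() {
  local opt OPTIND=1   # local OPTIND lets the function be called more than once
  src="" dst=""
  while getopts ":s:d:" opt; do
    case "$opt" in
      s) src="$OPTARG" ;;
      d) dst="$OPTARG" ;;
      :) echo "Option -$OPTARG requires an argument" >&2; return 2 ;;
      \?) echo "Unknown option: -$OPTARG" >&2; return 2 ;;
    esac
  done
  shift $((OPTIND - 1))   # any remaining operands are now in "$@"
  if [[ -z "$src" || -z "$dst" ]]; then
    echo "Usage: parse_args -s source -d dest" >&2
    return 2
  fi
}

parse_args -s /etc -d /backup && echo "src=$src dst=$dst"
```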

Cron scheduling:

- Edit crontab: crontab -e

- Always redirect stdout and stderr to a log file for scheduled jobs.

- Test the script manually before scheduling it.

Example cron entry (runs at 2:30am daily):

30 2 * * * /usr/local/bin/backup.sh >> /var/log/backup.log 2>&1
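The redirection can also live inside the script itself, so that output is logged no matter how the job is started. The sketch below uses exec for this. LOG_FILE and its temporary-file default are assumptions; a real job would point it at a file such as the /var/log/backup.log used in the cron entry.

```bash
#!/usr/bin/env bash
# LOG_FILE is a hypothetical setting; by default this sketch logs to a fresh temp file.
LOG_FILE="${LOG_FILE:-$(mktemp)}"

# From this line on, stdout and stderr of the whole script are appended to LOG_FILE.
exec >>"$LOG_FILE" 2>&1

echo "job started"
echo "this warning goes to the log too" >&2
```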

Tips:

Cleanup with trap:

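tmpdir=$(mktemp -d)

trap 'rm -rf -- "$tmpdir"' EXIT # runs on normal exit and when set -e aborts the script

trap 'exit 130' INT # Ctrl-C: exit (status 128+2) so the EXIT trap does the cleanup

trap 'exit 143' TERM # kill: exit (status 128+15) so the EXIT trap does the cleanup

# do work using $tmpdir

The EXIT trap cleans up temporary resources whether the script succeeds, fails, or is interrupted. Attaching the cleanup directly to INT, TERM, or ERR is not enough on its own. Bash runs the handler and then carries on, which can delete the directory while the script is still using it.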
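To check that an EXIT trap really cleans up after a failure, the sketch below runs a job in a subshell function, lets it fail, and then checks that the temporary directory is gone. The name run_job and the simulated failure are assumptions for the demo.

```bash
#!/usr/bin/env bash
# run_job is a hypothetical function whose body runs in a subshell (note the parentheses),
# so its EXIT trap fires when the function finishes.
run_job() (
  tmpdir=$(mktemp -d)
  trap 'rm -rf -- "$tmpdir"' EXIT
  echo "$tmpdir"              # report the path so the caller can check it afterwards
  touch "$tmpdir/work.txt"
  exit 1                      # simulated failure; the EXIT trap still runs
)

dir=$(run_job) || echo "job failed"
[[ -e "$dir" ]] || echo "cleaned up: $dir no longer exists"
```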

Logging patterns:

log() { printf '%s %s\n' "$(date '+%Y-%m-%dT%H:%M:%S%z')" "$*"; } # ISO 8601 timestamp; works with GNU and BSD date

log "Starting job"

Consistent timestamps and messages make debugging scheduled tasks much easier.
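Below is a variant of the log function that adds a severity level and writes to standard error, so standard output stays free for data the script produces. The level argument and the timestamp format are choices made for this sketch. The date format string works with both GNU and BSD date.

```bash
#!/usr/bin/env bash
# Hypothetical variant of log(): first argument is a level such as INFO or ERROR.
log() {
  local level="$1"; shift
  printf '%s [%s] %s\n' "$(date '+%Y-%m-%dT%H:%M:%S%z')" "$level" "$*" >&2
}

log INFO "Starting job"
log ERROR "Backup target not reachable"
```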

Common Pitfalls

- Unquoted variable expansion: echo $file can break on filenames with spaces. Quote scalars ("$file") and expand arrays as "${files[@]}"; plain $files or "$files" gives only the first element of an array.

- Relying only on set -e: some commands in pipelines or conditional checks can mask failures; combine with pipefail and explicit checks.

- Not testing scripts interactively: always run scripts manually with sample inputs, and trace them with bash -x script.sh (or set -x inside the script) before putting them into cron.
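With arrays, neither $files nor "$files" is enough, because both expand only the first element. The sketch below uses made-up file names and a hypothetical count helper to show the difference.

```bash
#!/usr/bin/env bash
files=("annual report.txt" "notes.txt" "todo.txt")   # sample names, one with a space

# count is a hypothetical helper that prints how many arguments it received.
count() { printf '%s\n' "$#"; }

count $files           # prints 2: first element only, split at the space
count "$files"         # prints 1: first element only
count "${files[@]}"    # prints 3: every element, each kept intact

for f in "${files[@]}"; do
  printf 'Processing: %s\n' "$f"
done
```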

Next Steps

- Practice: write small scripts to automate daily repetitive tasks (backups, log rotation, report generation).

- Learn one tool deeply: choose awk or sed and solve a few text-processing problems with it.

- Version and test: put scripts in Git, add comments and usage output, and test them on edge cases (empty inputs, missing files).

This guide covers the essentials needed to start scripting efficiently and safely in Bash. Use small, composable tools, prefer clear error handling, and iterate — your automation will become more reliable and easier to maintain as you apply these techniques.

👉 Explore more IT books and guides at dargslan.com.