How to Automate Deployment with Jenkins

Jenkins pipeline diagram: checkout, build, test, package, push artifact, containerize, deploy to staging, run smoke tests, promote to production, notify teams, rollback if failure.

Understanding the Critical Role of Automated Deployment in Modern Development

In today's fast-paced software development landscape, the ability to deploy applications quickly, reliably, and consistently has become a fundamental requirement rather than a luxury. Manual deployment processes are not only time-consuming but also prone to human error, creating bottlenecks that can significantly impact your team's productivity and your organization's ability to respond to market demands. When deployment becomes a manual chore, developers spend valuable time on repetitive tasks instead of focusing on innovation and solving complex problems.

Automated deployment represents a systematic approach to releasing software where code changes flow through predefined pipelines, undergoing testing, validation, and deployment without requiring manual intervention at each step. Jenkins, an open-source automation server, has emerged as one of the most powerful and flexible tools for implementing continuous integration and continuous deployment (CI/CD) practices. It provides the infrastructure needed to orchestrate complex deployment workflows while maintaining complete visibility and control over the entire process.

Throughout this comprehensive guide, you'll discover practical strategies for implementing automated deployment with Jenkins, from initial setup through advanced configuration techniques. Whether you're deploying to traditional servers, cloud platforms, or containerized environments, you'll gain actionable insights into building robust deployment pipelines that enhance reliability, reduce deployment time, and empower your development team to deliver value more frequently and confidently.

Establishing Your Jenkins Foundation for Deployment Automation

Before diving into deployment automation, you need a properly configured Jenkins instance that serves as the foundation for all your CI/CD activities. The installation process varies depending on your infrastructure preferences, but the core principles remain consistent across platforms. Jenkins can run on physical servers, virtual machines, or as a containerized application, with each approach offering distinct advantages based on your organizational requirements.

Installing and Configuring Jenkins for Deployment Tasks

Setting up Jenkins begins with selecting the appropriate installation method for your environment. For Linux-based systems, you can install Jenkins through package managers, which simplifies updates and maintenance. On Ubuntu or Debian systems, this involves adding the Jenkins repository and installing via apt, while Red Hat-based distributions use yum or dnf. For organizations embracing containerization, running Jenkins in Docker provides isolation, portability, and simplified scaling capabilities.

Once installed, opening the Jenkins web interface on its default port, 8080, launches an initial setup wizard. The wizard asks you to unlock Jenkins with an initial administrator password, which Jenkins writes to secrets/initialAdminPassword under its home directory and also prints to its startup log. It then offers to install suggested plugins and guides you through creating your first admin user. The plugin step matters most for deployment automation, because Jenkins's plugin-based extensibility is what lets it handle such diverse deployment scenarios.

"The true power of automation isn't just about speed—it's about creating repeatable, reliable processes that eliminate the uncertainty and stress from deployment activities."

After completing the initial setup, configure global tool installations so Jenkins can execute the build and deployment commands your applications require. Navigate to "Manage Jenkins" and then "Tools" (labeled "Global Tool Configuration" in older releases) to specify installations for JDK, Git, Maven, Gradle, Node.js, or other tools your projects depend on. Jenkins can install these tools automatically or use installations already present on the system, which accommodates different organizational policies.

Essential Plugins for Deployment Automation

Jenkins's plugin ecosystem transforms it from a basic automation server into a comprehensive deployment platform. For deployment automation, several categories of plugins prove essential:

- Source Control Integration: Git Plugin, GitHub Plugin, Bitbucket Plugin enable Jenkins to monitor repositories and trigger builds based on code changes

- Build Tools: Maven Integration, Gradle Plugin, NodeJS Plugin provide native support for various build systems

- Deployment Targets: SSH Plugin, Publish Over SSH, Deploy to Container Plugin facilitate deploying to different environments

- Cloud Platforms: AWS Steps, Azure CLI Plugin, Google Cloud SDK Plugin enable cloud-native deployments

- Container Orchestration: Docker Plugin, Kubernetes Plugin, OpenShift Plugin support containerized deployment workflows

- Pipeline Enhancement: Pipeline Plugin, Blue Ocean, Multibranch Pipeline provide advanced pipeline capabilities

Installing plugins through the Jenkins Plugin Manager is straightforward: navigate to "Manage Jenkins," select "Plugins" ("Manage Plugins" in older releases), and use the "Available plugins" tab to search for and install the plugins you need. Newly installed plugins can usually be loaded without a restart, but plugin updates, and some plugins, take effect only after Jenkins restarts.

Designing Effective Deployment Pipelines

A deployment pipeline represents the automated manifestation of your release process, defining every step from code commit to production deployment. Well-designed pipelines balance thoroughness with speed, incorporating necessary validation stages while minimizing overall deployment time. Jenkins supports both declarative and scripted pipeline syntaxes, with declarative pipelines offering a more structured, opinionated approach that suits most deployment scenarios.

Pipeline Structure and Best Practices

A typical deployment pipeline consists of several distinct stages that code passes through sequentially. The build stage compiles source code and creates deployable artifacts, ensuring code can be successfully built before proceeding further. The test stage executes automated tests—unit tests, integration tests, and sometimes end-to-end tests—to validate functionality and catch regressions early. The artifact stage packages the application into deployable formats and stores them in artifact repositories for traceability and potential rollback.

Following successful testing, the deployment stage delivers the application to target environments. Organizations typically employ multiple environments—development, staging, and production—with deployments progressing through each sequentially. This progression allows for increasingly rigorous validation before changes reach end users. Between stages, approval gates can require manual confirmation before proceeding, particularly useful before production deployments where human oversight provides an additional safety mechanism.

| Pipeline Stage | Primary Purpose | Typical Duration | Failure Impact |
|---|---|---|---|
| Checkout | Retrieve source code from repository | 10-30 seconds | Pipeline stops immediately |
| Build | Compile code and resolve dependencies | 1-5 minutes | No artifact created, pipeline halts |
| Unit Test | Validate individual components | 1-3 minutes | Code quality issue identified early |
| Integration Test | Verify component interactions | 3-10 minutes | Integration problems detected |
| Security Scan | Identify vulnerabilities | 2-5 minutes | Security issues flagged |
| Deploy to Staging | Release to pre-production environment | 2-5 minutes | Deployment mechanism issues found |
| Smoke Test | Verify basic functionality in staging | 1-3 minutes | Deployment validation fails |
| Deploy to Production | Release to end users | 3-10 minutes | Production deployment fails, rollback needed |

Creating Your First Deployment Pipeline

Jenkins pipelines are written in a Groovy-based domain-specific language (DSL) and are usually stored in a file named Jenkinsfile. Keeping this file in your source repository alongside application code puts your deployment process under version control and enables pipeline-as-code practices. A basic declarative pipeline for deploying a web application might look like this:

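```groovy
pipeline {
    agent any

    environment {
        DEPLOY_SERVER = 'production.example.com'
        DEPLOY_PATH   = '/var/www/application'
    }

    stages {
        stage('Checkout') {
            steps {
                git branch: 'main', url: 'https://github.com/yourorg/yourapp.git'
            }
        }

        stage('Build') {
            steps {
                sh 'npm install'
                sh 'npm run build'
            }
        }

        stage('Test') {
            steps {
                sh 'npm test'
            }
        }

        stage('Deploy to Staging') {
            steps {
                // 'deploy-ssh-key' is a placeholder ID for an "SSH Username with private key"
                // credential. sshagent comes from the SSH Agent Plugin; the agent's
                // known_hosts must already trust the target servers.
                sshagent(credentials: ['deploy-ssh-key']) {
                    sh 'rsync -avz --delete ./dist/ staging@staging.example.com:/var/www/application/'
                }
            }
        }

        stage('Approval') {
            steps {
                // Abort the run if nobody responds within an hour
                timeout(time: 1, unit: 'HOURS') {
                    input message: 'Deploy to production?', ok: 'Deploy'
                }
            }
        }

        stage('Deploy to Production') {
            steps {
                sshagent(credentials: ['deploy-ssh-key']) {
                    // Single quotes: the shell, not Groovy, expands these environment variables
                    sh 'rsync -avz --delete ./dist/ deploy@${DEPLOY_SERVER}:${DEPLOY_PATH}/'
                }
            }
        }
    }

    post {
        success {
            echo 'Deployment completed successfully!'
        }
        failure {
            echo 'Deployment failed. Check logs for details.'
        }
    }
}
```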

This pipeline demonstrates fundamental concepts: the agent directive specifies where the pipeline executes, environment variables store configuration values, stages define the sequential steps, and post actions handle success or failure scenarios. Each stage contains steps that execute specific commands or invoke Jenkins plugins.
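To make that control flow concrete, here is a toy Python model (not how Jenkins is implemented) of the declarative semantics just described: stages run in order, the first failing stage ends the run and the remaining stages are skipped, and the matching post condition fires. The messages mirror the Jenkinsfile; everything else is illustrative.

```python
from typing import Callable, List, Tuple

# (stage name, step function); a step signals failure by raising
Stage = Tuple[str, Callable[[], object]]


def run_pipeline(stages: List[Stage]) -> Tuple[str, List[str]]:
    """Toy model of declarative control flow: sequential stages, then post."""
    log: List[str] = []
    result = "SUCCESS"
    for name, step in stages:
        if result != "SUCCESS":
            # Once a stage fails, the remaining stages are skipped
            log.append(f"skipped {name}")
            continue
        try:
            step()
            log.append(f"ran {name}")
        except Exception as exc:  # comparable to a non-zero exit from an sh step
            result = "FAILURE"
            log.append(f"failed {name}: {exc}")
    # Equivalent of post { success { ... } failure { ... } }
    if result == "SUCCESS":
        log.append("post/success: Deployment completed successfully!")
    else:
        log.append("post/failure: Deployment failed. Check logs for details.")
    return result, log
```

For example, run_pipeline([("Build", lambda: None), ("Test", lambda: 1 / 0), ("Deploy", lambda: None)]) reports FAILURE, skips Deploy, and ends with the failure message.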

"Automation doesn't eliminate the need for human judgment—it amplifies it by removing mundane tasks and allowing teams to focus on decisions that truly require expertise and context."

Implementing Secure Credential Management

Deployment automation inevitably requires handling sensitive information—SSH keys, API tokens, database passwords, and cloud provider credentials. Storing these secrets directly in pipeline code or configuration files creates significant security vulnerabilities and complicates credential rotation. Jenkins provides a robust credentials management system that securely stores sensitive information and makes it available to pipelines without exposing the actual values.

Configuring Credentials in Jenkins

The Jenkins credentials system supports multiple credential types, each suited to different authentication scenarios. Username with password credentials work for basic authentication, SSH username with private key enables secure server access, secret text stores API tokens or single-value secrets, and secret file handles credential files like service account keys or certificate files.

Adding credentials through the Jenkins interface involves navigating to "Manage Jenkins," selecting "Credentials" ("Manage Credentials" in older releases), choosing the appropriate store and domain (typically the global domain), and clicking "Add Credentials." Each credential receives a unique ID that pipelines use to reference it, so pipeline code contains only the ID, never the secret itself. This keeps secrets out of source control, but it is not a complete barrier: anyone who can edit and run a pipeline can use the credential it references, so restrict who can modify pipelines that have access to production credentials.

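Within pipelines, the credentials() helper, used inside an environment block, binds a stored credential to an environment variable. For a username-with-password credential, the variable holds username:password, and Jenkins also defines two more variables with _USR and _PSW suffixes containing the username and password separately. For secret text, the variable contains the secret value; for a secret file, it contains the path to a temporary copy of the file. Jenkins masks these values in console output. This approach maintains security while providing pipelines with the authentication information they need: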

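```groovy
pipeline {
    agent any

    environment {
        // "Username with password" credential: access key ID stored as the
        // username, secret access key as the password
        AWS_CREDENTIALS = credentials('aws-deploy-credentials')
        // Assumed to be a "Secret text" credential; later steps can read $DB_PASSWORD
        DB_PASSWORD = credentials('production-db-password')
    }

    stages {
        stage('Deploy to AWS') {
            steps {
                // Single-quoted so the shell, not Groovy, expands the secrets.
                // Exporting them avoids writing keys to ~/.aws/credentials on the agent.
                sh '''
                    export AWS_ACCESS_KEY_ID="$AWS_CREDENTIALS_USR"
                    export AWS_SECRET_ACCESS_KEY="$AWS_CREDENTIALS_PSW"
                    aws s3 sync ./dist/ s3://my-application-bucket/
                '''
            }
        }
    }
}
```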
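The variable naming and masking can be modeled in a few lines of Python. This sketch mimics the documented behavior of credentials() for a username-with-password credential and the masking Jenkins applies to bound secrets in console output; the function names are hypothetical, and this is not Jenkins code.

```python
from typing import Dict, Iterable


def username_password_env(var: str, username: str, password: str) -> Dict[str, str]:
    """Variables defined by VAR = credentials('id') for a username/password credential."""
    return {
        var: f"{username}:{password}",  # combined value
        f"{var}_USR": username,         # username only
        f"{var}_PSW": password,         # password only
    }


def mask(line: str, secrets: Iterable[str]) -> str:
    """Replace secret values with **** in the spirit of Jenkins log masking."""
    # Longest first, so a combined "user:pass" value is masked as one unit
    for secret in sorted(secrets, key=len, reverse=True):
        if secret:
            line = line.replace(secret, "****")
    return line
```

This is why the pipeline above can refer to $AWS_CREDENTIALS_USR and $AWS_CREDENTIALS_PSW even though it only declared AWS_CREDENTIALS.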

Deploying to Different Target Environments

Modern applications deploy to diverse infrastructure types, each with unique requirements and optimal deployment approaches. Understanding how to configure Jenkins for various deployment targets ensures your automation strategy adapts to your specific infrastructure landscape.

Traditional Server Deployments

Deploying to traditional servers—whether physical machines or virtual instances—typically involves transferring application files and restarting services. The SSH protocol provides secure remote access for these operations, with Jenkins offering several approaches to SSH-based deployment.

The SSH Agent Plugin enables Jenkins to use SSH credentials for remote operations. Combined with standard shell commands, this approach offers maximum flexibility for custom deployment procedures. The deployment process generally involves establishing an SSH connection, transferring files using scp or rsync, and executing remote commands to restart services or perform other deployment tasks.

For more structured deployments, the Publish Over SSH Plugin provides a configuration-driven approach. After installing the plugin, you configure SSH servers in Jenkins global configuration, specifying hostname, credentials, and remote directories. Pipeline stages can then reference these configured servers, simplifying deployment syntax while maintaining security through credential abstraction.

Cloud Platform Deployments

Cloud platforms like AWS, Azure, and Google Cloud offer specialized deployment mechanisms that Jenkins can leverage through dedicated plugins and CLI tools. These platforms typically involve deploying to managed services that abstract underlying infrastructure, requiring different deployment approaches than traditional servers.

AWS deployments might target Elastic Beanstalk for application hosting, S3 for static website hosting, or ECS for containerized applications. The AWS CLI, installed on the build agents (or included in an agent image), provides comprehensive control over AWS services. Pipelines authenticate using IAM credentials stored in Jenkins, then execute AWS CLI commands to upload artifacts and trigger deployments:

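```groovy
stage('Deploy to AWS Elastic Beanstalk') {
    steps {
        // AmazonWebServicesCredentialsBinding comes from the AWS Credentials plugin
        withCredentials([[$class: 'AmazonWebServicesCredentialsBinding',
                          credentialsId: 'aws-credentials']]) {
            sh '''
                # Upload the bundle first; app.zip is assumed to come from an earlier packaging step
                aws s3 cp app.zip s3://my-deployment-bucket/app-${BUILD_NUMBER}.zip

                aws elasticbeanstalk create-application-version \
                    --application-name my-application \
                    --version-label ${BUILD_NUMBER} \
                    --source-bundle S3Bucket="my-deployment-bucket",S3Key="app-${BUILD_NUMBER}.zip"

                aws elasticbeanstalk update-environment \
                    --environment-name production \
                    --version-label ${BUILD_NUMBER}

                # Block until the environment has finished updating
                aws elasticbeanstalk wait environment-updated \
                    --environment-names production
            '''
        }
    }
}
```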

Azure deployments utilize the Azure CLI or Azure DevOps integration. The Azure CLI Plugin installs the Azure command-line tools, enabling pipelines to deploy to App Services, Container Instances, or Kubernetes Service. Authentication uses service principals stored as Jenkins credentials, providing secure, automated access to Azure resources.

Google Cloud deployments leverage the Google Cloud SDK for deploying to App Engine, Cloud Run, or Kubernetes Engine. Similar to other cloud providers, the SDK requires service account credentials, which Jenkins stores securely and makes available during pipeline execution.

Container and Kubernetes Deployments

Containerized applications have transformed deployment practices, with Docker and Kubernetes becoming standard platforms for modern applications. Jenkins integrates deeply with container technologies, supporting building container images, pushing to registries, and deploying to orchestration platforms.

A typical containerized deployment workflow involves building a Docker image containing your application, tagging the image with version information, pushing the image to a container registry (Docker Hub, AWS ECR, Google Artifact Registry, or Azure Container Registry), and updating the deployment in your orchestration platform to use the new image.
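As a sketch of the tagging step, the Python below derives image references from the BUILD_NUMBER variable Jenkins sets and the GIT_COMMIT variable the Git plugin sets, and rejects anything outside Docker's tag grammar (up to 128 characters of letters, digits, underscores, periods, and hyphens, not starting with a period or hyphen). The registry and image names are placeholders, and pairing the build number with a short commit SHA is one tagging convention among several.

```python
import re
from typing import List, Mapping

# Docker tag grammar: 1-128 chars of [A-Za-z0-9_.-], not starting with "." or "-"
TAG_RE = re.compile(r"[A-Za-z0-9_][A-Za-z0-9_.-]{0,127}")


def image_refs(registry: str, name: str, env: Mapping[str, str]) -> List[str]:
    """Return registry/name:tag references for this build.

    Uses BUILD_NUMBER (set by Jenkins) and, when present, GIT_COMMIT
    (set by the Git plugin). Registry and name are caller-supplied.
    """
    tags = [env["BUILD_NUMBER"]]
    commit = env.get("GIT_COMMIT")
    if commit:
        tags.append(commit[:12])  # short SHA links the image back to its source
    for tag in tags:
        if not TAG_RE.fullmatch(tag):
            raise ValueError(f"invalid Docker tag: {tag!r}")
    return [f"{registry}/{name}:{tag}" for tag in tags]
```

Called from a sh step with os.environ, the first reference could be passed to docker build -t and both pushed, so every image stays traceable to a build and a commit.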

"The goal of deployment automation isn't perfection on the first try—it's creating a system that fails fast, provides clear feedback, and makes recovery straightforward."

The Docker Pipeline Plugin provides native Docker support within Jenkins pipelines. This plugin exposes a docker object with methods for building images, running containers, and interacting with registries. A pipeline building and deploying a containerized application might include these stages:

```groovy
pipeline {
    agent any

    environment {
        DOCKER_REGISTRY = 'registry.example.com'
        IMAGE_NAME      = 'my-application'
        IMAGE_TAG       = "${BUILD_NUMBER}"
    }

    stages {
        stage('Build Docker Image') {
            steps {
                script {
                    docker.build("${DOCKER_REGISTRY}/${IMAGE_NAME}:${IMAGE_TAG}")
                }
            }
        }

        stage('Push to Registry') {
            steps {
                script {
                    def image = docker.image("${DOCKER_REGISTRY}/${IMAGE_NAME}:${IMAGE_TAG}")
                    docker.withRegistry("https://${DOCKER_REGISTRY}", 'registry-credentials') {
                        image.push()          // push the build-number tag
                        image.push('latest')  // also move the 'latest' tag
                    }
                }
            }
        }

        stage('Deploy to Kubernetes') {
            steps {
                // withKubeConfig is provided by the Kubernetes CLI Plugin
                withKubeConfig([credentialsId: 'kubernetes-credentials']) {
                    sh '''
                        kubectl set image deployment/my-application \
                            my-application=${DOCKER_REGISTRY}/${IMAGE_NAME}:${IMAGE_TAG}
                        # Record the change cause (replaces the deprecated --record flag)
                        kubectl annotate deployment/my-application \
                            kubernetes.io/change-cause="Jenkins build ${BUILD_NUMBER}" --overwrite
                        kubectl rollout status deployment/my-application
                    '''
                }
            }
        }
    }
}
```

For Kubernetes deployments, the withKubeConfig step comes from the Kubernetes CLI Plugin, which turns a stored credential (such as a kubeconfig file or a service account token) into a temporary kubeconfig so kubectl commands can reach the cluster. The separate Kubernetes Plugin, covered later, runs Jenkins build agents as pods. Deployments typically update existing Kubernetes Deployment resources with new image tags, triggering rolling updates that gradually replace old pods with new ones while keeping the application available.

Implementing Blue-Green and Canary Deployment Strategies

Advanced deployment strategies minimize risk and downtime by carefully controlling how new versions replace old ones. While basic deployments simply replace the running application version, sophisticated strategies provide mechanisms for validation, gradual rollout, and instant rollback if problems arise.

Blue-Green Deployments

Blue-green deployment maintains two identical production environments—blue (currently serving traffic) and green (idle). When deploying a new version, you deploy to the idle environment, perform validation, then switch traffic from blue to green. This approach provides instant rollback capability (switch traffic back to blue) and zero-downtime deployments, though it requires double the infrastructure resources.
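The switching logic reduces to a pointer between two environments. This Python model (an illustration, not a real load balancer integration) deploys only to the idle side, moves traffic only after a health check passes, and rolls back by pointing traffic at the other side again. The version strings and the healthy callback are hypothetical stand-ins.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict


@dataclass
class BlueGreen:
    """Two environments plus a pointer saying which one serves traffic."""
    versions: Dict[str, str] = field(default_factory=lambda: {"blue": "v1", "green": "v1"})
    live: str = "blue"

    @property
    def idle(self) -> str:
        return "green" if self.live == "blue" else "blue"

    def release(self, version: str, healthy: Callable[[str], bool]) -> bool:
        target = self.idle
        self.versions[target] = version  # deploy to the idle side only
        if not healthy(target):
            return False                 # live traffic was never touched
        self.live = target               # the switch is a single pointer update
        return True

    def rollback(self) -> None:
        # Instant rollback: send traffic back to the previous environment
        self.live = self.idle
```

The model also shows the cost noted above: both environments must exist at full size, because either one may become live at any moment.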

Implementing blue-green deployments in Jenkins involves pipeline stages that deploy to the inactive environment, run smoke tests, and update load balancer or DNS configuration to switch traffic. Cloud platforms often provide native support for blue-green deployments through their load balancing services:

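```groovy
stage('Blue-Green Deployment') {
    steps {
        script {
            // Determine the live environment: the one holding the production CNAME.
            // 'my-app-prod' is a placeholder for your production CNAME prefix.
            def currentEnv = sh(script: '''aws elasticbeanstalk describe-environments \
                --application-name my-app \
                --environment-names my-app-blue my-app-green \
                --query "Environments[?starts_with(CNAME, 'my-app-prod.')].EnvironmentName" \
                --output text''', returnStdout: true).trim()
            if (!(currentEnv in ['my-app-blue', 'my-app-green'])) {
                error("Could not determine the live environment (got '${currentEnv}')")
            }
            def targetEnv = currentEnv == 'my-app-blue' ? 'my-app-green' : 'my-app-blue'

            // Deploy to inactive environment
            sh """
                aws elasticbeanstalk update-environment \
                    --environment-name ${targetEnv} \
                    --version-label ${BUILD_NUMBER}
            """

            // Wait for deployment to complete
            sh "aws elasticbeanstalk wait environment-updated --environment-names ${targetEnv}"

            // Smoke test the idle environment through its own CNAME
            def targetCname = sh(script: "aws elasticbeanstalk describe-environments --environment-names ${targetEnv} --query 'Environments[0].CNAME' --output text", returnStdout: true).trim()
            sh "curl -f http://${targetCname}/health"

            // Swap environment URLs to switch traffic
            sh """
                aws elasticbeanstalk swap-environment-cnames \
                    --source-environment-name ${currentEnv} \
                    --destination-environment-name ${targetEnv}
            """
        }
    }
}
```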

Canary Deployments

Canary deployments gradually roll out new versions by initially routing a small percentage of traffic to the new version while monitoring metrics. If metrics remain healthy, the percentage gradually increases until all traffic uses the new version. This approach catches issues affecting only certain users or usage patterns while limiting the blast radius of problems.
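The promotion loop can be sketched in Python as follows. The error_rate_at callback is a hypothetical stand-in for a monitoring query, the traffic steps are example values, and the 0.01 threshold matches the one in the Kubernetes pipeline example in this section.

```python
from typing import Callable, List, Sequence, Tuple


def run_canary(
    error_rate_at: Callable[[int], float],
    steps: Sequence[int] = (10, 25, 50, 100),
    max_error_rate: float = 0.01,
) -> Tuple[bool, List[Tuple[int, float]]]:
    """Advance traffic step by step; stop at the first unhealthy reading.

    Returns (promoted, history) where history holds (percent, error rate).
    """
    history: List[Tuple[int, float]] = []
    for percent in steps:
        # In a real pipeline: shift `percent`% of traffic, wait, then measure
        rate = error_rate_at(percent)
        history.append((percent, rate))
        if rate > max_error_rate:
            return False, history  # abort: caller routes traffic back to stable
    return True, history
```

Because each step is checked before the next one starts, a regression that appears only under heavier load stops the rollout at that step, while the stable version still carries most of the traffic.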

Kubernetes provides excellent support for canary deployments through its Deployment resources and Service routing. A Jenkins pipeline can deploy a canary version alongside the stable version, gradually scale up the canary while scaling down the stable version, and monitor metrics to decide whether to proceed or abort:

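```groovy
stage('Canary Deployment') {
    steps {
        // Deploy the canary with 1 replica beside the stable Deployment (about 10% of pods
        // if stable runs 9). k8s/canary.yaml is a placeholder manifest whose pods share the
        // stable app label, so the existing Service routes traffic to both.
        sh '''
            kubectl apply -f k8s/canary.yaml
            kubectl set image deployment/my-application-canary \
                my-application=${DOCKER_REGISTRY}/${IMAGE_NAME}:${IMAGE_TAG}
            kubectl rollout status deployment/my-application-canary
        '''
        sleep 300   // let the canary serve traffic before measuring

        script {
            // Hypothetical Prometheus query for the canary's 5xx ratio (needs curl and jq
            // on the agent); substitute your own endpoint and metric names
            def errorRate = sh(returnStdout: true, script: '''
                curl -s http://prometheus:9090/api/v1/query \
                  --data-urlencode 'query=sum(rate(http_requests_total{deployment="my-application-canary",status=~"5.."}[5m])) / sum(rate(http_requests_total{deployment="my-application-canary"}[5m]))' \
                  | jq -r '.data.result[0].value[1]'
            ''').trim()
            if (Double.parseDouble(errorRate) > 0.01) {
                sh 'kubectl delete deployment my-application-canary'
                error("Canary deployment showing elevated error rate. Rolling back.")
            }
        }

        // Gradually increase canary traffic
        sh 'kubectl scale deployment my-application-canary --replicas=3'
        sleep 300

        // Final promotion - replace stable with canary
        sh '''
            kubectl set image deployment/my-application my-application=${DOCKER_REGISTRY}/${IMAGE_NAME}:${IMAGE_TAG}
            kubectl rollout status deployment/my-application
            kubectl delete deployment my-application-canary
        '''
    }
}
```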

| Deployment Strategy | Rollback Speed | Resource Requirements | Risk Level | Best Use Case |
|---|---|---|---|---|
| Rolling Update | Moderate (redeploy previous version) | Standard (no extra resources) | Medium | Standard applications with good test coverage |
| Blue-Green | Instant (switch traffic back) | High (2x production environment) | Low | Critical applications requiring zero downtime |
| Canary | Fast (scale down canary) | Moderate (small additional capacity) | Very Low | High-traffic applications where gradual validation is important |
| Recreate | Moderate (redeploy previous version) | Standard (no extra resources) | High | Development environments or applications that can tolerate downtime |

Monitoring and Notification Integration

Deployment automation doesn't end when code reaches production—effective monitoring and notification ensure teams immediately know about deployment outcomes and can respond quickly to issues. Jenkins integrates with numerous monitoring and communication platforms to provide comprehensive visibility into deployment activities.

Build Status Notifications

Timely notifications keep teams informed about deployment progress without requiring constant monitoring of the Jenkins interface. Email notifications represent the most basic notification mechanism, with the Email Extension Plugin providing rich formatting and conditional sending based on build status. However, modern teams often prefer real-time chat notifications that integrate with their existing communication workflows.

The Slack Notification Plugin sends build status updates to Slack channels, providing immediate visibility to entire teams. After configuring the plugin with your Slack workspace credentials, pipelines can send notifications at various stages:

```groovy
pipeline {
    agent any

    stages {
        stage('Deploy') {
            steps {
                slackSend channel: '#deployments',
                          color: 'good',
                          message: "Deployment started for ${env.JOB_NAME} #${env.BUILD_NUMBER}"

                // Deployment steps here

                slackSend channel: '#deployments',
                          color: 'good',
                          message: "Deployment completed successfully for ${env.JOB_NAME} #${env.BUILD_NUMBER}"
            }
        }
    }

    post {
        failure {
            slackSend channel: '#deployments',
                      color: 'danger',
                      message: "Deployment failed for ${env.JOB_NAME} #${env.BUILD_NUMBER}. Check logs: ${env.BUILD_URL}"
        }
    }
}
```

Similar plugins exist for Microsoft Teams, Discord, and other communication platforms, ensuring Jenkins fits into your existing team communication patterns rather than requiring teams to adapt to Jenkins's notification preferences.

"Successful automation isn't measured by how much you've automated, but by how much confidence it gives your team to deploy frequently without fear."

Integration with Monitoring Systems

Beyond notifying teams about deployment completion, integrating with application monitoring systems provides crucial context about deployment impact. When deployments complete, recording deployment events in monitoring systems enables correlation between deployments and metrics changes, helping teams quickly identify whether new deployments caused performance degradations or error rate increases.

For applications monitored with Prometheus and Grafana, pipelines can post annotations to Grafana's HTTP API (POST /api/annotations); the annotations then appear on dashboards as markers at the moment each deployment occurred. For New Relic, the deployment marker API records deployments and associates them with specific application versions. These integrations let teams validate each deployment against live metrics instead of only observing dashboards passively.
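As a sketch of the Grafana side, the Python below builds a POST request to Grafana's /api/annotations endpoint using only the standard library. The Grafana base URL and service-account token are assumptions you would inject from Jenkins credentials, and the tag scheme is illustrative. Omitting dashboardUID creates an organization-wide annotation, which dashboards can display through a tag-filtered annotation query.

```python
import json
import time
import urllib.request
from typing import Optional


def annotation_request(grafana_url: str, token: str, job: str, build: str,
                       version: str, when_ms: Optional[int] = None) -> urllib.request.Request:
    """Build (but do not send) a Grafana deployment annotation request."""
    body = {
        # Grafana expects epoch milliseconds
        "time": when_ms if when_ms is not None else int(time.time() * 1000),
        "tags": ["deployment", job],
        "text": f"Deployed {job} #{build} (version {version})",
    }
    return urllib.request.Request(
        url=f"{grafana_url.rstrip('/')}/api/annotations",
        data=json.dumps(body).encode("utf-8"),
        headers={"Authorization": f"Bearer {token}", "Content-Type": "application/json"},
        method="POST",
    )


def send(req: urllib.request.Request) -> int:
    """Perform the call; non-2xx responses raise urllib.error.HTTPError."""
    with urllib.request.urlopen(req, timeout=10) as resp:
        return resp.status
```

Keeping request construction separate from sending makes the payload easy to inspect in a dry run before the pipeline starts posting real annotations.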

Handling Deployment Failures and Rollbacks

Despite thorough testing and careful planning, deployments sometimes fail. Effective automation includes strategies for detecting failures quickly, rolling back to previous versions, and preserving diagnostic information for post-incident analysis.

Automated Rollback Mechanisms

Rollback strategies depend on your deployment target and strategy. For containerized applications in Kubernetes, the kubectl rollout undo command reverts to the previous deployment version. For traditional server deployments, maintaining versioned artifact directories enables quick switching between versions. Cloud platforms typically provide version management features that support rollback to previous application versions.
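For traditional servers, one common layout keeps each release in its own directory and points a current symlink at the active one. The Python sketch below assumes releases are named after Jenkins build numbers under base/releases/ (a hypothetical convention), switches the link atomically by renaming a temporary symlink over it, and rolls back to the next-older release. It targets POSIX filesystems.

```python
import os
from pathlib import Path


def activate(base: Path, version: str) -> None:
    """Point <base>/current at <base>/releases/<version> atomically."""
    if not (base / "releases" / version).is_dir():
        raise FileNotFoundError(base / "releases" / version)
    tmp = base / "current.tmp"
    if tmp.is_symlink() or tmp.exists():
        tmp.unlink()
    # Relative target, so the link keeps working if <base> is moved
    tmp.symlink_to(Path("releases") / version)
    # rename(2) replaces the old link in a single step on POSIX filesystems
    os.replace(tmp, base / "current")


def rollback(base: Path) -> str:
    """Switch current to the release just before the active one."""
    # Assumes release directories are named by numeric build number
    releases = sorted((p.name for p in (base / "releases").iterdir() if p.is_dir()), key=int)
    active = Path(os.readlink(base / "current")).name
    position = releases.index(active)
    if position == 0:
        raise RuntimeError("no earlier release to roll back to")
    previous = releases[position - 1]
    activate(base, previous)
    return previous
```

Because the service always runs from current, neither a deployment nor a rollback copies files at switch time; a service restart or reload afterward picks up the new target.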

Jenkins pipelines can implement automated rollback triggered by post-deployment validation failures. After deploying a new version, the pipeline executes smoke tests or health checks. If these validations fail, the pipeline automatically triggers rollback procedures:

```groovy
stage('Deploy and Validate') {
    steps {
        script {
            try {
                // Deploy new version; set image needs the full image reference, not just
                // the tag (DOCKER_REGISTRY and IMAGE_NAME as in the earlier environment block)
                sh 'kubectl set image deployment/my-application my-application=${DOCKER_REGISTRY}/${IMAGE_NAME}:${IMAGE_TAG}'
                sh 'kubectl rollout status deployment/my-application --timeout=5m'

                // Validate deployment; the URL must be reachable from the Jenkins agent
                def healthCheck = sh(script: 'curl -f http://my-application/health', returnStatus: true)
                if (healthCheck != 0) {
                    error("Health check failed after deployment")
                }
                echo "Deployment validated successfully"
            } catch (Exception e) {
                echo "Deployment validation failed. Rolling back..."
                sh 'kubectl rollout undo deployment/my-application'
                sh 'kubectl rollout status deployment/my-application'
                error("Deployment failed and was rolled back: ${e.message}")
            }
        }
    }
}
```
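A single curl check like the one in the pipeline above can fail spuriously while new pods are still warming up. A retrying check with an overall deadline is a common alternative; this Python sketch polls a URL until it returns a 2xx status or the deadline passes. The timeout and interval defaults are arbitrary examples, and the fetch, sleep, and clock parameters exist only to make it testable.

```python
import time
import urllib.error
import urllib.request
from typing import Callable


def http_status(url: str) -> int:
    """Return the HTTP status code, or 0 if the server could not be reached."""
    try:
        with urllib.request.urlopen(url, timeout=5) as resp:
            return resp.status
    except urllib.error.HTTPError as err:  # 4xx/5xx responses
        return err.code
    except OSError:                         # DNS failure, refused connection, timeout
        return 0


def wait_healthy(url: str, timeout_s: float = 120.0, interval_s: float = 5.0,
                 fetch: Callable[[str], int] = http_status,
                 sleep: Callable[[float], None] = time.sleep,
                 clock: Callable[[], float] = time.monotonic) -> bool:
    """Poll url until it returns 2xx (True) or the deadline passes (False)."""
    deadline = clock() + timeout_s
    while True:
        if 200 <= fetch(url) < 300:
            return True
        if clock() + interval_s > deadline:
            return False
        sleep(interval_s)
```

Saved as a script with a small wrapper such as sys.exit(0 if wait_healthy(sys.argv[1]) else 1), it could replace the curl call, with its exit status feeding the same returnStatus check that triggers the rollback.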

Preserving Deployment Artifacts and Logs

When deployments fail, comprehensive logs and artifacts enable teams to diagnose issues effectively. Jenkins automatically preserves console logs for each build, but explicitly archiving deployment artifacts, configuration files, and test results provides additional diagnostic value. The archiveArtifacts step stores files from the workspace, making them available through the Jenkins web interface even after the workspace is cleaned:

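```groovy
post {
    always {
        archiveArtifacts artifacts: 'build/logs/**/*.log', allowEmptyArchive: true
        archiveArtifacts artifacts: 'deployment-manifest.yaml', allowEmptyArchive: true
        // allowEmptyResults keeps a run that produced no test reports from failing here
        junit testResults: 'test-results/**/*.xml', allowEmptyResults: true
    }
}
```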

For complex deployments involving multiple services or components, maintaining a deployment manifest that records exactly what was deployed (version numbers, configuration values, environment variables) provides crucial context for troubleshooting. This manifest, stored as an artifact, enables teams to reproduce deployment conditions when investigating issues.
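A minimal manifest writer might look like the Python below. It records only an explicit allowlist of environment variables, so secrets Jenkins binds into the environment never end up in an archived artifact. JOB_NAME, BUILD_NUMBER, and BUILD_URL are standard Jenkins variables and GIT_COMMIT comes from the Git plugin; IMAGE_TAG and DEPLOY_ENV are hypothetical examples. The output is JSON, which YAML 1.2 parsers accept, so it can be saved under the deployment-manifest.yaml name used in the archiveArtifacts example.

```python
import json
from datetime import datetime, timezone
from typing import Any, Dict, Mapping, Optional

# Only these variables are recorded; IMAGE_TAG and DEPLOY_ENV are hypothetical
ALLOWED_VARS = ("JOB_NAME", "BUILD_NUMBER", "BUILD_URL", "GIT_COMMIT", "IMAGE_TAG", "DEPLOY_ENV")


def build_manifest(env: Mapping[str, str], config: Mapping[str, str],
                   now: Optional[datetime] = None) -> Dict[str, Any]:
    """Describe what was deployed without copying the whole environment."""
    return {
        "deployed_at": (now or datetime.now(timezone.utc)).isoformat(),
        "build": {k: env[k] for k in ALLOWED_VARS if k in env},
        "config": dict(sorted(config.items())),
    }


def write_manifest(path: str, manifest: Dict[str, Any]) -> None:
    """Write the manifest as pretty-printed JSON."""
    with open(path, "w", encoding="utf-8") as fh:
        json.dump(manifest, fh, indent=2, sort_keys=True)
        fh.write("\n")
```

A deploy step could call write_manifest("deployment-manifest.yaml", build_manifest(os.environ, {"replicas": "3"})) just before the post block archives it.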

Scaling Jenkins for Enterprise Deployment Needs

As organizations grow and deployment frequency increases, a single Jenkins instance may struggle to handle the workload. Scaling Jenkins involves distributing build execution across multiple agents, implementing high availability for the Jenkins controller, and optimizing pipeline performance to handle increased demand.

Distributed Build Architecture

Jenkins supports a controller-agent architecture where the controller manages scheduling and coordination while agents execute actual build and deployment tasks. This distribution enables horizontal scaling by adding more agents as workload increases. Agents can run on physical machines, virtual machines, or as containers, with Jenkins dynamically provisioning agents based on demand.

Configuring agents involves specifying a connection method such as SSH, inbound agent connections (formerly called JNLP), or cloud provider APIs; defining labels that describe agent capabilities; and setting executor counts that determine concurrent build capacity. Pipelines specify agent requirements using the agent directive, and Jenkins selects a matching agent based on labels and availability:

```groovy
pipeline {
    agent {
        label 'docker && linux'
    }

    stages {
        stage('Build') {
            // A stage-level agent overrides the pipeline-level agent for this stage
            agent {
                docker {
                    image 'node:20'
                    label 'docker'
                }
            }
            steps {
                sh 'npm install'
                sh 'npm run build'
            }
        }
    }
}
```

Cloud-Based Dynamic Agent Provisioning

For organizations with variable workload, cloud-based dynamic agent provisioning automatically creates agents when needed and terminates them when idle, optimizing costs while maintaining capacity for peak demand. The Amazon EC2 Plugin, Azure VM Agents Plugin, and Google Compute Engine Plugin enable Jenkins to provision cloud instances as build agents on demand.

Kubernetes provides particularly elegant dynamic agent provisioning through the Kubernetes Plugin. Jenkins creates Kubernetes pods as build agents, with each pod potentially containing multiple containers providing different build environments. When builds complete, pods are automatically deleted, ensuring efficient resource utilization:

```groovy
pipeline {
    agent {
        kubernetes {
            yaml '''
                apiVersion: v1
                kind: Pod
                spec:
                  containers:
                  - name: maven
                    image: maven:3.9-eclipse-temurin-17
                    command:
                    - cat
                    tty: true
                  - name: docker
                    image: docker:latest
                    command:
                    - cat
                    tty: true
                    volumeMounts:
                    - name: docker-sock
                      mountPath: /var/run/docker.sock
                  # Mounting the node's Docker socket gives builds root-level access to that
                  # node and only works where nodes run Docker Engine; daemonless image
                  # builders such as Kaniko avoid both constraints.
                  volumes:
                  - name: docker-sock
                    hostPath:
                      path: /var/run/docker.sock
            '''
        }
    }

    stages {
        stage('Build with Maven') {
            steps {
                container('maven') {
                    sh 'mvn clean package'
                }
            }
        }

        stage('Build Docker Image') {
            steps {
                container('docker') {
                    sh 'docker build -t my-application:${BUILD_NUMBER} .'
                }
            }
        }
    }
}
```

"The measure of good automation is not how rarely humans intervene, but how effectively the system communicates when intervention is needed and how easily humans can act on that information."

Security Considerations for Deployment Automation

Automated deployment systems require elevated privileges to modify production environments, making security a critical concern. Compromised Jenkins instances can provide attackers with access to production systems, source code, and sensitive credentials. Implementing comprehensive security measures protects both Jenkins itself and the systems it deploys to.

Securing the Jenkins Instance

Jenkins security begins with proper authentication and authorization. While Jenkins supports its own user database, integrating with enterprise identity providers through LDAP, Active Directory, or SAML ensures consistent access control and simplifies user management. The Matrix Authorization Strategy provides granular permission control, allowing administrators to specify exactly which users or groups can perform specific actions.

CSRF protection guards against cross-site request forgery, and agent-to-controller access control stops agents, and the build scripts running on them, from compromising the controller. Current Jenkins releases enable both by default, so confirm they have not been disabled on older or customized installations. Apply Jenkins updates regularly and follow the Jenkins security advisories, which announce vulnerabilities that need prompt attention.

Principle of Least Privilege for Deployment Credentials

Deployment credentials should provide only the minimum permissions necessary for deployment tasks. Rather than using administrative credentials with broad access, create dedicated deployment accounts with permissions limited to specific actions on specific resources. For cloud deployments, this means creating IAM roles or service principals with restricted permissions rather than using root or administrator accounts.
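As an example of that scoping, the Python below generates an IAM policy for the earlier aws s3 sync step that allows listing one bucket and writing objects inside it, and nothing else. Treat it as a starting point under stated assumptions: the bucket name comes from that example, and the action list reflects what an upload-only sync typically needs, so verify it against your own CLI usage before relying on it.

```python
import json
from typing import Any, Dict


def s3_deploy_policy(bucket: str, allow_delete: bool = False) -> Dict[str, Any]:
    """IAM policy limited to syncing files into a single S3 bucket."""
    object_actions = ["s3:PutObject"]
    if allow_delete:
        object_actions.append("s3:DeleteObject")  # only needed for `sync --delete`
    return {
        "Version": "2012-10-17",
        "Statement": [
            {   # listing lets sync compare local files with what is already stored
                "Effect": "Allow",
                "Action": ["s3:ListBucket"],
                "Resource": f"arn:aws:s3:::{bucket}",
            },
            {   # writes are confined to objects inside this one bucket
                "Effect": "Allow",
                "Action": object_actions,
                "Resource": f"arn:aws:s3:::{bucket}/*",
            },
        ],
    }


if __name__ == "__main__":
    print(json.dumps(s3_deploy_policy("my-application-bucket"), indent=2))
```

Attached to a dedicated deployment user or role, a policy like this means a leaked Jenkins credential can overwrite one bucket's contents but cannot touch any other AWS resource.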

Credential rotation policies ensure that even if credentials are compromised, their useful lifetime to attackers is limited. Stored credentials can be updated through the Credentials plugin's REST API or the Jenkins CLI, allowing an external rotation system to refresh them without manual intervention. For highly sensitive environments, consider using short-lived credentials that expire after each deployment, requesting fresh credentials at the start of each pipeline execution.
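A minimal sketch of the short-lived credential approach, assuming an AWS deployment in which Jenkins stores only a narrowly scoped key pair whose sole permission is to assume a deployment role. The credential ID `aws-deploy-assumer`, the role ARN, and `deploy.sh` are hypothetical; `withCredentials` with `usernamePassword` is a standard Credentials Binding step and `aws sts assume-role` is a real AWS CLI command.

```groovy
stage('Deploy') {
    steps {
        // Bind the stored key pair only for the duration of this stage.
        // 'aws-deploy-assumer' is a placeholder username/password credential.
        withCredentials([usernamePassword(credentialsId: 'aws-deploy-assumer',
                                          usernameVariable: 'AWS_ACCESS_KEY_ID',
                                          passwordVariable: 'AWS_SECRET_ACCESS_KEY')]) {
            sh '''
                # Jenkins runs sh with tracing on; turn it off so the temporary
                # credentials below are not echoed into the console log
                set +x
                set -eu
                # Request credentials that expire after 15 minutes (the minimum allowed).
                # The role ARN is a placeholder for a role limited to deployment actions.
                creds=$(aws sts assume-role \
                  --role-arn arn:aws:iam::123456789012:role/deploy-my-application \
                  --role-session-name "jenkins-${BUILD_NUMBER}" \
                  --duration-seconds 900 \
                  --query 'Credentials.[AccessKeyId,SecretAccessKey,SessionToken]' \
                  --output text)
                set -- $creds
                export AWS_ACCESS_KEY_ID="$1" AWS_SECRET_ACCESS_KEY="$2" AWS_SESSION_TOKEN="$3"
                ./deploy.sh
            '''
        }
    }
}
```

The `set +x` matters here: `withCredentials` masks only the values it bound, not credentials the script obtains afterwards.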

Audit Logging and Compliance

Comprehensive audit logging tracks all actions performed through Jenkins, providing accountability and enabling security investigations. The Audit Trail Plugin records user actions, configuration changes, and build executions, storing logs in formats suitable for analysis by security information and event management (SIEM) systems.
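The Audit Trail Plugin covers Jenkins-level actions; a pipeline can additionally keep a per-deployment record as a build artifact. A minimal sketch of a `post` section for a declarative pipeline, assuming a Git-based job (which provides `GIT_COMMIT`) and a hypothetical file name `deploy-audit.txt`; `currentBuild.getBuildCauses()`, `writeFile`, and `archiveArtifacts` are standard pipeline features.

```groovy
post {
    always {
        script {
            // Collect a readable description of whatever started this build
            // (a user, an SCM push, a timer, an upstream job, ...)
            def causes = []
            for (cause in currentBuild.getBuildCauses()) {
                causes.add(cause.shortDescription)
            }
            writeFile file: 'deploy-audit.txt', text: """\
job=${env.JOB_NAME}
build=${env.BUILD_NUMBER}
commit=${env.GIT_COMMIT ?: 'unknown'}
result=${currentBuild.currentResult}
started_by=${causes.join('; ')}
start_time_ms=${currentBuild.startTimeInMillis}
"""
            // Keep the record with the build so it can be collected for audits or forwarded to a SIEM
            archiveArtifacts artifacts: 'deploy-audit.txt'
        }
    }
}
```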

For organizations subject to regulatory compliance requirements, Jenkins can integrate with compliance automation tools to enforce policies and generate compliance reports. The Job DSL Plugin enables defining jobs as code, ensuring consistent configuration and facilitating compliance audits by making job configurations reviewable and version-controlled.

Optimizing Pipeline Performance

As pipelines grow more complex, execution time can increase significantly, slowing feedback cycles and reducing deployment frequency. Optimizing pipeline performance involves identifying bottlenecks, parallelizing independent tasks, and implementing caching strategies to avoid redundant work.

Parallel Execution Strategies

Many pipeline stages can execute concurrently rather than sequentially. Testing different application modules, deploying to multiple environments, or building multiple artifacts can occur in parallel, significantly reducing overall pipeline duration. Jenkins provides a parallel directive that executes multiple stages simultaneously:

```groovy
stage('Parallel Testing') {
    parallel {
        stage('Unit Tests') {
            steps {
                sh 'npm run test:unit'
            }
        }
        stage('Integration Tests') {
            steps {
                sh 'npm run test:integration'
            }
        }
        stage('Security Scan') {
            steps {
                sh 'npm audit'
            }
        }
    }
}
```

When implementing parallel execution, consider agent capacity—running too many parallel stages simultaneously can overwhelm available agents, actually slowing execution. Monitoring agent utilization helps identify optimal parallelization levels that maximize throughput without causing resource contention.
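One way to make agent capacity explicit, sketched under assumptions: each branch below requests its own executor through a stage-level agent (the `linux` label is a placeholder), so the number of branches that actually run at once is bounded by the executors carrying that label. `failFast true` aborts the remaining branches as soon as one fails, releasing their executors early. Declarative pipelines check out the source automatically on each stage-level agent.

```groovy
stage('Parallel Testing') {
    // Stop all other branches when any branch fails
    failFast true
    parallel {
        stage('Unit Tests') {
            agent { label 'linux' }   // placeholder agent label
            steps {
                sh 'npm ci && npm run test:unit'
            }
        }
        stage('Integration Tests') {
            agent { label 'linux' }
            steps {
                sh 'npm ci && npm run test:integration'
            }
        }
    }
}
```

The trade-off is that every branch repeats the checkout and dependency install, so per-branch agents pay off mainly when the test work itself dominates.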

Caching and Artifact Reuse

Downloading dependencies, compiling code, and building artifacts consumes significant time in most pipelines. Caching these resources between builds eliminates redundant work when inputs haven't changed. Most build tools support caching mechanisms—Maven's local repository, npm's cache, Docker's layer caching—that Jenkins pipelines can leverage.
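A minimal sketch of reusing npm's cache across builds, assuming a persistent agent with the placeholder label `linux` and a hypothetical cache directory `/var/cache/jenkins/npm` that survives between builds. `--cache` and `--prefer-offline` are standard npm options; the Maven equivalent would point `-Dmaven.repo.local` at a similarly persistent directory.

```groovy
pipeline {
    agent { label 'linux' }   // placeholder label for a persistent agent
    environment {
        // Hypothetical directory that is not wiped between builds
        NPM_CACHE_DIR = '/var/cache/jenkins/npm'
    }
    stages {
        stage('Install Dependencies') {
            steps {
                // --prefer-offline uses cached package data without revalidating it against the registry
                sh 'npm ci --cache "$NPM_CACHE_DIR" --prefer-offline'
            }
        }
    }
}
```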

For Docker-based pipelines, layer caching significantly reduces build times, and multi-stage builds keep build tooling out of the final image. Structuring Dockerfiles to copy dependency manifests before application code ensures dependency layers are cached and reused when only application code changes:

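```dockerfile
# Build stage: full toolchain for compiling the application
FROM node:20 AS builder
WORKDIR /app
# Copy dependency manifests first (cached until they change)
COPY package*.json ./
RUN npm ci
# Copy application code (changes more frequently); a .dockerignore that
# excludes node_modules keeps a local copy from overwriting the installed one
COPY . .
RUN npm run build

# Runtime stage: smaller image with production dependencies only
FROM node:20-alpine
WORKDIR /app
ENV NODE_ENV=production
COPY package*.json ./
# Install dependencies on the same base image that runs them, so native
# modules are built for Alpine (musl) rather than Debian (glibc)
RUN npm ci --omit=dev
COPY --from=builder /app/dist ./dist
CMD ["node", "dist/server.js"]
```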
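Layer caching only helps if the cache is still there, which is not the case on ephemeral agents. A minimal sketch of keeping the cache in a registry instead, assuming the placeholder repository `registry.example.com/my-application`, an agent already logged in to it, and Docker Buildx installed. Registry cache export needs a builder that uses the `docker-container` driver, and `mode=max` exports cache for every stage, including the builder stage of a multi-stage Dockerfile.

```groovy
stage('Build and Push Image') {
    steps {
        // Reuse the 'ci-builder' builder if it exists on this agent, otherwise create it
        sh 'docker buildx use ci-builder || docker buildx create --name ci-builder --driver docker-container --use'
        sh '''
            docker buildx build \
              --cache-from type=registry,ref=registry.example.com/my-application:buildcache \
              --cache-to type=registry,ref=registry.example.com/my-application:buildcache,mode=max \
              -t registry.example.com/my-application:${BUILD_NUMBER} \
              --push \
              .
        '''
    }
}
```

On the very first build the cache reference does not exist yet; Buildx warns and builds from scratch.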

Implementing GitOps Practices with Jenkins

GitOps represents an operational model where infrastructure and application configuration are stored in Git repositories, with automated systems continuously reconciling actual state with desired state defined in Git. Jenkins can implement GitOps workflows by monitoring Git repositories for configuration changes and automatically applying those changes to target environments.

Declarative Configuration Management

GitOps requires that all configuration exists as code in version-controlled repositories. For Kubernetes deployments, this means storing Deployment, Service, ConfigMap, and other resource definitions in Git. For traditional infrastructure, infrastructure-as-code tools such as Terraform or CloudFormation, and configuration management tools such as Ansible, define the desired infrastructure state in version-controlled files.

Jenkins multibranch pipelines automatically create pipeline jobs for each branch in a repository, enabling teams to test configuration changes in isolated environments before merging to production branches. This workflow provides a natural progression from development to production, with each stage validated through automated testing:

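```groovy
pipeline {
    agent any
    stages {
        stage('Validate Configuration') {
            steps {
                // Client-side dry run: nothing is persisted; --dry-run=server would
                // additionally run server-side validation and admission checks
                sh 'kubectl apply --dry-run=client -f kubernetes/'
            }
        }
        stage('Deploy to Environment') {
            // Only the main branch deploys; other branches stop after validation
            when {
                branch 'main'
            }
            steps {
                sh 'kubectl apply -f kubernetes/'
                // Fail the build if the rollout does not complete within five minutes
                sh 'kubectl rollout status deployment/my-application --timeout=300s'
            }
        }
    }
}
```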

Continuous Reconciliation

True GitOps involves continuous reconciliation where automated systems detect configuration drift—differences between actual state and desired state—and automatically correct it. While Jenkins traditionally operates on a trigger-based model (builds execute when changes occur), scheduled pipelines can implement periodic reconciliation by reapplying configuration from Git regardless of whether changes occurred.

This approach ensures that manual changes to production environments are automatically reverted, maintaining consistency with the declared configuration in Git. For teams transitioning to GitOps, this reconciliation provides valuable visibility into configuration drift and helps identify cases where manual intervention occurs.
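A minimal sketch of scheduled reconciliation, assuming a pipeline job that tracks the production branch and manifests under `kubernetes/`. It relies on the documented exit codes of `kubectl diff` (0 when live state matches, 1 when it differs, greater than 1 on error) to make drift visible before reapplying; the 30-minute schedule is illustrative.

```groovy
pipeline {
    agent any
    triggers {
        // Run roughly every 30 minutes; 'H' spreads start times to avoid load spikes
        cron('H/30 * * * *')
    }
    stages {
        stage('Detect Drift') {
            steps {
                script {
                    def status = sh(script: 'kubectl diff -f kubernetes/', returnStatus: true)
                    if (status > 1) {
                        error('kubectl diff failed; cluster state could not be compared')
                    }
                    if (status == 1) {
                        // Mark the build so drift stands out in the job history
                        unstable('Configuration drift detected; reapplying manifests from Git')
                    }
                }
            }
        }
        stage('Reconcile') {
            steps {
                // Reapply regardless of the diff result, so Git remains the source of truth
                sh 'kubectl apply -f kubernetes/'
            }
        }
    }
}
```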

Advanced Pipeline Patterns and Techniques

Beyond basic deployment automation, advanced pipeline patterns address complex scenarios like multi-environment deployments, feature flag integration, and deployment orchestration across microservices architectures.

Shared Libraries for Reusable Pipeline Code

As organizations develop multiple pipelines, common patterns emerge that benefit from reusability. Jenkins Shared Libraries enable defining reusable pipeline code that multiple projects can consume, ensuring consistency and reducing duplication. Libraries contain Groovy code defining custom steps, complete pipeline templates, or utility functions that pipelines invoke.

Creating a shared library involves structuring code in a specific directory layout within a Git repository, then configuring Jenkins to load the library. Pipelines reference library functions using the @Library annotation:

```groovy
@Library('my-shared-library') _

pipeline {
    agent any
    stages {
        stage('Deploy') {
            steps {
                deployToKubernetes(
                    cluster: 'production',
                    namespace: 'default',
                    manifest: 'kubernetes/deployment.yaml'
                )
            }
        }
    }
}
```

Shared libraries promote best practices by encoding organizational standards into reusable components. Security scanning, deployment validation, notification formatting, and credential management can all be abstracted into library functions, ensuring consistent implementation across all pipelines.
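On the library side, a custom step such as `deployToKubernetes` lives in the library's `vars/` directory, where each file becomes a global step named after the file. The sketch below is one possible implementation, not the author's: it assumes one kubeconfig "Secret file" credential per cluster, named `kubeconfig-` followed by the cluster name (for example `kubeconfig-production`), and adds an optional, hypothetical `deployment` argument for rollout tracking.

```groovy
// vars/deployToKubernetes.groovy in the shared library repository
def call(Map config = [:]) {
    // Fail early with a clear message if a required argument is missing
    for (key in ['cluster', 'manifest']) {
        if (!config[key]) {
            error("deployToKubernetes: '${key}' is required")
        }
    }
    def namespace = config.namespace ?: 'default'

    // Assumed naming convention for per-cluster kubeconfig credentials
    withCredentials([file(credentialsId: "kubeconfig-${config.cluster}", variable: 'KUBECONFIG')]) {
        // kubectl picks up the KUBECONFIG environment variable set by withCredentials
        sh "kubectl apply --namespace '${namespace}' -f '${config.manifest}'"
        if (config.deployment) {
            // Optional: wait for the rollout and fail if it does not finish in time
            sh "kubectl rollout status deployment/${config.deployment} --namespace '${namespace}' --timeout=300s"
        }
    }
}
```

Centralizing the credential lookup this way means individual pipelines never handle kubeconfig files directly.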

Dynamic Pipeline Generation

For organizations managing many similar applications, manually creating pipelines for each becomes impractical. The Job DSL Plugin enables programmatically generating Jenkins jobs from code, allowing teams to define templates that automatically create pipelines for new applications. Combined with repository scanning, this approach can automatically provision CI/CD pipelines when new repositories are created, significantly reducing setup friction for new projects.
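A minimal Job DSL sketch of template-driven job creation. The application names and repository URLs are hypothetical; `pipelineJob` with a `cpsScm` definition is standard Job DSL syntax for a pipeline job that loads its `Jenkinsfile` from Git.

```groovy
// Hypothetical inventory; in practice this might be read from a file or a repository API
def applications = [
    [name: 'billing-service', repo: 'https://git.example.com/org/billing-service.git'],
    [name: 'orders-service',  repo: 'https://git.example.com/org/orders-service.git'],
]

applications.each { app ->
    pipelineJob(app.name) {
        description("Generated by Job DSL for ${app.name}")
        definition {
            cpsScm {
                scm {
                    git {
                        remote { url(app.repo) }
                        branch('main')
                    }
                }
                // Every generated job runs the Jenkinsfile from its own repository
                scriptPath('Jenkinsfile')
            }
        }
    }
}
```

A seed job typically runs such scripts, for example with the Job DSL plugin's `jobDsl targets: 'jobs/*.groovy'` step, so adding an entry to the inventory is enough to provision a new pipeline.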

Integration with Feature Flag Systems

Feature flags decouple deployment from release, enabling teams to deploy code to production while keeping features disabled until ready. Jenkins pipelines can integrate with feature flag systems like LaunchDarkly, Split, or custom solutions to automatically enable features after successful deployment and validation.

This integration enables sophisticated release strategies where features are gradually enabled for increasing percentages of users, with automatic rollback if metrics indicate problems. Pipelines call feature flag APIs to update flag states based on deployment stage and validation results:

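```groovy
stage('Enable Feature for 10% of Users') {
    steps {
        // 'launchdarkly-api-token' is a placeholder Jenkins secret-text credential
        withCredentials([string(credentialsId: 'launchdarkly-api-token', variable: 'LAUNCHDARKLY_API_KEY')]) {
            // Semantic patch: rollout weights are keyed by variation ID and sum to 100000.
            // The environment key and both variation IDs are placeholders.
            sh '''
                curl --fail -X PATCH "https://app.launchdarkly.com/api/v2/flags/default/my-feature" \
                  -H "Authorization: ${LAUNCHDARKLY_API_KEY}" \
                  -H "Content-Type: application/json; domain-model=launchdarkly.semanticpatch" \
                  -d '{"environmentKey": "production", "instructions": [{"kind": "updateFallthroughVariationOrRollout", "rolloutWeights": {"TRUE_VARIATION_ID": 10000, "FALSE_VARIATION_ID": 90000}}]}'
            '''
        }
        // Monitor for 10 minutes, then check metrics and decide whether to continue the rollout
        sleep time: 10, unit: 'MINUTES'
    }
}
```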
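The metrics check after the monitoring window might look like the following sketch. It assumes a Prometheus server at the hypothetical address `prometheus.example.internal`, a hypothetical `http_requests_total` metric labelled by `app` and `status`, and `curl` and `jq` on the agent; the Prometheus `/api/v1/query` endpoint is real, and the 1% threshold is illustrative.

```groovy
stage('Check Rollout Health') {
    steps {
        script {
            // Ratio of 5xx responses to all responses over the last 5 minutes
            def errorRate = sh(
                script: '''
                    curl --fail -s --get "http://prometheus.example.internal:9090/api/v1/query" \
                      --data-urlencode 'query=sum(rate(http_requests_total{app="my-application",status=~"5.."}[5m])) / sum(rate(http_requests_total{app="my-application"}[5m]))' \
                      | jq -r '.data.result[0].value[1] // "0"'
                ''',
                returnStdout: true
            ).trim()
            echo "Observed 5xx error rate: ${errorRate}"
            // An empty or unparsable result throws here, which also fails the stage
            if (Double.parseDouble(errorRate) > 0.01) {
                error("Error rate ${errorRate} exceeds 1%; halting rollout")
            }
        }
    }
}
```

Failing the stage stops the rollout from widening; a `post { failure { ... } }` block can then set the flag back to 0%.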

Troubleshooting Common Deployment Issues

Even well-designed deployment automation encounters issues. Understanding common problems and their solutions accelerates troubleshooting and minimizes downtime when deployments fail.

Connectivity and Authentication Failures

Deployment failures frequently stem from connectivity issues or authentication problems. When Jenkins cannot reach deployment targets, verify network connectivity from Jenkins agents to target systems, check firewall rules allowing required ports, and confirm credential validity. For cloud deployments, ensure service accounts or IAM roles have necessary permissions and haven't been inadvertently modified.

Jenkins credential expiration represents another common issue, particularly for credentials with automatic rotation policies. Implementing credential validation steps at the beginning of pipelines catches expired credentials before deployment attempts, providing clearer error messages and faster resolution.
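A minimal preflight sketch for a Kubernetes target, assuming a placeholder "Secret file" credential `prod-kubeconfig` and a placeholder namespace `my-namespace`. `kubectl auth can-i` exits non-zero when the API server is unreachable, the credential is rejected, or the permission is missing, so a single command covers connectivity, credential validity, and authorization.

```groovy
stage('Preflight Checks') {
    steps {
        withCredentials([file(credentialsId: 'prod-kubeconfig', variable: 'KUBECONFIG')]) {
            // Fails fast, with kubectl's own error message, before any deployment step runs
            sh 'kubectl auth can-i update deployments --namespace my-namespace --request-timeout=10s'
        }
    }
}
```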

Resource Exhaustion and Timeout Issues

Deployments may fail due to insufficient resources—disk space on deployment targets, memory constraints preventing application startup, or CPU limitations causing health checks to timeout. Monitoring resource utilization on deployment targets helps identify capacity issues before they cause failures.

Pipeline timeouts can occur when deployment operations take longer than expected. While increasing timeout values resolves immediate issues, investigating why operations are slow often reveals underlying problems like network latency, inefficient deployment procedures, or resource contention that warrant addressing.
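A minimal sketch of bounding deployment time with the built-in `timeout` and `retry` steps. `deploy.sh` is a hypothetical script, and the limits are illustrative rather than recommended values.

```groovy
pipeline {
    agent any
    options {
        // Upper bound for the whole run, so a hung step cannot hold an agent indefinitely
        timeout(time: 45, unit: 'MINUTES')
    }
    stages {
        stage('Deploy') {
            steps {
                // The whole block, including any retries, must finish within 10 minutes
                timeout(time: 10, unit: 'MINUTES') {
                    // Rerun on failure, up to 3 attempts in total
                    retry(3) {
                        sh './deploy.sh'
                    }
                }
            }
        }
    }
}
```

Because `retry` reruns the whole block, this is only safe when the deployment script is idempotent. A timeout that keeps firing is a cue to investigate the slow operation rather than simply raise the limit.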

Configuration Drift and Environment Inconsistencies

Applications may behave differently across environments due to configuration drift—subtle differences in environment configuration that accumulate over time. Implementing infrastructure as code and using configuration management tools ensures environment consistency, while automated validation scripts detect configuration differences before they cause deployment failures.

"The best deployment pipeline is one that makes the right thing the easy thing, where following best practices requires less effort than working around them."

Measuring Deployment Automation Success

Effective deployment automation should produce measurable improvements in development velocity, system reliability, and team confidence. Tracking key metrics quantifies automation value and identifies areas for further improvement.

Key Performance Indicators for Deployment Automation

Deployment frequency measures how often code reaches production. Increased deployment frequency indicates teams can deliver value more rapidly and respond to market demands more quickly. Mature organizations deploy multiple times per day, while teams beginning automation journeys might initially deploy weekly or monthly.

Lead time for changes tracks time from code commit to production deployment. Shorter lead times enable faster feedback cycles and reduce the time between identifying problems and deploying fixes. Automation significantly reduces lead time by eliminating manual handoffs and wait times.

Change failure rate indicates the percentage of deployments causing production incidents requiring remediation. While automation alone doesn't guarantee quality, effective automated testing and validation reduce failure rates by catching issues before production deployment.

Mean time to recovery (MTTR) measures how quickly teams restore service after incidents. Automated rollback capabilities and comprehensive monitoring significantly reduce MTTR by enabling rapid response to deployment-related issues.
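Written out, the four metrics are simple ratios and differences over a reporting window. The notation here is introduced for illustration: $T$ is the window length, $N_{\text{deploy}}$ the number of production deployments in it, $N_{\text{failed}}$ those that caused an incident requiring remediation, and $N_{\text{inc}}$ the number of incidents.

```latex
\text{Deployment frequency} = \frac{N_{\text{deploy}}}{T},
\qquad
\text{Change failure rate} = \frac{N_{\text{failed}}}{N_{\text{deploy}}} \times 100\%

\text{Lead time}_j = t^{\text{deploy}}_j - t^{\text{commit}}_j,
\qquad
\text{MTTR} = \frac{1}{N_{\text{inc}}} \sum_{i=1}^{N_{\text{inc}}} \left( t^{\text{restored}}_i - t^{\text{start}}_i \right)
```

Per-change lead times are usually summarized across changes, for example as a median. As a worked example, 60 deployments in a 30-day window is 2 deployments per day, and if 3 of them required remediation the change failure rate is 3/60 = 5%.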

Continuous Improvement Through Metrics

Regularly reviewing these metrics with development teams identifies trends and opportunities for improvement. Increasing failure rates might indicate insufficient test coverage or inadequate staging environment validation. Lengthening lead times could suggest pipeline performance issues or approval bottlenecks requiring attention.

Jenkins provides plugins for tracking and visualizing these metrics, with the Build Metrics Plugin and Prometheus Plugin enabling integration with monitoring and visualization platforms. Displaying metrics prominently encourages teams to treat deployment pipeline performance as an important aspect of overall system health.

Future Trends in Deployment Automation

Deployment automation continues evolving as new technologies and practices emerge. Understanding emerging trends helps organizations prepare for future requirements and evaluate whether current automation strategies will scale to meet future needs.

Progressive Delivery and Observability Integration

Progressive delivery extends beyond simple canary deployments to incorporate comprehensive observability and automated decision-making. Future deployment systems will increasingly integrate with observability platforms, automatically analyzing metrics, traces, and logs to make deployment decisions without human intervention. Deployments will automatically pause or roll back based on anomaly detection rather than requiring explicit validation criteria.

AI-Assisted Deployment Optimization

Machine learning models will analyze historical deployment data to predict optimal deployment timing, identify likely failure causes before they occur, and recommend pipeline optimizations. These systems will learn from past deployments to continuously improve automation effectiveness without explicit programming.

Service Mesh Integration

As service mesh technologies like Istio and Linkerd mature, deployment automation will increasingly leverage service mesh capabilities for traffic management, observability, and security. Deployment pipelines will configure service mesh routing rules to implement sophisticated deployment strategies without requiring application code changes.

How do I handle database migrations in automated deployments?

Database migrations require careful coordination with application deployments. Implement backward-compatible migrations that work with both old and new application versions, deploy migrations separately from application code, and use tools like Flyway or Liquibase for version-controlled migration management. Jenkins pipelines can execute migration scripts as a deployment stage, with validation steps ensuring successful migration before proceeding with application deployment.
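A minimal sketch of a Flyway migration stage, assuming the Flyway CLI is installed on the agent, migrations live in `db/migration`, and the JDBC URL and the credential ID `production-db-migrator` are placeholders. Flyway reads the `FLYWAY_*` environment variables, which keeps the password off the command line.

```groovy
stage('Database Migration') {
    environment {
        FLYWAY_URL = 'jdbc:postgresql://db.example.internal:5432/my_application'   // placeholder
        FLYWAY_LOCATIONS = 'filesystem:db/migration'
    }
    steps {
        withCredentials([usernamePassword(credentialsId: 'production-db-migrator',
                                          usernameVariable: 'FLYWAY_USER',
                                          passwordVariable: 'FLYWAY_PASSWORD')]) {
            // Show which migrations are pending before changing anything
            sh 'flyway info'
            // migrate validates already-applied migrations first, then applies pending ones
            sh 'flyway migrate'
            // Record the resulting schema version in the build log
            sh 'flyway info'
        }
    }
}
```

Placing this stage before the application deployment stage means a failed migration stops the pipeline before new code that depends on it is rolled out.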

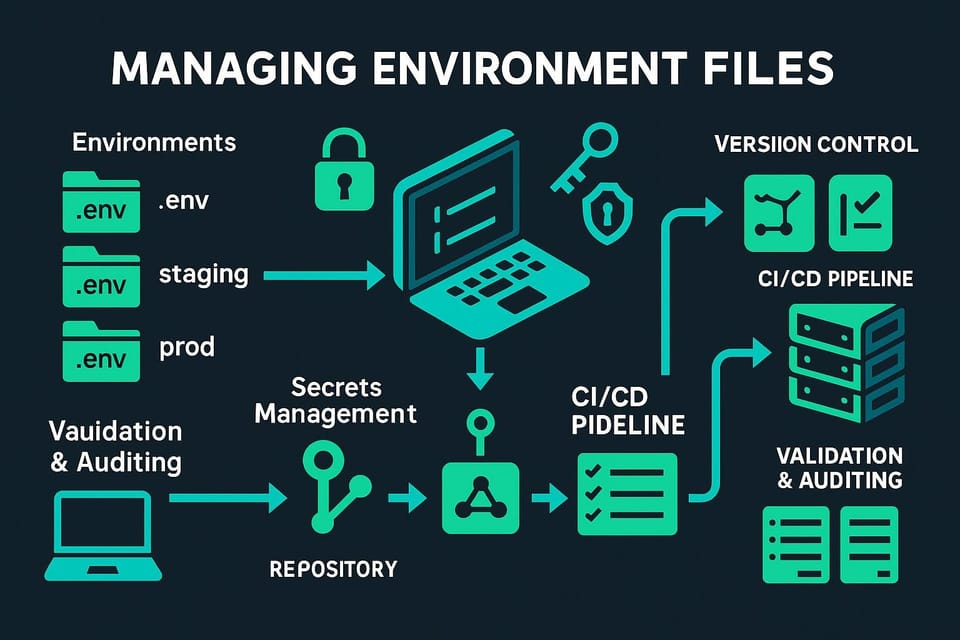

What's the best way to manage environment-specific configuration?

Store environment-specific configuration outside application code using environment variables, configuration management systems, or cloud provider parameter stores. Jenkins pipelines inject appropriate configuration during deployment based on target environment. Avoid storing sensitive configuration in Git repositories, instead retrieving secrets from Jenkins credentials or external secret management systems during deployment.
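A minimal sketch of injecting environment-specific settings at deploy time. It assumes non-secret settings live in per-environment files such as `config/staging.env` that are safe to commit, secrets live in Jenkins credentials whose IDs follow a hypothetical `db-password-` plus environment-name pattern, and `deploy.sh` is a hypothetical script that reads both.

```groovy
pipeline {
    agent any
    parameters {
        choice(name: 'TARGET_ENV', choices: ['staging', 'production'], description: 'Environment to deploy to')
    }
    stages {
        stage('Deploy') {
            steps {
                // The secret is exposed to deploy.sh only as the DB_PASSWORD environment variable
                withCredentials([string(credentialsId: "db-password-${params.TARGET_ENV}", variable: 'DB_PASSWORD')]) {
                    // TARGET_ENV comes from a fixed choice list, so interpolating it into the command is safe
                    sh "./deploy.sh --config config/${params.TARGET_ENV}.env"
                }
            }
        }
    }
}
```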

How can I implement approval gates without slowing down deployments?

Use automated validation as much as possible, reserving manual approvals only for production deployments or high-risk changes. Implement time-based auto-approval where changes automatically proceed if not explicitly rejected within a timeframe. Consider using deployment windows where pre-approved changes automatically deploy during designated low-risk periods.
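A minimal sketch of a production-only approval gate using declarative syntax; `release-managers` and `deploy.sh` are placeholders. Stage `options` are applied before the `input` prompt, so the timeout bounds the wait. In this form an unanswered prompt aborts the build rather than approving it; time-based auto-approval would need additional scripting.

```groovy
stage('Deploy to Production') {
    when {
        beforeInput true   // evaluate the branch condition first, so other branches never prompt
        branch 'main'
    }
    options {
        timeout(time: 1, unit: 'HOURS')
    }
    input {
        message 'Deploy this build to production?'
        ok 'Deploy'
        submitter 'release-managers'   // placeholder user or group allowed to approve
    }
    steps {
        sh './deploy.sh production'
    }
}
```

If the pipeline declares a top-level agent, the pending prompt holds that executor; declaring `agent none` at the top level and agents per stage avoids this.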

Should I use scripted or declarative pipeline syntax?

Declarative syntax is recommended for most use cases due to its structured format, better error handling, and easier learning curve. Use scripted syntax only when you need complex conditional logic or dynamic behavior that declarative syntax cannot express. Many organizations use declarative syntax for standard pipelines while maintaining a few scripted pipelines for special cases.
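For comparison, here is the same small deployment loop in both styles, shown as two separate Jenkinsfiles. The region list and `deploy.sh` are placeholders. The declarative version keeps its fixed structure and confines the dynamic part to a `script` block.

```groovy
// Declarative Jenkinsfile
pipeline {
    agent any
    stages {
        stage('Deploy Regions') {
            steps {
                script {
                    for (region in ['us-east-1', 'eu-west-1']) {
                        sh "./deploy.sh ${region}"
                    }
                }
            }
        }
    }
}

// Scripted Jenkinsfile with the same behavior
node {
    stage('Deploy Regions') {
        checkout scm   // scripted pipelines do not check out the source automatically
        for (region in ['us-east-1', 'eu-west-1']) {
            sh "./deploy.sh ${region}"
        }
    }
}
```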

How do I test deployment pipelines without affecting production?

Create dedicated test environments that mirror production architecture, use feature branches to test pipeline changes before merging to main branches, and implement dry-run modes where deployment commands are logged but not executed. Jenkins multibranch pipelines automatically create isolated pipeline instances for each branch, enabling safe testing of pipeline modifications.
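A minimal sketch of a dry-run mode for a Kubernetes deployment in a multibranch pipeline. The `DRY_RUN` parameter name is hypothetical; `--dry-run=server` is a real `kubectl apply` option that validates against the cluster without persisting changes.

```groovy
pipeline {
    agent any
    parameters {
        booleanParam(name: 'DRY_RUN', defaultValue: true, description: 'Validate deployment commands without changing the cluster')
    }
    stages {
        stage('Deploy') {
            steps {
                script {
                    // A missing parameter counts as a dry run, and non-main branches always dry-run
                    def dryRun = (params.DRY_RUN != false) || env.BRANCH_NAME != 'main'
                    def dryRunFlag = dryRun ? '--dry-run=server' : ''
                    echo "Dry run: ${dryRun}"
                    sh "kubectl apply ${dryRunFlag} -f kubernetes/"
                }
            }
        }
    }
}
```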

What's the recommended approach for deploying microservices?

Implement separate pipelines for each microservice to enable independent deployment cadences, use container orchestration platforms like Kubernetes for consistent deployment mechanisms, and implement service versioning strategies that enable backward compatibility. Consider using deployment orchestration tools that coordinate multi-service deployments when changes span multiple services.
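When one change spans several services, a small orchestration pipeline can trigger the per-service pipelines in dependency order. A minimal sketch, assuming hypothetical multibranch jobs `orders-service` and `billing-service` that each accept a `VERSION` parameter, where the provider must be deployed before its consumer.

```groovy
pipeline {
    agent none   // the orchestrator only triggers other jobs, so it needs no executor of its own
    parameters {
        string(name: 'RELEASE_VERSION', description: 'Version to deploy across services')
    }
    stages {
        stage('Deploy Provider Service') {
            steps {
                // wait: true blocks until the downstream build finishes; its failure fails this build
                build job: 'orders-service/main', wait: true,
                      parameters: [string(name: 'VERSION', value: params.RELEASE_VERSION)]
            }
        }
        stage('Deploy Consumer Service') {
            steps {
                build job: 'billing-service/main', wait: true,
                      parameters: [string(name: 'VERSION', value: params.RELEASE_VERSION)]
            }
        }
    }
}
```

Each service keeps its own pipeline and cadence; the orchestrator is only used for the occasional coordinated release.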