How to Build a Complete CI/CD Pipeline from Scratch

In today's fast-paced software development landscape, the ability to deliver high-quality code quickly and reliably has become a fundamental requirement rather than a competitive advantage. Organizations that fail to automate their software delivery processes find themselves struggling with manual errors, deployment anxiety, and an inability to respond to market demands with the agility their business requires. The pressure to release features faster while maintaining stability creates a tension that can only be resolved through systematic automation.

A Continuous Integration and Continuous Deployment pipeline represents a structured approach to automating the journey of code from a developer's workstation to production environments. This automated pathway encompasses everything from initial code commits through testing, security scanning, artifact creation, and ultimately deployment across various environments. By establishing this automated workflow, teams eliminate manual intervention points that introduce delays and errors, while simultaneously creating a repeatable, auditable process that enhances both speed and reliability.

This guide covers the foundational concepts behind effective pipeline architecture, the tools and technologies that power modern automation workflows, and the practical steps for implementing each stage of the pipeline. Whether you're building your first automated deployment system or refining an existing one, you'll find guidance on tool selection, configuration strategies, security integration, monitoring approaches, and optimization techniques.

Understanding the Foundation of Pipeline Architecture

Before diving into implementation details, establishing a clear understanding of what constitutes a pipeline and why its architecture matters sets the stage for making informed decisions throughout the build process. A pipeline isn't merely a collection of scripts strung together; it represents a carefully designed system with distinct stages, each serving specific purposes and adhering to particular principles.

The fundamental architecture of any deployment automation system consists of several interconnected stages that code must pass through before reaching production. These stages typically include source control integration, build processes, automated testing at multiple levels, artifact management, deployment to various environments, and post-deployment validation. Each stage acts as a quality gate, ensuring that only code meeting specific criteria progresses forward.

"The goal isn't just automation for automation's sake—it's about creating a reliable, repeatable process that gives teams confidence to deploy frequently without fear."

Core Principles That Guide Effective Pipeline Design

Several guiding principles distinguish well-designed pipelines from those that create more problems than they solve. Fail-fast mechanisms ensure that issues are detected as early as possible in the pipeline, minimizing wasted resources on builds that will ultimately fail. This principle dictates that quick, inexpensive checks should run before slower, more resource-intensive operations.
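As a minimal sketch of the fail-fast ordering, the following Python snippet sorts stages by estimated cost and stops at the first failure. The stage names, durations, and the `run_fail_fast` helper are illustrative assumptions, not part of any particular CI platform.

```python
from typing import Callable, List, Tuple

# Each stage is (name, estimated_seconds, check). All values are illustrative.
Stage = Tuple[str, float, Callable[[], bool]]


def run_fail_fast(stages: List[Stage]) -> List[str]:
    """Run stages cheapest-first and stop at the first failure.

    Returns the names of the stages that actually ran.
    """
    executed = []
    for name, _cost, check in sorted(stages, key=lambda stage: stage[1]):
        executed.append(name)
        if not check():
            break  # skip the slower, more expensive stages
    return executed


example_stages = [
    ("integration-tests", 600, lambda: True),
    ("lint", 10, lambda: True),
    ("unit-tests", 60, lambda: False),  # simulated failure
]
```

With `example_stages`, the lint check runs first, the unit tests fail second, and the ten-minute integration suite never starts.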

The concept of pipeline as code has become increasingly important, treating pipeline definitions with the same rigor as application code. This approach stores pipeline configurations in version control, enables code review processes for pipeline changes, and allows teams to track the evolution of their deployment processes over time. When pipelines are defined as code, they become reproducible, testable, and subject to the same quality controls as the applications they deploy.

Idempotency represents another crucial principle—the ability to run pipeline stages multiple times with the same inputs and achieve identical outcomes. This characteristic proves invaluable when troubleshooting issues or recovering from partial failures, as stages can be safely re-executed without creating inconsistent states or duplicate resources.
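One common way to get idempotent stages is to express each step as "ensure the desired state exists" rather than "create the thing". The sketch below illustrates the idea with an in-memory dictionary standing in for a real cloud API; `ensure_bucket` and its parameters are hypothetical names chosen for the example.

```python
from typing import Dict


def ensure_bucket(existing: Dict[str, dict], name: str, region: str) -> bool:
    """Make sure a bucket with the desired settings exists.

    `existing` is an in-memory stand-in for the provider's current state.
    Returns True if anything changed, False if the state already matched.
    """
    desired = {"region": region}
    if existing.get(name) == desired:
        return False  # already in the desired state, nothing to do
    existing[name] = desired
    return True
```

Running the step twice with the same inputs leaves the state unchanged after the first call, which is what makes a retry after a partial failure safe.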

Selecting the Right Tools for Your Technology Stack

The ecosystem of tools available for building deployment automation has expanded dramatically, offering options that range from self-hosted solutions to fully managed cloud services. The choice between platforms like Jenkins, GitLab CI/CD, GitHub Actions, CircleCI, Azure DevOps, or AWS CodePipeline depends on multiple factors including existing infrastructure, team expertise, budget constraints, and specific technical requirements.

| Tool Category | Purpose | Popular Options | Key Considerations |
|---|---|---|---|
| Pipeline Orchestration | Coordinates workflow execution across stages | Jenkins, GitLab CI, GitHub Actions, Azure Pipelines | Integration capabilities, learning curve, maintenance overhead |
| Source Control | Manages code repositories and triggers pipeline runs | GitHub, GitLab, Bitbucket, Azure Repos | Webhook support, branching strategies, access controls |
| Build Tools | Compiles code and creates deployable artifacts | Maven, Gradle, npm, pip, Docker | Language compatibility, dependency management, caching |
| Testing Frameworks | Validates code quality and functionality | JUnit, pytest, Jest, Selenium, JMeter | Test types supported, reporting capabilities, parallel execution |
| Artifact Repositories | Stores build outputs and dependencies | Artifactory, Nexus, Docker Hub, ECR | Storage costs, versioning, security scanning |
| Deployment Targets | Hosts the running application | Kubernetes, ECS, Lambda, VMs, App Services | Scalability, cost model, operational complexity |

When evaluating tools, consider not only their individual capabilities but how they integrate with one another. A Jenkins pipeline might excel at complex orchestration but requires additional configuration to work smoothly with cloud-native artifact repositories. Conversely, GitHub Actions provides seamless integration with GitHub repositories but may require creative solutions for complex deployment scenarios involving multiple environments and approval gates.

Implementing Source Control Integration and Triggering Mechanisms

The journey of automated deployment begins the moment a developer commits code to a repository. Establishing robust integration between source control systems and pipeline orchestration tools creates the foundation upon which all subsequent automation builds. This integration determines not only when pipelines execute but also what context and metadata they receive about the changes being processed.

Webhook Configuration and Event Filtering

Modern source control platforms communicate with external systems through webhooks—HTTP callbacks that fire when specific events occur within repositories. Configuring these webhooks requires careful consideration of which events should trigger pipeline execution. While it might seem logical to run pipelines on every possible event, this approach quickly becomes wasteful and creates noise that obscures meaningful signals.

📌 Push events to specific branches typically represent the primary trigger for pipeline execution, initiating builds whenever code is committed to branches like main, develop, or feature branches following specific naming conventions.

📌 Pull request events enable pipelines to validate proposed changes before they merge, providing immediate feedback to developers about whether their code meets quality standards and passes all automated checks.

📌 Tag creation events often trigger release-specific pipelines that handle versioning, changelog generation, and deployment to production environments, distinguishing these special builds from routine development activities.

The webhook payload contains valuable metadata including commit messages, author information, changed file paths, and branch details. Sophisticated pipelines parse this information to make intelligent decisions about what stages to execute. For instance, if a commit only modifies documentation files, the pipeline might skip compilation and testing stages entirely, proceeding directly to deploying updated documentation.
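A rough sketch of that decision, assuming the pipeline has already extracted the list of changed file paths from the webhook payload. The documentation suffixes, the `docs` directory name, and the stage names are assumptions made for illustration.

```python
from pathlib import PurePosixPath
from typing import Iterable, List

DOC_SUFFIXES = {".md", ".rst", ".txt"}  # assumed documentation file types
DOC_DIRS = {"docs"}                     # assumed documentation directory


def is_docs_path(path: str) -> bool:
    """Return True if the path looks like documentation."""
    p = PurePosixPath(path)
    in_docs_dir = len(p.parts) > 1 and p.parts[0] in DOC_DIRS
    return in_docs_dir or p.suffix.lower() in DOC_SUFFIXES


def select_stages(changed_paths: Iterable[str]) -> List[str]:
    """Skip build and test stages when only documentation changed."""
    paths = list(changed_paths)
    if paths and all(is_docs_path(p) for p in paths):
        return ["deploy-docs"]
    return ["build", "test", "package"]
```

An empty change list falls through to the full pipeline, which is the conservative default when the payload is missing or could not be parsed.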

Branch Strategy and Pipeline Behavior

The branching strategy adopted by a development team profoundly influences pipeline design. Teams following Git Flow maintain separate pipelines for feature branches, develop, release branches, and main, with each branch type triggering different pipeline behaviors. Feature branches might run only unit tests and code quality checks, while the develop branch triggers integration testing, and main branch commits initiate full deployment sequences.
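The branch-to-behavior mapping described above can be sketched as a small rule table. The branch patterns and stage names here are assumptions chosen to mirror the Git Flow example; a real pipeline would express the same rules in its platform's own configuration syntax.

```python
from fnmatch import fnmatchcase
from typing import List

# Ordered rules: the first matching pattern wins. All names are illustrative.
BRANCH_RULES = [
    ("main", ["unit-tests", "code-quality", "integration-tests", "deploy"]),
    ("develop", ["unit-tests", "code-quality", "integration-tests"]),
    ("feature/*", ["unit-tests", "code-quality"]),
]


def stages_for_branch(branch: str) -> List[str]:
    """Return the stages to run for a branch, or an empty list if no rule matches."""
    for pattern, stages in BRANCH_RULES:
        if fnmatchcase(branch, pattern):
            return stages
    return []
```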

"Your branching strategy and pipeline configuration must align perfectly—mismatches between what developers expect and what actually happens create confusion and erode trust in the automation."

Trunk-based development, where developers commit frequently to a single main branch, requires pipelines optimized for speed and rapid feedback. These pipelines must complete quickly enough that developers receive results before context-switching to other work, typically targeting execution times under ten minutes for the critical path.

Building and Packaging Application Artifacts

Once source control integration triggers pipeline execution, the build stage transforms source code into deployable artifacts. This transformation encompasses dependency resolution, compilation, asset processing, and packaging—all steps that must occur reliably and reproducibly regardless of where the pipeline executes.

Containerization and Build Environments

The shift toward containerized builds has changed how teams approach the build stage. By executing build processes inside Docker containers, pipelines achieve consistency across different execution environments. When a developer's laptop and the pipeline run the build in the same container image, both use the same toolchain and dependency versions, which removes most causes of the classic "works on my machine" problem.

Creating effective build containers requires balancing several competing concerns. Smaller images reduce transfer times and storage costs but may lack tools needed for complex build processes. Multi-stage builds provide an elegant solution, using feature-rich images for compilation while producing minimal final artifacts that contain only runtime dependencies.

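```dockerfile
# Build stage: full image with the toolchain needed to compile the app
FROM node:16 AS builder
WORKDIR /app
COPY package*.json ./
# Install all dependencies, including the devDependencies the build needs
RUN npm ci
COPY . .
RUN npm run build
# Drop devDependencies so only runtime packages are copied forward
RUN npm prune --omit=dev

# Runtime stage: minimal image with only the compiled output
FROM node:16-alpine
WORKDIR /app
COPY --from=builder /app/dist ./dist
COPY --from=builder /app/node_modules ./node_modules
EXPOSE 3000
CMD ["node", "dist/server.js"]
```

This example demonstrates a multi-stage build where the first stage uses a full Node.js image to install dependencies and compile the application, then prunes development dependencies. The second stage uses a minimal Alpine-based image and copies only the compiled output and production dependencies. The resulting image contains everything needed to run the application but none of the development tooling required during the build. Copying node_modules from a Debian-based stage into an Alpine stage is safe only when the dependencies are pure JavaScript; packages with native addons need both stages built on the same base image.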

Dependency Management and Caching Strategies

Downloading dependencies represents one of the most time-consuming aspects of build processes. A Node.js application might download hundreds of packages, a Java application could pull dozens of Maven dependencies, and a Python application needs its pip packages—all of which takes time and bandwidth.

Implementing effective caching strategies dramatically improves build performance. Most pipeline platforms offer mechanisms to cache directories between pipeline runs, preserving downloaded dependencies so subsequent builds can reuse them. The key lies in configuring cache keys that invalidate when dependency definitions change but persist when only application code changes.

🔧 For Node.js projects, cache npm's download cache (typically ~/.npm), keyed to the hash of package-lock.json, so it refreshes only when dependencies actually change. Caching node_modules itself gives no benefit with npm ci, which deletes that directory before installing.

🔧 For Maven projects, cache the ~/.m2/repository directory, keyed to a hash of pom.xml, preserving downloaded JAR files across builds.

🔧 Python projects benefit from caching the pip cache directory, typically ~/.cache/pip, keyed to a hash of requirements.txt, so previously downloaded packages are reused between runs.
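Most platforms compute such keys for you, but the underlying idea is simple enough to sketch: derive the key from a hash of the dependency definition so that it changes only when that file changes. The `cache_key` helper and the prefix format are assumptions for illustration.

```python
import hashlib


def cache_key(prefix: str, lockfile_bytes: bytes) -> str:
    """Build a cache key that changes only when the lockfile content changes."""
    digest = hashlib.sha256(lockfile_bytes).hexdigest()[:16]
    return f"{prefix}-{digest}"


def cache_key_for_file(prefix: str, lockfile_path: str) -> str:
    """Convenience wrapper that reads the lockfile from disk."""
    with open(lockfile_path, "rb") as handle:
        return cache_key(prefix, handle.read())
```

Edits to application code leave the key untouched, so the cached dependencies are restored; any change to the lockfile produces a new key and a fresh download.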

Beyond simple directory caching, some teams maintain internal artifact repositories that mirror public package registries. These proxies not only improve build speed by keeping dependencies geographically close to build agents but also provide insulation against upstream registry outages and enable security scanning of dependencies before they enter the build environment.

Implementing Comprehensive Testing Strategies

Testing represents the heart of any deployment pipeline, serving as the primary mechanism for validating that code changes haven't introduced regressions or broken existing functionality. A well-designed testing strategy incorporates multiple levels of testing, each providing different types of feedback at different speeds and costs.

The Testing Pyramid in Practice

The testing pyramid concept suggests that teams should have many fast, focused unit tests at the base, fewer integration tests in the middle, and a small number of slow, comprehensive end-to-end tests at the top. This distribution reflects the trade-offs between test speed, maintenance burden, and the scope of functionality validated.

Unit tests validate individual functions or classes in isolation, typically executing in milliseconds and providing precise feedback about what broke when failures occur. Pipelines should run the complete unit test suite on every commit, as their speed makes them ideal for rapid feedback. A typical pipeline might execute thousands of unit tests in under a minute, providing developers with immediate confirmation that their changes haven't broken existing functionality.

Integration tests verify that different components of the application work correctly together, often requiring external dependencies like databases, message queues, or third-party APIs. These tests run slower than unit tests and require more sophisticated setup, but they catch issues that unit tests miss—problems that emerge from the interactions between components rather than within individual components.

End-to-end tests simulate real user interactions with the complete application, validating entire workflows from the user interface through backend services and data storage. These tests provide the highest confidence that the application works as users will experience it, but they're also the slowest to execute and the most brittle, often failing due to timing issues or environment inconsistencies rather than actual application bugs.

"The goal isn't to catch every possible bug through automated testing—it's to catch the most likely bugs as quickly and cheaply as possible, reserving expensive testing approaches for scenarios where they provide unique value."

Parallel Test Execution and Optimization

As test suites grow, execution time becomes a bottleneck that slows feedback and reduces the value of automation. Parallelizing test execution across multiple agents or containers can dramatically reduce total runtime, but this approach requires careful consideration of test isolation and resource contention.

Tests that modify shared state—writing to databases, creating files, or altering global configuration—often fail when run in parallel because multiple test instances interfere with each other. Designing tests to be truly independent, either through test data isolation strategies or by using in-memory implementations of external dependencies, enables safe parallel execution.

Many testing frameworks provide built-in support for parallel execution, but pipelines can also parallelize at a higher level by distributing different test suites across multiple pipeline jobs. One job might run unit tests while another executes integration tests and a third performs security scans, with all three running simultaneously and the pipeline proceeding only when all have completed successfully.
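The job-level fan-out described above can be sketched with a thread pool: each job runs concurrently, and the pipeline proceeds only if every job reports success. The job names and the callables standing in for real test runners are assumptions.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict


def run_jobs_in_parallel(jobs: Dict[str, Callable[[], bool]]) -> Dict[str, bool]:
    """Run every job concurrently and collect each job's pass/fail result."""
    with ThreadPoolExecutor(max_workers=max(1, len(jobs))) as pool:
        futures = {name: pool.submit(job) for name, job in jobs.items()}
        return {name: future.result() for name, future in futures.items()}


def pipeline_may_proceed(results: Dict[str, bool]) -> bool:
    """The pipeline continues only when all parallel jobs succeeded."""
    return all(results.values())
```

In a real pipeline each callable would be a separate agent or container rather than a thread, but the join-then-decide structure is the same.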

| Test Type | Typical Execution Time | Pipeline Stage | Failure Impact |
|---|---|---|---|
| Unit Tests | 30 seconds - 2 minutes | Every commit, all branches | Immediate pipeline failure, prevents further stages |
| Integration Tests | 2 - 10 minutes | Main branches, pull requests | Blocks merge, requires investigation before retry |
| End-to-End Tests | 10 - 30 minutes | Pre-deployment, scheduled runs | Blocks deployment, may indicate environment issues |
| Performance Tests | 15 - 60 minutes | Scheduled, release candidates | Warning only, requires analysis of regression severity |
| Security Scans | 5 - 15 minutes | Every commit, dependency updates | Severity-based: critical findings block, others warn |

Security Scanning and Compliance Integration

Security cannot be an afterthought bolted onto the end of the deployment process—it must be woven throughout the pipeline, with automated checks at multiple stages catching different categories of vulnerabilities. Modern pipelines integrate security scanning as a standard component, treating security issues with the same urgency as functional bugs.

Static Application Security Testing

Static analysis examines source code without executing it, identifying potential security vulnerabilities, code quality issues, and violations of coding standards. Tools like SonarQube, Checkmarx, or language-specific linters scan codebases for patterns known to indicate security problems—SQL injection vulnerabilities, cross-site scripting risks, insecure cryptographic implementations, and hardcoded credentials.

Integrating static analysis into pipelines requires configuring quality gates that define which findings should fail the build. Not all identified issues warrant blocking deployment—teams must establish policies that distinguish between critical vulnerabilities requiring immediate remediation and lower-severity findings that can be addressed in subsequent iterations.

⚡ Critical severity findings like SQL injection vulnerabilities or exposed credentials should immediately fail the pipeline, preventing any possibility of deploying vulnerable code.

⚡ High severity issues might block deployment to production while allowing deployment to development environments, giving teams time to address problems without completely halting progress.

⚡ Medium and low severity findings typically generate warnings and create tracking tickets but don't block deployment, preventing security tools from becoming obstacles that teams route around.
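The severity policy above can be captured in a few lines. This is a sketch of one possible encoding; the severity labels, environment names, and the `gate_decision` function are assumptions, and real scanners each have their own way of configuring quality gates.

```python
def gate_decision(severity: str, environment: str) -> str:
    """Return "block" or "warn" for a finding, given the target environment."""
    level = severity.lower()
    if level == "critical":
        return "block"  # never deploy, regardless of environment
    if level == "high":
        # Block production, but let lower environments continue
        return "block" if environment == "production" else "warn"
    return "warn"  # medium and low: record a ticket, do not block
```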

Dependency Vulnerability Scanning

Modern applications depend on hundreds or thousands of third-party libraries, each potentially containing security vulnerabilities. Dependency scanning tools compare project dependencies against databases of known vulnerabilities, alerting teams when they're using library versions with published security issues.

Tools like Snyk, Mend (formerly WhiteSource), or GitHub's Dependabot continuously monitor dependencies and can automatically create pull requests that update vulnerable libraries to patched versions. Pipelines should fail when dependencies have critical vulnerabilities with available fixes, forcing teams to address security issues before deployment rather than accumulating security debt.

"Security scanning in pipelines isn't about achieving perfect security—it's about ensuring that known, preventable vulnerabilities don't make it to production while maintaining enough flexibility that security tools enable rather than obstruct development velocity."

Container Image Scanning

When applications deploy as container images, the security scope extends beyond application code and dependencies to include the base operating system and all installed packages within the container. Container scanning tools analyze images layer by layer, identifying vulnerabilities in OS packages, application dependencies, and configuration issues that might expose security risks.

Services like Amazon ECR, Azure Container Registry, and Docker Hub offer integrated scanning, automatically analyzing pushed images and providing vulnerability reports. Pipelines should incorporate these scans as a quality gate before deploying images to production, with policies that prevent deployment of images containing critical vulnerabilities.

Artifact Management and Versioning

Successfully built and tested artifacts require proper storage, versioning, and metadata management to enable reliable deployments and rollbacks. Artifact repositories serve as the bridge between build processes and deployment stages, providing a centralized location where immutable build outputs reside with complete traceability back to their source.

Immutable Artifacts and Build Once, Deploy Many

A fundamental principle of reliable deployment automation holds that artifacts should be built exactly once and then deployed to all environments without modification. This "build once, deploy many" approach ensures that the exact same artifact that passed testing in lower environments gets promoted to production, so environment-specific rebuilds cannot introduce differences between what was tested and what is released.

Achieving true immutability requires externalizing environment-specific configuration from artifacts. Rather than building separate artifacts for development, staging, and production environments, pipelines build a single artifact that accepts configuration through environment variables, configuration files mounted at runtime, or configuration management systems. This separation allows the same Docker image, JAR file, or deployment package to run in any environment with appropriate configuration.
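On the application side, externalized configuration can look like the sketch below, which reads settings from environment variables at startup. The variable names `DATABASE_URL` and `LOG_LEVEL` and the `AppConfig` class are assumptions chosen for the example.

```python
import os
from dataclasses import dataclass
from typing import Mapping


@dataclass(frozen=True)
class AppConfig:
    database_url: str
    log_level: str


def load_config(env: Mapping[str, str] = os.environ) -> AppConfig:
    """Build the configuration from the environment, not from the artifact."""
    if "DATABASE_URL" not in env:
        # Fail at startup rather than at first use
        raise RuntimeError("DATABASE_URL must be set for this environment")
    return AppConfig(
        database_url=env["DATABASE_URL"],
        log_level=env.get("LOG_LEVEL", "INFO"),
    )
```

The same image can then run in staging and production; only the injected environment differs.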

Semantic Versioning and Artifact Tagging

Establishing a clear versioning scheme for artifacts provides essential traceability and enables automated decision-making within pipelines. Semantic versioning (major.minor.patch) offers a widely adopted standard that conveys meaning about the nature of changes—major version increments indicate breaking changes, minor versions add functionality in a backward-compatible manner, and patch versions represent bug fixes.

Beyond version numbers, artifacts benefit from rich metadata tags that capture build context. Git commit SHA, build timestamp, pipeline run identifier, and branch name all provide valuable information for troubleshooting and auditing. Container images particularly benefit from multiple tags—a single image might be tagged with its semantic version (1.2.3), its commit SHA (abc123), and a mutable tag indicating its role (latest, stable).
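A short sketch of both ideas: bumping a semantic version and deriving the set of tags for one image. The helper names, the seven-character SHA prefix, and the example registry path are assumptions for illustration.

```python
import re
from typing import List

SEMVER = re.compile(r"^(\d+)\.(\d+)\.(\d+)$")


def bump(version: str, change: str) -> str:
    """Return the next version for a "major", "minor", or "patch" change."""
    match = SEMVER.match(version)
    if match is None:
        raise ValueError(f"not a major.minor.patch version: {version!r}")
    major, minor, patch = (int(part) for part in match.groups())
    if change == "major":
        return f"{major + 1}.0.0"
    if change == "minor":
        return f"{major}.{minor + 1}.0"
    if change == "patch":
        return f"{major}.{minor}.{patch + 1}"
    raise ValueError(f"unknown change type: {change!r}")


def image_tags(repository: str, version: str, commit_sha: str, stable: bool = False) -> List[str]:
    """List the tags to apply to one image: version, short SHA, optional role tag."""
    tags = [f"{repository}:{version}", f"{repository}:{commit_sha[:7]}"]
    if stable:
        tags.append(f"{repository}:stable")  # mutable tag, moves between images
    return tags
```

Deployments are safest when they reference the immutable version or SHA tag; the mutable role tag is a convenience pointer, not an identifier.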

Deployment Strategies and Environment Progression

Deploying artifacts to target environments represents the culmination of the pipeline, but the deployment process itself encompasses multiple strategies and patterns designed to minimize risk and enable rapid rollback if issues arise. The choice of deployment strategy profoundly affects both the reliability of releases and the complexity of pipeline configuration.

Environment Topology and Promotion Paths

Most organizations maintain multiple environments representing different stages of the release process. A typical progression moves from development to staging to production, with each environment serving distinct purposes and having different characteristics. Development environments prioritize rapid iteration and easy troubleshooting, staging environments closely mirror production to validate deployment procedures and performance characteristics, and production environments optimize for reliability and scale.

Pipelines should model these environment progressions explicitly, with promotion between environments requiring explicit approval or automated quality gates. A change might automatically deploy to development upon merge to the main branch, require successful execution of integration tests before promoting to staging, and need manual approval from designated stakeholders before deploying to production.

Blue-Green Deployments

Blue-green deployment maintains two identical production environments, with only one actively serving traffic at any time. When deploying a new version, the pipeline deploys to the inactive environment, runs validation tests against it, and then switches traffic from the active environment to the newly deployed one. This approach enables instant rollback by simply switching traffic back to the previous environment if issues arise.

Implementing blue-green deployments requires infrastructure that supports rapid traffic switching—load balancers with health checks, DNS configurations with short TTLs, or service meshes with traffic routing capabilities. The pipeline must coordinate the deployment sequence: deploy to inactive environment, validate deployment, switch traffic, monitor for issues, and finally tear down or repurpose the old environment once confidence is established.
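The coordination sequence can be sketched as a small function that takes the infrastructure operations as callables. The "blue"/"green" labels and the `deploy`, `validate`, and `switch_traffic` hooks are placeholders for whatever the platform provides.

```python
from typing import Callable


def blue_green_deploy(
    active: str,
    deploy: Callable[[str], None],
    validate: Callable[[str], bool],
    switch_traffic: Callable[[str], None],
) -> str:
    """Deploy to the idle environment and switch traffic only if it validates.

    Returns the name of the environment serving traffic afterwards.
    """
    idle = "green" if active == "blue" else "blue"
    deploy(idle)
    if not validate(idle):
        return active  # traffic never moved, so there is nothing to roll back
    switch_traffic(idle)
    return idle
```

Rolling back after a successful switch is the same call with the roles reversed, which is what makes the strategy fast to undo.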

Canary Deployments

Canary deployments gradually roll out changes to production by initially routing only a small percentage of traffic to the new version while the majority continues using the previous version. This approach allows real-world validation with actual production traffic while limiting the blast radius if problems occur. If metrics indicate the new version performs acceptably, the pipeline progressively increases traffic to it until it handles 100% of requests.

Successful canary deployments require sophisticated monitoring and automated decision-making. The pipeline must define success criteria—error rate thresholds, latency percentiles, business metrics—and continuously evaluate whether the canary version meets these criteria. If metrics degrade beyond acceptable levels, the pipeline automatically aborts the rollout and reverts traffic to the previous version.
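A simplified sketch of that control loop, using error rate as the only success criterion. The traffic steps, the threshold, and the `error_rate_at` callable (which stands in for a query against a monitoring system) are assumptions.

```python
from typing import Callable, List, Sequence, Tuple


def run_canary(
    traffic_steps: Sequence[int],
    error_rate_at: Callable[[int], float],
    max_error_rate: float,
) -> Tuple[str, List[int]]:
    """Increase canary traffic step by step, aborting when the error rate is too high.

    Returns the outcome and the traffic percentages that passed evaluation.
    """
    passed: List[int] = []
    for percent in traffic_steps:
        if error_rate_at(percent) > max_error_rate:
            return "rolled_back", passed  # revert traffic to the previous version
        passed.append(percent)
    return "promoted", passed
```

A production implementation would also wait for a soak period at each step and evaluate latency and business metrics, not just errors.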

"The deployment strategy you choose should match your application's architecture and your organization's risk tolerance—there's no universally correct answer, only trade-offs between complexity, risk, and rollback speed."

Rolling Deployments

Rolling deployments update instances incrementally, replacing old versions with new ones in batches while maintaining overall service availability. This strategy works particularly well for applications running on multiple instances behind load balancers, as the deployment process can remove instances from the load balancer, update them, verify they're healthy, and return them to service before proceeding to the next batch.

The pipeline configuration for rolling deployments must specify batch size and health check criteria. Smaller batches reduce risk but extend deployment duration, while larger batches complete faster but expose more users to potential issues. Health checks ensure that updated instances are actually ready to serve traffic before the deployment proceeds, preventing cascading failures from instances that deployed successfully but aren't functioning correctly.
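The batch-and-verify loop might look like the following sketch. The `update` and `is_healthy` callables stand in for platform operations, and the instance names are illustrative.

```python
from typing import Callable, List, Sequence, Tuple


def rolling_update(
    instances: Sequence[str],
    batch_size: int,
    update: Callable[[str], None],
    is_healthy: Callable[[str], bool],
) -> Tuple[List[str], bool]:
    """Update instances in batches, halting when a batch fails its health checks.

    Returns the instances confirmed healthy on the new version and a success flag.
    """
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    confirmed: List[str] = []
    for start in range(0, len(instances), batch_size):
        batch = list(instances[start:start + batch_size])
        for instance in batch:
            update(instance)
        if not all(is_healthy(instance) for instance in batch):
            return confirmed, False  # stop before touching the remaining instances
        confirmed.extend(batch)
    return confirmed, True
```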

Monitoring, Observability, and Feedback Loops

A pipeline doesn't end when deployment completes—comprehensive monitoring and observability ensure that deployed changes actually work in production and provide feedback that informs future development. Effective pipelines integrate monitoring from the start, treating observability as a first-class concern rather than an operational afterthought.

Deployment Verification and Smoke Tests

Immediately after deployment, automated smoke tests verify that critical functionality works in the target environment. These tests differ from pre-deployment testing in that they run against the actual deployed application in its real environment, catching issues that might not manifest in isolated test environments—configuration problems, network connectivity issues, or integration failures with external dependencies.

Smoke tests should complete quickly, typically within a few minutes, and focus on essential functionality rather than comprehensive coverage. A successful smoke test confirms that the application started correctly, can connect to required services, and responds appropriately to basic requests. Pipeline stages following deployment should wait for smoke tests to pass before considering the deployment successful and proceeding with any subsequent steps.
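A minimal smoke test can be as simple as requesting a handful of endpoints and checking the status codes. In this sketch the endpoint paths are assumptions, and the status fetcher is injectable so the logic can be exercised without a live deployment.

```python
import urllib.error
import urllib.request
from typing import Callable, List, Sequence


def http_status(url: str, timeout: float = 5.0) -> int:
    """Return the HTTP status code for url, or 0 if the request could not complete."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as err:
        return err.code  # the server answered, but with an error status
    except OSError:
        return 0  # connection refused, DNS failure, timeout, and similar


def run_smoke_tests(
    base_url: str,
    paths: Sequence[str],
    fetch_status: Callable[[str], int] = http_status,
) -> List[str]:
    """Return the paths that did not answer with HTTP 200."""
    return [path for path in paths if fetch_status(base_url + path) != 200]
```

An empty result means the deployment passed; any returned path should fail the stage.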

Metrics, Logging, and Alerting Integration

Modern observability platforms like Prometheus, Grafana, Datadog, or New Relic provide comprehensive monitoring capabilities that pipelines should leverage. Deployment events should be explicitly marked in monitoring systems, creating annotations on metric dashboards that correlate changes in system behavior with specific deployments. This correlation enables rapid identification of deployments that degraded performance or increased error rates.

🔔 Error rate monitoring tracks the percentage of requests that fail, alerting when rates exceed baseline thresholds and potentially triggering automated rollbacks if errors spike following deployment.

🔔 Latency tracking measures response times across percentiles (p50, p95, p99), detecting performance regressions that might not cause outright failures but degrade user experience.

🔔 Resource utilization monitors CPU, memory, and network usage, identifying deployments that introduce resource leaks or inefficient algorithms that consume excessive resources.

🔔 Business metrics track application-specific indicators like conversion rates, transaction volumes, or user engagement, ensuring that technical success translates to business value.

🔔 Dependency health monitors external services and databases, distinguishing between issues caused by the deployed application versus problems with downstream dependencies.
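To make the first two indicators concrete, here is a small sketch that computes an error rate and latency percentiles from raw samples. It uses the nearest-rank percentile definition, which is one of several in common use; monitoring platforms may interpolate differently.

```python
import math
from typing import Sequence


def error_rate(failed: int, total: int) -> float:
    """Fraction of failed requests; 0.0 when there was no traffic."""
    return failed / total if total else 0.0


def percentile(values: Sequence[float], pct: int) -> float:
    """Nearest-rank percentile: the smallest sample with at least pct% at or below it."""
    if not values:
        raise ValueError("percentile of an empty sample is undefined")
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]
```

Comparing p95 and p99 before and after a deployment surfaces tail-latency regressions that an average would hide.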

Automated Rollback Mechanisms

The fastest path to restoring service when deployments go wrong involves automated rollback triggered by monitoring thresholds. Pipelines can integrate with monitoring platforms to automatically revert deployments when key metrics degrade beyond acceptable levels. This integration requires careful configuration to avoid false positives that trigger unnecessary rollbacks while remaining sensitive enough to catch genuine problems quickly.
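One simple way to balance sensitivity against false positives is to require several consecutive threshold breaches before rolling back. The sketch below assumes error-rate samples arrive at a fixed interval; the threshold and the default of three consecutive breaches are illustrative values, not recommendations.

```python
from typing import Iterable


def should_roll_back(
    error_rates: Iterable[float],
    threshold: float,
    consecutive: int = 3,
) -> bool:
    """Return True once `consecutive` samples in a row exceed the threshold."""
    streak = 0
    for rate in error_rates:
        streak = streak + 1 if rate > threshold else 0
        if streak >= consecutive:
            return True
    return False
```

A single spike resets on the next healthy sample, while a sustained degradation triggers the rollback after a bounded delay.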

Rollback procedures must be thoroughly tested and proven reliable, as they represent the safety net when primary deployment processes fail. The pipeline should regularly validate rollback mechanisms through chaos engineering practices—deliberately triggering rollback conditions in non-production environments to verify that automated recovery functions correctly.

Infrastructure as Code and Environment Provisioning

Modern pipelines extend beyond application deployment to encompass the infrastructure that applications run on. Infrastructure as Code (IaC) tools like Terraform, CloudFormation, or Pulumi define infrastructure using declarative configuration files that pipelines can version, test, and deploy just like application code.

Separating Infrastructure and Application Pipelines

While infrastructure and application deployments are related, they often benefit from separate pipelines with different cadences and approval processes. Infrastructure changes typically happen less frequently than application updates and carry different risk profiles—a misconfigured load balancer can affect multiple applications, while an application bug affects only that specific service.

Infrastructure pipelines should include validation steps that test configuration syntax, simulate changes to preview their effects, and require approval before applying changes to production infrastructure. Tools like Terraform's plan command provide detailed previews of what changes will occur, allowing reviewers to understand the impact before execution.
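An approval gate can also inspect the plan programmatically. The sketch below assumes the plan has been exported as JSON (for example with `terraform show -json` on a saved plan file) and relies on the `resource_changes` list and each entry's `change.actions` field from that format; the `destructive_changes` helper itself is a hypothetical name.

```python
import json
from typing import List


def destructive_changes(plan_json: str) -> List[str]:
    """Return the addresses of resources the plan would delete or replace."""
    plan = json.loads(plan_json)
    return [
        change["address"]
        for change in plan.get("resource_changes", [])
        if "delete" in change["change"]["actions"]
    ]
```

A pipeline could require manual approval whenever this list is non-empty and let purely additive plans proceed automatically.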

Environment Parity and Configuration Management

Maintaining consistency across environments becomes significantly easier when infrastructure is defined as code. The same Terraform modules can provision development, staging, and production environments, with environment-specific parameters controlling scale, redundancy, and resource sizing. This approach ensures that environments remain structurally similar even as they differ in capacity and cost.

Configuration management systems like Ansible, Chef, or Puppet complement IaC tools by managing software installation and configuration within provisioned infrastructure. Pipelines can orchestrate both infrastructure provisioning and configuration management, ensuring that newly created instances are automatically configured correctly without manual intervention.

"Treating infrastructure as code doesn't just enable automation—it fundamentally changes how teams think about environments, making them disposable, reproducible resources rather than carefully maintained snowflakes."

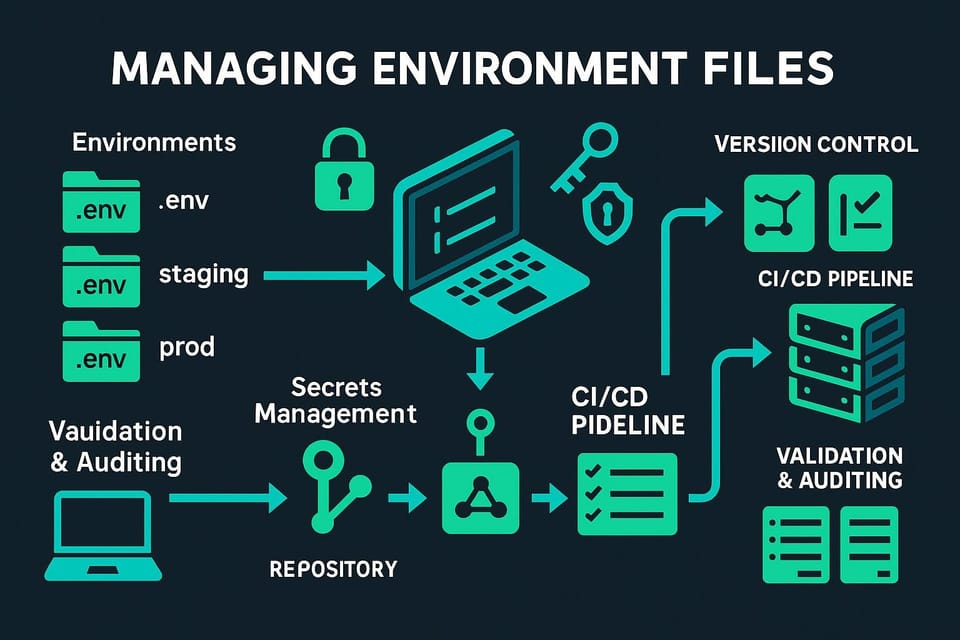

Secrets Management and Security Credentials

Pipelines require access to numerous secrets—database passwords, API keys, cloud provider credentials, signing certificates—and managing these secrets securely represents a critical security concern. Hardcoding secrets in pipeline configurations or storing them in version control creates severe security vulnerabilities, while overly restrictive secret management can impede automation.

Centralized Secret Storage

Dedicated secret management services like HashiCorp Vault, AWS Secrets Manager, Azure Key Vault, or Google Secret Manager provide secure storage with access controls, audit logging, and automatic rotation capabilities. Pipelines should retrieve secrets from these services at runtime rather than storing them in pipeline configurations, ensuring that secrets remain centralized and access is properly controlled.

Integration between pipeline platforms and secret managers typically involves granting pipeline execution environments permission to read specific secrets based on the pipeline's identity or associated service account. This approach maintains the principle of least privilege—each pipeline can access only the secrets it needs, and access is logged for audit purposes.
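The access model described above (identity-based permissions, least privilege, and an audit trail) can be illustrated with an in-memory stand-in. `SecretStore` here is a hypothetical class written for this sketch, not the API of Vault or any cloud secret manager; the identities and secret names are also invented.

```python
from dataclasses import dataclass, field


@dataclass
class SecretStore:
    """In-memory stand-in for a secret manager (hypothetical, for illustration only)."""
    secrets: dict[str, str]
    policy: dict[str, set[str]]  # pipeline identity -> names of secrets it may read
    audit_log: list[tuple[str, str, bool]] = field(default_factory=list)

    def read(self, identity: str, name: str) -> str:
        allowed = name in self.policy.get(identity, set())
        # Every attempt is logged, including denied ones.
        self.audit_log.append((identity, name, allowed))
        if not allowed:
            raise PermissionError(f"{identity} may not read {name}")
        return self.secrets[name]


store = SecretStore(
    secrets={"db-password": "example-value", "signing-key": "example-key"},
    policy={"deploy-api": {"db-password"}},  # this pipeline needs only the database password
)
db_password = store.read("deploy-api", "db-password")  # fetched at runtime, not stored in config
```

A request by `deploy-api` for `signing-key` would raise `PermissionError` and still leave an entry in the audit log.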

Secret Rotation and Expiration

Secrets should rotate regularly to limit the window of exposure if they're compromised. Automated rotation requires coordination between secret managers and applications—when a secret rotates, applications must seamlessly adopt the new value without downtime. Pipelines can facilitate this process by deploying updated configurations or triggering application restarts when secrets change.

Short-lived credentials are more secure still, because they expire automatically after a brief period. Cloud provider IAM roles can grant temporary credentials to pipeline execution environments, eliminating the need for long-lived access keys entirely and limiting the damage if pipeline logs or artifacts inadvertently expose them.

Optimizing Pipeline Performance and Resource Usage

As pipelines mature and organizations scale, performance optimization becomes increasingly important. Slow pipelines delay feedback, frustrate developers, and ultimately reduce the value of automation. Systematic optimization requires measuring pipeline performance, identifying bottlenecks, and applying targeted improvements.

Pipeline Execution Metrics and Bottleneck Identification

Understanding where pipelines spend time requires detailed metrics about stage duration, wait times, and resource utilization. Most pipeline platforms provide built-in analytics showing how long each stage takes, but deeper analysis often requires exporting metrics to dedicated monitoring systems for trend analysis and alerting.

Common bottlenecks include dependency downloads, test execution, artifact uploads, and deployment operations. Identifying the specific stages consuming the most time focuses optimization efforts where they'll have the greatest impact. A stage that takes 30 seconds rarely warrants optimization, while one consuming 15 minutes of a 20-minute pipeline represents an obvious target.
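Ranking stages by their share of total run time makes the target obvious. The sketch below uses invented stage names and durations chosen to match the 15-minutes-of-20 example; in practice the numbers would come from the pipeline platform's metrics.

```python
def rank_stages(durations_s: dict[str, float]) -> list[tuple[str, float]]:
    """Return (stage, share of total time) pairs, largest share first."""
    total = sum(durations_s.values())
    if total <= 0:
        raise ValueError("total duration must be positive")
    shares = [(stage, seconds / total) for stage, seconds in durations_s.items()]
    return sorted(shares, key=lambda item: item[1], reverse=True)


# Hypothetical 20-minute pipeline (durations in seconds).
example = {"checkout": 30, "dependencies": 120, "tests": 900, "deploy": 150}

for stage, share in rank_stages(example):
    print(f"{stage:<14}{share:.1%}")
```

Here the test stage accounts for three quarters of the run, while the 30-second checkout contributes 2.5 percent and is not worth optimizing.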

Selective Stage Execution

Not every pipeline run needs to execute every stage. Intelligent pipelines analyze what changed and skip stages that aren't affected. If a commit only modifies documentation, there's no need to rebuild application binaries or run test suites. If only frontend code changed, backend tests can be skipped.

Implementing selective execution requires careful configuration to ensure that skipped stages don't mask real issues. The pipeline must correctly determine dependencies between code and stages—a change to a shared library should trigger tests for all services that depend on it, not just the library itself.
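A minimal sketch of this dependency-aware selection follows. The repository layout, component names, and dependency map are hypothetical; the two important behaviors are that changes propagate to dependents and that an unrecognized file triggers everything rather than nothing.

```python
from fnmatch import fnmatch

# Hypothetical repository layout: path patterns owned by each component.
COMPONENT_PATHS = {
    "docs": ["docs/*", "*.md"],
    "frontend": ["frontend/*"],
    "backend": ["backend/*"],
    "shared-lib": ["libs/shared/*"],
}
# Hypothetical dependency map: component -> components it depends on.
DEPENDS_ON = {"frontend": {"shared-lib"}, "backend": {"shared-lib"}}


def affected_components(changed_files: list[str]) -> set[str]:
    """Return the components whose stages must run for this set of changed files."""
    affected: set[str] = set()
    for path in changed_files:
        owners = {
            component
            for component, patterns in COMPONENT_PATHS.items()
            if any(fnmatch(path, pattern) for pattern in patterns)
        }
        if not owners:
            # Unknown file: run everything rather than risk masking a real issue.
            return set(COMPONENT_PATHS)
        affected |= owners
    # Propagate to dependents until no new component is added.
    grew = True
    while grew:
        grew = False
        for component, dependencies in DEPENDS_ON.items():
            if component not in affected and dependencies & affected:
                affected.add(component)
                grew = True
    return affected
```

With this layout, a change to `docs/intro.md` selects only `docs`, while a change under `libs/shared/` selects the library plus both services that depend on it.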

Resource Scaling and Agent Management

Pipeline execution requires compute resources—whether self-hosted agents running on virtual machines or cloud-based execution environments. Insufficient resources create queuing delays as pipelines wait for available agents, while excessive resources waste money on idle capacity. Finding the right balance requires understanding usage patterns and implementing appropriate scaling strategies.

Cloud-based pipeline platforms often provide elastic scaling, automatically provisioning resources when pipelines queue and releasing them when idle. Self-hosted solutions require more manual capacity management but offer greater control over execution environments and can be more cost-effective at scale. Some organizations adopt hybrid approaches, using cloud resources for peak demand while maintaining a baseline of self-hosted agents for consistent workloads.

Handling Failures, Retries, and Error Recovery

Pipelines fail for numerous reasons—flaky tests, network timeouts, resource exhaustion, external service outages—and effective error handling distinguishes robust pipelines from brittle ones. Rather than treating all failures identically, sophisticated pipelines categorize failures and respond appropriately to each type.

Transient Failures and Automatic Retry Logic

Some failures are transient—temporary network issues, momentary resource unavailability, or timing-sensitive test flakiness. Automatically retrying these operations often succeeds on subsequent attempts without any changes to code or configuration. Pipelines should implement intelligent retry logic with exponential backoff, attempting failed operations multiple times before declaring genuine failure.

However, retry logic must be applied judiciously. Retrying operations that modify state without proper idempotency checks can create duplicate resources or inconsistent states. Retrying compilation or test execution makes sense, but retrying deployment operations requires careful design to ensure that multiple executions don't cause problems.
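The following sketch shows one way to combine both points: exponential backoff, applied only to operations that explicitly signal a transient failure. `TransientError` is a hypothetical marker exception defined for this example, and the attempt count and delays are arbitrary defaults. Production implementations often add random jitter to the delay, which is omitted here.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class TransientError(Exception):
    """Raised by an operation to signal that retrying may succeed."""


def retry(
    operation: Callable[[], T],
    attempts: int = 4,
    base_delay_s: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call operation, retrying on TransientError with doubling delays."""
    for attempt in range(attempts):
        try:
            return operation()
        except TransientError:
            if attempt == attempts - 1:
                raise  # retries exhausted: report a genuine failure
            sleep(base_delay_s * 2 ** attempt)  # 1s, 2s, 4s, ...
    raise ValueError("attempts must be at least 1")
```

Any other exception type propagates immediately, so an operation that is not safe to repeat is never retried unless its author opts in by raising `TransientError`.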

Failure Notifications and Escalation

When pipelines fail, appropriate stakeholders need timely notification with sufficient context to understand what went wrong and how to address it. Notification strategies should consider failure type, branch, and time—a failing feature branch might notify only the developer who made the recent commit, while a failing production deployment might alert entire teams through multiple channels.

Notification fatigue represents a real risk when pipelines generate excessive alerts. Teams become desensitized to notifications and begin ignoring them, defeating their purpose. Effective notification strategies filter noise, escalate based on severity, and provide actionable information rather than simply announcing that something failed.
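A routing rule along these lines can be expressed as a small function. Everything in this sketch is an assumption made for illustration: the channel names, the stage name `deploy-production`, and the choice to suppress repeat alerts on feature branches.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Failure:
    branch: str
    stage: str
    author: str
    consecutive_failures: int = 1  # how many runs in a row have failed


def route(failure: Failure) -> list[str]:
    """Choose notification targets by severity (hypothetical channel names)."""
    if failure.stage == "deploy-production":
        # Highest severity: alert the team through several channels.
        return ["pager:on-call", "chat:#deployments", f"email:{failure.author}"]
    if failure.branch == "main":
        return ["chat:#build-status", f"email:{failure.author}"]
    # Feature branch: tell the author once, then stay quiet until the build recovers.
    return [f"email:{failure.author}"] if failure.consecutive_failures == 1 else []
```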

Partial Failure Recovery

Multi-stage pipelines sometimes fail partway through execution, raising the question of whether subsequent runs should restart from the beginning or resume from the failure point. Some pipeline platforms support checkpoint-based execution, saving state after each successful stage and allowing recovery from the last successful checkpoint.

This capability proves particularly valuable for long-running pipelines with expensive stages. Rather than re-executing 30 minutes of successful stages to retry a 2-minute stage that failed, checkpoint-based recovery jumps directly to the failed stage. However, this approach requires careful consideration of dependencies between stages: skipping earlier stages assumes their outputs are still valid, which may not hold if the code, dependencies, or target environment have changed since they ran.
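Checkpoint-based resumption can be sketched as follows. This is an illustration, not how any particular platform implements it: the checkpoint is a plain dictionary that a real pipeline would persist between runs, and the `fingerprint` (for example a commit hash) is an assumed way to detect that inputs changed and earlier results can no longer be trusted.

```python
from typing import Callable


def run_with_checkpoints(
    stages: list[tuple[str, Callable[[], None]]],
    state: dict,
    fingerprint: str,
) -> list[str]:
    """Run stages in order, skipping those already completed for this fingerprint."""
    if state.get("fingerprint") != fingerprint:
        # Inputs changed: discard old checkpoints and start from the beginning.
        state.clear()
        state.update(fingerprint=fingerprint, done=[])
    executed = []
    for name, action in stages:
        if name in state["done"]:
            continue  # completed in an earlier run with identical inputs
        action()      # an exception here leaves earlier checkpoints intact
        state["done"].append(name)
        executed.append(name)
    return executed
```

Tying the checkpoint to a fingerprint addresses the caveat above: a retry of the same commit resumes at the failed stage, while a new commit invalidates the checkpoint and reruns everything.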

Compliance, Auditing, and Regulatory Requirements

Organizations in regulated industries face compliance requirements that affect pipeline design and operation. Financial services, healthcare, and government sectors must maintain detailed audit trails, implement separation of duties, and demonstrate control over deployment processes to satisfy regulatory frameworks.

Audit Logging and Traceability

Comprehensive audit logs capture who did what and when throughout the pipeline lifecycle. Every action—code commits, pipeline executions, approvals, deployments, rollbacks—should be logged with sufficient detail to reconstruct events during audits or incident investigations. These logs must be immutable and retained for periods specified by regulatory requirements, often measured in years.

Traceability extends beyond simple logging to creating connections between related events. Given a production deployment, auditors should be able to trace back through the pipeline execution, identify the specific code changes included, see who approved the deployment, and verify that all required quality gates passed. This end-to-end traceability demonstrates that proper processes were followed and provides evidence of control.
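One technique for making a log tamper-evident is hash chaining, where each entry records the hash of the previous one. This is a swapped-in illustration, not a requirement of any specific regulation, and it detects modification rather than preventing it; true immutability also needs write-once storage. A real log would include timestamps, omitted here to keep the example deterministic, and the entry fields are hypothetical.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(body: dict) -> str:
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


def append_entry(log: list[dict], actor: str, action: str, details: dict) -> None:
    """Append an entry that commits to the hash of the previous entry."""
    previous = log[-1]["hash"] if log else GENESIS
    body = {"actor": actor, "action": action, "details": details, "prev": previous}
    log.append({**body, "hash": _digest(body)})


def verify(log: list[dict]) -> bool:
    """Return True only if no entry was altered, removed, or reordered."""
    previous = GENESIS
    for entry in log:
        body = {key: value for key, value in entry.items() if key != "hash"}
        if entry["prev"] != previous or entry["hash"] != _digest(body):
            return False
        previous = entry["hash"]
    return True
```

Recording the same commit identifier in the `details` of the commit, approval, and deployment entries gives auditors the link they need to trace a deployment back to its changes and approver.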

Approval Gates and Separation of Duties

Many regulatory frameworks require that the person who writes code cannot be the person who approves its deployment to production. This separation of duties prevents any individual from unilaterally changing critical systems. Pipelines enforce the requirement through approval gates that halt execution until designated approvers explicitly authorize progression.

Approval gates must be configured to prevent circumvention—developers shouldn't be able to bypass approvals by modifying pipeline configurations, and approval requirements should be enforced through platform permissions rather than relying on convention. Some organizations implement dual approval for production deployments, requiring sign-off from both technical and business stakeholders before proceeding.
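The core of a separation-of-duties check is small: approvals from anyone who authored the change do not count. The sketch below is a hypothetical gate function; in practice the platform's permission system would perform this check, so developers cannot edit it out of the pipeline definition.

```python
def may_deploy(commit_authors: set[str], approvers: set[str], required: int = 1) -> bool:
    """Allow deployment only with enough approvals from people who did not write the code."""
    independent_approvers = approvers - commit_authors
    return len(independent_approvers) >= required


# Self-approval does not count toward the requirement.
assert not may_deploy({"alice"}, {"alice"})
# Dual approval: two independent sign-offs are needed.
assert may_deploy({"alice"}, {"bob", "carol"}, required=2)
```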

Change Management Integration

Formal change management processes require documenting proposed changes, assessing their risk, obtaining approval, and scheduling implementation windows. Pipelines can integrate with change management systems like ServiceNow, creating change records automatically when deployments are scheduled and updating them as pipelines progress through stages.

This integration maintains compliance without imposing excessive manual burden on teams. Rather than requiring developers to manually create change tickets and keep them updated, the pipeline handles this automatically, ensuring that change management records accurately reflect deployment reality.

Multi-Environment and Multi-Region Deployments

Applications serving global user bases often deploy across multiple regions to reduce latency and improve reliability. Managing deployments across these distributed environments introduces additional complexity that pipelines must orchestrate carefully.

Sequential vs. Parallel Multi-Region Deployment

When deploying to multiple regions, organizations must decide between sequential rollouts that deploy to one region at a time or parallel deployments that update all regions simultaneously. Sequential deployment reduces risk by limiting the blast radius—if issues emerge in the first region, deployment to remaining regions can be halted. However, sequential deployment takes longer and creates temporary inconsistency where different regions run different versions.

Parallel deployment completes faster and maintains version consistency across regions but exposes all regions simultaneously to potential issues. Many organizations adopt a hybrid approach, deploying first to a canary region with lower traffic, validating the deployment through monitoring, and then proceeding with parallel deployment to remaining regions once confidence is established.
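The hybrid approach can be sketched with a canary step followed by a parallel fan-out. The `deploy` and `healthy` callables are hypothetical hooks standing in for real deployment and monitoring integrations, and the region names in the usage note are invented.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable


def rollout(
    regions: list[str],
    deploy: Callable[[str], None],
    healthy: Callable[[str], bool],
    canary: str,
) -> dict[str, str]:
    """Deploy to the canary region first, then to all remaining regions in parallel."""
    if canary not in regions:
        raise ValueError("canary must be one of the regions")
    deploy(canary)
    if not healthy(canary):
        # Halt before the remaining regions are touched, limiting the blast radius.
        return {canary: "halted"}
    remaining = [region for region in regions if region != canary]
    with ThreadPoolExecutor(max_workers=max(1, len(remaining))) as pool:
        list(pool.map(deploy, remaining))  # consuming the iterator re-raises any deploy error
    return {region: "deployed" for region in regions}
```

Calling `rollout(["eu", "us", "ap"], deploy, healthy, canary="eu")` updates `eu` alone, checks its health, and only then updates `us` and `ap` concurrently.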

Data Consistency and Migration Coordination

Applications with stateful components face additional challenges in multi-region deployments, particularly when schema changes or data migrations are involved. Database migrations must coordinate with application deployments to prevent incompatibilities—deploying application code that expects a new database column before the migration runs will cause failures.

Sophisticated pipelines orchestrate these dependencies, ensuring that database migrations complete successfully before deploying application code that depends on them. Backward-compatible changes enable safer deployments: a nullable column can be added before application code is updated to use it, while making the column non-null must wait until every application instance writes a value for it and existing rows have been backfilled.
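The backward-compatible ordering can be demonstrated with an in-memory SQLite database. The table, column, and function names are invented for this sketch; the point is that code written before the migration keeps working after a nullable column is added.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT NOT NULL)")


def old_insert(name: str) -> None:
    """Previous application version: unaware of the new column."""
    conn.execute("INSERT INTO users (name) VALUES (?)", (name,))


def new_insert(name: str, email: str) -> None:
    """New application version: writes the new column."""
    conn.execute("INSERT INTO users (name, email) VALUES (?, ?)", (name, email))


old_insert("ada")

# Step 1: the migration adds a nullable column before any new code is deployed.
conn.execute("ALTER TABLE users ADD COLUMN email TEXT")

# Step 2: old and new application versions can run side by side during the rollout.
old_insert("grace")
new_insert("linus", "linus@example.com")

# Step 3: a NOT NULL constraint is safe only once this count reaches zero.
missing = conn.execute("SELECT COUNT(*) FROM users WHERE email IS NULL").fetchone()[0]
```

A pipeline could use a check like the final query as a gate, refusing to run the constraint-tightening migration while rows without a value remain.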

Documentation and Knowledge Sharing

Pipelines represent critical infrastructure that multiple team members interact with, making clear documentation essential. Well-documented pipelines reduce onboarding time for new team members, enable effective troubleshooting, and create shared understanding of deployment processes.

Pipeline as Code Documentation

When pipelines are defined as code, the configuration files themselves serve as documentation, but they benefit from supplementary explanation that clarifies intent and design decisions. Comments within pipeline definitions should explain why certain choices were made rather than simply describing what the code does—the code itself shows what happens, but comments provide context about why that approach was chosen.

README files accompanying pipeline code should cover prerequisites, how to run pipelines locally for testing, common troubleshooting scenarios, and contact information for teams responsible for maintenance. This documentation serves as the first reference when issues arise, reducing the time spent diagnosing problems.

Runbooks and Troubleshooting Guides

Common pipeline failures should have documented troubleshooting procedures that guide team members through diagnosis and resolution. These runbooks capture institutional knowledge, preventing repeated rediscovery of solutions to known problems. When a new failure mode is encountered and resolved, updating runbooks ensures that future occurrences are handled more quickly.

Effective runbooks include specific error messages or symptoms, step-by-step diagnostic procedures, and links to relevant logs or monitoring dashboards. They distinguish between issues that team members can resolve independently and those requiring escalation to platform administrators or external support.

What is the difference between Continuous Integration and Continuous Deployment?

Continuous Integration focuses on automatically building and testing code whenever changes are committed to version control, ensuring that new code integrates cleanly with the existing codebase. Continuous Deployment extends this by automatically deploying successfully built and tested code to production environments without manual intervention. While CI validates that code works, CD ensures it reaches users automatically.

How long should a pipeline take to complete?

Pipeline duration depends on the specific stage and context, but general guidelines suggest that commit-triggered pipelines providing developer feedback should complete within 10 minutes to maintain rapid iteration cycles. Full deployment pipelines including all testing and deployment stages might take 30-60 minutes. Longer durations reduce the value of automation by delaying feedback and making it difficult for developers to maintain context while waiting for results.

Should pipelines fail when security scans identify vulnerabilities?

The answer depends on vulnerability severity and available remediation. Critical vulnerabilities with known exploits and available patches should fail pipelines immediately, preventing deployment of known security issues. Lower severity findings might generate warnings and tracking tickets without blocking deployment, preventing security tools from becoming obstacles that teams bypass. The key is establishing clear policies that balance security rigor with development velocity.
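Such a policy can be written down as code so that it is applied consistently. The sketch below encodes one possible policy matching the description above; the `Finding` fields and the identifiers in the example are hypothetical and do not correspond to any scanner's output format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Finding:
    identifier: str
    severity: str        # "low", "medium", "high", or "critical"
    known_exploit: bool
    fix_available: bool


def evaluate(findings: list[Finding]) -> tuple[str, list[Finding], list[Finding]]:
    """Return (verdict, blocking findings, findings to track as warnings)."""
    blocking = [
        f for f in findings
        if f.severity == "critical" and f.known_exploit and f.fix_available
    ]
    warnings = [f for f in findings if f not in blocking]
    return ("fail" if blocking else "pass", blocking, warnings)


scan = [
    Finding("EXAMPLE-1", "critical", known_exploit=True, fix_available=True),
    Finding("EXAMPLE-2", "medium", known_exploit=False, fix_available=True),
]
verdict, blocking, warnings = evaluate(scan)
```

Here the first finding blocks the pipeline, while the second is reported as a warning that could feed a tracking ticket without stopping the deployment.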

How can flaky tests be handled in pipelines?

Flaky tests that pass and fail inconsistently without code changes undermine confidence in automation and should be addressed systematically rather than ignored. Short-term solutions include quarantining flaky tests to run separately without blocking pipelines, or implementing automatic retry logic for specific tests known to have timing sensitivities. Long-term solutions require investigating and fixing the root causes of flakiness—whether inadequate test isolation, timing dependencies, or environmental inconsistencies.

What metrics indicate a healthy pipeline?

Key pipeline health metrics include deployment frequency (how often code reaches production), lead time (time from commit to production), change failure rate (percentage of deployments causing issues), and mean time to recovery (how quickly failures are resolved). Healthy pipelines typically show high deployment frequency with low change failure rates, indicating that automation enables rapid delivery without sacrificing quality. Additionally, consistent execution times and few failures caused by infrastructure problems (rather than by code changes) indicate reliable automation infrastructure.
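These four metrics are simple to compute once deployment records exist. The sketch below assumes a hypothetical `Deployment` record with commit, deploy, and recovery timestamps; real data would come from the pipeline platform and incident tooling.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass(frozen=True)
class Deployment:
    committed_at: datetime
    deployed_at: datetime
    failed: bool = False
    recovered_at: Optional[datetime] = None  # set once a failed deployment is resolved


def summarize(deployments: list[Deployment], period_days: int) -> dict:
    """Compute the four delivery metrics over a reporting period."""
    if not deployments or period_days <= 0:
        raise ValueError("need at least one deployment and a positive period")
    lead_times = [d.deployed_at - d.committed_at for d in deployments]
    failures = [d for d in deployments if d.failed]
    recoveries = [d.recovered_at - d.deployed_at for d in failures if d.recovered_at]
    return {
        "deployments_per_day": len(deployments) / period_days,
        "mean_lead_time": sum(lead_times, timedelta()) / len(lead_times),
        "change_failure_rate": len(failures) / len(deployments),
        "mean_time_to_recovery": (
            sum(recoveries, timedelta()) / len(recoveries) if recoveries else None
        ),
    }
```

Tracking these values over time matters more than any single reading, since the trend shows whether pipeline changes are improving delivery.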

How should pipeline configurations be managed across multiple teams?

Organizations with multiple teams often benefit from establishing shared pipeline templates or libraries that encode organizational standards while allowing team-specific customization. Central platform teams can maintain these shared components, ensuring consistency in security scanning, compliance checks, and deployment patterns, while individual teams customize application-specific stages like testing and build processes. This approach balances standardization with flexibility, preventing both excessive rigidity and complete fragmentation.