How to Build an API Gateway for Microservices

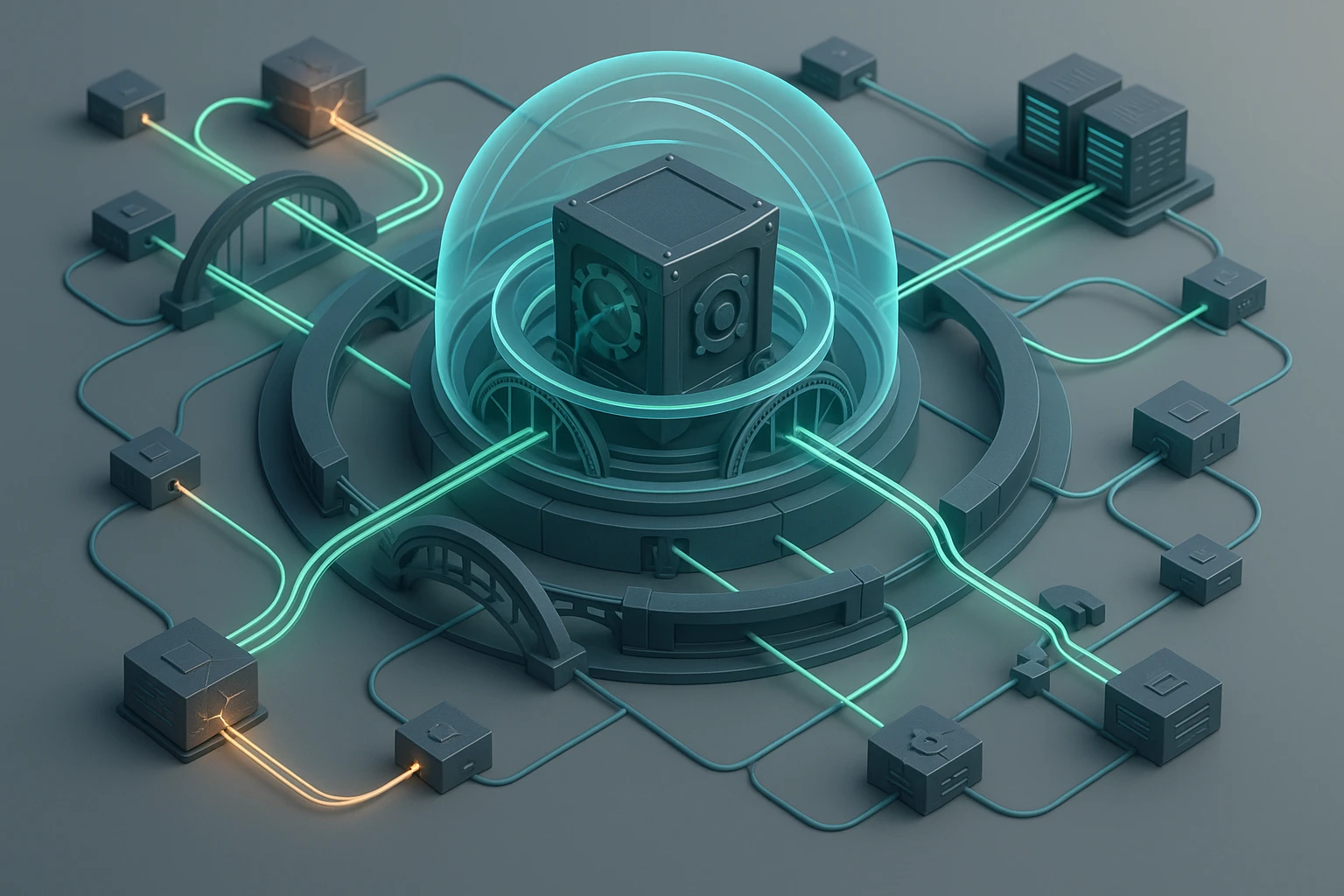

Modern software architecture has transformed dramatically over the past decade, with microservices emerging as the dominant pattern for building scalable, maintainable applications. At the heart of this architectural revolution lies a critical component that often determines the success or failure of your entire system: the API gateway. This single point of entry manages, routes, and secures all communication between your clients and the multitude of services running behind the scenes, making it an indispensable element in any microservices ecosystem.

An API gateway serves as an intelligent intermediary that sits between external clients and your internal microservices, handling cross-cutting concerns like authentication, rate limiting, request routing, and response transformation. Rather than forcing clients to communicate directly with dozens or hundreds of individual services, the gateway provides a unified interface that simplifies client interactions while giving you centralized control over security, monitoring, and traffic management. Understanding how to properly design and implement this component requires examining multiple perspectives: architectural patterns, security considerations, performance optimization, operational requirements, and developer experience.

Throughout this comprehensive exploration, you'll discover the fundamental principles behind effective gateway design, learn practical implementation strategies using various technologies and frameworks, understand common pitfalls and how to avoid them, and gain insights into scaling and maintaining your gateway as your microservices architecture grows. Whether you're building your first microservices system or optimizing an existing one, the knowledge shared here will equip you with the tools and understanding needed to create a robust, performant, and secure API gateway that serves as the reliable foundation for your distributed architecture.

Understanding the Foundation: What Makes an API Gateway Essential

Before diving into implementation details, it helps to understand the role an API gateway plays in a microservices architecture. Traditional monolithic applications exposed a single API endpoint, which made client integration straightforward but limited scalability and deployment flexibility. A microservices architecture breaks an application into many independent services, each potentially running on different servers, using different protocols, and managed by different teams. Without a gateway, clients would need to know the location and interface of every service, which creates tight coupling and makes changes difficult.

The gateway pattern solves this complexity by providing a single entry point that abstracts the underlying service topology. Clients interact with one consistent API, while the gateway handles the intricate work of routing requests to appropriate services, aggregating responses from multiple services when needed, and transforming data formats between what clients expect and what services provide. This separation of concerns allows backend services to evolve independently without breaking client applications, a flexibility that becomes increasingly valuable as systems grow in complexity.

"The API gateway isn't just about routing traffic—it's about creating a stable contract with your clients while giving your backend teams the freedom to innovate and refactor without fear of breaking integrations."

Beyond simple request routing, gateways handle critical cross-cutting concerns that would otherwise need implementation in every individual service. Authentication and authorization naturally belong at the gateway level, verifying user credentials once before requests reach internal services rather than duplicating this logic across dozens of endpoints. Rate limiting and throttling protect your services from being overwhelmed by excessive requests, whether from legitimate traffic spikes or malicious attacks. Request and response logging centralized at the gateway provides comprehensive visibility into system usage patterns without instrumenting every service.

Key Responsibilities That Define Gateway Functionality

Successful gateway implementations balance multiple responsibilities without becoming bottlenecks or single points of failure. Request routing forms the core function, examining incoming requests and directing them to appropriate backend services based on path, headers, or other criteria. This routing can be simple one-to-one mapping or complex logic involving conditional routing based on request characteristics, user attributes, or system state.

Protocol translation enables clients using REST over HTTP to communicate with backend services that might use gRPC, GraphQL, or message queues. The gateway handles these protocol conversions transparently, allowing each service to use the most appropriate communication pattern for its needs. Response aggregation combines data from multiple services into single responses, reducing the number of round trips clients need to make and simplifying client-side logic.
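
As a minimal sketch of response aggregation, the example below fans out to two backends concurrently and merges the results into one payload. The `fetch_profile` and `fetch_orders` functions are hypothetical stand-ins for real service calls, and the response shape is an assumption made for illustration.

```python
import asyncio


async def fetch_profile(user_id: str) -> dict:
    # Stand-in for a network call to a hypothetical user service.
    await asyncio.sleep(0.01)
    return {"id": user_id, "name": "Ada"}


async def fetch_orders(user_id: str) -> list:
    # Stand-in for a network call to a hypothetical order service.
    await asyncio.sleep(0.01)
    return [{"order_id": 1, "total": 42.0}]


async def aggregate_dashboard(user_id: str) -> dict:
    # Issue both backend calls concurrently, then merge them into one payload
    # so the client makes a single round trip.
    profile, orders = await asyncio.gather(
        fetch_profile(user_id),
        fetch_orders(user_id),
    )
    return {"profile": profile, "orders": orders}
```

Calling `asyncio.run(aggregate_dashboard("u1"))` returns the combined dictionary. Because the two calls run concurrently, the total wait is roughly that of the slower backend rather than the sum of both.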

- Request routing and load balancing distribute traffic across service instances, ensuring high availability and optimal resource utilization

- Authentication and authorization verify user identity and permissions before allowing access to protected resources

- Rate limiting and throttling protect backend services from overload while ensuring fair resource allocation among clients

- Request and response transformation adapt data formats between client expectations and service implementations

- Caching stores frequently requested data to reduce backend load and improve response times

- Monitoring and analytics collect metrics about API usage, performance, and errors for operational insights

- TLS termination (often still called SSL termination) handles encryption at the edge, reducing computational burden on backend services

| Gateway Pattern | Best Use Case | Complexity Level | Primary Benefit |
|---|---|---|---|
| Single Gateway | Small to medium systems with unified client base | Low | Simplicity and centralized control |
| Gateway per Client Type | Systems serving mobile, web, and partner APIs differently | Medium | Optimized experience per client platform |
| Backend for Frontend (BFF) | Complex UIs with specific data aggregation needs | Medium-High | Tailored API design for each frontend |
| Micro-Gateway | Service mesh architectures with distributed control | High | Decentralized policy enforcement |

Architectural Patterns and Design Considerations

Designing an effective API gateway requires careful consideration of architectural patterns that balance simplicity, performance, and maintainability. The single gateway pattern offers the most straightforward approach, deploying one gateway instance (or cluster for high availability) that handles all incoming traffic. This pattern works well for smaller systems or those with relatively homogeneous client requirements, providing centralized management and consistent policy enforcement across all APIs.

As systems grow and client needs diversify, the gateway-per-client-type pattern becomes more appropriate. Mobile applications often require different data formats, smaller payloads, and specific caching strategies compared to web applications or third-party integrations. Implementing separate gateways for each client type allows optimization for specific use cases without compromising other clients. Mobile gateways might prioritize bandwidth efficiency and offline support, while partner API gateways emphasize versioning stability and detailed usage analytics.

Backend for Frontend: Tailoring Gateways to Specific Needs

The Backend for Frontend (BFF) pattern takes client-specific optimization further by creating dedicated gateway services for each user interface. Rather than forcing a single API to serve multiple frontends with different data requirements, each frontend team builds and maintains their own BFF that aggregates and transforms backend service data exactly as needed. This pattern reduces over-fetching and under-fetching problems common in generic APIs, improves frontend performance, and allows frontend teams to iterate independently.

"When your mobile team spends more time working around API limitations than building features, you know it's time to consider the Backend for Frontend pattern. Give each client the API it deserves, not a compromise that serves none well."

BFF implementations do introduce additional complexity and potential code duplication. Teams must balance the benefits of specialized APIs against the maintenance burden of multiple gateway services. Shared libraries for common functionality like authentication, logging, and error handling help reduce duplication while preserving the flexibility that makes BFF valuable. Clear ownership models ensure each BFF has a dedicated team responsible for its evolution and operation.

Service Mesh Integration and Micro-Gateway Patterns

Modern service mesh technologies like Istio, Linkerd, and Consul Connect blur the lines between API gateways and service-to-service communication. Service meshes handle many traditional gateway responsibilities—load balancing, circuit breaking, mutual TLS, observability—but at the service-to-service level rather than just at the edge. Micro-gateway patterns leverage service mesh capabilities by deploying lightweight gateway instances alongside each service, creating a distributed policy enforcement model.

This distributed approach offers several advantages: reduced latency by eliminating extra network hops, improved fault isolation since gateway failures affect fewer services, and better scalability since gateway capacity grows automatically with service instances. However, distributed gateways also complicate policy management and debugging, requiring sophisticated control planes to maintain consistency across the mesh. Organizations typically adopt service meshes after reaching significant scale, when centralized gateway architectures become bottlenecks or single points of failure.

Practical Implementation: Building Your Gateway

Translating architectural concepts into working code requires selecting appropriate technologies and frameworks that match your requirements, team expertise, and operational capabilities. The implementation landscape offers numerous options, from lightweight reverse proxies to comprehensive API management platforms, each with distinct trade-offs regarding features, performance, and complexity.

Technology Stack Selection: Evaluating Your Options

Open-source solutions dominate the API gateway space, providing battle-tested functionality without vendor lock-in. NGINX and HAProxy serve as foundational reverse proxies that many organizations extend into full-featured gateways through custom modules and scripting. These tools excel at raw performance and stability but require significant configuration and custom development for advanced features like authentication, rate limiting, and response transformation.

Purpose-built API gateway frameworks like Kong, Tyk, and KrakenD provide comprehensive feature sets out of the box, including plugin ecosystems for extending functionality. Kong, built on NGINX, offers a robust plugin architecture supporting authentication, rate limiting, caching, and transformation through Lua scripting or Go plugins. Tyk provides similar capabilities with a focus on developer experience and GraphQL support. KrakenD emphasizes stateless operation and high performance through a configuration-driven approach that does not require an external database.

- 🔧 NGINX/OpenResty for maximum performance and control with Lua scripting capabilities

- 🚀 Kong Gateway when you need extensive plugins and enterprise support options

- ⚡ KrakenD for stateless, high-performance scenarios with declarative configuration

- 🛠️ Spring Cloud Gateway when working within Java/Spring ecosystem

- 🌐 Express Gateway for Node.js teams preferring JavaScript-based customization

Cloud-native options like AWS API Gateway, Google Cloud Endpoints, and Azure API Management integrate closely with their respective cloud platforms, offering managed services that remove most operational overhead. These managed solutions handle scaling, high availability, and security automatically but introduce cloud vendor dependencies and potentially higher costs at scale. Hybrid approaches using open-source gateways deployed on cloud infrastructure provide a middle ground between full control and managed convenience.

Core Implementation Components

Regardless of chosen technology, every gateway implementation requires several core components working together cohesively. The routing engine forms the foundation, mapping incoming requests to backend services based on configured rules. Simple path-based routing handles straightforward cases, while advanced routing might consider HTTP methods, headers, query parameters, or request body content. Dynamic routing capabilities allow runtime configuration changes without redeploying the gateway, essential for blue-green deployments and canary releases.
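
The path-based case can be sketched as a longest-prefix lookup over a routing table. The service names and URLs in `ROUTES` are hypothetical; a real gateway would load this table from configuration so that adding a route needs no code change.

```python
from typing import Optional

# Hypothetical routing table: public path prefix -> upstream base URL.
ROUTES = {
    "/users": "http://user-service:8080",
    "/orders": "http://order-service:8080",
    "/orders/reports": "http://reporting-service:8080",
}


def resolve_upstream(path: str, routes: dict[str, str] = ROUTES) -> Optional[str]:
    """Return the upstream for the longest matching prefix, or None."""
    best = None
    for prefix in routes:
        # Match whole path segments only, so "/users" does not
        # accidentally match "/usersettings".
        if path == prefix or path.startswith(prefix + "/"):
            if best is None or len(prefix) > len(best):
                best = prefix
    return routes[best] if best is not None else None
```

The linear scan is fine for a handful of routes. With hundreds of rules, a trie or a pre-sorted prefix list would keep lookups cheap.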

"The most elegant gateway implementations are those where adding a new service endpoint requires changing configuration, not code. When developers can safely modify routing rules through declarative configs, you've achieved the right abstraction level."

Authentication and authorization middleware intercepts requests before they reach backend services, validating credentials and enforcing access policies. Modern implementations support multiple authentication schemes: JWT tokens for stateless authentication, OAuth 2.0 for third-party integrations, API keys for partner access, and mutual TLS for service-to-service communication. Authorization logic determines whether authenticated users can access specific resources, often integrating with external policy engines like Open Policy Agent for complex permission models.

Request transformation capabilities adapt incoming requests to match backend service expectations. Header manipulation adds, removes, or modifies HTTP headers for authentication tokens, correlation IDs, or service-specific metadata. Path rewriting translates external API paths to internal service routes, allowing public API structure to evolve independently from internal implementations. Request body transformation converts between data formats, handles API versioning, or enriches requests with additional context before forwarding to services.
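
A small sketch of two of these transformations, path rewriting and header manipulation, is shown below. The `/api/v1` public prefix and the `X-Internal-Debug` and `X-Correlation-ID` header names are assumptions chosen for illustration, not a convention required by any particular gateway.

```python
import uuid

PUBLIC_PREFIX = "/api/v1"  # hypothetical public API prefix


def transform_request(
    path: str, headers: dict[str, str]
) -> tuple[str, dict[str, str]]:
    # Path rewriting: public "/api/v1/orders/7" becomes internal "/orders/7".
    if path.startswith(PUBLIC_PREFIX + "/"):
        internal_path = path[len(PUBLIC_PREFIX):]
    else:
        internal_path = path

    # Header removal: drop a header that clients must not be able to set.
    out = {k: v for k, v in headers.items() if k.lower() != "x-internal-debug"}

    # Header enrichment: add a correlation ID unless the caller supplied one.
    if not any(k.lower() == "x-correlation-id" for k in out):
        out["X-Correlation-ID"] = str(uuid.uuid4())

    return internal_path, out
```

The function returns a new header dictionary instead of mutating its input, which keeps the original request available for logging.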

| Component | Primary Function | Implementation Considerations | Performance Impact |
|---|---|---|---|
| Routing Engine | Direct requests to appropriate services | Support for dynamic rules, pattern matching efficiency | Low if properly indexed |
| Authentication Layer | Verify client identity | Token validation caching, external provider integration | Medium (cacheable) |
| Rate Limiter | Control request volume | Distributed state management, algorithm selection | Low to Medium |
| Response Cache | Store and serve cached responses | Cache invalidation strategy, storage backend choice | Very Low (major benefit) |
| Circuit Breaker | Prevent cascading failures | Failure threshold tuning, fallback behavior | Low |

Implementing Rate Limiting and Throttling

Protecting backend services from overload requires sophisticated rate limiting that balances fairness, performance, and user experience. Token bucket algorithms provide smooth rate limiting by allowing burst traffic while maintaining average rates over time. Each client receives a bucket that fills with tokens at a steady rate; requests consume tokens, and when the bucket empties, subsequent requests are rejected or queued. This approach handles legitimate traffic spikes gracefully while preventing sustained abuse.
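
The token bucket described above can be sketched in a few lines. This is a single-process illustration, not a distributed limiter; the `clock` parameter is an assumption added so the behavior can be tested without sleeping.

```python
import time


class TokenBucket:
    def __init__(self, rate: float, capacity: float, clock=time.monotonic):
        self.rate = rate          # tokens added per second
        self.capacity = capacity  # maximum burst size
        self.clock = clock
        self.tokens = capacity    # start full so an initial burst is allowed
        self.updated = clock()

    def allow(self, cost: float = 1.0) -> bool:
        now = self.clock()
        # Refill for the time elapsed since the last call, capped at capacity.
        elapsed = now - self.updated
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.updated = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False  # caller would typically respond with HTTP 429
```

A gateway would keep one bucket per client key. With `rate=1.0` and `capacity=2.0`, a client can send two requests back to back and then one more per second.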

Distributed rate limiting presents challenges in multi-instance gateway deployments. Maintaining accurate rate limit counters across multiple gateway instances requires shared state, typically implemented using Redis or similar fast data stores. The overhead of synchronizing state between instances must be weighed against the accuracy requirements of your rate limiting policies. Some implementations accept eventual consistency, allowing brief periods where total request rates might exceed limits during synchronization delays.

Hierarchical rate limiting applies different limits at various levels: per-user, per-API-key, per-endpoint, and globally across the entire gateway. This multi-tiered approach prevents both individual abusers and systemic overload. A single user might be limited to 100 requests per minute, while the entire API endpoint handles 10,000 requests per minute across all users. Implementing these hierarchies efficiently requires careful data structure design to check multiple limit levels without introducing excessive latency.
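
One way to check several limit levels in a single pass is sketched below, using fixed-window counters for brevity. The level names and the in-memory dictionary are assumptions; a multi-instance deployment would keep the counters in a shared store such as Redis, and old windows would need to be purged.

```python
from collections import defaultdict


class HierarchicalLimiter:
    def __init__(self, limits: dict[str, int], window_seconds: int = 60):
        # Example limits from the text: {"user": 100, "endpoint": 10_000}
        self.limits = limits
        self.window = window_seconds
        # Counter key: (level, identifier, window index). Never purged here.
        self.counts: dict[tuple[str, str, int], int] = defaultdict(int)

    def allow(self, keys: dict[str, str], now: float) -> bool:
        window_index = int(now // self.window)
        slots = [(level, keys[level], window_index) for level in self.limits]
        # Check every level first so a rejected request consumes
        # no quota at any level.
        if any(self.counts[slot] >= self.limits[slot[0]] for slot in slots):
            return False
        for slot in slots:
            self.counts[slot] += 1
        return True
```

A call such as `limiter.allow({"user": "alice", "endpoint": "/orders"}, now=time.time())` is rejected as soon as either the per-user or the per-endpoint counter for the current window is exhausted.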

Security Architecture: Protecting Your Gateway and Services

API gateways occupy a critical security position as the primary entry point to your microservices architecture, making them prime targets for attacks and essential components of defense-in-depth strategies. Comprehensive security requires addressing multiple threat vectors: unauthorized access, injection attacks, denial of service, data exposure, and compromised credentials. Each security layer should add protection without creating friction that degrades user experience or developer productivity.

Authentication Strategies and Token Management

Modern API authentication relies heavily on token-based schemes, with JWT (JSON Web Tokens) emerging as the dominant standard for stateless authentication. Clients authenticate once with an identity provider, receiving a signed JWT containing user identity and claims. The gateway validates these tokens on every request by verifying signatures using public keys, eliminating the need for database lookups or session storage. This stateless approach scales horizontally effortlessly since any gateway instance can validate any token without shared state.

Token validation must balance security and performance. Cryptographic signature verification consumes CPU cycles, but caching validation results for short periods dramatically reduces overhead. Gateways typically cache the validation status of tokens for 30-60 seconds, accepting the minimal risk that a revoked token might remain valid briefly in exchange for significant performance gains. For scenarios requiring immediate revocation, gateways can check token IDs against a revocation list, though this reintroduces state management complexity.
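
The short-lived validation cache can be sketched as a wrapper around whatever verification routine the gateway uses. The `verify` callable here is a hypothetical stand-in for real signature and claims checking, and the 30-second default follows the range mentioned above.

```python
import hashlib
import time
from typing import Callable


class CachedTokenValidator:
    def __init__(
        self,
        verify: Callable[[str], bool],  # hypothetical: full signature check
        ttl_seconds: float = 30.0,
        clock=time.monotonic,
    ):
        self.verify = verify
        self.ttl = ttl_seconds
        self.clock = clock
        # Maps token digest -> (validation result, cache entry expiry time).
        self._cache: dict[str, tuple[bool, float]] = {}

    def is_valid(self, token: str) -> bool:
        # Key by digest so raw tokens are not retained in the cache.
        key = hashlib.sha256(token.encode("utf-8")).hexdigest()
        now = self.clock()
        hit = self._cache.get(key)
        if hit is not None and hit[1] > now:
            return hit[0]
        result = self.verify(token)
        self._cache[key] = (result, now + self.ttl)
        return result
```

A production version would also cap each entry's lifetime at the token's own expiry time and bound the cache size. Both are omitted here to keep the sketch short.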

"Security is not a feature you add at the end—it's a fundamental architectural concern that must be designed into every component from the start. Your gateway is only as secure as the weakest link in your authentication chain."

Multi-factor authentication (MFA) and adaptive authentication add security layers for sensitive operations. Rather than enforcing MFA on every request, which would severely impact user experience, gateways can require additional verification for high-risk actions: financial transactions, account modifications, or access to sensitive data. Risk-based authentication analyzes request context—location, device, time, behavior patterns—to determine when additional verification is warranted, balancing security and usability intelligently.

Authorization and Access Control Patterns

Authorization determines what authenticated users can access, implementing business logic around permissions and roles. Simple role-based access control (RBAC) works for straightforward scenarios: administrators access everything, regular users access their own data, guests have read-only access. However, complex applications require attribute-based access control (ABAC) that considers multiple factors: user attributes, resource attributes, environmental conditions, and action types.

Implementing authorization at the gateway level provides centralized policy enforcement but risks creating a bottleneck that must understand every service's permission model. A hybrid approach works better: gateways enforce coarse-grained authorization (which services can this user access?), while services implement fine-grained authorization (can this user modify this specific resource?). This separation maintains security while keeping the gateway focused on cross-cutting concerns rather than business logic.
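
The coarse-grained half of this split might look like the following deny-by-default check. The service names and roles in `SERVICE_ROLES` are hypothetical; fine-grained, per-resource decisions stay inside each service.

```python
# Hypothetical mapping: service name -> roles allowed to reach it at all.
SERVICE_ROLES = {
    "orders": {"customer", "admin"},
    "billing": {"admin"},
}


def may_access_service(service: str, roles: set[str]) -> bool:
    allowed = SERVICE_ROLES.get(service)
    # Deny by default: unknown services are never reachable.
    if allowed is None:
        return False
    # Allow when the caller holds at least one permitted role.
    return not allowed.isdisjoint(roles)
```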

Policy-as-code approaches using tools like Open Policy Agent (OPA) externalize authorization logic from gateway code, making policies easier to audit, test, and modify. Policies written in declarative languages like Rego express complex rules clearly, and OPA's decision caching ensures minimal performance impact. Gateways query OPA with request context, receiving allow/deny decisions along with any required transformations or additional constraints.

Protecting Against Common Attack Vectors

SQL injection, cross-site scripting (XSS), and other injection attacks target applications through malicious input. While backend services must validate and sanitize inputs, gateways provide an additional defense layer by implementing input validation rules that reject obviously malicious requests before they reach services. Web Application Firewall (WAF) functionality integrated into gateways detects and blocks common attack patterns using signature-based detection and anomaly detection algorithms.

Distributed Denial of Service (DDoS) attacks attempt to overwhelm systems with traffic volume. Gateway-level rate limiting provides basic protection, but sophisticated DDoS mitigation requires additional layers: connection rate limiting, IP reputation checking, challenge-response mechanisms like CAPTCHA, and integration with upstream DDoS protection services. Cloud-based DDoS protection services absorb attack traffic before it reaches your infrastructure, while gateway configurations ensure legitimate traffic continues flowing during attacks.

API abuse takes more subtle forms than brute-force DDoS: credential stuffing using stolen passwords, scraping valuable data, exploiting business logic flaws. Behavioral analysis and anomaly detection identify suspicious patterns: sudden traffic spikes from new IPs, unusual API call sequences, access patterns inconsistent with normal usage. Machine learning models trained on historical traffic can flag anomalies for automated blocking or human review, adapting to new attack patterns over time.

Performance Optimization and Scalability

Gateway performance directly impacts user experience and system capacity, making optimization critical for successful microservices architectures. Every millisecond added to request processing accumulates across the thousands or millions of requests flowing through your gateway daily. Systematic performance optimization addresses multiple dimensions: reducing processing overhead, minimizing network latency, leveraging caching effectively, and scaling horizontally to handle growing traffic.

Minimizing Gateway Processing Overhead

Each feature added to your gateway—authentication, rate limiting, transformation, logging—consumes CPU cycles and adds latency. Efficient implementations minimize this overhead through careful algorithm selection and optimized code paths. Routing decisions based on simple string prefix matching execute in microseconds, while complex regex patterns or external service calls can add milliseconds. Profiling gateway performance under realistic load reveals bottlenecks and guides optimization efforts toward high-impact areas.

"Premature optimization wastes time, but understanding your performance baseline and monitoring for degradation as features are added prevents performance problems from becoming emergencies. Measure first, optimize second, always."

Asynchronous processing and non-blocking I/O allow gateways to handle thousands of concurrent connections without dedicating a thread to each request. Gateway implementations built on runtimes and languages with strong asynchronous I/O support, such as Node.js, Go, or Rust, achieve high throughput by using CPU and memory efficiently. Connection pooling with keep-alive connections to backend services avoids paying the TCP (and TLS) handshake cost on every request.

Strategic Caching for Maximum Impact

Caching represents the most impactful performance optimization available, potentially eliminating backend service calls entirely for frequently requested data. Response caching stores complete API responses, serving subsequent identical requests directly from cache without invoking backend services. Cache keys typically combine request path, query parameters, and relevant headers, ensuring different requests don't receive incorrect cached responses.
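
A cache key built from those parts can be sketched as follows. The choice of which headers vary the response (`accept` and `accept-language` here) is an assumption; in practice it should follow the backend's `Vary` behavior.

```python
import hashlib
from urllib.parse import parse_qsl, urlencode


def cache_key(
    method: str,
    path: str,
    query: str,
    headers: dict[str, str],
    vary: tuple[str, ...] = ("accept", "accept-language"),
) -> str:
    # Sort query parameters so "?a=1&b=2" and "?b=2&a=1" share one entry.
    normalized_query = urlencode(sorted(parse_qsl(query, keep_blank_values=True)))
    # Header names are case-insensitive, so compare them in lowercase.
    lowered = {k.lower(): v for k, v in headers.items()}
    varied = "|".join(f"{name}={lowered.get(name, '')}" for name in vary)
    raw = f"{method.upper()} {path}?{normalized_query} {varied}"
    return hashlib.sha256(raw.encode("utf-8")).hexdigest()
```

Responses that depend on the caller's identity need the user or tenant in the key as well, or should not be cached at the gateway at all.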

Cache invalidation is the hard part of any caching strategy. Time-based expiration provides simple cache management: responses remain cached for a configured duration before being refreshed. This approach works well for data that changes predictably, but it can serve stale data for up to the full cache duration. Event-based invalidation purges cached entries when the underlying data changes, providing fresher data at the cost of additional complexity and coordination between services and the gateway.

Multi-level caching strategies combine different cache types. In-memory caches within gateway instances provide sub-millisecond response times but don't share cached data between instances. Shared caches using Redis or Memcached hold a single copy of the cached data that every gateway instance reads, which keeps responses consistent at the cost of a network round trip per lookup. CDN edge caching pushes static or semi-static content to geographic locations near users, dramatically reducing latency for global audiences.

Horizontal Scaling and Load Distribution

Gateway capacity scales horizontally by deploying multiple instances behind load balancers, distributing traffic across instances to handle increasing load. Stateless gateway designs scale effortlessly since any instance can handle any request without session affinity or state synchronization. Load balancers use various algorithms—round-robin, least connections, weighted distribution—to distribute requests optimally based on instance capacity and health.
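
Two of the algorithms named above, round-robin and least connections, are sketched below. The instance names are placeholders, and the classes are not thread-safe; they only illustrate the selection logic.

```python
import itertools


class RoundRobin:
    def __init__(self, instances: list[str]):
        self._cycle = itertools.cycle(instances)

    def pick(self) -> str:
        # Hand out instances in a fixed rotating order.
        return next(self._cycle)


class LeastConnections:
    def __init__(self, instances: list[str]):
        self.active = {instance: 0 for instance in instances}

    def acquire(self) -> str:
        # Choose the instance with the fewest in-flight requests
        # (ties go to the instance listed first).
        instance = min(self.active, key=self.active.get)
        self.active[instance] += 1
        return instance

    def release(self, instance: str) -> None:
        # Call when the request finishes so the count stays accurate.
        self.active[instance] -= 1
```

Round-robin needs no bookkeeping and suits uniform requests. Least connections adapts better when request durations vary widely, at the cost of tracking in-flight counts.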

Auto-scaling policies automatically adjust gateway instance counts based on traffic patterns and resource utilization. Cloud platforms provide sophisticated auto-scaling that monitors CPU, memory, request rates, and custom metrics, launching additional instances during traffic spikes and terminating excess capacity during quiet periods. Proper auto-scaling configuration requires understanding your traffic patterns: gradual scaling for predictable daily cycles, aggressive scaling for sudden viral events or marketing campaigns.

Health checking ensures load balancers only route traffic to healthy gateway instances. Active health checks periodically probe instances with synthetic requests, removing unresponsive instances from rotation. Passive health checks monitor real request success rates, detecting degraded instances that respond slowly or return errors. Combining both approaches provides robust failure detection while minimizing unnecessary health check overhead.
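
A passive health check can be approximated with a sliding window of recent request outcomes, as in this sketch. The window size, error-rate threshold, and minimum sample count are illustrative defaults, not recommended values.

```python
from collections import deque


class PassiveHealth:
    def __init__(
        self, window: int = 20, max_error_rate: float = 0.5, min_samples: int = 5
    ):
        # Keep only the most recent `window` outcomes (True = success).
        self.outcomes: deque = deque(maxlen=window)
        self.max_error_rate = max_error_rate
        self.min_samples = min_samples

    def record(self, ok: bool) -> None:
        self.outcomes.append(ok)

    def healthy(self) -> bool:
        # Too little data to judge: assume healthy rather than eject early.
        if len(self.outcomes) < self.min_samples:
            return True
        errors = self.outcomes.count(False)
        return errors / len(self.outcomes) <= self.max_error_rate
```

A load balancer would keep one tracker per instance, call `record` after every proxied request, and skip instances for which `healthy()` returns `False` until an active probe succeeds again.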

Observability, Monitoring, and Operational Excellence

Operating an API gateway in production demands comprehensive observability: the ability to understand system behavior, diagnose problems, and optimize performance based on real-world usage data. Effective observability combines metrics, logs, and traces to provide complete visibility into gateway operations, enabling teams to detect issues before they impact users and resolve problems quickly when they occur.

Metrics Collection and Analysis

Gateway metrics provide quantitative insights into system health and performance. Request rate metrics show traffic volume over time, revealing daily patterns, growth trends, and anomalous spikes. Latency metrics measure time spent processing requests, broken down by percentiles (p50, p95, p99) to understand typical performance and worst-case scenarios. Error rate metrics track failed requests by type—client errors (4xx), server errors (5xx), timeouts—helping identify systemic issues versus isolated failures.
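
To make the percentile figures concrete, the sketch below computes them with the nearest-rank method over raw latency samples. Production metrics systems usually approximate percentiles from histograms instead of sorting raw samples; the function here is only illustrative.

```python
import math


def percentile(samples: list[float], p: int) -> float:
    """Nearest-rank percentile: smallest sample with at least p% at or below it."""
    if not samples:
        raise ValueError("percentile of an empty sample set is undefined")
    if not 0 < p <= 100:
        raise ValueError("p must be in the range 1..100")
    ordered = sorted(samples)
    rank = math.ceil(p * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]
```

For latencies of 1 to 100 ms, one sample each, `percentile(samples, 50)` is 50 and `percentile(samples, 99)` is 99. A single 5-second outlier would leave p50 unchanged while dominating the maximum, which is why tail percentiles are tracked separately from averages.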

Resource utilization metrics monitor gateway infrastructure health: CPU usage, memory consumption, network bandwidth, connection counts. These metrics inform capacity planning and auto-scaling policies while providing early warning of resource exhaustion. Backend service metrics tracked by the gateway—response times, error rates, availability—enable comprehensive system monitoring without instrumenting every service individually.

- Request throughput measuring requests per second across all endpoints and individual routes

- Response latency tracking p50, p95, and p99 latencies to identify performance degradation

- Error rates categorized by error type, endpoint, and client to pinpoint problem areas

- Cache hit ratios indicating caching effectiveness and opportunities for optimization

- Rate limit violations showing which clients or endpoints experience throttling

- Backend service health monitoring availability and performance of dependent services

Structured Logging for Debugging and Audit

Comprehensive logging captures detailed information about every request flowing through the gateway, supporting debugging, security audits, and compliance requirements. Request logs record client IP addresses, the authenticated identity (never raw credentials or tokens), requested endpoints, response status codes, and processing times. Structured logging using JSON or similar formats makes logs machine-readable, enabling automated analysis and correlation across distributed systems.
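
A structured access-log entry might be produced as in the sketch below. The field names are assumptions chosen for illustration; what matters is that every entry is a single JSON object with stable keys and that secrets are left out.

```python
import json
from datetime import datetime, timezone


def access_log_line(
    *,
    correlation_id: str,
    client_ip: str,
    subject: str,  # authenticated identity, e.g. a user ID; never the token
    method: str,
    path: str,
    status: int,
    duration_ms: float,
) -> str:
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "correlation_id": correlation_id,
        "client_ip": client_ip,
        "subject": subject,
        "method": method,
        "path": path,
        "status": status,
        "duration_ms": duration_ms,
    }
    # One JSON object per line keeps the output easy to ship and parse.
    return json.dumps(record, sort_keys=True)
```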

"Logs tell the story of what happened, metrics show you the trends, and traces reveal how it happened. You need all three to truly understand your system's behavior in production."

Log levels control verbosity and storage requirements. Production systems typically log at INFO level for normal operations, capturing essential request details without overwhelming storage. ERROR logs capture failures requiring investigation, while DEBUG logs provide detailed information useful during troubleshooting but too verbose for continuous production use. Dynamic log level adjustment allows temporarily increasing verbosity for specific endpoints or clients when investigating issues.

Log aggregation and analysis tools like Elasticsearch, Splunk, or CloudWatch Logs centralize logs from distributed gateway instances, providing unified search and analysis capabilities. Correlation IDs included in every log entry link related logs across services, enabling request tracing through complex distributed workflows. Automated alerting based on log patterns detects error spikes, security events, or anomalous behavior requiring immediate attention.

Distributed Tracing for Request Flow Visibility

Distributed tracing tracks individual requests as they flow through multiple services, providing unprecedented visibility into complex microservices interactions. Each request receives a unique trace ID, and every service involved adds span information describing its processing. Gateway instrumentation creates the root span for each request, recording when requests enter and exit the gateway along with all processing steps in between.

Trace data reveals performance bottlenecks and unexpected dependencies. Visualizing traces shows exactly how much time each service consumed, where requests waited for responses, and which service calls happened sequentially versus in parallel. This visibility proves invaluable when optimizing end-to-end request latency, often revealing surprising findings: network latency dominating processing time, unnecessary sequential calls that could be parallelized, or services performing redundant work.

Sampling strategies control tracing overhead and storage costs. Tracing every request provides complete visibility but generates enormous data volumes in high-traffic systems. Adaptive sampling traces all slow or failed requests while sampling a percentage of successful requests, capturing enough data for analysis without overwhelming storage. Priority sampling ensures traces for specific high-value endpoints or users are always captured regardless of sampling rates.
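The sampling rules described above can be expressed as a single decision function. This sketch assumes the decision is made after the request completes, so duration and status are known; the threshold values, the 5% base rate, and the `should_keep_trace` name are illustrative assumptions, not recommendations.

```python
import random


def should_keep_trace(duration_ms, status_code, endpoint, *,
                      slow_threshold_ms=500, base_rate=0.05,
                      priority_endpoints=frozenset(), rng=random.random):
    """Decides whether a finished request's trace should be stored."""
    # Priority sampling: always keep traces for designated endpoints.
    if endpoint in priority_endpoints:
        return True
    # Adaptive part: always keep slow or failed requests.
    if status_code >= 500 or duration_ms >= slow_threshold_ms:
        return True
    # Otherwise keep only a fraction of ordinary successful requests.
    return rng() < base_rate
```

Passing `rng` as a parameter keeps the function deterministic under test; production code would leave the default in place.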

Deployment Strategies and Operational Best Practices

Successful gateway deployments require careful planning around infrastructure, configuration management, and operational procedures. Gateways sit on the critical path of your architecture, so deployment strategies and operational practices directly affect system availability and reliability. Balancing the need for rapid iteration with the requirement for stability demands mature DevOps practices and thoughtful automation.

Infrastructure and Deployment Architecture

High availability requires deploying gateway instances across multiple availability zones or regions, ensuring no single infrastructure failure takes down your entire API. Load balancers distribute traffic across gateway instances, automatically routing around failed instances. Active-active configurations run gateway instances in multiple regions simultaneously, providing geographic redundancy and reduced latency for global users. Active-passive configurations maintain standby capacity that activates during primary region failures, balancing cost against recovery time objectives.

Container orchestration platforms like Kubernetes simplify gateway deployment and scaling. Kubernetes deployments define desired gateway instance counts, resource requirements, and health check configurations. Horizontal Pod Autoscalers automatically scale gateway pods based on CPU utilization or custom metrics like request rates. Service meshes integrated with Kubernetes provide additional capabilities: automatic service discovery, mutual TLS, and sophisticated traffic routing without modifying gateway configurations.

Configuration management separates gateway code from environment-specific settings, enabling the same gateway binary to run across development, staging, and production environments with different configurations. Configuration stored in version control provides audit trails and enables rollback when configuration changes cause problems. External configuration stores like Consul, etcd, or cloud-native solutions allow runtime configuration updates without redeploying gateways, essential for dynamic routing changes and feature flags.

Safe Deployment Practices and Rollback Strategies

Blue-green deployments minimize downtime and risk during gateway updates. Two identical production environments—blue and green—run simultaneously, with only one serving live traffic. New gateway versions deploy to the inactive environment, undergo thorough testing, then traffic switches to the updated environment. If problems emerge, switching back to the previous environment provides instant rollback. This approach requires double the infrastructure but provides maximum safety for critical components like API gateways.

Canary deployments gradually roll out changes to small percentages of traffic before full deployment. Initial canary stages might route 5% of traffic to the new gateway version while monitoring error rates, latency, and other key metrics. Successful canary stages progressively increase traffic percentages—10%, 25%, 50%—until the new version handles all traffic. Automated canary analysis compares metrics between canary and baseline versions, automatically rolling back if degradation is detected.
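A canary rollout combines two pieces: weighted traffic splitting and a promotion decision. The sketch below uses the stage percentages mentioned above and compares only error rates; the 1% tolerance and the function names are assumptions made for illustration, and real canary analysis would usually consider latency and other metrics as well.

```python
import random

CANARY_STAGES = [5, 10, 25, 50, 100]  # percent of traffic sent to the canary


def pick_version(canary_percent, rng=random.random):
    """Routes one request to the canary with the given probability."""
    return "canary" if rng() * 100 < canary_percent else "stable"


def next_canary_percent(current, baseline_error_rate, canary_error_rate,
                        tolerance=0.01):
    """Returns the next traffic percentage, or 0 to signal a rollback."""
    if canary_error_rate > baseline_error_rate + tolerance:
        return 0  # degradation detected: send all traffic back to stable
    later = [stage for stage in CANARY_STAGES if stage > current]
    return later[0] if later else 100
```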

Feature flags decouple deployment from release, allowing new gateway features to deploy in disabled states then activate when ready. This separation enables continuous deployment while maintaining control over when users experience changes. Percentage-based feature flags gradually roll out features to increasing user percentages, similar to canary deployments but at the feature level. User-targeted flags enable testing features with specific user segments before general availability.
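Percentage-based and user-targeted flags are often implemented by hashing a stable user identifier into a bucket. The following is a minimal sketch under that assumption; the flag dictionary shape (`name`, `percent`, `allow_users`) is hypothetical and not taken from any particular feature-flag product.

```python
import hashlib


def bucket(flag_name, user_id):
    """Maps a user to a stable bucket in the range 0..99 for a given flag."""
    digest = hashlib.sha256(f"{flag_name}:{user_id}".encode()).digest()
    return int.from_bytes(digest[:4], "big") % 100


def is_enabled(flag, user_id):
    """Checks user targeting first, then the percentage rollout."""
    if user_id in flag.get("allow_users", ()):
        return True
    return bucket(flag["name"], user_id) < flag.get("percent", 0)
```

Because the bucket depends only on the flag name and user ID, a user who sees the feature at 10% keeps seeing it when the rollout grows to 50%, and including the flag name in the hash avoids the same users always being first across different flags.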

Disaster Recovery and Business Continuity

Comprehensive disaster recovery plans address various failure scenarios: complete region outages, data center failures, widespread service degradation, and security breaches. Regular disaster recovery testing validates that plans work as expected, revealing gaps before real emergencies occur. Automated failover procedures reduce recovery time and eliminate manual errors during high-stress situations, though human oversight remains essential for complex scenarios.

Circuit breakers protect against cascading failures when backend services become unavailable or degraded. When a service exceeds error thresholds, the circuit breaker opens, immediately rejecting requests to that service without attempting calls that will fail. This fail-fast behavior prevents request queuing and resource exhaustion, giving failing services time to recover. Half-open states periodically test service recovery, closing the circuit when services return to health.
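The closed, open, and half-open states can be captured in a small class. This sketch simplifies the error threshold to a count of consecutive failures and assumes single-threaded use; the default values and the `CircuitOpenError` name are illustrative assumptions.

```python
import time


class CircuitOpenError(Exception):
    """Raised when a call is rejected because the circuit is open."""


class CircuitBreaker:
    def __init__(self, failure_threshold=5, reset_timeout=30.0,
                 clock=time.monotonic):
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self.clock = clock
        self.failures = 0
        self.opened_at = None  # None means the circuit is closed

    @property
    def state(self):
        if self.opened_at is None:
            return "closed"
        if self.clock() - self.opened_at >= self.reset_timeout:
            return "half-open"  # a trial request is allowed through
        return "open"

    def call(self, func, *args, **kwargs):
        if self.state == "open":
            # Fail fast without touching the struggling backend.
            raise CircuitOpenError("backend circuit is open")
        try:
            result = func(*args, **kwargs)
        except Exception:
            self.failures += 1
            # A failed trial re-opens the circuit; otherwise open at threshold.
            if self.opened_at is not None or self.failures >= self.failure_threshold:
                self.opened_at = self.clock()
            raise
        # Success closes the circuit and resets the failure count.
        self.failures = 0
        self.opened_at = None
        return result
```

A gateway would typically keep one breaker per backend service, so a failing service does not block calls to healthy ones.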

Fallback responses provide graceful degradation when services are unavailable. Rather than returning generic errors, gateways can serve cached data, default responses, or simplified alternatives that maintain partial functionality. Fallback strategies vary by endpoint: critical authentication endpoints might have no fallback and simply fail, while product listing endpoints might return cached or reduced data sets. Clearly communicating degraded functionality to users prevents confusion and sets appropriate expectations.
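A per-endpoint fallback policy might look like the sketch below. The `fetch` callable, the in-memory `cache` dict, the `/auth/login` path, and the `degraded` flag in the response are all assumptions made for illustration; a real gateway would also bound cache age.

```python
def fetch_with_fallback(endpoint, fetch, cache,
                        no_fallback=frozenset({"/auth/login"})):
    """Calls the backend; on failure serves the last good response if allowed."""
    try:
        body = fetch(endpoint)
    except Exception:
        # Critical endpoints, or endpoints never cached, simply fail.
        if endpoint in no_fallback or endpoint not in cache:
            return {"status": 503, "body": None, "degraded": True}
        # Otherwise serve stale data and flag it so clients can tell users.
        return {"status": 200, "body": cache[endpoint], "degraded": True}
    cache[endpoint] = body  # remember the last good response
    return {"status": 200, "body": body, "degraded": False}
```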

Common Pitfalls and How to Avoid Them

Building API gateways involves numerous decisions where seemingly reasonable choices lead to significant problems down the road. Learning from common mistakes helps teams avoid painful experiences and build more robust systems from the start. These pitfalls span technical architecture, operational practices, and organizational dynamics, each requiring awareness and deliberate counter-measures.

The Monolithic Gateway Anti-Pattern

Centralizing too much business logic in the gateway transforms it from a focused infrastructure component into a distributed monolith that defeats the purpose of microservices architecture. Gateways that perform complex data aggregation, implement business rules, or contain service-specific logic become bottlenecks requiring redeployment whenever any backend service changes. This coupling eliminates the independence that makes microservices valuable, creating a single point of change that coordinates releases across multiple teams.

Maintaining clear boundaries prevents this anti-pattern. Gateways should handle cross-cutting concerns—authentication, routing, rate limiting—while leaving business logic to services. Data aggregation belongs in dedicated composition services or Backend for Frontend layers, not in the gateway itself. When teams find themselves adding service-specific code to the gateway, it signals the need to reconsider architecture and push that logic to more appropriate locations.

Inadequate Capacity Planning and Performance Testing

Underestimating gateway capacity requirements leads to performance problems or outages during traffic spikes. Gateways must handle peak traffic with headroom for growth, not just average loads. Performance testing under realistic conditions reveals capacity limits and identifies bottlenecks before they impact production. Load testing tools simulate thousands of concurrent connections with realistic request patterns, measuring throughput, latency, and resource utilization under stress.

"Your gateway will be tested by production traffic eventually—the only question is whether you test it first under controlled conditions or discover its limits during a critical business event when customers are watching."

Capacity planning considers both vertical and horizontal scaling limits. Individual gateway instances have CPU and memory constraints that limit requests per second. Network bandwidth, connection limits, and downstream service capacity also constrain system throughput. Understanding these limits and architecting for horizontal scaling ensures systems handle growth gracefully rather than hitting hard ceilings requiring emergency architectural changes.
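As a rough worked example of planning for peak traffic with headroom, the function below estimates how many gateway instances are needed. The per-instance throughput would come from your own load tests; the 30% headroom, the minimum of two instances, and the `instances_needed` name are assumptions for illustration only.

```python
import math


def instances_needed(peak_rps, per_instance_rps, headroom=0.3, min_instances=2):
    """Estimates instance count so each instance runs below (1 - headroom) load."""
    if not 0 <= headroom < 1:
        raise ValueError("headroom must be in the range [0, 1)")
    usable_rps = per_instance_rps * (1 - headroom)
    return max(min_instances, math.ceil(peak_rps / usable_rps))
```

For a peak of 10,000 requests per second and instances that sustain 1,500 each, keeping 30% headroom leaves 1,050 usable requests per second per instance, which rounds up to 10 instances. The estimate ignores network bandwidth, connection limits, and downstream capacity, which can cap throughput before CPU does.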

Insufficient Observability and Monitoring

Deploying gateways without comprehensive monitoring creates blind spots that hide problems until they become critical. Teams need visibility into gateway health, performance, and usage patterns to operate effectively. Monitoring gaps often emerge around edge cases: how does the gateway behave during partial backend outages? What happens when specific services degrade? How do rate limits affect user experience?

Comprehensive monitoring covers multiple dimensions: infrastructure metrics, application metrics, business metrics, and user experience metrics. Alerting strategies balance sensitivity and specificity—too many alerts cause fatigue and important signals get ignored, while too few alerts miss problems until users complain. Alert thresholds should reflect actual impact: slight latency increases might not warrant alerts, but sustained error rate spikes demand immediate attention.

Security Oversights and Compliance Gaps

Security vulnerabilities in API gateways expose entire microservices architectures to attack. Common oversights include weak authentication schemes, missing authorization checks, inadequate input validation, and exposed internal service details. Regular security audits and penetration testing identify vulnerabilities before attackers exploit them. Automated security scanning tools detect common issues like outdated dependencies, misconfigurations, and known vulnerability patterns.

Compliance requirements around data privacy, audit logging, and access control must be designed into gateway architecture from the start. Retrofitting compliance features into existing gateways proves far more difficult than building them in initially. GDPR, HIPAA, PCI-DSS, and other regulatory frameworks impose specific requirements around data handling, logging, and access control that gateways must support. Legal and compliance teams should review gateway designs early to ensure requirements are met.

Advanced Patterns and Future Considerations

As microservices architectures mature, advanced patterns and emerging technologies extend gateway capabilities beyond traditional request routing and authentication. These advanced topics represent the cutting edge of API gateway evolution, addressing complex scenarios and leveraging new technologies to solve previously intractable problems.

GraphQL Gateway Patterns

GraphQL fundamentally changes API design by allowing clients to request exactly the data they need through flexible queries rather than fixed endpoints. GraphQL gateways aggregate multiple backend services into unified schemas, enabling clients to retrieve related data from multiple services in single requests. Schema stitching combines separate GraphQL schemas from individual services into cohesive graphs, while federation allows services to contribute types and fields to shared schemas without tight coupling.

GraphQL gateways face unique challenges around authorization, caching, and query complexity. Field-level authorization determines which users can access specific data fields, requiring more granular security than endpoint-level controls. Query complexity analysis prevents expensive queries from overwhelming backend services by analyzing query depth, breadth, and estimated cost before execution. Response caching with GraphQL requires sophisticated strategies since different queries against the same data need different cache keys.
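Query complexity analysis can be illustrated on an already-parsed selection set. The sketch below assumes the query has been reduced to nested dicts mapping field names to their sub-selections, which is a simplification; a real gateway would walk the AST produced by its GraphQL library. The cost table is hypothetical.

```python
def query_depth(selection):
    """Returns the deepest nesting level in a selection set ({} means a leaf)."""
    if not selection:
        return 0
    return 1 + max(query_depth(child) for child in selection.values())


def query_cost(selection, field_costs, default_cost=1):
    """Sums a per-field cost over every field in the query."""
    return sum(
        field_costs.get(name, default_cost)
        + query_cost(child, field_costs, default_cost)
        for name, child in selection.items()
    )


def is_allowed(selection, field_costs, max_depth=5, max_cost=100):
    """Rejects queries that are too deep or too expensive before execution."""
    return (query_depth(selection) <= max_depth
            and query_cost(selection, field_costs) <= max_cost)
```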

Event-Driven and Asynchronous Patterns

Traditional request-response patterns don't fit all scenarios. Long-running operations, real-time updates, and event notifications require asynchronous communication patterns. WebSocket support in gateways enables persistent connections for real-time bidirectional communication between clients and services. Server-Sent Events (SSE) provide simpler one-way streaming from servers to clients, useful for live updates and notifications.

Event-driven architectures integrate gateways with message brokers like Kafka, RabbitMQ, or cloud-native event services. Gateways can publish events to message brokers based on API requests, enabling asynchronous processing workflows. Webhook management transforms incoming webhooks from external services into internal events, providing consistent event handling across the system. These patterns decouple request processing from response generation, improving resilience and scalability.

Machine Learning Integration and Intelligent Routing

Machine learning models deployed within or alongside gateways enable intelligent traffic management and security. Anomaly detection models identify unusual traffic patterns that might indicate attacks, abuse, or system problems. Predictive scaling models forecast traffic patterns and proactively adjust capacity before demand spikes. Intelligent routing can use techniques such as reinforcement learning to optimize traffic distribution based on service performance, user location, and request characteristics.

Content-based routing leverages ML models to analyze request content and route to specialized service instances optimized for specific request types. Image classification models might route image processing requests to GPU-enabled instances, while text analysis models route document processing to instances with NLP capabilities. This intelligent routing maximizes resource efficiency and improves response times by matching requests with optimal processing resources.

Edge Computing and Globally Distributed Gateways

Edge computing pushes computation closer to users, reducing latency and improving user experience for global applications. Globally distributed gateway deployments across multiple continents serve users from nearby locations, minimizing network round-trip times. Edge gateways can execute lightweight processing, caching, and routing decisions locally while coordinating with centralized control planes for policy enforcement and configuration management.

Multi-region active-active architectures require sophisticated data synchronization and consistency management. Gateway configurations, rate limit counters, and cache data must synchronize across regions while tolerating network partitions and regional failures. Eventual consistency models accept temporary inconsistencies across regions in exchange for continued operation during network problems, while strong consistency models ensure identical behavior across regions at the cost of increased latency and reduced availability during failures.

Frequently Asked Questions

What is the difference between an API gateway and a load balancer?

Load balancers distribute traffic across multiple instances of the same service, focusing primarily on availability and performance. API gateways provide comprehensive API management including routing to different services, authentication, rate limiting, request transformation, and response aggregation. Traditional load balancers operate at the network or transport layer (application-layer load balancers also exist, but they generally stop at generic HTTP routing), while gateways work at the application layer with deep understanding of API semantics. Many architectures use both: load balancers in front of gateway instances for high availability, with gateways handling API-specific concerns.

Should authentication happen at the gateway or in individual services?

Authentication typically happens at the gateway for efficiency and consistency, validating credentials once before requests reach backend services. The gateway then forwards authenticated user context to services via headers or tokens. However, services should still validate they received authenticated requests and perform their own authorization checks. This defense-in-depth approach ensures services remain secure even if gateway security is somehow bypassed, while avoiding redundant authentication overhead across every service.
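One way a service can check that user context really came from the gateway is for the gateway to sign the forwarded header with a shared secret. The sketch below shows that idea with an HMAC; it is an assumption-laden illustration rather than a recommended protocol, since it has no expiry or key rotation, and many deployments use signed JWTs or mutual TLS instead. The function names and header layout are hypothetical.

```python
import base64
import hashlib
import hmac
import json


def sign_context(context, secret):
    """Gateway side: encodes user context and appends an HMAC-SHA256 signature."""
    payload = base64.urlsafe_b64encode(
        json.dumps(context, sort_keys=True).encode()
    ).decode()
    signature = hmac.new(secret, payload.encode(), hashlib.sha256).hexdigest()
    return f"{payload}.{signature}"


def verify_context(header_value, secret):
    """Service side: rejects the header unless the signature matches."""
    payload, _, signature = header_value.rpartition(".")
    expected = hmac.new(secret, payload.encode(), hashlib.sha256).hexdigest()
    # Constant-time comparison on bytes avoids leaking how much matched.
    if not hmac.compare_digest(signature.encode(), expected.encode()):
        raise ValueError("invalid gateway signature")
    return json.loads(base64.urlsafe_b64decode(payload))
```

After verification the service still applies its own authorization rules to the returned context, for example by checking roles before serving the request.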

How do I handle versioning in my API gateway?

API versioning strategies include URL path versioning (api/v1/users), header-based versioning (Accept: application/vnd.api+json;version=1), and query parameter versioning (api/users?version=1). Gateways route requests to appropriate service versions based on version indicators, allowing multiple API versions to coexist during transition periods. Maintain backward compatibility when possible, use semantic versioning to communicate change significance, and provide clear deprecation timelines for old versions. Gateway routing rules map version indicators to specific backend service deployments.
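The three version indicators can be resolved in a fixed order and then mapped to a backend. In this sketch the precedence (path, then header, then query parameter), the default version, and the backend URLs are all hypothetical choices, and paths are assumed to start with a leading slash.

```python
import re

# Hypothetical mapping from API version to backend deployment.
BACKENDS = {
    "1": "http://users-v1.internal",
    "2": "http://users-v2.internal",
}
DEFAULT_VERSION = "1"


def resolve_version(path, headers, query):
    """Finds the requested version from path, Accept header, or query string."""
    match = re.match(r"^/api/v(\d+)(/|$)", path)
    if match:
        return match.group(1)
    match = re.search(r"version=(\d+)", headers.get("Accept", ""))
    if match:
        return match.group(1)
    if "version" in query:
        return str(query["version"])
    return DEFAULT_VERSION


def select_backend(path, headers, query):
    """Maps the resolved version to a backend, failing on unknown versions."""
    version = resolve_version(path, headers, query)
    if version not in BACKENDS:
        raise LookupError(f"unsupported API version: {version}")
    return BACKENDS[version]
```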

What happens if my API gateway fails?

Gateway failures represent serious availability risks since they sit in the critical path for all API traffic. High availability architectures deploy multiple gateway instances across availability zones with load balancers distributing traffic and routing around failures. Health checks detect failed instances and remove them from rotation automatically. For complete region failures, multi-region active-active or active-passive architectures provide geographic redundancy. Regular disaster recovery testing validates failover procedures work correctly. Despite best efforts, gateway failures will occur—design for graceful degradation and rapid recovery rather than assuming perfect availability.

How much latency does an API gateway add to requests?

Well-optimized gateways add minimal latency, typically 1-5 milliseconds for simple routing and authentication. Complex operations like multiple service aggregation, extensive transformations, or external authentication provider calls can add tens or hundreds of milliseconds. Measure gateway latency in your specific environment under realistic load since performance varies significantly based on gateway technology, configuration, and infrastructure. Optimize by minimizing unnecessary processing, leveraging caching aggressively, and ensuring adequate gateway capacity. Remember that the operational benefits of centralized security, monitoring, and traffic management typically outweigh modest latency increases.

Can I use multiple API gateways in the same system?

Multiple gateways serving different purposes or client types are common in mature architectures. Backend for Frontend patterns deploy separate gateways for mobile, web, and partner APIs, each optimized for specific client needs. Internal and external gateways separate private service-to-service communication from public API access with different security and performance characteristics. Ensure clear boundaries between gateway responsibilities to avoid confusion and duplication. Multiple gateways increase operational complexity, so adopt this pattern when benefits of specialized gateways outweigh additional management overhead.