How to Build GraphQL APIs from Scratch

This guide walks through building a GraphQL API from scratch: designing the schema, implementing resolvers, adding queries and mutations, setting up the server and client, testing, optimizing performance, and securing endpoints.


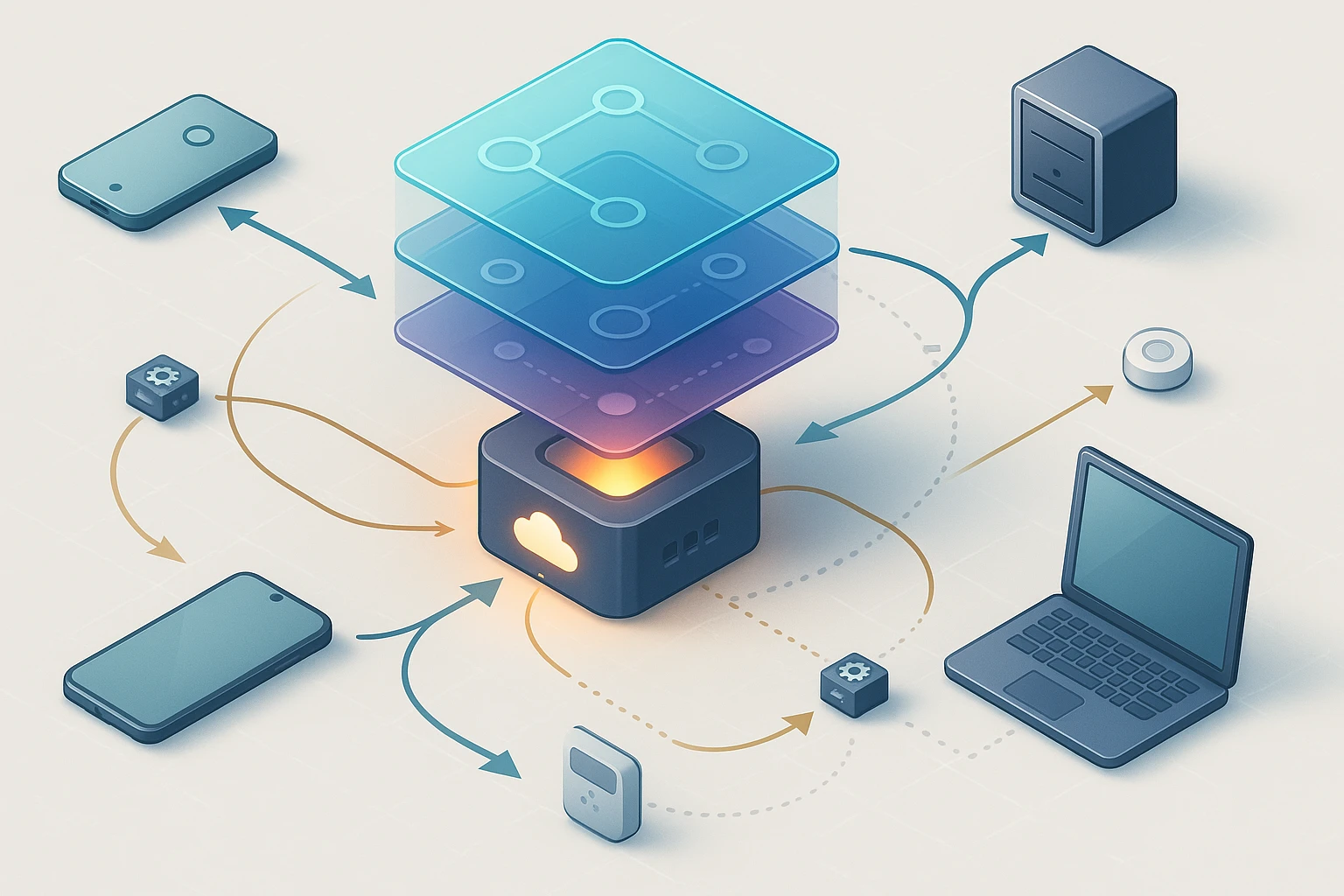

Modern web development demands efficient, flexible data communication between clients and servers. Traditional REST APIs, while functional, often lead to over-fetching (receiving fields the client never uses) or under-fetching (needing several requests to assemble one view), which wastes bandwidth and adds round trips. GraphQL is a query language designed to address these problems by letting clients ask for exactly the data they need. Whether you're building a mobile application, a complex web platform, or a microservices architecture, knowing how to implement GraphQL helps you create scalable, maintainable systems.

GraphQL is both a query language for APIs and a runtime for fulfilling those queries with your existing data. Unlike REST, which exposes multiple endpoints for different resources, GraphQL typically serves a single endpoint that returns precisely the fields a client requests, nothing more and nothing less. This shifts API design from resource-oriented thinking toward graph-based data modeling. The benefits look different depending on your role: for frontend developers, it simplifies data fetching; for backend developers, it provides clear contracts and type safety; and at the architectural level, it enables efficient communication patterns across distributed systems.

Throughout this comprehensive guide, you'll discover practical implementation strategies for building GraphQL APIs from the ground up. We'll explore schema design principles, resolver implementation patterns, authentication mechanisms, performance optimization techniques, and real-world integration scenarios. You'll gain hands-on knowledge of essential tools, libraries, and best practices that professional development teams employ in production environments. By the end, you'll possess the technical foundation and practical insights needed to architect, develop, and deploy robust GraphQL APIs that serve real business requirements.

Understanding GraphQL Fundamentals

Before diving into implementation details, it helps to understand the core concepts that set GraphQL apart from traditional API architectures. The technology revolves around a strongly typed schema that defines every operation clients can perform. This schema serves as a contract between client and server and provides documentation and validation automatically: tools can introspect it, and the server rejects queries that don't conform to it. Unlike REST APIs, where documentation often drifts out of date, the GraphQL schema remains the single source of truth.

The type system forms the foundation of every GraphQL API. Scalar types like String, Int, Float, Boolean, and ID represent primitive values, while object types define complex data structures with fields. Each field can return either scalar values or other object types, creating a graph-like structure that mirrors real-world data relationships. Enums restrict values to specific sets, while interfaces and unions enable polymorphic designs. Input types define structured arguments, most often for mutations, keeping write payloads separate from the object types clients read.

"The schema-first approach fundamentally changes how teams collaborate, creating a clear contract that frontend and backend developers can work against simultaneously without blocking each other."

Three primary operation types define GraphQL's capabilities: queries for reading data, mutations for modifying data, and subscriptions for real-time updates. Queries allow clients to specify exactly which fields they need, traversing relationships without multiple round trips. Mutations handle create, update, and delete operations with predictable input and output structures. Subscriptions push updates to clients when specific events occur, enabling reactive user interfaces; they most often run over WebSocket connections, although the GraphQL specification doesn't mandate a transport.

Schema Definition Language Essentials

The Schema Definition Language (SDL) provides an intuitive syntax for defining GraphQL schemas. Rather than programmatically constructing types, SDL offers a declarative approach that reads like documentation. A basic schema might define a User type with fields like id, name, and email, along with a Query type that exposes operations for fetching users. This human-readable format facilitates communication between team members and serves as living documentation.

Field resolvers represent the bridge between schema definitions and actual data sources. When a client requests specific fields, the GraphQL runtime invokes corresponding resolver functions that fetch or compute values. Resolvers receive four arguments: the parent object, field arguments, context shared across all resolvers, and info containing query metadata. This architecture enables flexible data sourcing from databases, REST APIs, microservices, or any other backend system.
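To make the SDL and the four-argument resolver signature concrete, here is a minimal TypeScript sketch of the User schema described above. The in-memory `db` object, the `Post` type, and the row shapes are hypothetical stand-ins for a real data source. No GraphQL library is imported, so the resolvers are plain functions you can call directly, the way a runtime would call them.

```typescript
// SDL for the schema described above. Libraries such as Apollo Server and
// GraphQL Tools accept type definitions as a string like this one.
const typeDefs = /* GraphQL */ `
  type User {
    id: ID!
    name: String!
    email: String!
    posts: [Post!]!
  }

  type Post {
    id: ID!
    title: String!
  }

  type Query {
    user(id: ID!): User
  }
`;

// Row shapes from a hypothetical in-memory "database".
interface UserRow { id: string; name: string; email: string; }
interface PostRow { id: string; title: string; authorId: string; }

// Context is built once per request and shared by every resolver.
interface Context { db: { users: UserRow[]; posts: PostRow[] }; }

// Each resolver takes (parent, args, context, info). `info` is typed as
// `unknown` here; in graphql-js it is a GraphQLResolveInfo object.
const resolvers = {
  Query: {
    user: (_parent: unknown, args: { id: string }, context: Context, _info: unknown): UserRow | null =>
      context.db.users.find((u) => u.id === args.id) ?? null,
  },
  User: {
    // `parent` is the UserRow returned by Query.user. id, name, and email need
    // no resolver: the default resolver reads the same-named property.
    posts: (parent: UserRow, _args: unknown, context: Context, _info: unknown): PostRow[] =>
      context.db.posts.filter((p) => p.authorId === parent.id),
  },
};
```

A server library would receive `typeDefs` and `resolvers` together and take care of parsing queries, validating them against the schema, and invoking each function in the right order.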

Nullability and list types add precision to schema definitions. By default, fields can return null, but adding an exclamation mark makes them non-nullable, guaranteeing a value will always be present. Square brackets denote lists, and combining these modifiers creates four patterns: [String] is a nullable list of nullable strings, [String!] is a nullable list whose elements are never null, [String]! guarantees a list but allows null elements, and [String!]! ensures both the list and its elements always exist. The runtime enforces these guarantees: if a non-null field resolves to null, GraphQL reports an error and sets the nearest nullable parent field to null, so the modifiers both clarify data expectations and surface bugs close to where they occur.
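One way to internalize the four combinations is to map them onto TypeScript types, roughly the way GraphQL Code Generator represents nullable values with a `Maybe<T>` alias. The sketch below assumes that mapping; the runtime check is only an illustration of the `[String!]!` guarantee, not part of any library.

```typescript
// Nullable values in generated types are commonly expressed as Maybe<T>.
type Maybe<T> = T | null;

type NullableListOfNullable = Maybe<Array<Maybe<string>>>; // [String]
type NullableListOfNonNull = Maybe<Array<string>>;         // [String!]
type ListOfNullable = Array<Maybe<string>>;                // [String]!
type ListOfNonNull = Array<string>;                        // [String!]!

// Values each shape accepts:
const a: NullableListOfNullable = null;     // the whole list may be null
const b: NullableListOfNonNull = ["x", "y"]; // list may be null, items may not
const c: ListOfNullable = ["x", null];      // list required, items nullable
const d: ListOfNonNull = ["x", "y"];        // neither list nor items may be null

// Runtime check mirroring [String!]!: a list must be present and contain no nulls.
function satisfiesListOfNonNull(value: unknown): value is ListOfNonNull {
  return Array.isArray(value) && value.every((item) => typeof item === "string");
}
```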

Setting Up Your Development Environment

Establishing a proper development environment accelerates GraphQL API development and ensures consistency across team members. Node.js is one of the most common runtimes for GraphQL servers, though implementations exist for virtually every major programming language. Starting with a clean project structure, proper tooling, and essential dependencies creates a solid foundation for scalable API development.

Essential Dependencies and Tools

Several libraries form the core of most GraphQL server implementations. Apollo Server provides a production-ready GraphQL server with a plugin system, usage reporting and tracing, and support for federation through companion packages. GraphQL.js is the reference implementation and offers lower-level control for custom requirements. Express-graphql integrates GraphQL into existing Express applications (it has since been deprecated in favor of graphql-http), while Mercurius, the GraphQL adapter for Fastify, targets performance-critical scenarios. Type-GraphQL enables TypeScript-first development with decorators, and GraphQL Tools provides utilities for schema building, stitching, and mocking.

| Library | Primary Use Case | Key Features | Learning Curve |
|---|---|---|---|
| Apollo Server | Full-featured production servers | Schema federation, caching, monitoring, plugins | Moderate |
| GraphQL.js | Custom implementations, learning | Reference implementation, maximum control | Steep |
| Express-graphql | Existing Express applications | Simple integration, middleware support | Low |
| Type-GraphQL | TypeScript-first development | Decorators, type inference, DI integration | Moderate |
| GraphQL Yoga | Quick prototypes, modern standards | File uploads, subscriptions, CORS handling | Low |

Development tools significantly enhance productivity when building GraphQL APIs. GraphiQL (and the older GraphQL Playground, which has since been retired in favor of GraphiQL) provides an interactive interface for testing queries, exploring schemas, and viewing documentation. These browser-based IDEs offer syntax highlighting, auto-completion, and query history. VS Code extensions such as GraphQL: Language Feature Support add syntax highlighting and validation directly in your editor. Apollo Studio extends these capabilities with a schema registry, operation tracking, and performance monitoring.

Project structure influences maintainability as APIs grow. Separating schema definitions, resolvers, data sources, and utilities into distinct directories creates clarity. A typical structure might include a schema folder for type definitions, a resolvers folder organized by domain, a datasources folder for data access layers, and a utils folder for shared functionality. This modular approach enables teams to work on different features simultaneously without conflicts.

Initial Server Configuration

Creating a basic GraphQL server requires minimal boilerplate. After installing the necessary dependencies, you define your schema using SDL or programmatic builders, implement resolver functions that fetch data, and instantiate a server that binds everything together. The server listens on a specified port, handles incoming GraphQL requests, and returns JSON responses. Many servers also expose an in-browser IDE at the endpoint for immediate testing; GraphQL Yoga serves GraphiQL, for example, and Apollo Server 4 serves an embedded Apollo Sandbox in development.

Environment configuration separates development, staging, and production settings. Environment variables control database connections, API keys, authentication secrets, and feature flags. Libraries like dotenv load these variables from .env files during development, while production environments inject them through deployment platforms. This separation ensures sensitive credentials never appear in source code and allows identical codebases to operate across different environments.
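A minimal sketch of fail-fast configuration loading, assuming hypothetical variable names (`PORT`, `DATABASE_URL`, `JWT_SECRET`). It takes the environment as a plain object so it can be tested without touching the real process environment.

```typescript
interface AppConfig {
  port: number;
  databaseUrl: string;
  jwtSecret: string;
}

type Env = Record<string, string | undefined>;

function requireVar(env: Env, name: string): string {
  const value = env[name];
  if (value === undefined || value === "") {
    throw new Error(`Missing required environment variable: ${name}`);
  }
  return value;
}

// Validate everything once at startup, so a misconfigured deployment fails
// immediately instead of erroring on the first request that needs a value.
function loadConfig(env: Env): AppConfig {
  const port = Number(env["PORT"] ?? "4000");
  if (!Number.isInteger(port) || port <= 0) {
    throw new Error(`Invalid PORT: ${env["PORT"]}`);
  }
  return {
    port,
    databaseUrl: requireVar(env, "DATABASE_URL"),
    jwtSecret: requireVar(env, "JWT_SECRET"),
  };
}
```

In a Node.js entry point you would typically `import "dotenv/config"` during development and then call `loadConfig(process.env)` once at startup, passing the resulting object into your server and context setup.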

"Starting with proper project structure and tooling might seem like overhead initially, but it pays dividends as your API grows and team expands."

TypeScript integration adds compile-time type safety that catches errors before runtime. Installing TypeScript along with type definitions for your chosen GraphQL library enables full IDE support with auto-completion and inline documentation. Code generators like GraphQL Code Generator automatically create TypeScript types from your schema, ensuring resolver implementations match schema definitions. This workflow eliminates an entire class of bugs related to type mismatches between schema and implementation.

Designing Your GraphQL Schema

Schema design is the most critical phase in GraphQL API development. A well-designed schema balances client needs with backend capabilities, uses intuitive naming conventions, and anticipates future requirements without premature optimization. GraphQL schemas can grow incrementally, since adding fields doesn't affect existing queries, but removing or changing fields breaks the clients that depend on them, so upfront planning pays off.

Domain Modeling Strategies

Effective schemas mirror business domains rather than database structures. Starting with user stories and UI requirements helps identify necessary types and relationships. For an e-commerce platform, you might identify entities like Product, Category, Customer, Order, and Payment. Each entity becomes a GraphQL type with fields representing attributes and relationships. This domain-driven approach creates intuitive APIs that match how clients think about data.

Relationships between types form the graph structure that gives GraphQL its name. A Product might have a category field returning a Category type, while a Category includes a products field returning a list of Products. These bidirectional relationships allow clients to traverse data in either direction with a single query. Connection patterns handle one-to-many relationships with pagination support: a connection typically exposes a list of edges, each wrapping a node and its cursor, plus a pageInfo field reporting whether more results exist and where the current page ends, which enables cursor-based navigation through large datasets.
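The connection pattern can be sketched in a few lines. This assumes an in-memory array and encodes cursors as readable `offset:N` strings; that encoding is a simplification for illustration, since production APIs usually make cursors opaque (often base64-encoded) and base them on a stable sort key so that inserts don't shift pages.

```typescript
// Shapes follow the common Relay-style connection convention.
interface Edge<T> { node: T; cursor: string; }
interface PageInfo { hasNextPage: boolean; endCursor: string | null; }
interface Connection<T> { edges: Edge<T>[]; pageInfo: PageInfo; }

const encodeCursor = (offset: number): string => `offset:${offset}`;

function decodeCursor(cursor: string): number {
  const offset = Number(cursor.replace(/^offset:/, ""));
  if (!Number.isInteger(offset) || offset < 0) throw new Error(`Bad cursor: ${cursor}`);
  return offset;
}

// Returns up to `first` items that come after the `after` cursor.
function paginate<T>(items: T[], first: number, after?: string): Connection<T> {
  const start = after === undefined ? 0 : decodeCursor(after) + 1;
  const edges = items
    .slice(start, start + first)
    .map((node, i) => ({ node, cursor: encodeCursor(start + i) }));
  return {
    edges,
    pageInfo: {
      hasNextPage: start + first < items.length,
      endCursor: edges[edges.length - 1]?.cursor ?? null,
    },
  };
}
```

Clients request the next page by passing the previous page's `endCursor` as `after`, and stop when `hasNextPage` is false.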

- 🎯 Start with queries clients actually need rather than exposing all database tables

- 🔗 Model relationships explicitly to enable powerful query composition

- 📦 Group related fields into nested objects instead of flattening everything

- 🔄 Use interfaces for polymorphic types that share common fields

- ⚡ Consider performance implications of deeply nested relationships

Naming conventions establish consistency across your schema. Fields and arguments should use camelCase, type names should use PascalCase, and enum values should use SCREAMING_SNAKE_CASE. Boolean fields typically start with "is" or "has" prefixes. List fields use plural nouns, while singular fields return single objects. GraphQL itself doesn't enforce these conventions, but they create predictable patterns that reduce cognitive load for API consumers.

Mutation Design Patterns

Mutations modify server-side data and require careful design to ensure clarity and consistency. Each mutation should represent a single business operation with a descriptive name like createProduct, updateCustomerEmail, or processPayment. Input types bundle mutation arguments into cohesive objects, reducing argument lists and enabling input validation. Return types should include both the modified entity and operation metadata like success status, error messages, or affected record counts.

Error handling in mutations deserves special attention. A resolver that throws doesn't abort the whole request, but the failing field resolves to null and the error appears only in the response's top-level errors array, where clients must match it back to the operation by path. Instead, consider modeling expected failures, such as validation errors, as part of the mutation's response. A union type combining success and error states provides explicit handling paths for clients. Alternatively, a consistent error interface across all mutations standardizes error reporting. This approach allows partial success scenarios where some operations succeed while others fail within a single request.
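Here is a sketch of the union approach for a hypothetical `createProduct` mutation; the SDL in the comment and the validation rules are assumptions for illustration. Each result carries a `__typename`, which graphql-js's default type resolver uses to pick the concrete union member when no custom `resolveType` is supplied.

```typescript
// Hypothetical schema this resolver would back:
//   input CreateProductInput { name: String!, priceCents: Int! }
//   type CreateProductSuccess { product: Product! }
//   type ValidationError { field: String!, message: String! }
//   union CreateProductResult = CreateProductSuccess | ValidationError
//   type Mutation { createProduct(input: CreateProductInput!): CreateProductResult! }

interface Product { id: string; name: string; priceCents: number; }
interface CreateProductInput { name: string; priceCents: number; }

type CreateProductResult =
  | { __typename: "CreateProductSuccess"; product: Product }
  | { __typename: "ValidationError"; field: string; message: string };

function createProduct(input: CreateProductInput, store: Product[]): CreateProductResult {
  // Expected failures are returned as data, not thrown.
  if (input.name.trim() === "") {
    return { __typename: "ValidationError", field: "name", message: "Name is required" };
  }
  if (!Number.isInteger(input.priceCents) || input.priceCents < 0) {
    return { __typename: "ValidationError", field: "priceCents", message: "Price must be a non-negative integer" };
  }
  // Sequential IDs keep the sketch simple; a database would assign real ones.
  const product: Product = { id: String(store.length + 1), ...input };
  store.push(product);
  return { __typename: "CreateProductSuccess", product };
}
```

Clients then select fields per outcome with inline fragments such as `... on ValidationError { field message }`, so expected failures arrive as typed data rather than as entries in the `errors` array.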

"Treating mutations as business operations rather than CRUD operations leads to more maintainable APIs that better express domain logic and business rules."

Optimistic concurrency control detects conflicting writes without holding locks. Including version fields or timestamps in input types enables the server to detect stale updates. When a client attempts to modify data based on outdated information, the mutation returns an error indicating the conflict. This pattern prevents the lost updates and race conditions that plague naive implementations.
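A minimal sketch of version-based optimistic concurrency, using an in-memory `Map` and a hypothetical `expectedVersion` input field:

```typescript
interface VersionedProduct { id: string; name: string; version: number; }

// Clients send back the version they last read along with the change.
interface UpdateProductNameInput { id: string; name: string; expectedVersion: number; }

type UpdateResult =
  | { ok: true; product: VersionedProduct }
  | { ok: false; reason: "NOT_FOUND" | "VERSION_CONFLICT"; currentVersion?: number };

function updateProductName(
  store: Map<string, VersionedProduct>,
  input: UpdateProductNameInput
): UpdateResult {
  const current = store.get(input.id);
  if (!current) return { ok: false, reason: "NOT_FOUND" };
  // Reject writes based on stale data instead of silently overwriting them.
  if (current.version !== input.expectedVersion) {
    return { ok: false, reason: "VERSION_CONFLICT", currentVersion: current.version };
  }
  const updated = { ...current, name: input.name, version: current.version + 1 };
  store.set(input.id, updated);
  return { ok: true, product: updated };
}
```

Against a real database, the check and the write must happen atomically, for example with an `UPDATE ... WHERE id = $1 AND version = $2` statement followed by a check that exactly one row changed. The separate read-then-write above is safe only because this synchronous, in-memory version cannot be interleaved.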

Implementing Resolvers and Data Fetching

Resolvers transform schema definitions into executable code that retrieves and manipulates data. Each field in your schema can have a resolver function, though simple fields often use default resolvers that return properties from parent objects. Understanding resolver execution order, argument handling, and context usage enables efficient data fetching patterns that avoid common performance pitfalls.

Resolver Function Anatomy

Every resolver receives four arguments that provide necessary context for data fetching. The parent argument contains the result of the parent resolver, enabling nested data access. Arguments contain field-specific parameters passed by the client query. Context holds shared resources like database connections, authentication information, and data loaders. Info contains query metadata including field selections, allowing resolvers to optimize based on what clients actually requested.

Resolver chains execute hierarchically as the GraphQL runtime traverses the query. When resolving a query for a user with posts, the user resolver executes first, returning a user object. The posts resolver then receives this user object as its parent argument, using the user's ID to fetch associated posts. Each post might in turn resolve an author field, so the chain builds the complete response tree one level at a time.

Asynchronous resolvers handle database queries, API calls, and other I/O operations. Returning promises from resolver functions lets the GraphQL runtime resolve sibling fields concurrently rather than one after another; the exception is the top-level fields of a mutation, which the specification requires to execute serially. This parallelization can significantly reduce response times compared to sequential execution. Async/await syntax simplifies promise handling, making resolver code more readable while keeping it non-blocking.

Data Source Abstraction

Separating data access logic from resolver functions promotes code reusability and testability. Data source classes encapsulate database queries, REST API calls, or other backend integrations. Resolvers delegate to these data sources through the context object, keeping resolver functions focused on orchestration rather than implementation details. This architecture enables swapping data sources without modifying resolver logic, facilitating testing and migration scenarios.
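A sketch of this layering, assuming a hypothetical `ProductDataSource` interface and an in-memory implementation. Resolvers see only the interface, reached through `context.dataSources`.

```typescript
interface Product { id: string; name: string; categoryId: string; }

// The contract resolvers depend on; implementations can be swapped freely.
interface ProductDataSource {
  getById(id: string): Promise<Product | null>;
  listByCategory(categoryId: string): Promise<Product[]>;
}

// In-memory implementation for tests and prototypes. A production version
// might wrap an ORM or a REST client behind the same interface.
class InMemoryProductSource implements ProductDataSource {
  private readonly products: Product[];

  constructor(products: Product[]) {
    this.products = products;
  }

  async getById(id: string): Promise<Product | null> {
    return this.products.find((p) => p.id === id) ?? null;
  }

  async listByCategory(categoryId: string): Promise<Product[]> {
    return this.products.filter((p) => p.categoryId === categoryId);
  }
}

interface Context { dataSources: { products: ProductDataSource }; }

// Resolvers only orchestrate: read arguments, delegate to the data source.
const resolvers = {
  Query: {
    product: (_parent: unknown, args: { id: string }, ctx: Context) =>
      ctx.dataSources.products.getById(args.id),
  },
  Category: {
    products: (parent: { id: string }, _args: unknown, ctx: Context) =>
      ctx.dataSources.products.listByCategory(parent.id),
  },
};
```

Swapping in a database-backed class that implements the same interface requires no resolver changes, and tests can build a context around the in-memory version.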

| Pattern | Use Case | Benefits | Considerations |
|---|---|---|---|
| Direct Database Access | Simple queries, prototypes | Minimal abstraction, straightforward | Tight coupling, difficult testing |
| Repository Pattern | Domain-driven designs | Clear separation, testable | Additional abstraction layer |
| Data Source Classes | Apollo Server projects | Caching support, request lifecycle | Apollo-specific implementation |
| ORM Integration | Complex data models | Type safety, migrations, relations | Learning curve, performance tuning |
| REST API Wrappers | Existing backend services | Gradual migration, reuse existing APIs | Network overhead, error handling |

DataLoader solves the N+1 query problem that plagues naive GraphQL implementations. When resolving a list of N users with their posts, a naive implementation executes one query for the users and then one more query per user, N additional queries in total. DataLoader batches these requests, collecting all post lookups made within a single tick of the event loop and fetching them with one database query. Additionally, DataLoader provides per-request caching, ensuring identical lookups within a single request execute only once.

Implementing DataLoader requires creating loader instances for each data type and adding them to the context object. Loader functions receive arrays of keys and return promises resolving to arrays of values in corresponding order. The batching and caching happen automatically, transforming inefficient query patterns into optimized database access. This pattern proves essential for production GraphQL APIs serving real user traffic.
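To show what the library does under the hood, here is a deliberately simplified loader written from scratch. It is not the `dataloader` package: it batches keys requested before the next microtask and caches promises per loader instance, which captures the two core ideas while ignoring details such as maximum batch sizes, per-key errors, and the package's exact scheduling.

```typescript
type BatchFn<K, V> = (keys: readonly K[]) => Promise<V[]>;

interface Pending<K, V> {
  key: K;
  resolve: (value: V) => void;
  reject: (reason: unknown) => void;
}

class MiniLoader<K, V> {
  private queue: Pending<K, V>[] = [];
  private readonly cache = new Map<K, Promise<V>>();
  private readonly batchFn: BatchFn<K, V>;

  constructor(batchFn: BatchFn<K, V>) {
    this.batchFn = batchFn;
  }

  load(key: K): Promise<V> {
    // Per-loader cache: asking twice for the same key reuses one promise.
    const cached = this.cache.get(key);
    if (cached) return cached;

    const promise = new Promise<V>((resolve, reject) => {
      this.queue.push({ key, resolve, reject });
      // The first key of a new batch schedules one flush after the current
      // synchronous work, so every load() issued meanwhile joins the batch.
      if (this.queue.length === 1) {
        void Promise.resolve().then(() => this.flush());
      }
    });
    this.cache.set(key, promise);
    return promise;
  }

  private async flush(): Promise<void> {
    const batch = this.queue;
    this.queue = [];
    try {
      const values = await this.batchFn(batch.map((p) => p.key));
      if (values.length !== batch.length) {
        throw new Error("Batch function must return one value per key, in key order");
      }
      batch.forEach((p, i) => p.resolve(values[i]));
    } catch (err) {
      batch.forEach((p) => p.reject(err));
    }
  }
}
```

With the real package, the equivalent is `new DataLoader(async (ids) => ...)` from `dataloader`, created fresh for each request in the context function so cached values never leak between users. The batch function would usually issue a single `WHERE id IN (...)` query and reorder the rows to match the keys.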

"The N+1 problem isn't just a performance concern—it can bring production systems to their knees as query complexity increases and traffic scales."

Implementing Authentication and Authorization

Securing GraphQL APIs requires different approaches than traditional REST endpoints. With a single endpoint handling all operations, authentication and authorization logic must integrate into the GraphQL execution layer. Properly implemented security ensures users access only data they're permitted to see while maintaining performance and developer experience.

Authentication Strategies

JWT (JSON Web Tokens) provides stateless authentication suitable for distributed GraphQL servers. Clients include tokens in request headers, and the server validates signatures and extracts user information. Middleware intercepts requests before reaching GraphQL resolvers, verifying tokens and adding user context. This approach scales horizontally since servers don't maintain session state, and tokens can include custom claims for role-based access control.

Session-based authentication stores user state on the server, typically in Redis or database-backed sessions. After successful login, the server creates a session and returns a session ID cookie. Subsequent requests include this cookie, allowing the server to retrieve user information. While requiring server-side state, this approach enables immediate session invalidation and doesn't expose user data in client-accessible tokens. The choice between JWT and sessions depends on scalability requirements, security considerations, and existing infrastructure.

Context injection makes authentication information available throughout the resolver chain. Authentication middleware extracts user data from tokens or sessions and adds it to the GraphQL context object. Resolvers access this context to determine the current user, eliminating the need to pass authentication data through resolver chains. This pattern centralizes authentication logic and simplifies resolver implementations.
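A sketch of a context function that reads a Bearer token from the Authorization header. Token verification is injected as a hypothetical `verifyToken` function, since the real call depends on your JWT library or session store. Header names are assumed to be lowercased, as Node.js does for incoming requests.

```typescript
interface AuthUser { id: string; roles: string[]; }
interface GraphQLContext { user: AuthUser | null; }

// Returns the user for a valid token, or null for an invalid or expired one.
type VerifyToken = (token: string) => AuthUser | null;

function buildContext(
  headers: Record<string, string | undefined>,
  verifyToken: VerifyToken
): GraphQLContext {
  const header = headers["authorization"] ?? "";
  const match = /^Bearer\s+(\S+)$/i.exec(header);
  // Missing or malformed credentials mean an anonymous request, not an error;
  // resolvers that need a user decide what to do with `user: null`.
  return { user: match ? verifyToken(match[1]) : null };
}

// Resolvers read the current user from context instead of re-parsing headers.
const resolvers = {
  Query: {
    me: (_parent: unknown, _args: unknown, ctx: GraphQLContext) => ctx.user,
  },
};
```

With Apollo Server 4's `startStandaloneServer`, for example, a function like this would be called from the `context` option using the incoming request's headers.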

Authorization Patterns

Field-level authorization controls access to specific data within types. Before returning sensitive information, resolvers check whether the current user has permission to access that field. For example, a user's email field might only be visible to the user themselves or administrators. Implementing authorization logic directly in resolvers keeps security close to data access, but can lead to repetitive code across similar fields.

Directive-based authorization provides declarative security policies. Custom directives like @auth or @hasRole attach to schema fields, automatically enforcing access rules without resolver modifications. The GraphQL server intercepts field resolution, evaluating directive conditions before executing resolvers. This approach separates authorization concerns from business logic, making security policies visible in the schema and easier to audit.
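A sketch of field-level authorization as a reusable resolver wrapper, assuming a context with a nullable `user` and a hypothetical `ADMIN` role. The `guarded` helper is illustrative rather than a library API; a directive such as `@auth` would typically apply the same kind of wrapper to each annotated field when the schema is built.

```typescript
interface AuthUser { id: string; roles: string[]; }
interface Context { user: AuthUser | null; }
interface UserRecord { id: string; name: string; email: string; }

type FieldResolver<P, R> = (parent: P, args: unknown, ctx: Context) => R;

// Wraps a resolver with a permission check and fails closed: if the check
// does not pass, the field resolves to null and the real resolver never runs.
function guarded<P, R>(
  allow: (parent: P, ctx: Context) => boolean,
  resolve: FieldResolver<P, R>
): FieldResolver<P, R | null> {
  return (parent, args, ctx) => (allow(parent, ctx) ? resolve(parent, args, ctx) : null);
}

// Users may see their own email; administrators may see anyone's.
const isSelfOrAdmin = (parent: UserRecord, ctx: Context): boolean =>
  ctx.user !== null && (ctx.user.id === parent.id || ctx.user.roles.includes("ADMIN"));

const resolvers = {
  User: {
    email: guarded(isSelfOrAdmin, (parent: UserRecord) => parent.email),
  },
};
```

Returning `null` requires `email` to be nullable in the schema; throwing an authorization error is the common alternative when clients should learn that access was denied.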

- 🔐 Validate authentication on every request before executing any resolvers

- 👤 Use context to share user information across all resolvers

- 🛡️ Implement authorization at the field level for fine-grained control

- 📋 Consider directive-based authorization for cleaner schema definitions

- ⚠️ Return appropriate errors that don't leak sensitive information

Role-based access control (RBAC) groups permissions into roles assigned to users. Rather than checking individual permissions, resolvers verify whether users have required roles. A roles array in the user context enables quick role checks. More sophisticated implementations might use permission-based systems where roles grant specific permissions, and resolvers check for permissions rather than roles directly. This flexibility accommodates complex authorization requirements as applications evolve.
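A sketch of permission-based RBAC with hypothetical role and permission names. Roles map to permissions in a single table, and resolvers only ever ask whether the current user holds a permission:

```typescript
type Permission = "product:read" | "product:write" | "order:read:any" | "order:refund";
type Role = "CUSTOMER" | "SUPPORT" | "ADMIN";

// Roles grant permissions; changing what a role can do touches only this table.
const rolePermissions: Record<Role, readonly Permission[]> = {
  CUSTOMER: ["product:read"],
  SUPPORT: ["product:read", "order:read:any"],
  ADMIN: ["product:read", "product:write", "order:read:any", "order:refund"],
};

interface AuthUser { id: string; roles: Role[]; }

function can(user: AuthUser | null, permission: Permission): boolean {
  // Fail closed: anonymous users and unknown roles are granted nothing.
  if (user === null) return false;
  return user.roles.some((role) => rolePermissions[role]?.includes(permission) ?? false);
}

// Call at the top of a resolver that requires a permission.
function requirePermission(user: AuthUser | null, permission: Permission): void {
  if (!can(user, permission)) {
    throw new Error(`Missing permission: ${permission}`);
  }
}
```

In a graphql-js server you would usually throw a `GraphQLError` with an extension code such as `FORBIDDEN` instead of a plain `Error`, so clients can distinguish authorization failures from other errors.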

"Authorization should fail closed by default—deny access unless explicitly granted, never the reverse."

Data filtering ensures users only receive records they're authorized to access. Rather than fetching all data and filtering in resolvers, authorization logic should integrate with data access layers. Database queries include WHERE clauses that restrict results based on user permissions. This approach prevents accidental data leaks and improves performance by reducing data transfer and processing overhead.
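A sketch of pushing authorization into the data layer, assuming a hypothetical `orders` table with a `customer_id` column and Postgres-style `$1` placeholders. The function returns SQL plus parameters, so user-controlled values are never concatenated into the query string.

```typescript
interface AuthUser { id: string; roles: string[]; }

interface SqlQuery { sql: string; params: unknown[]; }

// The WHERE clause encodes the caller's access rights, so rows the caller
// may not see are never read from the database in the first place.
function ordersQueryFor(user: AuthUser | null): SqlQuery {
  if (user === null) {
    // Fail closed: anonymous callers match no rows.
    return { sql: "SELECT id, total_cents FROM orders WHERE FALSE", params: [] };
  }
  if (user.roles.includes("ADMIN")) {
    return { sql: "SELECT id, total_cents FROM orders", params: [] };
  }
  return {
    sql: "SELECT id, total_cents FROM orders WHERE customer_id = $1",
    params: [user.id],
  };
}
```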

Optimizing Performance and Scalability

GraphQL's flexibility introduces performance challenges that don't exist in traditional REST APIs. Clients can construct arbitrarily complex queries that overwhelm servers if left unchecked. Implementing proper safeguards, caching strategies, and monitoring ensures your API remains responsive under load while preventing abuse.

Query Complexity Analysis

Query complexity analysis prevents expensive queries from consuming excessive server resources. By assigning complexity scores to fields and limiting total query complexity, servers reject queries that would strain performance. Simple scalar fields might have a complexity of 1, while fields that trigger database queries or external API calls receive higher scores. List fields multiply the cost of their children by the number of items requested, so cost compounds with every nested list, which is why deep queries become expensive so quickly.

Depth limiting restricts how deeply clients can nest queries. Without limits, malicious or poorly designed clients might request data structures like user.friends.friends.friends continuing indefinitely. Setting maximum depth to reasonable values (typically 5-10) prevents these scenarios while allowing legitimate use cases. Depth limiting complements complexity analysis, providing multiple layers of protection against query abuse.
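Both checks can be sketched over a simplified selection tree. This assumes a hand-built `QueryField` structure rather than the graphql-js AST, and a cost model in which each field costs 1 by default and list fields multiply their children's cost by the requested page size; existing libraries apply the same ideas to real parsed queries.

```typescript
// Simplified stand-in for a parsed query: one node per selected field.
interface QueryField {
  field: string;
  cost?: number;       // cost of the field itself (default 1)
  listSize?: number;   // items requested on a list field, e.g. `first: 10`
  children?: QueryField[];
}

// Depth counts fields along the longest path from the root.
function depth(node: QueryField): number {
  const kids = node.children ?? [];
  return 1 + (kids.length > 0 ? Math.max(...kids.map(depth)) : 0);
}

// A field's own cost plus its children's cost times the number of items returned.
function complexity(node: QueryField): number {
  const childCost = (node.children ?? []).reduce((sum, child) => sum + complexity(child), 0);
  return (node.cost ?? 1) + childCost * (node.listSize ?? 1);
}

// Returns human-readable violations; an empty array means the query may run.
function validateQuery(roots: QueryField[], maxDepth: number, maxComplexity: number): string[] {
  const errors: string[] = [];
  const d = Math.max(0, ...roots.map(depth));
  const c = roots.reduce((sum, root) => sum + complexity(root), 0);
  if (d > maxDepth) errors.push(`Query depth ${d} exceeds limit ${maxDepth}`);
  if (c > maxComplexity) errors.push(`Query complexity ${c} exceeds limit ${maxComplexity}`);
  return errors;
}
```

For the query `users(first: 10) { name friends(first: 10) { name } }`, the depth is only 3 but the complexity is 121, so a complexity limit of 100 rejects it even though a depth limit of 5 would let it through.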

Query timeout mechanisms ensure long-running queries don't monopolize server resources. Setting execution timeouts at the GraphQL server level terminates queries that exceed thresholds. This protection proves essential when dealing with unpredictable data sources or complex resolver logic. Timeouts should balance legitimate use cases against resource protection, with monitoring helping identify appropriate values.
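A minimal sketch of a timeout built on `Promise.race`, assuming the work to bound is represented by a single promise. As the comment notes, the timer stops the server from waiting but does not cancel the underlying work.

```typescript
// Races the work against a timer. This only stops the server from *waiting*:
// the underlying work keeps running unless the data source also supports
// cancellation (for example through an AbortSignal).
function withTimeout<T>(work: Promise<T>, ms: number): Promise<T> {
  let timer: ReturnType<typeof setTimeout> | undefined;
  const timeout = new Promise<never>((_resolve, reject) => {
    timer = setTimeout(() => reject(new Error(`Timed out after ${ms} ms`)), ms);
  });
  // Whichever settles first wins; always clear the timer so it can't fire later.
  return Promise.race([work, timeout]).finally(() => clearTimeout(timer));
}
```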

Caching Strategies

Response caching stores complete query results for subsequent identical requests. Unlike REST, where URL-based caching works naturally, GraphQL usually sends queries as POST bodies, so caching must account for the query text, its variables, and different field selections. Automatic persisted queries (APQ) help: the client sends a SHA-256 hash of the query instead of the full text, which keeps requests small and allows them to be sent as GET requests that CDNs and other HTTP caches can store. Full response caching works best for public data that changes infrequently, while authenticated requests require per-user cache segmentation.
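A sketch of building a response-cache key, assuming the query text (or its persisted-query hash) is available along with the variables and an optional user ID. Sorting object keys before serializing means `{ first: 10, category: "mugs" }` and `{ category: "mugs", first: 10 }` hit the same entry.

```typescript
// Serializes a value with object keys sorted, so logically identical
// variables produce the same string regardless of key order.
function stableStringify(value: unknown): string {
  if (Array.isArray(value)) return `[${value.map(stableStringify).join(",")}]`;
  if (value !== null && typeof value === "object") {
    const obj = value as Record<string, unknown>;
    const entries = Object.keys(obj)
      .filter((k) => obj[k] !== undefined) // JSON omits undefined properties too
      .sort()
      .map((k) => `${JSON.stringify(k)}:${stableStringify(obj[k])}`);
    return `{${entries.join(",")}}`;
  }
  return JSON.stringify(value);
}

// Cache key = user segment + query text (or hash) + normalized variables.
// Public data shares one segment; authenticated data is cached per user.
function responseCacheKey(
  query: string,
  variables: Record<string, unknown>,
  userId: string | null
): string {
  return `${userId ?? "public"}|${query}|${stableStringify(variables)}`;
}
```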

Field-level caching provides granular control over what data gets cached and for how long. Cache directives specify TTL (time-to-live) for individual fields, allowing frequently accessed but slowly changing data to serve from cache while keeping real-time data fresh. This approach requires cache-aware resolvers that check cache before fetching data and populate cache after retrieval. Redis typically serves as the backing store, providing fast access and automatic expiration.

DataLoader's per-request caching prevents duplicate data fetching within single requests but doesn't persist across requests. For cross-request caching, implementing a shared cache layer in data source classes provides efficiency gains. Cache keys based on query parameters enable precise invalidation when data changes. Cache warming strategies preload frequently accessed data during low-traffic periods, ensuring fast response times during peak usage.
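A sketch of a cross-request cache with per-entry TTLs and explicit invalidation. It is in-memory and single-process by assumption; a shared store such as Redis would replace the `Map` (Redis can also expire keys natively). The injectable clock exists only to make expiry testable.

```typescript
interface CacheEntry<V> {
  value: V;
  expiresAt: number; // epoch milliseconds
}

class TtlCache<V> {
  private readonly entries = new Map<string, CacheEntry<V>>();
  private readonly now: () => number;

  // `now` is injectable so tests can control time; defaults to the wall clock.
  constructor(now: () => number = Date.now) {
    this.now = now;
  }

  // Returns a fresh cached value, or calls `load` and caches its result for `ttlMs`.
  async getOrLoad(key: string, ttlMs: number, load: () => Promise<V>): Promise<V> {
    const hit = this.entries.get(key);
    if (hit !== undefined && hit.expiresAt > this.now()) return hit.value;
    const value = await load();
    this.entries.set(key, { value, expiresAt: this.now() + ttlMs });
    return value;
  }

  // Call after a write so the next read refetches instead of serving stale data.
  invalidate(key: string): void {
    this.entries.delete(key);
  }
}
```

This sketch doesn't coalesce concurrent misses, so two simultaneous requests for a cold key both call `load`; tracking in-flight promises per key, or loading through a per-request DataLoader, avoids that.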

"Caching in GraphQL requires more sophistication than REST, but the performance gains justify the additional complexity when implemented correctly."

Monitoring and Observability

Tracing reveals performance bottlenecks by tracking resolver execution times. Apollo Server, for example, can record how long each resolver takes and report those traces to Apollo's hosted tooling, identifying slow queries and inefficient data access patterns. Distributed tracing extends this visibility across microservices, showing how GraphQL queries trigger downstream service calls. This observability enables data-driven optimization decisions rather than guessing where problems exist.
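The core of resolver tracing is timing each call. This sketch wraps a resolver and records its duration under a `Type.field` path; the wrapper and the record shape are illustrative, and real tracing (Apollo's reporting, OpenTelemetry's GraphQL instrumentation) captures far more context.

```typescript
interface TimingRecord { path: string; durationMs: number; }

// Wraps an async resolver so every call appends its duration to `sink`,
// whether the resolver succeeds or throws.
function timed<A extends unknown[], R>(
  path: string,
  resolver: (...args: A) => Promise<R>,
  sink: TimingRecord[],
  now: () => number = Date.now
): (...args: A) => Promise<R> {
  return async (...args: A) => {
    const start = now();
    try {
      return await resolver(...args);
    } finally {
      sink.push({ path, durationMs: now() - start });
    }
  };
}
```

Aggregating these records by path over many requests shows which fields dominate response time.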

Error tracking aggregates and categorizes errors occurring in production. Unlike traditional APIs where each endpoint has distinct error patterns, GraphQL's single endpoint requires operation-level error tracking. Grouping errors by query name, resolver path, and error type reveals patterns that indicate bugs or infrastructure issues. Integration with services like Sentry or Datadog provides alerting when error rates exceed thresholds.

Performance metrics track query execution time, resolver performance, and resource utilization. Monitoring average response times, 95th percentile latencies, and throughput helps identify degradation before users complain. Query-level metrics reveal which operations consume most resources, guiding optimization efforts. Tracking DataLoader batch sizes and cache hit rates validates that optimization strategies work as intended.
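Percentile latencies are easy to misread, so it helps to see the computation. This sketch uses the nearest-rank method; monitoring systems often interpolate or estimate percentiles from histograms, so their numbers can differ slightly.

```typescript
// Nearest-rank percentile: sort the samples and take the value at rank
// ceil(p / 100 * n), using 1-based ranks.
function percentile(samples: number[], p: number): number {
  if (samples.length === 0) throw new Error("No samples");
  if (p <= 0 || p > 100) throw new Error("p must be in (0, 100]");
  const sorted = [...samples].sort((a, b) => a - b);
  // Multiply before dividing to keep the arithmetic exact for integer inputs.
  const rank = Math.ceil((p * sorted.length) / 100);
  return sorted[rank - 1];
}
```

For latencies of 80, 95, 110, 120, and 3000 ms, the average is 681 ms but the nearest-rank p95 is 3000 ms: one slow request dominates the tail, which is exactly what averages hide.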

Testing GraphQL APIs Effectively

Comprehensive testing ensures GraphQL APIs behave correctly across all scenarios. The strongly-typed nature of GraphQL enables powerful testing strategies that catch errors early in development. Combining unit tests, integration tests, and schema validation creates confidence that changes won't break existing functionality.

Unit Testing Resolvers

Resolver unit tests verify individual resolver functions in isolation. Mocking data sources and context objects allows testing resolver logic without database dependencies. Each test should cover different argument combinations, error conditions, and edge cases. Because resolvers receive everything they depend on (parent, arguments, and context) as explicit inputs, they are straightforward to test without complex setup.

Testing error handling ensures resolvers gracefully handle failure scenarios. When data sources throw exceptions, do resolvers catch and transform them appropriately? Do null values propagate correctly through resolver chains? Testing these scenarios prevents production errors and clarifies expected behavior. Parameterized tests efficiently verify resolver behavior across multiple input combinations.
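A framework-free sketch of resolver unit tests with hand-rolled mocks. The resolver, its error-masking policy, and the `products` data source are hypothetical; with Jest or Vitest, the `if (...) throw` checks would become `expect` assertions.

```typescript
interface Product { id: string; name: string; priceCents: number; }
interface Context { products: { getById(id: string): Promise<Product | null> }; }

// Resolver under test: returns null for unknown IDs and hides data-source
// failures behind a generic message instead of leaking internal details.
async function productResolver(
  _parent: unknown,
  args: { id: string },
  ctx: Context
): Promise<Product | null> {
  try {
    return await ctx.products.getById(args.id);
  } catch {
    throw new Error("Product service unavailable");
  }
}

// Minimal mock: no database, just canned behavior for each test case.
function mockContext(getById: (id: string) => Promise<Product | null>): Context {
  return { products: { getById } };
}

async function runResolverTests(): Promise<void> {
  const mug: Product = { id: "p1", name: "Mug", priceCents: 1200 };

  const found = await productResolver(undefined, { id: "p1" }, mockContext(async () => mug));
  if (found?.name !== "Mug") throw new Error("should return the product");

  const missing = await productResolver(undefined, { id: "nope" }, mockContext(async () => null));
  if (missing !== null) throw new Error("unknown id should resolve to null");

  const failing = mockContext(async () => {
    throw new Error("ECONNREFUSED");
  });
  const message = await productResolver(undefined, { id: "p1" }, failing).then(
    () => "no error",
    (e: Error) => e.message
  );
  if (message !== "Product service unavailable") throw new Error("should mask data-source errors");
}
```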

Integration Testing

Integration tests execute complete GraphQL operations against a running server instance. Rather than mocking everything, these tests use test databases or containerized dependencies to verify end-to-end functionality. Creating a test client that sends GraphQL queries and validates responses ensures the entire stack works together correctly. These tests catch issues that unit tests miss, like resolver interactions and database query problems.

Snapshot testing captures query responses and alerts when they change unexpectedly. After verifying a query returns correct data, saving the response as a snapshot creates a baseline. Future test runs compare actual responses against snapshots, failing when differences appear. This approach quickly identifies breaking changes in API responses, though snapshots require manual review to distinguish intentional changes from bugs.

Schema testing validates that schema changes maintain backward compatibility. Tools like GraphQL Inspector compare schema versions, identifying breaking changes like removed fields or modified types. Running schema validation in CI/CD pipelines prevents accidentally deploying breaking changes. For public APIs, maintaining multiple schema versions or using deprecation warnings provides migration paths for clients.
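To illustrate what schema comparison tools check, here is a heavily simplified sketch that compares object types as plain maps of field names to type strings. It is not how GraphQL Inspector works internally and ignores arguments, enums, unions, and interfaces. It also flags every field type change, which is conservative, since some changes (such as making an output field non-null) are safe for clients.

```typescript
// Simplified schema model: type name -> field name -> type string (e.g. "String!").
type SchemaShape = Record<string, Record<string, string>>;

// Lists changes that can break existing clients: removed types, removed
// fields, and any change to a field's type. Added types and fields are ignored.
function findBreakingChanges(oldSchema: SchemaShape, newSchema: SchemaShape): string[] {
  const changes: string[] = [];
  for (const [typeName, oldFields] of Object.entries(oldSchema)) {
    const newFields = newSchema[typeName];
    if (newFields === undefined) {
      changes.push(`Type ${typeName} was removed`);
      continue;
    }
    for (const [fieldName, oldType] of Object.entries(oldFields)) {
      const newType = newFields[fieldName];
      if (newType === undefined) {
        changes.push(`${typeName}.${fieldName} was removed`);
      } else if (newType !== oldType) {
        changes.push(`${typeName}.${fieldName} changed from ${oldType} to ${newType}`);
      }
    }
  }
  return changes;
}
```

Run in CI against the schema from the main branch, a non-empty result would fail the build until the change is reworked or deliberately accepted.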

"Automated testing isn't optional for production GraphQL APIs—the flexibility that makes GraphQL powerful also makes it easy to break clients without comprehensive test coverage."

Integrating with Databases and External Services

Production GraphQL APIs rarely operate in isolation. Connecting to databases, calling REST APIs, integrating with message queues, and coordinating microservices requires careful orchestration. These integrations must handle failures gracefully, maintain performance under load, and provide consistent experiences across different data sources.

Database Integration Patterns

SQL databases integrate with GraphQL through ORMs like Prisma, TypeORM, or Sequelize. These tools provide type-safe query builders that map to database tables. Resolvers use ORM methods to fetch data, with the ORM handling connection pooling, query generation, and result mapping. Prisma fits GraphQL projects particularly well: it generates TypeScript types from its own schema file, and Prisma Client batches findUnique calls issued in the same tick, which mitigates common N+1 patterns.

NoSQL databases like MongoDB offer flexible schemas that sometimes align naturally with GraphQL types. However, document structure doesn't always match query patterns, requiring careful index design. Mongoose provides schema validation and query helpers for MongoDB, though its middleware system can complicate resolver implementations. Consider whether denormalization improves query performance, accepting some data duplication to avoid complex joins or multiple queries.

Database migration strategies ensure schema changes deploy safely. Tools like Prisma Migrate or TypeORM migrations generate and apply database schema changes. Running migrations before deploying new API versions prevents runtime errors from schema mismatches. For zero-downtime deployments, migrations must maintain backward compatibility, adding new columns as nullable and removing old columns only after all instances run updated code.

REST API Integration

Wrapping existing REST APIs with GraphQL creates unified interfaces for clients. Data source classes make HTTP requests to REST endpoints, transforming responses into GraphQL types. This approach enables gradual migration from REST to GraphQL without rewriting backend services. Clients benefit from GraphQL's advantages while backend teams migrate at their own pace.

Error handling becomes critical when calling external services. REST APIs return various HTTP status codes and error formats that need consistent translation to GraphQL errors. Implementing retry logic with exponential backoff handles transient failures. Circuit breakers prevent cascading failures when downstream services become unhealthy. These reliability patterns ensure your GraphQL API remains available even when dependencies experience issues.
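A sketch of retry with exponential backoff and "full jitter" (a random wait up to a doubling cap). The `isRetryable` predicate and the option names are assumptions; in practice you would retry timeouts and 5xx responses but not 4xx client errors, and pair this with a circuit breaker so retries don't pile onto a service that is already down.

```typescript
interface RetryOptions {
  retries: number;                         // extra attempts after the first
  baseDelayMs: number;                     // cap for the first wait; doubles each retry
  isRetryable: (err: unknown) => boolean;  // e.g. timeouts and 5xx, never 4xx
  sleep?: (ms: number) => Promise<void>;   // injectable for tests
}

const defaultSleep = (ms: number) =>
  new Promise<void>((resolve) => setTimeout(resolve, ms));

async function retryWithBackoff<T>(fn: () => Promise<T>, opts: RetryOptions): Promise<T> {
  const sleep = opts.sleep ?? defaultSleep;
  for (let attempt = 0; ; attempt++) {
    try {
      return await fn();
    } catch (err) {
      // Give up on permanent errors or once the retry budget is spent.
      if (attempt >= opts.retries || !opts.isRetryable(err)) throw err;
      // Wait a random time up to baseDelayMs * 2^attempt, so many clients
      // retrying at once spread out instead of hitting the service together.
      const cap = opts.baseDelayMs * 2 ** attempt;
      await sleep(Math.random() * cap);
    }
  }
}
```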

Response transformation maps REST API data structures to GraphQL types. REST responses often include unnecessary fields or use different naming conventions. Data source methods extract relevant data, rename fields to match GraphQL conventions, and construct objects matching schema definitions. This transformation layer insulates resolvers from REST API changes, localizing impact when external APIs evolve.
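A sketch of a REST-backed data source with an isolated transformation step. The REST payload shape and the path are hypothetical, and the HTTP call is injected as a `fetchJson` function (in production, a thin wrapper around `fetch`). The cast on the response assumes the payload is trustworthy; real code should validate it.

```typescript
// Shape returned by a hypothetical legacy REST endpoint.
interface RestCustomer {
  customer_id: number;
  first_name: string;
  last_name: string;
  email_address: string | null;
  internal_notes?: string; // present in REST, deliberately not exposed
}

// Shape the GraphQL Customer type expects.
interface Customer {
  id: string;
  fullName: string;
  email: string | null;
}

// The only place that knows REST field names; resolvers never see them.
function toCustomer(raw: RestCustomer): Customer {
  return {
    id: String(raw.customer_id), // GraphQL IDs serialize as strings
    fullName: `${raw.first_name} ${raw.last_name}`.trim(),
    email: raw.email_address,
  };
}

type Fetcher = (path: string) => Promise<unknown>;

class CustomerRestSource {
  private readonly fetchJson: Fetcher;

  constructor(fetchJson: Fetcher) {
    this.fetchJson = fetchJson;
  }

  async getCustomer(id: string): Promise<Customer> {
    const raw = (await this.fetchJson(`/customers/${encodeURIComponent(id)}`)) as RestCustomer;
    return toCustomer(raw);
  }
}
```

Apollo also publishes a `RESTDataSource` class (in `@apollo/datasource-rest`) that adds request deduplication and caching on top of this pattern.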

Deploying to Production Environments

Moving GraphQL APIs from development to production requires careful planning around infrastructure, monitoring, security, and operational concerns. Production deployments must handle real user traffic, provide reliability guarantees, and enable rapid response to issues. Proper deployment strategies balance velocity with stability.

Infrastructure Considerations

Containerization with Docker packages GraphQL servers with all dependencies, ensuring consistency across environments. Docker images include the Node.js runtime, application code, and configuration, eliminating "works on my machine" problems. Container orchestration platforms like Kubernetes manage deployment, scaling, and health checking. Horizontal scaling adds server instances behind load balancers, distributing traffic across multiple containers.

Serverless deployment options like AWS Lambda or Google Cloud Functions offer automatic scaling and pay-per-use pricing. Apollo Server supports serverless deployments with minimal configuration changes. However, serverless introduces cold start latency and limits on execution time. Evaluating whether your API's traffic patterns suit serverless versus traditional server deployments requires understanding trade-offs between cost, performance, and operational complexity.

Environment configuration management separates secrets from code. Services like AWS Secrets Manager, HashiCorp Vault, or Kubernetes Secrets store sensitive values like database passwords and API keys. Applications retrieve secrets at startup or runtime, never hardcoding them in source control. Infrastructure-as-code tools like Terraform or Pulumi define infrastructure declaratively, enabling reproducible deployments and disaster recovery.
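A common pattern is for the secret store or orchestrator to inject values as environment variables, which the application then reads once at startup. This sketch assumes that pattern. The variable names `DATABASE_URL`, `JWT_SECRET` and `PORT` are hypothetical. The environment map is passed in (for example, `process.env` in Node.js), so the server refuses to start if a secret is missing rather than failing on first use.

```typescript
// Typed configuration assembled from environment variables at startup.
interface AppConfig {
  databaseUrl: string;
  jwtSecret: string;
  port: number;
}

// Fails fast if a required secret is absent or empty.
function loadConfig(env: Record<string, string | undefined>): AppConfig {
  const required = (name: string): string => {
    const value = env[name];
    if (value === undefined || value === "") {
      throw new Error(`Missing required environment variable: ${name}`);
    }
    return value;
  };
  const port = Number(env.PORT ?? "4000"); // non-secret setting with a default
  if (!Number.isInteger(port) || port <= 0) throw new Error(`Invalid PORT: ${env.PORT}`);
  return {
    databaseUrl: required("DATABASE_URL"),
    jwtSecret: required("JWT_SECRET"),
    port,
  };
}
```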

Security Hardening

Rate limiting prevents abuse by restricting request frequency per client. Implementing rate limits at the API gateway or application level helps mitigate denial-of-service attacks and accidental runaway clients. Because a single GraphQL request can be trivial or extremely expensive, GraphQL-specific rate limiting often counts operations or query complexity scores rather than raw request counts. Different limits for authenticated versus anonymous users balance accessibility with security.
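One way to combine complexity scores with per-client limits is a token bucket in which each request spends tokens equal to its cost. This is a sketch, not a library API. The policy numbers and client keys are hypothetical, and the cost would come from a complexity analysis step. Buckets live in process memory here. A multi-instance deployment would keep them in a shared store such as Redis and evict idle entries.

```typescript
interface Bucket {
  tokens: number;
  lastRefill: number; // ms timestamp of the last refill calculation
}

interface LimitPolicy {
  capacity: number; // maximum tokens a bucket can hold
  refillPerSecond: number;
}

class ComplexityRateLimiter {
  private readonly buckets = new Map<string, Bucket>();
  private readonly authenticated: LimitPolicy;
  private readonly anonymous: LimitPolicy;
  private readonly now: () => number;

  constructor(authenticated: LimitPolicy, anonymous: LimitPolicy, now: () => number = Date.now) {
    this.authenticated = authenticated;
    this.anonymous = anonymous;
    this.now = now;
  }

  // Refills the client's bucket for the elapsed time, then deducts `cost` if affordable.
  // Returns false (request should be rejected) when the budget is exhausted.
  tryConsume(clientKey: string, isAuthenticated: boolean, cost: number): boolean {
    const policy = isAuthenticated ? this.authenticated : this.anonymous;
    const t = this.now();
    const bucket = this.buckets.get(clientKey) ?? { tokens: policy.capacity, lastRefill: t };
    const elapsedSeconds = (t - bucket.lastRefill) / 1000;
    bucket.tokens = Math.min(policy.capacity, bucket.tokens + elapsedSeconds * policy.refillPerSecond);
    bucket.lastRefill = t;
    this.buckets.set(clientKey, bucket);
    if (bucket.tokens < cost) return false;
    bucket.tokens -= cost;
    return true;
  }
}
```

Keys such as `user:42` for authenticated clients and `ip:1.2.3.4` for anonymous ones keep the two populations on separate budgets.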

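CORS (Cross-Origin Resource Sharing) configuration controls which origins browsers allow to read responses from your GraphQL API. A restrictive policy stops scripts on unauthorized websites from reading data on behalf of signed-in users. Browsers still send some simple cross-origin requests, however, so CORS complements CSRF protection rather than replacing it. For APIs consumed by known browser clients, listing specific origins rather than using a wildcard provides security without blocking legitimate clients. Credentialed requests need particular care. Browsers reject a wildcard Access-Control-Allow-Origin value when cookies or HTTP authentication are included, and cookie-based sessions still need CSRF defenses.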
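An explicit allowlist can be sketched as a function that computes response headers from the request's `Origin` header. The domains are hypothetical. In an Express-based server, the `cors` middleware offers the same behavior through its `origin` (which accepts an array) and `credentials` options. The standalone version below just makes the logic visible.

```typescript
// Explicit allowlist of browser origins (hypothetical domains).
const ALLOWED_ORIGINS = new Set(["https://app.example.com", "https://admin.example.com"]);

// Returns CORS headers for an allowed origin, or none at all for any other origin.
// With credentials allowed, the exact origin is echoed back, because browsers
// reject a wildcard "*" on credentialed requests.
function corsHeaders(origin: string | undefined): Record<string, string> {
  if (origin === undefined || !ALLOWED_ORIGINS.has(origin)) {
    return {}; // without these headers the browser hides the response from the page
  }
  return {
    "Access-Control-Allow-Origin": origin,
    "Access-Control-Allow-Credentials": "true",
    "Access-Control-Allow-Methods": "GET, POST, OPTIONS",
    "Access-Control-Allow-Headers": "Content-Type, Authorization",
    Vary: "Origin", // shared caches must not reuse a response across origins
  };
}
```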

Input validation checks client data before processing. While GraphQL's type system enforces basic types, additional checks catch malformed data and reduce the risk of injection attacks, with parameterized database queries remaining the primary defense against injection. Validating string lengths, numeric ranges, and format patterns catches malicious inputs. Custom scalars with validation logic enforce constraints at the schema level, centralizing validation rules.
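Here are two validation functions of the kind a custom scalar could centralize. The `EmailAddress` and `PageSize` scalars and their limits are hypothetical. In graphql-js, functions like these would be called from the `parseValue` hook of a `GraphQLScalarType`, and also from `parseLiteral` after checking that the AST node is a string or int literal. That placement means every argument of that type is validated before any resolver runs.

```typescript
const MAX_EMAIL_LENGTH = 254; // a commonly used practical upper bound
// Deliberately simple pattern: one "@", no whitespace, and a dot in the domain part.
const EMAIL_PATTERN = /^[^\s@]+@[^\s@]+\.[^\s@]+$/;

// Validation for a hypothetical EmailAddress scalar.
function parseEmailAddress(value: unknown): string {
  if (typeof value !== "string") {
    throw new TypeError("EmailAddress must be a string");
  }
  const trimmed = value.trim();
  if (trimmed.length === 0 || trimmed.length > MAX_EMAIL_LENGTH) {
    throw new TypeError(`EmailAddress must be 1-${MAX_EMAIL_LENGTH} characters`);
  }
  if (!EMAIL_PATTERN.test(trimmed)) {
    throw new TypeError("EmailAddress is not a valid email format");
  }
  return trimmed;
}

// Validation for a hypothetical PageSize scalar: an integer range check.
function parsePageSize(value: unknown): number {
  if (typeof value !== "number" || !Number.isInteger(value) || value < 1 || value > 100) {
    throw new TypeError("PageSize must be an integer between 1 and 100");
  }
  return value;
}
```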

"Production readiness isn't a checkbox—it's an ongoing commitment to monitoring, updating, and responding to real-world usage patterns and security threats."

Advanced GraphQL Patterns and Techniques

As GraphQL APIs mature, advanced patterns address complex requirements like real-time updates, file uploads, schema federation, and custom directives. These techniques extend GraphQL's capabilities beyond basic query and mutation operations, enabling sophisticated applications.

Subscription Implementation

Subscriptions enable real-time data push from server to client using WebSocket connections. Clients subscribe to specific events, and the server sends updates when those events occur. Implementing subscriptions requires a pub/sub system like Redis or in-memory event emitters. Subscription resolvers return AsyncIterator instances that yield values when events fire.
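The mechanism can be sketched as a minimal in-memory pub/sub whose `subscribe` method returns an async iterator. Packages such as graphql-subscriptions provide production-ready equivalents, and the class below is a hypothetical stand-in rather than their API. Because events live in one process's memory, this only works on a single server instance.

```typescript
// subscribe() returns an AsyncIterableIterator, the shape graphql-js expects
// a subscription field's subscribe function to return.
class InMemoryPubSub<T> {
  private readonly listeners = new Map<string, Set<(payload: T) => void>>();

  publish(topic: string, payload: T): void {
    for (const listener of this.listeners.get(topic) ?? []) listener(payload);
  }

  subscribe(topic: string): AsyncIterableIterator<T> {
    const queue: T[] = []; // events published before the consumer asked for them
    const waiting: ((result: IteratorResult<T>) => void)[] = []; // pending next() calls
    const finished: IteratorResult<T> = { value: undefined, done: true };
    let done = false;

    // Hands each event to a waiting next() call, or buffers it.
    const listener = (payload: T): void => {
      const resolve = waiting.shift();
      if (resolve) resolve({ value: payload, done: false });
      else queue.push(payload);
    };
    let topicListeners = this.listeners.get(topic);
    if (!topicListeners) {
      topicListeners = new Set();
      this.listeners.set(topic, topicListeners);
    }
    topicListeners.add(listener);
    const registered = topicListeners;

    const iterator: AsyncIterableIterator<T> = {
      next(): Promise<IteratorResult<T>> {
        if (queue.length > 0) return Promise.resolve<IteratorResult<T>>({ value: queue.shift() as T, done: false });
        if (done) return Promise.resolve(finished);
        return new Promise<IteratorResult<T>>((resolve) => {
          waiting.push(resolve);
        });
      },
      // Called when the client unsubscribes: stop listening and release pending waiters.
      return(): Promise<IteratorResult<T>> {
        done = true;
        registered.delete(listener);
        for (const resolve of waiting.splice(0)) resolve(finished);
        return Promise.resolve(finished);
      },
      [Symbol.asyncIterator]() {
        return iterator;
      },
    };
    return iterator;
  }
}
```

A subscription field could then return `pubsub.subscribe("MESSAGE_ADDED")` from its `subscribe` function, and a mutation resolver would call `pubsub.publish("MESSAGE_ADDED", payload)`. The topic name here is hypothetical.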

Scaling subscriptions across multiple server instances requires shared pub/sub infrastructure. Redis Pub/Sub or message brokers like RabbitMQ coordinate events across servers, ensuring clients receive updates regardless of which server handles their connection. Managing WebSocket connections consumes server resources, so monitoring connection counts and implementing connection limits prevents resource exhaustion.
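The connection-limit idea can be sketched as a counter consulted when a WebSocket connection is established and released when it closes. The limits and client identifiers are hypothetical. With graphql-ws, for example, a check like `tryOpen` could run in the server's `onConnect` hook, where returning `false` refuses the connection. The counts are per server instance, so a fleet-wide limit would need shared state.

```typescript
// Tracks open connections per client and in total, refusing new ones beyond the limits.
class ConnectionLimiter {
  private readonly perClient = new Map<string, number>();
  private total = 0;
  private readonly maxPerClient: number;
  private readonly maxTotal: number;

  constructor(maxPerClient: number, maxTotal: number) {
    this.maxPerClient = maxPerClient;
    this.maxTotal = maxTotal;
  }

  // Call while a connection is being established; false means refuse it.
  tryOpen(clientId: string): boolean {
    const current = this.perClient.get(clientId) ?? 0;
    if (current >= this.maxPerClient || this.total >= this.maxTotal) return false;
    this.perClient.set(clientId, current + 1);
    this.total++;
    return true;
  }

  // Call whenever a connection closes, for any reason.
  release(clientId: string): void {
    const current = this.perClient.get(clientId) ?? 0;
    if (current === 0) return; // unknown client or already released
    if (current === 1) this.perClient.delete(clientId);
    else this.perClient.set(clientId, current - 1);
    this.total--;
  }

  get openConnections(): number {
    return this.total;
  }
}
```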

File Upload Handling

File uploads are not part of the GraphQL specification itself. Most servers follow the community GraphQL multipart request specification, which sends files as multipart form data mapped to an Upload scalar type. Libraries like graphql-upload provide middleware that processes file uploads, making them available in resolver arguments. Resolvers receive file metadata and read streams, enabling efficient handling of large files without loading entire contents into memory. Storing uploaded files in object storage like S3 provides scalability and durability.

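Security considerations for file uploads include validating file types, scanning for malware, and limiting file sizes. Rejecting executable files and checking types against an allowlist blocks many malicious uploads. Client-reported MIME types can be forged, however, so inspecting the file contents adds a stronger check. Generating storage filenames on the server, rather than trusting client-supplied names, prevents path traversal attacks. Streaming uploads directly to storage services minimizes server memory usage and improves performance.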
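Two of these checks can be sketched without an upload library. The first is an allowlist that maps accepted MIME types to a server-chosen key. The second is a size limit enforced while chunks stream through. The allowed types, the 10 MiB limit and the `newId` generator (for example, `crypto.randomUUID`) are assumptions. Content sniffing and malware scanning are left out of this sketch.

```typescript
// Allowlist mapping accepted MIME types to the extension used for storage.
// A Map avoids accidental matches on inherited object keys such as "toString".
const ALLOWED_TYPES = new Map<string, string>([
  ["image/png", "png"],
  ["image/jpeg", "jpg"],
  ["application/pdf", "pdf"],
]);
const MAX_BYTES = 10 * 1024 * 1024; // 10 MiB, an illustrative limit

interface UploadMeta {
  filename: string; // client-supplied: never used in the storage path
  mimetype: string; // client-supplied: a hint, not proof of the content type
}

// Returns a server-generated storage key, or throws if the type is not allowed.
// The key contains no client-controlled segments, so "../" tricks have no effect.
function storageKeyFor(meta: UploadMeta, newId: () => string): string {
  const ext = ALLOWED_TYPES.get(meta.mimetype);
  if (ext === undefined) throw new Error(`Unsupported file type: ${meta.mimetype}`);
  return `uploads/${newId()}.${ext}`;
}

// Passes chunks through while counting bytes, aborting once the limit is exceeded,
// so oversized uploads are rejected without buffering the whole file.
async function* limitSize(chunks: AsyncIterable<Uint8Array>, maxBytes = MAX_BYTES): AsyncGenerator<Uint8Array> {
  let total = 0;
  for await (const chunk of chunks) {
    total += chunk.byteLength;
    if (total > maxBytes) throw new Error(`File exceeds ${maxBytes} bytes`);
    yield chunk;
  }
}
```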

Schema Federation

Apollo Federation enables multiple GraphQL services to compose a unified schema. Each service owns specific types and fields, with a gateway aggregating schemas and routing queries. This architecture lets large teams work on different domains with less cross-team coordination. Services evolve independently while maintaining a cohesive API for clients.

Implementing federation requires designating entities with @key directives and implementing reference resolvers. The gateway handles query planning, determining which services to call and how to combine results. Federation supports advanced patterns like extending types across services, enabling rich relationships between domains owned by different teams.
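Here is a minimal sketch of one Federation 2 subgraph for a hypothetical products service. The SDL marks `Product` as an entity keyed by `id`, and `__resolveReference` answers the router when another subgraph's query needs `Product` fields. The in-memory `products` map stands in for a real data store. In a running service, the pieces would typically be combined with `buildSubgraphSchema({ typeDefs: gql(typeDefs), resolvers })` from `@apollo/subgraph`. That call is omitted here so the sketch has no dependencies.

```typescript
// Subgraph SDL: Federation 2 opts in via @link, and @key declares the entity key.
const typeDefs = /* GraphQL */ `
  extend schema @link(url: "https://specs.apollo.dev/federation/v2.0", import: ["@key"])

  type Product @key(fields: "id") {
    id: ID!
    name: String!
    price: Int! # in cents
  }

  type Query {
    product(id: ID!): Product
  }
`;

interface ProductRow {
  id: string;
  name: string;
  price: number;
}

// Stand-in data store for the example.
const products = new Map<string, ProductRow>([["1", { id: "1", name: "Keyboard", price: 4999 }]]);

const resolvers = {
  Query: {
    product: (_parent: unknown, args: { id: string }) => products.get(args.id) ?? null,
  },
  Product: {
    // Reference resolver: receives the entity representation { __typename, id }.
    __resolveReference: (ref: { __typename: "Product"; id: string }) => products.get(ref.id) ?? null,
  },
};
```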

Frequently Asked Questions

What's the main difference between GraphQL and REST APIs?

GraphQL provides a single endpoint where clients specify exactly what data they need through queries, while REST uses multiple endpoints that return fixed data structures. This fundamental difference means GraphQL eliminates over-fetching and under-fetching problems, allows clients to retrieve multiple resources in one request, and provides strong typing through schemas. REST remains simpler for basic CRUD operations and benefits from better HTTP caching, but GraphQL excels when clients need flexible data access patterns or when building APIs that serve diverse client requirements.

How do I prevent malicious queries from overloading my GraphQL server?

Implement multiple protective layers. These include query complexity analysis that assigns costs to fields and rejects expensive queries, depth limiting that rejects deeply nested queries, query timeouts that terminate long-running operations, and rate limiting that restricts request frequency per client. Additionally, use DataLoader to batch data access and avoid N+1 query problems, implement proper authentication to identify clients, and monitor query patterns to identify abuse. For maximum security, a persisted query safelist (sometimes called trusted documents) accepts only operations registered ahead of time and rejects arbitrary client queries entirely. Automatic Persisted Queries on their own only cache query strings and do not restrict which queries can run.
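Depth limiting and complexity analysis both reduce to walking the query's selection tree before execution. To keep it dependency-free, this sketch uses a simplified `FieldNode` type as a stand-in for the graphql-js AST. Real implementations walk the parsed document, often as a validation rule, and packages such as graphql-depth-limit exist for the depth part. The cost model is a hypothetical example: each field costs 1, and a list argument `first` multiplies the cost of its children.

```typescript
// Simplified stand-in for a parsed selection set.
interface FieldNode {
  name: string;
  args?: { first?: number }; // requested list size, if any
  selections?: FieldNode[];
}

// Length of the deepest field path.
function queryDepth(fields: FieldNode[]): number {
  let max = 0;
  for (const f of fields) {
    max = Math.max(max, 1 + queryDepth(f.selections ?? []));
  }
  return max;
}

// Each field costs 1; a list field multiplies its children's cost by the page size.
function queryCost(fields: FieldNode[]): number {
  let total = 0;
  for (const f of fields) {
    const multiplier = f.args?.first ?? 1;
    total += 1 + multiplier * queryCost(f.selections ?? []);
  }
  return total;
}

// Rejects the operation before any resolver runs.
function checkQuery(fields: FieldNode[], maxDepth: number, maxCost: number): void {
  const depth = queryDepth(fields);
  if (depth > maxDepth) throw new Error(`Query depth ${depth} exceeds limit ${maxDepth}`);
  const cost = queryCost(fields);
  if (cost > maxCost) throw new Error(`Query cost ${cost} exceeds limit ${maxCost}`);
}
```

Consider `{ users(first: 10) { name posts(first: 20) { title } } }`. Under this model it has depth 3 and cost 1 + 10 × (1 + (1 + 20 × 1)) = 221. Its result can be around 200 post objects even though the query text is short.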

Should I use code-first or schema-first approach when building GraphQL APIs?

Schema-first approaches define types using SDL before writing code, promoting clear contracts and enabling non-developers to understand APIs. Code-first approaches use decorators or programmatic builders to generate schemas from code, providing better IDE support and type safety. Choose schema-first when working with diverse teams, building public APIs, or prioritizing clear documentation. Choose code-first when type safety is critical, working primarily with TypeScript or other typed languages, or when rapid iteration matters more than external communication. Many teams successfully combine both approaches, using schema-first for planning and code-first for implementation.

How do I handle authentication and authorization in GraphQL?

Implement authentication at the server level before GraphQL execution begins, extracting user information from JWT tokens or sessions and adding it to the context object. Handle authorization at the field level within resolvers, checking permissions before returning sensitive data. Consider directive-based authorization for declarative security policies that attach directly to schema fields. Always fail closed, denying access by default unless explicitly granted. Implement proper error handling that doesn't leak information about why access was denied, and consider using data filtering at the database level to ensure users never receive unauthorized data.
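The split between request-level authentication and field-level authorization can be sketched as follows. `VerifyToken` is a hypothetical, injected function. In practice it would verify a JWT signature with a JWT library or look up a session. The `admin` role and the `User.email` rule are also illustrative. Access is denied unless explicitly granted, and the error message does not say why.

```typescript
interface User {
  id: string;
  roles: string[];
}
interface Context {
  user: User | null;
}

// Hypothetical verifier: returns the user for a valid token, otherwise null.
type VerifyToken = (token: string) => User | null;

// Runs once per request, before execution: parses "Authorization: Bearer <token>".
function buildContext(authHeader: string | undefined, verify: VerifyToken): Context {
  if (!authHeader?.startsWith("Bearer ")) return { user: null };
  return { user: verify(authHeader.slice("Bearer ".length)) };
}

// Fail closed: throws unless the user exists and holds the role. Generic message by design.
function requireRole(ctx: Context, role: string): User {
  if (ctx.user === null || !ctx.user.roles.includes(role)) {
    throw new Error("Not authorized");
  }
  return ctx.user;
}

const resolvers = {
  User: {
    // Field-level rule: users may read their own email; otherwise admin only.
    email: (parent: { id: string; email: string }, _args: unknown, ctx: Context): string => {
      if (ctx.user?.id === parent.id) return parent.email;
      requireRole(ctx, "admin");
      return parent.email;
    },
  },
};
```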

What tools should I use for testing GraphQL APIs?

Use Jest or Mocha for unit testing individual resolvers with mocked dependencies, ensuring business logic works correctly in isolation. Implement integration tests that execute complete operations, either in-process with Apollo Server's executeOperation method or over HTTP against a running server with clients like graphql-request. Use GraphQL Inspector or similar tools to detect breaking schema changes in CI/CD pipelines. Consider snapshot testing for response validation, though manual review of changes remains important. Implement load testing with tools like k6 or Artillery to verify performance under realistic traffic patterns. For end-to-end testing, tools like Cypress or Playwright can test GraphQL APIs through actual client applications.
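A resolver unit test with a mocked dependency can be sketched framework-free. The resolver reads its data source from the context, so the test substitutes a hand-written mock that records calls. With Jest, `jest.fn()` would play the mock's role and `expect(...)` would replace the manual checks. The `Author.publishedPosts` resolver and its filtering rule are hypothetical.

```typescript
interface Post {
  id: string;
  title: string;
  published: boolean;
}

interface PostsSource {
  findByAuthor(authorId: string): Promise<Post[]>;
}

const resolvers = {
  Author: {
    // Business rule under test: only published posts are exposed.
    publishedPosts: async (author: { id: string }, _args: unknown, ctx: { posts: PostsSource }) => {
      const posts = await ctx.posts.findByAuthor(author.id);
      return posts.filter((p) => p.published);
    },
  },
};

// Mock data source that returns canned data and records which author was requested.
function mockPostsSource(data: Post[]) {
  const calls: string[] = [];
  const source: PostsSource = {
    findByAuthor: async (authorId) => {
      calls.push(authorId);
      return data;
    },
  };
  return { source, calls };
}

// The test case: in Jest this body would sit inside test("filters drafts", ...).
async function testPublishedPostsFiltersDrafts(): Promise<void> {
  const { source, calls } = mockPostsSource([
    { id: "p1", title: "Live", published: true },
    { id: "p2", title: "Draft", published: false },
  ]);
  const result = await resolvers.Author.publishedPosts({ id: "a1" }, {}, { posts: source });
  if (result.length !== 1 || result[0].id !== "p1") throw new Error("drafts were not filtered");
  if (calls.length !== 1 || calls[0] !== "a1") throw new Error("data source called incorrectly");
}
```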

How do I migrate from REST to GraphQL without breaking existing clients?

Implement GraphQL alongside existing REST endpoints rather than replacing them immediately, allowing gradual migration. Create GraphQL resolvers that call existing REST endpoints internally, providing GraphQL benefits without rewriting backend logic. Use feature flags to control which clients use GraphQL versus REST. Implement versioning strategies that maintain backward compatibility during transition periods. Communicate migration plans clearly to API consumers, providing migration guides and deprecation timelines. Monitor usage of both APIs to understand when REST endpoints can be safely retired. Consider maintaining REST for simple operations while using GraphQL for complex data requirements, leveraging strengths of both approaches.