How to Design Real-Time Data Processing Systems

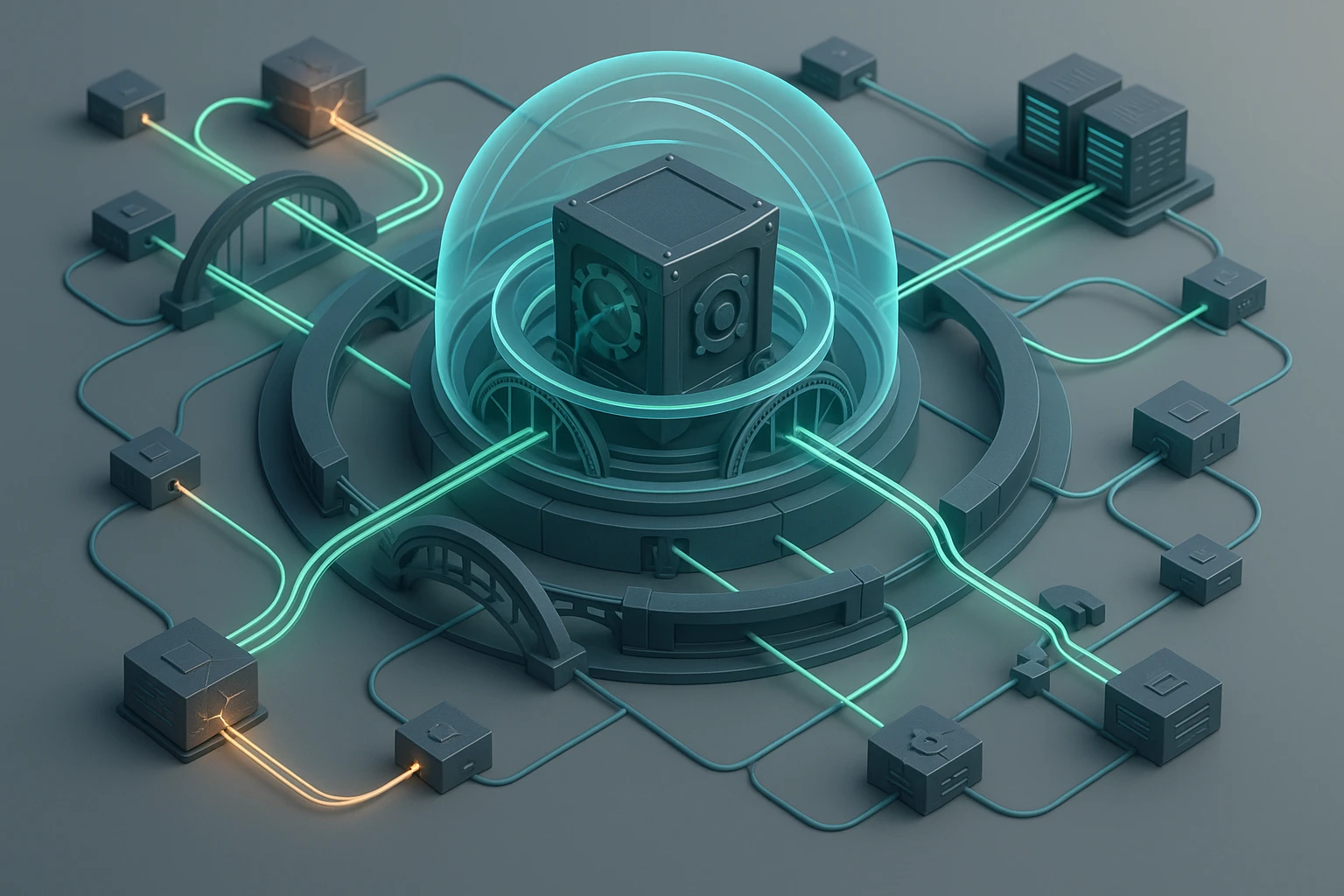

Diagram of a real-time data processing architecture: streaming ingestion, low-latency processing, event queues, stateful operators, scalable services, fault-tolerant storage, and monitoring.

In today's hyper-connected digital landscape, businesses face an unprecedented challenge: making sense of massive volumes of data as it arrives, not hours or days later. The ability to process information in real time has shifted from a competitive advantage to a fundamental requirement for organizations across industries. From detecting fraudulent transactions within milliseconds to personalizing customer experiences on the fly, real-time data processing systems have become the backbone of modern digital operations. Companies that fail to implement these systems risk falling behind competitors who can react instantly to market changes, customer behaviors, and operational anomalies.

Real-time data processing refers to the continuous input, processing, and output of data with minimal latency—typically measured in milliseconds or seconds rather than minutes or hours. Unlike traditional batch processing that collects data over time before analysis, real-time systems demand immediate action on streaming information. This approach encompasses multiple architectural patterns, technologies, and methodologies, each suited to different use cases and organizational needs. The complexity lies not just in speed, but in maintaining accuracy, consistency, and reliability while handling thousands or millions of events per second.

Throughout this comprehensive exploration, you'll discover the fundamental principles that govern real-time data processing architecture, from selecting appropriate technologies to implementing fault-tolerant designs. We'll examine practical strategies for handling data streams, managing state, ensuring scalability, and maintaining system reliability under pressure. Whether you're building financial trading platforms, IoT monitoring systems, or customer analytics engines, you'll gain actionable insights into designing systems that deliver immediate value from your data while maintaining the robustness required for production environments.

Foundational Architecture Principles

Building effective real-time data processing systems requires understanding several core architectural principles that differentiate them from traditional batch processing approaches. The fundamental shift involves moving from a "store then process" mentality to a "process as it arrives" paradigm. This transformation affects every layer of your system architecture, from data ingestion to storage, processing, and delivery.

Event-driven architecture forms the cornerstone of real-time systems. Rather than polling for changes or waiting for scheduled batch jobs, these systems react immediately to events as they occur. Each data point—whether a user click, sensor reading, or transaction—triggers processing pipelines that can filter, transform, aggregate, or route information to downstream consumers. This reactive model enables sub-second response times and ensures that insights are generated when they're most valuable.

"The latency requirements of your system should drive every architectural decision, from message broker selection to database choices and processing framework capabilities."

Stream processing differs fundamentally from batch processing in how it handles data windows and state management. While batch systems work with complete datasets, stream processors must make decisions based on incomplete information, using techniques like sliding windows, tumbling windows, and session windows to group events logically. Understanding these windowing concepts is essential for accurate aggregations, joins, and pattern detection in streaming data.

Selecting the Right Processing Model

Two primary processing models dominate real-time architectures: stream processing and complex event processing. Micro-batch processing and the Lambda architecture, also listed in the table below, are variations that either trade some latency for throughput or combine a streaming layer with a batch layer. Stream processing focuses on continuous computation over unbounded data streams, applying transformations, filters, and aggregations to each event or to small batches of events. Technologies like Apache Kafka Streams, Apache Flink, and Apache Storm excel in this domain, offering high throughput and low latency for data transformation pipelines.

Complex event processing (CEP) takes a different approach, emphasizing pattern detection and correlation across multiple event streams. CEP engines excel at identifying meaningful patterns in real-time data—detecting fraud by correlating unusual transaction patterns, monitoring system health by analyzing log streams, or triggering alerts based on specific event sequences. The choice between these models depends on whether your primary goal is data transformation or pattern recognition.

| Processing Model | Primary Use Cases | Key Technologies | Latency Characteristics |
|---|---|---|---|
| Stream Processing | Data enrichment, filtering, aggregation, ETL pipelines | Kafka Streams, Flink, Storm | Milliseconds to seconds |
| Complex Event Processing | Fraud detection, anomaly detection, pattern matching | Esper, Drools Fusion, WSO2 CEP | Sub-millisecond to milliseconds |
| Micro-batch Processing | Near real-time analytics, balanced throughput/latency | Spark Streaming, Spark Structured Streaming, Storm Trident | Seconds to minutes |
| Lambda Architecture | Comprehensive analytics with speed and batch layers | Hybrid: Kafka + Spark + Hadoop | Variable by layer |

Data Ingestion Strategies

The entry point of your real-time system—data ingestion—requires careful consideration of throughput requirements, data sources, and reliability guarantees. Message brokers like Apache Kafka, Amazon Kinesis, and Apache Pulsar serve as the nervous system of real-time architectures, providing durable, scalable, and fault-tolerant data ingestion. These systems decouple data producers from consumers, allowing each component to scale independently while maintaining delivery guarantees.

When designing ingestion layers, consider the semantic guarantees your system requires. At-most-once delivery offers the lowest latency but risks data loss. At-least-once delivery ensures no data is lost but may create duplicates that downstream systems must handle. Exactly-once semantics provide the strongest guarantees but introduce complexity and latency overhead. Your choice should align with business requirements—financial transactions typically demand exactly-once processing, while clickstream analytics might tolerate at-least-once with deduplication.
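As a rough illustration of how a consumer can cope with at-least-once delivery, the sketch below deduplicates by event ID before applying an update. The `Event` and `IdempotentConsumer` names and the `event_id` field are hypothetical, and the example assumes producers attach a unique ID to every event.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Event:
    event_id: str   # hypothetical unique ID assigned by the producer
    amount: int


@dataclass
class IdempotentConsumer:
    """Applies each event once, even if the broker redelivers it."""
    seen_ids: set = field(default_factory=set)
    total: int = 0

    def handle(self, event: Event) -> bool:
        # A redelivered event carries an ID that was already processed.
        if event.event_id in self.seen_ids:
            return False
        self.seen_ids.add(event.event_id)
        self.total += event.amount
        return True


consumer = IdempotentConsumer()
deliveries = [Event("a", 10), Event("b", 5), Event("a", 10)]  # "a" is redelivered
applied = [consumer.handle(e) for e in deliveries]
print(applied, consumer.total)  # [True, True, False] 15
```

In a real deployment the set of seen IDs would need to be bounded (for example with a time-to-live) and persisted together with the result it protects; this sketch keeps both in memory for brevity.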

- 🔄 Push vs. Pull Models: Determine whether data sources push events to your system or your system polls sources for changes

- 📊 Schema Management: Implement schema registries to enforce data contracts and enable evolution without breaking consumers

- ⚡ Backpressure Handling: Design mechanisms to handle situations where producers generate data faster than consumers can process

- 🔐 Security and Authentication: Ensure encrypted transmission and proper authentication for all data sources

- 📈 Monitoring and Metrics: Instrument ingestion points to track throughput, lag, and error rates

"Designing for failure is not pessimism—it's realism. Every component in a distributed real-time system will eventually fail, and your architecture must gracefully handle these inevitable failures."

Choosing and Implementing Processing Frameworks

The processing framework represents the computational engine of your real-time system, transforming raw events into actionable insights. Modern frameworks offer varying trade-offs between latency, throughput, state management capabilities, and operational complexity. Understanding these trade-offs enables you to select the right tool for your specific requirements rather than defaulting to the most popular option.

Apache Flink has emerged as a leading choice for stateful stream processing, offering true event-time processing, exactly-once state consistency, and sophisticated windowing capabilities. Flink keeps operator state local to each task in a pluggable state backend (in memory or in embedded RocksDB) and checkpoints it to durable storage, which allows it to handle very large state while maintaining low latency. The framework excels in scenarios requiring complex event-time processing, such as session analysis, real-time joins across multiple streams, or temporal pattern detection.

State Management Considerations

Stateful processing—maintaining information across multiple events—presents one of the most challenging aspects of real-time system design. Unlike stateless transformations that treat each event independently, stateful operations must remember previous events to perform aggregations, joins, or pattern matching. This state must be managed efficiently, replicated for fault tolerance, and scaled as data volumes grow.

Modern frameworks offer different approaches to state management. Embedded state stores keep state locally within processing nodes, offering the lowest latency but complicating scaling and recovery. External state stores like Redis or Apache Cassandra separate state from computation, simplifying scaling but introducing network latency. Hybrid approaches cache frequently accessed state locally while persisting it externally, balancing performance with operational flexibility.
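The hybrid approach can be sketched as a small write-through cache in front of an external store. This is a minimal illustration, not any framework's implementation: `HybridStateStore` is a hypothetical name, and a plain dict stands in for a remote store such as Redis or Cassandra.

```python
from collections import OrderedDict


class HybridStateStore:
    """Local LRU cache in front of an external store, with write-through updates."""

    def __init__(self, external, cache_size):
        self.external = external        # dict-like stand-in for a remote store
        self.cache = OrderedDict()      # local cache, ordered from oldest to newest use
        self.cache_size = cache_size
        self.external_reads = 0         # counts the slow-path reads

    def get(self, key, default=None):
        if key in self.cache:
            self.cache.move_to_end(key)
            return self.cache[key]
        self.external_reads += 1
        if key not in self.external:
            return default
        value = self.external[key]
        self._remember(key, value)
        return value

    def put(self, key, value):
        self.external[key] = value      # write-through: persist before caching
        self._remember(key, value)

    def _remember(self, key, value):
        self.cache[key] = value
        self.cache.move_to_end(key)
        if len(self.cache) > self.cache_size:
            self.cache.popitem(last=False)   # evict the least recently used entry


external = {}
store = HybridStateStore(external, cache_size=2)
for key, value in [("a", 1), ("b", 2), ("c", 3)]:
    store.put(key, value)               # "a" is evicted from the local cache here
first = store.get("a")                  # cache miss: falls back to the external store
second = store.get("a")                 # cache hit: no external read
print(first, second, store.external_reads)  # 1 1 1
```

Write-through keeps the external copy authoritative, which simplifies recovery at the cost of paying the external write latency on every update.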

"State management is where most real-time systems encounter their first scaling bottleneck. Plan for state growth from day one, not when your system starts failing under load."

Windowing and Time Semantics

Time represents a fundamental challenge in distributed real-time systems. Events may arrive out of order due to network delays, system failures, or distributed data sources. Processing frameworks must handle three distinct time concepts: event time (when the event actually occurred), ingestion time (when the event entered the system), and processing time (when the event is processed).

Event-time processing provides the most accurate results but requires handling late-arriving data through watermarks—timestamps that indicate when the system believes all events up to a certain time have arrived. Watermarks balance completeness (waiting for late events) against latency (processing results quickly). Configuring watermark strategies involves understanding your data source characteristics and acceptable trade-offs between accuracy and timeliness.
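One common watermark strategy assumes events arrive at most a fixed delay out of order. The sketch below illustrates that idea in plain Python; the class name and the `max_delay_ms` parameter are assumptions made for illustration, and real frameworks differ in details such as how often watermarks are emitted.

```python
class BoundedOutOfOrdernessWatermark:
    """Watermark that trails the highest event time seen by a fixed delay."""

    def __init__(self, max_delay_ms):
        self.max_delay_ms = max_delay_ms      # assumed tolerance for late events
        self.max_event_time_ms = None         # highest event time seen so far

    def current(self):
        if self.max_event_time_ms is None:
            return float("-inf")              # no events yet, so nothing can be late
        return self.max_event_time_ms - self.max_delay_ms

    def accept(self, event_time_ms):
        """Return True if the event is on time, False if it is behind the watermark."""
        on_time = event_time_ms >= self.current()
        if self.max_event_time_ms is None or event_time_ms > self.max_event_time_ms:
            self.max_event_time_ms = event_time_ms
        return on_time


watermark = BoundedOutOfOrdernessWatermark(max_delay_ms=1000)
arrivals = [1000, 3000, 2500, 500]            # event times in arrival order
decisions = [watermark.accept(t) for t in arrivals]
print(decisions, watermark.current())         # [True, True, True, False] 2000
```

A larger `max_delay_ms` admits more stragglers (better completeness) but holds results back longer (higher latency), which is the trade-off described above.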

- ⏱️ Tumbling Windows: Fixed-size, non-overlapping time intervals ideal for periodic aggregations

- 🔄 Sliding Windows: Overlapping intervals that provide continuous, updated calculations

- 👥 Session Windows: Dynamic intervals based on activity gaps, perfect for user behavior analysis

- 🎯 Global Windows: All events in a single window, useful for custom windowing logic

- ⚙️ Custom Windows: Application-specific windowing logic for unique business requirements
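To make the first three window types concrete, here is a small sketch of how events could be assigned to windows. The function names are hypothetical, timestamps are assumed to be non-negative integers, and windows are half-open intervals `[start, end)` aligned to zero.

```python
def tumbling_window(ts, size):
    """The single fixed-size window that contains ts."""
    start = ts - (ts % size)
    return (start, start + size)


def sliding_windows(ts, size, slide):
    """Every window of length `size`, advancing by `slide`, that contains ts."""
    windows = []
    start = ts - (ts % slide)          # latest window start at or before ts
    while start > ts - size:
        windows.append((start, start + size))
        start -= slide
    return sorted(windows)


def session_windows(timestamps, gap):
    """Group events into sessions; a pause of `gap` or more starts a new session."""
    sessions = []
    for ts in sorted(timestamps):
        if sessions and ts - sessions[-1][1] < gap:
            sessions[-1][1] = ts       # extend the current session
        else:
            sessions.append([ts, ts])  # start a new session
    return [(first, last) for first, last in sessions]


print(tumbling_window(7, size=5))                 # (5, 10)
print(sliding_windows(7, size=10, slide=5))       # [(0, 10), (5, 15)]
print(session_windows([1, 3, 4, 20, 22], gap=5))  # [(1, 4), (20, 22)]
```

Note that an event belongs to exactly one tumbling window but to `size / slide` sliding windows, which is why sliding aggregations cost more state and computation.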

Parallelism and Scalability

Achieving horizontal scalability requires careful attention to data partitioning and parallelism. Processing frameworks divide data streams into partitions that can be processed independently across multiple nodes. The partitioning strategy—whether by key, round-robin, or custom logic—directly impacts both performance and correctness of stateful operations.

Key-based partitioning ensures that all events with the same key are processed by the same task instance, maintaining consistency for stateful operations like aggregations or joins. However, skewed key distributions can create hot partitions that become bottlenecks. Monitoring partition load distribution and implementing strategies like dynamic rebalancing or composite keys helps maintain balanced processing across your cluster.
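The following sketch shows key-based partitioning with a stable hash and how a single hot key skews the load. `partition_for` is a hypothetical helper; brokers and frameworks use their own hash functions, so treat the choice of CRC32 here as an assumption.

```python
import zlib
from collections import Counter


def partition_for(key: str, num_partitions: int) -> int:
    # crc32 is stable across processes, unlike Python's built-in hash() for strings.
    return zlib.crc32(key.encode("utf-8")) % num_partitions


# 90 events for one hot key plus one event each for ten other keys.
events = ["user-1"] * 90 + [f"user-{i}" for i in range(2, 12)]
load = Counter(partition_for(key, 4) for key in events)
hot_partition = partition_for("user-1", 4)
print(dict(load), "hot partition:", hot_partition)
```

Whatever the exact distribution of the other keys, the partition that owns `user-1` receives at least 90 of the 100 events, which is the kind of imbalance that partition-level load monitoring should surface.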

Storage Layer Design for Real-Time Systems

The storage layer in real-time architectures serves multiple purposes: providing fast access to state during processing, persisting results for downstream consumption, and maintaining historical data for analytics. Unlike batch systems that can afford slower storage with higher capacity, real-time systems demand low-latency access patterns while maintaining consistency and durability.

Multi-model storage strategies have become standard practice, with different storage technologies optimized for specific access patterns. Time-series databases like InfluxDB or TimescaleDB excel at storing and querying temporal data with high write throughput. Document stores like MongoDB or Elasticsearch provide flexible schemas and powerful query capabilities for semi-structured data. Key-value stores like Redis offer sub-millisecond read latency for frequently accessed state.

Consistency Models and Trade-offs

Real-time systems must carefully balance consistency requirements against performance. Strong consistency guarantees that all readers see the same data at the same time but introduces latency through coordination overhead. Eventual consistency allows higher throughput and lower latency but means different parts of your system might temporarily see different values.

The CAP theorem, which states that a distributed system cannot simultaneously guarantee Consistency, Availability, and Partition tolerance, guides storage decisions. Because network partitions cannot be ruled out in practice, the real choice is between consistency and availability while a partition lasts. Many real-time systems favor availability, accepting eventual consistency for improved performance. However, critical operations like financial transactions might require strong consistency despite performance costs, leading to hybrid approaches where different data types receive different consistency guarantees.

| Storage Technology | Best For | Latency Profile | Consistency Model |
|---|---|---|---|
| Redis/Memcached | Hot state, caching, session storage | Sub-millisecond reads | Eventually consistent (configurable) |
| Apache Cassandra | High-volume writes, time-series data | Single-digit milliseconds | Tunable consistency |
| Apache HBase | Random read/write, structured data | Low milliseconds | Strong consistency |
| RocksDB | Embedded state, local persistence | Microseconds | Local strong consistency |
| Elasticsearch | Full-text search, log analysis | Tens of milliseconds | Eventually consistent |

"The fastest storage solution is the one you don't need to access. Intelligent caching strategies can reduce storage latency by orders of magnitude while dramatically improving system throughput."

Data Retention and Archival

Real-time systems generate enormous data volumes, making retention policies critical for cost control and performance. Implementing tiered storage strategies allows you to keep recent data in fast, expensive storage while moving historical data to cheaper, slower storage. Time-based partitioning facilitates efficient data lifecycle management, enabling quick deletion or archival of old data without impacting current operations.

Consider implementing data compaction strategies that reduce storage requirements while preserving essential information. Techniques like downsampling (reducing data resolution over time), aggregation (storing summaries instead of raw events), and compression (using efficient encoding schemes) can dramatically reduce storage costs for historical data while maintaining analytical value.
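Downsampling, for instance, can be as simple as averaging raw points into coarser time buckets. The sketch below assumes integer timestamps in seconds and uses the mean as the summary; `downsample` is a hypothetical helper, and other summaries (min, max, percentiles) may suit your data better.

```python
from collections import defaultdict
from statistics import mean


def downsample(points, bucket_s):
    """Reduce (timestamp, value) points to one averaged point per time bucket."""
    buckets = defaultdict(list)
    for ts, value in points:
        buckets[ts - ts % bucket_s].append(value)   # key is the bucket start time
    return [(start, mean(values)) for start, values in sorted(buckets.items())]


raw = [(0, 1.0), (20, 3.0), (59, 5.0), (60, 10.0), (119, 20.0)]
per_minute = downsample(raw, bucket_s=60)
print(per_minute)  # [(0, 3.0), (60, 15.0)]
```

Five raw points become two, and the reduction grows with the sampling rate; the cost is that individual spikes inside a bucket are no longer recoverable.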

Building Fault-Tolerant and Resilient Systems

Real-time systems operate in inherently unreliable environments where network partitions, hardware failures, and software bugs are inevitable. Designing for resilience means assuming failure and implementing mechanisms to detect, isolate, and recover from problems automatically. The goal isn't preventing all failures—that's impossible—but ensuring failures don't cascade and the system continues providing value even in degraded states.

Checkpointing and state recovery form the foundation of fault tolerance in stateful stream processing. Frameworks periodically snapshot processing state to durable storage, creating consistent recovery points. When failures occur, processing can resume from the last successful checkpoint rather than reprocessing all historical data. The checkpoint interval balances recovery time (shorter intervals mean less reprocessing) against overhead (checkpointing consumes resources and may impact throughput).
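The core idea can be sketched in a few lines: snapshot the operator state together with the input position, and after a failure restore both and replay from that position. `CountingOperator` is a hypothetical toy operator, and a Python list stands in for a replayable log such as a broker partition.

```python
import copy


class CountingOperator:
    """Counts events per key and remembers how far it has read in the input log."""

    def __init__(self):
        self.counts = {}
        self.offset = 0                 # index of the next event to read

    def process(self, log, until):
        while self.offset < until:
            key = log[self.offset]
            self.counts[key] = self.counts.get(key, 0) + 1
            self.offset += 1

    def checkpoint(self):
        # State and input position are captured together so they stay consistent.
        return {"counts": copy.deepcopy(self.counts), "offset": self.offset}

    @classmethod
    def restore(cls, snapshot):
        operator = cls()
        operator.counts = copy.deepcopy(snapshot["counts"])
        operator.offset = snapshot["offset"]
        return operator


log = ["a", "b", "a", "c", "a"]
operator = CountingOperator()
operator.process(log, until=3)
snapshot = operator.checkpoint()        # consistent recovery point at offset 3
operator.process(log, until=4)          # work done after the checkpoint is lost in the crash

recovered = CountingOperator.restore(snapshot)
recovered.process(log, until=len(log))  # replay from offset 3 onward
print(recovered.counts)                 # {'a': 3, 'b': 1, 'c': 1}
```

Because the event at offset 3 is replayed against state that never saw it, each event affects the recovered counts exactly once. Real frameworks write snapshots to durable storage and coordinate them across parallel tasks, which this single-process sketch omits.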

Replication and Redundancy

Redundancy at every layer protects against component failures. Message brokers replicate data across multiple nodes, ensuring event durability even if individual brokers fail. Some processing frameworks can keep standby instances of each task ready to take over when primary instances fail, while others restart failed tasks from the latest checkpoint. Storage systems maintain multiple replicas of data, using consensus protocols like Raft or Paxos to coordinate updates and maintain consistency.

The replication factor—how many copies of data exist—directly impacts both reliability and cost. Higher replication factors provide better fault tolerance but consume more storage and network bandwidth. Most production systems use replication factors of three, providing good fault tolerance while remaining cost-effective. Critical data might warrant higher replication factors, while less critical data might accept lower factors.

- 🔄 Active-Active Replication: Multiple processing instances handle requests simultaneously, providing load balancing and immediate failover

- ⏸️ Active-Passive Replication: Standby instances remain idle until primary instances fail, reducing resource consumption

- 🌍 Geographic Replication: Distributing replicas across data centers or regions protects against site-wide failures

- 📋 Asynchronous Replication: Replicas update after primary writes complete, improving write latency at the cost of potential data loss

- ⚡ Synchronous Replication: All replicas update before acknowledging writes, ensuring no data loss but increasing latency

"Chaos engineering—deliberately introducing failures in production—sounds counterintuitive but remains the only way to truly validate your system's resilience before customers encounter problems."

Circuit Breakers and Graceful Degradation

When downstream dependencies fail or become slow, circuit breakers prevent cascading failures by temporarily stopping requests to failing services. After detecting repeated failures, the circuit breaker "opens," immediately returning errors rather than waiting for timeouts. This fail-fast approach prevents resource exhaustion and allows failing services time to recover without continuous request pressure.
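A minimal circuit breaker might look like the sketch below. The class and parameter names are hypothetical, and the "half-open" trial call after the reset timeout is a common extension that the description above does not spell out.

```python
import time


class CircuitOpenError(Exception):
    """Raised when the breaker rejects a call without trying it."""


class CircuitBreaker:
    def __init__(self, failure_threshold=3, reset_timeout_s=30.0, clock=time.monotonic):
        self.failure_threshold = failure_threshold
        self.reset_timeout_s = reset_timeout_s
        self.clock = clock              # injectable so the demo can control time
        self.failures = 0
        self.opened_at = None           # None means the circuit is closed

    def call(self, fn, *args, **kwargs):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_timeout_s:
                raise CircuitOpenError("circuit open, failing fast")
            # Timeout elapsed: fall through and let one trial call probe the service.
        try:
            result = fn(*args, **kwargs)
        except Exception:
            self.failures += 1
            if self.opened_at is not None or self.failures >= self.failure_threshold:
                self.opened_at = self.clock()   # open, or re-open after a failed probe
            raise
        self.failures = 0
        self.opened_at = None
        return result


def failing_service():
    raise RuntimeError("downstream unavailable")


now = [0.0]                             # fake clock for a deterministic demo
breaker = CircuitBreaker(failure_threshold=2, reset_timeout_s=10.0, clock=lambda: now[0])
outcomes = []
for _ in range(3):
    try:
        breaker.call(failing_service)
    except CircuitOpenError:
        outcomes.append("rejected")
    except RuntimeError:
        outcomes.append("failed")

now[0] = 11.0                           # the reset timeout has elapsed
outcomes.append(breaker.call(lambda: "ok"))
print(outcomes)                         # ['failed', 'failed', 'rejected', 'ok']
```

The third call never reaches the failing service, which is the fail-fast behavior that protects both the caller's threads and the struggling dependency.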

Graceful degradation strategies ensure your system continues providing value even when components fail. This might mean serving slightly stale data from cache when the database is unavailable, disabling non-essential features to reduce load, or providing approximate results when exact calculations would be too slow. Designing degradation strategies requires understanding which features are truly critical and which can be temporarily compromised.

Monitoring, Alerting, and Observability

Comprehensive observability enables rapid problem detection and diagnosis in production systems. The three pillars of observability—metrics, logs, and traces—provide complementary views into system behavior. Metrics offer high-level performance indicators like throughput, latency, and error rates. Logs provide detailed event information for debugging specific issues. Distributed traces show request flows across multiple services, revealing performance bottlenecks and failure points.

Effective alerting balances sensitivity (catching real problems) against specificity (avoiding false alarms). Alert on symptoms that impact users—increased error rates, elevated latency, processing lag—rather than low-level component metrics. Implement alert escalation policies that route notifications based on severity and time of day, ensuring critical issues receive immediate attention while avoiding alert fatigue from minor problems.

Performance Optimization Techniques

Achieving optimal performance in real-time systems requires attention to numerous factors across the entire processing pipeline. While modern frameworks provide reasonable out-of-the-box performance, production workloads often demand careful tuning and optimization. Understanding where bottlenecks occur and how to address them separates adequate systems from exceptional ones.

Serialization efficiency significantly impacts performance, as every event must be serialized for network transmission and deserialized for processing. Text-based formats like JSON offer human readability but consume more bandwidth and CPU cycles. Binary formats like Apache Avro, Protocol Buffers, or MessagePack are typically more compact and faster to parse, and schema-based formats such as Avro and Protocol Buffers also support schema evolution. The actual gain depends on payload shape and library, so measure it on your own data. The choice depends on your interoperability requirements and performance constraints.
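The size difference is easy to observe with the standard library alone. In the sketch below, Python's `struct` module stands in for a binary format; it is not Avro or Protocol Buffers and offers no schema evolution, and the event fields are made up for illustration.

```python
import json
import struct

event = {"sensor_id": 1234, "timestamp_ms": 1700000000000, "temperature": 21.5}

# Text encoding: field names and digits are spelled out in every message.
json_bytes = json.dumps(event).encode("utf-8")

# Fixed binary layout (little-endian): 32-bit unsigned id, 64-bit unsigned
# timestamp, 64-bit float. Field names live in the schema, not in the payload.
RECORD = struct.Struct("<IQd")
binary_bytes = RECORD.pack(event["sensor_id"], event["timestamp_ms"], event["temperature"])

decoded = RECORD.unpack(binary_bytes)
print(len(json_bytes), len(binary_bytes), decoded)
```

The binary record is 20 bytes regardless of how the values print, while the JSON payload carries the field names in every message. The trade-off is that a consumer cannot interpret the bytes without knowing the layout, which is the gap schema registries are meant to close.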

Batching and Buffering Strategies

Micro-batching—processing small groups of events together rather than individually—can dramatically improve throughput by amortizing per-event overhead. Network round trips, disk writes, and state updates all benefit from batching. However, batching introduces latency, as events wait for batches to fill before processing begins. Tuning batch size and timeout parameters balances throughput against latency requirements.
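The interplay of batch size and timeout can be sketched as follows. `MicroBatcher` and its parameters are hypothetical; the example assumes the caller invokes `poll()` periodically so that a partially filled batch is not held indefinitely.

```python
import time


class MicroBatcher:
    """Collects events and releases them as a batch when it is full or old enough."""

    def __init__(self, max_size, max_wait_s, clock=time.monotonic):
        self.max_size = max_size
        self.max_wait_s = max_wait_s
        self.clock = clock              # injectable so the demo can control time
        self.buffer = []
        self.first_event_at = None

    def add(self, event):
        """Buffer an event; return a batch if one is due, otherwise None."""
        if not self.buffer:
            self.first_event_at = self.clock()
        self.buffer.append(event)
        return self._flush_if_due()

    def poll(self):
        """Call periodically to release a partial batch that has waited too long."""
        return self._flush_if_due()

    def _flush_if_due(self):
        if not self.buffer:
            return None
        full = len(self.buffer) >= self.max_size
        expired = self.clock() - self.first_event_at >= self.max_wait_s
        if full or expired:
            batch, self.buffer = self.buffer, []
            self.first_event_at = None
            return batch
        return None


now = [0.0]                             # fake clock for a deterministic demo
batcher = MicroBatcher(max_size=3, max_wait_s=1.0, clock=lambda: now[0])
results = [batcher.add(e) for e in ("a", "b", "c")]   # the third add fills the batch
results.append(batcher.add("d"))                      # starts a new batch
now[0] = 1.5
results.append(batcher.poll())                        # the age limit flushes the partial batch
print(results)  # [None, None, ['a', 'b', 'c'], None, ['d']]
```

Here `max_size` governs throughput under load, while `max_wait_s` caps the latency an event can accumulate when traffic is light.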

Buffering protects against temporary load spikes by queuing events during high-traffic periods and processing them during quieter times. Bounded buffers prevent memory exhaustion by rejecting new events when full, while unbounded buffers risk out-of-memory errors but never reject data. Most production systems use bounded buffers with monitoring to detect when buffers approach capacity, triggering autoscaling or load shedding.
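A bounded buffer with a simple capacity alarm could be sketched like this. `BoundedBuffer` and the `alert_ratio` threshold are hypothetical names, and rejecting new events is only one possible load-shedding policy (dropping the oldest events is another).

```python
from collections import deque


class BoundedBuffer:
    """FIFO buffer that rejects new events when full instead of growing without limit."""

    def __init__(self, capacity, alert_ratio=0.8):
        self.capacity = capacity
        self.alert_ratio = alert_ratio  # fill level at which scaling or shedding should start
        self.items = deque()
        self.rejected = 0

    def offer(self, event) -> bool:
        if len(self.items) >= self.capacity:
            self.rejected += 1          # load shedding: the caller decides what to do next
            return False
        self.items.append(event)
        return True

    def poll(self):
        return self.items.popleft() if self.items else None

    def near_capacity(self) -> bool:
        return len(self.items) >= self.capacity * self.alert_ratio


buffer = BoundedBuffer(capacity=5)
accepted = [buffer.offer(i) for i in range(7)]   # a burst larger than the buffer
alarm_during_burst = buffer.near_capacity()
drained = [buffer.poll(), buffer.poll()]         # the consumer catches up
alarm_after_drain = buffer.near_capacity()
print(accepted, buffer.rejected, alarm_during_burst, drained, alarm_after_drain)
```

In this run the first five events are accepted and the last two rejected; the alarm is raised while the buffer is full and clears once two events are drained.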

"Premature optimization is the root of all evil, but so is ignoring performance until production. Profile your system under realistic load early and often to identify actual bottlenecks rather than assumed ones."

Resource Management and Tuning

Memory management requires particular attention in long-running stream processing applications. Java-based frameworks like Flink and Kafka Streams require careful JVM tuning to balance heap memory (for application objects) against off-heap memory (for network buffers and state storage). Insufficient heap memory causes frequent garbage collection pauses that spike processing latency. Too much heap memory increases GC pause duration when collections do occur.

Network configuration impacts both throughput and latency. TCP buffer sizes affect how much data can be in flight before requiring acknowledgment. Compression reduces network bandwidth but consumes CPU cycles. Batching network operations reduces overhead but increases latency. Optimal settings depend on your network characteristics, data patterns, and performance requirements, often requiring experimentation to find the right balance.

- ⚙️ Parallelism Tuning: Match processing parallelism to available CPU cores and partition count for optimal resource utilization

- 💾 Memory Allocation: Configure memory pools appropriately for network buffers, state storage, and application heap

- 🔧 Thread Pool Sizing: Balance thread pools for network I/O, disk I/O, and computation to prevent resource starvation

- 📊 State Backend Selection: Choose between in-memory, RocksDB, or custom state backends based on state size and access patterns

- 🎯 Checkpoint Configuration: Tune checkpoint intervals, timeouts, and storage locations for your reliability requirements

Data Partitioning Optimization

Intelligent partitioning strategies prevent hot spots and ensure even work distribution across processing nodes. When key distributions are skewed—some keys appearing far more frequently than others—simple key-based partitioning creates imbalanced load. Techniques like salting (adding random prefixes to keys), splitting hot keys across multiple partitions, or using composite partitioning keys can improve balance.
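Salting changes the aggregation into two stages: partial results per salted key, then a merge under the original key. The sketch below illustrates this with counts; the helper names, the `#` separator, and the fixed list of hot keys are all assumptions made for the example.

```python
import random
from collections import Counter


def salted_key(key, hot_keys, num_salts, rng):
    """Prefix hot keys with a random salt so their events spread over several partitions."""
    if key in hot_keys:
        return f"{rng.randrange(num_salts)}#{key}"
    return key


def original_key(salted):
    # Assumes '#' never appears in real keys; pick a separator that is safe for your data.
    return salted.split("#", 1)[-1]


rng = random.Random(7)                  # seeded only to make the demo repeatable
events = ["hot"] * 1000 + ["cold-1", "cold-2"]

# Stage 1: partial counts per salted key (each salted key can live on a different partition).
partial = Counter(salted_key(k, {"hot"}, num_salts=4, rng=rng) for k in events)

# Stage 2: merge the partial results back under the original key.
merged = Counter()
for salted, count in partial.items():
    merged[original_key(salted)] += count

print(sorted(partial), merged["hot"])
```

The merged count for the hot key is still 1000, but the work of producing it was split across up to four salted keys. The second stage adds latency and complexity, so salting is usually reserved for keys that are known to be hot.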

Dynamic repartitioning allows systems to adapt to changing data patterns. As key distributions shift over time, monitoring partition load and triggering rebalancing operations maintains even resource utilization. However, repartitioning disrupts processing and may cause temporary latency spikes, so it should be triggered judiciously based on sustained imbalance rather than transient spikes.

Deployment and Operational Considerations

Deploying real-time data processing systems to production environments introduces challenges beyond development concerns. Operational excellence requires careful planning around deployment strategies, capacity planning, cost optimization, and day-to-day management. Systems that work perfectly in development can fail spectacularly in production without proper operational preparation.

Container orchestration platforms like Kubernetes have become standard for deploying distributed stream processing applications. Containers provide consistent environments across development, testing, and production while orchestrators handle deployment, scaling, and recovery automatically. However, stateful stream processing introduces complications that stateless web services don't face, requiring careful configuration of persistent volumes, network policies, and resource limits.

Capacity Planning and Autoscaling

Accurate capacity planning prevents both over-provisioning (wasting money on unused resources) and under-provisioning (causing performance degradation or outages). Understanding your workload characteristics—peak event rates, processing complexity, state size, retention requirements—enables you to size infrastructure appropriately. Load testing with realistic data volumes and patterns reveals system limits before production traffic does.

Autoscaling policies automatically adjust resource allocation based on demand, maintaining performance during traffic spikes while reducing costs during quiet periods. Effective autoscaling requires choosing appropriate metrics (CPU utilization, processing lag, queue depth) and configuring thresholds that trigger scaling actions. Scaling stateful applications is more complex than stateless ones, as state must be redistributed when instances are added or removed, potentially causing temporary processing delays.
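As a worked example of a lag-based policy, the sketch below sizes a consumer group so that it keeps up with incoming traffic and drains the current backlog within a target time. The function, its parameters, and the numbers are hypothetical; in practice the upper bound is often the partition count, since extra consumers beyond that would sit idle.

```python
import math


def desired_replicas(lag_events, incoming_rate, per_replica_rate,
                     target_drain_s, min_replicas, max_replicas):
    """Replicas needed to absorb incoming traffic and drain the backlog in time."""
    required_rate = incoming_rate + lag_events / target_drain_s   # events per second
    needed = math.ceil(required_rate / per_replica_rate)
    return max(min_replicas, min(max_replicas, needed))


# 5,000 events/s arriving, 60,000 events of lag, drain within 60 s,
# each replica handles about 1,000 events/s, at most 12 partitions.
busy = desired_replicas(60_000, 5_000, 1_000, 60, min_replicas=2, max_replicas=12)
quiet = desired_replicas(0, 100, 1_000, 60, min_replicas=2, max_replicas=12)
spike = desired_replicas(0, 50_000, 1_000, 60, min_replicas=2, max_replicas=12)
print(busy, quiet, spike)  # 6 2 12
```

A production policy would also add a cooldown between scaling actions, because each change to a stateful job triggers the state redistribution described above.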

"The best time to plan for 10x traffic growth is before you're experiencing it. Scaling real-time systems under pressure is possible but far more stressful than having capacity ready when needed."

Deployment Strategies and Blue-Green Deployments

Rolling deployments—gradually replacing old instances with new ones—minimize downtime but can create version compatibility issues when new and old code run simultaneously. Blue-green deployments maintain two complete environments, allowing instant cutover and easy rollback but doubling infrastructure costs during deployment. Canary deployments route a small percentage of traffic to new versions, validating changes before full rollout.

For stream processing applications, deployment complexity increases due to state management. Savepoints—externalized snapshots of processing state—enable version upgrades without losing state. Before deployment, trigger a savepoint, stop the old version, deploy the new version, and restore from the savepoint. This process works well for compatible changes but requires careful planning for schema evolution or topology changes that affect state structure.

Cost Optimization Strategies

Real-time systems can become expensive at scale, consuming resources continuously rather than during batch windows. Optimizing costs requires balancing performance requirements against infrastructure expenses. Techniques like spot instances for fault-tolerant workloads, reserved instances for baseline capacity, and aggressive autoscaling for variable workloads can significantly reduce cloud costs.

Data transfer costs often surprise teams new to real-time systems. Moving large data volumes between regions, availability zones, or cloud providers incurs substantial charges. Architecting systems to minimize cross-region traffic, using compression, and carefully selecting data center locations based on data sources and consumers helps control these costs. Monitoring data transfer patterns identifies unexpected flows that might indicate architectural inefficiencies.

- 💰 Right-Sizing Instances: Use monitoring data to identify over-provisioned resources and adjust instance types accordingly

- 📉 Compression: Enable compression for network transmission and storage to reduce bandwidth and storage costs

- ⏰ Retention Policies: Implement aggressive data retention policies to minimize storage costs for historical data

- 🔄 Resource Sharing: Consolidate multiple processing jobs on shared infrastructure where isolation requirements permit

- 📊 Cost Monitoring: Implement detailed cost tracking and alerting to identify unexpected expense increases quickly

Security and Compliance Requirements

Real-time data processing systems handle sensitive information flowing through multiple components, creating numerous security challenges. Unlike batch systems where data might be encrypted at rest in a data warehouse, streaming data moves constantly through networks, message brokers, processing frameworks, and storage systems. Securing this data in motion while maintaining low latency requires careful architectural planning and implementation.

Encryption at multiple layers protects data throughout its lifecycle. Transport layer security (TLS) encrypts data during network transmission between components. Application-level encryption protects data within message brokers and storage systems. End-to-end encryption ensures data remains encrypted from source to destination, even if intermediate systems are compromised. However, encryption introduces computational overhead that can impact latency, requiring careful performance testing.

Authentication and Authorization

Fine-grained access control ensures only authorized services and users can access sensitive data streams. Role-based access control (RBAC) assigns permissions based on user roles, while attribute-based access control (ABAC) makes decisions based on user attributes, resource properties, and environmental conditions. Modern message brokers support topic-level access control, allowing different teams to produce and consume specific data streams without accessing others.

Service-to-service authentication in distributed systems typically uses mutual TLS (mTLS) or token-based authentication like OAuth 2.0. These mechanisms verify that services are who they claim to be before allowing communication. Certificate management—issuing, rotating, and revoking certificates—becomes critical operational work that must be automated to prevent expired certificates from causing outages.

"Security cannot be an afterthought in real-time systems. Retrofitting security into production systems is exponentially more difficult and risky than building it in from the start."

Data Privacy and Compliance

Regulations like GDPR, CCPA, and HIPAA impose strict requirements on how personal data is collected, processed, stored, and deleted. Real-time systems must implement privacy-preserving techniques while maintaining processing performance. Techniques like tokenization (replacing sensitive values with non-sensitive tokens), masking (obscuring parts of sensitive data), and anonymization (removing personally identifiable information) protect privacy without completely preventing data analysis.
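Two of these techniques can be sketched with the standard library. Note the substitution: the example uses a keyed hash (HMAC) to derive tokens, which is a form of pseudonymization rather than vault-based tokenization, and the function names, key, and sample values are hypothetical.

```python
import hashlib
import hmac


def tokenize(value: str, secret_key: bytes) -> str:
    """Derive a stable, non-reversible token from a sensitive value using a keyed hash."""
    digest = hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return digest[:16]                  # truncated for readability in this sketch


def mask_card(card_number: str) -> str:
    """Hide all but the last four digits of a card number."""
    digits = card_number.replace(" ", "")
    return "*" * (len(digits) - 4) + digits[-4:]


key = b"demo-key-do-not-use-in-production"   # assumption: real keys come from a secret manager
token_a = tokenize("alice@example.com", key)
token_a_again = tokenize("alice@example.com", key)
token_b = tokenize("bob@example.com", key)
masked = mask_card("4111 1111 1111 1111")
print(token_a == token_a_again, token_a != token_b, masked)  # True True ************1111
```

Because the same input always yields the same token, joins and per-user aggregations still work on tokenized streams, while the original value cannot be read back without the key.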

The "right to be forgotten" creates particular challenges for stream processing systems. When users request data deletion, systems must remove their information from storage systems, processing state, and message broker topics. Implementing deletion in immutable event logs requires careful design—either through periodic compaction that removes deleted records or through encryption where "deletion" means destroying encryption keys, making data unrecoverable.

Audit Logging and Compliance Monitoring

Comprehensive audit logs track who accessed what data and when, providing accountability and supporting compliance investigations. However, logging every event in high-throughput systems can create performance bottlenecks and storage challenges. Selective logging—capturing access to sensitive data while sampling routine operations—balances compliance requirements against operational constraints.

Automated compliance monitoring continuously validates that systems operate within regulatory requirements. This includes verifying encryption is enabled, access controls are properly configured, data retention policies are enforced, and audit logs are complete. Alerting on compliance violations enables rapid remediation before minor issues become major problems during regulatory audits.

Testing and Validation Strategies

Testing real-time data processing systems presents unique challenges compared to traditional applications. The distributed nature, stateful processing, and continuous operation require comprehensive testing strategies that go beyond unit tests. Effective testing validates not just correctness under ideal conditions, but behavior under load, during failures, and with real-world data patterns.

Stream processing unit tests verify individual transformation logic by feeding test events through processing functions and asserting expected outputs. Modern frameworks provide testing harnesses that simulate streaming behavior without requiring full cluster deployment. These tests run quickly in continuous integration pipelines, catching logic errors early in development.
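
A unit test of this kind can be as simple as the sketch below, which keeps the transformation a pure function over an iterable so it can be tested without a cluster. The operator `high_value_orders`, its fields, and the threshold are hypothetical examples rather than part of any framework.

```python
from typing import Iterable, Iterator


def high_value_orders(events: Iterable[dict], threshold: float = 100.0) -> Iterator[dict]:
    """Keep order events at or above the threshold and project the output fields."""
    for event in events:
        if event.get("type") == "order" and event["amount"] >= threshold:
            yield {"order_id": event["order_id"], "amount": event["amount"]}


def test_high_value_orders() -> None:
    events = [
        {"type": "order", "order_id": "o1", "amount": 150.0},
        {"type": "order", "order_id": "o2", "amount": 20.0},
        {"type": "click", "page": "/home"},
    ]
    assert list(high_value_orders(events)) == [{"order_id": "o1", "amount": 150.0}]
    # Boundary case: an amount exactly at the threshold is kept.
    boundary = [{"type": "order", "order_id": "o3", "amount": 100.0}]
    assert list(high_value_orders(boundary)) == [{"order_id": "o3", "amount": 100.0}]
    # Empty input produces empty output.
    assert list(high_value_orders([])) == []
```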

Integration and End-to-End Testing

Integration tests validate that components work together correctly, testing interactions between message brokers, processing frameworks, and storage systems. These tests use test instances of infrastructure rather than mocking, revealing configuration issues, serialization problems, and integration bugs that unit tests miss. Containerization simplifies integration testing by providing consistent, reproducible test environments.

End-to-end tests validate complete data flows from ingestion through processing to output, ensuring the system delivers correct results under realistic conditions. These tests use production-like data volumes and patterns, revealing performance issues and race conditions that only appear under load. Automated end-to-end testing in staging environments provides confidence before production deployment.

- 🧪 Property-Based Testing: Generate random test inputs to discover edge cases and unexpected behaviors

- ⏱️ Time-Based Testing: Validate windowing logic and late data handling using controllable time sources

- 🔀 Chaos Testing: Inject failures to verify fault tolerance and recovery mechanisms

- 📈 Load Testing: Simulate production traffic patterns to identify performance bottlenecks

- 🎯 Regression Testing: Maintain test suites that prevent previously fixed bugs from reappearing
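
To illustrate the time-based testing item in the list above, the sketch below injects a clock into a windowed counter so a test can move time forward explicitly instead of sleeping. `ManualClock` and `TumblingCounter` are hypothetical names, and the counter uses processing time only.

```python
class ManualClock:
    """A time source that only moves when the test tells it to."""

    def __init__(self, start: float = 0.0) -> None:
        self._now = start

    def now(self) -> float:
        return self._now

    def advance(self, seconds: float) -> None:
        self._now += seconds


class TumblingCounter:
    """Counts events per fixed-size processing-time window."""

    def __init__(self, clock: ManualClock, window_seconds: float) -> None:
        self.clock = clock
        self.window_seconds = window_seconds
        self.counts: dict[int, int] = {}

    def record(self) -> None:
        # The window index is derived from the injected clock, not the wall clock.
        index = int(self.clock.now() // self.window_seconds)
        self.counts[index] = self.counts.get(index, 0) + 1
```

A test can then record two events, advance the clock by 59 seconds, record another, advance by one more second, and assert that the final event landed in the next 60-second window.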

Performance and Load Testing

Performance testing under realistic load reveals how systems behave at scale. Gradually increasing event rates identifies the maximum sustainable throughput and how latency degrades under pressure. Spike testing validates behavior during sudden traffic increases, while soak testing reveals memory leaks and resource exhaustion that only appear after extended operation.

Effective load testing requires realistic data patterns and event distributions. Using production data (properly anonymized) or generating synthetic data that matches production characteristics ensures test results reflect actual system behavior. Monitoring all system metrics during load tests—CPU, memory, network, disk I/O, processing lag—identifies bottlenecks and guides optimization efforts.

"Testing in production sounds dangerous but is actually essential for real-time systems. No test environment perfectly replicates production, so carefully controlled production experiments reveal issues testing misses."

Data Quality Validation

Real-time systems must validate data quality continuously, detecting and handling malformed events, schema violations, and unexpected value ranges. Input validation at ingestion prevents bad data from entering the system, while processing-time validation catches issues that slip through. Implementing dead-letter queues for invalid events enables investigation and reprocessing after fixes.
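
The validation and dead-letter pattern might look like the following sketch. The event fields (`sensor_id`, `temperature_c`), the accepted range, and the list-based sinks are assumptions chosen for illustration; in a real pipeline the sinks would be broker topics or queues.

```python
def validate(event: dict) -> list[str]:
    """Return a list of problems found in the event; an empty list means it is valid."""
    errors = []
    if not isinstance(event.get("sensor_id"), str):
        errors.append("sensor_id must be a string")
    temp = event.get("temperature_c")
    if isinstance(temp, bool) or not isinstance(temp, (int, float)):
        errors.append("temperature_c must be a number")
    elif not -90.0 <= temp <= 60.0:
        errors.append("temperature_c out of expected range")
    return errors


def route(events: list[dict], main_sink: list, dead_letter_sink: list) -> None:
    """Send valid events onward and park invalid ones with the reasons attached."""
    for event in events:
        errors = validate(event)
        if errors:
            dead_letter_sink.append({"event": event, "errors": errors})
        else:
            main_sink.append(event)
```

Storing the original event together with the validation errors makes it possible to investigate the cause and replay the event once the upstream problem is fixed.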

Reconciliation processes compare real-time results against batch-computed ground truth, identifying processing errors and data quality issues. While reconciliation happens after the fact, it provides confidence in real-time results and catches subtle bugs that might otherwise go unnoticed. Automated reconciliation with alerting on discrepancies enables rapid problem identification and resolution.
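
A reconciliation check can be sketched as a comparison of two keyed aggregates. The function below assumes both the real-time and the batch result are available as dictionaries of numeric totals, which is a simplification; the name `reconcile` and the tolerance parameter are illustrative.

```python
from typing import Optional


def reconcile(realtime: dict[str, float], batch: dict[str, float],
              tolerance: float = 0.0) -> dict[str, tuple[Optional[float], Optional[float]]]:
    """Return keys whose real-time and batch values differ by more than the tolerance.

    Keys present on only one side are reported with None for the missing value.
    """
    discrepancies = {}
    for key in realtime.keys() | batch.keys():
        rt_value, batch_value = realtime.get(key), batch.get(key)
        if rt_value is None or batch_value is None or abs(rt_value - batch_value) > tolerance:
            discrepancies[key] = (rt_value, batch_value)
    return discrepancies
```

An alerting job could run this after each batch completes and raise an alert whenever the returned dictionary is non-empty.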

Implementation Best Practices and Common Pitfalls

Building production-grade real-time data processing systems requires learning from the successes and failures of those who came before. Certain patterns consistently lead to robust, maintainable systems, while common pitfalls cause recurring problems. Understanding these lessons accelerates development and prevents painful production issues.

Start simple and evolve rather than building complex architectures prematurely. Many teams over-engineer initial implementations, adding complexity that provides no immediate value. Begin with the simplest architecture that meets current requirements, then evolve as needs become clear. This approach delivers value faster while avoiding unnecessary complexity that complicates maintenance and operations.

Design for Observability from Day One

Systems that lack comprehensive observability become extremely difficult to debug in production. Instrument every component with metrics, structured logging, and distributed tracing from the beginning. The overhead of observability is usually small compared to the cost of debugging production issues without visibility into system behavior. Include correlation IDs in all log messages to trace individual events through the entire processing pipeline.
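
As a small illustration of correlation IDs in structured logs, the sketch below emits one JSON log line per pipeline stage and carries the same ID through each of them. The helper names and the field layout are assumptions for this example, not a standard format.

```python
import json
import uuid


def new_correlation_id() -> str:
    """Generate an ID once, at ingestion, and attach it to the event."""
    return uuid.uuid4().hex


def log_line(stage: str, message: str, correlation_id: str, **fields) -> str:
    """Build one structured log line as JSON with the correlation ID included."""
    record = {"stage": stage, "message": message,
              "correlation_id": correlation_id, **fields}
    return json.dumps(record, sort_keys=True)


# Usage: every stage logs with the ID that was assigned at ingestion.
event = {"correlation_id": new_correlation_id(), "payload": {"amount": 42}}
lines = [
    log_line("ingest", "event received", event["correlation_id"]),
    log_line("enrich", "lookup complete", event["correlation_id"], cache_hit=True),
    log_line("sink", "event written", event["correlation_id"]),
]
```

Searching the log store for a single `correlation_id` value then returns the full path of one event across all stages.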

Establish dashboards and alerts before production deployment, not after problems occur. Critical metrics include end-to-end latency, processing lag, error rates, throughput, and resource utilization. Dashboards provide at-a-glance system health, while alerts notify teams of problems requiring attention. Document what each metric means and what actions to take when alerts fire, enabling efficient incident response.

Common Pitfalls to Avoid

Ignoring backpressure causes cascading failures when producers overwhelm consumers. Implement flow control mechanisms that slow producers when consumers can't keep up, preventing memory exhaustion and system crashes. This might mean rejecting new events during overload, buffering with bounded queues, or dynamically scaling consumer capacity.
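
The bounded-queue option can be sketched with Python's standard `queue` module. `BoundedBuffer` is a hypothetical wrapper: when the buffer is full it rejects the event and tells the producer, which can then slow down, retry later, or shed load.

```python
import queue


class BoundedBuffer:
    """A fixed-capacity buffer that rejects new events instead of growing without limit."""

    def __init__(self, capacity: int) -> None:
        self._queue: queue.Queue = queue.Queue(maxsize=capacity)
        self.rejected = 0

    def offer(self, event) -> bool:
        """Try to enqueue without blocking; return False when the buffer is full."""
        try:
            self._queue.put_nowait(event)
            return True
        except queue.Full:
            self.rejected += 1
            return False

    def poll(self):
        """Return the next event, or None when the buffer is empty."""
        try:
            return self._queue.get_nowait()
        except queue.Empty:
            return None
```

Tracking the `rejected` count as a metric gives an early signal that consumers are falling behind, before memory pressure appears.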

Underestimating state size leads to out-of-memory errors and performance degradation. State grows with key cardinality and retention periods, often exceeding initial estimates as systems scale. Monitor state size continuously and implement strategies like state TTL (time-to-live), aggregation, or external state stores when state exceeds memory capacity.
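
State TTL can be illustrated with a small keyed store that expires entries a fixed time after their last write. This is an in-memory sketch with hypothetical names; the current time is passed in explicitly so the behavior is easy to test, and production frameworks generally provide their own TTL configuration for managed state.

```python
class TtlState:
    """Keyed state whose entries expire ttl_seconds after their last write."""

    def __init__(self, ttl_seconds: float) -> None:
        self.ttl_seconds = ttl_seconds
        self._entries: dict[str, tuple[float, object]] = {}

    def put(self, key: str, value: object, now: float) -> None:
        self._entries[key] = (now, value)

    def get(self, key: str, now: float):
        """Return the value, or None if the key is missing or expired."""
        entry = self._entries.get(key)
        if entry is None:
            return None
        written_at, value = entry
        if now - written_at >= self.ttl_seconds:
            del self._entries[key]  # lazily clean up on read
            return None
        return value

    def evict_expired(self, now: float) -> int:
        """Remove all expired entries and return how many were dropped."""
        expired = [k for k, (t, _) in self._entries.items()
                   if now - t >= self.ttl_seconds]
        for key in expired:
            del self._entries[key]
        return len(expired)

    def __len__(self) -> int:
        return len(self._entries)
```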

- ⚠️ Ignoring Data Skew: Uneven key distributions create hot partitions that become bottlenecks

- 🔄 Improper Error Handling: Failing to handle errors gracefully causes data loss or halts processing

- 📊 Inadequate Monitoring: Blind spots in observability prevent detecting and diagnosing issues

- 🔐 Security Afterthoughts: Retrofitting security is far more difficult than building it in initially

- 💾 Insufficient Testing: Skipping load and chaos testing leads to production surprises
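
The data skew pitfall from the list above can be made measurable with a simple ratio: the load on the busiest partition divided by the mean load per partition. The sketch below assumes hash partitioning with CRC32, which is an illustrative choice and not the partitioner of any particular broker.

```python
import zlib
from collections import Counter


def partition_for(key: str, num_partitions: int) -> int:
    """Assign a key to a partition with a stable hash (CRC32 here, as an assumption)."""
    return zlib.crc32(key.encode("utf-8")) % num_partitions


def skew_ratio(keys: list[str], num_partitions: int) -> float:
    """Busiest partition's load divided by the mean load; 1.0 means perfectly even."""
    if not keys:
        return 1.0
    counts = Counter(partition_for(key, num_partitions) for key in keys)
    mean_load = len(keys) / num_partitions
    return max(counts.values()) / mean_load
```

With four partitions, a stream in which one key accounts for 90% of events produces a ratio of at least 3.6, a clear sign that one partition is doing most of the work.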

"The difference between a proof of concept and a production system is approximately 80% of the work. Plan accordingly when estimating timelines and resources for real-time system development."

Documentation and Knowledge Sharing

Comprehensive documentation accelerates onboarding, simplifies troubleshooting, and prevents knowledge silos. Document architecture decisions, explaining not just what was chosen but why alternatives were rejected. Maintain runbooks for common operational tasks and incident response procedures. Keep documentation close to the code, in README files and inline comments, and link any wiki pages from the repository so everything stays easy to discover and maintain.

Knowledge sharing through code reviews, design discussions, and post-mortems spreads expertise across teams. Real-time systems are complex, and concentrating knowledge in a few individuals creates organizational risk. Regular technical discussions and pair programming sessions distribute understanding while improving system design through diverse perspectives.

Continuous Improvement and Evolution

Real-time systems require ongoing attention and evolution. Monitor production metrics to identify optimization opportunities. Review incident post-mortems to prevent recurrence. Stay current with framework updates and new technologies that might improve performance or reduce operational complexity. Schedule regular architecture reviews to ensure the system still meets evolving requirements.

Technical debt accumulates in long-running systems through quick fixes, workarounds, and outdated dependencies. Allocate time for refactoring, dependency updates, and paying down technical debt before it becomes overwhelming. Balancing feature development with maintenance and improvement work keeps systems healthy and maintainable over time.

Frequently Asked Questions

What is the difference between real-time and near-real-time data processing?

Real-time processing typically refers to systems that process data within milliseconds to a few seconds of arrival, with the most demanding workloads targeting sub-second latency. Near-real-time processing accepts latencies from several seconds to minutes, often using micro-batch processing techniques. The distinction depends on business requirements: financial trading needs true real-time processing, while analytics dashboards might accept near-real-time updates. The choice significantly impacts architecture complexity and cost.

How do I choose between Apache Kafka, Amazon Kinesis, and Apache Pulsar for message brokering?

Apache Kafka offers the most mature ecosystem with extensive tooling and community support, making it ideal for complex processing pipelines. Amazon Kinesis is a fully managed service that integrates closely with other AWS services, which makes it a good fit for AWS-native architectures. Apache Pulsar builds multi-tenancy and geo-replication into the broker itself, whereas Kafka typically relies on additional tooling such as MirrorMaker for cross-cluster replication; this makes Pulsar suitable for organizations requiring strong isolation between teams or global data distribution. Consider your cloud strategy, operational expertise, and specific feature requirements when choosing.

What are the typical latency ranges I should expect from different processing frameworks?

Apache Flink can reach latencies in the low milliseconds for simple transformations and tens of milliseconds for stateful operations. Apache Storm provides similar latency characteristics but with less sophisticated state management. Spark Structured Streaming uses micro-batching by default, with latencies typically in the range of hundreds of milliseconds to seconds. The actual latency depends heavily on processing complexity, state size, cluster resources, and network characteristics. Always benchmark with your specific workload rather than relying on theoretical numbers.

How do I handle schema evolution in production streaming systems?

Implement a schema registry like Confluent Schema Registry or AWS Glue Schema Registry to manage schema versions centrally. Use serialization formats that support schema evolution, such as Apache Avro or Protocol Buffers. Design schemas with backward and forward compatibility in mind—add optional fields rather than modifying existing ones, use default values appropriately, and avoid removing fields. Test schema changes thoroughly in staging environments before production deployment, and maintain version documentation for troubleshooting.
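
The compatibility rules can be illustrated without any serialization library. The sketch below uses a plain dictionary as a stand-in for a reader schema; it is not the Avro or Protocol Buffers API, and the field names are hypothetical. A reader on version 2 fills in the default for a field that older producers do not send, and ignores fields it does not know about.

```python
# Hypothetical reader schema, version 2: "currency" was added with a default.
READER_SCHEMA_V2 = {
    "user_id": {"required": True},
    "amount": {"required": True},
    "currency": {"required": False, "default": "USD"},
}


def read_with_schema(record: dict, schema: dict) -> dict:
    """Project a record onto the reader schema, applying defaults for missing optional fields."""
    result = {}
    for name, spec in schema.items():
        if name in record:
            result[name] = record[name]
        elif not spec["required"]:
            result[name] = spec["default"]
        else:
            raise ValueError(f"missing required field: {name}")
    # Fields the reader does not know about are ignored.
    return result
```

Removing a required field, by contrast, makes older records unreadable for newer readers, which is why the guidance above favors adding optional fields with defaults.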

What monitoring metrics are most critical for real-time data processing systems?

End-to-end latency measures how long events take from ingestion to output, directly reflecting user experience. Processing lag indicates how far behind real-time your system is running, critical for detecting capacity issues. Error rates and types reveal data quality and processing problems. Resource utilization (CPU, memory, network) shows whether infrastructure is appropriately sized. Throughput metrics confirm the system handles expected data volumes. Monitor all these metrics continuously with alerts for anomalies.

How can I ensure exactly-once processing semantics in my stream processing application?

Exactly-once semantics require coordination between message brokers, processing frameworks, and output systems. Use frameworks like Apache Flink or Kafka Streams that provide exactly-once guarantees through distributed snapshots and transactional writes. Ensure your message broker supports idempotent producers and transactions, and configure consumers to read only committed messages. Implement idempotent operations in your processing logic where possible, allowing safe retries. Test failure scenarios thoroughly to verify exactly-once behavior under various failure conditions, as configuration mistakes can silently degrade to at-least-once semantics.
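
The idempotent-operations advice can be sketched as a sink that remembers which event IDs it has already applied, so a redelivered event has no additional effect. This is an in-memory illustration with hypothetical field names; it provides effectively-once results on top of at-least-once delivery and is not a substitute for a framework's transactional guarantees. In a real system the set of seen IDs and the totals would need to be persisted and updated atomically.

```python
class IdempotentSink:
    """Applies each event at most once, keyed by its event ID."""

    def __init__(self) -> None:
        self.totals: dict[str, int] = {}
        self._seen_event_ids: set[str] = set()

    def apply(self, event: dict) -> bool:
        """Apply the event and return True, or return False if it is a duplicate."""
        event_id = event["event_id"]
        if event_id in self._seen_event_ids:
            return False
        self._seen_event_ids.add(event_id)
        account = event["account"]
        self.totals[account] = self.totals.get(account, 0) + event["amount"]
        return True
```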

What strategies work best for handling late-arriving data in event-time processing?

Configure watermarks with appropriate delays that balance completeness against latency—longer delays capture more late events but increase processing latency. Implement allowed lateness windows that accept events arriving after watermarks pass, updating results retroactively. Use side outputs or dead-letter queues for extremely late events that exceed allowed lateness. Monitor late event patterns to tune watermark strategies appropriately. Consider whether your use case truly requires event-time processing or if processing-time semantics would simplify architecture without sacrificing business value.
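
The interaction between watermarks, allowed lateness, and a side output can be modeled in a few lines. The sketch below is a simplified model with hypothetical names and non-negative integer timestamps; it does not reproduce the exact firing rules of any specific framework. The watermark trails the highest timestamp seen by a fixed delay, late events still update their window until the allowed lateness has passed, and anything later goes to a side output.

```python
class EventTimeWindower:
    """Counts events per tumbling event-time window with a bounded-delay watermark."""

    def __init__(self, window_size: int, max_out_of_orderness: int,
                 allowed_lateness: int) -> None:
        self.window_size = window_size
        self.max_out_of_orderness = max_out_of_orderness
        self.allowed_lateness = allowed_lateness
        self.max_timestamp = 0
        self.counts: dict[int, int] = {}   # window start -> event count
        self.too_late: list[int] = []      # side output for dropped events

    def watermark(self) -> int:
        return self.max_timestamp - self.max_out_of_orderness

    def process(self, timestamp: int) -> str:
        self.max_timestamp = max(self.max_timestamp, timestamp)
        window_start = timestamp - timestamp % self.window_size
        window_end = window_start + self.window_size
        if self.watermark() >= window_end + self.allowed_lateness:
            self.too_late.append(timestamp)  # beyond allowed lateness
            return "dropped"
        self.counts[window_start] = self.counts.get(window_start, 0) + 1
        # The window had already closed, so this is a retroactive update.
        return "late" if self.watermark() >= window_end else "on_time"
```

Increasing `max_out_of_orderness` delays the watermark, so more events count as on time at the cost of later results, while increasing `allowed_lateness` keeps windows open for updates longer at the cost of more retained state.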

How do I debug performance issues in production streaming applications?

Start with high-level metrics to identify the bottleneck layer—ingestion, processing, or output. Use distributed tracing to follow individual events through the system, identifying slow operations. Examine processing framework metrics like backpressure indicators, checkpoint durations, and garbage collection pauses. Profile CPU and memory usage to find expensive operations. Check for data skew causing hot partitions. Review network metrics for bandwidth saturation. Correlate performance degradation with deployment changes, traffic patterns, or infrastructure events. Reproduce issues in staging environments with production-like load for detailed investigation.