How to Implement API Gateway Pattern

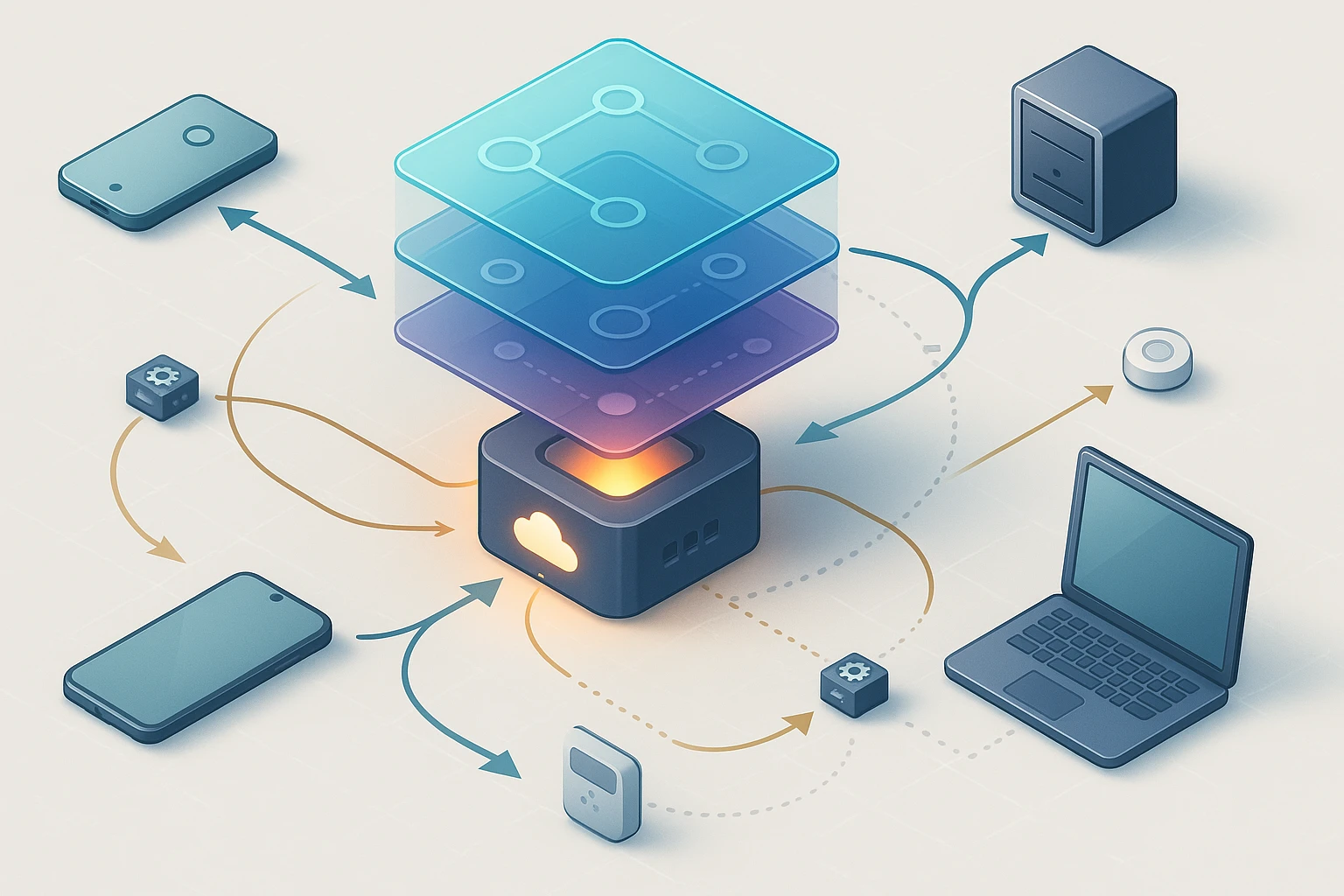

API Gateway diagram: a centralized gateway routes and secures requests and handles authentication, rate limiting, service discovery, aggregation, transformation, and observability for microservices.

Modern applications are increasingly distributed across multiple microservices, each handling specific business functions. This architectural shift has created a fundamental challenge: how do client applications efficiently and securely communicate with dozens or even hundreds of backend services? Without a proper intermediary layer, clients would need to manage complex service discovery, authentication, and protocol translation—creating tight coupling and operational nightmares that can cripple development velocity and system reliability.

The API Gateway pattern serves as a single entry point that sits between client applications and backend microservices, acting as a reverse proxy to route requests, aggregate responses, and enforce cross-cutting concerns. This architectural pattern has become essential for organizations building scalable, maintainable distributed systems, offering solutions to challenges ranging from security enforcement to performance optimization.

Throughout this comprehensive guide, you'll discover the technical foundations of API Gateway implementation, including architecture design principles, popular technology choices, security configurations, performance optimization strategies, and real-world implementation patterns. Whether you're building a new microservices architecture or refactoring an existing monolith, understanding how to properly implement an API Gateway will fundamentally improve your system's resilience, security, and developer experience.

Understanding the Core Responsibilities of an API Gateway

An API Gateway functions as the orchestration layer in your microservices architecture, shouldering responsibilities that would otherwise burden individual services or client applications. At its foundation, the gateway handles request routing, directing incoming API calls to the appropriate backend service based on URL patterns, headers, or other request attributes. This routing capability abstracts the internal service topology from external consumers, allowing backend services to be reorganized, scaled, or replaced without impacting clients.

Beyond simple routing, the gateway performs protocol translation, converting between different communication protocols as needed. A mobile application might communicate with the gateway using HTTP/REST, while the gateway translates these requests into gRPC calls for efficient internal service communication. This translation layer provides flexibility in choosing the most appropriate protocol for each context without forcing a single standard across your entire architecture.

"The gateway becomes the contract between external consumers and internal implementation details, creating the flexibility to evolve your architecture without breaking existing integrations."

The gateway also implements authentication and authorization, validating credentials and permissions before requests reach backend services. Rather than duplicating authentication logic across dozens of microservices, centralizing this concern at the gateway reduces code duplication and ensures consistent security enforcement. The gateway can validate JWT tokens, integrate with OAuth providers, or implement custom authentication schemes, then pass validated identity information to downstream services through headers or tokens.

Rate limiting and throttling represent another critical responsibility, protecting backend services from overload by enforcing request quotas per client, API key, or time window. This capability prevents individual consumers from monopolizing system resources and provides a mechanism for implementing tiered service levels. The gateway tracks request counts in memory or distributed caches, rejecting requests that exceed configured thresholds before they consume backend resources.
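
The paragraph does not prescribe an algorithm, so this sketch swaps in a token bucket, one common choice. Each API key gets a bucket of `capacity` tokens that refills at `rate` tokens per second. A request is allowed only if a token is available. The class names and the injectable `clock` are illustrative assumptions.

```python
import time
from typing import Callable

Clock = Callable[[], float]


class TokenBucket:
    """Holds up to `capacity` tokens and refills at `rate` tokens per second."""

    def __init__(self, capacity: float, rate: float, clock: Clock = time.monotonic) -> None:
        self.capacity = capacity
        self.rate = rate
        self._clock = clock
        self._tokens = capacity
        self._last = clock()

    def allow(self, cost: float = 1.0) -> bool:
        now = self._clock()
        # Refill in proportion to elapsed time, capped at the bucket's capacity.
        self._tokens = min(self.capacity, self._tokens + (now - self._last) * self.rate)
        self._last = now
        if self._tokens >= cost:
            self._tokens -= cost
            return True
        return False  # the gateway would answer 429 Too Many Requests


class RateLimiter:
    """One bucket per API key, kept in this process's memory."""

    def __init__(self, capacity: float, rate: float, clock: Clock = time.monotonic) -> None:
        self._capacity, self._rate, self._clock = capacity, rate, clock
        self._buckets: dict[str, TokenBucket] = {}

    def allow(self, api_key: str) -> bool:
        bucket = self._buckets.get(api_key)
        if bucket is None:
            bucket = self._buckets[api_key] = TokenBucket(self._capacity, self._rate, self._clock)
        return bucket.allow()
```

The buckets live in a single process, so this only holds for one gateway instance. Several instances would need the counters in a shared store such as Redis.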

Finally, the gateway handles response aggregation, combining data from multiple backend services into unified responses. When a client needs information spread across several microservices, the gateway can execute parallel requests, merge the results, and return a single coherent response. This aggregation reduces network round trips and simplifies client logic, particularly valuable for mobile applications operating over high-latency connections.

Request Transformation and Data Mapping

API Gateways frequently need to transform request and response payloads to bridge differences between client expectations and backend service contracts. This transformation might involve restructuring JSON objects, converting between data formats, or enriching requests with additional context. The gateway can inject correlation IDs for distributed tracing, add tenant identifiers for multi-tenant architectures, or append metadata required by downstream services but irrelevant to clients.
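
A minimal sketch of request enrichment follows. It assumes headers arrive as a dict with fixed canonical casing; real HTTP header names are case-insensitive. It also assumes the tenant ID was already resolved during authentication. The header names `X-Correlation-ID` and `X-Tenant-ID` are hypothetical conventions, not a standard.

```python
import uuid


def enrich_request_headers(client_headers: dict[str, str], tenant_id: str) -> dict[str, str]:
    """Return the headers to forward upstream, leaving the client's map untouched."""
    upstream = dict(client_headers)
    # Keep a caller-supplied correlation ID so an existing trace continues; otherwise mint one.
    upstream.setdefault("X-Correlation-ID", str(uuid.uuid4()))
    # Always overwrite the tenant: it must come from the gateway's auth step, never the client.
    upstream["X-Tenant-ID"] = tenant_id
    return upstream
```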

Response transformation works symmetrically, allowing the gateway to reshape backend responses before returning them to clients. This capability proves particularly valuable when maintaining backward compatibility—the gateway can translate new service response formats into legacy structures expected by older client versions. Through versioning strategies implemented at the gateway layer, you can evolve backend APIs independently of client release cycles.
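
Backward-compatible response reshaping can be sketched as a mapping function selected by client version. The v1 and v2 payload shapes below are hypothetical. The sketch also assumes the gateway already knows the client's API version, for example from a path segment or header.

```python
def to_v1(user_v2: dict) -> dict:
    """Map the (hypothetical) v2 user payload back to the v1 shape older clients expect."""
    name = user_v2.get("name", {})
    return {
        "user_id": user_v2["id"],
        "full_name": " ".join(part for part in (name.get("given"), name.get("family")) if part),
        "email": user_v2.get("contact", {}).get("email"),
    }


def transform_response(body: dict, client_api_version: str) -> dict:
    # Only clients pinned to v1 get the legacy shape; newer clients see v2 unchanged.
    return to_v1(body) if client_api_version == "v1" else body
```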

Cross-Cutting Concerns Centralization

Centralizing cross-cutting concerns at the gateway layer eliminates duplication and ensures consistent implementation across your service ecosystem. Logging, monitoring, and metrics collection become standardized when implemented once at the gateway rather than replicated across individual services. The gateway can capture detailed request/response logs, track latency percentiles, and emit metrics about API usage patterns—providing comprehensive observability without instrumenting every microservice.

Error handling and resilience patterns also benefit from gateway-level implementation. The gateway can implement circuit breakers that prevent cascading failures, retry logic for transient errors, and fallback responses when backend services are unavailable. These resilience mechanisms protect the overall system health and provide graceful degradation, ensuring that partial failures don't result in complete system outages.
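
A circuit breaker can be sketched as a small state machine with three states:

- **Closed:** calls pass through.
- **Open:** calls fail fast without reaching the backend.
- **Half-open:** after a cooldown, one trial call decides whether to close again.

The thresholds, names, and injectable clock are illustrative assumptions. This single-threaded sketch does not limit concurrent trial calls.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class CircuitOpenError(Exception):
    """Raised instead of calling the backend while the circuit is open."""


class CircuitBreaker:
    def __init__(self, failure_threshold: int = 3, reset_timeout: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self._clock = clock
        self._failures = 0
        self._opened_at: float | None = None

    @property
    def state(self) -> str:
        if self._opened_at is None:
            return "closed"
        if self._clock() - self._opened_at >= self.reset_timeout:
            return "half-open"  # cooldown over: let a trial request through
        return "open"

    def call(self, fn: Callable[[], T]) -> T:
        if self.state == "open":
            raise CircuitOpenError("backend unavailable; failing fast")
        try:
            result = fn()
        except Exception:
            self._failures += 1
            # Trip at the threshold, or re-open immediately if a half-open trial fails.
            if self._failures >= self.failure_threshold or self._opened_at is not None:
                self._opened_at = self._clock()
            raise
        # Any success closes the circuit and resets the failure count.
        self._failures = 0
        self._opened_at = None
        return result
```

A caller can catch `CircuitOpenError` and return a cached or default fallback response instead of an error.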

Architectural Design Patterns for API Gateways

Implementing an API Gateway requires careful architectural decisions that balance flexibility, performance, and operational complexity. The single gateway pattern represents the simplest approach, where one gateway instance handles all incoming traffic and routes to all backend services. This pattern works well for smaller systems with limited traffic, offering straightforward deployment and management. However, as systems scale, a single gateway can become a bottleneck and single point of failure.

The gateway per client pattern deploys separate gateway instances optimized for different client types—web applications, mobile apps, and third-party integrations each get dedicated gateways. This pattern allows tailoring gateway behavior to specific client needs: mobile gateways might implement aggressive response compression and data minimization, while web gateways prioritize caching strategies. The tradeoff involves increased operational complexity and potential code duplication across gateway implementations.

| Pattern | Best For | Advantages | Challenges |
|---|---|---|---|
| Single Gateway | Small to medium systems with unified client needs | Simple deployment, centralized management, lower operational overhead | Potential bottleneck, limited customization per client type |
| Gateway per Client | Systems with diverse client requirements (mobile, web, IoT) | Optimized for specific clients, independent scaling, isolation | Increased complexity, potential duplication, more infrastructure |
| Backend for Frontend (BFF) | Complex UIs with specific data aggregation needs | UI-optimized APIs, reduced over-fetching, team ownership alignment | More codebases to maintain, coordination overhead |
| Layered Gateway | Large enterprises with multiple security zones | Defense in depth, clear security boundaries, flexible routing | Increased latency, complex troubleshooting, higher costs |

The Backend for Frontend (BFF) pattern takes client-specific gateways further by creating dedicated backend services that aggregate and transform data specifically for each frontend experience. Rather than a generic gateway that serves all clients, each BFF is built and maintained by the team responsible for the corresponding frontend. This pattern aligns with organizational boundaries and allows frontend teams to iterate on their APIs without coordinating with other teams, though it requires accepting some duplication across BFFs.

"Choosing the right gateway pattern isn't about finding the universally best approach—it's about matching architectural decisions to your organization's scale, team structure, and operational capabilities."

Synchronous versus Asynchronous Communication

API Gateways traditionally handle synchronous request-response communication, but modern implementations increasingly support asynchronous patterns. For long-running operations, the gateway can accept a request, immediately return an acknowledgment with a tracking identifier, then notify the client when processing completes through webhooks or server-sent events. This asynchronous approach prevents client timeouts and improves resource utilization, because connections are not held open while processing runs.
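
This is a minimal sketch of the accept-then-acknowledge flow. To keep it short, it swaps in client polling of a status URL for the webhook or server-sent-event notification described above. The `AsyncJobs` class, the `/jobs/{id}` path, and the response bodies are hypothetical.

```python
import uuid
from concurrent.futures import Future, ThreadPoolExecutor
from typing import Any, Callable


class AsyncJobs:
    """Accept long-running work, return 202 at once, and expose a status endpoint."""

    def __init__(self, workers: int = 4) -> None:
        self._executor = ThreadPoolExecutor(max_workers=workers)
        self._jobs: dict[str, Future] = {}

    def submit(self, fn: Callable[..., Any], *args: Any) -> tuple[int, dict]:
        job_id = str(uuid.uuid4())
        self._jobs[job_id] = self._executor.submit(fn, *args)
        # 202 Accepted: the request is valid but processing has not finished yet.
        return 202, {"job_id": job_id, "status_url": f"/jobs/{job_id}"}

    def status(self, job_id: str) -> tuple[int, dict]:
        future = self._jobs.get(job_id)
        if future is None:
            return 404, {"error": "unknown job"}
        if not future.done():
            return 200, {"status": "pending"}
        error = future.exception()
        if error is not None:
            return 200, {"status": "failed", "error": str(error)}
        return 200, {"status": "done", "result": future.result()}
```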

WebSocket support enables bidirectional real-time communication through the gateway, allowing backend services to push updates to connected clients. The gateway manages WebSocket connections, handles authentication, and routes messages between clients and appropriate backend services. This capability is essential for building collaborative applications, live dashboards, and notification systems while maintaining the security and routing benefits of the gateway layer.

Service Mesh Integration Considerations

In environments using service mesh technologies like Istio or Linkerd, the relationship between the API Gateway and service mesh requires thoughtful design. The gateway typically sits at the edge of your infrastructure, handling north-south traffic from external clients, while the service mesh manages east-west traffic between internal services. This division of responsibilities prevents duplication while leveraging each technology's strengths.

The gateway can delegate certain responsibilities to the service mesh, such as mutual TLS for internal service communication or advanced traffic management for canary deployments. However, client-facing concerns like authentication, rate limiting, and API versioning remain gateway responsibilities since the service mesh focuses on service-to-service communication. Understanding this boundary prevents architectural confusion and ensures each layer handles appropriate concerns.

Technology Selection and Implementation Options

Selecting an API Gateway technology involves evaluating factors including performance requirements, feature needs, operational expertise, and ecosystem compatibility. Cloud-native managed services like AWS API Gateway, Azure API Management, and Google Cloud Endpoints offer fully managed solutions with minimal operational overhead. These services provide built-in scaling, monitoring, and security features, making them attractive for teams wanting to focus on business logic rather than infrastructure management.

Managed gateway services integrate seamlessly with their respective cloud platforms, offering native support for authentication services, logging systems, and deployment pipelines. However, they introduce vendor lock-in and may limit customization or lack specific features you need. Pricing is typically based on request volume, which can become expensive at scale and calls for careful capacity planning and optimization.

- 🔧 Kong Gateway provides a plugin-based architecture built on NGINX, offering extensive customization through Lua scripting and a rich ecosystem of community and enterprise plugins for authentication, rate limiting, and transformation

- 🔧 Tyk delivers a lightweight Go-based gateway with strong analytics capabilities, supporting REST, GraphQL, and gRPC protocols with built-in developer portal functionality

- 🔧 Apigee targets enterprise use cases with comprehensive API management features including monetization, analytics, and developer engagement tools, though with higher complexity and cost

- 🔧 Ambassador integrates natively with Kubernetes, leveraging Envoy proxy for high-performance routing and offering GitOps-friendly configuration management

- 🔧 Express Gateway provides a Node.js-based solution ideal for JavaScript-centric teams, offering flexibility through middleware and plugin extensibility

Open-source self-hosted options provide maximum flexibility and control, allowing customization to exact requirements without vendor constraints. Solutions like Kong, Tyk, and Envoy can be deployed on any infrastructure, from on-premises data centers to multi-cloud Kubernetes clusters. This flexibility comes with operational responsibility—your team must handle deployment, scaling, monitoring, security patching, and troubleshooting.

"The best API Gateway isn't determined by feature checklists or benchmark numbers—it's the one that aligns with your team's skills, your infrastructure constraints, and your specific architectural requirements."

Building Custom Gateway Solutions

Some organizations choose to build custom API Gateway solutions using frameworks and libraries rather than adopting existing products. This approach makes sense when requirements are highly specialized, when existing solutions don't support needed protocols or patterns, or when minimizing dependencies is critical. Building custom gateways using frameworks like Spring Cloud Gateway, Zuul, or Ocelot provides complete control but requires significant development and maintenance investment.

Custom gateway development should leverage existing libraries for common concerns rather than implementing everything from scratch. Use established authentication libraries, proven rate limiting algorithms, and well-tested HTTP client implementations. The custom code should focus on your unique routing logic, transformation requirements, or integration patterns—not reimplementing solved problems.

Performance and Scalability Characteristics

Different gateway technologies exhibit varying performance characteristics based on their implementation language, architecture, and optimization focus. NGINX-based gateways like Kong excel at raw throughput and connection handling, making them suitable for high-traffic scenarios. Go-based solutions like Tyk offer excellent performance with lower memory footprints, while Node.js gateways provide good performance for I/O-bound workloads with simpler deployment models.

Scalability strategies differ across technologies—some gateways scale primarily through horizontal replication with load balancing, while others support more sophisticated approaches like sharding by tenant or API. Understanding your traffic patterns and growth projections helps select a gateway that scales appropriately. Consider not just peak throughput requirements but also scaling velocity—how quickly can you add capacity when traffic spikes occur?

Security Implementation Strategies

Security represents one of the most critical responsibilities of an API Gateway, requiring multiple layers of defense to protect backend services and sensitive data. Authentication mechanisms verify the identity of clients making requests, with common approaches including API keys, JWT tokens, OAuth 2.0, and mutual TLS. The gateway should support multiple authentication schemes simultaneously, allowing different client types to use appropriate methods while maintaining consistent security enforcement.

API key authentication provides simplicity for service-to-service communication and third-party integrations. The gateway validates keys against a registry, then associates authenticated requests with specific clients or applications for rate limiting and auditing. While straightforward to implement, API keys lack built-in expiration and require careful key rotation procedures to maintain security over time.

JWT (JSON Web Token) authentication offers stateless verification where the gateway validates token signatures without requiring database lookups or external service calls. This approach scales efficiently since the gateway can verify tokens independently, though it requires careful key management and consideration of token revocation strategies. The gateway can extract claims from validated tokens and pass identity information to backend services through headers or token forwarding.

| Security Mechanism | Implementation Approach | Use Cases | Considerations |
|---|---|---|---|
| API Keys | Header or query parameter validation against key registry | Service-to-service, third-party integrations | Key rotation procedures, secure storage, limited metadata |
| JWT Tokens | Signature verification, claim extraction, expiration checking | User authentication, mobile apps, SPAs | Key management, revocation strategy, token size |
| OAuth 2.0 | Authorization server integration, token introspection | Third-party authorization, delegated access | Flow selection, scope management, refresh tokens |
| Mutual TLS | Certificate validation, client certificate verification | High-security environments, B2B integrations | Certificate lifecycle, performance impact, complexity |

"Security isn't a feature you add to an API Gateway—it's the foundation upon which every other capability must be built, requiring defense in depth across authentication, authorization, encryption, and monitoring."

Authorization and Access Control

While authentication verifies identity, authorization determines permissions—what authenticated clients are allowed to do. The gateway can implement role-based access control (RBAC), checking whether the authenticated user has roles required to access specific APIs or operations. More sophisticated attribute-based access control (ABAC) evaluates policies considering user attributes, resource properties, and environmental factors like time of day or request origin.
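
An embedded RBAC check can be as small as a policy table consulted after authentication. The routes, methods, and role names below are hypothetical. The sketch denies by default when no rule matches, a common safe choice.

```python
# Hypothetical policy: (HTTP method, path prefix) -> roles allowed to call it.
ROUTE_POLICY: dict[tuple[str, str], frozenset[str]] = {
    ("GET", "/api/orders"): frozenset({"customer", "support", "admin"}),
    ("DELETE", "/api/orders"): frozenset({"admin"}),
}


def is_authorized(method: str, path: str, roles: set[str]) -> bool:
    """Role-based check run after authentication; denies anything no rule covers."""
    for (rule_method, prefix), allowed in ROUTE_POLICY.items():
        if rule_method == method and (path == prefix or path.startswith(prefix + "/")):
            return not allowed.isdisjoint(roles)
    return False  # default deny: unlisted routes and methods are rejected with 403
```

ABAC would replace the role-set lookup with a policy function that also receives resource and request attributes.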

Authorization logic can be implemented directly in the gateway or delegated to specialized policy engines. Embedded authorization provides lower latency since decisions happen without external calls, but requires deploying policy updates with gateway configuration changes. External policy engines like Open Policy Agent (OPA) separate authorization logic from gateway deployment, allowing security teams to update policies independently while adding network latency for policy evaluation.

Encryption and Data Protection

The gateway must enforce encryption for data in transit, terminating TLS connections from clients and establishing secure connections to backend services. Modern implementations should support TLS 1.3, strong cipher suites, and proper certificate validation. The gateway can handle certificate management, including automatic renewal through services like Let's Encrypt, relieving backend services of this operational burden.

For highly sensitive data, the gateway can implement field-level encryption, encrypting specific response fields before returning them to clients. This approach ensures that even if transport encryption is compromised, sensitive data remains protected. The gateway might also implement data masking, redacting or obfuscating sensitive information based on client permissions—showing full credit card numbers to authorized administrators while displaying only the last four digits to regular users.
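
This sketch covers the masking example from the paragraph above. The `card_number` field name and the rule that only an `admin` role sees full numbers are hypothetical.

```python
def mask_card_number(card_number: str) -> str:
    """Keep only the last four digits, e.g. for display to non-privileged users."""
    digits = [c for c in card_number if c.isdigit()]
    return "*" * max(len(digits) - 4, 0) + "".join(digits[-4:])


def mask_response(body: dict, roles: set[str]) -> dict:
    # Hypothetical rule: only the "admin" role may see full card numbers.
    if "admin" in roles or "card_number" not in body:
        return body
    masked = dict(body)  # shallow copy so the original payload is untouched
    masked["card_number"] = mask_card_number(body["card_number"])
    return masked
```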

Threat Protection and Attack Mitigation

API Gateways serve as the first line of defense against various attacks, implementing protections that shield backend services from malicious traffic. Input validation ensures requests conform to expected schemas, rejecting malformed or suspicious inputs before they reach application code. The gateway can validate request sizes, enforce content type restrictions, and check for common injection attack patterns.
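
A minimal sketch of gateway-side input validation checks three things: body size, content type, and a basic JSON structure check. The 1 MiB limit and required-field check are hypothetical stand-ins for a full schema validator. A real gateway would also enforce the size limit while reading the body rather than after.

```python
import json
from typing import Optional

MAX_BODY_BYTES = 1_048_576                  # hypothetical 1 MiB limit
ALLOWED_CONTENT_TYPES = {"application/json"}


def validate_request(content_type: Optional[str], body: bytes,
                     required_fields: set[str]) -> Optional[tuple[int, str]]:
    """Return (status, reason) to reject the request, or None to forward it."""
    if len(body) > MAX_BODY_BYTES:
        return 413, "payload too large"
    # Ignore parameters such as "; charset=utf-8" when checking the media type.
    media_type = (content_type or "").split(";")[0].strip().lower()
    if media_type not in ALLOWED_CONTENT_TYPES:
        return 415, "unsupported media type"
    try:
        payload = json.loads(body)
    except ValueError:  # covers invalid JSON and undecodable bytes
        return 400, "malformed JSON"
    if not isinstance(payload, dict):
        return 400, "expected a JSON object"
    missing = required_fields - set(payload)
    if missing:
        return 400, "missing fields: " + ", ".join(sorted(missing))
    return None
```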

DDoS protection mechanisms limit the impact of distributed denial-of-service attacks through rate limiting, IP blocking, and traffic pattern analysis. The gateway can detect and block requests from suspicious sources, implement CAPTCHA challenges for suspicious traffic, and integrate with dedicated DDoS mitigation services for large-scale attacks. Geographic restrictions can block traffic from regions where you don't operate, reducing exposure to certain attack vectors.

Performance Optimization Techniques

Optimizing API Gateway performance requires addressing multiple dimensions including latency, throughput, and resource efficiency. Caching strategies represent one of the most impactful optimizations, storing frequently accessed responses at the gateway to serve subsequent requests without invoking backend services. The gateway can implement HTTP caching semantics, respecting cache-control headers while allowing override configuration for specific routes or clients.

Response caching works best for data that changes infrequently or where slight staleness is acceptable. Product catalogs, reference data, and configuration information are excellent caching candidates. The gateway can cache based on full URLs, specific query parameters, or custom cache keys derived from headers or request attributes. Implementing cache invalidation strategies ensures stale data doesn't persist—the gateway can purge cache entries when backend services indicate data has changed or after configured time-to-live periods expire.
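
This sketch shows an in-memory cache with three of the ideas above: custom cache keys built from selected query parameters, TTL expiry, and explicit invalidation. It does not interpret `Cache-Control` headers. The class and method names are hypothetical.

```python
import time
from typing import Callable, Optional


class ResponseCache:
    """In-memory TTL cache keyed on path plus selected query parameters."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic) -> None:
        self.ttl = ttl_seconds
        self._clock = clock
        self._entries: dict[tuple, tuple[float, bytes]] = {}

    @staticmethod
    def make_key(path: str, query: dict[str, str], vary_on: tuple[str, ...]) -> tuple:
        # Only listed parameters shape the key, so e.g. tracking parameters share one entry.
        return (path,) + tuple((name, query.get(name)) for name in sorted(vary_on))

    def get(self, key: tuple) -> Optional[bytes]:
        entry = self._entries.get(key)
        if entry is None:
            return None
        expires_at, body = entry
        if self._clock() >= expires_at:
            del self._entries[key]  # lazily evict the stale entry
            return None
        return body

    def put(self, key: tuple, body: bytes) -> None:
        self._entries[key] = (self._clock() + self.ttl, body)

    def invalidate_path(self, path: str) -> None:
        # Purge every cached variant of a path, e.g. when a backend reports a change.
        for key in [k for k in self._entries if k[0] == path]:
            del self._entries[key]
```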

Connection pooling to backend services reduces the overhead of establishing new connections for each request. The gateway maintains pools of persistent connections to frequently accessed services, reusing them across requests. Proper pool sizing balances resource utilization against connection availability—too few connections create bottlenecks during traffic spikes, while excessive connections waste memory and file descriptors.

"Performance optimization isn't about making everything faster—it's about identifying bottlenecks through measurement, then applying targeted improvements where they deliver the greatest impact on user experience."

Request Batching and Aggregation

When clients need data from multiple backend services, the gateway can batch and parallelize requests rather than executing them sequentially. This aggregation reduces total latency by executing independent requests concurrently, waiting only for the slowest response rather than the sum of all response times. The gateway can implement sophisticated aggregation logic, including partial success handling where some backend failures don't prevent returning available data.
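
Parallel aggregation with partial success maps naturally onto `asyncio.gather(..., return_exceptions=True)`. This sketch assumes each backend call is an async function with no arguments. The response shape with `data` and `errors` sections is a hypothetical convention.

```python
import asyncio
from collections.abc import Awaitable, Callable
from typing import Any


async def aggregate(
    fetchers: dict[str, Callable[[], Awaitable[Any]]], timeout: float = 2.0
) -> dict[str, Any]:
    names = list(fetchers)
    # Start every backend call at once; total latency tracks the slowest call, not the sum.
    results = await asyncio.gather(
        *(asyncio.wait_for(fetchers[name](), timeout) for name in names),
        return_exceptions=True,  # a failing backend must not cancel the others
    )
    response: dict[str, Any] = {"data": {}, "errors": []}
    for name, result in zip(names, results):
        if isinstance(result, BaseException):
            # Partial success: report which section is missing instead of failing the request.
            response["errors"].append({"source": name, "error": type(result).__name__})
        else:
            response["data"][name] = result
    return response
```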

GraphQL support at the gateway layer enables efficient data fetching where clients specify exactly what data they need. The gateway translates GraphQL queries into REST or gRPC calls to backend services, aggregates responses, and returns precisely the requested data. This approach eliminates over-fetching and under-fetching problems common in traditional REST APIs, reducing bandwidth usage and improving client performance.

Load Balancing and Traffic Distribution

The gateway distributes incoming requests across multiple backend service instances using various load balancing algorithms. Round-robin distribution provides simple, even distribution across instances, while least-connections routing directs traffic to instances handling the fewest active requests. More sophisticated algorithms consider instance health, response times, or geographic proximity when making routing decisions.
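
The two basic algorithms named above can be sketched as follows. Instance names are hypothetical, and health checking is left out for brevity.

```python
import itertools


class RoundRobin:
    """Hand out instances in a fixed rotation."""

    def __init__(self, instances: list[str]) -> None:
        self._cycle = itertools.cycle(instances)

    def pick(self) -> str:
        return next(self._cycle)


class LeastConnections:
    """Send each request to the instance with the fewest in-flight requests."""

    def __init__(self, instances: list[str]) -> None:
        self.active = {instance: 0 for instance in instances}

    def pick(self) -> str:
        # min() returns the first minimum, so ties go to the earliest-listed instance.
        instance = min(self.active, key=self.active.__getitem__)
        self.active[instance] += 1
        return instance

    def release(self, instance: str) -> None:
        # Call when the upstream response completes (or fails).
        self.active[instance] -= 1
```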

Sticky sessions maintain affinity between clients and specific backend instances, routing subsequent requests from a client to the same instance. This pattern benefits stateful services or those using local caching, though it complicates scaling and failover. The gateway can implement session affinity through cookies, consistent hashing on client identifiers, or custom routing logic based on request attributes.
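
Session affinity through consistent hashing can be sketched with a sorted hash ring. Each client ID maps to the next ring point clockwise. Because the ring is only partially reshuffled when instances change, removing one instance moves only the clients that were on it. The virtual-node count and instance names are hypothetical. SHA-256 is used here only for distribution, not security.

```python
import bisect
import hashlib


class ConsistentHashRing:
    """Map client identifiers to backend instances; changing the instance set
    remaps only the clients on the affected part of the ring."""

    def __init__(self, instances: list[str], vnodes: int = 100) -> None:
        ring = []
        for instance in instances:
            for i in range(vnodes):  # virtual nodes spread each instance around the ring
                ring.append((self._hash(f"{instance}#{i}"), instance))
        ring.sort()
        self._points = [point for point, _ in ring]
        self._owners = [owner for _, owner in ring]

    @staticmethod
    def _hash(value: str) -> int:
        return int.from_bytes(hashlib.sha256(value.encode()).digest()[:8], "big")

    def instance_for(self, client_id: str) -> str:
        # First ring point clockwise from the client's hash, wrapping at the end.
        idx = bisect.bisect(self._points, self._hash(client_id)) % len(self._points)
        return self._owners[idx]
```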

Compression and Protocol Optimization

Response compression reduces bandwidth usage and improves performance over slow networks. The gateway can automatically compress responses using gzip or Brotli based on client capabilities indicated in Accept-Encoding headers. Compression particularly benefits text-based formats like JSON and XML, often achieving 70-90% size reduction. The gateway should skip compression for already-compressed formats like images or videos to avoid wasting CPU cycles.
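
This sketch implements the negotiation logic for gzip only, since Brotli needs a third-party package in Python. It honors `q=0` refusals and the `*` wildcard in `Accept-Encoding` and skips already-compressed media types. The 1 KiB minimum size is a hypothetical threshold.

```python
import gzip

# Media types that are already compressed; recompressing them wastes CPU.
ALREADY_COMPRESSED = ("image/", "video/", "audio/", "application/zip", "application/gzip")


def _quality(params: str) -> float:
    # Parse the optional ";q=..." weight; a missing weight means 1.0.
    for param in params.split(";"):
        name, _, value = param.strip().partition("=")
        if name.strip().lower() == "q":
            try:
                return float(value)
            except ValueError:
                return 0.0
    return 1.0


def accepts_gzip(accept_encoding: str) -> bool:
    weights = {}
    for item in accept_encoding.split(","):
        coding, _, params = item.partition(";")
        if coding.strip():
            weights[coding.strip().lower()] = _quality(params)
    # An explicit "gzip" entry overrides the "*" wildcard; q=0 means "not acceptable".
    return weights.get("gzip", weights.get("*", 0.0)) > 0


def maybe_compress(body: bytes, content_type: str, accept_encoding: str,
                   min_size: int = 1024) -> tuple[bytes, dict[str, str]]:
    if (len(body) < min_size
            or content_type.lower().startswith(ALREADY_COMPRESSED)
            or not accepts_gzip(accept_encoding)):
        return body, {}
    # Vary tells shared caches that the response depends on Accept-Encoding.
    return gzip.compress(body), {"Content-Encoding": "gzip", "Vary": "Accept-Encoding"}
```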

HTTP/2 support enables multiplexing multiple requests over a single connection, eliminating HTTP-level head-of-line blocking (blocking at the TCP layer remains; HTTP/3 over QUIC addresses that) and reducing connection overhead. The gateway can accept HTTP/2 connections from clients while using HTTP/1.1 for backend communication, or vice versa, providing protocol translation that optimizes each segment. HTTP/2 also defined server push for sending resources before clients request them, but major browsers such as Chrome have since dropped support for it, so it is rarely worth relying on.

Monitoring, Logging, and Observability

Comprehensive observability is essential for operating API Gateways in production, providing visibility into system behavior, performance characteristics, and potential issues. Metrics collection tracks quantitative measurements including request rates, latency percentiles, error rates, and throughput. The gateway should expose metrics in standard formats compatible with monitoring systems like Prometheus, Datadog, or CloudWatch, enabling integration with existing observability infrastructure.

Key metrics to monitor include request count by route and status code, response time percentiles (p50, p95, p99), error rates by type, and upstream service health. Infrastructure metrics like CPU utilization, memory usage, and network throughput help identify resource constraints before they impact performance. Tracking these metrics over time reveals trends, capacity planning needs, and anomalies that might indicate problems.

- 📊 Request rate and throughput trends identify traffic patterns and growth trajectories

- 📊 Latency percentiles reveal performance characteristics and detect degradation

- 📊 Error rates by category distinguish between client errors and backend failures

- 📊 Cache hit rates demonstrate caching effectiveness and optimization opportunities

- 📊 Rate limiting metrics show throttling frequency and potential capacity issues

"Observability isn't just about collecting data—it's about transforming that data into actionable insights that enable proactive problem resolution and continuous improvement."

Structured Logging Implementation

Structured logging captures detailed information about each request in machine-parseable formats like JSON, enabling sophisticated analysis and troubleshooting. The gateway should log request details including timestamps, client identifiers, requested routes, response codes, and processing duration. Including correlation IDs that flow through the entire request chain enables tracing requests across multiple services and identifying where issues occur in distributed transactions.
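
With Python's standard `logging` module, structured access logs need only a custom formatter. The field names in `REQUEST_FIELDS` and the logger name are hypothetical.

```python
import json
import logging
import time

# Hypothetical request fields the access log records when they are supplied via `extra=`.
REQUEST_FIELDS = ("correlation_id", "client_id", "route", "status", "duration_ms")


class JsonFormatter(logging.Formatter):
    """Render each log record as one JSON object per line."""

    def format(self, record: logging.LogRecord) -> str:
        entry = {
            "timestamp": time.strftime("%Y-%m-%dT%H:%M:%SZ", time.gmtime(record.created)),
            "level": record.levelname,
            "message": record.getMessage(),
        }
        for field in REQUEST_FIELDS:
            if hasattr(record, field):
                entry[field] = getattr(record, field)
        return json.dumps(entry)


access_log = logging.getLogger("gateway.access")
handler = logging.StreamHandler()
handler.setFormatter(JsonFormatter())
access_log.addHandler(handler)
access_log.setLevel(logging.INFO)
access_log.info("request completed", extra={
    "correlation_id": "c-123", "client_id": "partner-a",
    "route": "/api/orders", "status": 200, "duration_ms": 41.7,
})
```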

Log aggregation systems like ELK Stack (Elasticsearch, Logstash, Kibana), Splunk, or Loki collect logs from all gateway instances, providing centralized search and analysis capabilities. Proper log management balances detail against volume—logging every request with full payloads quickly generates overwhelming data volumes and costs. Implement sampling strategies that capture all errors and a representative sample of successful requests, with the ability to temporarily increase sampling when investigating issues.
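
The sampling policy described above can be expressed in a few lines. The 5% default rate is a hypothetical value. Treating every status of 400 or higher as an error is an assumption; some teams sample 4xx responses too.

```python
import random
from typing import Callable


class LogSampler:
    """Keep every error log but only a fraction of successful-request logs."""

    def __init__(self, success_rate: float = 0.05, rng: Callable[[], float] = random.random) -> None:
        self.success_rate = success_rate  # raise temporarily (e.g. to 1.0) while investigating
        self._rng = rng

    def should_log(self, status: int) -> bool:
        if status >= 400:  # treats both client (4xx) and server (5xx) errors as errors
            return True
        return self._rng() < self.success_rate
```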

Distributed Tracing Integration

Distributed tracing follows requests as they flow through the gateway and downstream services, providing end-to-end visibility into request processing. The gateway integrates with tracing systems like Jaeger, Zipkin, or AWS X-Ray by generating trace spans for each request and propagating trace context to backend services. This integration reveals latency contributions from each service, identifies performance bottlenecks, and helps diagnose complex issues in distributed systems.

Trace data complements metrics and logs by showing the specific path each request takes through your system, including parallel calls, retries, and fallbacks. When investigating performance problems or errors, traces provide the detailed context needed to understand what happened and why. The gateway should support standard trace context propagation formats like W3C Trace Context to ensure compatibility across different services and tracing systems.

Health Checks and Alerting

The gateway should expose health check endpoints that monitoring systems can poll to verify operational status. Basic health checks confirm the gateway process is running and responding, while more sophisticated checks verify connectivity to critical dependencies like databases, caches, or authentication services. Load balancers use health checks to route traffic only to healthy gateway instances, automatically removing failing instances from rotation.
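
A health endpoint handler can be sketched as a set of dependency probes folded into one HTTP status. The probe names are hypothetical, and real probes should carry their own timeouts. Many load balancers treat non-2xx responses as unhealthy, though the exact rule is configurable.

```python
from typing import Callable


def health_report(checks: dict[str, Callable[[], bool]]) -> tuple[int, dict]:
    """Run dependency probes and map the outcome to an HTTP status for load balancers."""
    results = {}
    for name, probe in checks.items():
        try:
            results[name] = "up" if probe() else "down"
        except Exception:
            results[name] = "down"  # a probe that crashes counts as a failed dependency
    healthy = all(state == "up" for state in results.values())
    # 503 signals the load balancer to pull this instance from rotation.
    return (200 if healthy else 503), {"status": "up" if healthy else "down", "checks": results}
```

Calling it with no probes gives the basic "process is responding" check, which always reports healthy.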

Alerting rules notify operations teams when metrics exceed thresholds or anomalies are detected. Configure alerts for high error rates, elevated latency, approaching rate limits, and infrastructure resource exhaustion. Effective alerting balances sensitivity and specificity—too many false alarms cause alert fatigue, while too few alerts allow problems to escalate before detection. Implement alert escalation policies that notify appropriate team members based on severity and time of day.

Deployment Strategies and Operational Considerations

Deploying API Gateways requires careful planning around high availability, disaster recovery, and change management. High availability architectures deploy multiple gateway instances across different availability zones or regions, ensuring that individual instance or infrastructure failures don't cause service outages. Load balancers distribute traffic across gateway instances, automatically routing around failures while health checks detect and remove unhealthy instances.

Stateless gateway design simplifies high availability by allowing any instance to handle any request without requiring session affinity or state synchronization. Configuration, rate limiting counters, and cache data should be stored in shared external systems such as Redis rather than in local instance memory. This approach enables seamless scaling and failover: new instances can be added at any time, and instances can be removed after draining in-flight requests, without requiring state migration.

Geographic distribution deploys gateway instances in multiple regions close to users, reducing latency and providing disaster recovery capabilities. Global load balancing routes users to their nearest gateway instance based on geography or latency measurements. When regional failures occur, traffic automatically fails over to healthy regions. This architecture requires careful consideration of data consistency, especially for rate limiting and caching: when a network partition separates regions, a distributed system must choose between keeping shared state consistent and remaining available.

Configuration Management and GitOps

Configuration as code treats gateway configuration as source code, storing it in version control systems and applying software development practices like code review, testing, and automated deployment. This approach provides audit trails showing who changed what and when, enables rolling back problematic changes, and supports environment-specific configurations through templating or overlays.

GitOps workflows automate configuration deployment by monitoring Git repositories for changes and automatically applying them to gateway instances. When developers merge configuration changes to the main branch, automation systems validate the configuration, run tests, and deploy to production following defined promotion strategies. This automation reduces manual errors, speeds up deployments, and ensures consistency across environments.
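At the core of a GitOps reconciler is a diff between the desired state declared in Git and the live state on the gateway. GitOps tools such as Argo CD and Flux repeatedly compute and apply that diff. The sketch below shows only the diffing step for a hypothetical route table keyed by path. It does not reflect how any particular tool represents state.

```python
from typing import Dict, List, Tuple

Route = Dict[str, str]  # hypothetical shape, e.g. {"upstream": "orders:8080"}

def plan_changes(desired: Dict[str, Route], live: Dict[str, Route]) -> List[Tuple[str, str]]:
    """Return the (action, path) pairs needed to make `live` match `desired`."""
    actions: List[Tuple[str, str]] = []
    for path in sorted(desired.keys() | live.keys()):
        if path not in live:
            actions.append(("create", path))
        elif path not in desired:
            actions.append(("delete", path))   # drift: route exists only on the gateway
        elif desired[path] != live[path]:
            actions.append(("update", path))
    return actions
```

An empty plan means the gateway already matches Git. A non-empty plan can be applied automatically or surfaced for review, depending on the promotion strategy.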

Blue-Green and Canary Deployments

Safe gateway updates require deployment strategies that minimize risk and enable rapid rollback if issues occur. Blue-green deployments maintain two complete environments—the current production version (blue) and the new version (green). After deploying and validating the green environment, traffic switches from blue to green instantaneously. If problems emerge, switching back to blue provides immediate rollback without requiring redeployment.

Canary deployments gradually shift traffic to new gateway versions, starting with a small percentage of requests while monitoring for errors or performance degradation. If metrics remain healthy, the canary percentage increases incrementally until all traffic uses the new version. This gradual rollout limits the blast radius of potential issues: problems affect only the canary percentage of users rather than the entire user base. Automated canary analysis can detect anomalies and automatically halt or roll back deployments without human intervention.
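A canary rollout has two parts: weighted routing between the versions, and a loop that increases the canary's share while a health check keeps passing. The sketch below models both. `error_rate_at` is a hypothetical stand-in for automated canary analysis, and the step percentages and error budget are illustrative values.

```python
import random
from typing import Callable, List, Tuple

def pick_version(canary_pct: float, rng: random.Random) -> str:
    """Weighted routing: send roughly `canary_pct`% of requests to the canary."""
    return "canary" if rng.random() * 100 < canary_pct else "stable"

def run_canary(steps: List[int], error_rate_at: Callable[[int], float],
               max_error_rate: float) -> Tuple[str, int]:
    """Raise the canary share step by step, stopping at the first unhealthy step.

    `error_rate_at(pct)` should return the canary's observed error rate while
    it serves `pct`% of traffic.
    """
    for pct in steps:
        if error_rate_at(pct) > max_error_rate:
            return ("rolled_back", pct)   # all traffic returns to the stable version
    return ("promoted", 100)
```

For example, `run_canary([5, 25, 50, 100], analysis, 0.01)` promotes only if every step stays within a 1% error budget. Otherwise it reports the step at which the rollout stopped.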

Capacity Planning and Scaling

Effective capacity planning ensures the gateway can handle current and projected traffic without over-provisioning resources. Analyze historical traffic patterns to identify peak usage periods, growth trends, and seasonal variations. Load testing validates that the gateway meets performance requirements under expected and peak loads, revealing bottlenecks before they impact production users.
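Capacity planning often comes down to simple arithmetic: peak load plus headroom, divided by the per-instance throughput measured in load tests. The sketch below adds one assumption, rounding up to a multiple of the number of availability zones so each zone gets the same instance count. The default headroom and zone count are illustrative.

```python
import math

def instances_needed(peak_rps: float, per_instance_rps: float,
                     headroom: float = 0.3, zones: int = 3) -> int:
    """Instances required to serve `peak_rps` with `headroom` spare capacity,
    rounded up so every availability zone runs the same number."""
    raw = peak_rps * (1 + headroom) / per_instance_rps
    per_zone = math.ceil(raw / zones)
    return per_zone * zones
```

With hypothetical figures of 12,000 requests per second at peak, 2,500 requests per second per instance, and 30% headroom, the raw need is 6.24 instances. Spread over three zones, that becomes 9 instances.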

Horizontal scaling adds or removes gateway instances based on traffic demand, while vertical scaling increases resources (CPU, memory) allocated to each instance. Cloud deployments can leverage auto-scaling that automatically adjusts capacity based on metrics like CPU utilization or request rate. Configure auto-scaling policies with appropriate thresholds and scaling velocity—scale up quickly to handle traffic spikes but scale down gradually to avoid thrashing.
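The "scale up quickly, scale down gradually" policy can be sketched with the proportional rule documented for the Kubernetes Horizontal Pod Autoscaler, desired = ceil(current × observed / target). Scale-down is then damped by using the highest recommendation from a recent window. This is a simplified illustration, not the HPA's implementation. The window length and CPU figures are assumptions.

```python
import math
from collections import deque
from typing import Deque

class AsymmetricAutoscaler:
    """Replica recommendations that scale up at once but scale down cautiously."""

    def __init__(self, target_cpu: float, min_replicas: int, max_replicas: int,
                 down_window: int = 5):
        self.target_cpu = target_cpu
        self.min_replicas = min_replicas
        self.max_replicas = max_replicas
        # Most recent raw recommendations, used to damp scale-down decisions.
        self._recent: Deque[int] = deque(maxlen=down_window)

    def desired(self, current: int, observed_cpu: float) -> int:
        # Proportional rule: replicas needed to bring utilization back to target.
        raw = math.ceil(current * observed_cpu / self.target_cpu)
        raw = max(self.min_replicas, min(self.max_replicas, raw))
        self._recent.append(raw)
        if raw >= current:
            return raw  # scale up (or hold) immediately
        # Scale down only as far as the highest recent recommendation allows,
        # so a brief dip in load does not remove capacity needed again soon.
        return min(current, max(self._recent))
```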

Common Implementation Challenges and Solutions

Implementing API Gateways introduces several recurring challenges that teams must address for successful deployments. One is the concern that the gateway itself becomes a performance or availability bottleneck. Every request does pay for an extra network hop, but a properly implemented gateway keeps that overhead small, typically single-digit milliseconds. When performance problems do appear, they usually stem from inefficient configuration, inadequate resources, or inappropriate use cases rather than inherent gateway limitations.

Mitigate bottleneck risks through horizontal scaling, performance optimization, and careful feature selection. Not every request needs to flow through all gateway capabilities—simple health checks or static assets might bypass authentication and rate limiting. Implement caching aggressively for appropriate use cases, and consider edge caching or CDN integration for static content. Monitor gateway performance continuously and scale proactively based on traffic trends rather than reacting to capacity exhaustion.
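Letting each route opt into only the features it needs is usually done by composing a per-route middleware chain. In the minimal sketch below, requests are plain dicts and responses are strings. The route paths, middleware names, and the placeholder rate limiter are hypothetical.

```python
from typing import Callable, Dict, List

Request = Dict[str, str]
Handler = Callable[[Request], str]
Middleware = Callable[[Request, Handler], str]

def authenticate(req: Request, nxt: Handler) -> str:
    # Reject requests without credentials before they reach the upstream.
    if "authorization" not in req:
        return "401 Unauthorized"
    return nxt(req)

def rate_limit(req: Request, nxt: Handler) -> str:
    # Placeholder: a real limiter would check a shared counter here.
    return nxt(req)

def build_pipeline(middlewares: List[Middleware], handler: Handler) -> Handler:
    """Compose middlewares around `handler`; they run in list order."""
    def wrap(mw: Middleware, nxt: Handler) -> Handler:
        return lambda req: mw(req, nxt)
    for mw in reversed(middlewares):
        handler = wrap(mw, handler)
    return handler

# Each route opts into only the features it needs.
ROUTES: Dict[str, Handler] = {
    "/healthz": build_pipeline([], lambda req: "200 OK"),
    "/orders": build_pipeline([authenticate, rate_limit], lambda req: "200 orders"),
}
```

The health check skips authentication and rate limiting entirely, so probes cost almost nothing even under heavy load.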

"The most common API Gateway failures don't stem from technology limitations—they result from treating the gateway as an afterthought rather than designing it as a critical architectural component deserving careful planning and operational excellence."

Managing Gateway Configuration Complexity

As systems grow, gateway configuration becomes increasingly complex with hundreds of routes, authentication schemes, rate limiting policies, and transformation rules. This complexity makes configuration difficult to understand, error-prone to modify, and challenging to test. Configuration sprawl occurs when each team adds their own routes and policies without coordination, creating inconsistent patterns and duplicated logic.

Address configuration complexity through modularization, standardization, and automation. Define standard patterns for common scenarios—authentication, rate limiting, CORS—and provide reusable templates that teams can apply consistently. Implement configuration validation that checks for common errors, conflicting rules, or security misconfigurations before deployment. Use configuration management tools that support composition, allowing complex configurations to be built from simpler, tested components.
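Pre-deployment validation can start as a small function that runs in CI against the route definitions. The sketch below checks three things the paragraph mentions: missing required fields, conflicting duplicate routes, and a common security misconfiguration (a route with no authentication that is not explicitly marked public). The field names (`path`, `method`, `upstream`, `auth`, `public`) are hypothetical.

```python
from typing import Dict, List, Set, Tuple

def validate_routes(routes: List[Dict]) -> List[str]:
    """Return human-readable problems found in a list of route definitions."""
    problems: List[str] = []
    seen: Set[Tuple[str, str]] = set()
    for i, route in enumerate(routes):
        missing = [f for f in ("path", "method", "upstream") if f not in route]
        if missing:
            problems.append(f"route {i}: missing {', '.join(missing)}")
            continue
        key = (route["method"].upper(), route["path"])
        if key in seen:
            problems.append(f"route {i}: duplicate {key[0]} {key[1]}")
        seen.add(key)
        # Security check: every route needs auth unless explicitly public.
        if not route.get("public", False) and not route.get("auth"):
            problems.append(f"route {i}: {key[0]} {key[1]} has no auth and is not marked public")
    return problems
```

A CI job can fail the merge whenever the returned list is non-empty.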

Handling Backward Compatibility

Evolving APIs while maintaining backward compatibility for existing clients presents ongoing challenges. The gateway can implement API versioning strategies that allow multiple API versions to coexist, routing requests to appropriate backend services based on version indicators in URLs, headers, or content negotiation. This approach enables backend evolution without breaking existing integrations, though it requires maintaining multiple API versions simultaneously.

Version deprecation policies define how long old versions remain supported and communicate timelines to API consumers. The gateway can track usage by version, identifying clients still using deprecated APIs and facilitating migration communications. Add the Sunset HTTP response header (RFC 8594) to tell clients when an API will be retired, and consider throttling or limiting features in deprecated versions to encourage upgrades while maintaining basic functionality.
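Both ideas fit naturally into a response hook. The hook counts calls per version and client, and adds a `Sunset` header (RFC 8594, whose value is an HTTP-date) to responses from versions scheduled for retirement. The retirement schedule, client IDs, and function name below are hypothetical.

```python
from collections import Counter
from datetime import datetime, timezone
from email.utils import format_datetime
from typing import Dict

# Hypothetical retirement schedule for deprecated API versions.
SUNSET_DATES = {"v1": datetime(2026, 6, 30, tzinfo=timezone.utc)}
usage_by_version: Counter = Counter()

def annotate_response(version: str, client_id: str, headers: Dict[str, str]) -> Dict[str, str]:
    """Record which client called which version and, for deprecated versions,
    add a Sunset header announcing the retirement date."""
    usage_by_version[(version, client_id)] += 1
    sunset = SUNSET_DATES.get(version)
    if sunset is not None:
        # usegmt=True produces the "..., DD Mon YYYY HH:MM:SS GMT" HTTP-date form.
        headers = {**headers, "Sunset": format_datetime(sunset, usegmt=True)}
    return headers
```

Querying `usage_by_version` for deprecated versions then gives the list of clients to contact before the sunset date.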

Debugging Distributed System Issues

Troubleshooting problems in systems with API Gateways requires different approaches than monolithic applications. Issues might originate in the gateway itself, downstream services, network infrastructure, or interactions between components. Correlation IDs that flow through the entire request chain enable tracing requests across multiple systems, connecting logs and traces from the gateway, backend services, and databases.

Implement comprehensive logging that captures sufficient context for debugging without overwhelming log storage. Log request identifiers, timestamps, client information, requested routes, response codes, and processing duration. When errors occur, include exception details, stack traces, and relevant request context. Structured logging enables searching across distributed logs to find all entries related to specific requests, users, or time periods.
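The two practices above combine naturally. The gateway reuses an incoming correlation ID or mints one, forwards it upstream, and writes one JSON object per request so that every field is searchable. The header name `X-Correlation-ID` is an assumption; standards such as the W3C Trace Context `traceparent` header serve the same purpose. The log field names are also illustrative.

```python
import json
import time
import uuid
from typing import Dict

CORRELATION_HEADER = "X-Correlation-ID"  # assumed header name; use one consistently

def ensure_correlation_id(headers: Dict[str, str]) -> Dict[str, str]:
    """Reuse the caller's correlation ID if present, otherwise mint one.

    Returns the headers the gateway forwards to the backend. (Real HTTP header
    names are case-insensitive; this plain dict lookup is not.)
    """
    if headers.get(CORRELATION_HEADER):
        return dict(headers)
    return {**headers, CORRELATION_HEADER: str(uuid.uuid4())}

def log_line(headers: Dict[str, str], route: str, status: int,
             started: float, client: str) -> str:
    """One structured JSON log entry per request."""
    return json.dumps({
        "ts": time.time(),
        "correlation_id": headers[CORRELATION_HEADER],
        "client": client,
        "route": route,
        "status": status,
        "duration_ms": round((time.monotonic() - started) * 1000, 1),
    })
```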

Testing Strategies for API Gateways

Thorough testing ensures gateway implementations meet functional requirements, perform adequately under load, and handle failure scenarios gracefully. Functional testing verifies that routing, authentication, authorization, and transformation logic work correctly. Test suites should cover happy paths, error conditions, and edge cases—verifying that valid requests route correctly, invalid authentication is rejected, rate limits are enforced, and error responses match specifications.

Integration testing validates the gateway's interaction with backend services, authentication providers, and external dependencies. These tests use real or realistic test doubles for dependencies, verifying that the gateway correctly handles various backend responses including success, errors, timeouts, and partial failures. Integration tests should validate retry logic, circuit breaker behavior, and fallback mechanisms that protect against cascading failures.
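Test doubles make retry behaviour easy to pin down. A fake backend fails a scripted number of times, and the test asserts both the final result and the number of calls made. The `FlakyBackend` double and `call_with_retries` helper below are hypothetical. Backoff between attempts is left out so the test runs instantly, though a production retry policy would normally include it.

```python
from typing import Callable

class FlakyBackend:
    """Test double: raises TimeoutError for the first `failures` calls, then succeeds."""

    def __init__(self, failures: int):
        self.failures = failures
        self.calls = 0

    def __call__(self) -> str:
        self.calls += 1
        if self.calls <= self.failures:
            raise TimeoutError("simulated upstream timeout")
        return "200 OK"

def call_with_retries(backend: Callable[[], str], attempts: int) -> str:
    """Retry on timeouts, up to `attempts` total tries; map exhaustion to a 504."""
    for _ in range(attempts):
        try:
            return backend()
        except TimeoutError:
            continue
    return "504 Gateway Timeout"
```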

Contract testing ensures compatibility between the gateway and backend services by validating that the gateway's requests match what services expect and that service responses match what the gateway expects. Tools like Pact enable consumer-driven contract testing where the gateway defines expectations for backend services, and services verify they meet those contracts. This approach catches breaking changes before deployment, reducing integration issues.

Performance and Load Testing

Performance testing validates that the gateway meets latency and throughput requirements under expected and peak loads. Use tools like JMeter, Gatling, or k6 to simulate realistic traffic patterns, gradually increasing load while monitoring response times, error rates, and resource utilization. Performance tests should identify the gateway's capacity limits, revealing bottlenecks and informing capacity planning decisions.

Load testing scenarios should reflect production traffic patterns including request distribution across routes, payload sizes, and client behaviors. Test with realistic authentication mechanisms rather than bypassing security, as authentication adds overhead that affects performance. Include think time between requests to simulate real user behavior rather than generating unrealistic sustained maximum throughput.
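Tools such as JMeter, Gatling, and k6 provide this behaviour out of the box. The Python sketch below only illustrates the traffic shape they aim for: a closed-loop virtual user that picks routes according to a production-like mix and pauses between requests. The route weights are hypothetical, and so is the choice of exponentially distributed think time. `send` and `sleep` are injected so the loop can be exercised without a real server.

```python
import random
from typing import Callable, Dict, List

def virtual_user(send: Callable[[str], int], route_weights: Dict[str, float],
                 iterations: int, think_time_s: float, rng: random.Random,
                 sleep: Callable[[float], None]) -> List[int]:
    """Closed-loop virtual user: choose a route by weight, send it, then pause."""
    routes = list(route_weights)
    weights = list(route_weights.values())
    statuses: List[int] = []
    for _ in range(iterations):
        route = rng.choices(routes, weights=weights, k=1)[0]
        statuses.append(send(route))
        # Randomized think time with mean `think_time_s` (an assumed distribution).
        sleep(rng.expovariate(1.0 / think_time_s))
    return statuses
```

Running many such users concurrently, with `time.sleep` and a real HTTP client plugged in, approximates realistic load better than a tight loop at maximum throughput.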

Chaos Engineering and Resilience Testing

Chaos engineering validates that the gateway handles failures gracefully by deliberately introducing problems and observing system behavior. Resilience testing might simulate backend service failures, network latency, partial outages, or resource exhaustion. These tests verify that circuit breakers activate appropriately, retries work correctly, and fallback mechanisms provide graceful degradation rather than cascading failures.

Tools like Chaos Monkey randomly terminate gateway instances to verify that redundancy and health checking work correctly. Network chaos tools introduce latency, packet loss, or connection failures to validate timeout configurations and error handling. Resource exhaustion tests verify that the gateway remains stable when memory, CPU, or file descriptors approach limits, failing gracefully rather than crashing or hanging.
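Dedicated chaos tools usually inject faults at the infrastructure or network layer. The same idea can also be applied in-process during tests, by wrapping an upstream call so that a configurable fraction of calls fail or slow down. The `with_faults` helper below is a hypothetical sketch of that approach and does not reproduce any chaos tool's interface.

```python
import random
import time
from typing import Callable

def with_faults(call: Callable[[], str], rng: random.Random,
                error_rate: float = 0.0, delay_rate: float = 0.0, delay_s: float = 0.0,
                sleep: Callable[[float], None] = time.sleep) -> Callable[[], str]:
    """Wrap an upstream call so a fraction of invocations fail or slow down.

    Intended for test or staging environments, to check that gateway timeouts,
    retries, and circuit breakers react as intended.
    """
    def faulty() -> str:
        if rng.random() < error_rate:
            raise ConnectionError("injected fault")   # simulated connection failure
        if rng.random() < delay_rate:
            sleep(delay_s)                            # simulated network latency
        return call()
    return faulty
```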

Real-World Implementation Examples

Examining practical implementation scenarios illustrates how API Gateway patterns solve real business problems. Consider an e-commerce platform with separate services for product catalog, inventory, orders, payments, and recommendations. Without a gateway, the mobile app would need to make individual requests to each service, managing authentication separately for each, handling different error formats, and dealing with varying response times.

Implementing an API Gateway provides a unified entry point where the mobile app authenticates once, then makes requests to a consistent API. The gateway handles authentication, routes requests to appropriate services, aggregates data from multiple services for complex queries, and implements caching for frequently accessed product information. Rate limiting prevents individual users from overwhelming the system, while monitoring provides visibility into API usage patterns and performance characteristics.
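Response aggregation for a product page can be sketched with `asyncio.gather`. The gateway calls several services concurrently, bounds each call with a timeout, and degrades to a partial response when one of them is slow or failing. The service names and the choice of `None` as the "unavailable" marker are assumptions made for illustration.

```python
import asyncio
from typing import Any, Awaitable, Callable, Dict

async def aggregate(fetchers: Dict[str, Callable[[], Awaitable[Any]]],
                    timeout_s: float) -> Dict[str, Any]:
    """Call several backend services concurrently and merge their results.

    A service that errors or exceeds `timeout_s` contributes None instead of
    failing the whole response (partial degradation).
    """
    async def guarded(fetch: Callable[[], Awaitable[Any]]) -> Any:
        try:
            return await asyncio.wait_for(fetch(), timeout_s)
        except Exception:   # includes asyncio timeouts
            return None
    names = list(fetchers)
    results = await asyncio.gather(*(guarded(fetchers[n]) for n in names))
    return dict(zip(names, results))
```

Because the calls run concurrently, the aggregated response takes roughly as long as the slowest service within the timeout, not the sum of all service latencies.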

The gateway enables the e-commerce platform to evolve backend services independently of the mobile app. The product catalog can be re-architected from a monolith into microservices without mobile app changes, because the gateway maintains the existing API contract while routing to the new backend services. New features like personalized recommendations can be added by creating new services and gateway routes without modifying existing functionality.

Multi-Tenant SaaS Application

A multi-tenant SaaS application uses the gateway to implement tenant isolation, routing, and resource allocation. The gateway extracts tenant identifiers from authentication tokens or request headers, then routes requests to tenant-specific service instances or includes tenant context in requests to shared services. This architecture enables offering different service tiers—premium tenants might bypass rate limiting or access additional features not available to free tier users.
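Tenant-aware routing can be sketched as a lookup from tenant ID to plan. Premium tenants may get a dedicated upstream, everyone else uses a shared pool, and the tenant context is forwarded either way. The header names, URLs, and `TenantPlan` type are hypothetical. In a real gateway the tenant would normally come from a verified token claim rather than a client-supplied header.

```python
from dataclasses import dataclass
from typing import Dict, Tuple

@dataclass(frozen=True)
class TenantPlan:
    tier: str                      # e.g. "free" or "premium"
    dedicated_upstream: str = ""   # empty means: use the shared pool

SHARED_POOL = "http://app-shared:8080"   # hypothetical shared upstream

def route_tenant(headers: Dict[str, str], plans: Dict[str, TenantPlan]) -> Tuple[str, Dict[str, str]]:
    """Resolve the tenant, choose an upstream, and forward tenant context."""
    tenant = headers.get("X-Tenant-ID")
    if tenant not in plans:
        raise PermissionError("unknown tenant")
    plan = plans[tenant]
    upstream = plan.dedicated_upstream or SHARED_POOL
    forwarded = {**headers, "X-Tenant-ID": tenant, "X-Tenant-Tier": plan.tier}
    return upstream, forwarded
```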

The gateway implements tenant-specific rate limiting, ensuring that one tenant's usage doesn't impact others. Monitoring and logging include tenant identifiers, enabling per-tenant usage analysis and billing. The gateway can implement tenant-specific customizations like custom domains, branded error pages, or specialized routing rules without requiring changes to backend services.

Mobile Backend with Offline Support

A mobile application with offline support leverages the gateway to implement synchronization, conflict resolution, and optimized data transfer. The gateway provides specialized endpoints for batch operations where the mobile app uploads multiple changes in a single request, reducing network overhead and improving performance over cellular connections. Response aggregation combines data from multiple services into single responses optimized for mobile bandwidth constraints.

The gateway implements delta synchronization, returning only changed data since the client's last sync rather than full datasets. Compression reduces payload sizes for bandwidth-constrained mobile networks. The gateway handles conflict resolution when offline changes conflict with server state, implementing policies like last-write-wins or custom conflict resolution logic based on business rules.
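The batch upload, last-write-wins resolution, and delta sync described above can be sketched over an in-memory store. Each record carries an update timestamp. The sketch assumes reasonably synchronized clocks, which is the usual caveat with last-write-wins, and it resolves exact timestamp ties in favour of the server copy. The record keys and values are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

@dataclass
class Record:
    value: str
    updated_at: int   # timestamp of the last modification

def apply_batch(store: Dict[str, Record], changes: List[Tuple[str, Record]]) -> List[str]:
    """Apply a batch of offline edits with last-write-wins; report each outcome."""
    outcomes: List[str] = []
    for key, incoming in changes:
        current = store.get(key)
        if current is None or incoming.updated_at > current.updated_at:
            store[key] = incoming
            outcomes.append("applied")
        else:
            outcomes.append("rejected: newer server version")
    return outcomes

def changes_since(store: Dict[str, Record], last_sync: int) -> Dict[str, Record]:
    """Delta sync: return only records modified after the client's last sync."""
    return {k: r for k, r in store.items() if r.updated_at > last_sync}
```

Returning a per-item outcome lets the client tell which offline edits were superseded without issuing one request per change.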

How do I choose between building a custom API Gateway and using an existing solution?

Start by evaluating your specific requirements against existing solutions' capabilities. If commercial or open-source gateways provide 80% of needed functionality, extending them through plugins or customization is typically more efficient than building from scratch. Build custom solutions only when requirements are truly unique, when existing solutions don't support critical protocols or patterns, or when minimizing dependencies is essential for your organization. Consider your team's expertise—building custom gateways requires deep knowledge of networking, security, and distributed systems. Factor in total cost of ownership including development time, ongoing maintenance, security patching, and opportunity cost of not focusing on core business features.

What's the best way to handle authentication and authorization in an API Gateway?

Implement authentication at the gateway to verify identity, then pass validated identity information to backend services through headers or tokens. Use JWTs (JSON Web Tokens) for stateless authentication that scales well, API keys for simple service-to-service communication, or OAuth 2.0 for third-party integrations. OAuth 2.0 access tokens are frequently issued as JWTs, so the two often work together. For authorization, decide whether to implement policies in the gateway or delegate them to backend services. Gateway authorization works well for coarse-grained access control (which APIs can this user access?), while fine-grained authorization (can this user edit this specific resource?) typically belongs in backend services with domain knowledge. Consider an external policy engine such as Open Policy Agent (OPA) for complex authorization logic that needs to be updated independently of gateway deployments.
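The standard library is enough to show what "verify, then forward identity" involves for an HS256-signed JWT. The gateway checks the signature and expiry, then hands the subject to the backend in a header. This is a teaching sketch only: production gateways should use a maintained JWT library and often verify asymmetric algorithms (such as RS256) against the identity provider's published keys. The `X-Authenticated-User` header name is hypothetical.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Any, Dict, Optional

def _b64url_decode(segment: str) -> bytes:
    # JWT segments are base64url without padding; restore it before decoding.
    return base64.urlsafe_b64decode(segment + "=" * (-len(segment) % 4))

def verify_hs256(token: str, secret: bytes, now: Optional[float] = None) -> Dict[str, Any]:
    """Verify an HS256-signed JWT and return its claims; raise ValueError if invalid."""
    parts = token.split(".")
    if len(parts) != 3:
        raise ValueError("malformed token")
    header_b64, payload_b64, sig_b64 = parts
    header = json.loads(_b64url_decode(header_b64))
    if header.get("alg") != "HS256":
        # Pin the algorithm: never accept "none" or an unexpected alg from the token.
        raise ValueError("unexpected alg")
    signing_input = f"{header_b64}.{payload_b64}".encode()
    expected = hmac.new(secret, signing_input, hashlib.sha256).digest()
    if not hmac.compare_digest(expected, _b64url_decode(sig_b64)):
        raise ValueError("bad signature")
    claims = json.loads(_b64url_decode(payload_b64))
    current = time.time() if now is None else now
    if "exp" in claims and current >= claims["exp"]:
        raise ValueError("token expired")
    return claims

def identity_headers(claims: Dict[str, Any]) -> Dict[str, str]:
    # Hypothetical header forwarded to backends; strip any client-supplied copy first.
    return {"X-Authenticated-User": str(claims["sub"])}
```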

How should I handle versioning when multiple API versions need to coexist?

Implement versioning through URL paths (api/v1/users, api/v2/users) for clear version identification and routing simplicity. Alternatively, use header-based versioning (Accept: application/vnd.company.v2+json) for cleaner URLs but more complex client implementation. The gateway routes requests to appropriate backend service versions based on version indicators. Maintain multiple versions simultaneously during transition periods, but establish deprecation policies that define support timelines and communicate them clearly to API consumers. Track usage by version to identify clients still using old versions and facilitate migration. Consider implementing automatic version upgrades for compatible changes while requiring explicit version selection for breaking changes.
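A version resolver that supports both schemes can check the URL path first, then the vendor media type in the `Accept` header, then fall back to a default. The precedence order, the default version, and the upstream URLs are assumptions. Some APIs prefer to reject requests that carry no version at all rather than fall back.

```python
import re
from typing import Dict, Optional, Tuple

PATH_VERSION = re.compile(r"^/api/(v\d+)(/.*)?$")
MEDIA_VERSION = re.compile(r"application/vnd\.company\.(v\d+)\+json")
UPSTREAMS = {"v1": "http://users-v1:8080", "v2": "http://users-v2:8080"}  # hypothetical

def resolve_version(path: str, headers: Dict[str, str], default: str = "v1") -> Tuple[str, str]:
    """Pick an API version from the path, else the Accept header, else `default`;
    return (version, upstream base URL)."""
    version: Optional[str] = None
    m = PATH_VERSION.match(path)
    if m:
        version = m.group(1)
    else:
        m = MEDIA_VERSION.search(headers.get("Accept", ""))
        if m:
            version = m.group(1)
    version = version or default
    if version not in UPSTREAMS:
        raise LookupError(f"unsupported API version {version}")
    return version, UPSTREAMS[version]
```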

What's the right approach for handling rate limiting across multiple gateway instances?

For accurate rate limiting across distributed gateway instances, use a shared data store like Redis to track request counts. Sliding window and token bucket algorithms avoid the boundary effect of fixed windows, where a client can send a full quota at the end of one window and another full quota at the start of the next. A token bucket still permits short bursts, but only up to its configured capacity. Simpler fixed-window counters (Redis INCR on a key that expires with the window) are cheaper to run, at the cost of that boundary inaccuracy. For extremely high-traffic scenarios, evaluate whether approximate rate limiting (where limits might be exceeded by small margins) is acceptable, since it allows local counting with periodic synchronization for better performance. Implement different rate limits for different client tiers or API operations, and expose rate limit information in response headers so clients can implement backoff strategies.
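The token bucket logic itself is small. Each client's bucket refills continuously at `rate` tokens per second up to `capacity`, and each request spends one token. In the sketch below, state lives in a local dict for clarity. Across several gateway instances, the same per-client state would live in a shared store such as Redis and be updated atomically (for example with a server-side Lua script). The class name and parameters are illustrative.

```python
import time
from typing import Callable, Dict, Tuple

class TokenBucketLimiter:
    """Per-client token bucket: `capacity` tokens, refilled at `rate` tokens/second."""

    def __init__(self, capacity: float, rate: float,
                 clock: Callable[[], float] = time.monotonic):
        self.capacity = capacity
        self.rate = rate
        self.clock = clock
        self._state: Dict[str, Tuple[float, float]] = {}   # client -> (tokens, last_ts)

    def allow(self, client: str) -> Tuple[bool, int]:
        """Return (allowed, remaining whole tokens) for one request."""
        now = self.clock()
        tokens, last = self._state.get(client, (self.capacity, now))
        # Refill for the time elapsed since this client's last request, capped.
        tokens = min(self.capacity, tokens + (now - last) * self.rate)
        allowed = tokens >= 1
        if allowed:
            tokens -= 1
        self._state[client] = (tokens, now)
        return allowed, int(tokens)
```

The remaining-token count is what a gateway would expose in a response header (for instance the common `X-RateLimit-Remaining` convention) so that clients can back off before they are rejected.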

How do I ensure my API Gateway doesn't become a single point of failure?

Deploy multiple gateway instances across different availability zones or regions, with load balancing distributing traffic across instances. Implement health checks that automatically remove failing instances from rotation. Design gateways to be stateless, storing configuration, rate limiting counters, and cache data in external systems rather than local instance memory, so that any instance can handle any request. Use auto-scaling to add capacity automatically during traffic spikes and remove instances during quiet periods. Implement circuit breakers and fallbacks that gracefully degrade functionality when dependencies fail instead of letting failures cascade. Monitor gateway health continuously and establish runbooks for common failure scenarios to enable rapid response when issues occur.
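A circuit breaker follows a simple state machine. It stays closed while calls succeed and opens after repeated failures, so later calls go straight to a fallback. After a cool-down it lets a trial call through (half-open). The single-threaded sketch below illustrates that pattern with illustrative thresholds. A production breaker would also limit concurrent trial calls while half-open.

```python
import time
from typing import Callable, Optional

class CircuitBreaker:
    """Closed -> open after `failure_threshold` consecutive failures; after
    `reset_timeout` seconds a trial call is allowed (half-open)."""

    def __init__(self, failure_threshold: int, reset_timeout: float,
                 clock: Callable[[], float] = time.monotonic):
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self.clock = clock
        self.failures = 0
        self.opened_at: Optional[float] = None

    @property
    def state(self) -> str:
        if self.opened_at is None:
            return "closed"
        if self.clock() - self.opened_at >= self.reset_timeout:
            return "half-open"
        return "open"

    def call(self, fn: Callable[[], str], fallback: Callable[[], str]) -> str:
        if self.state == "open":
            return fallback()            # fail fast without touching the backend
        try:
            result = fn()
        except Exception:
            self.failures += 1
            if self.state == "half-open" or self.failures >= self.failure_threshold:
                self.opened_at = self.clock()   # (re)open the circuit
            return fallback()
        self.failures = 0
        self.opened_at = None            # a success closes the circuit
        return result
```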

What metrics should I monitor to ensure gateway health and performance?

Track request rate and throughput to understand traffic patterns and capacity utilization. Monitor latency percentiles (p50, p95, p99) to detect performance degradation—focus on high percentiles since average latency masks problems affecting a subset of users. Measure error rates by category (4xx client errors, 5xx server errors) to distinguish between client problems and backend issues. Track upstream service health and response times to identify which backend services contribute to latency. Monitor infrastructure metrics including CPU utilization, memory usage, network throughput, and connection pool statistics. Measure cache hit rates to evaluate caching effectiveness. Track rate limiting metrics to identify clients approaching limits and potential capacity constraints. Set up alerts for anomalies in these metrics to enable proactive problem resolution.
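Two of these metrics are easy to compute from raw samples: latency percentiles and the error breakdown by status class. The sketch below uses the nearest-rank percentile definition. Monitoring systems that aggregate histograms typically estimate percentiles differently, so their values may not match exactly.

```python
import math
from collections import Counter
from typing import Dict, List, Sequence

def percentile(samples: Sequence[float], p: float) -> float:
    """Nearest-rank percentile: the smallest sample that is >= p% of all samples."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = math.ceil(p / 100 * len(ordered))
    return ordered[max(rank, 1) - 1]

def error_breakdown(status_codes: List[int]) -> Dict[str, float]:
    """Share of responses per status class, separating 4xx from 5xx errors."""
    classes = Counter(f"{code // 100}xx" for code in status_codes)
    total = len(status_codes)
    return {cls: count / total for cls, count in sorted(classes.items())}
```

For ten requests where nine take 11 to 16 ms and one takes 480 ms, the p50 is 13 ms but the p99 is 480 ms. The average alone would not show that one request in ten is very slow.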