How to Implement Blue-Green Deployment Strategy

Blue-green deployment diagram: two identical production environments (blue and green) with load balancer switching traffic for zero-downtime releases and fast rollback on failures.

In today's fast-paced digital landscape, downtime is not just an inconvenience—it's a business risk that can cost companies thousands of dollars per minute and erode customer trust. Organizations across industries face the constant challenge of updating their applications while maintaining seamless service availability. The pressure to deliver new features rapidly while ensuring zero disruption has never been greater, making deployment strategies a critical component of modern software delivery.

A blue-green deployment strategy represents a release management approach where two identical production environments run simultaneously, with only one serving live traffic at any given time. This methodology promises reduced downtime, simplified rollback procedures, and increased confidence in deployment processes. By examining this approach from technical, operational, and business perspectives, we can understand how it transforms the way organizations handle software releases.

Throughout this comprehensive guide, you'll discover the foundational concepts behind blue-green deployments, practical implementation steps across various platforms, real-world considerations for infrastructure and tooling, and strategies to overcome common challenges. Whether you're a DevOps engineer planning your first implementation or a technical leader evaluating deployment strategies, you'll find actionable insights to make informed decisions about adopting this powerful technique.

Understanding the Foundation of Blue-Green Deployments

The fundamental principle behind blue-green deployment revolves around maintaining two separate but identical production environments. At any moment, one environment (traditionally called "blue") serves all production traffic while the other (called "green") remains idle or serves as a staging area. When a new release is ready, teams deploy it to the inactive environment, conduct thorough testing, and then switch traffic from the active environment to the newly updated one. This switch happens through load balancer configuration, DNS changes, or router updates, depending on the infrastructure architecture.

This approach differs significantly from traditional deployment methods where updates are applied directly to production servers, often requiring maintenance windows and accepting periods of degraded performance. The beauty of blue-green deployments lies in their simplicity and effectiveness—by maintaining a complete duplicate environment, organizations eliminate the complexity of in-place updates and gain the ability to instantly revert to the previous version if issues arise.

"The ability to switch between environments in seconds rather than rolling back deployments over hours has fundamentally changed how we approach release management and incident response."

Core Components Required for Success

Implementing blue-green deployments requires several essential infrastructure components working in harmony. The first critical element is a load balancer or traffic router capable of directing user requests to either environment based on configuration. This component serves as the traffic control mechanism that enables the instantaneous switch between blue and green environments. Modern load balancers offer sophisticated health checking, session persistence, and gradual traffic shifting capabilities that enhance the deployment process.

The second requirement involves infrastructure automation and provisioning tools that can create and maintain identical environments. Whether using infrastructure-as-code frameworks like Terraform, CloudFormation, or Ansible, the ability to programmatically define and deploy infrastructure ensures consistency between environments. Manual configuration leads to environment drift, where subtle differences between blue and green environments cause unexpected behavior during switches.

Database management represents the third critical component, often the most challenging aspect of blue-green deployments. Unlike stateless application servers that can be easily duplicated, databases contain state that must be carefully managed during transitions. Strategies for handling databases include:

- 🔄 Shared database approach: Both environments connect to the same database, requiring backward-compatible schema changes

- 🗄️ Database replication: Maintaining separate databases with real-time replication between them

- 📊 Read-only periods: Temporarily placing the database in read-only mode during switches

- 🔀 Blue-green database migrations: Creating separate database instances and migrating data during the switch

- ⚡ Event sourcing patterns: Using event logs to reconstruct state across environments

Architectural Patterns and Variations

While the classic blue-green model involves two complete production environments, several variations exist to address different organizational needs and constraints. The full blue-green pattern maintains entirely separate infrastructure stacks, including compute resources, databases, caching layers, and supporting services. This approach offers maximum isolation and the cleanest rollback capability but comes with doubled infrastructure costs and increased management complexity.

The partial blue-green pattern applies the blue-green concept selectively to specific application tiers while sharing other components. Organizations commonly implement blue-green deployments for their application servers and API gateways while maintaining a single shared database. This hybrid approach balances cost efficiency with deployment safety, though it requires careful consideration of backward compatibility and state management.

| Pattern Type | Infrastructure Duplication | Cost Impact | Rollback Speed | Complexity Level |
|---|---|---|---|---|
| Full Blue-Green | Complete environment duplication | 2x production costs | Immediate (seconds) | High |
| Partial Blue-Green | Application tier only | 1.3-1.5x production costs | Very fast (minutes) | Medium |
| Blue-Green with Canary | Complete with gradual traffic shift | 2x production costs | Gradual with quick revert | High |
| Database Blue-Green | Data tier duplication | Variable based on data size | Fast (5-15 minutes) | Very High |

Another increasingly popular variation combines blue-green deployments with canary release strategies. Rather than switching all traffic instantaneously, this approach gradually shifts a small percentage of users to the green environment while monitoring key metrics. If the new version performs well, traffic gradually increases until the green environment serves all users. This hybrid approach provides the instant rollback benefits of blue-green deployments while adding the risk mitigation of gradual rollouts.
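A blue-green switch with a canary phase can be sketched as a loop. The loop raises green's share of traffic in steps and reverts everything to blue as soon as a check fails. This is a minimal illustration, not a router integration. The step percentages and the `is_healthy` callback are hypothetical stand-ins for real traffic weights and a metrics query.

```python
from typing import Callable, List, Sequence, Tuple

def gradual_shift(is_healthy: Callable[[int], bool],
                  steps: Sequence[int] = (5, 25, 50, 100)) -> Tuple[int, List[int]]:
    """Shift traffic to green in steps, reverting to blue on the first failure.

    `steps` are hypothetical green percentages. Returns the final green
    percentage and every weight that was applied, in order.
    """
    applied: List[int] = []
    for percent in steps:
        applied.append(percent)   # a real router call would set this weight
        if not is_healthy(percent):
            applied.append(0)     # instant rollback: all traffic back to blue
            return 0, applied
    return steps[-1], applied

# A fake health check that fails once green receives half the traffic.
print(gradual_shift(lambda percent: percent < 50))
```

Blue keeps running at full capacity throughout the shift. Reverting is therefore just one more weight change, not a redeployment.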

Practical Implementation Across Different Platforms

Translating blue-green deployment theory into practice requires platform-specific approaches and tooling. The implementation details vary significantly depending on whether you're working with cloud-native services, containerized applications, or traditional server-based infrastructure. Understanding these platform-specific considerations ensures successful adoption and helps teams avoid common pitfalls during the transition.

Cloud-Native Implementation Strategies

Cloud platforms like AWS, Azure, and Google Cloud provide native services that simplify blue-green deployment implementation. AWS Elastic Beanstalk offers built-in blue-green support through environment cloning and URL swapping. Teams clone the running environment, deploy the updated application version to the clone, test it thoroughly, and then swap the two environments' URLs to redirect traffic. The swap exchanges the environments' CNAME records, so no manual load balancer reconfiguration is needed. However, DNS caching means some clients may briefly keep reaching the old environment after the swap.

For more granular control, AWS Application Load Balancers (ALB) combined with target groups enable sophisticated traffic management. Implementation involves creating separate target groups for blue and green environments, deploying application updates to the inactive target group, and then modifying ALB listener rules to redirect traffic. This method supports weighted traffic distribution, allowing gradual transitions and canary testing within the blue-green framework.
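The weighted forward action can be built as plain data and passed to boto3's ELBv2 `modify_listener` call. The sketch below only constructs the `DefaultActions` payload. The target group ARNs are placeholders. The 0-100 percentage scale is a simplification, since ALB accepts target group weights from 0 to 999.

```python
from typing import Dict, List

def weighted_forward_action(blue_tg_arn: str, green_tg_arn: str,
                            green_percent: int) -> List[Dict]:
    """Build an ALB 'forward' action that splits traffic between two target groups.

    green_percent goes to green and the remainder to blue; 100 is a full switch,
    0 is a full rollback.
    """
    if not 0 <= green_percent <= 100:
        raise ValueError("green_percent must be between 0 and 100")
    return [{
        "Type": "forward",
        "ForwardConfig": {
            "TargetGroups": [
                {"TargetGroupArn": blue_tg_arn, "Weight": 100 - green_percent},
                {"TargetGroupArn": green_tg_arn, "Weight": green_percent},
            ]
        },
    }]

# Applying it requires AWS credentials; the ARNs below are placeholders.
# import boto3
# boto3.client("elbv2").modify_listener(
#     ListenerArn="<listener-arn>",
#     DefaultActions=weighted_forward_action("<blue-tg-arn>", "<green-tg-arn>", 100),
# )
print(weighted_forward_action("blue-tg", "green-tg", 10))
```

The same function covers a canary step (for example, 10), the full switch (100), and a rollback (0).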

"Cloud-native blue-green deployments reduce our deployment risk by 95% while cutting our rollback time from 45 minutes to under 30 seconds. The infrastructure automation eliminates human error from our most critical operations."

Azure App Service provides deployment slots that function as built-in blue-green environments. Each slot runs a separate instance of the application with its own hostname, and Azure handles the traffic swap through a simple portal action or CLI command. The platform warms up the target slot before switching traffic, ensuring that applications are fully initialized and ready to handle requests. This warming feature prevents the cold-start performance degradation that can occur when suddenly redirecting traffic to idle instances.

Google Cloud offers similar capabilities through Cloud Run revisions and traffic splitting. Each deployment creates a new revision while preserving previous versions. Traffic management allows precise control over the percentage of requests directed to each revision, enabling both instant blue-green switches and gradual migration patterns. The platform's automatic scaling ensures that newly activated revisions scale appropriately to handle incoming traffic.

Container Orchestration Approaches

Kubernetes has become the de facto standard for container orchestration, and it provides multiple mechanisms for implementing blue-green deployments. The most straightforward approach uses Services and labels to control traffic routing. Teams deploy the new application version as a separate Deployment with a distinct label, test it thoroughly, and then update the Service selector to point to the new pods. A Service routes to whichever pods match its selector, so the change redirects new connections as soon as the Service's endpoints are updated, typically within seconds. Connections already open to blue pods are not moved.

A more advanced pattern leverages Ingress Controllers with traffic splitting capabilities. NGINX Ingress, Traefik, and Istio service mesh all support weighted traffic distribution between different backend services. This enables gradual traffic migration from blue to green environments while monitoring application performance and error rates. The service mesh approach adds observability features like distributed tracing and detailed metrics that provide visibility into deployment health.

Implementation steps for Kubernetes blue-green deployments typically follow this workflow:

- Deploy the current production version with a "blue" label and expose it through a Service

- Create a new Deployment with the updated application version using a "green" label

- Verify the green deployment's health through readiness probes and manual testing

- Update the Service selector to point to the green label, switching traffic instantly

- Monitor application metrics and user impact for any anomalies

- Scale down or delete the blue deployment after confirming green environment stability
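The workflow above can be sketched as manifest generation in Python, since `kubectl apply` and `kubectl patch` accept JSON. The app name `myapp`, the label key `color`, the image, and the ports are all hypothetical. The point is that each color gets its own Deployment, and the traffic switch is only a patch to the Service selector.

```python
import json
from typing import Dict

APP = "myapp"  # hypothetical application name

def deployment(color: str, image: str, replicas: int = 3) -> Dict:
    """A Deployment for one color; its pods carry both app and color labels."""
    labels = {"app": APP, "color": color}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": f"{APP}-{color}", "labels": labels},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {"containers": [{
                    "name": APP,
                    "image": image,
                    "ports": [{"containerPort": 8080}],
                }]},
            },
        },
    }

def service(active_color: str) -> Dict:
    """A Service that sends traffic to pods of whichever color it selects."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": APP},
        "spec": {
            "selector": {"app": APP, "color": active_color},
            "ports": [{"port": 80, "targetPort": 8080}],
        },
    }

def switch_patch(color: str) -> Dict:
    """Patch body that repoints the Service selector: the actual traffic switch."""
    return {"spec": {"selector": {"app": APP, "color": color}}}

# Write these out and use them with kubectl, for example:
#   kubectl apply -f myapp-green.json
#   kubectl patch service myapp -p '{"spec": {"selector": {"app": "myapp", "color": "green"}}}'
print(json.dumps(switch_patch("green")))
```

Rolling back is the same patch with `"blue"`, provided the blue Deployment has not yet been scaled down.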

Docker Swarm supports the same idea with a simpler configuration model. Teams run the blue and green versions as separate services and typically switch traffic at a reverse proxy in front of them, such as Traefik configured through service labels. A proxy is needed because two Swarm services cannot publish the same ingress port. While less feature-rich than Kubernetes, Docker Swarm provides a lower-complexity option for organizations with simpler orchestration needs.

Traditional Infrastructure Implementation

Organizations running applications on traditional server infrastructure can still implement blue-green deployments, though with additional manual orchestration. The approach typically involves maintaining two sets of application servers behind a load balancer, with traffic directed to one set at a time. HAProxy, NGINX, and F5 load balancers all support backend pool management that enables blue-green switching.

Configuration management tools like Ansible, Chef, and Puppet automate the deployment process to inactive servers. Teams write playbooks or recipes that deploy new application versions, update configurations, restart services, and verify health checks. Once the green environment passes all validation tests, load balancer configuration changes redirect traffic from blue to green servers.
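On NGINX, the switch can be as small as three steps. First, regenerate the upstream block for the active pool. Then validate it with `nginx -t` and apply it with `nginx -s reload`, which starts new workers with the new configuration while old workers finish their in-flight requests. The addresses and the upstream name `app_backend` below are hypothetical. In practice an Ansible template or similar would render this file.

```python
from typing import Dict, List

# Hypothetical server pools; in practice these come from an inventory.
POOLS: Dict[str, List[str]] = {
    "blue": ["10.0.1.10:8080", "10.0.1.11:8080"],
    "green": ["10.0.2.10:8080", "10.0.2.11:8080"],
}

def render_upstream(active: str, pools: Dict[str, List[str]] = POOLS) -> str:
    """Render an NGINX upstream block containing only the active pool's servers."""
    if active not in pools:
        raise ValueError(f"unknown pool: {active!r}")
    servers = "\n".join(f"    server {addr};" for addr in pools[active])
    return f"upstream app_backend {{\n{servers}\n}}\n"

# Write the result to the included config file, then run
# `nginx -t` and `nginx -s reload` to apply it.
print(render_upstream("green"))
```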

"Moving from in-place updates to blue-green deployments on our physical infrastructure required cultural change as much as technical implementation. The upfront investment in automation paid for itself within three months through reduced downtime and faster feature delivery."

DNS-based blue-green deployments represent another option, particularly for globally distributed applications. This method involves maintaining separate blue and green environments with different DNS records. Traffic switching occurs by updating DNS entries to point to the green environment's IP addresses. While simple to implement, DNS-based switching introduces latency due to DNS propagation delays and client-side caching, making instant rollbacks more challenging.
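A little arithmetic makes the caching delay concrete. Assume resolvers and clients honor TTLs, which not all do. An answer cached just before the switch can then survive for a full TTL afterwards, and a rollback is bounded the same way. A common mitigation is to lower the record's TTL well ahead of time. The shorter TTL, however, only reaches every cache after the old TTL has expired. The sketch below computes those timings; the values are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class DnsPlan:
    lower_ttl_at: int       # when the shorter TTL is published
    earliest_switch: int    # when every TTL-honoring cache holds the short TTL
    fully_switched_by: int  # worst case for caches to drop the blue address

def plan_dns_switch(current_ttl: int, short_ttl: int, start: int = 0) -> DnsPlan:
    """Compute cutover timings in seconds, assuming all caches honor TTLs."""
    # Answers cached under the old TTL may live up to current_ttl seconds,
    # so the shorter TTL is only guaranteed everywhere after that long.
    earliest_switch = start + current_ttl
    # After the record points at green, stale answers can last short_ttl.
    return DnsPlan(start, earliest_switch, earliest_switch + short_ttl)

# Hypothetical record: TTL of one hour, lowered to one minute before the switch.
print(plan_dns_switch(current_ttl=3600, short_ttl=60))
```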

Infrastructure and Resource Management

Successfully implementing blue-green deployments extends beyond the initial setup. It requires careful consideration of infrastructure costs, resource optimization, and operational sustainability. Organizations must balance the benefits of zero-downtime deployments against the financial and operational overhead of maintaining duplicate environments. Strategic planning in these areas determines whether blue-green deployments stay sustainable over the long term or become an operational and financial burden.

Cost Optimization Strategies

The most obvious challenge with blue-green deployments is the requirement to maintain two complete production environments, effectively doubling infrastructure costs. However, several strategies can significantly reduce this financial impact. Just-in-time environment provisioning creates the green environment only when needed for deployments, then destroys it after successful traffic switching and a stabilization period. This approach works well with infrastructure-as-code and cloud platforms that support rapid provisioning.

Cloud providers offer reserved instances and committed use discounts that reduce compute costs for predictable workloads. Organizations can apply these discounts to the baseline production capacity that is always running, whichever color is currently live. The temporary second environment that exists only around deployments can then use on-demand pricing. Spot instances can cut costs further for interruptible work, such as load testing the inactive environment before it takes traffic. This hybrid pricing strategy balances cost efficiency with deployment flexibility.

Another effective approach involves resource right-sizing based on deployment phases. The inactive green environment doesn't require the same capacity as the production blue environment during testing phases. Teams can provision the green environment with reduced capacity for initial testing and validation, then scale it up to match production capacity just before the traffic switch. After confirming stability, the now-inactive blue environment can be scaled down or terminated.
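A rough cost model shows why this saves so much: green exists only around deployments and runs at reduced size while testing. All figures here are hypothetical:

- Production costs $10 per hour, over 730 hours per month.
- There are 20 deployments per month.
- Each deployment needs two hours of testing at 25% capacity and one hour at full capacity for the switch and stabilization.

```python
HOURS_PER_MONTH = 730  # common approximation: 8760 hours / 12 months

def always_on_cost(prod_hourly: float) -> float:
    """Blue and green both run at full production size all month."""
    return 2 * prod_hourly * HOURS_PER_MONTH

def just_in_time_cost(prod_hourly: float, deploys: int, test_hours: float,
                      test_fraction: float, full_size_hours: float) -> float:
    """One environment runs all month; the second exists only around deploys.

    Per deployment, the new environment runs test_hours at test_fraction of
    production size (reduced-capacity validation), then full_size_hours at
    full size (scale-up, switch, stabilization) before the old one is removed.
    """
    per_deploy = prod_hourly * (test_hours * test_fraction + full_size_hours)
    return prod_hourly * HOURS_PER_MONTH + deploys * per_deploy

# Hypothetical inputs: $10/hour production, 20 deploys per month.
print(always_on_cost(10.0), just_in_time_cost(10.0, 20, 2, 0.25, 1))
```

Under these assumptions the just-in-time approach costs roughly half as much as an always-on duplicate. The result is sensitive to deployment frequency and to how long the stabilization period lasts.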

| Cost Optimization Technique | Potential Savings | Implementation Complexity | Best Use Case |
|---|---|---|---|
| Just-in-Time Provisioning | 40-60% | Medium | Cloud-native applications with fast provisioning |
| Reserved Instance Strategy | 30-50% | Low | Stable workloads with predictable capacity |
| Dynamic Scaling | 20-40% | Medium | Variable traffic patterns with auto-scaling |
| Shared Supporting Services | 15-25% | High | Applications with expensive backing services |
| Environment Hibernation | 50-70% | Low | Infrequent deployments with long stable periods |

Database Management and State Handling

Managing stateful components, particularly databases, represents the most complex aspect of blue-green deployments. The challenge stems from the need to maintain data consistency while enabling instant rollbacks. Several patterns address this complexity, each with distinct tradeoffs. The shared database pattern uses a single database instance for both blue and green environments, requiring all schema changes to be backward compatible with both application versions.

Backward compatibility means new database migrations must not break the currently running application version. This typically involves a multi-phase migration strategy: first, add new columns or tables without removing old ones; deploy the new application version that uses both old and new schema elements; verify stability; then deploy a second migration that removes deprecated schema elements. This approach adds deployment complexity but eliminates the need for database duplication.
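The multi-phase migration can be sketched with Python's built-in `sqlite3` module. The scenario, renaming `users.name` to `full_name`, is hypothetical. During the expand phase the new version writes both columns, so rolling back to blue never loses data. The contract step is defined but deliberately not run, because it belongs to a later deployment once blue is retired.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
conn.execute("INSERT INTO users (name) VALUES ('Ada Lovelace')")

# Phase 1 (expand): add the new column next to the old one and backfill it.
# Blue keeps working because nothing it relies on has changed.
conn.execute("ALTER TABLE users ADD COLUMN full_name TEXT")
conn.execute("UPDATE users SET full_name = name WHERE full_name IS NULL")

def blue_read(c: sqlite3.Connection) -> list:
    """Current version: still reads the old column."""
    return c.execute("SELECT name FROM users ORDER BY id").fetchall()

def green_write(c: sqlite3.Connection, value: str) -> None:
    """New version: writes both columns so a rollback to blue loses nothing."""
    c.execute("INSERT INTO users (name, full_name) VALUES (?, ?)", (value, value))

def green_read(c: sqlite3.Connection) -> list:
    """New version: reads the new column."""
    return c.execute("SELECT full_name FROM users ORDER BY id").fetchall()

green_write(conn, "Grace Hopper")
print(blue_read(conn), green_read(conn))

# Phase 2 (contract), in a later release once blue is retired.
# ALTER TABLE ... DROP COLUMN requires SQLite 3.35 or newer.
def contract(c: sqlite3.Connection) -> None:
    c.execute("ALTER TABLE users DROP COLUMN name")
```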

"Database backward compatibility transformed how our development teams think about schema evolution. The discipline required for blue-green deployments actually improved our overall data architecture and reduced technical debt."

The database replication pattern maintains separate database instances for blue and green environments with real-time replication between them. When switching from blue to green, the replication direction reverses, making the green database the primary and the blue database the replica. This approach provides better isolation and simpler rollback capabilities but introduces replication lag concerns and requires careful handling of the cutover moment to prevent data loss.

For applications with high data consistency requirements, database blue-green migrations with brief read-only periods offer the safest approach. The process runs in five steps:

1. Place the blue database in read-only mode.
2. Wait until replication to the green database is fully synchronized.
3. Promote the green database to primary.
4. Switch application traffic to the green environment.
5. Re-enable writes on the new primary.

The read-only period typically lasts only seconds to minutes, significantly less than traditional maintenance windows.
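The cutover sequence can be sketched as a small state machine. The `Db` objects and their flags are hypothetical stand-ins for database-specific operations: making the primary read-only, checking replica lag, and promoting a replica. The key design choice is the abort path. If replication does not catch up before the timeout, writes resume on blue and no traffic moves.

```python
import time
from dataclasses import dataclass, field
from typing import List

@dataclass
class Db:
    """Hypothetical stand-in for a database endpoint."""
    name: str
    is_primary: bool = False
    read_only: bool = False
    replication_lag: float = 0.0  # seconds behind the primary (replicas only)

@dataclass
class Cutover:
    blue: Db
    green: Db
    active: str = "blue"
    log: List[str] = field(default_factory=list)

    def run(self, timeout_s: float = 30.0, poll_s: float = 0.5) -> bool:
        self.blue.read_only = True                 # 1. stop new writes
        self.log.append("blue read-only")
        deadline = time.monotonic() + timeout_s
        # 2. Wait for green to catch up. A real implementation would re-query
        #    the replica's lag on every iteration instead of reading a field.
        while self.green.replication_lag > 0:
            if time.monotonic() >= deadline:
                self.blue.read_only = False        # abort: resume writes on blue
                self.log.append("sync timed out; writes resumed on blue")
                return False
            time.sleep(poll_s)
        self.green.is_primary = True               # 3. promote green
        self.blue.is_primary = False
        self.log.append("green promoted")
        self.active = "green"                      # 4. switch application traffic
        self.log.append("traffic on green")
        self.green.read_only = False               # 5. re-enable writes
        self.log.append("writes enabled on green")
        return True

cutover = Cutover(Db("blue", is_primary=True), Db("green"))
print(cutover.run(), cutover.log)
```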

Monitoring and Observability Requirements

Effective blue-green deployments demand robust monitoring and observability to quickly detect issues in the green environment before switching traffic. Synthetic monitoring runs automated tests against the green environment to verify functionality without exposing real users to potential problems. These tests should cover critical user journeys, API endpoints, and integration points with external systems.

Implementing environment-aware monitoring ensures that metrics, logs, and traces clearly indicate which environment (blue or green) generated them. This labeling enables rapid troubleshooting when issues arise and helps teams understand performance differences between environments. Modern observability platforms like Datadog, New Relic, and Prometheus support multi-environment tagging and filtering.

Key metrics to monitor during blue-green deployments include:

- 📈 Application response times: Compare latency percentiles between environments

- 🚨 Error rates and status codes: Watch for increased 4xx and 5xx responses

- 💾 Database query performance: Monitor slow queries and connection pool utilization

- 🔌 External integration health: Verify third-party API calls succeed

- 👥 User session behavior: Track authentication, checkout, or other critical flows

Automated rollback triggers can monitor these metrics and automatically revert to the blue environment if predefined thresholds are exceeded. This automation reduces the mean time to recovery (MTTR) by eliminating the need for manual intervention during incidents. However, rollback automation requires careful threshold tuning to avoid false positives that unnecessarily abort valid deployments.
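An automated rollback trigger boils down to comparing green's metrics against a baseline recorded from blue before the switch. The sketch below uses hypothetical thresholds. The `min_requests` guard is one simple way to reduce false positives: it refuses to judge green on a handful of requests.

```python
from dataclasses import dataclass

@dataclass
class Window:
    """Metrics for one environment over an observation window."""
    requests: int
    errors: int            # e.g. 5xx responses
    p99_latency_ms: float

def should_roll_back(baseline: Window, green: Window,
                     max_error_rate_increase: float = 0.01,
                     max_latency_ratio: float = 1.5,
                     min_requests: int = 500) -> bool:
    """Return True if green looks worse than blue's baseline (hypothetical thresholds)."""
    if green.requests < min_requests:
        return False       # not enough data yet: keep watching
    baseline_rate = baseline.errors / max(baseline.requests, 1)
    green_rate = green.errors / green.requests
    if green_rate - baseline_rate > max_error_rate_increase:
        return True        # error rate rose by more than the allowed margin
    return green.p99_latency_ms > baseline.p99_latency_ms * max_latency_ratio

baseline = Window(requests=10_000, errors=20, p99_latency_ms=200.0)
print(should_roll_back(baseline, Window(requests=5_000, errors=200, p99_latency_ms=210.0)))
```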

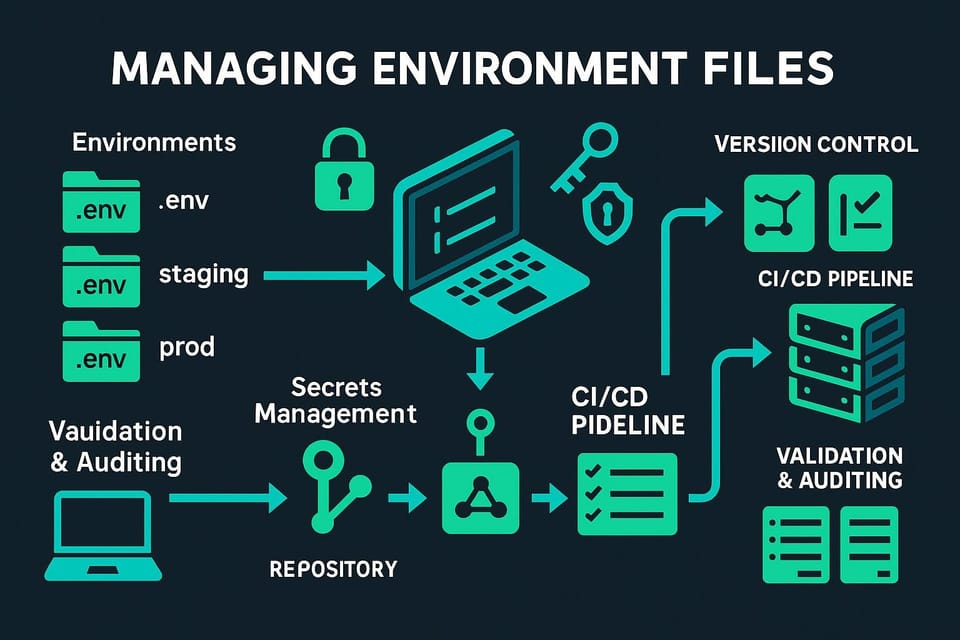

Automation Tools and CI/CD Integration

Manual blue-green deployments quickly become error-prone and unsustainable as deployment frequency increases. Automation transforms blue-green deployments from a complex manual process into a reliable, repeatable operation that can happen multiple times per day. Integrating blue-green deployment workflows into continuous integration and continuous deployment (CI/CD) pipelines ensures consistency and reduces the cognitive load on engineering teams.

CI/CD Pipeline Design Patterns

A well-designed blue-green deployment pipeline orchestrates multiple stages, from code commit through production traffic switching. The pipeline typically begins with source code changes triggering automated builds that compile code, run unit tests, and create deployable artifacts. These artifacts—whether Docker images, compiled binaries, or application packages—are versioned and stored in artifact repositories for traceability.

The next stage involves deploying to the green environment using infrastructure automation tools. This deployment should be identical to how production deployments occur, ensuring that the green environment accurately represents what will serve production traffic. Configuration management tools apply environment-specific settings, database migrations execute, and supporting services initialize.

Following deployment, automated testing in the green environment validates functionality before traffic switching. This testing phase should include integration tests, end-to-end tests, performance tests, and security scans. The comprehensiveness of this testing directly impacts confidence in the deployment and determines whether manual verification is needed before proceeding.

"Our automated blue-green pipeline runs 47 different test suites against the green environment before switching traffic. This automation gives us the confidence to deploy to production 15 times per day without manual approval gates."

After successful testing, the pipeline executes the traffic switch operation, redirecting users from blue to green. This step varies based on infrastructure—it might involve updating load balancer rules, modifying DNS records, or changing Kubernetes service selectors. The automation should include health checks that verify the green environment is successfully serving traffic before proceeding.

The final pipeline stage implements post-deployment monitoring and validation. Automated scripts watch key metrics for a defined period (often 15-30 minutes) to detect anomalies that might indicate deployment issues. If problems arise, the pipeline can automatically trigger a rollback to the blue environment. If the monitoring period passes without issues, the pipeline may optionally scale down or destroy the blue environment to reduce costs.
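The pipeline stages can be sketched as a single function. It runs the pre-switch stages in order, switches traffic, and then watches green for a fixed window. Stage names and callbacks are hypothetical. In a real pipeline each would call a CI/CD tool, a load balancer API, or a monitoring query.

```python
import time
from typing import Callable, Dict, List

def run_pipeline(pre_switch: Dict[str, Callable[[], bool]],
                 switch_to: Callable[[str], None],
                 green_healthy: Callable[[], bool],
                 watch_seconds: float = 15 * 60,
                 poll_seconds: float = 30.0) -> str:
    # Build, deploy, and test stages run in order while blue keeps serving users.
    for name, stage in pre_switch.items():
        if not stage():
            return f"aborted at {name}"   # nothing to roll back: blue is still live
    switch_to("green")
    deadline = time.monotonic() + watch_seconds
    while time.monotonic() < deadline:    # post-deployment monitoring window
        if not green_healthy():
            switch_to("blue")             # automatic rollback
            return "rolled back"
        time.sleep(poll_seconds)
    return "promoted"                     # blue may now be scaled down

switches: List[str] = []
result = run_pipeline(
    {"build": lambda: True, "deploy_green": lambda: True, "test_green": lambda: True},
    switch_to=switches.append,
    green_healthy=lambda: True,
    watch_seconds=0.1, poll_seconds=0.02,  # shortened for the demo
)
print(result, switches)
```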

Popular Tools and Frameworks

Jenkins remains one of the most widely used CI/CD platforms, with extensive plugin support for blue-green deployments. The Kubernetes Continuous Deploy plugin enables blue-green deployments to Kubernetes clusters, while the AWS CodeDeploy plugin integrates with AWS services. Jenkins Pipeline-as-Code allows teams to version control their deployment workflows, ensuring consistency and enabling easy rollback of pipeline changes themselves.

GitLab CI/CD provides native support for blue-green deployments through its environment management features. GitLab tracks deployments to different environments, maintains deployment history, and supports manual approval gates for production switches. The platform's integration with Kubernetes and cloud providers simplifies infrastructure management within the same tool used for source control.

Spinnaker, originally developed by Netflix, specializes in continuous delivery with sophisticated deployment strategies including blue-green, canary, and rolling updates. Spinnaker's strength lies in its multi-cloud support and advanced deployment pipeline capabilities. The platform provides visual pipeline builders, automated rollback triggers, and extensive integration with monitoring systems for deployment validation.

For AWS-centric organizations, AWS CodePipeline and CodeDeploy offer native blue-green deployment support for EC2, ECS, Lambda, and other AWS services. CodeDeploy handles traffic shifting, health checks, and automatic rollbacks, while CodePipeline orchestrates the overall deployment workflow. This tight integration with AWS services reduces configuration complexity for teams already invested in the AWS ecosystem.

ArgoCD and FluxCD represent the GitOps approach to deployments, where Git repositories serve as the single source of truth for infrastructure and application state. These tools continuously monitor Git repositories and automatically apply changes to Kubernetes clusters. Blue-green deployments in GitOps involve updating manifests in Git, with the GitOps operator handling the deployment and traffic switching based on defined strategies.

Infrastructure as Code Integration

Blue-green deployments and infrastructure as code (IaC) form a powerful combination that ensures environment consistency and reproducibility. Terraform enables teams to define both blue and green environments as code, with modules that abstract common patterns. A typical Terraform-based blue-green implementation uses workspaces or separate state files to manage the two environments independently.

The workflow involves defining infrastructure modules for compute resources, networking, databases, and load balancers. When deploying to the green environment, Terraform applies the same infrastructure definition with environment-specific variables. After validation, a separate Terraform configuration or script updates the load balancer to point to the green environment. This approach versions the entire infrastructure. Rolling back the switch then amounts to re-applying the previous version of the configuration, which points the load balancer back at blue.

CloudFormation and ARM templates offer similar capabilities within AWS and Azure respectively. These cloud-native IaC tools integrate tightly with platform services and provide built-in support for stack updates that can implement blue-green patterns. CloudFormation change sets allow teams to preview infrastructure changes before applying them, reducing the risk of unexpected modifications during deployments.

Container-focused IaC tools like Helm and Kustomize manage Kubernetes resources and support blue-green deployment patterns through templating and overlay systems. Helm charts can include logic for deploying multiple releases side-by-side, while Kustomize overlays enable environment-specific configurations without duplicating base manifests. Both tools integrate seamlessly with GitOps workflows for automated deployments.

Common Challenges and Proven Solutions

While blue-green deployments offer significant benefits, teams inevitably encounter challenges during implementation and operation. Understanding these common obstacles and proven solutions helps organizations avoid pitfalls and accelerate their adoption journey. The challenges span technical, operational, and organizational dimensions, each requiring different mitigation strategies.

Database Schema Evolution

As previously mentioned, database changes represent one of the most significant challenges in blue-green deployments. The requirement for backward compatibility can feel constraining to development teams accustomed to making breaking schema changes. A proven solution involves adopting the expand-contract pattern for database migrations, which breaks schema changes into multiple phases.

The expand phase adds new schema elements (columns, tables, indexes) without removing existing ones. Both old and new application code can operate against this expanded schema. After deploying the new application version and confirming stability, the contract phase removes deprecated schema elements. This multi-phase approach requires discipline but enables safe blue-green deployments with databases.

Another effective strategy uses database views and abstraction layers to decouple application code from physical schema structure. Views can present different schema representations to blue and green environments while underlying tables evolve. This abstraction adds a layer of indirection but provides flexibility for more complex schema transformations.
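A view-based abstraction can be sketched with `sqlite3`. In this hypothetical scenario the physical table has been renamed to `customer_records`, with a `full_name` column. A view keeps the old table name `customers` and the old column `name`, which blue still queries. SQLite views are read-only, so an `INSTEAD OF` trigger routes blue's inserts to the real table. Other databases offer updatable views with different rules.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# The physical table has evolved: new name, new column name (green's schema).
conn.execute("CREATE TABLE customer_records (id INTEGER PRIMARY KEY, full_name TEXT)")
conn.execute("INSERT INTO customer_records (full_name) VALUES ('Ada Lovelace')")

# The view keeps the table and column names that blue still uses.
conn.execute("""
    CREATE VIEW customers AS
    SELECT id, full_name AS name FROM customer_records
""")

# SQLite views are read-only, so route blue's inserts to the real table.
conn.execute("""
    CREATE TRIGGER customers_insert INSTEAD OF INSERT ON customers
    BEGIN
        INSERT INTO customer_records (full_name) VALUES (NEW.name);
    END
""")

conn.execute("INSERT INTO customers (name) VALUES ('Grace Hopper')")  # blue's write
print(conn.execute("SELECT name FROM customers ORDER BY id").fetchall())              # blue's read
print(conn.execute("SELECT full_name FROM customer_records ORDER BY id").fetchall())  # green's read
```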

Session Management and Stateful Applications

Applications that maintain server-side session state face challenges during blue-green switches. Users with active sessions on blue servers may experience disruption when traffic switches to green servers that don't have their session data. Several approaches address this challenge, each with different complexity levels.

Externalized session storage represents the most robust solution. By storing session data in Redis, Memcached, or a database accessible to both environments, sessions persist across the blue-green switch. Both environments read from the same session store, ensuring users experience no disruption. This approach requires application changes but provides the cleanest user experience.

"Externalizing session state was the single most impactful change we made to enable blue-green deployments. It eliminated the last source of user-visible disruption during our releases and enabled us to deploy during peak traffic hours."

For applications where session externalization is impractical, session draining offers an alternative. This technique involves stopping new session creation on blue servers while allowing existing sessions to complete naturally. The deployment process waits until all blue sessions expire before switching traffic completely to green. Load balancers can implement session affinity rules that route users to their original environment until their session expires.
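Session draining with affinity can be sketched as a router with two rules. It pins each session to the environment that created it, and once draining starts it sends only new sessions to green. The class and its in-memory affinity table are hypothetical. A load balancer would usually implement this with sticky cookies, and a real deployment would also cap how long it waits for blue sessions to expire.

```python
from dataclasses import dataclass, field
from typing import Dict, Set

@dataclass
class DrainingRouter:
    draining: bool = False
    affinity: Dict[str, str] = field(default_factory=dict)  # session id -> env

    def route(self, session_id: str) -> str:
        """Existing sessions keep their environment; new ones go to green when draining."""
        if session_id in self.affinity:
            return self.affinity[session_id]
        env = "green" if self.draining else "blue"
        self.affinity[session_id] = env
        return env

    def end_session(self, session_id: str) -> None:
        """Called when a session logs out or expires."""
        self.affinity.pop(session_id, None)

    def blue_sessions(self) -> Set[str]:
        return {s for s, env in self.affinity.items() if env == "blue"}

    def can_retire_blue(self) -> bool:
        """Blue can be switched off once draining has emptied it."""
        return self.draining and not self.blue_sessions()

router = DrainingRouter()
print(router.route("alice"))      # blue: created before draining started
router.draining = True
print(router.route("bob"))        # green: new session
print(router.route("alice"))      # blue: affinity keeps the session in place
router.end_session("alice")       # alice's session expires
print(router.can_retire_blue())   # True
```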

Stateless application design eliminates session management challenges entirely by storing all necessary state on the client side (typically in JWTs or cookies) or fetching it from databases on each request. While this approach may increase database load, it dramatically simplifies deployment processes and improves application resilience.
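The key property of client-side state is that any environment holding the signing key can verify it. A session issued by blue is therefore still valid on green. The sketch below uses only the standard library to sign and verify a JWT-like token with HMAC-SHA256. It is not a JWT implementation; a vetted library is the usual choice. The secret is a placeholder, and the claims are signed but not encrypted.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Optional

SECRET = b"replace-with-a-shared-secret"  # hypothetical; load from a secret store

def _b64(data: bytes) -> str:
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode()

def _unb64(text: str) -> bytes:
    return base64.urlsafe_b64decode(text + "=" * (-len(text) % 4))

def _sign(payload: str) -> str:
    return _b64(hmac.new(SECRET, payload.encode(), hashlib.sha256).digest())

def issue(claims: dict, ttl_s: int = 3600) -> str:
    """Create a signed token carrying the claims plus an expiry time."""
    body = dict(claims, exp=int(time.time()) + ttl_s)
    payload = _b64(json.dumps(body, sort_keys=True).encode())
    return f"{payload}.{_sign(payload)}"

def verify(token: str) -> Optional[dict]:
    """Return the claims if the signature is valid and unexpired, else None."""
    try:
        payload, sig = token.split(".")
    except ValueError:
        return None
    if not hmac.compare_digest(sig, _sign(payload)):
        return None
    claims = json.loads(_unb64(payload))
    if claims["exp"] < time.time():
        return None
    return claims

token = issue({"user": "alice"})   # e.g. issued by blue
print(verify(token))               # verified by green using the same key
```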

Third-Party Integration Complications

Applications that integrate with external systems face unique challenges in blue-green deployments. Third-party services may not distinguish between requests from blue and green environments, potentially causing issues with rate limiting, webhook configurations, or API key management. Careful planning and testing are essential to avoid disrupting these integrations.

Webhook reconfiguration represents a common issue. Many third-party services send webhooks to specific URLs configured in their dashboard. During a blue-green switch, webhook URLs may need updating to point to the green environment. Automation can handle this reconfiguration, but it introduces additional complexity and potential failure points. An alternative approach uses a stable webhook endpoint that routes to the active environment, eliminating the need for reconfiguration.

Rate limiting and API quotas can cause problems when both blue and green environments make requests to the same external service. Testing in the green environment may consume API quota, leaving insufficient capacity for production traffic. Solutions include using separate API keys for blue and green environments, implementing request throttling during testing phases, or negotiating higher rate limits with service providers.
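Throttling green's test traffic can be done with a token bucket, which caps the average request rate while allowing short bursts. The idea is to wrap green's calls to a third-party API so that testing consumes only a fixed slice of the shared quota. The rates below are hypothetical. The injectable clock exists only to make the behavior easy to test.

```python
import time
from typing import Callable

class TokenBucket:
    """Allow on average `rate` requests per second, with bursts up to `capacity`."""

    def __init__(self, rate: float, capacity: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.rate = rate
        self.capacity = capacity
        self.tokens = capacity          # start full so an initial burst is allowed
        self.clock = clock
        self.last = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, never beyond capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False                    # caller should delay or skip this request

green_quota = TokenBucket(rate=5, capacity=10)
print(green_quota.allow())
```

With these hypothetical settings, green's smoke tests could make at most about five calls per second to the provider, with bursts of up to ten.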

Cost Management at Scale

As organizations scale their blue-green deployment practice across multiple applications and teams, infrastructure costs can become prohibitive, so strategic cost management becomes essential for sustainable adoption. Deployment scheduling can reduce costs by provisioning green environments during off-peak periods. At those times auto-scaled production capacity is lower, so the temporary duplicate is correspondingly smaller.

Implementing deployment frequency tiers allows organizations to apply different strategies based on application criticality and deployment frequency. Mission-critical applications with frequent deployments justify maintaining persistent blue-green environments, while less critical applications with infrequent deployments can use just-in-time provisioning. This tiered approach optimizes costs while maintaining deployment reliability where it matters most.

Shared infrastructure patterns enable multiple applications to share blue-green infrastructure components. For example, a single pair of Kubernetes clusters can host many applications, with blue-green switching happening at the application level rather than the infrastructure level. This approach requires careful namespace and resource management but significantly reduces overall infrastructure costs.
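For application-level switching on a shared cluster, a common Kubernetes pattern runs blue and green Deployments side by side and repoints the application's Service selector. This sketch assumes a hypothetical label convention: an `app` label plus a `color` label on each pod. It only builds the `kubectl patch` command rather than running it.

```python
import json

def selector_patch(app: str, color: str) -> dict:
    """Patch body that points an application's Service at one color's pods."""
    if color not in ("blue", "green"):
        raise ValueError("color must be 'blue' or 'green'")
    return {"spec": {"selector": {"app": app, "color": color}}}

def kubectl_switch_command(app: str, namespace: str, color: str) -> list:
    """Build (but do not run) the kubectl command that switches traffic.

    Each application lives in its own namespace on the shared cluster, so
    switching one application never touches another team's Services.
    """
    return [
        "kubectl", "patch", "service", app,
        "--namespace", namespace,
        "--patch", json.dumps(selector_patch(app, color)),
    ]
```

To apply the switch, pass the returned list to `subprocess.run(cmd, check=True)`. To roll back, run the same command with the other color.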

Team Coordination and Communication

Blue-green deployments introduce new coordination requirements, particularly in organizations with multiple teams deploying to shared infrastructure. Without proper coordination, teams may inadvertently interfere with each other's deployments or consume shared resources needed by other teams. Establishing clear processes and communication channels prevents these conflicts.

Deployment calendars and scheduling systems help teams coordinate their releases and avoid conflicts. These systems can enforce deployment windows, prevent simultaneous deployments to shared infrastructure, and provide visibility into upcoming changes. Integration with ChatOps platforms like Slack enables real-time deployment notifications and status updates.
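At its core, preventing simultaneous deployments to shared infrastructure is an interval-overlap check. The `Reservation` type below is hypothetical. A real scheduling system would also store approvals and enforce allowed deployment windows.

```python
from dataclasses import dataclass
from datetime import datetime

@dataclass(frozen=True)
class Reservation:
    team: str
    target: str        # shared infrastructure, e.g. a cluster name
    start: datetime
    end: datetime

def conflicts(new: Reservation, existing: list) -> list:
    """Return reservations on the same target whose time ranges overlap `new`.

    Intervals are half-open, so a slot ending at 14:00 does not clash with
    one starting at 14:00.
    """
    return [
        r for r in existing
        if r.target == new.target and r.start < new.end and new.start < r.end
    ]
```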

Deployment ownership and responsibility models clarify who can approve production switches and who responds when issues arise. Some organizations implement a "you build it, you run it" model where development teams own their deployments end-to-end. Others maintain dedicated DevOps or SRE teams that manage production switches. Either model works, but clarity about roles and responsibilities prevents confusion during critical moments.

Best Practices for Long-Term Success

Sustainable blue-green deployment practices extend beyond initial implementation to encompass ongoing operations, continuous improvement, and organizational learning. Teams that treat blue-green deployments as a living practice rather than a one-time project achieve better outcomes and higher adoption rates across their organizations.

Testing and Validation Strategies

Comprehensive testing in the green environment before switching traffic is non-negotiable for successful blue-green deployments. Smoke tests should execute immediately after deployment to verify basic functionality—can the application start, respond to health checks, and connect to dependencies? These quick tests catch obvious configuration errors before investing time in deeper validation.
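A smoke-test stage can be a short script that requests a handful of endpoints on the green environment and fails the pipeline on any non-2xx response. The endpoint paths here are assumptions. The `fetch` parameter lets the check be unit-tested without a live server.

```python
import urllib.error
import urllib.request
from typing import Callable

def http_status(url: str, timeout: float = 5.0) -> int:
    """Return the HTTP status for a GET, or 0 if the request cannot complete."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.status
    except urllib.error.HTTPError as err:   # 4xx/5xx responses
        return err.code
    except OSError:                         # DNS failure, refused, timeout
        return 0

def smoke_test(base_url: str, paths: list,
               fetch: Callable[[str], int] = http_status) -> list:
    """Request each path on the green environment; return the paths that failed."""
    failures = []
    for path in paths:
        status = fetch(base_url.rstrip("/") + path)
        if not 200 <= status < 300:
            failures.append(path)
    return failures
```

For example, `smoke_test("http://green.internal.example:8080", ["/healthz", "/readyz"])` returns the failing paths. A non-empty result would stop the pipeline before deeper validation starts.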

Integration testing validates that the application correctly interacts with databases, message queues, caching layers, and external APIs. These tests should use production-like data volumes and realistic usage patterns to expose performance issues or resource constraints. Running integration tests in the green environment while blue serves production traffic provides the most realistic validation environment.

Performance testing in the green environment helps identify scalability issues before they impact users. Load testing tools like JMeter, Gatling, or Locust can simulate production traffic patterns against the green environment. Comparing performance metrics between blue and green environments highlights regressions that might not surface in functional testing.
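The blue-green comparison can be reduced to a percentile check on latency samples collected under the same load profile. This is a minimal sketch using Python's `statistics` module. The 10% tolerance is an arbitrary placeholder, and real comparisons usually also look at error rates and throughput.

```python
import statistics

def p95(samples: list) -> float:
    # quantiles(n=20) returns 19 cut points; the last one is the 95th percentile.
    return statistics.quantiles(samples, n=20)[-1]

def latency_regression(blue_ms: list, green_ms: list, tolerance: float = 0.10) -> bool:
    """True if green's p95 latency exceeds blue's by more than `tolerance`."""
    return p95(green_ms) > p95(blue_ms) * (1 + tolerance)
```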

Security scanning should be integrated into the deployment pipeline, with tools checking for vulnerabilities, configuration issues, and compliance violations. Dynamic application security testing (DAST) runs against the live green environment and finds runtime security issues that static analysis might miss. Failing the deployment when scans report findings above an agreed severity threshold keeps known vulnerabilities from reaching production.
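A pipeline gate for security findings can be sketched as a severity filter over the scanner's results. The finding format below is an assumption: a list of dicts with `severity` and `title` keys. Each scanner has its own report schema, so results would need to be normalized into this shape first.

```python
SEVERITY_ORDER = {"info": 0, "low": 1, "medium": 2, "high": 3, "critical": 4}

def blocking_findings(findings: list, threshold: str = "high") -> list:
    """Findings at or above `threshold`. Unknown severities fail closed."""
    limit = SEVERITY_ORDER[threshold]
    worst = max(SEVERITY_ORDER.values())
    return [
        f for f in findings
        if SEVERITY_ORDER.get(str(f.get("severity", "")).lower(), worst) >= limit
    ]

def security_gate(findings: list, threshold: str = "high") -> int:
    """Return a process exit code: 1 blocks the deployment, 0 lets it proceed."""
    blocking = blocking_findings(findings, threshold)
    for f in blocking:
        print(f"BLOCKING {str(f.get('severity', 'unknown')).upper()}: {f.get('title', 'untitled')}")
    return 1 if blocking else 0
```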

Rollback Procedures and Incident Response

The ability to roll back quickly is a primary benefit of blue-green deployments, but this capability requires preparation and practice. Documented rollback procedures should specify exactly how to revert to the blue environment, including commands to execute, people to notify, and metrics to monitor. These procedures should be tested regularly through game days or chaos engineering exercises.

Automated rollback triggers based on monitoring metrics can reduce mean time to recovery by eliminating manual decision-making during incidents. However, these triggers require careful tuning to avoid false positives. A common pattern uses multiple signals—for example, requiring both error rate increases and latency degradation before triggering automatic rollback.
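The multi-signal pattern can be sketched as a small state machine. A window counts as degraded only when both error rate and p95 latency exceed the blue baseline, and rollback fires only after several consecutive degraded windows. The factors, the error-rate floor, and the three-window requirement are placeholder values, not recommendations.

```python
from collections import deque
from dataclasses import dataclass

@dataclass
class Window:
    error_rate: float        # fraction of failed requests in the window, 0.0-1.0
    p95_latency_ms: float    # 95th-percentile latency in the window

class RollbackTrigger:
    """Fire only when error rate AND latency are both degraded versus the
    blue baseline for `required` consecutive observation windows."""

    def __init__(self, baseline: Window, error_factor: float = 2.0,
                 latency_factor: float = 1.5, min_error_rate: float = 0.01,
                 required: int = 3) -> None:
        self.baseline = baseline
        self.error_factor = error_factor
        self.latency_factor = latency_factor
        # Floor so a near-zero baseline does not turn every error into a spike.
        self.min_error_rate = min_error_rate
        self.recent: deque = deque(maxlen=required)

    def _degraded(self, w: Window) -> bool:
        error_limit = max(self.baseline.error_rate * self.error_factor, self.min_error_rate)
        errors_up = w.error_rate > error_limit
        latency_up = w.p95_latency_ms > self.baseline.p95_latency_ms * self.latency_factor
        return errors_up and latency_up

    def observe(self, w: Window) -> bool:
        """Record one window; return True when rollback should be triggered."""
        self.recent.append(self._degraded(w))
        return len(self.recent) == self.recent.maxlen and all(self.recent)
```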

"We practice our rollback procedures monthly through simulated incidents. This practice has reduced our actual incident response time by 70% and given our teams confidence that they can quickly recover from any deployment issue."

Maintaining deployment history and audit logs provides crucial context during incident response. Teams should know exactly what changed between the blue and green environments, who approved the switch, and what tests passed before traffic switched. This information accelerates troubleshooting and helps teams understand whether a rollback is necessary or if the issue has other causes.
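One lightweight way to keep this history is to append one structured record per traffic switch. The field names below are hypothetical. JSON Lines is used here only because it is easy to grep and to ship into existing log tooling.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone

@dataclass
class SwitchRecord:
    service: str
    from_color: str
    to_color: str
    from_version: str
    to_version: str
    approved_by: str
    tests_passed: list
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat())

def append_record(path: str, record: SwitchRecord) -> None:
    """Append one JSON object per line (JSON Lines) to the audit log."""
    with open(path, "a", encoding="utf-8") as fh:
        fh.write(json.dumps(asdict(record)) + "\n")
```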

Documentation and Knowledge Sharing

Comprehensive documentation ensures that blue-green deployment knowledge spreads throughout the organization rather than remaining concentrated in a few experts. Runbooks should cover standard deployment procedures, common troubleshooting steps, and escalation paths. These living documents evolve based on lessons learned from each deployment.

Architecture decision records (ADRs) document why specific blue-green implementation choices were made, including alternatives considered and tradeoffs accepted. These records help future teams understand the reasoning behind current practices and make informed decisions about when to deviate from established patterns.

Internal training programs and workshops spread blue-green deployment expertise across teams. Hands-on labs where engineers practice deployments in safe environments build confidence and competence. Recording deployment demos and post-mortems creates a knowledge base that new team members can reference during onboarding.

Continuous Improvement Through Metrics

Measuring deployment success enables data-driven improvement of blue-green practices. Key metrics to track include deployment frequency, lead time for changes, mean time to recovery, and change failure rate—the four key metrics from the DORA research on DevOps performance. Tracking these metrics over time reveals whether blue-green deployments are delivering expected benefits.
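Given a list of deployment records, the four DORA metrics reduce to straightforward arithmetic. This sketch assumes hypothetical record fields. It also approximates the start of each failure with the traffic-switch time, which simplifies how incidents are usually timed.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median
from typing import Optional

@dataclass
class Deployment:
    committed_at: datetime                  # first commit in the change
    deployed_at: datetime                   # traffic switched to the new environment
    failed: bool                            # caused an incident or rollback
    restored_at: Optional[datetime] = None  # service restored, if it failed

def dora_metrics(deploys: list, period_days: int) -> dict:
    if not deploys or period_days <= 0:
        raise ValueError("need deployments and a positive period")
    restored = [d for d in deploys if d.failed and d.restored_at is not None]
    total_restore = sum((d.restored_at - d.deployed_at for d in restored), timedelta())
    return {
        "deploys_per_day": len(deploys) / period_days,
        "median_lead_time": median(d.deployed_at - d.committed_at for d in deploys),
        "change_failure_rate": sum(d.failed for d in deploys) / len(deploys),
        # Simplification: failure start is approximated by the switch time.
        "mean_time_to_restore": total_restore / len(restored) if restored else None,
    }
```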

Additional blue-green-specific metrics include:

- ⏱️ Time from green environment readiness to traffic switch

- 🎯 Percentage of deployments completed without rollback

- 💰 Infrastructure cost per deployment

- 🔄 Average time to rollback when issues occur

- 📊 Green environment test coverage and pass rate
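Most of these blue-green-specific metrics can be computed from per-switch events in the same way. The `SwitchEvent` fields are assumptions. Test coverage and pass rate normally come from the test tooling rather than from deployment records, so they are left out here.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class SwitchEvent:
    ready_to_switch_min: float       # green ready -> traffic switched
    rolled_back: bool
    rollback_min: Optional[float]    # issue detected -> traffic back on blue
    infra_cost: float                # infrastructure cost attributed to this deployment

def blue_green_metrics(events: list) -> dict:
    if not events:
        raise ValueError("no deployment events")
    n = len(events)
    rollback_times = [e.rollback_min for e in events
                      if e.rolled_back and e.rollback_min is not None]
    return {
        "avg_ready_to_switch_min": sum(e.ready_to_switch_min for e in events) / n,
        "pct_without_rollback": 100 * sum(not e.rolled_back for e in events) / n,
        "cost_per_deployment": sum(e.infra_cost for e in events) / n,
        "avg_rollback_min": (sum(rollback_times) / len(rollback_times)
                             if rollback_times else None),
    }
```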

Regular retrospectives focused on deployment experiences help teams identify friction points and improvement opportunities. These sessions should involve not just DevOps engineers but also developers, QA engineers, and product managers who participate in or are affected by deployments. Cross-functional perspectives reveal issues that might not be visible to any single team.

Advanced Patterns and Future Directions

As organizations mature in their blue-green deployment practices, they often explore advanced patterns that extend the basic concept or combine it with other deployment strategies. These sophisticated approaches address specific use cases or optimize for particular organizational constraints.

Multi-Region Blue-Green Deployments

Organizations operating globally face additional complexity when implementing blue-green deployments across multiple regions. The challenge is to coordinate deployments so that versions stay consistent across regions while keeping the ability to roll back a single region if issues arise. Sequential regional rollouts deploy to one region at a time and validate success before proceeding to the next region.

This approach uses the first region as a production canary—if issues arise, only users in that region are affected while other regions continue running the previous version. DNS-based traffic management or CDN configuration controls which regions serve which versions. The sequential approach extends overall deployment time but significantly reduces blast radius for deployment issues.
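The sequential rollout can be sketched as a loop that stops at the first region that fails validation. The three callables stand in for whatever your tooling uses to deploy, health-check, and switch a region back. They and the example region names are hypothetical.

```python
from typing import Callable

def sequential_rollout(regions: list,
                       deploy_and_switch: Callable[[str], None],
                       healthy: Callable[[str], bool],
                       switch_back: Callable[[str], None]) -> list:
    """Roll out region by region and stop at the first unhealthy region.

    Returns the regions left running the new version. Regions after a
    failure are never touched, so they keep serving the previous version.
    """
    completed = []
    for region in regions:
        deploy_and_switch(region)
        if not healthy(region):
            switch_back(region)   # regional rollback: only this region was exposed
            break
        completed.append(region)
    return completed
```

The function returns the regions still on the new version so that an operator can decide whether to roll those back as well.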

Synchronized multi-region switches represent the opposite approach, where all regions switch from blue to green simultaneously. This pattern maintains version consistency across regions but increases risk since issues affect all users globally. Organizations using this approach typically invest heavily in pre-deployment testing and monitoring to minimize the risk of widespread issues.
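A synchronized switch is usually implemented as all-or-nothing. If any region fails to switch, the regions already switched are reverted so versions stay consistent. In this sketch `switch_to` is a hypothetical per-region call. The switches are issued back to back rather than truly at the same instant.

```python
from typing import Callable

def synchronized_switch(regions: list, switch_to: Callable[[str, str], None],
                        new: str = "green", old: str = "blue") -> list:
    """Switch every region to `new`. If any switch fails, revert the regions
    already switched back to `old` and re-raise, keeping versions consistent."""
    switched = []
    try:
        for region in regions:
            switch_to(region, new)
            switched.append(region)
    except Exception:
        # Revert in reverse order. If a revert itself fails, that error
        # propagates with the original error chained as its context.
        for region in reversed(switched):
            switch_to(region, old)
        raise
    return switched
```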

Blue-Green for Microservices Architectures

Microservices architectures introduce unique considerations for blue-green deployments. With dozens or hundreds of services, coordinating blue-green switches across all services simultaneously becomes impractical. Instead, teams typically apply blue-green deployments at the individual service level, with each service maintaining its own blue and green environments.

This approach requires careful management of service-to-service communication and API compatibility. Services must maintain backward-compatible APIs to ensure that blue and green versions of different services can interoperate. Service mesh technologies like Istio or Linkerd help manage this complexity by providing sophisticated traffic routing and observability for microservices deployments.
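With Istio, per-service blue-green routing is typically expressed as a VirtualService that sends 100% of traffic to one subset. The sketch below builds that resource as a Python dict, which can be serialized to JSON for `kubectl apply`. It assumes a DestinationRule already defines `blue` and `green` subsets. The service and namespace names are placeholders.

```python
import json

def virtual_service(service: str, namespace: str, active: str) -> dict:
    """Istio VirtualService routing all traffic for `service` to the `active` subset."""
    if active not in ("blue", "green"):
        raise ValueError("active must be 'blue' or 'green'")
    standby = "green" if active == "blue" else "blue"
    return {
        "apiVersion": "networking.istio.io/v1beta1",
        "kind": "VirtualService",
        "metadata": {"name": service, "namespace": namespace},
        "spec": {
            "hosts": [service],
            "http": [{
                "route": [
                    {"destination": {"host": service, "subset": active}, "weight": 100},
                    {"destination": {"host": service, "subset": standby}, "weight": 0},
                ]
            }],
        },
    }

manifest_json = json.dumps(virtual_service("orders", "shop", "green"), indent=2)
```

Moving weight between the two routes in steps, rather than all at once, turns the same resource into a canary configuration.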

Contract testing becomes essential in microservices blue-green deployments. Tools like Pact verify that service interfaces remain compatible across versions, catching breaking changes before they cause production issues. These tests run as part of the deployment pipeline and block any service version whose contracts are incompatible with the consumer and provider versions already running in production.
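The core idea of consumer-driven contracts can be illustrated without any framework. The consumer records the response shape it relies on, and the pipeline checks the provider's green deployment against it. This is a deliberately simplified stand-in, not Pact's API. Pact adds matchers, interaction replay, and a broker that tracks which versions are deployed where.

```python
def contract_violations(expected: dict, actual: dict, path: str = "") -> list:
    """List fields the consumer expects that the provider response lacks,
    or whose type differs. The type check is strict: 12 and 12.0 differ."""
    problems = []
    for key, exp_value in expected.items():
        where = f"{path}.{key}" if path else key
        if key not in actual:
            problems.append(f"missing {where}")
        elif isinstance(exp_value, dict):
            if isinstance(actual[key], dict):
                problems.extend(contract_violations(exp_value, actual[key], where))
            else:
                problems.append(f"type mismatch at {where}")
        elif type(actual[key]) is not type(exp_value):
            problems.append(f"type mismatch at {where}")
    return problems
```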

Combining Blue-Green with Feature Flags

Feature flags (also called feature toggles) complement blue-green deployments by enabling runtime control over feature availability independent of deployment. This combination provides even finer-grained control over change rollout. Teams can deploy code to the green environment with new features disabled, switch traffic to green, and then gradually enable features for specific user segments.

This pattern separates deployment risk from feature release risk. If issues arise after switching to green, teams can quickly disable problematic features without rolling back the entire deployment. This capability is particularly valuable for large releases containing multiple features—teams can selectively enable stable features while keeping riskier features disabled for additional validation.
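Percentage rollouts and kill switches are usually implemented by hashing a user identifier into a stable bucket. The sketch below is a hypothetical in-house evaluator meant only to show the mechanism. Platforms such as LaunchDarkly or Unleash provide this through their own SDKs and APIs.

```python
import hashlib

def bucket(flag: str, user_id: str) -> int:
    """Deterministically map a user to a bucket 0-99 for a given flag."""
    digest = hashlib.sha256(f"{flag}:{user_id}".encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % 100

def is_enabled(flag: str, user_id: str, rollout_percent: int,
               kill_switch: bool = False) -> bool:
    """Enable the flag for `rollout_percent`% of users unless the kill switch is on."""
    if kill_switch:
        return False
    return bucket(flag, user_id) < rollout_percent
```

The bucket depends only on the flag name and user ID. A user who sees the feature at 10% therefore still sees it at 50%, and turning on the kill switch disables the feature for everyone without touching the deployment.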

Feature flag platforms like LaunchDarkly, Split, or open-source alternatives like Unleash provide the infrastructure for this pattern. These platforms offer targeting rules, gradual rollouts, and kill switches that integrate with monitoring systems. Combining blue-green deployments with feature flags gives teams two independent safety levers: an environment-level rollback and a feature-level kill switch.

Emerging Trends and Technologies

The deployment landscape continues evolving with new technologies and patterns emerging. Progressive delivery represents an umbrella term for advanced deployment strategies that combine blue-green, canary, and feature flag approaches. Progressive delivery platforms provide unified control planes for managing these various strategies, with automation that adapts deployment speed based on observed metrics.

Serverless architectures introduce new possibilities for blue-green deployments. Platforms like AWS Lambda and Google Cloud Functions handle infrastructure provisioning automatically, and some provide built-in versioning and traffic splitting. AWS Lambda, for example, supports published versions and aliases that can be repointed or weighted across two versions. These capabilities make blue-green deployments simpler to implement in serverless contexts, though they introduce different challenges around cold starts and state management.
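On AWS Lambda, a blue-green cutover maps naturally onto aliases. Callers invoke an alias such as `live`, and the switch repoints it from one published version to another. This sketch assumes a boto3 Lambda client is passed in. The function and alias names are placeholders.

```python
def switch_alias(lambda_client, function_name: str, alias: str, new_version: str) -> dict:
    """Repoint `alias` at `new_version` in a single call (the blue-green cutover).

    An empty AdditionalVersionWeights map clears any weighted routing, so no
    traffic keeps flowing to an older version. To roll back, call this again
    with the previous version number.
    """
    return lambda_client.update_alias(
        FunctionName=function_name,
        Name=alias,
        FunctionVersion=new_version,
        RoutingConfig={"AdditionalVersionWeights": {}},
    )

# Example usage (requires AWS credentials):
#   import boto3
#   client = boto3.client("lambda")
#   version = client.publish_version(FunctionName="orders")["Version"]
#   switch_alias(client, "orders", "live", version)
```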

GitOps is an increasingly common approach for managing blue-green deployments in Kubernetes environments. By treating Git as the single source of truth for infrastructure and application state, GitOps provides natural versioning, rollback capabilities, and audit trails. Tools like Argo CD and Flux sync that declarative state from Git to the cluster. Companion controllers such as Argo Rollouts and Flagger implement strategies including blue-green as declarative configuration stored alongside it.
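As one concrete example, Argo Rollouts (a Kubernetes controller from the Argo project) models blue-green as a `Rollout` resource with an active Service and a preview Service. The sketch builds such a manifest as a Python dict, to be committed to Git as JSON. The Service names and image are placeholders, and both Services must already exist.

```python
import json

def rollout_manifest(app: str, image: str, replicas: int = 3) -> dict:
    """Argo Rollouts resource using the blueGreen strategy."""
    return {
        "apiVersion": "argoproj.io/v1alpha1",
        "kind": "Rollout",
        "metadata": {"name": app},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": {"app": app}},
            "template": {
                "metadata": {"labels": {"app": app}},
                "spec": {"containers": [{"name": app, "image": image}]},
            },
            "strategy": {
                "blueGreen": {
                    "activeService": f"{app}-active",    # receives production traffic
                    "previewService": f"{app}-preview",  # reaches the new version for testing
                    "autoPromotionEnabled": False,       # wait for an explicit promotion
                }
            },
        },
    }

manifest = json.dumps(rollout_manifest("orders", "registry.example.com/orders:1.4.2"), indent=2)
```

With `autoPromotionEnabled` set to false, a commit that changes the image brings up the new version behind the preview Service and then waits for an explicit promotion. This keeps the final traffic switch a deliberate step.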

Frequently Asked Questions

What is the main difference between blue-green deployment and canary deployment?

Blue-green deployment switches all traffic from the old version to the new version at once, maintaining two complete environments. Canary deployment gradually shifts traffic from the old version to the new version, exposing a small percentage of users first and increasing over time. Blue-green provides instant rollback but requires double infrastructure, while canary reduces risk through gradual exposure but takes longer to complete. Many organizations combine both approaches, using blue-green infrastructure with canary traffic shifting patterns.

How do I handle database migrations in a blue-green deployment?

Database migrations in blue-green deployments require backward-compatible changes that work with both the old and new application versions. Use the expand-contract pattern: first add new schema elements without removing old ones, deploy the new application version, verify stability, then remove deprecated schema elements in a second migration. Alternatively, use separate databases for blue and green environments with replication, or implement brief read-only periods during the switch to ensure data consistency.
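The expand-contract pattern can be demonstrated end to end with SQLite. In this sketch a hypothetical `users.full_name` column is being replaced by `display_name`. The expand step adds and backfills the new column so both versions keep working. The contract step runs only after blue has been retired.

```python
import sqlite3

def expand(conn: sqlite3.Connection) -> None:
    """Expand: add the new column next to the old one and backfill it.
    Blue keeps reading full_name; green reads display_name."""
    conn.execute("ALTER TABLE users ADD COLUMN display_name TEXT")
    conn.execute("UPDATE users SET display_name = full_name WHERE display_name IS NULL")
    conn.commit()

def contract(conn: sqlite3.Connection) -> None:
    """Contract: run only after blue is retired and nothing reads full_name."""
    if sqlite3.sqlite_version_info < (3, 35, 0):
        raise RuntimeError("ALTER TABLE ... DROP COLUMN needs SQLite 3.35+")
    conn.execute("ALTER TABLE users DROP COLUMN full_name")
    conn.commit()
```

While blue is still live, it can insert rows that leave `display_name` empty. Until the contract step runs, green should fall back to `full_name`, or both versions should write both columns.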

Is blue-green deployment suitable for small teams or startups?

Blue-green deployment can work for small teams, especially when using cloud platforms with built-in support like AWS Elastic Beanstalk, Azure App Service deployment slots, or Kubernetes. These platforms reduce implementation complexity and automate much of the infrastructure management. However, small teams should consider whether the infrastructure cost of maintaining two environments is justified by their deployment frequency and downtime requirements. For teams deploying infrequently, simpler strategies like rolling updates might be more cost-effective.

What happens to user sessions during a blue-green switch?

User session handling depends on your architecture. The best approach uses externalized session storage (Redis, Memcached, or database) accessible to both blue and green environments, ensuring sessions persist across the switch. Alternatively, implement session draining where the load balancer maintains session affinity, routing users to their original environment until sessions expire naturally. For stateless applications using client-side session storage (JWTs), the switch has no impact on user sessions, provided both environments validate tokens with the same signing keys.

How long should I wait before destroying the blue environment after switching to green?

The waiting period depends on your confidence in the deployment and your monitoring capabilities. Common practices range from 30 minutes to 24 hours. Organizations with comprehensive monitoring and automated rollback triggers can use shorter periods (30 minutes to 2 hours). Those with less mature monitoring or deploying critical changes often wait longer (4-24 hours) to ensure no latent issues emerge. Consider your mean time to detect (MTTD) for production issues—wait at least 2-3 times that duration before destroying the blue environment.
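This rule of thumb can be written as a short calculation: multiply MTTD by a safety factor and clamp the result to the 30-minute to 24-hour range. The default factor of 3 is the upper end of the 2-3x guidance and can be adjusted.

```python
from datetime import timedelta

def blue_retention(mttd: timedelta, multiplier: float = 3.0,
                   floor: timedelta = timedelta(minutes=30),
                   ceiling: timedelta = timedelta(hours=24)) -> timedelta:
    """How long to keep blue after the switch: multiplier x MTTD, clamped."""
    return min(max(mttd * multiplier, floor), ceiling)
```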

Can I implement blue-green deployment without Kubernetes or cloud platforms?

Yes, blue-green deployment is possible on traditional infrastructure using load balancers (HAProxy, NGINX, F5) and configuration management tools (Ansible, Chef, Puppet). Maintain two sets of application servers, deploy updates to the inactive set, validate functionality, then reconfigure the load balancer to point to the updated servers. This approach requires more manual orchestration than cloud-native solutions but provides the same core benefits of zero-downtime deployments and instant rollback capability.
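In an NGINX-based setup, the switch can be as small as rewriting one `upstream` block and reloading. This sketch writes the file atomically so NGINX never reads a half-written config. The file path, upstream name, and server addresses are placeholders, and the sketch assumes the main NGINX config includes the file.

```python
import os
import tempfile

def render_upstream(name: str, servers: list) -> str:
    """Render an NGINX upstream block pointing at one environment's servers."""
    lines = [f"upstream {name} {{"]
    lines += [f"    server {addr};" for addr in servers]
    lines.append("}")
    return "\n".join(lines) + "\n"

def activate(conf_path: str, name: str, servers: list) -> None:
    """Atomically replace conf_path so NGINX never sees a partial file."""
    directory = os.path.dirname(os.path.abspath(conf_path))
    fd, tmp_path = tempfile.mkstemp(dir=directory, suffix=".tmp")
    try:
        with os.fdopen(fd, "w", encoding="utf-8") as fh:
            fh.write(render_upstream(name, servers))
        os.replace(tmp_path, conf_path)  # atomic rename on the same filesystem
    except BaseException:
        if os.path.exists(tmp_path):
            os.unlink(tmp_path)
        raise

# Hypothetical addresses for the two server pools.
BLUE = ["10.0.1.10:8080", "10.0.1.11:8080"]
GREEN = ["10.0.2.10:8080", "10.0.2.11:8080"]
```

After `activate(...)`, run `nginx -t` to validate the config and `nginx -s reload` to apply it. The reload is graceful, so in-flight requests finish on the old worker processes. The temporary file uses a `.tmp` suffix so that an `include conf.d/*.conf` directive will not pick it up.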

What are the minimum monitoring requirements for safe blue-green deployments?

At minimum, monitor application health checks, error rates, response times, and key business metrics during and after the switch. Implement synthetic monitoring to test critical user journeys in the green environment before switching traffic. Set up alerts for anomalies in these metrics with thresholds that trigger investigation or automatic rollback. More mature implementations add distributed tracing, user session tracking, and detailed performance metrics to quickly identify and diagnose issues that arise during deployments.

How does blue-green deployment work with microservices?

In microservices architectures, blue-green deployment typically applies at the individual service level rather than the entire system. Each service maintains its own blue and green environments, deploying independently. This requires maintaining backward-compatible APIs so blue and green versions of different services can interoperate. Service mesh technologies like Istio provide sophisticated traffic routing for microservices blue-green deployments. Contract testing validates API compatibility across service versions to prevent breaking changes.