How to Implement GitOps Workflow

Figure: GitOps workflow diagram. Developers push code to Git; CI builds images; declarative manifests in the repository drive automated CD to clusters; monitoring, alerts, and Git-based rollback.

Modern software development demands efficiency, reliability, and speed. Teams struggle with deployment inconsistencies, configuration drift, and the constant challenge of maintaining infrastructure across multiple environments. The traditional approach of manual deployments and scattered configuration management creates bottlenecks that slow down innovation and increase the risk of production failures. GitOps emerges as a transformative methodology that addresses these pain points by treating infrastructure and application deployments as code, stored in version control systems.

GitOps represents a paradigm shift in how organizations manage their infrastructure and application lifecycle. At its core, it's an operational framework that uses Git repositories as the single source of truth for declarative infrastructure and applications. This approach brings the same rigor, collaboration, and audit trail that developers enjoy with application code to the operations domain. From startups to enterprise organizations, teams are discovering that GitOps addresses deployment challenges from several angles, whether the priority is security, compliance, developer experience, or operational efficiency.

Throughout this comprehensive guide, you'll discover practical strategies for implementing GitOps workflows in your organization. You'll learn about the fundamental principles that make GitOps effective, explore the essential tools and platforms that enable this approach, and understand how to structure your repositories for maximum benefit. We'll examine real-world implementation patterns, discuss security considerations, and provide actionable steps for transitioning from traditional deployment methods to a GitOps-driven infrastructure. Whether you're managing Kubernetes clusters, cloud infrastructure, or hybrid environments, this guide equips you with the knowledge to successfully adopt GitOps practices.

Understanding the Core Principles of GitOps

The foundation of any successful GitOps implementation rests on understanding its core principles. These principles aren't arbitrary rules but rather carefully considered practices that emerged from years of operational experience across diverse organizations. When you embrace these principles, you create a system that's not only more reliable but also more transparent and collaborative.

The first principle centers on declarative configuration. Instead of writing imperative scripts that describe how to achieve a desired state, you define what the desired state should be. This distinction might seem subtle, but it's transformative. When your infrastructure is described declaratively, the system can continuously reconcile the actual state with the desired state, automatically correcting any drift. This approach largely removes the "it works on my machine" problem, because every environment is built from the same reviewed definitions.

"The moment we moved to declarative configurations in Git, our deployment failures dropped by seventy percent. We stopped arguing about what changed and started focusing on what we wanted to achieve."

Version control serves as the second pillar of GitOps. Every change to your infrastructure and applications must be committed to a Git repository. This creates an immutable audit trail that answers critical questions: Who made the change? When was it made? Why was it necessary? What was the previous state? This level of transparency transforms troubleshooting from detective work into a straightforward review of commit history. Version control also enables powerful collaboration features like pull requests, code reviews, and automated testing before changes reach production.

The third principle involves automated synchronization. Once your desired state is defined in Git, specialized tools continuously monitor these repositories and automatically apply changes to your infrastructure. This automation removes most manual steps, reduces human error, and dramatically speeds up deployment cycles. With self-healing enabled, the system also corrects itself: if someone manually modifies infrastructure outside of Git, the automation detects this drift and reverts to the declared state.
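The reconciliation idea behind these three principles can be sketched in a few lines of Python. This is only an illustration under simplifying assumptions: the desired and actual states are plain dictionaries, and the names `plan_reconcile` and `reconcile` are hypothetical rather than part of any GitOps tool.

```python
from typing import Dict, List, Tuple

State = Dict[str, dict]  # resource name -> spec (simplified stand-in for manifests)


def plan_reconcile(desired: State, actual: State) -> List[Tuple[str, str]]:
    """Return the (action, resource) steps needed to move actual to desired."""
    steps = []
    for name, spec in desired.items():
        if name not in actual:
            steps.append(("create", name))
        elif actual[name] != spec:
            steps.append(("update", name))  # drift: live spec differs from Git
    for name in actual:
        if name not in desired:
            steps.append(("delete", name))  # exists live but is not declared
    return steps


def reconcile(desired: State, actual: State) -> State:
    """Apply the plan to an in-memory copy of the live state."""
    result = dict(actual)
    for action, name in plan_reconcile(desired, actual):
        if action == "delete":
            result.pop(name)
        else:
            result[name] = desired[name]
    return result


# Hypothetical example: someone scaled "web" by hand and left a debug pod behind.
desired = {"web": {"replicas": 3}, "api": {"replicas": 2}}
actual = {"web": {"replicas": 5}, "debug-pod": {"replicas": 1}}
plan = plan_reconcile(desired, actual)
print(plan)
```

Running the plan repeatedly is safe: once the live state matches the declared state, the plan is empty, which is what makes continuous reconciliation practical.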

| Principle | Traditional Approach | GitOps Approach | Key Benefit |
|---|---|---|---|
| Configuration Management | Imperative scripts and manual steps | Declarative definitions in Git | Predictable, reproducible deployments |
| Change Tracking | Documentation, tickets, tribal knowledge | Complete Git history with context | Full audit trail and easy rollbacks |
| Deployment Process | Manual execution or scheduled jobs | Continuous automated reconciliation | Reduced deployment time and errors |
| Access Control | Direct cluster/infrastructure access | Git-based permissions and reviews | Enhanced security and compliance |
| Disaster Recovery | Backup restoration and manual rebuild | Redeploy from Git repository | Rapid recovery with known-good state |

The Pull vs Push Deployment Model

A critical architectural decision in GitOps involves choosing between pull-based and push-based deployment models. Traditional CI/CD pipelines typically use a push model where the pipeline has credentials to access production infrastructure and pushes changes directly. GitOps favors a pull model where an agent running inside your infrastructure continuously pulls the desired state from Git and applies changes.

The pull model offers significant security advantages. Your CI/CD system never needs production credentials, reducing the attack surface considerably. If your CI/CD system is compromised, attackers cannot directly access your production environment. The agent inside your infrastructure only needs read access to your Git repository and write access to the local infrastructure it manages. This inversion of control fundamentally changes your security posture.

"Switching to a pull-based model meant our CI/CD pipeline no longer held the keys to the kingdom. The security team finally stopped having nightmares about pipeline compromises."

Establishing the Single Source of Truth

Git becomes your single source of truth, but this requires discipline and clear conventions. Everything that defines your system—application configurations, infrastructure definitions, policies, and even documentation—should live in Git. This doesn't mean everything goes into a single repository, but rather that Git repositories collectively represent the complete desired state of your systems.

Many organizations struggle with the question of repository structure. Should you use a monorepo containing everything, or should you split configurations across multiple repositories? The answer depends on your organization's size, team structure, and security requirements. Smaller teams often benefit from the simplicity of a monorepo, while larger organizations typically need multiple repositories to enforce proper access controls and enable teams to work independently.

Selecting and Configuring GitOps Tools

The GitOps ecosystem has matured significantly, offering several robust tools that implement GitOps principles. Your choice of tools depends on your infrastructure platform, team expertise, and specific requirements. While Kubernetes has become the most common platform for GitOps implementations, the principles apply equally to traditional infrastructure, cloud resources, and hybrid environments.

ArgoCD has emerged as one of the most popular GitOps tools for Kubernetes environments. It provides a powerful web interface that visualizes your applications, their health status, and synchronization state. ArgoCD excels at managing multiple clusters from a single control plane and offers automated sync policies and extensive customization options through hooks and resource health checks. Progressive delivery is typically added through the companion Argo Rollouts project rather than by ArgoCD itself.

Installing ArgoCD begins with deploying it to your Kubernetes cluster. The tool runs as a set of services within your cluster, continuously monitoring Git repositories for changes. Once installed, you define applications using custom resources that specify the Git repository location, target cluster, and synchronization policies. ArgoCD's application model supports various configuration management tools including plain Kubernetes manifests, Helm charts, Kustomize, and Jsonnet.
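As a rough illustration of what such an application definition contains, the sketch below builds an ArgoCD `Application` resource as a Python dictionary and prints it as JSON, which `kubectl` accepts alongside YAML. The repository URL, path, and application name are hypothetical, and the `argocd` namespace and `default` project assume a standard installation.

```python
import json


def argo_application(name, repo_url, path, namespace, auto_sync=True):
    """Build an ArgoCD Application manifest as a plain dict."""
    app = {
        "apiVersion": "argoproj.io/v1alpha1",
        "kind": "Application",
        # Assumes ArgoCD was installed into the conventional "argocd" namespace.
        "metadata": {"name": name, "namespace": "argocd"},
        "spec": {
            "project": "default",
            "source": {
                "repoURL": repo_url,
                "targetRevision": "HEAD",
                "path": path,
            },
            "destination": {
                # In-cluster API server address, i.e. the cluster ArgoCD runs in.
                "server": "https://kubernetes.default.svc",
                "namespace": namespace,
            },
        },
    }
    if auto_sync:
        # Conservative starting point: correct drift, but do not prune yet.
        app["spec"]["syncPolicy"] = {"automated": {"prune": False, "selfHeal": True}}
    return app


manifest = argo_application(
    name="guestbook",                                      # hypothetical app
    repo_url="https://example.com/org/deploy-config.git",  # hypothetical repo
    path="apps/guestbook/overlays/staging",
    namespace="guestbook",
)
print(json.dumps(manifest, indent=2))
```

In practice most teams write this resource directly in YAML and commit it to Git; generating it from code is mainly useful when many similar applications must be stamped out.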

🔧 Essential Tool Capabilities

- Multi-cluster management: Deploy and manage applications across development, staging, and production clusters from a unified interface. This capability is essential for organizations running multiple environments or serving different geographical regions.

- Automated drift detection: Continuously compare the actual state in your cluster with the desired state in Git. When differences are detected, the tool can either alert operators or automatically correct the drift based on configured policies.

- Progressive delivery support: Implement advanced deployment strategies like canary releases, blue-green deployments, and feature flags. These strategies reduce risk by gradually rolling out changes and providing quick rollback capabilities.

- RBAC and security integration: Integrate with existing identity providers and define granular permissions controlling who can deploy what to which environments. This integration ensures compliance with security policies and audit requirements.

- Observability and notifications: Connect with monitoring systems, send notifications to chat platforms, and provide detailed logs of all synchronization activities. These features keep teams informed and enable rapid response to issues.

"The visibility ArgoCD provided transformed our operations. We went from asking 'what's deployed?' to simply looking at the dashboard and seeing the entire state of our infrastructure at a glance."

Flux represents another mature GitOps solution, particularly favored for its lightweight architecture and GitOps Toolkit approach. Flux operates as a set of specialized controllers that handle different aspects of the GitOps workflow. The source controller manages Git repository access, the kustomize controller handles Kustomize-based deployments, and the helm controller manages Helm releases. This modular design allows teams to use only the components they need.

Flux particularly shines in scenarios requiring sophisticated automation and integration with other tools. Its image automation feature can detect new container image versions and automatically update Git repositories with the new versions, creating a complete CI/CD loop. The notification controller provides flexible alerting and webhook capabilities, enabling integration with external systems.

🎯 Tool Selection Criteria

- Team expertise and learning curve: Consider your team's familiarity with the tools' underlying technologies. ArgoCD's web interface may be more approachable for teams new to GitOps, while Flux's CLI-centric approach appeals to teams comfortable with command-line tools.

- Scale and complexity requirements: Evaluate how many clusters, applications, and teams you need to support. Some tools handle extreme scale better than others, and your choice should accommodate future growth.

- Integration ecosystem: Examine how well each tool integrates with your existing infrastructure, monitoring systems, and development workflows. Strong integrations reduce friction and increase adoption.

- Community and enterprise support: Consider the size and activity of the tool's community, availability of documentation, and options for commercial support if needed. A vibrant community provides valuable resources and ensures the tool's longevity.

- Feature alignment: Match tool capabilities with your specific needs. If you require advanced progressive delivery, ensure your chosen tool supports it natively or integrates well with specialized tools like Flagger.

Configuring Tool Behavior and Policies

After selecting your GitOps tool, proper configuration ensures it behaves according to your operational requirements. Synchronization policies determine how aggressively the tool applies changes from Git. Automated synchronization provides the fastest feedback loop but requires confidence in your testing processes. Manual synchronization with automated drift detection offers a middle ground, alerting teams to changes while requiring explicit approval for application.

Health checks define how the tool determines whether a deployment succeeded. Default health checks work well for standard Kubernetes resources, but custom applications often require custom health checks. These checks might verify that a database migration completed successfully, that an application is responding to health endpoints, or that a certain number of replicas are running and ready.

Pruning policies control whether the tool deletes resources that exist in the cluster but are no longer defined in Git. Enabling automatic pruning keeps your cluster clean and ensures Git truly represents the complete desired state. However, this requires careful consideration—accidentally removing a resource definition from Git would trigger deletion in the cluster. Many teams start with pruning disabled and enable it after gaining confidence in their processes.
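The pruning decision itself is a set difference, which the following sketch makes concrete. It is a simplified model, not any tool's implementation: resources are identified by name only, and the `protected` set is a hypothetical safeguard for resources that must never be deleted automatically.

```python
def prune_candidates(in_git, in_cluster, protected=frozenset()):
    """Names present in the cluster but absent from Git, minus protected ones."""
    return sorted(set(in_cluster) - set(in_git) - set(protected))


def apply_prune(in_git, in_cluster, prune_enabled, protected=frozenset()):
    """Return (remaining cluster resources, names flagged but not deleted)."""
    candidates = prune_candidates(in_git, in_cluster, protected)
    if prune_enabled:
        remaining = sorted(set(in_cluster) - set(candidates))
        return remaining, []
    # Pruning disabled: nothing is deleted, candidates are only reported.
    return sorted(in_cluster), candidates


# Hypothetical state: "old-cron" was removed from Git but still runs.
in_git = ["api", "web"]
in_cluster = ["api", "web", "old-cron", "kube-dns"]
protected = {"kube-dns"}

print(apply_prune(in_git, in_cluster, prune_enabled=False, protected=protected))
print(apply_prune(in_git, in_cluster, prune_enabled=True, protected=protected))
```

Running with pruning disabled first gives a report of what would be deleted, which is a low-risk way to build the confidence the paragraph above describes.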

| Configuration Aspect | Conservative Approach | Aggressive Approach | Recommended Starting Point |
|---|---|---|---|
| Sync Policy | Manual sync only | Automatic sync on every commit | Auto-sync with manual promotion to production |
| Pruning | Disabled, manual cleanup | Automatic pruning enabled | Enabled for non-production, manual for production |
| Self-Healing | Alert on drift, no correction | Immediate automatic correction | Auto-heal non-production, alert for production |
| Retry Strategy | Single attempt, manual intervention | Unlimited retries with backoff | Limited retries with exponential backoff |
| Notification | Email on failures only | Real-time notifications for all events | Chat notifications for failures and production syncs |
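The "limited retries with exponential backoff" strategy from the table can be sketched as follows. The function names and the specific base, factor, and cap values are illustrative assumptions; real tools expose their own settings for these.

```python
def backoff_delays(base_seconds, factor, max_seconds, max_retries):
    """Delays before each retry: base * factor**n, capped at max_seconds."""
    return [min(base_seconds * factor ** attempt, max_seconds)
            for attempt in range(max_retries)]


def sync_with_retries(sync, delays, sleep=lambda seconds: None):
    """Call sync() once, then once more after each delay, until it succeeds.

    sync is any callable returning True on success. sleep defaults to a no-op
    so the sketch runs instantly; pass time.sleep for real waiting.
    """
    attempts = 0
    for delay in [0] + list(delays):
        sleep(delay)
        attempts += 1
        if sync():
            return True, attempts
    return False, attempts


delays = backoff_delays(base_seconds=5, factor=2, max_seconds=60, max_retries=5)
print(delays)

# Hypothetical sync that fails twice before succeeding.
outcomes = iter([False, False, True])
result = sync_with_retries(lambda: next(outcomes), delays)
print(result)
```

Capping both the delay and the number of retries keeps a persistently failing sync from either hammering the cluster or waiting indefinitely before someone is notified.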

Structuring Repositories for GitOps Success

Repository structure significantly impacts the success of your GitOps implementation. A well-designed structure makes it easy to find configurations, understand dependencies, and maintain proper separation of concerns. Conversely, a poorly structured repository creates confusion, increases the risk of errors, and makes collaboration difficult.

The fundamental decision involves choosing between application repositories and environment repositories. Application repositories contain the source code and often include basic deployment manifests. Environment repositories contain environment-specific configurations for deploying applications. This separation enables different teams to own different aspects of the deployment process and allows for different promotion workflows.

"Separating application code from environment configurations was a game-changer. Developers could iterate on features without worrying about production configurations, and operations could manage infrastructure without touching application code."

📁 Repository Organization Patterns

- Monorepo pattern: All applications and environments live in a single repository with clear directory structures. This pattern works well for smaller organizations or tightly coupled applications. It simplifies dependency management and enables atomic changes across multiple applications. However, it requires careful access control to prevent unauthorized changes.

- Repo-per-application pattern: Each application has its own repository containing both code and deployment configurations. This pattern provides strong boundaries between applications and enables teams to work independently. It works best when applications are truly independent and don't require coordinated deployments.

- Repo-per-environment pattern: Separate repositories for each environment (development, staging, production) containing all application configurations for that environment. This pattern provides excellent access control and makes promotion workflows explicit. Changes flow through environments by copying or promoting configurations between repositories.

- Hybrid pattern: Application repositories contain base configurations, while environment repositories contain overlays and environment-specific settings. This pattern leverages tools like Kustomize to compose final configurations from multiple sources, balancing reusability with environment-specific customization.

Directory Structure Best Practices

Within your repositories, consistent directory structures help teams navigate and understand the codebase. A typical structure might separate base configurations from environment-specific overlays. The base directory contains common configurations that apply across all environments, while environment directories contain patches or overrides specific to each environment.
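To illustrate how a base and its overlays combine, here is a minimal sketch of a recursive merge in which overlay values win. It is a deliberately simplified analogy: tools such as Kustomize apply richer patch semantics (for example when merging lists), and the configuration keys and image name below are hypothetical.

```python
import copy


def apply_overlay(base, overlay):
    """Recursively merge overlay into a copy of base; overlay values win."""
    merged = copy.deepcopy(base)
    for key, value in overlay.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = apply_overlay(merged[key], value)
        else:
            # Scalars and lists are replaced wholesale in this sketch.
            merged[key] = copy.deepcopy(value)
    return merged


# Common settings shared by every environment (hypothetical values).
base = {
    "replicas": 1,
    "image": "registry.example.com/web:1.4.2",
    "resources": {"limits": {"cpu": "250m", "memory": "256Mi"}},
}

# Each overlay states only what differs from the base.
overlays = {
    "staging": {"replicas": 2},
    "production": {"replicas": 6, "resources": {"limits": {"memory": "1Gi"}}},
}

rendered = {env: apply_overlay(base, patch) for env, patch in overlays.items()}
print(rendered["production"])
```

Keeping overlays small makes environment differences easy to review: anything not mentioned in an overlay is guaranteed to match the base.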

Consider organizing by application and then by component rather than by resource type. Instead of having separate directories for deployments, services, and config maps, group all resources related to a specific component together. This organization makes it easier to understand what comprises a complete application and reduces the chance of forgetting to update related resources.

Documentation should live alongside configurations. A README file in each directory explaining the purpose of configurations, any special considerations, and links to related documentation helps onboard new team members and serves as a reference during troubleshooting. This documentation is particularly valuable for explaining non-obvious design decisions or complex configurations.

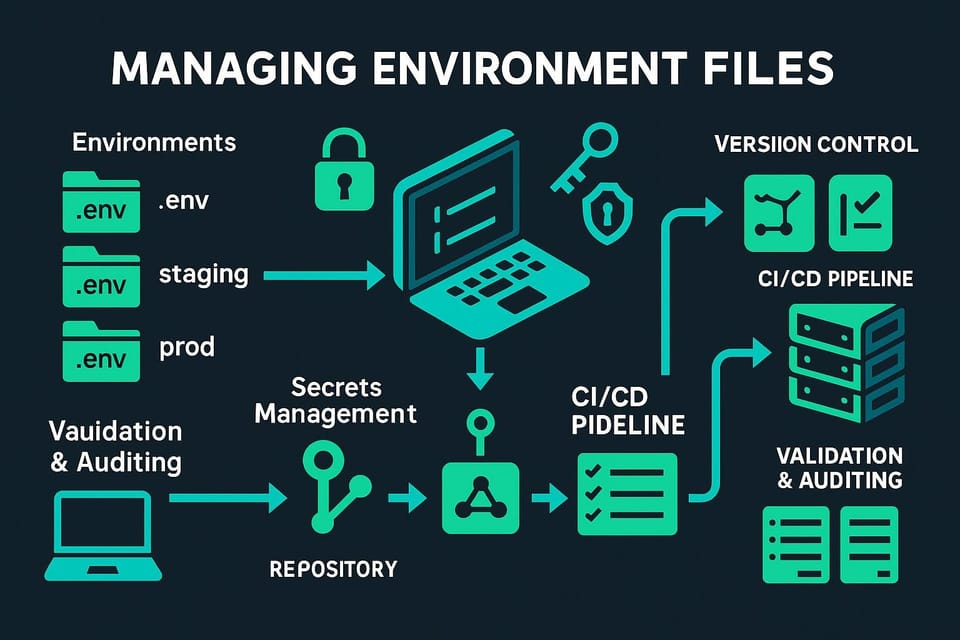

Managing Secrets in GitOps Workflows

Secrets management represents one of the most challenging aspects of GitOps implementations. The principle of storing everything in Git conflicts with the security requirement of never committing secrets to version control. Several approaches solve this problem, each with different tradeoffs.

Sealed Secrets encrypts secrets using asymmetric cryptography, allowing you to safely commit encrypted secrets to Git. A controller running in your cluster holds the private key and can decrypt these secrets. This approach keeps secrets in Git alongside other configurations, but requires careful key management and rotation procedures.

External secrets operators reference secrets stored in external systems like HashiCorp Vault, AWS Secrets Manager, or Azure Key Vault. Your Git repository contains only references to secrets, not the secrets themselves. The operator fetches actual secret values from the external system and creates Kubernetes secrets. This approach provides better separation of concerns and leverages enterprise secret management systems, but adds complexity and external dependencies.
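The reference-based approach can be sketched as below. The `FakeSecretStore` class is an in-memory stand-in for a real secret manager, and the reference layout (`secretKey`, `remoteKey`) is a simplified, hypothetical shape rather than any operator's actual schema.

```python
class FakeSecretStore:
    """In-memory stand-in for an external secret manager (illustration only)."""

    def __init__(self, values):
        self._values = dict(values)

    def read(self, key):
        return self._values[key]


def resolve_secret(reference, store):
    """Turn a Git-tracked reference into a Kubernetes-style Secret dict."""
    data = {item["secretKey"]: store.read(item["remoteKey"])
            for item in reference["data"]}
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": reference["name"]},
        "stringData": data,
    }


# This part lives in Git: it names the secret but never contains its value.
reference = {
    "name": "db-credentials",
    "data": [{"secretKey": "password", "remoteKey": "prod/db/password"}],
}

# This part lives only in the external system (hypothetical value).
store = FakeSecretStore({"prod/db/password": "s3cr3t"})

secret = resolve_secret(reference, store)
print(secret["metadata"]["name"], sorted(secret["stringData"]))
```

The important property is visible in the data flow: the committed reference can be reviewed and audited like any other configuration, while the sensitive value appears only at resolution time inside the cluster.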

"We tried committing encrypted secrets at first, but key rotation became a nightmare. Moving to an external secrets operator integrated with our existing Vault infrastructure was the right choice for our security requirements."

Secret generation creates secrets dynamically during deployment rather than storing them. For example, you might generate random passwords or API keys as part of the deployment process. This approach works well for internal secrets that don't need to be shared across systems but doesn't solve the problem of secrets that must be provided from external sources.
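A minimal sketch of deploy-time generation, using Python's standard `secrets` module for cryptographically strong randomness. The function name and the default length are assumptions; the generated value would be written to the cluster's secret store during deployment, never committed to Git.

```python
import secrets
import string


def generate_password(length=32):
    """Return a random alphanumeric password from a cryptographic RNG."""
    alphabet = string.ascii_letters + string.digits
    return "".join(secrets.choice(alphabet) for _ in range(length))


password = generate_password()
print(len(password))
```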

Implementing Progressive Delivery Strategies

GitOps enables sophisticated deployment strategies that reduce risk and enable rapid rollback when issues arise. Progressive delivery represents an evolution beyond simple rolling updates, providing fine-grained control over how changes reach users. These strategies are particularly valuable for high-traffic applications where even brief outages impact significant numbers of users.

Canary deployments release changes to a small subset of users before rolling out to everyone. The new version runs alongside the old version, receiving a small percentage of traffic. Monitoring and automated analysis determine whether the canary is healthy. If metrics remain within acceptable bounds, traffic gradually shifts to the new version. If problems are detected, traffic immediately routes back to the stable version.

Implementing canary deployments in a GitOps workflow involves defining traffic splitting rules in your Git repository. Tools like Flagger automate the canary process, progressively shifting traffic based on metrics from your monitoring system. The entire process—from initial deployment through traffic shifting to final promotion or rollback—is driven by configurations in Git and executed automatically.
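The traffic-shifting loop can be sketched as a small state machine. This is an illustrative model only, not Flagger's algorithm: the step size, maximum canary weight, and error-rate threshold are hypothetical parameters, and a single error-rate number stands in for real metric analysis.

```python
def next_canary_weight(current_weight, error_rate, step=10, max_weight=50,
                       max_error_rate=0.01):
    """Return (new_weight, status) for one analysis interval."""
    if error_rate > max_error_rate:
        return 0, "rolled_back"      # send all traffic back to stable
    if current_weight >= max_weight:
        return 100, "promoted"       # canary survived every step
    return min(current_weight + step, max_weight), "progressing"


def run_canary(error_rates, **kwargs):
    """Feed one observed error rate per interval; return (status, weights)."""
    weight, status, history = 0, "progressing", []
    for rate in error_rates:
        weight, status = next_canary_weight(weight, rate, **kwargs)
        history.append(weight)
        if status != "progressing":
            break
    return status, history


healthy = run_canary([0.001] * 6)
failing = run_canary([0.001, 0.002, 0.05, 0.001])
print(healthy)
print(failing)
```

In the failing run the third interval exceeds the threshold, so the canary weight drops to zero and the remaining intervals are never evaluated.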

🚀 Progressive Delivery Techniques

- Blue-green deployments: Maintain two complete production environments, only one serving live traffic. Deploy the new version to the inactive environment, verify it's working correctly, then switch traffic. This technique enables instant rollback by switching traffic back to the previous environment and works well for applications with complex startup procedures or extensive data migrations.

- Feature flags integration: Combine GitOps deployments with feature flag systems to decouple deployment from release. Deploy code with new features disabled, then enable features gradually through the feature flag system. This separation allows technical deployment processes to proceed independently from business release decisions.

- A/B testing deployments: Route different user segments to different versions to test features or performance improvements. Unlike canary deployments focused on risk mitigation, A/B testing focuses on gathering data to make informed decisions about which version performs better for business metrics.

- Shadow deployments: Deploy new versions that receive copies of production traffic without serving responses to users. This technique validates that new versions can handle production load and data patterns without risking user experience. It's particularly valuable for testing performance improvements or infrastructure changes.

Automated Rollback and Recovery

The true power of GitOps emerges during incidents. When something goes wrong, rolling back is as simple as reverting a Git commit. Your GitOps tool automatically applies the previous state, restoring your system to a known-good configuration. This simplicity reduces stress during incidents and enables faster recovery.

Automated rollback takes this further by detecting problems and reverting changes without human intervention. By integrating your GitOps tool with monitoring systems, you can define conditions that trigger automatic rollback. If error rates spike, response times increase beyond thresholds, or critical metrics fall outside acceptable ranges, the system automatically reverts to the previous version.
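The rollback trigger can be sketched as a threshold check that selects the previous revision. The metric names, thresholds, and commit identifiers below are hypothetical; in a real setup the chosen revision would typically drive a Git revert so that the repository remains the source of truth.

```python
def breached(metrics, thresholds):
    """Names of metrics whose observed value exceeds its threshold."""
    return sorted(name for name, limit in thresholds.items()
                  if metrics.get(name, 0) > limit)


def rollback_target(history, metrics, thresholds):
    """Return the revision to revert to, or None if no rollback is needed.

    history is ordered oldest to newest; the last entry is currently deployed.
    """
    if not breached(metrics, thresholds) or len(history) < 2:
        return None
    return history[-2]


thresholds = {"error_rate": 0.05, "p95_latency_ms": 800}  # hypothetical limits
history = ["a1b2c3", "d4e5f6", "0789ab"]                  # hypothetical commits

unhealthy = {"error_rate": 0.12, "p95_latency_ms": 400}
healthy = {"error_rate": 0.01, "p95_latency_ms": 350}

print(breached(unhealthy, thresholds), rollback_target(history, unhealthy, thresholds))
print(rollback_target(history, healthy, thresholds))
```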

"Automated rollback saved us during a late-night deployment. The new version had a subtle memory leak that would have taken hours to debug. Instead, the system detected rising memory usage, automatically rolled back, and paged us with details. We fixed the issue the next morning without any user impact."

Establishing Governance and Compliance

GitOps naturally supports governance and compliance requirements by creating an immutable audit trail of all changes. Every modification to your infrastructure has a corresponding Git commit with author information, timestamp, and description. This audit trail satisfies many regulatory requirements and simplifies compliance audits.

Implementing proper governance starts with branch protection rules. Require pull requests for changes to production configurations, mandate reviews from appropriate team members, and enforce status checks that must pass before merging. These rules ensure that changes receive proper scrutiny and testing before affecting production systems.

Policy-as-code tools like Open Policy Agent (OPA) integrate seamlessly with GitOps workflows. Define policies in code that specify what configurations are allowed, then automatically validate all changes against these policies. Policies might enforce security requirements like ensuring all containers run as non-root users, compliance requirements like mandatory labels for cost allocation, or operational requirements like resource limits on all deployments.
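OPA policies are written in its own language, Rego. To keep the examples in one language, the sketch below expresses the same three example policies as a plain Python validator instead. The required label names are hypothetical, and the non-root check looks only at container-level settings, whereas a real policy would also consider pod-level security context.

```python
def check_deployment(manifest, required_labels=("team", "cost-center")):
    """Return a list of human-readable policy violations for one Deployment."""
    violations = []
    labels = manifest.get("metadata", {}).get("labels", {})
    for label in required_labels:
        if label not in labels:
            violations.append(f"missing label: {label}")
    pod_spec = manifest.get("spec", {}).get("template", {}).get("spec", {})
    for container in pod_spec.get("containers", []):
        name = container.get("name", "<unnamed>")
        if not container.get("securityContext", {}).get("runAsNonRoot", False):
            violations.append(f"{name}: must set runAsNonRoot")
        if "limits" not in container.get("resources", {}):
            violations.append(f"{name}: missing resource limits")
    return violations


# Hypothetical non-compliant manifest, trimmed to the fields the checks read.
bad = {
    "metadata": {"labels": {"team": "payments"}},
    "spec": {"template": {"spec": {"containers": [{"name": "web"}]}}},
}

good = {
    "metadata": {"labels": {"team": "payments", "cost-center": "cc-42"}},
    "spec": {"template": {"spec": {"containers": [{
        "name": "web",
        "securityContext": {"runAsNonRoot": True},
        "resources": {"limits": {"cpu": "500m", "memory": "256Mi"}},
    }]}}},
}

print(check_deployment(bad))
print(check_deployment(good))
```

Run as a required status check on pull requests, a validator like this rejects a non-compliant change before it is ever merged, rather than after it reaches a cluster.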

🔒 Governance Mechanisms

- Approval workflows: Implement multi-stage approval processes where changes must be approved by security teams, compliance officers, or senior engineers before reaching production. GitOps tools can integrate with these workflows, blocking synchronization until approvals are granted.

- Scheduled deployment windows: Restrict when changes can be applied to production systems. Many organizations limit production deployments to business hours when full teams are available, or implement change freeze periods during critical business events.

- Separation of duties: Ensure that the person who writes code cannot be the sole approver for deploying that code to production. This separation reduces the risk of malicious or accidental harmful changes and is often required by compliance frameworks.

- Automated compliance scanning: Integrate security scanning and compliance checking tools into your GitOps workflow. Scan container images for vulnerabilities, check configurations against security benchmarks, and validate that deployments meet organizational standards before they reach production.

- Change advisory boards: For organizations with formal change management processes, integrate GitOps workflows with change advisory board (CAB) processes. Automatically create change requests, track approvals, and ensure all changes are properly documented and communicated.
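Two of the mechanisms above, scheduled deployment windows and separation of duties, reduce to simple checks. The sketch below assumes a weekday business-hours window, an explicit list of freeze dates, and usernames as plain strings; all of these are illustrative choices rather than settings of any particular tool.

```python
from datetime import datetime


def in_deploy_window(moment, start_hour=9, end_hour=17, freeze_dates=()):
    """True on weekdays between start_hour and end_hour, outside freeze dates."""
    if moment.date().isoformat() in freeze_dates:
        return False
    return moment.weekday() < 5 and start_hour <= moment.hour < end_hour


def approvals_ok(author, approvers, required=1):
    """True if enough people other than the author approved the change."""
    return len(set(approvers) - {author}) >= required


wednesday_morning = datetime(2024, 3, 6, 10, 30)
saturday_morning = datetime(2024, 3, 9, 10, 0)

print(in_deploy_window(wednesday_morning))
print(in_deploy_window(saturday_morning))
print(in_deploy_window(wednesday_morning, freeze_dates=("2024-03-06",)))
print(approvals_ok("alice", ["alice"]), approvals_ok("alice", ["alice", "bob"]))
```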

Monitoring and Observability in GitOps

Effective monitoring is essential for successful GitOps implementations. You need visibility into both the GitOps process itself and the applications it deploys. Monitoring the GitOps process means tracking synchronization status, detecting failures, and understanding the state of your deployments. Monitoring applications means collecting metrics, logs, and traces that reveal how your software is performing.

GitOps tools provide built-in metrics about their operation. These metrics reveal synchronization frequency, success rates, and drift detection. Exposing these metrics to your monitoring system enables dashboards showing the health of your GitOps infrastructure and alerting when synchronization fails or drift is detected.

Integration with observability platforms creates a complete picture of your system. When a deployment occurs, your monitoring system should automatically correlate this event with changes in application behavior. This correlation makes it immediately obvious whether a performance degradation or error rate increase relates to a recent deployment.

"Correlating deployments with metrics transformed our incident response. We stopped asking 'what changed?' and started asking 'how do we fix it?' because the correlation was automatic and obvious."

Key Metrics to Track

Deployment frequency measures how often you deploy changes to production. Higher deployment frequency generally indicates a mature development process and enables faster delivery of features and fixes. GitOps makes it easy to track this metric since every deployment corresponds to a Git commit.

Lead time for changes measures the time from code commit to production deployment. GitOps typically reduces this lead time significantly by automating the deployment process. Tracking this metric helps identify bottlenecks in your development and deployment pipeline.

Mean time to recovery (MTTR) measures how quickly you can restore service after an incident. GitOps should dramatically reduce MTTR since rolling back is as simple as reverting a Git commit. If your MTTR remains high despite GitOps, it indicates problems with your monitoring, alerting, or incident response processes.

Change failure rate measures the percentage of deployments that cause production incidents. GitOps helps reduce this rate through better testing, automated validation, and progressive delivery strategies. However, the metric remains valuable for identifying areas where additional safeguards might be beneficial.
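The four metrics above can be computed from simple deployment and incident records, as in this sketch. The record fields (`committed_at`, `deployed_at`, `caused_incident`, `started_at`, `resolved_at`) are hypothetical, the sample data is invented for illustration, and the function assumes at least one deployment and one incident in the period.

```python
from datetime import datetime, timedelta
from statistics import mean


def delivery_metrics(deployments, incidents, period_days):
    """Summarize the four metrics from deployment and incident records."""
    lead_times = [d["deployed_at"] - d["committed_at"] for d in deployments]
    recoveries = [i["resolved_at"] - i["started_at"] for i in incidents]
    failures = sum(1 for d in deployments if d["caused_incident"])
    return {
        "deployments_per_day": len(deployments) / period_days,
        "lead_time_hours": mean(t.total_seconds() for t in lead_times) / 3600,
        "mttr_minutes": mean(t.total_seconds() for t in recoveries) / 60,
        "change_failure_rate": failures / len(deployments),
    }


t0 = datetime(2024, 5, 1, 9, 0)


def deployment(day, lead_hours, caused_incident=False):
    """Helper building one illustrative deployment record."""
    committed = t0 + timedelta(days=day)
    return {
        "committed_at": committed,
        "deployed_at": committed + timedelta(hours=lead_hours),
        "caused_incident": caused_incident,
    }


deployments = [deployment(0, 2), deployment(1, 4, True),
               deployment(2, 3), deployment(3, 3)]
incidents = [{
    "started_at": t0 + timedelta(days=1, hours=5),
    "resolved_at": t0 + timedelta(days=1, hours=5, minutes=30),
}]

metrics = delivery_metrics(deployments, incidents, period_days=4)
print(metrics)
```

Because every GitOps deployment corresponds to a commit, the deployment records can usually be derived from Git history plus the sync tool's own event log.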

Handling Multi-Cluster and Multi-Environment Scenarios

Most organizations operate multiple clusters and environments, creating complexity in GitOps implementations. You might have separate clusters for development, staging, and production, or multiple production clusters serving different regions or customers. Managing these scenarios requires careful planning and appropriate tooling.

The hub-and-spoke model uses a central management cluster running your GitOps tools, which then deploy to multiple target clusters. This model provides a single pane of glass for managing all your clusters but requires network connectivity from the management cluster to all target clusters. It works well for organizations with centralized operations teams.

The distributed model runs GitOps tools in each cluster, with each cluster managing itself. This model provides better isolation and resilience—if one cluster fails, others continue operating independently. However, it requires more careful configuration management to ensure consistency across clusters.

Environment Promotion Strategies

Promoting changes through environments requires deciding how configurations flow from development to production. The simplest approach uses long-lived branches, with each branch representing an environment. Changes merge from development branches to staging branches to production branches. This approach is intuitive but can lead to merge conflicts and makes it difficult to hotfix production.

A more sophisticated approach uses tags or releases to promote specific versions through environments. Development occurs on main branches, and when ready for promotion, you tag a release. Environment-specific repositories or directories reference these tags. Promoting to the next environment means updating the tag reference. This approach provides better control and makes the promotion process explicit.
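Tag-based promotion amounts to copying a pinned version from one environment to the next. The sketch below models the per-environment tag references as a dictionary; the environment order, application name, and version tags are hypothetical, and in practice the updated pins would be committed to the environment repository or directory.

```python
ENV_ORDER = ["development", "staging", "production"]


def promote(pins, app, to_env):
    """Copy the pinned tag for app from the preceding environment into to_env.

    Returns a new mapping; the input is left untouched so the change can be
    reviewed (for example as a pull request diff) before it is committed.
    """
    index = ENV_ORDER.index(to_env)
    if index == 0:
        raise ValueError("nothing precedes the first environment")
    from_env = ENV_ORDER[index - 1]
    updated = {env: dict(apps) for env, apps in pins.items()}
    updated[to_env][app] = pins[from_env][app]
    return updated


pins = {
    "development": {"web": "v1.5.0"},
    "staging": {"web": "v1.4.2"},
    "production": {"web": "v1.4.1"},
}

after_staging = promote(pins, "web", "staging")
print(after_staging)
```

Since a promotion changes exactly one reference, the resulting diff is a single line, which keeps reviews fast and the audit trail unambiguous.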

Some organizations implement pull request-based promotion where moving to the next environment requires creating a pull request that updates configurations. This pull request becomes the approval and documentation point for the promotion, ensuring proper review and providing a clear audit trail.

Disaster Recovery and Business Continuity

GitOps fundamentally changes disaster recovery strategies. Since your Git repository contains the complete desired state of your infrastructure, recovering from a catastrophic failure becomes a matter of deploying your GitOps tool and pointing it at your repository. The tool automatically recreates your entire infrastructure.

This approach requires ensuring your Git repositories themselves are properly backed up and highly available. Most organizations use hosted Git services like GitHub, GitLab, or Bitbucket, which provide their own redundancy and backup strategies. For organizations with strict data sovereignty requirements, self-hosted Git servers should implement proper backup and disaster recovery procedures.

Regular disaster recovery drills validate that your GitOps-based recovery procedures work as expected. These drills might involve creating a new cluster and verifying that it can be fully configured from Git, or simulating the loss of your GitOps tool and validating that you can redeploy it. Such drills build confidence and often reveal gaps in documentation or automation.

Backup Strategies Beyond Git

While Git contains your desired state, certain runtime data requires separate backup strategies. Persistent data in databases or object storage, secrets stored in external systems, and state maintained by applications all need appropriate backup procedures. GitOps should integrate with these backup systems, potentially defining backup policies as code in your Git repository.

Stateful applications present particular challenges. Your Git repository might define a database deployment, but the data within that database requires separate backup and restore procedures. Document these procedures clearly and automate them where possible. Consider defining backup schedules and retention policies in Git, using tools that can read these definitions and execute appropriate backup operations.
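As a rough sketch of what a retention policy defined in Git could look like once a backup tool has read it, the example below decides which backups fall outside the policy. The `RetentionPolicy` fields and the "keep Sunday backups" rule are assumptions chosen for illustration, not the format of any particular backup product.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class RetentionPolicy:
    """Hypothetical policy that would live as a file in the Git repository."""
    keep_daily_days: int     # keep every backup taken within this many days
    keep_weekly_weeks: int   # beyond that, keep Sunday backups for this many weeks


def backups_to_delete(backups: list[datetime], policy: RetentionPolicy,
                      now: datetime) -> list[datetime]:
    """Return the backup timestamps that fall outside the policy, oldest first."""
    daily_cutoff = now - timedelta(days=policy.keep_daily_days)
    weekly_cutoff = now - timedelta(weeks=policy.keep_weekly_weeks)
    expired = []
    for taken_at in backups:
        if taken_at >= daily_cutoff:
            continue   # inside the daily window
        if taken_at >= weekly_cutoff and taken_at.weekday() == 6:
            continue   # Sunday backup inside the weekly window
        expired.append(taken_at)
    return sorted(expired)


now = datetime(2024, 3, 31)
backups = [now - timedelta(days=d) for d in (1, 7, 10, 14, 40)]
policy = RetentionPolicy(keep_daily_days=7, keep_weekly_weeks=4)
for stamp in backups_to_delete(backups, policy, now):
    print("delete", stamp.date())
```

Because the policy is plain data, a change to retention goes through the same pull request review as any other configuration change.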

Migrating to GitOps from Traditional Workflows

Transitioning from traditional deployment methods to GitOps requires careful planning and a phased approach. Attempting to migrate everything at once typically leads to confusion and setbacks. Instead, start with a pilot project—choose a non-critical application or environment to implement GitOps, learn from the experience, and gradually expand.

Begin by documenting your current state. What applications are deployed? How are they currently deployed? What configurations exist? This documentation becomes the baseline for your GitOps implementation. Next, choose your GitOps tools and set up the basic infrastructure. Deploy the GitOps tool itself, configure access to Git repositories, and establish basic synchronization for a simple application.

The migration process involves converting existing deployment processes to declarative configurations stored in Git. This conversion often reveals inconsistencies between environments or undocumented manual steps. Document these discoveries and decide whether to preserve the existing behavior or use this opportunity to standardize.

Common Migration Challenges

Resistance to change often represents the biggest challenge. Team members comfortable with existing processes may resist adopting GitOps. Address this resistance through education, demonstrating the benefits of GitOps, and involving skeptics in the pilot project. When people see firsthand how GitOps simplifies their work, resistance typically transforms into enthusiasm.

Knowledge gaps can slow adoption. GitOps requires understanding Git workflows, declarative configuration, and often Kubernetes or other infrastructure platforms. Invest in training and documentation. Pair experienced team members with those learning GitOps. Create runbooks that guide people through common tasks.

"The hardest part of our GitOps migration wasn't the technology—it was getting everyone comfortable with the idea that Git was now the source of truth. Once that mental shift happened, everything else fell into place."

Technical debt in existing applications may complicate migration. Applications with hard-coded configurations, tight coupling to specific infrastructure, or complex deployment procedures require refactoring before they can benefit from GitOps. Prioritize these refactoring efforts based on the value GitOps would provide for each application.

Advanced GitOps Patterns and Techniques

As your GitOps implementation matures, advanced patterns enable even greater efficiency and capability. These patterns address complex scenarios and optimize workflows for specific use cases.

Application sets provide a way to template applications across multiple clusters or environments. Instead of duplicating configuration for each cluster, you define a template and parameters that vary between clusters. The GitOps tool generates actual application configurations from these templates. This pattern dramatically reduces duplication and makes it easy to ensure consistency across clusters.
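The templating idea can be illustrated with Python's standard `string.Template`. This is only a sketch of the concept, not the API of any GitOps tool; the field names, the repository path layout, and the cluster URLs are hypothetical.

```python
from string import Template

# One template, with the parts that vary per cluster left as placeholders.
APP_TEMPLATE = Template(
    "name: $app-$cluster\n"
    "destination: $server\n"
    "path: apps/$app/overlays/$cluster\n"
)


def generate_applications(app: str, clusters: list[dict[str, str]]) -> list[str]:
    """Render one application definition per cluster from the shared template."""
    return [
        APP_TEMPLATE.substitute(app=app, cluster=c["name"], server=c["server"])
        for c in clusters
    ]


clusters = [
    {"name": "staging", "server": "https://staging.example.internal"},
    {"name": "prod", "server": "https://prod.example.internal"},
]
for rendered in generate_applications("billing", clusters):
    print(rendered)
```

Adding a cluster then means adding one entry to the parameter list rather than copying a whole configuration.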

Progressive sync waves control the order in which resources are created or updated. Some resources must exist before others—for example, a namespace must exist before resources within that namespace can be created. Sync waves explicitly define this ordering, ensuring deployments proceed in the correct sequence.
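The ordering logic behind sync waves is simple to sketch: group resources by wave number and apply the groups from lowest to highest. The example below assumes each resource carries an optional integer `wave` that defaults to 0; the resource names are hypothetical, and a real tool would also wait for each wave to become healthy before starting the next.

```python
from itertools import groupby


def wave_of(resource: dict) -> int:
    """Resources without an explicit wave are assumed to belong to wave 0."""
    return resource.get("wave", 0)


def plan_waves(resources: list[dict]) -> list[list[str]]:
    """Return resource names grouped into waves, lowest wave first."""
    ordered = sorted(resources, key=lambda r: (wave_of(r), r["name"]))
    return [[r["name"] for r in group] for _, group in groupby(ordered, key=wave_of)]


resources = [
    {"name": "deployment/api", "wave": 1},
    {"name": "namespace/shop", "wave": -1},
    {"name": "configmap/api-settings"},
    {"name": "job/migrate", "wave": 0},
]
for step, names in enumerate(plan_waves(resources), start=1):
    print(step, names)
```

In Argo CD, for instance, the wave is set with the `argocd.argoproj.io/sync-wave` annotation on each resource.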

GitOps for Infrastructure as Code

While GitOps originated in the Kubernetes ecosystem, the principles apply equally to traditional infrastructure. Tools like Terraform Cloud, Pulumi, and Crossplane enable GitOps workflows for cloud infrastructure, virtual machines, and network configurations. Your Git repository contains infrastructure definitions, and automation ensures the actual infrastructure matches these definitions.

Implementing GitOps for infrastructure requires addressing unique challenges. Infrastructure changes often take longer than application deployments and may have dependencies on external systems. State management becomes critical—tools must track the current state of infrastructure to make appropriate changes. Many teams implement separate GitOps workflows for infrastructure and applications, with infrastructure changes following more conservative synchronization policies.

Integrating with Service Mesh

Service mesh technologies like Istio or Linkerd provide advanced networking capabilities for microservices. GitOps can manage service mesh configurations, treating traffic routing rules, security policies, and observability settings as code stored in Git. This integration enables sophisticated traffic management strategies and ensures network policies remain consistent with application deployments.

When a new application version deploys, corresponding service mesh configurations can deploy simultaneously, enabling or adjusting traffic routing, updating security policies, or modifying observability settings. This coordination ensures that network behavior evolves alongside applications.

Cost Optimization Through GitOps

GitOps implementations can significantly impact infrastructure costs, both positively and negatively. Understanding these impacts enables you to optimize costs while maintaining the benefits of GitOps.

Resource right-sizing becomes easier with GitOps. When all resource requests and limits are defined in Git, you can analyze these definitions across all applications, identify over-provisioned resources, and make adjustments. The Git history shows how resource requirements have changed over time, helping predict future needs.
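A right-sizing pass over the requests declared in Git might look like the following sketch. It assumes you can export peak CPU usage per workload from your monitoring system; the workload names, the numbers, and the 1.5x headroom factor are illustrative assumptions, not recommendations.

```python
import math


def suggest_cpu_requests(requested: dict[str, int], peak_usage: dict[str, int],
                         headroom: float = 1.5) -> dict[str, int]:
    """Suggest lower CPU requests (in millicores) for over-provisioned workloads.

    A workload counts as over-provisioned when its request exceeds
    peak usage multiplied by `headroom`.
    """
    suggestions = {}
    for name, request in requested.items():
        peak = peak_usage.get(name)
        if peak is None:
            continue   # no usage data, so leave the request untouched
        target = math.ceil(peak * headroom)
        if request > target:
            suggestions[name] = target
    return suggestions


requested = {"api": 1000, "worker": 500, "cron": 200}   # values declared in Git
peak_usage = {"api": 300, "worker": 400}                # values from monitoring
print(suggest_cpu_requests(requested, peak_usage))      # {'api': 450}
```

The suggestions would then be proposed as a pull request, so the adjustment is reviewed and recorded like any other change.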

Environment management costs can be reduced through GitOps automation. Automatically spin up development environments when needed and tear them down when not in use. Define schedules in Git that control when non-production environments run, potentially saving significant costs by shutting down unused environments during nights and weekends.
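A schedule stored in Git could be evaluated by a small controller along these lines. The `UptimeSchedule` structure is a hypothetical format, and the sketch uses naive datetimes, so it assumes the schedule and the clock share one time zone.

```python
from dataclasses import dataclass
from datetime import datetime, time


@dataclass(frozen=True)
class UptimeSchedule:
    """Hypothetical schedule for a non-production environment."""
    start: time
    stop: time
    weekdays: frozenset[int]   # 0 = Monday ... 6 = Sunday


def should_run(schedule: UptimeSchedule, now: datetime) -> bool:
    """True when the environment should be up at `now`."""
    on_active_day = now.weekday() in schedule.weekdays
    in_hours = schedule.start <= now.time() < schedule.stop
    return on_active_day and in_hours


office_hours = UptimeSchedule(time(7, 0), time(19, 0), frozenset(range(5)))
print(should_run(office_hours, datetime(2024, 3, 27, 10, 30)))   # Wednesday morning
print(should_run(office_hours, datetime(2024, 3, 30, 10, 30)))   # Saturday morning
```

A controller would call `should_run` periodically and scale the environment down when it returns `False`.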

Multi-tenancy becomes more manageable with GitOps. Properly configured, a single cluster can safely host multiple teams or applications, reducing the overhead of managing separate clusters. GitOps ensures proper isolation through namespace configurations and resource quotas defined in Git.

Security Hardening for GitOps Workflows

While GitOps improves security in many ways, it also introduces new security considerations that require attention. A comprehensive security strategy addresses these concerns systematically.

Git repository security forms the foundation. Enable two-factor authentication for all users, use signed commits to verify commit authenticity, and implement branch protection rules that prevent unauthorized changes. Regularly audit repository access and remove permissions for users who no longer require them.

Supply chain security ensures that the artifacts deployed through GitOps are trustworthy. Scan container images for vulnerabilities, verify signatures on artifacts, and maintain an inventory of all dependencies. Tools like Sigstore and in-toto provide frameworks for establishing supply chain security.

🛡️ Security Best Practices

- Least privilege access: Grant the minimum permissions necessary for GitOps tools and users. The GitOps tool should have write access only to the specific resources it manages, not cluster-admin privileges. Users should have read access to production repositories but write access only through pull requests.

- Network segmentation: Isolate GitOps tools and managed infrastructure using network policies. Prevent unauthorized network access to GitOps components and limit what resources can communicate with each other.

- Audit logging: Enable comprehensive audit logging for all GitOps operations. Log who accessed what resources, what changes were made, and when they occurred. Regularly review these logs for suspicious activity.

- Secrets rotation: Implement regular rotation of secrets, credentials, and certificates. Automate this rotation where possible and ensure the rotation process integrates with your GitOps workflow.

- Vulnerability management: Continuously scan for vulnerabilities in your GitOps tools, deployed applications, and infrastructure. Establish processes for quickly addressing identified vulnerabilities and track remediation efforts.

Troubleshooting Common GitOps Issues

Even well-implemented GitOps workflows encounter issues. Understanding common problems and their solutions reduces downtime and frustration.

Synchronization failures occur when the GitOps tool cannot apply configurations from Git. These failures might result from invalid YAML syntax, resource conflicts, or insufficient permissions. GitOps tools typically provide detailed error messages explaining why synchronization failed. Review these messages carefully, fix the underlying issue in Git, and the tool will automatically retry.

Drift detection false positives happen when the tool reports drift that doesn't actually represent a problem. Some resources are intentionally modified outside of Git—for example, horizontal pod autoscalers modify replica counts based on load. Configure your GitOps tool to ignore these expected differences, either by excluding specific fields from comparison or by marking certain resources as exempt from drift detection.
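Excluding fields from comparison can be sketched as a recursive diff that skips a set of ignored paths. This is a simplified model rather than any tool's actual algorithm: it handles nested dictionaries only, and it compares just the fields declared in Git, so fields the cluster adds on its own (such as `status`) are not reported.

```python
def find_drift(desired: dict, actual: dict, ignored: set[str],
               prefix: str = "") -> list[str]:
    """Return dotted paths where `actual` differs from `desired`.

    Paths listed in `ignored` are skipped, along with everything beneath them.
    """
    drifted = []
    for key, want in desired.items():
        path = f"{prefix}{key}"
        if path in ignored:
            continue
        have = actual.get(key)
        if isinstance(want, dict) and isinstance(have, dict):
            drifted.extend(find_drift(want, have, ignored, prefix=f"{path}."))
        elif want != have:
            drifted.append(path)
    return drifted


desired = {"spec": {"replicas": 2, "template": {"image": "shop/api:1.4.0"}}}
actual = {
    "spec": {"replicas": 5, "template": {"image": "shop/api:1.4.0"}},
    "status": {"readyReplicas": 5},
}
print(find_drift(desired, actual, ignored=set()))               # ['spec.replicas']
print(find_drift(desired, actual, ignored={"spec.replicas"}))   # []
```

With `spec.replicas` ignored, the autoscaler can change the replica count without the workload being flagged as drifted.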

Performance issues can emerge as your GitOps implementation scales. Large repositories with many applications or frequent synchronization of large manifests can strain GitOps tools. Address performance issues by splitting large repositories, adjusting synchronization intervals, or increasing resources allocated to GitOps tools.

Debugging Techniques

When problems occur, systematic debugging identifies root causes quickly. Start by checking the GitOps tool's logs and status information. Most tools provide detailed information about synchronization attempts, including what resources were processed and any errors encountered.

Compare the desired state in Git with the actual state in your cluster. Tools like kubectl diff show differences between configurations, helping identify where actual state diverges from desired state. This comparison often reveals the source of problems.

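Test configurations in isolation before committing to Git. Use kubectl apply --dry-run=client for a local check, or --dry-run=server to validate against the cluster's API server without persisting anything. Schema validators such as kubeconform (kubeval, its predecessor, is no longer maintained) check resource definitions without needing a cluster. These tools catch many common errors before they reach your GitOps workflow.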
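For teams that want a very cheap check in a pre-commit hook, a structural test like the sketch below can run before any cluster-aware tool. It assumes manifests stored as JSON (which Kubernetes accepts alongside YAML) so that only the standard library is needed, and it checks just three required fields; it is not a substitute for schema validation or a dry run.

```python
import json

# Fields every Kubernetes manifest is expected to carry.
REQUIRED_PATHS = (("apiVersion",), ("kind",), ("metadata", "name"))


def manifest_errors(raw: str) -> list[str]:
    """Return a list of problems found in a JSON manifest (empty if none)."""
    try:
        manifest = json.loads(raw)
    except json.JSONDecodeError as exc:
        return [f"not valid JSON: {exc.msg}"]
    if not isinstance(manifest, dict):
        return ["manifest must be a JSON object"]
    errors = []
    for path in REQUIRED_PATHS:
        node = manifest
        for key in path:
            node = node.get(key) if isinstance(node, dict) else None
        if not isinstance(node, str) or not node:
            errors.append("missing or empty field: " + ".".join(path))
    return errors


good = '{"apiVersion": "v1", "kind": "ConfigMap", "metadata": {"name": "settings"}}'
bad = '{"apiVersion": "v1", "metadata": {}}'
print(manifest_errors(good))   # []
print(manifest_errors(bad))
```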

Building a GitOps Culture

Technical implementation represents only part of successful GitOps adoption. Building a culture that embraces GitOps principles ensures long-term success and maximizes benefits.

Documentation culture becomes even more important with GitOps. Since configurations live in Git, documentation should too. README files explaining repository structure, architectural decision records capturing why certain choices were made, and runbooks describing operational procedures should all live alongside configurations. This co-location ensures documentation stays current and remains easily accessible.

Collaboration practices evolve with GitOps. Pull requests become the primary mechanism for proposing and reviewing changes. Establish clear guidelines for pull request size, description quality, and review expectations. Encourage constructive feedback and knowledge sharing during reviews. Pull requests serve not just as approval mechanisms but as learning opportunities.

"GitOps transformed our team culture. Pull requests became teaching moments where senior engineers could share knowledge with junior engineers, and everyone could understand why changes were being made."

Continuous improvement should be embedded in your GitOps practice. Regularly review your workflows, identify pain points, and make incremental improvements. Track metrics about deployment frequency, lead time, and failure rates. Use these metrics to guide improvement efforts and demonstrate progress.
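The three metrics mentioned above can be computed from a simple deployment log. The sketch below assumes you can export, for each deployment, the merge time, the time it went live, and whether it failed; the `Deployment` record and the sample data are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime
from statistics import median


@dataclass(frozen=True)
class Deployment:
    committed_at: datetime   # when the change was merged in Git
    deployed_at: datetime    # when the GitOps tool finished applying it
    failed: bool             # whether the deployment caused a failure


def delivery_metrics(deployments: list[Deployment], window_days: int) -> dict[str, float]:
    """Summarize deployment frequency, lead time, and failure rate."""
    if not deployments:
        raise ValueError("no deployments in the window")
    lead_hours = [
        (d.deployed_at - d.committed_at).total_seconds() / 3600 for d in deployments
    ]
    return {
        "deployments_per_day": len(deployments) / window_days,
        "median_lead_time_hours": median(lead_hours),
        "change_failure_rate": sum(d.failed for d in deployments) / len(deployments),
    }


log = [
    Deployment(datetime(2024, 3, 25, 9), datetime(2024, 3, 25, 10), False),
    Deployment(datetime(2024, 3, 25, 13), datetime(2024, 3, 25, 15), False),
    Deployment(datetime(2024, 3, 26, 9), datetime(2024, 3, 26, 13), True),
    Deployment(datetime(2024, 3, 26, 14), datetime(2024, 3, 26, 17), False),
]
print(delivery_metrics(log, window_days=2))
```

Tracking these numbers over successive windows shows whether workflow changes are actually improving delivery.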

Training and Onboarding

Effective onboarding accelerates new team members' productivity and ensures they understand GitOps principles. Create a structured onboarding program that includes hands-on exercises with your GitOps tools, guided tours of your repository structure, and opportunities to make small changes under supervision.

Maintain a library of training resources including documentation, recorded demonstrations, and example configurations. Encourage new team members to improve these resources as they learn—fresh perspectives often identify gaps or confusing explanations that veterans no longer notice.

Pair programming and shadowing enable knowledge transfer. New team members should work alongside experienced practitioners, observing how they troubleshoot issues, make configuration changes, and respond to incidents. This experiential learning builds confidence and shares tacit knowledge that's difficult to document.

What is the main difference between GitOps and traditional CI/CD?

GitOps uses Git as the single source of truth for the desired state of both applications and infrastructure, with automated agents pulling that state from Git repositories. Traditional CI/CD typically pushes changes from the pipeline to production environments. GitOps improves security by removing the need for CI/CD systems to hold production credentials, records every change in Git history as an audit trail, and enables automatic drift correction when actual state diverges from desired state.

Can GitOps work with non-Kubernetes infrastructure?

Yes, GitOps principles apply to any infrastructure that can be described declaratively. Tools like Terraform Cloud, Pulumi, and Crossplane enable GitOps workflows for cloud resources, virtual machines, databases, and network configurations. While Kubernetes is the most common platform for GitOps implementations, the core principles of declarative configuration, version control, and automated synchronization work equally well for traditional infrastructure.

How do we handle secrets in a GitOps workflow?

Several approaches address secret management in GitOps. Sealed Secrets encrypts secrets using public-key cryptography, allowing safe storage in Git. The External Secrets Operator fetches secrets from external systems like HashiCorp Vault or cloud provider secret managers, so only references are stored in Git. Some organizations generate secrets dynamically during deployment. The best approach depends on your security requirements, existing infrastructure, and compliance needs.

What happens if our Git repository becomes unavailable?

GitOps tools typically cache the last known state from Git, allowing existing deployments to continue running even if Git becomes temporarily unavailable. New deployments cannot occur until Git access is restored, but this doesn't affect running applications. This is one reason why using reliable, highly available Git hosting services is important. For critical environments, some organizations maintain Git repository mirrors or implement failover strategies.

How long does it typically take to implement GitOps?

Implementation timelines vary significantly based on organization size, existing infrastructure complexity, and team experience. A pilot project with a single application can be operational in days or weeks. Organization-wide adoption typically takes months, involving migration of existing applications, team training, process refinement, and cultural adaptation. Starting with a small pilot, learning from the experience, and gradually expanding tends to be more successful than attempting a complete migration at once.

Does GitOps require Kubernetes expertise?

While many GitOps implementations use Kubernetes, the level of required expertise depends on your specific implementation. Teams new to Kubernetes should invest in training before implementing GitOps, as understanding the underlying platform is essential for effective troubleshooting and configuration. However, GitOps can actually simplify Kubernetes operations by providing a clear, version-controlled way to manage configurations and reducing the need for direct cluster access.

How do we handle emergency hotfixes with GitOps?

Emergency hotfixes follow an accelerated version of the normal GitOps workflow. Create a hotfix branch, make necessary changes, and merge through an expedited review process. Some organizations maintain separate "emergency" synchronization policies that apply changes more quickly than normal deployments. The key is that even emergency changes go through Git, maintaining the audit trail and ensuring the fix is properly documented and can be reviewed after the emergency is resolved.

What are the main costs associated with implementing GitOps?

Costs include GitOps tooling (though many options are open source), infrastructure to run GitOps components, training and time for team members to learn new workflows, and potential consulting or support services. However, GitOps often reduces costs over time through improved efficiency, reduced deployment errors, and better resource utilization. Many organizations find that GitOps pays for itself through operational improvements and reduced incident response time.