How to Implement Infrastructure as Code with Terraform

Diagram of workflow for implementing Infrastructure as Code with Terraform: plan, write modular configs, use version control, run tests, CI/CD deploy and provision cloud infra ops.


Modern cloud infrastructure management demands precision, repeatability, and speed that manual configuration simply cannot deliver. Organizations struggling with inconsistent environments, deployment delays, and configuration drift are discovering that traditional infrastructure management approaches create bottlenecks that slow innovation and increase operational risk. The shift toward treating infrastructure with the same rigor as application code has become essential for teams seeking competitive advantage in rapidly evolving digital landscapes.

Terraform represents a declarative approach to infrastructure management that enables teams to define, provision, and manage cloud resources through human-readable configuration files. This methodology transforms infrastructure from a collection of manually configured components into versioned, testable, and reproducible code artifacts. By bridging the gap between development and operations practices, this approach promises consistency across environments, reduced deployment times, and enhanced collaboration across technical teams.

Throughout this exploration, you'll discover practical implementation strategies spanning initial setup through advanced deployment patterns. We'll examine core concepts that form the foundation of successful infrastructure automation, walk through hands-on configuration examples, and address common challenges teams encounter during adoption. Whether you're managing a handful of cloud resources or orchestrating complex multi-cloud architectures, you'll gain actionable insights for transforming infrastructure management practices within your organization.

Understanding the Foundational Principles

Before diving into implementation details, grasping the philosophical underpinnings of infrastructure automation proves essential. The declarative paradigm represents a fundamental shift from imperative scripting approaches. Rather than specifying step-by-step instructions for resource creation, you describe the desired end state, allowing the orchestration engine to determine the necessary actions to achieve that configuration.

This state-based management model maintains a comprehensive record of your infrastructure's current configuration. Every resource under management gets tracked within a state file that serves as the single source of truth for your environment. This mechanism enables the system to calculate differences between your declared intentions and actual infrastructure, executing only the changes required to reconcile discrepancies.

"The transition from clicking through cloud consoles to declaring infrastructure in code files fundamentally changes how teams think about resource management and collaboration."

Provider plugins form the extensibility layer that connects your configuration declarations to actual cloud platforms and services. These specialized components translate your high-level resource definitions into API calls specific to each platform. With hundreds of providers available, you can manage resources across AWS, Azure, Google Cloud, Kubernetes, and countless other platforms using consistent syntax and workflows.

Resource dependencies create relationships between infrastructure components, ensuring proper creation and destruction sequences. The system automatically constructs a dependency graph by analyzing resource references within your configuration. When you reference one resource's attributes within another's definition, an implicit dependency forms, guaranteeing correct provisioning order without explicit sequencing instructions.
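A minimal sketch of an implicit dependency, assuming the AWS provider. The resource names and CIDR ranges here are illustrative and do not describe a real environment.

```hcl
# Hypothetical VPC; the CIDR range is an assumption for illustration.
resource "aws_vpc" "example" {
  cidr_block = "10.0.0.0/16"
}

# Reading aws_vpc.example.id creates an implicit dependency:
# the subnet is created after the VPC and destroyed before it.
resource "aws_subnet" "example" {
  vpc_id     = aws_vpc.example.id
  cidr_block = "10.0.1.0/24"
}
```

No ordering instruction appears anywhere in the sketch. The reference alone places the VPC ahead of the subnet in the dependency graph.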

The Configuration Language Structure

HashiCorp Configuration Language (HCL) provides the syntax for expressing infrastructure requirements. This domain-specific language balances human readability with machine parseability, enabling both manual authoring and programmatic generation. The block-based structure organizes configuration into logical groupings, with resource blocks defining infrastructure components, variable blocks accepting inputs, and output blocks exposing values for consumption by other configurations or external systems.

Type constraints and validation rules ensure configuration correctness before any infrastructure changes occur. Variables can specify expected data types, default values, and custom validation logic that enforces organizational standards. Because these checks run during validation and planning, before any infrastructure is touched, they catch errors early in the development cycle and keep invalid configurations from reaching production environments.

| Configuration Element | Purpose | Common Use Cases |
|---|---|---|
| Resource Blocks | Define infrastructure components to be created and managed | Virtual machines, networks, storage buckets, databases |
| Data Sources | Query existing infrastructure or external information | AMI lookups, availability zones, existing VPC details |
| Variables | Parameterize configurations for reusability | Environment names, instance sizes, region specifications |
| Outputs | Expose values for external consumption | Load balancer URLs, database endpoints, resource identifiers |
| Modules | Package reusable configuration components | Standard network architectures, security configurations |
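Three of the elements in the table can be sketched together in a few lines. This assumes the AWS provider; the default region and the output name are hypothetical choices, not requirements.

```hcl
# Variable: parameterizes the configuration (the default is illustrative).
variable "aws_region" {
  description = "Region in which resources are created"
  type        = string
  default     = "us-east-1"
}

# Data source: reads existing information without managing it.
data "aws_availability_zones" "available" {
  state = "available"
}

# Output: exposes a value for other configurations or external tools.
output "availability_zone_names" {
  description = "Names of the availability zones currently available"
  value       = data.aws_availability_zones.available.names
}
```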

Establishing Your Initial Environment

Beginning your infrastructure automation journey requires careful preparation of your development environment and cloud credentials. The installation process varies by operating system but generally involves downloading a single binary executable that requires no additional dependencies. Package managers like Homebrew, Chocolatey, and apt simplify installation on macOS, Windows, and Linux respectively, handling path configuration automatically.

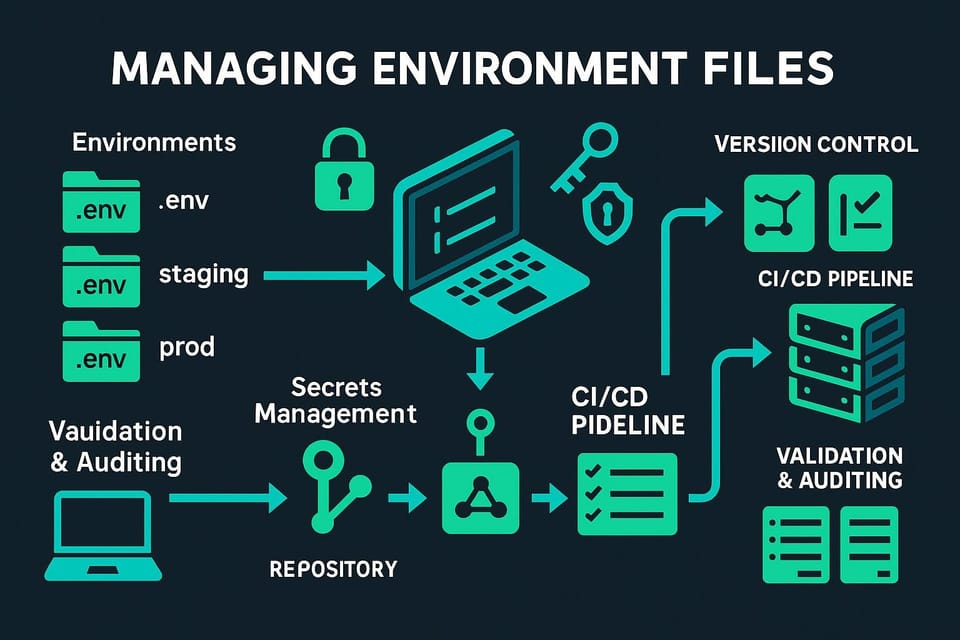

Cloud provider authentication represents the first configuration hurdle most practitioners encounter. Each platform offers multiple authentication methods, from environment variables and credential files to instance profiles and managed identities. Selecting the appropriate method depends on your execution environment—local development machines typically use credential files or environment variables, while CI/CD pipelines benefit from role-based authentication mechanisms that avoid storing static credentials.

Structuring Your First Configuration

Creating a well-organized directory structure establishes patterns that scale as your infrastructure grows. A typical project begins with a root directory containing provider configuration, followed by subdirectories organizing resources by function, environment, or architectural tier. Separating variable definitions, resource declarations, and output specifications into distinct files improves maintainability and enables team members to quickly locate specific configuration elements.

The provider configuration block establishes the connection between your configuration and the target cloud platform. This section specifies authentication details, default regions, and provider-specific settings that apply to all resources within the configuration. Version constraints within this block prevent unexpected behavior from provider updates, ensuring consistent execution across team members and deployment pipelines.

```hcl
terraform {
  required_providers {
    aws = {
      source  = "hashicorp/aws"
      version = "~> 5.0"
    }
  }

  required_version = ">= 1.6"
}

provider "aws" {
  region = var.aws_region

  default_tags {
    tags = {
      Environment = var.environment
      ManagedBy   = "Terraform"
      Project     = var.project_name
    }
  }
}
```

Initialization prepares your working directory for use by downloading provider plugins and configuring backend storage for state management. This setup step creates a hidden .terraform directory containing provider binaries and metadata. It normally runs once per working directory, but re-running initialization becomes necessary when adding new providers, changing provider versions, or modifying backend configuration.

"The first successful resource creation marks a pivotal moment when infrastructure management transitions from abstract concepts to tangible automated deployments."

Defining and Managing Cloud Resources

Resource blocks form the core of infrastructure definitions, each declaring a specific component to be created and managed. The block structure begins with the resource type—a provider-specific identifier like "aws_instance" or "azurerm_virtual_network"—followed by a local name used for referencing the resource elsewhere in your configuration. Arguments within the block specify the resource's properties, from required parameters like instance type and image ID to optional settings for monitoring, networking, and security.

Attribute references enable resources to share information, creating the dependency relationships that define your infrastructure's topology. When one resource references another's attributes, the system recognizes the dependency and ensures proper creation order. These references are expressions that combine the resource type, local name, and attribute being accessed, for example aws_security_group.application.id, forming a path to the desired value.

Working with Complex Data Structures

Many resources require nested configuration blocks for complex properties like network interfaces, disk attachments, or security rules. These nested blocks follow the same syntax as top-level resources but exist within the context of their parent resource. Understanding when to use nested blocks versus separate resource declarations impacts both configuration clarity and resource lifecycle management.

Collections and iteration constructs transform static configurations into dynamic templates that adapt to varying requirements. The count meta-argument creates multiple instances of a resource based on a numeric value, while for_each enables iteration over maps or sets, creating resources with distinct configurations for each element. These mechanisms eliminate configuration duplication and enable data-driven infrastructure definitions.

```hcl
resource "aws_instance" "application_server" {
  count = var.instance_count

  ami           = data.aws_ami.ubuntu.id
  instance_type = var.instance_type
  subnet_id     = aws_subnet.private[count.index % length(aws_subnet.private)].id

  vpc_security_group_ids = [
    aws_security_group.application.id
  ]

  tags = {
    Name = "${var.project_name}-app-${count.index + 1}"
    Role = "application"
  }

  user_data = templatefile("${path.module}/scripts/init.sh", {
    environment = var.environment
    app_version = var.application_version
  })
}
```

Data Sources for External Information

Data sources query existing infrastructure or external systems, incorporating information not directly managed by your configuration. These read-only references enable configurations to adapt to pre-existing resources like shared networking infrastructure or to discover dynamic values like the latest AMI version matching specific criteria. Data sources prove particularly valuable when integrating with resources created outside your automation or when building configurations that span multiple management boundaries.

Filtering and querying capabilities within data sources enable precise resource selection from potentially large result sets. Most data sources support filter blocks that narrow results based on tags, names, or other attributes. Combining multiple filters creates compound queries that identify exactly the resources your configuration requires, ensuring stability even as the underlying infrastructure evolves.
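As a sketch of filtering, the data source below looks up a recent Ubuntu image. The owner ID is Canonical's AWS account; the name pattern is an assumption for one particular Ubuntu release and should be adjusted to the image family you actually use.

```hcl
data "aws_ami" "ubuntu" {
  most_recent = true             # pick the newest image among the matches
  owners      = ["099720109477"] # Canonical's AWS account ID

  # Assumed name pattern for Ubuntu 22.04 server images.
  filter {
    name   = "name"
    values = ["ubuntu/images/hvm-ssd/ubuntu-jammy-22.04-amd64-server-*"]
  }

  # A second filter narrows the result set further.
  filter {
    name   = "virtualization-type"
    values = ["hvm"]
  }
}
```

A resource can then read data.aws_ami.ubuntu.id. Note that most_recent means the resolved image can change between runs, which may trigger instance replacement.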

State Management Strategies

The state file represents the most critical component of infrastructure automation, serving as the mapping between your configuration declarations and actual cloud resources. This JSON-formatted file records resource identifiers, current attribute values, and metadata necessary for managing the infrastructure lifecycle. Understanding state management mechanics proves essential for avoiding data loss, preventing conflicts in team environments, and recovering from unexpected failures.

"Proper state management separates successful infrastructure automation from configurations that create duplicate resources and management chaos."

Local state storage suffices for individual experimentation and learning but introduces significant limitations in team environments. The state file residing on a single developer's machine creates a single point of failure and prevents concurrent operations by multiple team members. Transitioning to remote state storage becomes necessary as soon as multiple people begin collaborating on infrastructure management.

Remote State Configuration

Backend configuration directs state storage to remote locations that support locking, versioning, and team access. Cloud object storage services like AWS S3, Azure Blob Storage, and Google Cloud Storage provide durable, accessible storage with encryption and access control capabilities. Configuring a backend involves specifying the storage location, authentication method, and locking mechanism that prevents simultaneous modifications by multiple operators.

State locking prevents race conditions when multiple operations attempt to modify infrastructure concurrently. The locking mechanism depends on the backend: the S3 backend has traditionally used a DynamoDB table, while the Azure Blob Storage backend relies on blob leases. In both cases only one operation proceeds at a time. This coordination proves critical in CI/CD environments where automated pipelines might trigger simultaneously or when team members work on different aspects of shared infrastructure.

| Backend Type | Key Features | Ideal Scenarios |
|---|---|---|
| S3 with DynamoDB | Versioning, encryption, distributed locking | AWS-centric organizations, multi-region teams |
| Azure Blob Storage | Integrated authentication, lease-based locking | Azure environments, Active Directory integration |
| Terraform Cloud | Built-in collaboration, run history, policy enforcement | Teams seeking managed solution, compliance requirements |
| Consul | Service discovery integration, distributed consensus | Existing Consul deployments, hybrid environments |
| PostgreSQL | Transactional consistency, familiar technology | Organizations with existing database infrastructure |
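The S3 row of the table might look like the following sketch. The bucket, key, and table names are hypothetical, and both the bucket and the lock table must already exist before initialization.

```hcl
terraform {
  backend "s3" {
    bucket         = "example-terraform-state"   # hypothetical bucket name
    key            = "network/terraform.tfstate" # path of this state within the bucket
    region         = "us-east-1"
    encrypt        = true                        # server-side encryption of the state object
    dynamodb_table = "example-terraform-locks"   # hypothetical lock table
  }
}
```

Backend blocks cannot reference variables, so these values are literals or are supplied at initialization time. Recent Terraform releases also offer an S3-native lock file option, so it is worth checking the backend documentation for the version in use.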

State File Security Considerations

State files contain sensitive information including resource identifiers, IP addresses, and occasionally secrets passed through variables. Protecting state files requires encryption at rest, access controls limiting who can read or modify state, and audit logging tracking all state access. Never commit state files to version control systems, as this exposes sensitive data and creates merge conflicts that corrupt state integrity.

State file versioning provides recovery mechanisms when operations go wrong or unintended changes occur. Most remote backends support versioning natively, maintaining historical copies of state that enable rollback to previous configurations. Regular state backups supplement versioning, providing additional protection against accidental deletion or corruption.
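For an S3-based backend, versioning and encryption are set on the bucket itself. The sketch below assumes the state bucket is managed from a separate bootstrap configuration, and the bucket name is hypothetical.

```hcl
resource "aws_s3_bucket" "state" {
  bucket = "example-terraform-state" # hypothetical name
}

# Keep historical copies of every state object for rollback.
resource "aws_s3_bucket_versioning" "state" {
  bucket = aws_s3_bucket.state.id

  versioning_configuration {
    status = "Enabled"
  }
}

# Encrypt state objects at rest.
resource "aws_s3_bucket_server_side_encryption_configuration" "state" {
  bucket = aws_s3_bucket.state.id

  rule {
    apply_server_side_encryption_by_default {
      sse_algorithm = "aws:kms"
    }
  }
}

# Block every form of public access to the bucket.
resource "aws_s3_bucket_public_access_block" "state" {
  bucket                  = aws_s3_bucket.state.id
  block_public_acls       = true
  block_public_policy     = true
  ignore_public_acls      = true
  restrict_public_buckets = true
}
```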

Execution Workflow and Operations

The standard workflow consists of four primary operations that form an iterative cycle: writing configuration, planning changes, applying modifications, and destroying resources when no longer needed. This deliberate, multi-step process builds confidence through preview capabilities and creates audit trails documenting all infrastructure modifications.

Planning generates an execution plan showing exactly what changes will occur without actually modifying infrastructure. This preview capability enables review and validation before committing to changes, catching potential issues and unexpected modifications before they impact production systems. The plan output categorizes changes as additions, modifications, or deletions, providing clear visibility into the operation's scope and impact.

The Planning Phase

Detailed plan output reveals not just which resources will change but specifically which attributes will be modified and their before-and-after values. Reading plans effectively requires understanding the symbols indicating change types: + for additions, - for deletions, ~ for in-place updates, and -/+ for resources that must be replaced. Resources showing replacement typically indicate attribute changes that cannot be applied to existing resources, necessitating destroy-then-create operations.

"The planning phase transforms infrastructure changes from potentially disruptive surprises into predictable, reviewable operations that build team confidence."

Plan files capture execution plans for later application, enabling separation between planning and execution phases. This capability proves valuable in approval workflows where infrastructure changes require review before implementation. Saved plans guarantee that reviewed changes match exactly what gets applied, preventing drift between approval and execution.

Applying Changes

Application executes the planned changes, making API calls to cloud providers in the correct dependency order. Progress updates stream to the console as each resource gets created, modified, or destroyed. The operation continues until all changes complete successfully or an error occurs, at which point the system halts to prevent cascading failures.

Partial application scenarios occur when errors interrupt the execution process. The state file reflects all successfully completed operations, while failed resources remain in their previous state. Understanding how to recover from partial applications—whether by fixing configuration errors and re-applying or manually addressing resource issues—proves essential for maintaining infrastructure integrity.

Targeted Operations

Resource targeting enables operations on specific resources rather than entire configurations. The -target flag restricts planning and application to designated resources and their dependencies, which is useful when addressing issues with particular components or when testing changes in isolation. However, targeted operations should be used judiciously, as they can create inconsistencies between state and configuration if not carefully managed.

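Refresh operations update state to match actual infrastructure without changing the infrastructure itself. This synchronization proves valuable when resources have been modified outside automation or when recovering from state inconsistencies. The refresh operation queries all managed resources, updating state with current attribute values and identifying resources that no longer exist. In current Terraform releases this is done with a refresh-only plan or apply (the -refresh-only option), which supersedes the older standalone refresh command and lets you review detected changes before they are written to state.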

Building Reusable Modules

Modules package related resources into reusable components, promoting consistency and reducing configuration duplication. A module consists of configuration files in a directory, with a well-defined interface of input variables and output values. This encapsulation enables teams to create standardized infrastructure patterns that can be instantiated multiple times with different parameters.
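A minimal sketch of that interface, using a hypothetical network module. The listing combines several files for brevity, the comments mark where each part would live, and all names and CIDR ranges are illustrative.

```hcl
# modules/network/variables.tf (input side of the interface)
variable "cidr_block" {
  description = "CIDR range for the VPC"
  type        = string
}

# modules/network/main.tf (resources encapsulated by the module)
resource "aws_vpc" "this" {
  cidr_block = var.cidr_block
}

# modules/network/outputs.tf (output side of the interface)
output "vpc_id" {
  description = "ID of the VPC created by this module"
  value       = aws_vpc.this.id
}

# Root configuration: the same module instantiated twice with different inputs.
module "network_dev" {
  source     = "./modules/network"
  cidr_block = "10.10.0.0/16"
}

module "network_staging" {
  source     = "./modules/network"
  cidr_block = "10.20.0.0/16"
}
```

Callers read results through the outputs, for example module.network_dev.vpc_id, and never reach into the module's internal resources directly.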

Module composition creates hierarchical structures where higher-level modules combine lower-level modules into complete architectures. This layering enables different abstraction levels—from low-level network components to complete application stacks—each appropriate for different audiences and use cases. Infrastructure architects might work with high-level modules representing entire environments, while platform engineers create the foundational modules those compositions rely upon.

Module Design Principles

Effective modules balance flexibility with simplicity, exposing configuration options for legitimate variations while providing sensible defaults for common scenarios. Over-parameterization creates complex interfaces that reduce usability, while insufficient flexibility forces users to fork and modify modules rather than consuming them directly. Finding this balance requires understanding how the module will be used and what variations represent genuine requirements versus premature optimization.

Input validation within modules enforces correctness at module boundaries, catching configuration errors before resource creation. Variable validation blocks specify constraints on acceptable values, from simple type checking to complex conditional logic. These guardrails prevent misconfigurations that would otherwise result in runtime errors or security issues.

```hcl
variable "environment" {
  description = "Deployment environment name"
  type        = string

  validation {
    condition     = contains(["dev", "staging", "prod"], var.environment)
    error_message = "Environment must be dev, staging, or prod."
  }
}

variable "instance_count" {
  description = "Number of instances to create"
  type        = number
  default     = 2

  validation {
    condition     = var.instance_count >= 1 && var.instance_count <= 10
    error_message = "Instance count must be between 1 and 10."
  }
}
```

Module Sources and Versioning

Modules can be sourced from local directories, version control repositories, module registries, or HTTP URLs. Each source type offers different trade-offs between convenience, versioning, and access control. Local modules simplify development and testing, while remote sources enable sharing across teams and projects with proper version management.

Version constraints for module dependencies prevent unexpected breaking changes from impacting infrastructure. Specifying version requirements ensures that module updates occur deliberately rather than automatically, giving teams control over when to adopt changes. Semantic versioning conventions communicate the nature of changes, helping consumers understand whether updates contain new features, bug fixes, or breaking changes.
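The sketch below contrasts two source types. The registry module is a widely used community module, though the inputs shown are only a small, assumed subset. The Git URL and tag are hypothetical.

```hcl
# Registry source: the version argument accepts constraint expressions.
module "vpc" {
  source  = "terraform-aws-modules/vpc/aws"
  version = "~> 5.0" # any 5.x release, never 6.0

  name = "example"
  cidr = "10.0.0.0/16"
}

# Git source: the version argument is not available here, so the
# revision is pinned with a ref query parameter instead.
module "network" {
  source = "git::https://example.com/infra/terraform-modules.git//network?ref=v1.2.0"
}
```

Pinning to a tag or a bounded version range means an upstream release reaches your infrastructure only when someone deliberately changes the constraint.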

"Well-designed modules transform infrastructure code from collections of resource definitions into composable building blocks that accelerate development and ensure consistency."

Advanced Configuration Patterns

Conditional resource creation enables configurations to adapt to different scenarios without maintaining separate configuration files. The count and for_each meta-arguments combined with conditional expressions create resources only when specific conditions are met. This capability proves valuable for optional components like monitoring dashboards that exist in production but not development environments, or backup configurations that vary by environment.
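Both forms can be sketched briefly. The variable names, dashboard content, and bucket map are hypothetical and chosen only to illustrate the pattern.

```hcl
variable "enable_dashboard" {
  description = "Whether to create the monitoring dashboard"
  type        = bool
  default     = false
}

# count of 0 or 1 turns the resource on or off.
resource "aws_cloudwatch_dashboard" "overview" {
  count = var.enable_dashboard ? 1 : 0

  dashboard_name = "overview"
  dashboard_body = jsonencode({
    widgets = [{
      type       = "text"
      x          = 0
      y          = 0
      width      = 6
      height     = 2
      properties = { markdown = "Overview" }
    }]
  })
}

variable "backup_buckets" {
  description = "Backup buckets keyed by bucket name"
  type        = map(object({ enabled = bool }))
  default     = {}
}

# for_each over a filtered map creates one bucket per enabled entry.
resource "aws_s3_bucket" "backup" {
  for_each = { for name, cfg in var.backup_buckets : name => cfg if cfg.enabled }

  bucket = each.key
}
```

A resource that uses count becomes a list, so the dashboard is addressed as aws_cloudwatch_dashboard.overview[0] and references must handle the case where it does not exist.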

Dynamic blocks generate repeating nested configuration blocks based on collection values, eliminating repetitive block declarations. These constructs iterate over lists or maps, creating a nested block for each element with attributes derived from the collection values. Dynamic blocks shine when configuring resources with variable numbers of similar sub-components, like security group rules or load balancer listeners.
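A minimal sketch for security group rules, assuming a list of ports supplied as a variable. The variable names, default ports, and open CIDR range are illustrative and not a recommendation.

```hcl
variable "vpc_id" {
  description = "ID of the VPC that will contain the security group"
  type        = string
}

variable "ingress_ports" {
  description = "TCP ports to open for inbound traffic"
  type        = list(number)
  default     = [80, 443]
}

resource "aws_security_group" "web" {
  name   = "web"
  vpc_id = var.vpc_id

  # One ingress block is generated per element of var.ingress_ports.
  dynamic "ingress" {
    for_each = var.ingress_ports

    content {
      from_port   = ingress.value
      to_port     = ingress.value
      protocol    = "tcp"
      cidr_blocks = ["0.0.0.0/0"]
    }
  }
}
```

Inside the content block, the iterator takes the name of the dynamic block (ingress here), and ingress.value holds the current element.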

Workspace Management

Workspaces provide isolated state environments within a single configuration, enabling management of multiple infrastructure instances from one codebase. Each workspace maintains separate state, allowing the same configuration to deploy development, staging, and production environments with different variable values. This approach simplifies configuration management but requires discipline to ensure workspace-specific values are properly parameterized rather than hardcoded.

The workspace selection mechanism determines which state file operations target. Commands to create, select, and delete workspaces enable switching between environments. The current workspace name can be referenced within configurations, enabling workspace-specific resource naming and conditional logic based on the active workspace.
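A sketch of workspace-aware settings, assuming workspaces named after environments. The instance sizes and the naming scheme are hypothetical.

```hcl
locals {
  # Per-workspace instance sizes, with a fallback for unlisted workspaces.
  instance_types = {
    default = "t3.micro"
    prod    = "t3.large"
  }

  # terraform.workspace holds the name of the currently selected workspace.
  instance_type = lookup(local.instance_types, terraform.workspace, local.instance_types["default"])
  name_prefix   = "app-${terraform.workspace}"
}

output "effective_settings" {
  description = "Settings resolved for the active workspace"
  value = {
    instance_type = local.instance_type
    name_prefix   = local.name_prefix
  }
}
```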

Import and Migration Strategies

Importing existing resources brings manually created infrastructure under automated management without destroying and recreating resources. The import command associates existing cloud resources with configuration blocks, populating state with the resource's current attributes. This operation requires writing configuration matching the existing resource before importing, ensuring state and configuration alignment.
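Terraform 1.5 and later also accept a declarative import block in configuration. The sketch below assumes an existing S3 bucket; the bucket name and resource address are hypothetical.

```hcl
# Declares that an existing bucket should be adopted at this address.
import {
  to = aws_s3_bucket.legacy_assets
  id = "example-legacy-assets" # for S3 buckets, the import ID is the bucket name
}

# Configuration matching the existing resource.
resource "aws_s3_bucket" "legacy_assets" {
  bucket = "example-legacy-assets"
}
```

The import then shows up in the plan like any other change, so it can be reviewed before state is modified.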

Migration scenarios arise when restructuring configurations, changing module boundaries, or adopting new organizational patterns. State manipulation commands enable moving resources between state files, renaming resources, and adjusting resource addresses. These operations require careful planning and testing, as incorrect state modifications can lead to resource deletion or duplication.
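For renames and module moves within a single state, Terraform 1.1 and later support moved blocks as a declarative alternative to state manipulation commands. The addresses below are hypothetical.

```hcl
# A resource renamed in configuration: state follows the new address
# instead of planning a destroy and create.
moved {
  from = aws_security_group.app
  to   = aws_security_group.application
}

# A resource relocated into a child module.
moved {
  from = aws_vpc.main
  to   = module.network.aws_vpc.this
}
```

Each block assumes the configuration already declares the resource at its new address. The plan then reports a move rather than a replacement.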

Testing Infrastructure Code

Validation and testing ensure configuration correctness before applying changes to real infrastructure. Built-in validation checks syntax correctness and basic semantic rules, catching obvious errors early. Format checking ensures consistent code style across team members, while linting tools identify potential issues and anti-patterns.

Automated testing frameworks enable verification of infrastructure behavior beyond basic validation. Tools like Terratest execute configurations in isolated test environments, verify resulting infrastructure meets requirements, and clean up test resources. These integration tests catch issues that static analysis cannot detect, like incorrect resource configurations or missing dependencies.
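Alongside external tools such as Terratest, Terraform 1.6 and later include a native test framework driven by .tftest.hcl files. The sketch below is a hypothetical test file. It assumes the configuration under test declares the environment and instance_count variables with the validation rules shown earlier, and that any other required variables are supplied separately.

```hcl
# tests/validation.tftest.hcl (hypothetical file name)

# An unknown environment name should be rejected by variable validation.
run "rejects_unknown_environment" {
  command = plan

  variables {
    environment = "qa"
  }

  expect_failures = [var.environment]
}

# An instance count outside the allowed range should be rejected as well.
run "rejects_excess_instance_count" {
  command = plan

  variables {
    environment    = "dev"
    instance_count = 50
  }

  expect_failures = [var.instance_count]
}
```

Because both runs use command = plan and expect validation to fail, they should not create infrastructure.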

Collaboration and Team Workflows

Version control integration forms the foundation of team collaboration, treating infrastructure code with the same rigor as application code. Committing configurations to Git or similar systems provides change history, enables code review, and creates a definitive source for infrastructure definitions. Branching strategies separate development work from stable configurations, while pull requests facilitate review and discussion before merging changes.

Code review processes catch errors, share knowledge, and ensure consistency across infrastructure definitions. Reviewers examine not just syntax correctness but design decisions, security implications, and alignment with organizational standards. Automated checks integrated into pull request workflows provide immediate feedback on formatting, validation, and policy compliance.

CI/CD Integration

Continuous integration pipelines automate validation, testing, and deployment of infrastructure changes. Pipeline stages progress from basic validation through planning to conditional application based on branch or approval status. This automation ensures all changes undergo consistent checking and reduces manual errors in deployment processes.

Pipeline design balances automation with safety, automatically applying changes to development environments while requiring approval for production deployments. Plan artifacts generated in pipeline runs provide reviewers with detailed change previews, enabling informed approval decisions. Audit trails created by pipeline execution document who approved changes and when they were applied.

Policy as Code

Policy enforcement mechanisms validate configurations against organizational requirements before allowing deployment. Policy frameworks evaluate planned changes, checking for security violations, cost implications, or deviations from architectural standards. These automated guardrails prevent misconfigurations from reaching production while providing clear feedback about policy violations.

"Effective collaboration transforms infrastructure management from individual heroics into systematic team practices that scale across organizations."

Sentinel and Open Policy Agent represent two popular policy frameworks that integrate with infrastructure automation workflows. These systems evaluate configurations and plans against policy rules written in domain-specific languages, returning pass/fail results with detailed violation information. Policy libraries can be shared across teams, encoding organizational knowledge and ensuring consistent enforcement.

Security and Compliance Considerations

Secrets management represents one of the most critical security challenges in infrastructure automation. Hardcoding credentials, API keys, or sensitive configuration values in configuration files creates security vulnerabilities and complicates rotation. Instead, sensitive values should be sourced from secure secret stores, passed through environment variables, or retrieved at runtime from services like AWS Secrets Manager or HashiCorp Vault.
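A sketch of reading a secret at runtime instead of hardcoding it, assuming a secret already stored in AWS Secrets Manager. The secret name and database settings are hypothetical.

```hcl
# Reads the current version of an existing secret.
data "aws_secretsmanager_secret_version" "db_password" {
  secret_id = "example/app/db-password" # hypothetical secret name
}

resource "aws_db_instance" "app" {
  identifier          = "example-app"
  engine              = "postgres"
  instance_class      = "db.t3.micro"
  allocated_storage   = 20
  username            = "app"
  password            = data.aws_secretsmanager_secret_version.db_password.secret_string
  skip_final_snapshot = true # convenient for a sketch, rarely appropriate in production
}
```

The password never appears in the configuration files, but it is still recorded in state once the data source has been read.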

Variable marking enables designation of sensitive values that should not appear in logs or console output. Marking variables as sensitive prevents their values from being displayed during planning and application, reducing the risk of accidental exposure. However, this marking only affects display—sensitive values still appear in state files, emphasizing the importance of state file security.
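A minimal sketch of sensitive marking; the variable and output names are illustrative.

```hcl
variable "db_password" {
  description = "Database administrator password"
  type        = string
  sensitive   = true # value is redacted in plan and apply output
}

# An output derived from a sensitive value must itself be marked
# sensitive, otherwise Terraform reports an error.
output "db_password" {
  value     = var.db_password
  sensitive = true
}
```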

Access Control and Authentication

Role-based access control limits who can view, modify, or apply infrastructure configurations. Cloud provider IAM policies control what resources can be created and modified, while backend access controls determine who can read or write state. Principle of least privilege should guide permission assignment, granting only the access necessary for specific roles and responsibilities.

Service accounts and assumed roles provide authentication for automated systems without requiring long-lived credentials. CI/CD pipelines should use temporary credentials obtained through OIDC federation or similar mechanisms, eliminating the need to store static credentials in pipeline configurations. This approach reduces credential exposure and simplifies rotation.
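On AWS, one way to express this is a provider block that assumes a role. The account ID, role name, and session name below are hypothetical placeholders.

```hcl
provider "aws" {
  region = "us-east-1"

  # Temporary credentials are obtained by assuming this role, so no
  # long-lived access keys need to be stored with the configuration.
  assume_role {
    role_arn     = "arn:aws:iam::123456789012:role/example-terraform-deploy"
    session_name = "terraform-ci"
  }
}
```

The identity that starts the run still needs permission to assume the role. In a pipeline that identity would typically come from the CI system's federated login rather than stored keys.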

Audit and Compliance

Change tracking and audit logging document all infrastructure modifications, supporting compliance requirements and incident investigation. Version control history provides detailed records of configuration changes, while backend audit logs track state access and modifications. Cloud provider audit services like CloudTrail complement these records with details of actual API calls made during infrastructure operations.

Compliance validation ensures infrastructure meets regulatory and organizational requirements. Policy enforcement can check for required encryption, network isolation, logging configuration, and other compliance controls. Automated compliance checking integrated into deployment pipelines prevents non-compliant infrastructure from being created.

"Security in infrastructure automation requires defense in depth, combining secrets management, access control, audit logging, and policy enforcement."

Troubleshooting and Problem Resolution

Debugging infrastructure issues requires understanding both the automation tool and the underlying cloud platforms. Error messages often originate from provider APIs rather than the automation tool itself, requiring familiarity with cloud platform error codes and meanings. Verbose logging modes provide additional detail about operations, revealing the sequence of API calls and responses that led to failures.

State inconsistencies arise when actual infrastructure diverges from state file records, typically due to manual changes or external automation. Detecting these inconsistencies requires refresh operations that query actual infrastructure and compare against state. Resolving inconsistencies might involve importing manually created resources, removing deleted resources from state, or reverting manual changes to match declared configuration.

Common Error Patterns

Authentication and permission errors represent the most frequent issues encountered, manifesting as access denied messages or missing credential errors. Verifying credential configuration, checking IAM policies, and confirming service account permissions typically resolve these issues. Temporary credential expiration can cause intermittent failures, particularly in long-running operations.

Resource dependency errors occur when the system cannot determine the correct creation order or when circular dependencies exist. Explicit dependency declarations using depends_on resolve ordering issues, while refactoring eliminates circular dependencies. Understanding the dependency graph helps identify why certain resources fail to create or destroy in expected sequences.
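A sketch of a dependency that Terraform cannot infer from references. The instance needs the role's policy in place at boot but never reads any attribute of the policy resource. All names are hypothetical.

```hcl
variable "ami_id" {
  description = "AMI used for the example instance"
  type        = string
}

resource "aws_iam_role" "app" {
  name = "example-app"
  assume_role_policy = jsonencode({
    Version = "2012-10-17"
    Statement = [{
      Action    = "sts:AssumeRole"
      Effect    = "Allow"
      Principal = { Service = "ec2.amazonaws.com" }
    }]
  })
}

resource "aws_iam_role_policy" "app" {
  name = "example-app-s3-read"
  role = aws_iam_role.app.id
  policy = jsonencode({
    Version = "2012-10-17"
    Statement = [{
      Action   = ["s3:GetObject"]
      Effect   = "Allow"
      Resource = "arn:aws:s3:::example-bucket/*"
    }]
  })
}

resource "aws_iam_instance_profile" "app" {
  name = "example-app"
  role = aws_iam_role.app.name
}

resource "aws_instance" "app" {
  ami                  = var.ami_id
  instance_type        = "t3.micro"
  iam_instance_profile = aws_iam_instance_profile.app.name

  # Hidden dependency: software on the instance uses the policy at boot,
  # so the policy must exist before the instance is created.
  depends_on = [aws_iam_role_policy.app]
}
```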

Recovery Procedures

State recovery procedures restore functionality when state files become corrupted or lost. Backend versioning enables restoration from historical state versions, while state backups provide additional recovery options. In worst-case scenarios, state can be rebuilt by importing existing resources, though this labor-intensive process emphasizes the importance of proper state protection.
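
For state stored in an S3 bucket, backend versioning amounts to enabling object versioning on that bucket. This is a minimal sketch, assuming the AWS provider at version 4 or later; the bucket name is hypothetical. A configuration like this would usually live apart from the infrastructure whose state it protects.

```hcl
resource "aws_s3_bucket" "state" {
  bucket = "example-terraform-state"
}

# Keeps every previous version of each state object so that an earlier
# state can be restored after corruption or an accidental overwrite.
resource "aws_s3_bucket_versioning" "state" {
  bucket = aws_s3_bucket.state.id

  versioning_configuration {
    status = "Enabled"
  }
}
```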

Rollback strategies enable reverting problematic changes when issues arise after application. Version control history provides the previous configuration, while state versioning enables restoration of previous state if needed. Testing rollback procedures in non-production environments builds confidence in recovery capabilities before emergencies occur.

Performance and Scale Considerations

Configuration performance impacts developer productivity and pipeline execution times. Large configurations with hundreds or thousands of resources can take significant time to plan and apply. Optimization strategies include modularization to reduce the scope of individual operations, parallelism tuning to control concurrent resource operations, and targeted operations when working with specific components.

State file size grows with infrastructure complexity, eventually impacting operation performance. Very large state files slow down state loading, locking, and network transfer. Splitting infrastructure across multiple state files—by environment, application, or architectural layer—keeps individual state files manageable and enables parallel operations by different teams.
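
Once state is split, one configuration can read the outputs of another instead of managing its resources. The following sketch assumes an S3 backend and a separate network configuration that exposes an output named private_subnet_id. The bucket, key, output name, and ami_id variable are all hypothetical.

```hcl
variable "ami_id" {
  type        = string
  description = "AMI for the application instance (hypothetical input)."
}

# Read-only view of the outputs stored in the network layer's state.
data "terraform_remote_state" "network" {
  backend = "s3"

  config = {
    bucket = "example-terraform-state"
    key    = "prod/network/terraform.tfstate"
    region = "us-east-1"
  }
}

resource "aws_instance" "app" {
  ami           = var.ami_id
  instance_type = "t3.micro"
  subnet_id     = data.terraform_remote_state.network.outputs.private_subnet_id
}
```

The application layer plans and applies against its own, smaller state file, while the network layer remains the only place where the subnet is managed.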

Resource Graph Optimization

Dependency graph complexity affects planning and application performance. Unnecessary dependencies slow operations by forcing sequential processing of resources that could be created concurrently. Reviewing and optimizing dependencies, removing unnecessary depends_on declarations, and restructuring configurations to reduce coupling improve performance.

Parallel execution settings control how many resources get processed simultaneously. The default parallelism of 10 concurrent operations balances speed with API rate limiting and resource dependencies, and the -parallelism flag on plan and apply adjusts it. Increasing parallelism speeds operations but can trigger rate limits or create resource contention. Tuning requires understanding your infrastructure's dependency patterns and provider API limits.

Provider Caching and Optimization

Provider plugin caching reduces initialization time when working with multiple configurations using the same providers. Shared plugin directories eliminate redundant downloads, particularly valuable in CI/CD environments where pipelines initialize frequently. Mirror configurations enable organizations to host provider plugins internally, reducing external dependencies and improving reliability.
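
Both settings belong in the CLI configuration file (for example ~/.terraformrc on Linux and macOS), not in a project's .tf files. The sketch below is illustrative only: the mirror hostname is hypothetical, and the cache directory must already exist before it is used.

```hcl
# Shared download cache reused by every working directory for this user.
plugin_cache_dir = "$HOME/.terraform.d/plugin-cache"

provider_installation {
  # Fetch HashiCorp-namespaced providers from an internal mirror (hypothetical URL).
  network_mirror {
    url     = "https://terraform-mirror.example.internal/providers/"
    include = ["registry.terraform.io/hashicorp/*"]
  }

  # Everything else is still installed directly from its origin registry.
  direct {
    exclude = ["registry.terraform.io/hashicorp/*"]
  }
}
```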

Adoption and Migration Strategies

Incremental adoption enables organizations to realize benefits while managing risk and learning curves. Starting with non-critical infrastructure or new projects provides opportunities to build expertise before automating business-critical systems. Pilot projects demonstrate value, identify challenges, and build organizational confidence in automation approaches.

Brownfield migration brings existing infrastructure under automation control without disrupting running systems. Import strategies enable gradual automation of manually created resources, while parallel management approaches maintain both manual and automated processes during transition periods. Complete migration often spans months or years, requiring sustained commitment and careful planning.
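
When many similar resources already exist, imports can be driven from a map so that each migration batch is a small, reviewable change. This is a sketch, assuming Terraform 1.7 or later (which allows for_each in import blocks) and the AWS provider; the bucket names are hypothetical.

```hcl
locals {
  # Existing, manually created buckets to bring under management.
  legacy_buckets = {
    logs    = "example-legacy-logs"
    backups = "example-legacy-backups"
  }
}

import {
  for_each = local.legacy_buckets
  to       = aws_s3_bucket.legacy[each.key]
  id       = each.value
}

resource "aws_s3_bucket" "legacy" {
  for_each = local.legacy_buckets
  bucket   = each.value
}
```

Adding an entry to the map extends the migration to another bucket. The plan should report imports rather than creations before anything is applied.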

Team Training and Enablement

Skill development programs ensure team members possess necessary knowledge for effective infrastructure automation. Training should cover both tool-specific skills and broader infrastructure-as-code principles. Hands-on labs in safe environments enable practice without risk, while mentoring programs pair experienced practitioners with those learning automation approaches.

Documentation and runbooks codify organizational knowledge, providing reference material for common tasks and troubleshooting procedures. Internal documentation should supplement official tool documentation with organization-specific patterns, approved modules, and operational procedures. Living documentation maintained alongside code ensures accuracy and relevance.

Organizational Change Management

Cultural shifts accompany technical automation adoption, requiring attention to organizational dynamics and resistance patterns. Infrastructure automation changes roles and workflows, potentially threatening individuals comfortable with existing approaches. Addressing concerns, demonstrating benefits, and involving stakeholders in automation design builds buy-in and eases transitions.

"Successful automation adoption requires equal attention to technical implementation and organizational change, recognizing that tools alone cannot transform infrastructure management practices."

Success metrics demonstrate automation value and guide continuous improvement. Metrics might track deployment frequency, lead time for infrastructure changes, change failure rates, or time to recover from incidents. Measuring and communicating these metrics builds support for continued automation investment and identifies areas needing improvement.

Emerging Patterns and Future Directions

GitOps workflows extend infrastructure automation with Git-based operational models where repository state drives infrastructure state. Automated reconciliation processes continuously compare declared configuration with actual infrastructure, automatically correcting drift. This approach provides clear audit trails, simplifies rollback, and enables declarative operational models.

Multi-cloud and hybrid architectures are becoming more prevalent as organizations seek to avoid vendor lock-in and optimize for specific workload requirements. Managing infrastructure across multiple platforms requires abstraction layers that handle provider differences while maintaining consistent operational models. Standardized modules and policies become even more critical in these complex environments.

Integration with Application Delivery

Closer integration between infrastructure and application deployment pipelines enables more sophisticated deployment patterns. Infrastructure changes can be coordinated with application updates, enabling blue-green deployments, canary releases, and progressive delivery strategies. This coordination requires careful design to maintain appropriate separation of concerns while enabling necessary coupling.

Service mesh and Kubernetes integration represent growing automation domains as containerized workloads proliferate. Managing cluster infrastructure, namespace configuration, and service mesh policies through infrastructure automation extends consistent operational models to these platforms. The declarative nature aligns well with Kubernetes' own declarative APIs.
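
Namespace configuration, for instance, can be declared with the Kubernetes provider. This is a minimal sketch that assumes a local kubeconfig file; the namespace name and label are hypothetical.

```hcl
terraform {
  required_providers {
    kubernetes = {
      source = "hashicorp/kubernetes"
    }
  }
}

provider "kubernetes" {
  config_path = "~/.kube/config" # assumes a local kubeconfig
}

resource "kubernetes_namespace_v1" "payments" {
  metadata {
    name = "payments"

    labels = {
      team = "payments"
    }
  }
}
```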

AI and Machine Learning Applications

Artificial intelligence applications in infrastructure automation range from configuration generation to anomaly detection and optimization recommendations. Language models can assist with configuration authoring, policy creation, and documentation generation. Predictive analytics might forecast capacity needs or identify cost optimization opportunities based on usage patterns.

Automated remediation systems respond to detected issues without human intervention, applying fixes for common problems and drift scenarios. These systems require careful design to prevent automated responses from causing cascading failures or making incorrect assumptions. Human oversight and approval workflows ensure appropriate guardrails on automated actions.

Frequently Asked Questions

What distinguishes infrastructure as code from traditional configuration management tools?

Infrastructure as code focuses on provisioning and managing cloud resources and infrastructure components, while configuration management tools primarily handle software installation and configuration on existing systems. The declarative approach describes desired end states rather than procedural steps, and the state management system tracks infrastructure across its lifecycle. Provisioning tools such as Terraform excel at cloud resource orchestration, while configuration management tools like Ansible or Puppet better suit application-level configuration tasks. Many organizations use both categories of tools in complementary ways.

How should teams handle state file access and security in production environments?

Production state files should be stored in remote backends with encryption at rest and in transit, and access should be controlled through IAM policies that limit who can read or modify state. State locking prevents concurrent modifications, while versioning enables recovery from accidental changes. Never commit state files to version control systems, and ensure backup procedures protect against data loss. Service accounts used by automation should have minimal necessary permissions, and human access should be logged and audited.
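
As one illustration, an S3 backend with encryption and locking might be configured as follows. The bucket, key, and table names are hypothetical, and the locking arguments available depend on the Terraform version, so the backend documentation for your release is the authority.

```hcl
terraform {
  backend "s3" {
    bucket  = "example-terraform-state"
    key     = "prod/terraform.tfstate"
    region  = "us-east-1"
    encrypt = true # server-side encryption of the state object

    # DynamoDB table used for state locking (hypothetical name).
    dynamodb_table = "example-terraform-locks"
  }
}
```

Access to the bucket and the lock table is then restricted with IAM policies outside this block.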

What strategies work best for managing infrastructure across multiple environments?

Organizations typically choose between workspace-based approaches using a single configuration with environment-specific variables, or directory-based structures with separate configurations per environment. Workspaces reduce duplication but require discipline around variable management, while separate directories provide clear isolation but increase maintenance overhead. Modules enable sharing common patterns across environments while allowing environment-specific customization. The choice depends on team size, infrastructure complexity, and organizational preferences around isolation versus consistency.
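
In the workspace-based approach, environment differences are usually looked up from the selected workspace name. This is a minimal sketch, assuming the AWS provider and workspaces named dev, staging, and prod. The instance sizes and the ami_id variable are hypothetical.

```hcl
variable "ami_id" {
  type        = string
  description = "AMI for the application instance (hypothetical input)."
}

locals {
  instance_type_by_env = {
    dev     = "t3.micro"
    staging = "t3.small"
    prod    = "t3.large"
  }

  # terraform.workspace is the name of the currently selected workspace.
  # Unknown workspaces fall back to the smallest size.
  instance_type = lookup(local.instance_type_by_env, terraform.workspace, "t3.micro")
}

resource "aws_instance" "app" {
  ami           = var.ami_id
  instance_type = local.instance_type

  tags = {
    Environment = terraform.workspace
  }
}
```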

How can teams safely test infrastructure changes before applying them to production?

Comprehensive testing strategies include syntax validation, plan review, policy checking, and deployment to non-production environments that mirror production architecture. Automated testing frameworks can provision test infrastructure, verify expected behavior, and clean up resources. Plan files enable review of exact changes before application, while targeted operations allow testing specific components in isolation. Progressive rollout strategies apply changes to subsets of infrastructure, monitoring for issues before full deployment.
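
Terraform's built-in test framework, available from version 1.6, is one way to automate such checks. The sketch below assumes a module that declares a variable named environment and a resource aws_s3_bucket.logs whose bucket argument is "example-logs-${var.environment}". The file path and all names are hypothetical.

```hcl
# tests/naming.tftest.hcl (hypothetical path), executed with: terraform test

variables {
  environment = "test"
}

run "bucket_name_includes_environment" {
  command = plan # evaluate the plan only; no infrastructure is created

  assert {
    condition     = aws_s3_bucket.logs.bucket == "example-logs-test"
    error_message = "Bucket name should end with the environment name."
  }
}
```

Plan-only runs are fast but still need provider credentials unless the provider is mocked. Runs that use apply create real resources and remove them when the test finishes.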

What approaches help manage the learning curve when adopting infrastructure automation?

Start with small, non-critical projects to build familiarity before automating business-critical infrastructure. Structured training combining conceptual learning with hands-on practice accelerates skill development, while mentoring pairs experienced practitioners with those learning. Documentation of organizational patterns and approved practices provides ongoing reference material. Community resources including forums, documentation, and example configurations supplement organizational knowledge. Patience, and the recognition that expertise develops over time, help manage expectations during the learning process.

How should organizations handle infrastructure drift when resources are modified outside automation?

Detect drift through regular refresh operations that compare state against actual infrastructure, identifying discrepancies. Address drift by either importing manual changes into configuration or reverting manual modifications to match declared state. Preventive measures include IAM policies restricting manual changes, monitoring for out-of-band modifications, and organizational policies requiring all changes through automation. Some drift is inevitable and acceptable, but systematic drift indicates process issues requiring attention.
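
Drift that is known and acceptable can be declared as such, so that it stops appearing in plans. This is a minimal sketch, assuming the AWS provider and an external tool that edits tags; the tool, the resource name, and the ami_id variable are hypothetical.

```hcl
variable "ami_id" {
  type        = string
  description = "AMI for the application instance (hypothetical input)."
}

resource "aws_instance" "app" {
  ami           = var.ami_id
  instance_type = "t3.micro"

  lifecycle {
    # Tags are edited by an external cost-allocation tool, so
    # differences in tags are not reported as changes.
    ignore_changes = [tags]
  }
}
```

Listing only the specific attributes that are expected to change keeps other out-of-band modifications visible.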