How to Implement Microservices Architecture



Modern software development demands systems that can scale, adapt, and evolve without bringing entire platforms to their knees. Traditional monolithic applications, while straightforward to build initially, often become unwieldy beasts that slow innovation and frustrate development teams. When a single code change requires redeploying an entire application, or when one component's failure cascades through the system, organizations find themselves trapped in a cycle of technical debt and compromised agility.

Microservices architecture represents a fundamental shift in how we design and build software systems—breaking down applications into small, independent services that communicate through well-defined interfaces. Each service owns a specific business capability, runs in its own process, and can be developed, deployed, and scaled independently. This approach promises flexibility, resilience, and the ability to use the right tool for each job, but it also introduces complexity in areas like distributed system management, data consistency, and inter-service communication.

This comprehensive guide walks you through the practical realities of implementing microservices architecture, from initial planning and service decomposition to deployment strategies and operational considerations. You'll discover proven patterns for service communication, learn how to handle distributed data management, understand the infrastructure requirements, and gain insights into the organizational changes needed to succeed. Whether you're migrating from a monolith or building greenfield applications, you'll find actionable strategies backed by real-world experience.

Understanding the Foundation of Microservices

Before diving into implementation details, establishing a solid conceptual foundation proves essential for making informed architectural decisions. Microservices architecture isn't simply about creating small services—it's about designing systems that align with business capabilities while maintaining operational independence. Each service should represent a bounded context with clear responsibility boundaries, owning its data and business logic completely.

The philosophy centers on loose coupling and high cohesion, where services interact through stable contracts but remain free to evolve internally. This independence extends beyond code to include deployment pipelines, scaling decisions, and even technology choices. A payment service might use PostgreSQL while an analytics service leverages a time-series database, each optimized for its specific workload without forcing compromise on others.

"The goal isn't to have the smallest possible services, but to have services that can change independently without coordinating with other teams."

Understanding the trade-offs becomes critical before committing to this architectural style. Microservices introduce distributed system complexity—network latency, partial failures, and eventual consistency become daily concerns rather than edge cases. The operational overhead increases significantly, requiring sophisticated monitoring, logging, and deployment automation. Teams must honestly assess whether their organization has the maturity, tooling, and personnel to handle these challenges.

Core Principles Driving Success

Several fundamental principles guide successful microservices implementations. The Single Responsibility Principle, applied at the service level, means each microservice should have one reason to change and should focus on a specific business capability. The Checkout service handles order processing, the Inventory service manages stock levels, and the Notification service sends communications, each with clear, non-overlapping responsibilities.

Decentralized governance empowers teams to make technology decisions appropriate for their service's needs. Rather than mandating a single technology stack across the organization, teams choose languages, frameworks, and databases that best solve their specific problems. This freedom accelerates innovation but requires establishing guidelines around observability, security, and operational standards to prevent chaos.

The principle of design for failure acknowledges that in distributed systems, failures aren't exceptional—they're inevitable. Services must gracefully handle downstream failures, implement timeout and retry logic thoughtfully, and provide fallback mechanisms. Circuit breakers prevent cascading failures, bulkheads isolate resources, and health checks enable automated recovery.
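The retry-and-fallback idea above can be sketched in a few lines. This is a minimal illustration, not a production client: `call_with_retry`, its parameters, and the choice of `ConnectionError`/`TimeoutError` as the retryable errors are assumptions made for the example.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def call_with_retry(
    operation: Callable[[], T],
    fallback: Callable[[], T],
    max_attempts: int = 3,
    base_delay: float = 0.1,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Try `operation` up to `max_attempts` times, then return `fallback()`."""
    for attempt in range(max_attempts):
        try:
            return operation()
        except (ConnectionError, TimeoutError):
            if attempt == max_attempts - 1:
                break  # retries exhausted, fall through to the fallback
            # Exponential backoff with jitter so that many callers do not
            # retry against a struggling service at the same instant.
            sleep(random.uniform(0, base_delay * 2 ** attempt))
    return fallback()
```

Only retry operations that are safe to repeat; a non-idempotent call retried blindly can apply the same change twice.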

| Principle | Description | Implementation Approach | Common Pitfalls |
|---|---|---|---|
| Service Autonomy | Services operate independently with minimal coordination | Separate databases, async communication, independent deployment | Shared databases, tight coupling through synchronous calls |
| Business Capability Alignment | Services map to business functions, not technical layers | Domain-driven design, bounded contexts | Creating services around data models or UI components |
| Decentralized Data Management | Each service owns and manages its data exclusively | Database per service, event sourcing, CQRS | Direct database access between services |
| Infrastructure Automation | Deployment, scaling, and recovery happen automatically | CI/CD pipelines, container orchestration, IaC | Manual deployments, snowflake servers |

Strategic Planning and Service Decomposition

The journey toward microservices begins not with code, but with careful analysis of your domain and business capabilities. Rushing into decomposition without proper planning creates distributed monoliths—systems with all the complexity of microservices but none of the benefits. Start by mapping your business capabilities and identifying natural boundaries where services can operate independently.

Domain-Driven Design (DDD) provides invaluable tools for this decomposition work. Bounded contexts identify areas where specific terms and models have consistent meaning, suggesting natural service boundaries. The concept of "Customer" means something different in Marketing (demographic data, preferences) versus Billing (payment methods, invoices) versus Support (ticket history, contact preferences). These different contexts suggest separate services, each with its own customer model optimized for its needs.

Identifying Service Boundaries

Several techniques help identify appropriate service boundaries. Event storming sessions bring domain experts and technical teams together to map business processes, identifying events, commands, and aggregates. These sessions reveal natural clustering of functionality and highlight areas of high cohesion that belong together.

🎯 Analyzing data access patterns exposes coupling. Services that frequently need data from each other might belong together, while those with minimal data dependencies make good candidates for separation. Look for transactional boundaries—operations that must succeed or fail together often belong in the same service.

Team structure significantly influences service boundaries. Conway's Law observes that systems mirror the communication structures of organizations that build them. Aligning service boundaries with team boundaries reduces coordination overhead and enables teams to work independently. If you have separate teams for user management and order processing, those capabilities likely belong in separate services.

"Start with a monolith and extract services only when you understand the domain well enough to know where the boundaries should be."

The Strangler Fig Pattern for Migration

When migrating from existing monolithic systems, the strangler fig pattern offers a low-risk, incremental approach. Rather than attempting a risky big-bang rewrite, this pattern gradually replaces functionality by routing new features and refactored components to new services while the monolith continues handling everything else.

Implementation begins by placing a facade or proxy in front of the monolith. As you extract services, the proxy routes relevant requests to the new service while passing others to the monolith. Over time, the monolith shrinks as more functionality moves to services, eventually disappearing entirely—like a strangler fig gradually consuming its host tree.
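The routing decision made by the facade can be sketched as a prefix lookup. The hostnames and the `EXTRACTED_ROUTES` table below are hypothetical; a real proxy (or API gateway configuration) would express the same rule in its own format.

```python
# Hypothetical upstream addresses, used only for illustration.
MONOLITH = "http://monolith.internal"

# Path prefixes whose functionality has already been extracted.
EXTRACTED_ROUTES = {
    "/notifications": "http://notification-service.internal",
    "/reports": "http://reporting-service.internal",
}


def resolve_upstream(path: str) -> str:
    """Return the backend that should handle `path`."""
    for prefix, upstream in EXTRACTED_ROUTES.items():
        # Match the prefix itself or anything beneath it, but not
        # unrelated paths that merely share leading characters.
        if path == prefix or path.startswith(prefix + "/"):
            return upstream
    return MONOLITH  # everything not yet extracted stays on the monolith
```

Extracting another capability then amounts to adding one entry to the table, and rolling back amounts to removing it.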

🔄 Start extraction with services at the edges of your system—functionality with minimal dependencies on the core. Authentication, notification services, or reporting features often make good candidates. These extractions provide learning opportunities without risking core business logic, allowing teams to develop microservices expertise before tackling more complex decomposition.

Maintain feature parity during migration. Users shouldn't notice that functionality has moved to a new service. Implement comprehensive testing, including contract tests between services and end-to-end tests covering critical user journeys. Gradual rollout with feature flags enables quick rollback if issues emerge.

Communication Patterns Between Services

How services communicate fundamentally shapes system behavior, performance, and resilience. The choice between synchronous and asynchronous communication, the protocols employed, and the patterns implemented determine whether your microservices architecture thrives or struggles under load. Each approach carries distinct trade-offs that must align with your specific requirements.

Synchronous communication via REST APIs or gRPC provides simplicity and immediate responses. When Service A calls Service B, it waits for a response before continuing. This request-response pattern feels natural and works well for queries and operations requiring immediate feedback. REST's ubiquity and human-readable format make it a popular choice, while gRPC offers superior performance through binary serialization and HTTP/2 multiplexing.

RESTful API Design Considerations

Designing effective REST APIs for microservices requires careful attention to resource modeling, versioning strategies, and error handling. Resources should represent business concepts, not database tables. Use proper HTTP methods (GET for retrieval, POST for creation, PUT for full replacement, PATCH for partial updates, DELETE for removal) and return appropriate status codes that convey meaning.

🌐 API versioning becomes critical as services evolve independently. URL-based versioning (api/v1/orders) provides clarity and explicit version control. Header-based versioning offers cleaner URLs but less visibility. Whatever approach you choose, maintain backward compatibility within major versions and provide clear deprecation timelines when introducing breaking changes.

Implement circuit breakers for synchronous calls to prevent cascading failures. When a downstream service becomes unhealthy, the circuit breaker trips, immediately returning errors rather than waiting for timeouts. This fail-fast approach prevents resource exhaustion and allows the struggling service time to recover. Libraries like Resilience4j (Java) or Polly (.NET) provide battle-tested implementations; Netflix's Hystrix popularized the pattern but is now in maintenance mode, so prefer an actively maintained library for new work.
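To make the trip and recover cycle concrete, here is a minimal sketch of a circuit breaker. It is not how any of the libraries above are implemented; the class name, thresholds, and the injectable `clock` are assumptions chosen to keep the example small and testable.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class CircuitOpenError(Exception):
    """Raised when the breaker rejects a call without attempting it."""


class CircuitBreaker:
    def __init__(self, failure_threshold: int = 3, reset_timeout: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self.clock = clock
        self.failures = 0
        self.opened_at: Optional[float] = None  # None means closed

    def call(self, operation: Callable[[], T]) -> T:
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_timeout:
                raise CircuitOpenError("downstream unhealthy, failing fast")
            # Timeout elapsed: half-open, let this one trial call through.
        try:
            result = operation()
        except Exception:
            self.failures += 1
            if self.opened_at is not None or self.failures >= self.failure_threshold:
                self.opened_at = self.clock()  # open, or re-open after a failed trial
            raise
        self.failures = 0      # success closes the circuit again
        self.opened_at = None
        return result
```

A caller would wrap each outbound request, for example `breaker.call(lambda: fetch_inventory(sku))` with a hypothetical `fetch_inventory`, and treat `CircuitOpenError` as a signal to use a fallback.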

Asynchronous Messaging for Loose Coupling

Asynchronous communication through message queues or event streams enables higher decoupling and better resilience. Services publish events or messages without waiting for responses, allowing the system to continue processing while consumers handle messages at their own pace. This temporal decoupling means services don't need to be available simultaneously.

"Asynchronous messaging transforms tight coupling into loose coupling, but it trades immediate consistency for eventual consistency."

Message brokers like RabbitMQ, Apache Kafka, or AWS SQS facilitate this communication. Event-driven architecture publishes domain events—facts about things that have happened—allowing multiple services to react independently. When an order is placed, the Order service publishes an OrderPlaced event. The Inventory service decrements stock, the Notification service sends confirmation emails, and the Analytics service updates dashboards—all without the Order service knowing or caring about these downstream processes.
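The OrderPlaced flow above can be sketched with an in-memory stand-in for the broker. `EventBus` here is a hypothetical teaching aid that delivers synchronously in one process; a real broker such as those named above delivers asynchronously and durably, but the publisher's ignorance of its subscribers is the same.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Callable, DefaultDict, Dict, List


@dataclass(frozen=True)
class OrderPlaced:
    order_id: str
    sku: str
    quantity: int


class EventBus:
    """In-memory stand-in for a message broker (illustration only)."""

    def __init__(self) -> None:
        self._handlers: DefaultDict[type, List[Callable]] = defaultdict(list)

    def subscribe(self, event_type: type, handler: Callable) -> None:
        self._handlers[event_type].append(handler)

    def publish(self, event: object) -> None:
        # The publisher does not know who is listening, or how many.
        for handler in self._handlers[type(event)]:
            handler(event)


stock: Dict[str, int] = {"sku-1": 10}
sent_confirmations: List[str] = []


def reserve_stock(event: OrderPlaced) -> None:        # Inventory service
    stock[event.sku] -= event.quantity


def send_confirmation(event: OrderPlaced) -> None:    # Notification service
    sent_confirmations.append(event.order_id)


bus = EventBus()
bus.subscribe(OrderPlaced, reserve_stock)
bus.subscribe(OrderPlaced, send_confirmation)
bus.publish(OrderPlaced(order_id="o-1", sku="sku-1", quantity=2))
```

Adding an Analytics subscriber later requires one more `subscribe` call and no change to the code that publishes the event.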

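📬 Consider message delivery guarantees carefully. At-most-once delivery risks message loss but avoids duplicates. At-least-once delivery ensures messages aren't lost but requires idempotent consumers that handle duplicates safely. Exactly-once processing provides the strongest guarantee but comes with performance costs and implementation complexity, and brokers that offer it usually do so only within their own boundaries; in practice many systems combine at-least-once delivery with idempotent consumers to get the same effect.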

Event sourcing takes asynchronous patterns further by storing all state changes as a sequence of events. Rather than persisting current state, you record every event that led to that state. This approach provides complete audit trails, enables temporal queries, and simplifies building new projections of data. However, it introduces complexity in event schema evolution and requires careful consideration of event versioning.
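As a small illustration of deriving state from events, the sketch below replays an event log for a hypothetical account. The event types and the `replay` helper are assumptions for the example, not part of any event-sourcing framework.

```python
from dataclasses import dataclass
from functools import reduce
from typing import Iterable, Union


@dataclass(frozen=True)
class Deposited:
    amount: int


@dataclass(frozen=True)
class Withdrawn:
    amount: int


Event = Union[Deposited, Withdrawn]


def apply_event(balance: int, event: Event) -> int:
    """Pure function: previous state plus one event gives the next state."""
    if isinstance(event, Deposited):
        return balance + event.amount
    if isinstance(event, Withdrawn):
        return balance - event.amount
    raise ValueError(f"unknown event: {event!r}")


def replay(events: Iterable[Event], initial: int = 0) -> int:
    """Rebuild current state by folding over the full event history."""
    return reduce(apply_event, events, initial)


log = [Deposited(100), Withdrawn(30), Deposited(5)]
current_balance = replay(log)          # state now
balance_after_two = replay(log[:2])    # temporal query: state at an earlier point
```

Because the log is the source of truth, a new projection is simply another fold over the same events with a different `apply_event`.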

| Communication Pattern | Best Use Cases | Advantages | Challenges |
|---|---|---|---|
| Synchronous REST | Queries, user-facing operations requiring immediate response | Simple, familiar, immediate feedback | Tight coupling, cascading failures, latency accumulation |
| gRPC | Internal service-to-service calls, performance-critical paths | High performance, strong typing, code generation | Less human-readable, requires tooling |
| Message Queues | Background processing, task distribution, load leveling | Decoupling, buffering, guaranteed delivery | Complexity, eventual consistency, debugging difficulty |
| Event Streaming | Real-time data pipelines, event sourcing, reactive systems | Scalability, replay capability, multiple consumers | Learning curve, operational complexity, ordering guarantees |

Service Mesh for Advanced Communication Management

As microservices proliferate, managing service-to-service communication becomes increasingly complex. Service meshes like Istio, Linkerd, or Consul Connect provide infrastructure layers handling concerns like service discovery, load balancing, encryption, authentication, and observability without requiring application code changes.

Service meshes deploy sidecar proxies alongside each service instance, intercepting all network traffic. These proxies implement sophisticated traffic management—canary deployments, A/B testing, fault injection—while collecting detailed metrics and traces. The control plane configures these proxies declaratively, enabling consistent policies across your entire service landscape.

⚙️ The operational complexity of service meshes shouldn't be underestimated. They introduce additional components, consume resources, and require expertise to configure and troubleshoot. Start with simpler approaches and adopt service meshes when their benefits clearly outweigh their costs—typically when managing dozens of services with complex traffic management requirements.

Data Management in Distributed Systems

Data management represents one of microservices architecture's most challenging aspects. The principle of database per service ensures autonomy but creates complexity around data consistency, querying across services, and managing transactions. Traditional ACID transactions cannot span independently owned databases without a distributed protocol such as two-phase commit, which ties every participant's availability together and is generally avoided in microservices, so new patterns and mental models are required.

Each service maintaining its own database prevents tight coupling through shared data stores. Services expose data through APIs rather than allowing direct database access. This encapsulation enables teams to change database schemas, optimize queries, or even switch database technologies without impacting other services. A service might migrate from PostgreSQL to MongoDB without any other service noticing.

Handling Distributed Transactions

The Saga pattern manages distributed transactions by breaking them into a series of local transactions, each updating a single service. If any step fails, compensating transactions undo previously completed work. Two implementation approaches exist: choreography, where services publish events and react to them, and orchestration, where a coordinator explicitly directs the saga's flow.

Choreography-based sagas keep services decoupled. When creating an order, the Order service publishes OrderCreated. The Payment service processes payment and publishes PaymentCompleted. The Inventory service reserves items and publishes InventoryReserved. If payment fails, the Payment service publishes PaymentFailed, triggering the Order service to cancel the order. Each service knows how to react to events but doesn't coordinate with others directly.

"Embracing eventual consistency isn't giving up on consistency—it's acknowledging the reality of distributed systems and designing accordingly."

Orchestration-based sagas use a coordinator that explicitly calls each service in sequence. The Order Saga Orchestrator calls Payment service, then Inventory service, then Shipping service. If any step fails, the orchestrator executes compensating transactions in reverse order. This approach provides clearer workflow visibility and easier debugging but creates a potential single point of failure and coordination bottleneck.
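The orchestrator's core loop, run each step and undo completed steps in reverse when one fails, can be sketched as follows. `run_saga` and the step tuples are hypothetical; a real orchestrator would also persist its progress so it can resume after a crash.

```python
from typing import Callable, List, Tuple

# Each step is (name, action, compensation).
Step = Tuple[str, Callable[[], None], Callable[[], None]]


def run_saga(steps: List[Step]) -> bool:
    """Run steps in order; on failure, compensate completed steps in reverse."""
    completed: List[Step] = []
    for step in steps:
        _name, action, _compensate = step
        try:
            action()
        except Exception:
            for _done_name, _done_action, undo in reversed(completed):
                undo()
            return False
        completed.append(step)
    return True


# Example run in which the shipping step fails.
audit: List[str] = []


def book_shipping() -> None:
    raise RuntimeError("no carrier available")


saga_succeeded = run_saga([
    ("payment", lambda: audit.append("charge"), lambda: audit.append("refund")),
    ("inventory", lambda: audit.append("reserve"), lambda: audit.append("release")),
    ("shipping", book_shipping, lambda: audit.append("cancel-shipment")),
])
```

After this run, `audit` records the charge and reservation followed by their compensations in reverse order; the failed shipping step itself needs no compensation because it never completed.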

Command Query Responsibility Segregation (CQRS)

CQRS separates read and write operations into different models, optimizing each for its specific use case. The write model enforces business rules and maintains consistency, while read models are optimized for query performance. This separation proves particularly valuable in microservices where different services need different views of data.

🎨 Implementation typically involves services publishing events when data changes. Read model services consume these events, building and maintaining query-optimized projections. An e-commerce system might have separate read models for product catalogs (optimized for search), order history (optimized for customer queries), and analytics (optimized for aggregation and reporting).

The benefits include independent scaling of read and write workloads, query optimization without impacting write performance, and flexibility in choosing different databases for different query patterns. The read model for full-text search might use Elasticsearch, while the transactional write model uses PostgreSQL. However, CQRS introduces eventual consistency between write and read models, requiring careful consideration of user experience during this consistency window.
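A minimal sketch of the split, assuming an order-history read model: the write side validates and records an event, and a separate projection consumes that event to serve queries. The class names are hypothetical, and in a real system the event would travel through a broker rather than a direct method call.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class OrderPlaced:
    order_id: str
    customer_id: str
    total_cents: int


class OrderWriteModel:
    """Command side: enforces business rules and emits events."""

    def __init__(self) -> None:
        self.events: List[OrderPlaced] = []

    def place_order(self, order_id: str, customer_id: str, total_cents: int) -> OrderPlaced:
        if total_cents <= 0:
            raise ValueError("order total must be positive")
        event = OrderPlaced(order_id, customer_id, total_cents)
        self.events.append(event)
        return event


class OrderHistoryReadModel:
    """Query side: a projection shaped for 'orders by customer' lookups."""

    def __init__(self) -> None:
        self._by_customer: Dict[str, List[str]] = {}

    def handle(self, event: OrderPlaced) -> None:
        self._by_customer.setdefault(event.customer_id, []).append(event.order_id)

    def orders_for(self, customer_id: str) -> List[str]:
        return list(self._by_customer.get(customer_id, []))


write_model = OrderWriteModel()
history = OrderHistoryReadModel()
event = write_model.place_order("o-1", "c-7", 2500)
# Until the projection handles the event, the read side is stale:
orders_before = history.orders_for("c-7")
history.handle(event)
orders_after = history.orders_for("c-7")
```

The gap between `orders_before` and `orders_after` is the consistency window the paragraph above refers to.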

Maintaining Data Consistency

Eventual consistency becomes a fact of life in microservices architecture. Rather than fighting it, successful implementations embrace it and design user experiences accordingly. Show users that their action was received and is being processed rather than pretending everything completed instantly. Provide status updates and notifications when long-running processes complete.

💾 Implement idempotency throughout your system. Operations should produce the same result whether executed once or multiple times. Use unique request identifiers, check for duplicate operations before processing, and design APIs that naturally support retries. This idempotency enables safe retry logic and handles duplicate messages from at-least-once delivery systems.
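One common way to get this behavior is to record the identifier of every processed message and skip repeats. The sketch below keeps that record in memory for brevity; `PaymentConsumer` is a hypothetical name, and a real service would store the identifier in the same database transaction as the state change.

```python
from typing import Set


class PaymentConsumer:
    """Applies each payment message at most once, keyed by message ID."""

    def __init__(self) -> None:
        self.processed_ids: Set[str] = set()
        self.balance_cents = 0

    def handle(self, message_id: str, amount_cents: int) -> bool:
        """Return True if the message was applied, False if it was a duplicate."""
        if message_id in self.processed_ids:
            return False  # redelivery: acknowledge without repeating the effect
        self.balance_cents += amount_cents
        self.processed_ids.add(message_id)
        return True


consumer = PaymentConsumer()
first = consumer.handle("msg-1", 500)
redelivered = consumer.handle("msg-1", 500)  # broker delivered the message twice
```

With this guard in place, at-least-once delivery and client retries can no longer apply the same payment twice.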

Data replication strategies help services access data they need without tight coupling. Services can maintain local caches or read replicas of data from other services, updated through event subscriptions. These replicas remain eventually consistent with the source but provide fast local access without synchronous calls to other services.

Infrastructure and Deployment Strategies

Microservices architecture demands robust infrastructure and automation. Managing dozens or hundreds of services manually becomes impossible—successful implementations rely heavily on containerization, orchestration, and comprehensive automation. The infrastructure must support independent deployment, scaling, and monitoring of services while maintaining overall system health.

Containerization with Docker provides consistent packaging for services regardless of their technology stack. Each service runs in a container with its dependencies bundled, eliminating "works on my machine" problems. Containers start quickly, consume fewer resources than virtual machines, and enable efficient resource utilization through density.

Container Orchestration with Kubernetes

Kubernetes has emerged as the de facto standard for container orchestration, managing deployment, scaling, and operations of containerized applications. It handles service discovery, load balancing, rolling updates, and self-healing automatically. Pods represent the smallest deployable units, typically containing one or more containers that share networking and storage.

Services in Kubernetes provide stable networking endpoints for pods, abstracting away individual pod IP addresses that change as pods restart or scale. Deployments manage desired state declaratively—specify that you want three replicas of the Payment service, and Kubernetes ensures three pods are always running, automatically replacing failed instances.

🚀 Kubernetes' learning curve is steep, and its complexity can overwhelm teams new to container orchestration. Managed Kubernetes services like Amazon EKS, Google GKE, or Azure AKS reduce operational burden by handling control plane management, upgrades, and integration with cloud provider services. Start with managed services unless you have specific requirements demanding self-hosted clusters.

Resource management in Kubernetes requires careful attention. Define resource requests (guaranteed resources) and limits (maximum resources) for each container. Requests ensure pods are scheduled on nodes with sufficient capacity, while limits prevent runaway processes from consuming all node resources. Horizontal Pod Autoscaling automatically adjusts replica counts based on CPU, memory, or custom metrics.
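The scaling decision can be illustrated with the formula the Kubernetes documentation gives for the Horizontal Pod Autoscaler: desired replicas = ceil(current replicas × current metric / target metric). The function below is a simplified sketch of that calculation; the parameter names are assumptions, the `tolerance` default mirrors the documented 0.1 default, and details such as stabilization windows and pod readiness are left out.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
    tolerance: float = 0.1,
) -> int:
    """Simplified HPA-style calculation of the next replica count."""
    ratio = current_metric / target_metric
    # Small deviations from the target are ignored to avoid flapping.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))
```

For example, three replicas averaging 90% CPU against a 60% target give a ratio of 1.5, so the function asks for five replicas.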

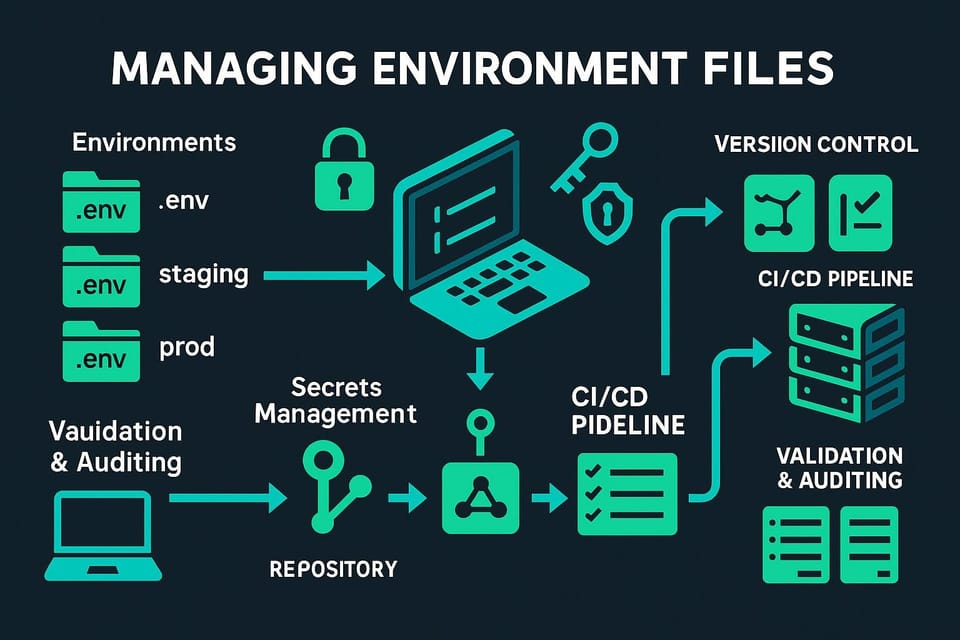

Continuous Integration and Deployment

CI/CD pipelines automate building, testing, and deploying services, enabling the rapid iteration that microservices promise. Each service should have its own pipeline, allowing independent deployment without coordinating with other teams. Pipelines typically include unit tests, integration tests, security scanning, and automated deployment to multiple environments.

"The goal isn't continuous deployment for its own sake—it's reducing the risk and friction of releasing changes so teams can deliver value quickly and safely."

Deployment strategies balance speed with safety. Blue-green deployments maintain two identical production environments, routing traffic to one while deploying to the other. Once validated, traffic switches to the new version. This approach enables instant rollback but doubles infrastructure costs. Canary deployments gradually route increasing percentages of traffic to new versions, monitoring for errors before full rollout. This progressive exposure limits blast radius if issues emerge.

🎯 Feature flags decouple deployment from release, allowing code to be deployed to production but disabled until ready. Teams can deploy incomplete features hidden behind flags, enable them for internal testing, gradually roll out to users, and quickly disable if problems arise. Tools like LaunchDarkly, Unleash, or custom solutions provide sophisticated targeting and rollout capabilities.
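Gradual rollout is often implemented by hashing each user into a stable bucket and comparing it with the rollout percentage. The sketch below shows that idea only; it is not how any of the tools above work internally, and `bucket` and `is_enabled` are hypothetical helpers.

```python
import hashlib


def bucket(flag: str, user_id: str) -> int:
    """Map a (flag, user) pair to a stable bucket in the range 0..99."""
    digest = hashlib.sha256(f"{flag}:{user_id}".encode("utf-8")).digest()
    return int.from_bytes(digest[:4], "big") % 100


def is_enabled(flag: str, user_id: str, rollout_percent: int) -> bool:
    """True when the user's bucket falls inside the rollout percentage."""
    return bucket(flag, user_id) < rollout_percent
```

Because the bucket depends only on the flag name and user ID, a user who sees the feature at 10% keeps seeing it at 50%, and setting the percentage to 0 disables the feature for everyone without a deployment.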

Infrastructure as Code (IaC) treats infrastructure configuration as versioned code, enabling reproducible environments and automated provisioning. Terraform, AWS CloudFormation, or Pulumi define infrastructure declaratively—specify what you want, and the tool creates it. Version control tracks changes, code review catches errors, and automation ensures consistency across environments.

Observability and Monitoring

Understanding system behavior becomes considerably harder in distributed systems. A single user request might touch a dozen services, making it difficult to debug failures or performance issues. Comprehensive observability, which combines metrics, logs, and traces, provides the visibility needed to operate microservices effectively.

Distributed tracing tracks requests as they flow through multiple services, providing end-to-end visibility. Tools like Jaeger, Zipkin, or cloud provider solutions instrument services to propagate trace context. When a request enters the system, it receives a unique trace ID that follows it through all service calls. Traces reveal which services were involved, how long each took, and where failures occurred.
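Context propagation can be sketched using the header layout from the W3C Trace Context specification, where `traceparent` carries `version-traceid-parentid-flags`. The helper functions below are hypothetical and skip the validation a real tracer performs; in practice an instrumentation library handles this for you.

```python
import secrets
from typing import Dict


def new_traceparent() -> str:
    """Start a new trace: 16-byte trace ID, 8-byte span ID, sampled flag set."""
    return f"00-{secrets.token_hex(16)}-{secrets.token_hex(8)}-01"


def child_traceparent(incoming: str) -> str:
    """Keep the caller's trace ID but record a new span ID for this hop."""
    version, trace_id, _parent_span_id, flags = incoming.split("-")
    return f"{version}-{trace_id}-{secrets.token_hex(8)}-{flags}"


def outgoing_headers(incoming_headers: Dict[str, str]) -> Dict[str, str]:
    """Headers to attach to downstream calls made while handling a request."""
    incoming = incoming_headers.get("traceparent")
    value = child_traceparent(incoming) if incoming else new_traceparent()
    return {"traceparent": value}
```

Every service that forwards the header this way contributes spans to the same trace, which is what lets a tracing backend reassemble the full request path.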

The Three Pillars of Observability

Metrics provide quantitative measurements of system behavior—request rates, error rates, latency percentiles, resource utilization. Time-series databases like Prometheus store metrics efficiently, and visualization tools like Grafana create dashboards showing system health at a glance. Define Service Level Indicators (SLIs) measuring user-visible behavior and Service Level Objectives (SLOs) setting targets for these indicators.

📊 Focus on the four golden signals: latency (how long requests take), traffic (how much demand the system is receiving), errors (rate of failed requests), and saturation (how full the service is). These metrics provide a comprehensive view of service health and quickly highlight problems. Alert on SLO violations rather than arbitrary thresholds—notify when user experience degrades, not just when CPU exceeds 80%.
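Alerting on SLOs usually comes down to tracking how much of the error budget remains. The sketch below assumes an availability SLO expressed as a fraction (for example 0.999); the function names and the 25% paging threshold are illustrative choices, not a standard.

```python
def error_budget_remaining(total_requests: int, failed_requests: int, slo_target: float) -> float:
    """Fraction of the error budget still unspent; negative once the SLO is breached."""
    if total_requests <= 0 or not 0.0 < slo_target < 1.0:
        raise ValueError("need positive traffic and an SLO target between 0 and 1")
    allowed_failures = total_requests * (1.0 - slo_target)
    return 1.0 - failed_requests / allowed_failures


def should_page(remaining: float, threshold: float = 0.25) -> bool:
    """Page on-call when less than `threshold` of the budget is left."""
    return remaining < threshold
```

With one million requests and a 99.9% target, roughly 1,000 failures are allowed; 250 failures leave about three quarters of the budget, while 900 leave about a tenth and would trigger a page under this threshold.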

Logs capture detailed event information, essential for debugging specific issues. Structured logging in JSON format enables powerful querying and aggregation. Include correlation IDs in logs to connect related events across services. Centralized logging systems like ELK Stack (Elasticsearch, Logstash, Kibana), Splunk, or cloud provider solutions aggregate logs from all services, providing unified search and analysis.
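Structured logging with a correlation ID can be sketched with Python's standard `logging` module. The field names and the `payment-service` logger are assumptions for the example, and the log is written to an in-memory buffer here only so the output is easy to inspect; a service would normally write to stdout for the log shipper to collect.

```python
import io
import json
import logging


class JsonFormatter(logging.Formatter):
    """Render each log record as a single JSON object."""

    def format(self, record: logging.LogRecord) -> str:
        return json.dumps({
            "level": record.levelname,
            "service": record.name,
            "message": record.getMessage(),
            # Set via `extra=` at the call site; None when absent.
            "correlation_id": getattr(record, "correlation_id", None),
        })


stream = io.StringIO()
handler = logging.StreamHandler(stream)
handler.setFormatter(JsonFormatter())

logger = logging.getLogger("payment-service")
logger.setLevel(logging.INFO)
logger.addHandler(handler)
logger.propagate = False  # keep the example's output out of the root logger

logger.info("payment authorized", extra={"correlation_id": "req-123"})
```

Searching the centralized log store for `correlation_id: req-123` then returns the matching entries from every service that handled the request.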

Distributed tracing connects metrics and logs, showing exactly what happened during a specific request. When users report slow checkout, traces reveal whether the delay occurred in the Payment service, Inventory service, or network communication. Traces expose unexpected service dependencies and help optimize critical paths.

Implementing Effective Alerting

Alert fatigue undermines on-call effectiveness. Too many alerts train teams to ignore them, while too few leave problems undetected. Design alerts around user impact—alert when customers are affected, not just when internal metrics cross thresholds. Use alert severity levels appropriately: critical alerts require immediate action, warnings indicate degradation worth investigating, and informational alerts provide context.

🔔 Implement runbooks for common alerts, documenting investigation steps and remediation procedures. When the Payment Service High Error Rate alert fires, the runbook should guide responders through checking recent deployments, examining error logs, verifying downstream dependencies, and escalation procedures. Runbooks reduce mean time to recovery and help less experienced team members handle incidents effectively.

Synthetic monitoring proactively tests system functionality from the user's perspective. Automated scripts simulate user journeys—browsing products, adding to cart, checking out—running continuously from multiple locations. Synthetic tests detect issues before users report them and validate that critical paths work across the entire system.

Security Considerations

Microservices architecture expands the attack surface significantly. Each service represents a potential entry point, and service-to-service communication creates numerous internal pathways requiring protection. Security can't be an afterthought—it must be designed into the architecture from the beginning and maintained vigilantly throughout the system's lifecycle.

Authentication and authorization become more complex in distributed systems. Rather than authenticating once at a perimeter, requests flow through multiple services, each needing to verify caller identity and permissions. Token-based authentication, typically OAuth 2.0 flows that issue signed JSON Web Tokens (JWTs), enables stateless verification: a service checks the token's signature and claims locally, without calling back to a central authentication service on every request.
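To show what local validation involves, here is a sketch that signs and verifies an HS256 token with only the standard library. It is for illustration: `sign` and `verify` are hypothetical helpers, the shared secret is an assumption (asymmetric algorithms let services hold only a public key), and production code should rely on a maintained JWT library.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Optional


def _b64url(data: bytes) -> str:
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def _b64url_decode(text: str) -> bytes:
    return base64.urlsafe_b64decode(text + "=" * (-len(text) % 4))


def sign(claims: dict, key: bytes) -> str:
    """Build a compact header.payload.signature token using HMAC-SHA256."""
    header = _b64url(json.dumps({"alg": "HS256", "typ": "JWT"}).encode())
    body = _b64url(json.dumps(claims).encode())
    signature = hmac.new(key, f"{header}.{body}".encode(), hashlib.sha256).digest()
    return f"{header}.{body}.{_b64url(signature)}"


def verify(token: str, key: bytes, now: Optional[float] = None) -> dict:
    """Check signature and expiry locally; raise ValueError if either fails."""
    header, body, signature = token.split(".")
    if json.loads(_b64url_decode(header)).get("alg") != "HS256":
        raise ValueError("unexpected algorithm")
    expected = hmac.new(key, f"{header}.{body}".encode(), hashlib.sha256).digest()
    # Constant-time comparison avoids leaking how many bytes matched.
    if not hmac.compare_digest(expected, _b64url_decode(signature)):
        raise ValueError("bad signature")
    claims = json.loads(_b64url_decode(body))
    if claims["exp"] <= (time.time() if now is None else now):
        raise ValueError("token expired")
    return claims
```

No network call is needed at verification time, which is what makes this approach stateless; the trade-off is that a token stays valid until it expires, so lifetimes should be short.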

API Gateway as Security Perimeter

API gateways provide a single entry point for external clients, consolidating security enforcement. The gateway handles authentication, rate limiting, request validation, and threat detection before requests reach internal services. This centralization simplifies security implementation and provides consistent protection across all services.
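Rate limiting at the gateway is often built on a token bucket, sketched below. Gateways ship their own implementations; this class, its parameters, and the injectable `clock` are assumptions meant only to show the mechanism.

```python
import time
from typing import Callable


class TokenBucket:
    """Allow bursts up to `capacity`, refilled at `rate` tokens per second."""

    def __init__(self, rate: float, capacity: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.rate = rate
        self.capacity = capacity
        self.clock = clock
        self.tokens = capacity
        self.updated_at = clock()

    def allow(self) -> bool:
        """Spend one token if available; otherwise reject the request."""
        now = self.clock()
        elapsed = now - self.updated_at
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.updated_at = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False
```

A gateway would typically keep one bucket per client or API key and answer rejected requests with HTTP 429 (Too Many Requests).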

🛡️ Implement defense in depth—don't rely solely on perimeter security. Services should validate inputs, enforce authorization, and protect against common vulnerabilities even for internal requests. Assume breaches will occur and limit blast radius through network segmentation, least privilege access, and comprehensive logging of security-relevant events.

Service-to-service authentication prevents unauthorized internal access. Mutual TLS (mTLS) provides strong authentication where both client and server verify each other's identity using certificates. Service meshes typically handle mTLS automatically, encrypting all service-to-service communication and verifying service identity without application code changes.

"Security in microservices isn't about building higher walls—it's about assuming breach and limiting what attackers can do once inside."

Secrets Management

Managing secrets—database passwords, API keys, encryption keys—securely across many services requires dedicated solutions. Never hardcode secrets or commit them to version control. Use secrets management services like HashiCorp Vault, AWS Secrets Manager, or Azure Key Vault to store, rotate, and audit secret access.

🔐 Implement secret rotation regularly and automatically. Services should fetch secrets at startup and handle rotation gracefully without downtime. Use short-lived credentials when possible, regenerating them frequently to limit exposure if compromised. Audit all secret access, alerting on unusual patterns that might indicate compromise.

Container security deserves particular attention. Scan container images for vulnerabilities before deployment, use minimal base images to reduce attack surface, and run containers with least privilege, for example as a non-root user. On Kubernetes, enforce these standards cluster-wide with Pod Security Admission, the built-in successor to the PodSecurityPolicy API that was removed in Kubernetes 1.25, or with a policy engine.

Organizational and Cultural Considerations

Technical architecture alone doesn't determine success—organizational structure and culture play equally critical roles. Microservices architecture works best when teams are organized around services, empowered to make decisions, and equipped with the skills and tools to operate independently. The transition requires changes in team structure, processes, and mindset that many organizations underestimate.

Team topology significantly impacts microservices success. Cross-functional teams owning services end-to-end—from development through deployment to operations—move faster than teams split by function. A team owning the Payment service includes developers, testers, and operations expertise, enabling them to deliver features without dependencies on other teams.

DevOps Culture and Practices

Microservices and DevOps complement each other naturally. DevOps emphasizes automation, collaboration, and shared responsibility for system reliability—principles essential for operating many independent services. Teams that build services should run them, creating tight feedback loops and incentivizing operational excellence.

🤝 Breaking down silos between development and operations enables the rapid iteration microservices promise. Developers gain operational awareness, understanding how their code behaves in production and taking responsibility for its reliability. Operations teams shift from manual gatekeeping to building platforms and tools that enable developer self-service.

Blameless postmortems foster learning from failures rather than punishment. When incidents occur—and they will—focus on understanding systemic factors that contributed and implementing improvements to prevent recurrence. Psychological safety enables teams to acknowledge mistakes, share knowledge, and continuously improve.

Skills and Training Requirements

Microservices demand broader skill sets from team members. Developers need understanding of distributed systems, networking, containerization, and operations. Operations engineers benefit from programming skills for automation and infrastructure as code. Invest in training and create communities of practice where teams share knowledge and experiences.

📚 Start small and build expertise gradually. Don't attempt to migrate your entire system to microservices immediately. Extract one or two services, learn from the experience, develop patterns and practices, and expand as organizational capability grows. Early services provide learning opportunities and help identify necessary tooling and process changes.

Documentation becomes more critical as system complexity increases. Maintain up-to-date architecture diagrams, API documentation, runbooks, and decision records. Tools like Swagger/OpenAPI for API documentation, architecture decision records (ADRs) for capturing important decisions, and regularly updated system diagrams help new team members understand the system and prevent knowledge silos.

Common Pitfalls and How to Avoid Them

Many microservices implementations stumble over predictable obstacles. Learning from common mistakes helps avoid painful experiences and accelerates success. Understanding these pitfalls and their solutions enables more informed decision-making throughout your microservices journey.

Creating a distributed monolith represents perhaps the most common failure mode. This occurs when services are technically separate but tightly coupled through synchronous communication, shared databases, or coordinated deployments. You get all the complexity of microservices with none of the benefits—services can't be deployed independently, failures cascade, and teams remain blocked by dependencies.

Avoiding Premature Decomposition

Starting with microservices for greenfield projects often backfires. Without understanding domain boundaries, teams create services that don't align with actual business capabilities. As understanding evolves, these boundaries prove wrong, requiring expensive refactoring across service boundaries.

⚠️ Begin with a modular monolith—a well-structured monolithic application with clear internal boundaries. This approach provides many benefits of good architecture while avoiding distributed system complexity. As domain understanding deepens and clear boundaries emerge, extract services strategically. The monolith-first approach reduces risk and builds expertise before tackling distributed systems.

Insufficient automation leads to operational nightmares. Managing dozens of services manually proves impossible—deployments take forever, environments drift, and incidents become lengthy firefighting sessions. Invest in automation early: CI/CD pipelines, infrastructure as code, automated testing, and monitoring. The automation investment pays dividends as service count grows.

Data Consistency Challenges

Underestimating eventual consistency challenges creates poor user experiences and data integrity issues. Teams accustomed to immediate consistency struggle to design systems where data updates take time to propagate. Users see stale data, operations fail due to race conditions, and business logic becomes complex trying to handle partial states.

"Most microservices failures aren't technical—they're organizational, stemming from misaligned incentives, insufficient skills, or poor communication."

Design for eventual consistency from the start. Use patterns like sagas for distributed transactions, CQRS for separating reads and writes, and event sourcing for maintaining consistency through event streams. Communicate clearly to users when operations are processing asynchronously rather than pretending they complete instantly.
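
As a rough illustration of the saga idea, the sketch below runs a list of steps in order and, if one fails, runs the compensating actions of the steps that already succeeded in reverse. It is an in-process simplification under stated assumptions: `run_saga` and the step names are hypothetical, and a real saga would persist its progress and drive each step through messages or service calls.

```python
from typing import Callable, List, Tuple

# Each step pairs an action with the compensation that undoes it.
Step = Tuple[Callable[[], None], Callable[[], None]]


def run_saga(steps: List[Step]) -> bool:
    """Run each action in order; on failure, undo completed steps in reverse."""
    completed: List[Callable[[], None]] = []
    for action, compensate in steps:
        try:
            action()
            completed.append(compensate)
        except Exception:
            for undo in reversed(completed):
                undo()
            return False
    return True


if __name__ == "__main__":
    log: List[str] = []

    def charge_card() -> None:
        raise RuntimeError("payment declined")  # simulated failure

    ok = run_saga([
        (lambda: log.append("reserve stock"), lambda: log.append("release stock")),
        (charge_card, lambda: log.append("refund card")),
    ])
    print(ok, log)
```

Note that the failed step's own compensation is never run, because its action did not complete; only earlier, successful steps are undone.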

Monitoring and Debugging Difficulties

Inadequate observability makes debugging impossible. When requests span multiple services, finding the source of errors or performance issues without distributed tracing becomes guesswork. Logs scattered across services, inconsistent correlation IDs, and missing metrics leave teams blind.

🔍 Implement comprehensive observability before problems arise. Instrument services for metrics, logging, and tracing from day one. Establish correlation ID standards that propagate through all service calls. Build dashboards showing system health and create runbooks for common issues. The investment in observability enables rapid problem resolution and prevents lengthy outages.
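
A correlation ID standard can be as simple as "reuse the caller's ID if one arrived, otherwise mint one, and attach it to every log line and outgoing call." The sketch below shows that idea with the standard library only. The header name `X-Correlation-ID`, the JSON field names, and the `start_request` helper are assumptions for illustration, not an established standard.

```python
import contextvars
import json
import logging
import uuid

# Holds the ID for the request currently being handled.
correlation_id = contextvars.ContextVar("correlation_id", default="-")


class JsonFormatter(logging.Formatter):
    """Emits each log record as one JSON object including the correlation ID."""

    def format(self, record: logging.LogRecord) -> str:
        return json.dumps({
            "time": self.formatTime(record),
            "level": record.levelname,
            "service": record.name,
            "correlation_id": correlation_id.get(),
            "message": record.getMessage(),
        })


def start_request(incoming_headers: dict) -> dict:
    """Reuse the caller's ID if present, else mint one.

    Returns the headers to attach to any outgoing service calls.
    """
    cid = incoming_headers.get("X-Correlation-ID") or str(uuid.uuid4())
    correlation_id.set(cid)
    return {"X-Correlation-ID": cid}
```

Attaching `JsonFormatter` to a handler once at startup means individual log statements do not need to remember to include the ID.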

Ignoring organizational readiness dooms technical implementations. If teams lack skills, processes remain waterfall-style, or culture punishes failure, microservices architecture will struggle regardless of technical excellence. Assess organizational readiness honestly and invest in cultural transformation alongside technical changes.

Testing Strategies for Microservices

Testing distributed systems requires different approaches than monolithic applications. The testing pyramid shifts, with greater emphasis on integration and contract testing to ensure services work together correctly. Comprehensive testing builds confidence in independent deployments while catching issues before production.

Unit tests remain important for testing individual components in isolation. Each service should have thorough unit test coverage of its business logic, ensuring correct behavior under various conditions. Unit tests run quickly, provide fast feedback, and help maintain code quality. However, they can't verify that services integrate correctly.

Contract Testing Between Services

Contract tests verify that services honor their agreements without requiring all services to run simultaneously. Consumer-driven contract testing has consumers define expectations for provider behavior, and providers verify they meet these expectations. Tools like Pact enable this testing approach, catching breaking changes before deployment.

🧪 The consumer service defines contracts specifying requests it will make and responses it expects. These contracts are verified against the provider service, ensuring it satisfies consumer expectations. When the provider changes, contract tests immediately reveal whether changes break existing consumers, preventing runtime failures.
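
The flow described above can be sketched without any particular tool. The example below is not the Pact API; it is a hand-rolled illustration in which `CONTRACT`, `verify_contract`, and the `/payments/42` endpoint are all hypothetical. The consumer records the request it sends and the response fields it relies on, and the provider's test suite replays that request against its own handler.

```python
from typing import Any, Callable, Dict, List, Tuple

# A contract as the consumer might record it: the request it sends and the
# response fields (with types) it depends on.
CONTRACT: Dict[str, Any] = {
    "request": {"method": "GET", "path": "/payments/42"},
    "response": {"status": 200, "body": {"id": int, "status": str}},
}

Provider = Callable[[str, str], Tuple[int, Dict[str, Any]]]


def verify_contract(contract: Dict[str, Any], provider: Provider) -> List[str]:
    """Replay the contract's request against the provider; return any violations."""
    req, expected = contract["request"], contract["response"]
    status, body = provider(req["method"], req["path"])
    problems: List[str] = []
    if status != expected["status"]:
        problems.append(f"status {status} != {expected['status']}")
    for field, field_type in expected["body"].items():
        if field not in body:
            problems.append(f"missing field '{field}'")
        elif not isinstance(body[field], field_type):
            problems.append(f"field '{field}' is not {field_type.__name__}")
    return problems
```

Extra fields in the provider's response are deliberately ignored: the contract covers only what the consumer actually uses, which leaves the provider free to add fields without breaking anyone.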

Integration tests verify that services work together correctly, testing actual service-to-service communication. These tests are more expensive than unit tests but provide confidence that services integrate properly. Use disposable containers, for example through the Testcontainers libraries, to run dependencies like databases or message brokers so that tests run in environments closely resembling production.

End-to-End Testing Considerations

End-to-end tests validate complete user journeys through the entire system. While valuable, they're expensive to maintain and slow to execute. Focus end-to-end tests on critical business flows—checkout, payment processing, account creation—rather than attempting comprehensive coverage.

⚡ Minimize end-to-end test count and run them less frequently than unit and integration tests. Consider running them nightly or before releases rather than on every commit. Use production-like environments for end-to-end tests, but accept that perfect production fidelity is impossible and expensive.

Chaos engineering proactively tests system resilience by injecting failures. Tools like Chaos Monkey randomly terminate instances, and broader chaos tooling can also simulate network partitions or resource exhaustion. These experiments verify that resilience mechanisms like circuit breakers, retries, and fallbacks work correctly under failure conditions.
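
To make "verify that a circuit breaker works under failure" concrete, here is a minimal breaker that a test can exercise by injecting a failing dependency. It is a sketch under assumptions: the `CircuitBreaker` class, its thresholds, and the fallback behavior are illustrative choices, not the behavior of any specific resilience library.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class CircuitBreaker:
    """Stops calling a failing dependency for a cool-down period."""

    def __init__(self, max_failures: int, reset_after: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.max_failures = max_failures
        self.reset_after = reset_after
        self.clock = clock
        self.failures = 0
        self.opened_at: Optional[float] = None

    def call(self, func: Callable[[], T], fallback: Callable[[], T]) -> T:
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_after:
                return fallback()       # open: fail fast, skip the dependency
            self.opened_at = None       # half-open: allow one trial call
        try:
            result = func()
        except Exception:
            self.failures += 1
            if self.failures >= self.max_failures:
                self.opened_at = self.clock()
            return fallback()
        self.failures = 0               # success closes the circuit
        return result
```

A small-scale experiment then replaces the real dependency with one that always raises and asserts that, after `max_failures` attempts, the dependency is no longer called until the cool-down has passed.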

Performance Optimization

Microservices architecture introduces performance challenges—network latency accumulates across service calls, serialization overhead increases, and distributed transactions take longer than local ones. Understanding these challenges and applying appropriate optimization techniques ensures acceptable performance while maintaining architectural benefits.

Network latency becomes a primary concern when requests traverse multiple services. Each service call adds latency, and the cost compounds: ten sequential calls of 10 ms each add at least 100 ms to a request before any processing time is counted. Minimize synchronous service-to-service calls, especially in critical paths. Consider whether data can be cached locally, whether operations can happen asynchronously, or whether service boundaries should be adjusted.
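
When downstream calls do not depend on each other, issuing them concurrently keeps total latency close to the slowest single call instead of the sum of all calls. The sketch below simulates this with `asyncio`; `call_service` is a hypothetical stand-in that sleeps for 10 ms in place of a real network round trip.

```python
import asyncio
import time
from typing import Callable, List, Tuple


async def call_service(name: str, latency_s: float = 0.01) -> str:
    """Stand-in for a network call; sleeps to simulate a 10 ms round trip."""
    await asyncio.sleep(latency_s)
    return name


async def sequential(names: List[str]) -> List[str]:
    # Each call waits for the previous one: total is roughly the sum.
    return [await call_service(n) for n in names]


async def concurrent(names: List[str]) -> List[str]:
    # All calls are in flight together: total is roughly the slowest call.
    return list(await asyncio.gather(*(call_service(n) for n in names)))


def timed(coro_fn: Callable, names: List[str]) -> Tuple[List[str], float]:
    start = time.perf_counter()
    result = asyncio.run(coro_fn(names))
    return result, time.perf_counter() - start


if __name__ == "__main__":
    services = [f"svc-{i}" for i in range(10)]
    print("sequential:", round(timed(sequential, services)[1], 3), "s")
    print("concurrent:", round(timed(concurrent, services)[1], 3), "s")
```

This only helps for independent calls. A chain where each call needs the previous result stays sequential, which is a signal to revisit caching or service boundaries instead.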

Caching Strategies

Caching reduces load on services and improves response times by storing frequently accessed data closer to consumers. Multiple caching layers provide different benefits: in-memory caches within services for ultra-fast access, distributed caches like Redis for sharing data across service instances, and CDNs for static content delivery.

💨 Cache invalidation remains challenging in distributed systems. When data changes, all caches holding that data must be invalidated or updated. Time-based expiration provides simplicity but risks serving stale data. Event-based invalidation offers fresher data but adds complexity. Choose strategies appropriate for each data type's consistency requirements.

Consider cache-aside, read-through, and write-through patterns. Cache-aside has applications check cache first, fetching from the database on miss and populating the cache. Read-through caches automatically fetch data on miss. Write-through caches update both cache and database synchronously, ensuring consistency but adding latency to writes.
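
The cache-aside pattern, combined with time-based expiration and explicit invalidation, can be sketched in a few lines. This is an in-memory illustration only: `CacheAside` and the `load` callable (standing in for a database query) are hypothetical names, and a shared cache such as Redis would replace the dictionary in a multi-instance service.

```python
import time
from typing import Callable, Dict, Tuple


class CacheAside:
    """Check the cache first; on a miss, load from the source and populate."""

    def __init__(self, load: Callable[[str], str], ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._load = load          # hypothetical database lookup
        self._ttl = ttl_seconds
        self._clock = clock
        self._entries: Dict[str, Tuple[float, str]] = {}  # key -> (expires_at, value)

    def get(self, key: str) -> str:
        entry = self._entries.get(key)
        if entry is not None and self._clock() < entry[0]:
            return entry[1]                          # hit: still fresh
        value = self._load(key)                      # miss or expired
        self._entries[key] = (self._clock() + self._ttl, value)
        return value

    def invalidate(self, key: str) -> None:
        """Event-based invalidation: call this when the underlying data changes."""
        self._entries.pop(key, None)
```

The TTL bounds how stale a value can get when no invalidation event arrives, while `invalidate` gives fresher data for keys whose changes you can observe.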

Database Optimization

Each service owning its database enables optimization for specific workloads. Use read replicas to scale read-heavy services, partition large tables for better performance, and choose database technologies suited to access patterns. A service performing complex queries benefits from a relational database, while a service storing user sessions might prefer Redis.

🗄️ Implement database connection pooling to reduce connection overhead. Creating database connections is expensive—connection pools maintain a set of ready connections, dramatically improving performance under load. Configure pool sizes based on service needs and database capacity, monitoring for connection exhaustion or idle connections.
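
The mechanics of a pool are simple enough to sketch: open a fixed number of connections up front, hand them out on request, and return them when the caller is done. The example below uses SQLite purely so it runs anywhere; `ConnectionPool` is a hypothetical class for illustration, and in practice you would normally rely on the pooling built into your database driver or framework.

```python
import queue
import sqlite3
from contextlib import contextmanager
from typing import Iterator


class ConnectionPool:
    """Keeps a fixed set of open connections and lends them out one at a time."""

    def __init__(self, size: int, database: str = ":memory:") -> None:
        self._idle: "queue.Queue[sqlite3.Connection]" = queue.Queue(maxsize=size)
        for _ in range(size):
            # check_same_thread=False lets a pooled connection be used by
            # whichever worker thread borrows it.
            self._idle.put(sqlite3.connect(database, check_same_thread=False))

    @contextmanager
    def connection(self, timeout: float = 1.0) -> Iterator[sqlite3.Connection]:
        # Raises queue.Empty if every connection is busy for `timeout` seconds,
        # which is the "connection exhaustion" condition worth monitoring.
        conn = self._idle.get(timeout=timeout)
        try:
            yield conn
        finally:
            self._idle.put(conn)    # always return the connection to the pool
```

A caller writes `with pool.connection() as conn:` and runs its queries inside the block; the connection goes back to the pool even if the query raises.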

Query optimization becomes critical as services scale. Use database query analyzers to identify slow queries, add appropriate indexes, and consider denormalization for read-heavy workloads. CQRS enables aggressive optimization of read models without compromising write model integrity.

Asynchronous Processing

Moving non-critical operations to asynchronous processing improves perceived performance. Users don't need to wait for email sending, analytics updates, or report generation. Return success immediately and process these operations in the background, notifying users when complete if necessary.

Message queues enable this asynchronous processing, buffering work and allowing services to process at their own pace. This approach also provides natural load leveling—during traffic spikes, queues grow but services process steadily rather than becoming overwhelmed. Consumers can scale independently based on queue depth.
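
The request path and the background work can be separated as in the sketch below, which uses an in-process `queue.Queue` and a worker thread as a stand-in for a real message broker. The function names and the job payload are hypothetical; with a broker, the worker would run in a separate consumer service.

```python
import queue
import threading
from typing import Callable


def start_worker(jobs: queue.Queue, handle: Callable[[dict], None]) -> threading.Thread:
    """Process jobs at the worker's own pace until a None sentinel arrives."""
    def run() -> None:
        while True:
            job = jobs.get()
            if job is None:             # sentinel: shut down
                jobs.task_done()
                break
            try:
                handle(job)
            finally:
                jobs.task_done()

    worker = threading.Thread(target=run, daemon=True)
    worker.start()
    return worker


def place_order(order_id: str, jobs: queue.Queue) -> dict:
    """Request path: enqueue the slow work and respond immediately."""
    jobs.put({"type": "send_confirmation_email", "order_id": order_id})
    return {"order_id": order_id, "status": "accepted"}
```

The response says "accepted" and not "completed", which matches the advice to tell users honestly when work is still being processed. Queue depth (`jobs.qsize()` here) is the natural signal for scaling consumers.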

Cost Considerations and Resource Management

Microservices architecture often increases infrastructure costs compared to monolithic applications. Each service requires compute resources, databases, networking, and monitoring. Understanding these costs and implementing effective resource management prevents budget overruns while maintaining system performance and reliability.

Right-sizing resources balances cost and performance. Over-provisioning wastes money on unused capacity, while under-provisioning causes performance issues and outages. Monitor actual resource utilization and adjust allocations accordingly. Kubernetes resource requests and limits help ensure efficient resource use across services.

Scaling Strategies

Horizontal scaling adds more instances of a service to handle increased load. This approach works well for stateless services and enables fine-grained scaling—scale only the services experiencing load rather than the entire application. Kubernetes Horizontal Pod Autoscaler automatically adjusts replica counts based on metrics.

📈 Vertical scaling increases resources for existing instances: more CPU, memory, or disk. This approach works for stateful services or when horizontal scaling isn't feasible. Cloud providers make resizing straightforward, though it usually involves restarting the instance, and there are practical limits to how large a single instance can grow.

Implement auto-scaling to match capacity with demand automatically. Define scaling policies based on CPU utilization, memory usage, request rate, or custom metrics. Auto-scaling reduces costs during low-traffic periods while ensuring capacity during peaks. Set appropriate minimum and maximum instance counts to balance cost and availability.
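
The core calculation behind metric-based auto-scaling is a ratio. The Kubernetes Horizontal Pod Autoscaler documentation describes it as desired replicas = ceil(current replicas × current metric ÷ target metric), skipped when the ratio is within a small tolerance of 1. The function below sketches that calculation with minimum and maximum bounds added; the function name, parameter names, and the 0.1 tolerance default are choices made for this example.

```python
import math


def desired_replicas(current: int, current_metric: float, target_metric: float,
                     min_replicas: int, max_replicas: int,
                     tolerance: float = 0.1) -> int:
    """Scale the replica count in proportion to how far the metric is from target."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        wanted = current                    # close enough: avoid flapping
    else:
        wanted = math.ceil(current * ratio)
    # Clamp to the configured bounds to balance cost and availability.
    return max(min_replicas, min(max_replicas, wanted))
```

For example, four replicas averaging 90% CPU against a 60% target give a ratio of 1.5 and a desired count of six, while the same four replicas at 63% stay at four because the ratio falls inside the tolerance band.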

Cost Optimization Techniques

Use spot instances or preemptible VMs for non-critical workloads, achieving significant cost savings. These instances can be terminated with short notice but cost a fraction of regular instances. Batch processing, development environments, and stateless services with multiple replicas make good candidates for spot instances.

💰 Implement resource cleanup policies to remove unused resources. Delete old container images, remove terminated instances, and clean up test environments. These "zombie" resources accumulate over time, consuming storage and sometimes compute resources without providing value.

Consider multi-tenancy for services with low resource requirements. Rather than dedicating infrastructure to each small service, run multiple services on shared infrastructure. Kubernetes namespaces provide isolation while sharing underlying nodes, reducing infrastructure costs for services that don't require dedicated resources.

Frequently Asked Questions

When should I consider moving to microservices architecture?

Consider microservices when your team has grown beyond 10-15 developers working on a single codebase, when different parts of your application have vastly different scaling requirements, or when deployment coordination is slowing releases significantly. Don't adopt microservices simply because they're popular—ensure you have the organizational maturity, operational expertise, and genuine need for independent service deployment before making the transition.

How small should a microservice be?

Size matters less than responsibility boundaries. A microservice should be small enough that a team can understand and maintain it completely, but large enough to provide meaningful business value independently. Focus on single responsibility and bounded contexts rather than arbitrary size metrics. Some services might be a few hundred lines of code, others several thousand—what matters is cohesion and loose coupling.

What's the difference between microservices and service-oriented architecture (SOA)?

While both involve breaking applications into services, microservices emphasize smaller, more focused services with independent deployment and decentralized data management. SOA typically involves larger services, shared databases, and enterprise service buses for communication. Microservices favor lightweight protocols like REST or messaging, while SOA often uses heavier protocols like SOAP. Think of microservices as an evolution of SOA principles with stronger emphasis on autonomy and organizational alignment.

How do I handle authentication across multiple microservices?

Implement token-based authentication, typically with OAuth 2.0 or OpenID Connect flows that issue JWT access tokens. An API gateway or authentication service validates user credentials and issues signed tokens containing user identity and permissions. Services validate the token's signature and expiry locally without calling back to the authentication service, enabling stateless authentication. Include necessary claims in tokens to minimize service-to-service calls for authorization decisions, but avoid storing sensitive information in tokens: JWT payloads are usually signed but not encrypted, so anyone holding a token can read its contents.

What's the best way to test microservices?

Employ a balanced testing strategy: comprehensive unit tests for business logic, contract tests to verify service agreements, integration tests for service interactions, and selective end-to-end tests for critical user journeys. Use consumer-driven contract testing to catch breaking changes early. Invest in test automation and continuous integration to maintain confidence as services evolve independently. Remember that testing in production through canary deployments and feature flags provides additional safety.

How do I manage database migrations across microservices?

Each service manages its own database migrations independently, typically using tools like Flyway or Liquibase. Maintain backward compatibility during migrations: add new columns before removing old ones, support both old and new formats during transition periods, and coordinate breaking changes carefully. For changes affecting multiple services, use the expand-contract pattern: expand schemas to support both old and new versions, migrate services gradually, then contract by removing old versions once all services are updated.

Should every service have its own database?

Ideally yes, to maintain service autonomy and enable independent scaling and technology choices. However, pragmatism matters—sharing a database server while maintaining separate schemas or databases per service provides many benefits with less operational complexity. Avoid sharing tables between services, as this creates tight coupling. Start with logical separation and move to physical separation as needs dictate.

How do I handle distributed logging and debugging?

Implement correlation IDs that propagate through all service calls, allowing you to trace requests across service boundaries. Use structured logging in JSON format for easier parsing and querying. Centralize logs using tools like ELK Stack, Splunk, or cloud provider logging services. Implement distributed tracing with tools like Jaeger or Zipkin to visualize request flows. Ensure all services log consistently, including correlation IDs, timestamps, and relevant context.