How to Implement Reinforcement Learning Algorithms

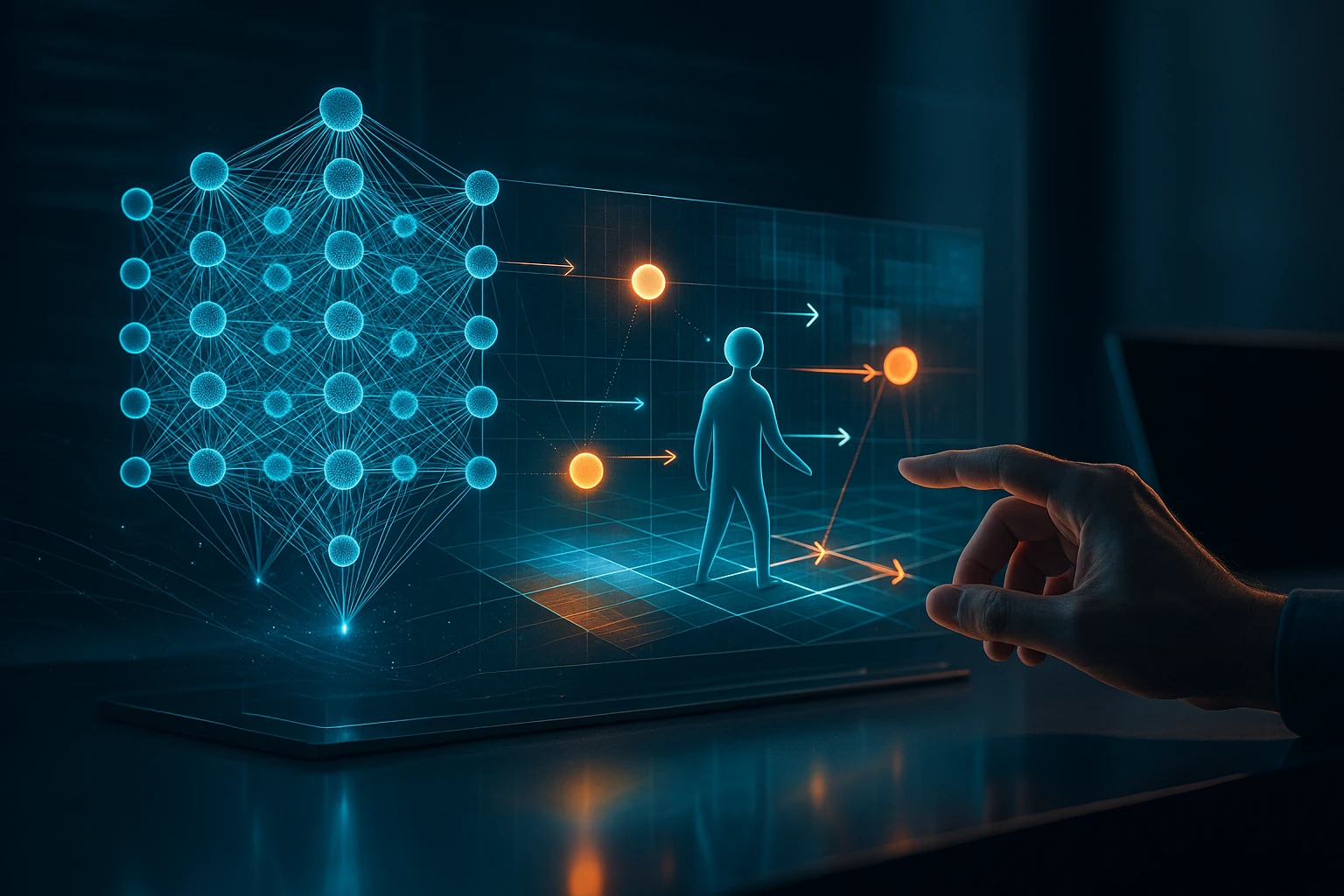

Reinforcement learning diagram: agent interacts with environment, receives states and rewards, updates policy in training loop, balances exploration-exploitation and tracks rewards.


The landscape of artificial intelligence has been fundamentally transformed by reinforcement learning, a paradigm that enables machines to learn optimal behaviors through trial and error, much like humans do. From AlphaGo defeating world champions to autonomous vehicles navigating complex traffic scenarios, these algorithms have proven their capacity to solve problems that were once considered insurmountable. Understanding how to implement these systems isn't just an academic exercise—it's becoming an essential skill for developers, data scientists, and engineers who want to build intelligent systems that can adapt and improve over time.

Reinforcement learning represents a distinct approach to machine learning where an agent learns to make decisions by interacting with an environment, receiving rewards or penalties based on its actions. Unlike supervised learning, which relies on labeled datasets, or unsupervised learning, which finds patterns in data, this methodology focuses on sequential decision-making and long-term optimization. This guide explores multiple perspectives on implementation—from mathematical foundations to practical coding strategies, from simple tabular methods to sophisticated deep learning architectures.

Throughout this comprehensive exploration, you'll gain practical knowledge about setting up environments, choosing appropriate algorithms, structuring your code for scalability, and debugging common pitfalls. Whether you're building a game-playing AI, optimizing resource allocation systems, or developing robotic control mechanisms, you'll find actionable insights that bridge the gap between theoretical concepts and working implementations. We'll examine real-world considerations, performance optimization techniques, and best practices that experienced practitioners use to build robust reinforcement learning systems.

Understanding the Core Components

Before writing a single line of code, grasping the fundamental architecture of reinforcement learning systems proves essential. Every implementation revolves around several key elements that interact in a continuous loop. The agent represents the learner or decision-maker, the environment encompasses everything the agent interacts with, states describe the current situation, actions are the choices available to the agent, and rewards provide feedback signals that guide learning.

The interaction cycle follows a predictable pattern: the agent observes the current state, selects an action based on its policy, executes that action in the environment, receives a reward signal, and observes the new state. This sequence repeats continuously, with the agent gradually learning which actions lead to better outcomes. The mathematical framework underlying this process is typically modeled as a Markov Decision Process, where the future depends only on the current state and action, not on the history of how you arrived there.
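The observe, act, reward, observe cycle can be sketched in a few lines. This is a minimal illustration, not a real benchmark: `CoinFlipEnv`, `random_policy`, and `run_episode` are hypothetical names invented here, and the environment's `step` returns a simplified `(state, reward, done)` tuple.

```python
import random


class CoinFlipEnv:
    """Hypothetical toy environment: guess the outcome of a coin flip each step."""

    def __init__(self, episode_length=10, seed=0):
        self.episode_length = episode_length
        self.rng = random.Random(seed)
        self.t = 0

    def reset(self):
        self.t = 0
        return self.t  # the state is simply the current step index

    def step(self, action):
        coin = self.rng.randint(0, 1)
        reward = 1.0 if action == coin else 0.0
        self.t += 1
        done = self.t >= self.episode_length
        return self.t, reward, done


def random_policy(state, rng):
    """Ignore the state and pick one of the two actions uniformly."""
    return rng.randint(0, 1)


def run_episode(env, policy, seed=0):
    """Run one episode and return (total_reward, number_of_steps)."""
    rng = random.Random(seed)
    state = env.reset()
    total_reward, steps, done = 0.0, 0, False
    while not done:
        action = policy(state, rng)             # select an action from the policy
        state, reward, done = env.step(action)  # environment returns feedback
        total_reward += reward
        steps += 1
    return total_reward, steps
```

A learning agent would replace `random_policy` with a policy that is updated from the collected rewards.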

"The most critical mistake beginners make is underestimating the importance of proper environment design—your algorithm is only as good as the feedback signals it receives."

Defining Your State Space

The state representation fundamentally determines what information your agent can use for decision-making. A well-designed state space includes all relevant information needed to make optimal decisions while excluding unnecessary details that increase computational complexity. For a chess-playing agent, the state might be the current board configuration; for a stock trading system, it could include price history, volume data, and market indicators.

Continuous state spaces, like robot joint angles or vehicle positions, require different handling than discrete spaces like grid positions or game board states. Many implementations use state normalization to ensure all features have similar scales, preventing some dimensions from dominating the learning process. Feature engineering often plays a crucial role—transforming raw observations into representations that make patterns more apparent to the learning algorithm.
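One common way to normalize states is to standardize each feature with running statistics collected during training. The sketch below assumes observations are flat lists of floats; `RunningNormalizer` is a hypothetical helper written for this illustration, using Welford's online algorithm for the mean and variance.

```python
import math


class RunningNormalizer:
    """Tracks a running mean and variance per feature (Welford's algorithm)."""

    def __init__(self, num_features, eps=1e-8):
        self.count = 0
        self.mean = [0.0] * num_features
        self.m2 = [0.0] * num_features  # running sum of squared deviations
        self.eps = eps

    def update(self, observation):
        """Fold one observation into the running statistics."""
        self.count += 1
        for i, x in enumerate(observation):
            delta = x - self.mean[i]
            self.mean[i] += delta / self.count
            self.m2[i] += delta * (x - self.mean[i])

    def normalize(self, observation):
        """Return the observation shifted and scaled by the running statistics."""
        out = []
        for i, x in enumerate(observation):
            # Fall back to unit variance until at least two samples are seen
            var = self.m2[i] / self.count if self.count > 1 else 1.0
            out.append((x - self.mean[i]) / math.sqrt(var + self.eps))
        return out
```

Calling `update` on every observation and `normalize` before feeding the network keeps features with very different raw scales on comparable ranges.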

Structuring Action Spaces

Action spaces come in two primary varieties: discrete and continuous. Discrete actions, such as moving up, down, left, or right in a grid world, are simpler to implement and work well with classic algorithms like Q-learning and SARSA. Continuous actions, like steering angles or motor torques, require specialized approaches such as policy gradient methods or actor-critic architectures.

The size and structure of your action space dramatically impact learning efficiency. Larger action spaces increase the exploration challenge—the agent must try more possibilities to discover good behaviors. Some implementations use action masking to eliminate invalid actions in certain states, reducing the search space and accelerating learning. Hierarchical action spaces break complex decisions into multiple levels, making it easier to learn sophisticated behaviors.
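Action masking for a discrete action space can be as simple as skipping invalid entries when picking the greedy action. This is a minimal sketch: `masked_greedy_action` is a hypothetical helper, and it assumes the environment can supply a boolean validity mask for the current state.

```python
def masked_greedy_action(q_values, valid_mask):
    """Return the index of the highest-valued action among those marked valid."""
    best_action, best_value = None, float("-inf")
    for action, (q, valid) in enumerate(zip(q_values, valid_mask)):
        if valid and q > best_value:
            best_action, best_value = action, q
    if best_action is None:
        raise ValueError("no valid action available in this state")
    return best_action
```

For example, with values `[1.0, 5.0, 3.0]` and mask `[True, False, True]`, the highest-valued action (index 1) is invalid, so index 2 is chosen instead.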

Setting Up Training Environments

The environment serves as the testing ground where your agent learns through experience. Creating or selecting an appropriate environment represents one of the most important implementation decisions. For beginners, starting with standardized environments from OpenAI Gym or similar libraries provides a solid foundation without the complexity of building custom simulation systems from scratch.

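OpenAI Gym, now maintained as Gymnasium by the Farama Foundation, has become the de facto standard for reinforcement learning environments, offering a consistent interface across hundreds of different tasks. In the classic Gym API, an environment implements reset() to initialize a new episode and return the first observation, step(action) to execute an action and return the new state, the reward, a done flag, and an info dictionary, and optionally render() for visualization. Gym 0.26 and Gymnasium changed these signatures (reset() also returns an info dictionary, and step() returns separate terminated and truncated flags), so check which version you have installed. This standardization means algorithms written for one environment can easily transfer to others.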

```python
import gym
import numpy as np


class CustomEnvironment(gym.Env):
    """Skeleton environment using the classic Gym API (step returns a 4-tuple)."""

    def __init__(self):
        super().__init__()
        # Four discrete actions
        self.action_space = gym.spaces.Discrete(4)
        # 84x84 RGB image observations
        self.observation_space = gym.spaces.Box(
            low=0, high=255, shape=(84, 84, 3), dtype=np.uint8
        )
        self.state = None

    def reset(self):
        # _initialize_state is a placeholder to implement for your task
        self.state = self._initialize_state()
        return self.state

    def step(self, action):
        # _apply_action, _calculate_reward, and _check_termination are
        # placeholders for your environment's dynamics
        self.state = self._apply_action(action)
        reward = self._calculate_reward()
        done = self._check_termination()
        info = {}
        return self.state, reward, done, info

    def render(self, mode='human'):
        # Visualization logic here
        pass
```

Reward Function Design

The reward function acts as the primary communication channel between you and your agent, defining what success means. Poorly designed rewards lead to unexpected behaviors—agents that find loopholes, learn unintended strategies, or fail to learn at all. Reward shaping involves crafting intermediate rewards that guide the agent toward desired behaviors without completely specifying the optimal policy.

Sparse rewards, where feedback occurs only at episode completion, create difficult learning problems because the agent receives little guidance during exploration. Dense rewards provide more frequent feedback but risk introducing bias or making the problem more complex. Many practitioners start with sparse rewards to ensure they're solving the right problem, then add shaped rewards if learning proves too slow.
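One well-known way to add shaped rewards on top of a sparse signal is potential-based shaping, which adds `gamma * potential(next_state) - potential(state)` to the environment reward. This is a specific technique chosen here for illustration, not something the text above prescribes. The sketch assumes a hypothetical one-dimensional corridor with a goal at position 10, and it treats the potential of terminal states as zero.

```python
GOAL = 10  # hypothetical goal position in a 1-D corridor


def negative_distance(position):
    """Potential function: states closer to the goal have higher potential."""
    return -abs(GOAL - position)


def shaped_reward(reward, state, next_state, potential, gamma=0.99, done=False):
    """Add a potential-based shaping term to the environment reward."""
    # Assumption: terminal states are given zero potential
    next_potential = 0.0 if done else potential(next_state)
    return reward + gamma * next_potential - potential(state)
```

With `gamma=1.0`, a step toward the goal (3 to 4) earns a bonus of 1.0 and a step away (3 to 2) earns a penalty of 1.0, so the agent gets guidance even when the environment reward is zero.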

"Reward engineering is often more important than algorithm selection—a simple algorithm with well-designed rewards outperforms sophisticated methods with poor feedback signals."

| Reward Strategy | Advantages | Disadvantages | Best Use Cases |
|---|---|---|---|
| Sparse Rewards | Simple to design, clearly defines objective, avoids reward hacking | Slow learning, requires extensive exploration, difficult credit assignment | Well-defined tasks, games with clear win/loss conditions |
| Dense Rewards | Faster learning, provides continuous feedback, easier credit assignment | Risk of unintended behaviors, requires careful design, may not generalize | Continuous control, navigation tasks, robotic manipulation |
| Shaped Rewards | Guides exploration, accelerates learning, can encode domain knowledge | Complex to design, may introduce bias, requires domain expertise | Complex tasks with known subgoals, curriculum learning scenarios |
| Intrinsic Motivation | Encourages exploration, works without external rewards, discovers novel behaviors | Computationally expensive, may distract from main objective, requires tuning | Exploration-heavy environments, open-ended learning, curiosity-driven tasks |

Choosing the Right Algorithm

The reinforcement learning ecosystem offers dozens of algorithms, each with distinct characteristics, strengths, and appropriate use cases. Selecting the right approach depends on multiple factors: whether your action space is discrete or continuous, whether you have access to a model of the environment, how much computational resources you can allocate, and whether you need the policy to be deterministic or stochastic.

Value-based methods like Q-learning and Deep Q-Networks learn to estimate the value of taking each action in each state, then select actions by choosing the highest-valued option. These approaches work excellently for discrete action spaces and benefit from relatively straightforward implementation. Policy-based methods like REINFORCE and Proximal Policy Optimization directly learn a policy that maps states to actions, making them suitable for continuous action spaces and stochastic policies.

🎯 Q-Learning and Deep Q-Networks

Q-learning represents one of the most fundamental algorithms, using a table or function approximator to learn action values. The core update rule adjusts Q-values based on the difference between predicted and observed returns, gradually converging to optimal values. Deep Q-Networks extend this concept by using neural networks to approximate the Q-function, enabling learning in high-dimensional state spaces like images.
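Before moving to neural networks, the tabular form of the update rule is worth seeing on its own. The sketch below assumes hashable states, integer actions, and a dictionary-backed table; `q_learning_update` is a hypothetical helper name.

```python
from collections import defaultdict


def q_learning_update(q_table, state, action, reward, next_state, done,
                      num_actions, alpha=0.1, gamma=0.99):
    """Apply one Q-learning update in place and return the TD error."""
    # The value of the best next action; zero if the episode has ended
    best_next = 0.0 if done else max(
        q_table[(next_state, a)] for a in range(num_actions)
    )
    td_target = reward + gamma * best_next
    td_error = td_target - q_table[(state, action)]
    q_table[(state, action)] += alpha * td_error
    return td_error


# Unseen (state, action) pairs default to a value of 0.0
q_table = defaultdict(float)
```

The TD error is the "difference between predicted and observed returns" mentioned above: the table entry moves a fraction `alpha` of the way toward the bootstrapped target.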

```python
import random
from collections import deque

import torch
import torch.nn as nn
import torch.optim as optim


class DQN(nn.Module):
    """Fully connected network mapping a state to one Q-value per action."""

    def __init__(self, state_size, action_size):
        super().__init__()
        self.fc1 = nn.Linear(state_size, 128)
        self.fc2 = nn.Linear(128, 128)
        self.fc3 = nn.Linear(128, action_size)

    def forward(self, x):
        x = torch.relu(self.fc1(x))
        x = torch.relu(self.fc2(x))
        return self.fc3(x)


class DQNAgent:
    def __init__(self, state_size, action_size):
        self.state_size = state_size
        self.action_size = action_size
        self.memory = deque(maxlen=10000)  # experience replay buffer
        self.gamma = 0.99                  # discount factor
        self.epsilon = 1.0                 # initial exploration rate
        self.epsilon_min = 0.01
        self.epsilon_decay = 0.995
        self.learning_rate = 0.001
        self.model = DQN(state_size, action_size)
        self.target_model = DQN(state_size, action_size)
        self.optimizer = optim.Adam(self.model.parameters(), lr=self.learning_rate)
        self.update_target_model()

    def update_target_model(self):
        # Copy the online network's weights into the target network
        self.target_model.load_state_dict(self.model.state_dict())

    def remember(self, state, action, reward, next_state, done):
        self.memory.append((state, action, reward, next_state, done))

    def act(self, state):
        # Epsilon-greedy: random action with probability epsilon
        if random.random() <= self.epsilon:
            return random.randrange(self.action_size)
        state = torch.FloatTensor(state).unsqueeze(0)
        with torch.no_grad():
            q_values = self.model(state)
        return q_values.argmax().item()

    def replay(self, batch_size):
        if len(self.memory) < batch_size:
            return
        minibatch = random.sample(self.memory, batch_size)
        for state, action, reward, next_state, done in minibatch:
            state = torch.FloatTensor(state).unsqueeze(0)
            next_state = torch.FloatTensor(next_state).unsqueeze(0)
            target = reward
            if not done:
                # Bootstrap from the target network; no gradient is needed here
                with torch.no_grad():
                    target = reward + self.gamma * self.target_model(next_state).max().item()
            current_q = self.model(state)
            # Detach so the target is a constant; only the taken action's entry differs
            target_q = current_q.detach().clone()
            target_q[0][action] = target
            loss = nn.MSELoss()(current_q, target_q)
            self.optimizer.zero_grad()
            loss.backward()
            self.optimizer.step()
        # Decay the exploration rate after each replay call
        if self.epsilon > self.epsilon_min:
            self.epsilon *= self.epsilon_decay
```

The implementation above demonstrates several critical techniques: experience replay stores past transitions and samples them randomly during training, breaking correlations in the data; target networks provide stable learning targets because they are updated only periodically; and epsilon-greedy exploration balances trying new actions with exploiting known good actions.

🚀 Policy Gradient Methods

Policy gradient algorithms optimize the policy directly by computing gradients that increase the probability of actions that led to high rewards. REINFORCE, the simplest policy gradient method, collects complete episodes and updates the policy based on the total return. More sophisticated variants like Advantage Actor-Critic and Proximal Policy Optimization improve sample efficiency and stability.

These methods excel in continuous action spaces where enumerating all possible actions becomes impractical. The policy network outputs parameters of a probability distribution over actions—for continuous actions, typically a Gaussian distribution with learned mean and variance. During training, actions are sampled from this distribution, and the policy is adjusted to make high-reward actions more likely.
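The pieces of a REINFORCE-style update for a one-dimensional Gaussian policy can be sketched in plain Python. This is only an illustration of the arithmetic: in practice these quantities would be computed on framework tensors so that gradients flow back into the policy network. All function names here are hypothetical.

```python
import math
import random


def discounted_returns(rewards, gamma=0.99):
    """Return-to-go G_t = r_t + gamma * G_{t+1} for every step of an episode."""
    returns = [0.0] * len(rewards)
    running = 0.0
    for t in reversed(range(len(rewards))):
        running = rewards[t] + gamma * running
        returns[t] = running
    return returns


def sample_action(mean, std, rng):
    """Draw a continuous action from the Gaussian policy."""
    return rng.gauss(mean, std)


def gaussian_log_prob(action, mean, std):
    """Log-density of a 1-D Gaussian policy evaluated at the sampled action."""
    var = std ** 2
    return (-((action - mean) ** 2) / (2 * var)
            - math.log(std) - 0.5 * math.log(2 * math.pi))


def reinforce_loss(log_probs, returns):
    """Surrogate loss: minimizing it raises the probability of high-return actions."""
    return -sum(lp * g for lp, g in zip(log_probs, returns))
```

For rewards `[1, 1, 1]` and `gamma=0.5`, `discounted_returns` gives `[1.75, 1.5, 1.0]`, so earlier actions are credited with more of the episode's reward.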

"Policy gradient methods shine when you need smooth, continuous control, but they require careful hyperparameter tuning and often need more samples than value-based approaches."

⚖️ Actor-Critic Architectures

Actor-critic methods combine the best aspects of value-based and policy-based approaches. The actor learns a policy that selects actions, while the critic learns a value function that evaluates those actions. This combination reduces variance in policy gradient estimates, leading to more stable and efficient learning. The critic provides a baseline that helps the actor understand whether an action was better or worse than average.
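A common choice for the critic's signal is the one-step temporal-difference advantage, which compares the observed reward plus the estimated value of the next state against the critic's estimate for the current state. A minimal sketch, with `td_advantage` as a hypothetical helper name:

```python
def td_advantage(reward, value, next_value, done, gamma=0.99):
    """One-step advantage estimate: r + gamma * V(s') - V(s)."""
    # No bootstrapping past the end of an episode
    bootstrap = 0.0 if done else next_value
    return reward + gamma * bootstrap - value
```

A positive result means the action turned out better than the critic expected, so the actor should make it more likely; a negative result means the opposite.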

Popular implementations like A3C, A2C, and SAC have achieved impressive results across diverse domains. Asynchronous Advantage Actor-Critic runs multiple agents in parallel, each exploring different parts of the state space, dramatically accelerating learning. Soft Actor-Critic adds an entropy term that encourages exploration and has shown remarkable sample efficiency in continuous control tasks.

Building a Complete Training Pipeline

Implementing the algorithm itself represents just one piece of a larger system. A production-ready reinforcement learning pipeline includes data collection, preprocessing, training loops, evaluation protocols, checkpointing, logging, and visualization. Organizing these components thoughtfully makes the difference between a research prototype and a maintainable system that can scale to complex problems.

The training loop forms the heart of your implementation, orchestrating the interaction between agent and environment. A typical structure involves resetting the environment at the start of each episode, running the agent until termination or a maximum step limit, collecting transitions, performing learning updates, and periodically evaluating performance. Proper error handling and resource management prevent training runs from failing hours into execution.

```python
from collections import deque

import numpy as np


def train_agent(env, agent, num_episodes=1000, max_steps=500, batch_size=32):
    scores = []
    scores_window = deque(maxlen=100)  # scores of the last 100 episodes
    for episode in range(1, num_episodes + 1):
        state = env.reset()
        score = 0
        for step in range(max_steps):
            action = agent.act(state)
            next_state, reward, done, info = env.step(action)
            agent.remember(state, action, reward, next_state, done)
            agent.replay(batch_size)
            state = next_state
            score += reward
            if done:
                break
        scores_window.append(score)
        scores.append(score)

        # Update target network periodically
        if episode % 10 == 0:
            agent.update_target_model()

        # Logging
        if episode % 100 == 0:
            print(f'Episode {episode}\tAverage Score: {np.mean(scores_window):.2f}')

        # Early stopping if solved. 195.0 is the CartPole-v0 threshold; adjust
        # it per task. Require a full window so a few lucky episodes don't count.
        window_full = len(scores_window) == scores_window.maxlen
        if window_full and np.mean(scores_window) >= 195.0:
            print(f'Environment solved in {episode} episodes!')
            break
    return scores
```

🔍 Exploration Strategies

Balancing exploration and exploitation stands as one of the central challenges in reinforcement learning. Pure exploitation means the agent only takes actions it currently believes are best, potentially missing better strategies it hasn't discovered. Pure exploration means randomly trying actions without regard for what it has learned, wasting time on known poor choices.

Epsilon-greedy exploration, where the agent takes random actions with probability epsilon and greedy actions otherwise, provides a simple but effective baseline. The epsilon parameter typically decays over time, starting with high exploration early in training and gradually shifting toward exploitation as the agent gains experience. More sophisticated approaches include Boltzmann exploration, which samples actions with probability proportional to the exponential of their estimated values (a softmax), and Upper Confidence Bound methods that explicitly track uncertainty.

- Epsilon-Greedy: Simple to implement, works well for discrete actions, requires tuning decay schedule

- Boltzmann Exploration: Provides smooth probability distribution, controlled by temperature parameter, naturally balances exploration

- Ornstein-Uhlenbeck Noise: Designed for continuous actions, adds temporally correlated noise, commonly used with DDPG

- Parameter Space Noise: Adds noise to network weights rather than actions, encourages consistent exploration

- Curiosity-Driven Exploration: Provides intrinsic rewards for novel states, effective in sparse reward environments, computationally intensive
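As a concrete example from the list above, Boltzmann exploration turns estimated action values into sampling probabilities with a temperature-scaled softmax. A minimal sketch, with hypothetical function names:

```python
import math
import random


def boltzmann_probabilities(q_values, temperature=1.0):
    """Softmax over action values; higher temperature gives flatter probabilities."""
    max_q = max(q_values)  # subtract the max for numerical stability
    exps = [math.exp((q - max_q) / temperature) for q in q_values]
    total = sum(exps)
    return [e / total for e in exps]


def boltzmann_action(q_values, temperature, rng):
    """Sample an action index according to the Boltzmann probabilities."""
    probs = boltzmann_probabilities(q_values, temperature)
    return rng.choices(range(len(q_values)), weights=probs, k=1)[0]
```

A high temperature approaches uniform random exploration, while a temperature near zero approaches greedy action selection.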

📊 Monitoring and Debugging

Reinforcement learning debugging presents unique challenges because failures can stem from multiple sources: environment bugs, algorithm implementation errors, poor hyperparameters, or simply insufficient training time. Comprehensive logging and visualization prove essential for diagnosing issues and understanding learning dynamics.

Track multiple metrics beyond just episode rewards: average Q-values, policy entropy, loss values, exploration rate, episode length, and success rate. Plotting these over time reveals patterns that indicate specific problems. Steadily increasing Q-values suggest learning is occurring, though values that keep growing far beyond the achievable return usually indicate overestimation; rapidly decreasing policy entropy might indicate premature convergence to suboptimal policies; exploding losses point to instability in the learning process.

"If your agent isn't learning, check the basics first: Is the reward signal actually changing? Is the agent exploring enough? Are gradients flowing through the network? Simple sanity checks catch most issues."

| Problem Symptom | Possible Causes | Diagnostic Steps | Solutions |
|---|---|---|---|
| No Learning | Insufficient exploration, wrong learning rate, broken reward signal | Check if rewards vary, verify gradient magnitudes, test random policy performance | Increase exploration, adjust learning rate, simplify environment, check reward calculation |
| Unstable Learning | Learning rate too high, lack of normalization, replay buffer issues | Plot loss curves, check gradient norms, visualize Q-value distributions | Reduce learning rate, normalize states/rewards, use gradient clipping, increase batch size |
| Catastrophic Forgetting | Insufficient replay buffer, correlated samples, large policy updates | Monitor performance on previous states, check buffer diversity | Increase buffer size, improve sampling strategy, reduce update frequency |
| Slow Convergence | Network too small/large, poor exploration, suboptimal hyperparameters | Compare to baseline implementations, profile computational bottlenecks | Tune network architecture, adjust exploration parameters, use curriculum learning |

Scaling to Complex Problems

Moving from toy problems to real-world applications requires additional techniques that improve sample efficiency, stability, and generalization. Simple implementations that work on CartPole often fail on high-dimensional observation spaces, long-horizon tasks, or environments with complex dynamics. Advanced methods address these challenges through better architectures, training procedures, and algorithmic improvements.

Prioritized experience replay samples important transitions more frequently, focusing learning on experiences where the agent was most surprised. Transitions with large temporal difference errors receive higher sampling probability, accelerating learning on difficult aspects of the task. Hindsight experience replay, particularly valuable for sparse reward problems, artificially creates successful trajectories by relabeling goals after the fact.
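The proportional variant of prioritized replay can be sketched as follows: each transition's priority is its absolute TD error raised to a power `alpha`, and sampling probabilities are the normalized priorities. The function names and default values below are illustrative assumptions, and a real implementation would typically use a sum-tree for efficiency rather than recomputing all probabilities.

```python
import random


def sampling_probabilities(td_errors, alpha=0.6, eps=1e-3):
    """Convert TD errors into sampling probabilities.

    alpha=0 recovers uniform sampling; eps keeps zero-error transitions sampleable.
    """
    priorities = [(abs(e) + eps) ** alpha for e in td_errors]
    total = sum(priorities)
    return [p / total for p in priorities]


def sample_indices(td_errors, batch_size, rng, alpha=0.6):
    """Sample buffer indices (with replacement) in proportion to priority."""
    probs = sampling_probabilities(td_errors, alpha)
    return rng.choices(range(len(td_errors)), weights=probs, k=batch_size)
```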

🧠 Network Architecture Considerations

The neural network architecture significantly impacts learning performance. For image-based observations, convolutional layers extract spatial features efficiently. Recurrent networks or transformers handle partial observability by maintaining memory of past states. Dueling network architectures separate value and advantage streams, improving learning stability in value-based methods.

Network size involves tradeoffs: larger networks can represent more complex functions but require more data and computation to train; smaller networks train faster but may lack capacity for difficult tasks. Starting with proven architectures from published papers provides a solid baseline before experimenting with modifications. Proper initialization schemes like Xavier or He initialization help prevent vanishing or exploding gradients early in training.

⚡ Parallel and Distributed Training

Many modern reinforcement learning implementations leverage parallelism to accelerate training. Multiple environment instances run simultaneously, each collecting experience that contributes to learning. This approach increases sample collection rate and improves exploration by having different agents explore different regions of the state space concurrently.

Asynchronous methods like A3C run independent learners that periodically sync with a central parameter server. Synchronous approaches like A2C wait for all workers to complete before updating, providing more stable gradients at the cost of waiting for the slowest worker. GPU acceleration becomes crucial for deep networks, with careful batching and data transfer minimizing computational bottlenecks.

"Parallelization is not just about speed—running multiple agents simultaneously improves exploration and makes learning more robust to the stochasticity of individual trajectories."

🎓 Curriculum Learning and Transfer

Curriculum learning starts with simpler versions of a task and gradually increases difficulty as the agent improves. This approach prevents the agent from becoming overwhelmed by complexity early in training when it has little knowledge. For a robotic manipulation task, you might start with objects in easy-to-grasp positions before introducing challenging orientations.

Transfer learning leverages knowledge from related tasks to accelerate learning on new problems. Pre-training on simpler or related environments provides a better initialization than random weights. Domain randomization, where training occurs across varied environment parameters, improves robustness and facilitates transfer from simulation to real-world deployment. Fine-tuning pre-trained policies often requires fewer samples than training from scratch.

Production Deployment Strategies

Transitioning from research code to production systems introduces concerns beyond algorithm performance. Reliability, maintainability, monitoring, and safe deployment become paramount. Real-world systems must handle edge cases, degrade gracefully under unexpected conditions, and provide interpretable outputs that stakeholders can understand and trust.

Containerization with Docker ensures consistent environments across development, testing, and production. Version control for both code and trained models enables rollback if deployed policies perform poorly. Continuous integration pipelines automatically test changes, catching bugs before they reach production. Monitoring dashboards track key performance indicators in real-time, alerting engineers to anomalies or degradation.

🛡️ Safety and Constraints

Reinforcement learning agents can discover unexpected behaviors that technically maximize reward but violate implicit constraints or safety requirements. Constrained reinforcement learning explicitly incorporates safety constraints into the optimization problem, ensuring the agent respects limits on resource usage, risk, or prohibited actions.

Safe exploration techniques prevent the agent from taking dangerous actions during training. Conservative policy updates limit how much the policy can change between iterations, avoiding catastrophic performance drops. Formal verification methods prove that policies satisfy certain properties, though these techniques currently apply only to relatively simple systems. Human-in-the-loop approaches allow operators to intervene when the agent attempts risky actions.

📈 Hyperparameter Optimization

Reinforcement learning algorithms expose numerous hyperparameters that significantly impact performance: learning rates, discount factors, network architectures, batch sizes, exploration parameters, and more. Manual tuning proves time-consuming and may miss optimal configurations. Automated hyperparameter search using grid search, random search, or Bayesian optimization systematically explores the parameter space.
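Random search is the simplest of these to sketch. The example below assumes a user-supplied `evaluate(config)` function that trains an agent and returns a score. The search ranges, `sample_config`, and the stand-in `toy_evaluate` are all hypothetical and exist only to make the sketch runnable.

```python
import math
import random


def sample_config(rng):
    """Draw one hyperparameter configuration from illustrative ranges."""
    return {
        "learning_rate": 10 ** rng.uniform(-5, -2),  # log-uniform sampling
        "gamma": rng.choice([0.95, 0.99, 0.995]),
        "batch_size": rng.choice([32, 64, 128]),
    }


def random_search(evaluate, num_trials, seed=0):
    """Evaluate random configurations and return the best (config, score)."""
    rng = random.Random(seed)
    best_config, best_score = None, float("-inf")
    for _ in range(num_trials):
        config = sample_config(rng)
        score = evaluate(config)
        if score > best_score:
            best_config, best_score = config, score
    return best_config, best_score


def toy_evaluate(config):
    """Stand-in for a training run: pretends a learning rate of 1e-3 is ideal."""
    return -abs(math.log10(config["learning_rate"]) + 3)
```

In a real project, `evaluate` would run training (ideally across several seeds) and return a metric such as mean evaluation return.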

Population-based training evolves a population of agents with different hyperparameters, periodically copying parameters from high-performing agents and mutating hyperparameters. This approach adapts hyperparameters during training rather than fixing them at the start. Meta-learning methods learn hyperparameter schedules that work well across multiple tasks, reducing the tuning burden for new problems.

💾 Model Management and Versioning

Trained reinforcement learning models require careful management. Saving checkpoints at regular intervals protects against training failures and enables analysis of learning dynamics. Storing both model weights and the exact code version, hyperparameters, and environment configuration ensures reproducibility. Model registries provide centralized storage with metadata, versioning, and access control.

A/B testing compares new policies against existing ones before full deployment. Shadow mode runs the new policy alongside the production system, logging what actions it would take without actually executing them. Gradual rollout deploys new policies to a small percentage of users initially, monitoring for issues before expanding coverage. These strategies minimize risk when deploying updated policies.

Avoiding Common Implementation Mistakes

Even experienced practitioners encounter subtle bugs and conceptual errors when implementing reinforcement learning systems. Awareness of common pitfalls helps you avoid hours of debugging and failed training runs. Many issues arise from mismatches between mathematical formulations and code implementations, incorrect handling of terminal states, or subtle bugs in environment dynamics.

Off-by-one errors in discount factor application, incorrect handling of episode boundaries in value calculations, and improper normalization of observations or rewards cause surprisingly frequent problems. The agent might appear to learn initially but plateau far below expected performance, or training might oscillate between good and poor performance. Systematic testing and comparison against reference implementations help identify these issues.

🐛 State Representation Issues

Providing the agent with insufficient information in the state representation prevents it from learning optimal policies. If the optimal action depends on information not included in the state, the environment becomes partially observable, and the agent cannot distinguish between situations requiring different actions. Conversely, including too much irrelevant information increases the difficulty of learning by expanding the state space unnecessarily.

Numerical issues with state representations cause subtle problems. Unnormalized features with vastly different scales bias learning toward the larger-magnitude features. States with high variance make it difficult for neural networks to learn stable representations. Applying standardization or normalization to observations, ensuring all features have similar ranges, dramatically improves learning stability and speed.

⚠️ Reward Engineering Mistakes

Misaligned reward functions lead to policies that optimize the specified reward but fail to achieve the intended objective. The agent exploits any loopholes or shortcuts in the reward function, discovering behaviors that technically maximize reward while violating the spirit of the task. This reward hacking occurs more frequently than many practitioners expect, requiring careful reward design and testing.

Reward scaling affects learning dynamics significantly. Very small rewards can be lost in numerical precision, while very large rewards cause instability in gradient-based updates. Clipping rewards to a fixed range provides a simple solution but may lose information about relative magnitudes. Reward normalization based on running statistics adapts to the natural scale of the problem.
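Reward clipping is a one-line operation, and a short sketch makes the information loss visible. `clip_reward` and its default range of [-1, 1] are illustrative choices, not a recommendation for every task.

```python
def clip_reward(reward, low=-1.0, high=1.0):
    """Clamp a reward into the range [low, high]."""
    return max(low, min(high, reward))
```

With the default range, rewards of 5 and 100 both become 1.0, which stabilizes updates but hides the fact that one outcome was twenty times better than the other.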

"The hardest bugs to find are the ones where your code does exactly what you told it to do, but you didn't realize what you were actually asking for—reward functions are particularly susceptible to this problem."

🔄 Training Instability

Neural network training in reinforcement learning suffers from unique instability issues beyond those in supervised learning. The non-stationary nature of the data distribution—the agent's behavior changes during training, altering the states it visits—violates assumptions of many optimization algorithms. Correlations between consecutive samples can cause the network to overfit to recent experiences.

Several techniques mitigate instability: target networks update slowly, providing stable learning targets; gradient clipping prevents individual large gradients from causing destructive updates; learning rate schedules reduce the step size over time as the policy improves; and batch normalization or layer normalization stabilize internal representations. Monitoring gradient norms and loss values helps detect instability early before it derails training completely.
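Gradient clipping by global norm can be illustrated without any framework. The sketch below treats the gradient as a flat list of floats and is meant only to show the arithmetic; deep learning frameworks provide their own utilities for this, and `clip_by_global_norm` is a hypothetical name.

```python
import math


def clip_by_global_norm(gradients, max_norm):
    """Rescale the gradient so its L2 norm is at most max_norm.

    Returns the (possibly rescaled) gradient and the original norm,
    which is useful to log when monitoring training stability.
    """
    total_norm = math.sqrt(sum(g * g for g in gradients))
    if total_norm <= max_norm or total_norm == 0.0:
        return list(gradients), total_norm
    scale = max_norm / total_norm
    return [g * scale for g in gradients], total_norm
```

Because every component is scaled by the same factor, the update direction is preserved and only its size is limited.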

Evaluation and Performance Analysis

Proper evaluation distinguishes genuinely capable policies from those that merely overfit to training environments. Reinforcement learning agents can appear successful during training but fail when deployed in slightly different conditions. Rigorous testing across diverse scenarios, including edge cases and adversarial situations, reveals true policy robustness and generalization capability.

Evaluation protocols should separate training and test environments completely. The agent should never see test scenarios during training to prevent overfitting. Multiple random seeds ensure results aren't due to lucky initialization. Statistical significance testing determines whether performance differences between algorithms or hyperparameters are meaningful rather than noise. Confidence intervals provide context about performance variability.
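Reporting a mean with a confidence interval across seeds takes only a few lines. This sketch uses a normal approximation (z = 1.96 for roughly 95% coverage), which is an assumption made for simplicity; with only a handful of seeds, a t-distribution or a bootstrap interval is usually more appropriate.

```python
import math
import statistics


def mean_confidence_interval(scores, z=1.96):
    """Return (mean, lower, upper) for per-seed scores, normal approximation."""
    n = len(scores)
    if n < 2:
        raise ValueError("need at least two seeds to estimate variability")
    mean = statistics.mean(scores)
    # Standard error of the mean from the sample standard deviation
    half_width = z * statistics.stdev(scores) / math.sqrt(n)
    return mean, mean - half_width, mean + half_width
```

If the intervals of two algorithms overlap heavily, the observed difference may well be noise rather than a real improvement.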

📉 Metrics Beyond Average Return

Average episode return provides a convenient single-number summary but obscures important aspects of policy behavior. Examining the distribution of returns reveals risk—does the policy consistently achieve moderate performance, or does it alternate between excellent and terrible outcomes? Worst-case performance matters for safety-critical applications where occasional failures are unacceptable.

Task-specific metrics often provide more meaningful evaluation than generic reward metrics. For a recommendation system, click-through rate, user satisfaction, and diversity of recommendations matter more than the abstract reward signal used during training. For robotic control, success rate, smoothness of motion, and energy efficiency complement reward-based evaluation. Defining these metrics early guides algorithm development toward practically useful policies.

🔬 Ablation Studies

Ablation studies systematically remove or modify components of your implementation to understand their contribution to overall performance. Does prioritized replay actually help, or would uniform sampling work equally well? How much does the target network matter compared to direct updates? These experiments build understanding of what techniques are essential versus peripheral for your specific problem.
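One simple way to organize such experiments is to generate one configuration per disabled component. The sketch below assumes a hypothetical set of boolean feature flags; the flag names are illustrative and not tied to any particular library.

```python
# Hypothetical feature flags for a DQN-style agent (names are illustrative).
BASE_CONFIG = {
    "prioritized_replay": True,
    "target_network": True,
    "gradient_clipping": True,
}


def ablation_configs(base):
    """Return the full configuration plus one variant per disabled component."""
    configs = {"full": dict(base)}
    for name in base:
        variant = dict(base)
        variant[name] = False  # switch off exactly one component
        configs[f"no_{name}"] = variant
    return configs


for run_name, config in ablation_configs(BASE_CONFIG).items():
    print(run_name, config)
```

Each variant should then be trained with the same seeds and budget as the full configuration so that differences can be attributed to the removed component.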

Comparing against baselines establishes context for your results. A random policy provides a lower bound on performance; a hand-crafted heuristic policy offers a domain-specific comparison; published results on the same environment indicate whether your implementation achieves competitive performance. Significant deviations from expected baselines suggest implementation bugs or environmental differences requiring investigation.
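A common way to put results in context is to rescale the agent's return so that the random policy maps to 0 and a reference baseline maps to 1. The sketch below assumes you have already measured all three average returns; the function name and numbers are illustrative.

```python
def normalized_score(agent_return, random_return, reference_return):
    """Rescale a return so the random policy scores 0 and the reference scores 1."""
    span = reference_return - random_return
    if span == 0:
        raise ValueError("reference and random baselines must differ")
    return (agent_return - random_return) / span


# Illustrative numbers: random policy 20, hand-crafted heuristic 100, agent 60.
score = normalized_score(agent_return=60.0, random_return=20.0, reference_return=100.0)
print(score)  # 0.5: the agent closes half the gap between random and the heuristic
```

A score near or below 0 after substantial training is a strong hint that something in the implementation or environment setup needs investigation.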

🎯 Generalization Testing

A capable policy must generalize beyond its training conditions. Procedural generation creates an effectively unlimited supply of environment variations, testing whether the agent learned robust strategies or memorized specific scenarios. Domain randomization varies environment parameters like friction, mass, or visual appearance during training, forcing the agent to learn policies that work across conditions.
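Domain randomization can be as simple as drawing fresh physical parameters at the start of every episode. The sketch below assumes a simulator that accepts friction and mass multipliers; the `PhysicsParams` class and the sampling ranges are hypothetical and would need to match your own environment.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class PhysicsParams:
    friction: float
    mass: float


def sample_params(rng):
    """Draw one set of physics parameters from hypothetical training ranges."""
    return PhysicsParams(
        friction=rng.uniform(0.5, 1.5),
        mass=rng.uniform(0.8, 1.2),
    )


rng = random.Random(0)
# One parameter set per training episode; pass each to the simulator on reset.
episode_params = [sample_params(rng) for _ in range(3)]
for params in episode_params:
    print(params)
```

Holding out a separate range of parameters for evaluation gives a direct measure of whether the policy generalizes beyond the values it saw during training.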

Zero-shot transfer tests whether policies trained in one environment work in related but different environments without additional training. Few-shot adaptation evaluates how quickly policies can adjust to new conditions given limited experience. These tests reveal whether the agent learned transferable skills or merely overfit to the training distribution. Failure to generalize indicates the need for more diverse training, better state representations, or different algorithmic approaches.

Essential Tools and Libraries

The reinforcement learning ecosystem offers mature libraries that handle low-level details, allowing you to focus on algorithm design and application-specific challenges. Choosing appropriate tools accelerates development and provides battle-tested implementations of complex techniques. Understanding the strengths and limitations of different libraries helps you select the right foundation for your project.

Stable Baselines3 provides high-quality implementations of popular algorithms with consistent APIs and extensive documentation. Built on PyTorch, it offers excellent starting points for most projects. Ray RLlib focuses on scalability, with built-in support for distributed training and a wide variety of algorithms. TensorFlow Agents integrates tightly with the TensorFlow ecosystem, offering both high-level and low-level APIs for different use cases.

🛠️ Environment Libraries

The interface introduced by OpenAI Gym remains the standard for reinforcement learning environments, with many pre-built environments and a simple API for creating custom ones. The original Gym package is no longer actively maintained; Gymnasium, its maintained fork from the Farama Foundation, provides ongoing updates and improvements and is the better choice for new projects. PettingZoo extends the Gym interface to multi-agent scenarios, enabling research on cooperation and competition between agents.
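To show the shape of that interface without requiring any installation, the sketch below defines a toy corridor environment whose `reset` and `step` methods return values in the same form as Gymnasium's: `reset` returns `(observation, info)` and `step` returns `(observation, reward, terminated, truncated, info)`. The `CorridorEnv` class is a hypothetical stand-in; a real custom environment would subclass `gymnasium.Env` and declare its observation and action spaces.

```python
import random


class CorridorEnv:
    """Toy 1-D corridor that mirrors the Gymnasium reset/step return shapes."""

    def __init__(self, length=5, max_steps=20):
        self.length = length
        self.max_steps = max_steps
        self.position = 0
        self.steps = 0

    def reset(self, seed=None, options=None):
        # This toy environment is deterministic, so the seed is accepted but unused.
        self.position = 0
        self.steps = 0
        return self.position, {}

    def step(self, action):
        # Action 1 moves right; any other action moves left (clamped at the wall).
        self.position = max(0, self.position + (1 if action == 1 else -1))
        self.steps += 1
        terminated = self.position == self.length - 1  # reached the goal
        truncated = (not terminated) and self.steps >= self.max_steps  # time limit
        reward = 1.0 if terminated else 0.0
        return self.position, reward, terminated, truncated, {}


env = CorridorEnv()
obs, info = env.reset(seed=0)
rng = random.Random(0)
episode_return, done = 0.0, False
while not done:
    obs, reward, terminated, truncated, info = env.step(rng.choice([0, 1]))
    episode_return += reward
    done = terminated or truncated
print(episode_return)
```

Keeping `terminated` (the task ended) separate from `truncated` (a time limit cut the episode short) matters for value-based methods, because only true termination should zero out the bootstrapped value of the next state.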

Specialized libraries target specific domains: MuJoCo and PyBullet for physics simulation and robotics; the Arcade Learning Environment (ALE) for classic Atari video games; Procgen for procedurally generated environments that test generalization; CARLA for autonomous driving simulation. These domain-specific tools provide realistic testing grounds for algorithms intended for real-world deployment.

📊 Visualization and Analysis

TensorBoard provides real-time visualization of training metrics, model graphs, and histograms of weights and gradients, along with a view for comparing hyperparameter settings. Weights & Biases offers cloud-based experiment tracking with advanced visualization, hyperparameter sweeps, and collaboration features. MLflow manages the complete machine learning lifecycle, including experiment tracking, model packaging, and deployment.

Custom visualization tools help understand policy behavior beyond aggregate metrics. Recording videos of agent behavior reveals qualitative aspects that numbers miss. Attention visualization shows what the agent focuses on in visual observations. State visitation heatmaps indicate which regions of the state space the agent explores. These tools provide intuition that guides debugging and improvement efforts.
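A state visitation heatmap needs nothing more than a counter over visited states. The sketch below uses a random walk on a hypothetical 4x4 grid as a stand-in for agent trajectories and renders the counts as text; in practice you would feed in logged states and plot them with your preferred plotting library.

```python
import random
from collections import Counter


def render_heatmap(counts, width, height):
    """Render visit counts for a width x height grid as rows of text."""
    rows = []
    for y in range(height):
        rows.append(" ".join(f"{counts.get((x, y), 0):3d}" for x in range(width)))
    return "\n".join(rows)


# Stand-in for logged agent states: a random walk on a 4x4 grid.
rng = random.Random(0)
x, y = 0, 0
trajectory = [(x, y)]
for _ in range(200):
    dx, dy = rng.choice([(1, 0), (-1, 0), (0, 1), (0, -1)])
    x = min(3, max(0, x + dx))
    y = min(3, max(0, y + dy))
    trajectory.append((x, y))

counts = Counter(trajectory)
print(render_heatmap(counts, width=4, height=4))
```

Cells with zero or very low counts point to regions the agent never explores, which is often the first clue when a policy fails to discover a reward.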

🚀 Deployment Frameworks

Deploying trained policies requires different infrastructure than training. ONNX Runtime provides efficient inference for models exported from various frameworks, with optimizations for different hardware platforms. TensorFlow Serving and TorchServe offer production-ready model serving with versioning, monitoring, and scalability. These tools handle the operational concerns of running models in production environments.

Edge deployment for robotics or embedded systems requires additional considerations. Model quantization reduces precision to decrease memory footprint and increase inference speed with minimal accuracy loss. Pruning removes unnecessary network connections, further reducing model size. Knowledge distillation trains smaller student networks to mimic larger teacher policies, enabling deployment on resource-constrained devices.
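To make quantization concrete, the sketch below applies symmetric 8-bit quantization to a small list of weights: each weight is mapped to an integer in [-127, 127] using a single scale factor, then mapped back. This is a simplified illustration with hypothetical weights; production toolchains typically quantize per tensor or per channel and may also quantize activations.

```python
def quantize_int8(weights):
    """Symmetric quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float weights from the integer representation."""
    return [q * scale for q in quantized]


weights = [0.02, -1.27, 0.635, 0.0]  # hypothetical layer weights
quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print(quantized, scale, max_error)
```

The rounding error per weight is bounded by half the scale factor, which is why layers with a few very large weights tend to lose more precision than layers with a narrow weight range.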

Emerging Trends and Future Directions

Reinforcement learning continues to evolve rapidly, with new techniques addressing current limitations and expanding the range of solvable problems. Staying aware of emerging trends helps you anticipate which methods might benefit your applications and where the field is heading. Many recent advances focus on sample efficiency, offline learning, and bridging the sim-to-real gap for robotic applications.

Offline reinforcement learning learns from fixed datasets without environment interaction, which makes it possible to learn from historical data or expert demonstrations. This approach proves valuable when environment interaction is expensive, dangerous, or impossible. Model-based reinforcement learning builds explicit models of environment dynamics and uses them for planning or for generating synthetic experience, which can substantially improve sample efficiency in many domains.

🤖 Real-World Robotics Applications

Applying reinforcement learning to physical robots presents challenges beyond simulation: sample efficiency becomes critical when each interaction takes real time, safety constraints prevent exploration of dangerous actions, and the reality gap means policies trained in simulation often fail on real hardware. Recent work on sim-to-real transfer, domain randomization, and learning from demonstrations addresses these challenges.

Combining reinforcement learning with classical control theory leverages the strengths of both approaches. Hierarchical methods learn high-level strategies with reinforcement learning while using traditional controllers for low-level execution. This division of labor often proves more sample-efficient than learning everything from scratch, and provides better safety guarantees through the use of verified low-level controllers.

🌐 Multi-Agent Systems

Many real-world scenarios involve multiple agents interacting, cooperating, or competing. Multi-agent reinforcement learning extends single-agent methods to these settings, but introduces new challenges: non-stationarity as other agents learn and change their behavior, credit assignment when rewards depend on joint actions, and communication or coordination between agents.

Centralized training with decentralized execution provides a practical framework: during training, agents can access global information and coordinate learning, but at deployment, each agent acts based only on local observations. This approach works well for cooperative scenarios like multi-robot coordination. Competitive settings require different techniques, often drawing from game theory to find equilibrium policies.

🧬 Meta-Learning and Adaptation

Meta-learning, or learning to learn, trains agents that can quickly adapt to new tasks with minimal additional experience. Rather than learning a single policy for one task, meta-learning produces policies that can be fine-tuned efficiently for related tasks. This capability becomes increasingly important as we move toward more general-purpose agents that must handle diverse situations.

Continual learning addresses scenarios where the task distribution changes over time. Rather than catastrophically forgetting previous knowledge when learning new tasks, continual learning methods preserve important capabilities while adapting to new conditions. These techniques prove essential for deployed systems that must handle evolving requirements without complete retraining.

How long does it typically take to train a reinforcement learning agent?

Training time varies enormously depending on problem complexity, algorithm choice, and computational resources. Agents in simple environments like CartPole can converge in minutes, while complex tasks like Dota 2 required months of training across thousands of GPUs. Most practical applications fall somewhere in between, with training times ranging from hours to days. Factors affecting training time include state and action space sizes, reward sparsity, the sample efficiency of the chosen algorithm, and available parallelization. Starting with proven hyperparameters from similar problems and using transfer learning can significantly reduce training time.

What's the difference between model-free and model-based reinforcement learning?

Model-free methods learn policies or value functions directly from experience without building an explicit model of how the environment works. They're simpler to implement and make fewer assumptions but typically require more samples. Model-based methods learn a model of environment dynamics—predicting how states change given actions—and use this model for planning or generating synthetic experience. They're more sample-efficient but can suffer if the learned model is inaccurate. Hybrid approaches combining both paradigms often achieve the best results, using models to improve sample efficiency while maintaining the robustness of model-free learning.
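The difference is easiest to see in a tabular setting. The sketch below follows the spirit of Dyna-Q: a Q-learning update is applied once to a real transition (the model-free part), and a learned model then replays that transition as synthetic experience (the model-based part). The `TabularModel` class, the single hand-written transition, and the hyperparameters are assumptions for illustration, and the model assumes deterministic dynamics.

```python
import random
from collections import defaultdict


class TabularModel:
    """Remembers the last observed outcome of each (state, action) pair."""

    def __init__(self, seed=0):
        self.transitions = {}
        self.rng = random.Random(seed)

    def update(self, state, action, reward, next_state):
        self.transitions[(state, action)] = (reward, next_state)

    def sample(self):
        # Draw a previously seen (state, action) pair and its remembered outcome.
        (state, action), (reward, next_state) = self.rng.choice(
            list(self.transitions.items())
        )
        return state, action, reward, next_state


def q_update(q, state, action, reward, next_state, actions, alpha=0.5, gamma=0.9):
    """One Q-learning step toward reward + gamma * max_a Q(next_state, a)."""
    best_next = max(q[(next_state, a)] for a in actions)
    q[(state, action)] += alpha * (reward + gamma * best_next - q[(state, action)])


actions = [0, 1]
q = defaultdict(float)
model = TabularModel()

# One real transition: in state 0, action 1 yields reward 1 and leads to state 1.
model.update(0, 1, 1.0, 1)
q_update(q, 0, 1, 1.0, 1, actions)  # model-free update from real experience

# Planning: five extra updates from synthetic experience drawn from the model.
for _ in range(5):
    q_update(q, *model.sample(), actions)

print(q[(0, 1)])
```

After the single real update the estimate is 0.5; the five planning updates move it close to the true value of 1 without any further environment interaction, which is where the sample-efficiency gain comes from. If the stored transition were wrong, those same planning steps would reinforce the error.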

How do I know if my reward function is well-designed?

A well-designed reward function clearly specifies the objective without introducing unintended incentives. Test it by asking: Does maximizing this reward actually achieve my goal? Are there loopholes or shortcuts that give high reward without solving the problem? Can I write down what optimal behavior looks like, and does this reward encourage it? Start simple with sparse rewards that only signal task completion, then add shaped rewards if learning is too slow. Visualize agent behavior during training—if it's doing something unexpected, the reward function might be misaligned. Remember that the agent will optimize exactly what you specify, not what you intend.
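One established way to add shaping without changing which policies are optimal is potential-based shaping, where the bonus is the discounted change in a potential function: `gamma * phi(next_state) - phi(state)`. The sketch below applies it to a hypothetical corridor task with the goal at position 4; the potential function (negative distance to the goal) and the discount factor are assumptions chosen for illustration.

```python
GOAL = 4  # hypothetical goal position in a 1-D corridor


def potential(position):
    """Higher potential closer to the goal: negative distance."""
    return -abs(GOAL - position)


def shaped_reward(sparse_reward, position, next_position, gamma=0.99, terminal=False):
    """Add the potential-based shaping term to the environment's sparse reward."""
    # The potential of a terminal state is treated as zero.
    next_potential = 0.0 if terminal else potential(next_position)
    return sparse_reward + gamma * next_potential - potential(position)


toward = shaped_reward(0.0, position=1, next_position=2)
away = shaped_reward(0.0, position=2, next_position=1)
final = shaped_reward(1.0, position=3, next_position=4, terminal=True)
print(toward, away, final)
```

Steps toward the goal receive a positive bonus and steps away receive a penalty, so the agent gets a learning signal long before it ever reaches the sparse completion reward.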

Should I use on-policy or off-policy algorithms?

On-policy algorithms like PPO learn from data collected by the current policy, requiring fresh data for each update. They're often more stable but less sample-efficient. Off-policy algorithms like DQN and SAC can learn from data collected by different policies, enabling experience replay and better sample efficiency. Choose on-policy methods when you can collect data quickly and want stable, reliable learning. Choose off-policy methods when sample collection is expensive or you want to learn from demonstrations or historical data. For continuous control, SAC and TD3 (off-policy) often reach strong performance with fewer environment samples than PPO (on-policy). For discrete actions, both DQN (off-policy) and PPO work well.
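The practical difference shows up in how data is stored. Off-policy methods keep a replay buffer of past transitions and sample from it repeatedly, while on-policy methods discard data after each update. A minimal sketch of a uniform replay buffer, with hypothetical class and field names, might look like this:

```python
import random
from collections import deque


class ReplayBuffer:
    """Fixed-capacity buffer that evicts the oldest transition when full."""

    def __init__(self, capacity, seed=None):
        self.storage = deque(maxlen=capacity)
        self.rng = random.Random(seed)

    def add(self, state, action, reward, next_state, done):
        self.storage.append((state, action, reward, next_state, done))

    def sample(self, batch_size):
        # Uniform sampling without replacement from the stored transitions.
        return self.rng.sample(list(self.storage), batch_size)

    def __len__(self):
        return len(self.storage)


buffer = ReplayBuffer(capacity=3, seed=0)
for step in range(5):
    buffer.add(state=step, action=0, reward=0.0, next_state=step + 1, done=False)

batch = buffer.sample(batch_size=2)
print(len(buffer), batch)
```

Because sampled transitions may have been collected by much older versions of the policy, this pattern only suits algorithms designed to learn off-policy.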

How can I debug when my agent isn't learning?

Start with sanity checks: verify the environment returns varying rewards, confirm the agent can overfit to a single episode, test that a random policy achieves non-zero reward, and ensure gradients are flowing through your network. Check that exploration is sufficient—an agent that exploits too early never discovers good strategies. Verify reward scaling isn't too small or large. Compare learning curves against reference implementations on the same environment. Visualize what the agent is doing—often you'll immediately see it's stuck in local optima or hasn't discovered key environment mechanics. Simplify the problem temporarily to isolate issues: reduce state space, make rewards denser, or start with a simpler environment variant.
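The first of these checks is easy to automate. The sketch below runs a uniformly random policy against a reward function and reports whether returns are ever non-zero and whether they vary between episodes. The `toy_reward` function is a hypothetical stand-in for your environment, and the episode count and horizon are arbitrary.

```python
import random
import statistics


def random_policy_returns(reward_fn, n_actions, episodes=50, horizon=10, seed=0):
    """Collect episode returns from a uniformly random policy."""
    rng = random.Random(seed)
    return [
        sum(reward_fn(rng.randrange(n_actions)) for _ in range(horizon))
        for _ in range(episodes)
    ]


def reward_sanity_check(episode_returns):
    """Flag reward signals that are always zero or never vary."""
    return {
        "any_nonzero": any(r != 0 for r in episode_returns),
        "varies": len(set(episode_returns)) > 1,
        "mean": statistics.fmean(episode_returns),
    }


def toy_reward(action):
    # Hypothetical stand-in: only action 2 is rewarded.
    return 1.0 if action == 2 else 0.0


healthy = reward_sanity_check(random_policy_returns(toy_reward, n_actions=3))
broken = reward_sanity_check(random_policy_returns(lambda action: 0.0, n_actions=3))
print(healthy)
print(broken)
```

If the check fails for your environment, fix the reward signal or the exploration setup before spending time on network architecture or hyperparameters.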

What hardware do I need for reinforcement learning?

Hardware requirements depend heavily on your specific problem. Simple environments with small networks run fine on CPUs, completing training in reasonable time on a laptop. High-dimensional observations like images benefit enormously from GPU acceleration—a modern GPU can speed up training by 10-100x compared to CPU-only. Very large-scale projects might use multiple GPUs or distributed training across many machines. For getting started, a single consumer GPU (like an NVIDIA RTX 3060 or better) provides excellent performance for most problems. Cloud platforms like Google Colab offer free GPU access for experimentation. Prioritize getting something working on available hardware before investing in expensive infrastructure.