How to Master Docker Container Management

In today's rapidly evolving technology landscape, the ability to efficiently manage containerized applications has become a critical skill for developers, system administrators, and DevOps professionals. Organizations worldwide are migrating their infrastructure to container-based architectures, making Docker container management not just a nice-to-have skill, but an essential competency that directly impacts deployment speed, application reliability, and operational costs. Whether you're running a small startup or managing enterprise-level infrastructure, understanding how to properly orchestrate, monitor, and optimize Docker containers can mean the difference between seamless operations and costly downtime.

Container management encompasses the entire lifecycle of Docker containers, from initial creation and configuration through deployment, scaling, monitoring, and eventual retirement. It involves understanding networking principles, storage management, security best practices, resource allocation, and orchestration strategies. This comprehensive approach ensures that your containerized applications run smoothly, scale efficiently, and remain secure throughout their operational lifespan while keeping the flexibility and portability that make Docker such a powerful platform.

Throughout this guide, you'll discover practical techniques for managing Docker containers at every stage of their lifecycle, learn industry-proven strategies for optimizing performance and resource utilization, explore essential tools and commands that streamline daily operations, and gain insights into advanced topics like multi-container orchestration and production-ready deployment patterns. You'll walk away with actionable knowledge that you can immediately apply to your projects, whether you're just starting with Docker or looking to refine your existing container management practices.

Understanding Container Lifecycle Fundamentals

The journey of a Docker container begins long before it starts running and extends well beyond its active operation period. Grasping this complete lifecycle is fundamental to effective management. Containers move through distinct states (created, running, paused, stopped, and removed), and each state calls for different management approaches. When you create a container, Docker adds a writable layer on top of the image and records the container's configuration (command, environment, mounts, network settings); network interfaces and IP addresses are attached when the container actually starts. This initial phase sets the foundation for everything that follows, so it pays to configure containers correctly from the start.

"The most common mistake in container management is treating containers like traditional virtual machines rather than ephemeral, stateless entities designed for specific tasks."

Running containers require continuous attention to resource consumption, health status, and performance metrics. Unlike traditional applications, containers should be designed to fail gracefully and restart automatically when issues arise. This philosophy of treating infrastructure as disposable and replaceable represents a fundamental shift in operational thinking. Monitoring running containers involves tracking CPU usage, memory consumption, network traffic, and disk I/O patterns. These metrics provide early warning signs of potential problems and help you make informed decisions about scaling and resource allocation.

The stopping and removal phases are equally important but often overlooked. Properly shutting down containers ensures that applications can save state, close connections gracefully, and release resources cleanly. Docker provides mechanisms for graceful shutdowns through signal handling, allowing containers to perform cleanup operations before termination. Understanding these signals and implementing proper shutdown handlers in your applications prevents data loss and ensures system stability during maintenance windows or scaling operations.
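On the application side, graceful shutdown means catching the stop signal and cleaning up before exiting. The following is a minimal Python sketch, not a prescribed pattern: `cleanup()` and the work function are hypothetical placeholders, and the loop simply polls a flag that the SIGTERM handler sets.

```python
import signal
import threading

# Set once the runtime asks us to stop; `docker stop` sends SIGTERM unless the
# image declares a different STOPSIGNAL.
shutdown_requested = threading.Event()


def handle_sigterm(signum, frame):
    """Signal handler: only record the request; the main loop does the cleanup."""
    shutdown_requested.set()


def install_handler():
    # Must be called from the main thread (a requirement of Python's signal module).
    signal.signal(signal.SIGTERM, handle_sigterm)


def cleanup():
    # Hypothetical cleanup: flush buffers, close connections, finish in-flight work.
    print("flushed state, closed connections")


def run_until_stopped(work, poll_seconds=0.5):
    """Call `work()` repeatedly until SIGTERM arrives, then clean up and return."""
    iterations = 0
    while not shutdown_requested.is_set():
        work()
        iterations += 1
        shutdown_requested.wait(poll_seconds)  # sleeps, but wakes early on shutdown
    cleanup()
    return iterations
```

An entrypoint would call `install_handler()` and then `run_until_stopped(...)` with its real unit of work. Use the exec form of CMD or ENTRYPOINT so the Python process receives the signal directly; in shell form it runs under `/bin/sh -c`, which does not pass signals on.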

Essential Container States and Transitions

Containers exist in several distinct states, and understanding these states helps you diagnose issues and manage operations effectively. The created state represents a container that has been initialized but not yet started: all configuration is complete, but no processes are running. The running state indicates active containers executing their primary processes and consuming system resources. Containers can enter a paused state where all processes are frozen but memory is preserved, useful for temporary suspension without a full shutdown. The stopped state (which Docker reports as exited) means the container's main process has ended, either normally or because of an error, but its filesystem and configuration remain intact for a potential restart. Finally, removed containers have been deleted entirely; only the underlying image remains, along with any named volumes the container used.

| Container State | Description | Resource Usage | Common Use Cases |
|---|---|---|---|
| Created | Container initialized but not started | Minimal (configuration and writable layer only) | Pre-staging containers for rapid deployment |
| Running | Active container with executing processes | CPU, memory, and I/O as consumed, up to any configured limits | Production workloads, active services |
| Paused | Processes frozen, memory preserved | Memory retained, CPU released | Temporary suspension during maintenance |
| Stopped (exited) | Container exited, filesystem preserved | Disk space only | Debugging, log inspection, restart preparation |
| Removed | Container deleted, only image remains | None | Cleanup after completion or failure |

Transitioning between these states requires specific commands, and each transition has implications. Starting a stopped container is faster than creating a new one because its writable layer and configuration already exist. Pausing is a quick way to free CPU without losing memory state, which makes it handy for temporarily reducing system load. Removing stopped containers regularly prevents disk space from accumulating and keeps your environment clean, but removal is permanent: any data not stored in volumes or external systems is lost.
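These transitions can be captured in a small state table. This is a simplified model for illustration, not Docker's internal state machine: Docker reports a stopped container as `exited`, removal deletes the container rather than being a state, and extra states such as `restarting` and `dead` are left out.

```python
# Simplified lifecycle model: (current state, docker subcommand) -> next state.
TRANSITIONS = {
    ("created", "start"): "running",
    ("created", "rm"): "removed",
    ("running", "pause"): "paused",
    ("running", "stop"): "stopped",      # SIGTERM, then SIGKILL after the timeout
    ("running", "kill"): "stopped",      # SIGKILL by default
    ("running", "restart"): "running",
    ("paused", "unpause"): "running",
    ("stopped", "start"): "running",
    ("stopped", "restart"): "running",
    ("stopped", "rm"): "removed",        # a running container needs `docker rm -f`
}


def apply(state: str, command: str) -> str:
    """Return the state after `docker <command>`, or raise if it is not allowed."""
    try:
        return TRANSITIONS[(state, command)]
    except KeyError:
        raise ValueError(f"docker {command} is not valid for a {state} container") from None


# Walk one container through its whole life.
state = "created"
for command in ("start", "pause", "unpause", "stop", "rm"):
    state = apply(state, command)
    print(f"docker {command:8} -> {state}")
```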

Core Management Commands and Operations

Mastering Docker container management begins with fluency in the command-line interface. The Docker CLI provides comprehensive tools for every aspect of container operations, from basic lifecycle management to advanced debugging and optimization. Starting with fundamental commands establishes a solid foundation for more complex operations. The docker run command combines container creation and startup into a single operation, accepting numerous flags that control behavior, resource limits, networking, and volume mounts. Understanding these flags and their implications transforms you from a basic Docker user into a proficient container manager.

Listing containers with docker ps shows currently running containers by default, while adding the -a flag reveals all containers regardless of state. This visibility is essential for troubleshooting and understanding your container landscape. Each container receives a unique ID and a name, either auto-generated or set explicitly with --name. Meaningful names make management significantly easier than auto-generated identifiers, especially when you operate many containers at once. The output includes the image name, the command the container was started with, creation time, status, and published ports.
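For scripting, docker ps can print one JSON object per container with `--format '{{json .}}'`. The sketch below counts containers per state from that output. It assumes a Docker release whose JSON includes a `State` field, and the sample lines are invented for illustration (real output carries more fields).

```python
import json
from collections import Counter


def summarize(ps_output: str) -> Counter:
    """Count containers per State from `docker ps -a --format '{{json .}}'` output."""
    states = Counter()
    for line in ps_output.splitlines():
        if line.strip():
            states[json.loads(line).get("State", "unknown")] += 1
    return states


# Illustrative sample lines for hypothetical containers.
SAMPLE = "\n".join([
    '{"ID":"1a2b3c","Names":"web","Image":"nginx:1.25","State":"running","Status":"Up 2 hours"}',
    '{"ID":"4d5e6f","Names":"migrate","Image":"app:latest","State":"exited","Status":"Exited (0) 1 hour ago"}',
    '{"ID":"7a8b9c","Names":"worker","Image":"app:latest","State":"running","Status":"Up 5 minutes"}',
])

print(summarize(SAMPLE))
```

Against a live host, the input would come from `subprocess.run(["docker", "ps", "-a", "--format", "{{json .}}"], capture_output=True, text=True, check=True).stdout`.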

Starting and Stopping Containers Effectively

The docker start command revives stopped containers, preserving their filesystem state and configuration. This differs fundamentally from creating new containers, as all previous changes to the writable layer remain intact. For applications that keep state or configuration in the container filesystem (a practice that is generally discouraged), starting the existing container ensures continuity. The docker stop command initiates a graceful shutdown by sending the container's stop signal to its main process: SIGTERM, unless the image defines a different STOPSIGNAL. This gives the process a chance to clean up resources and exit normally. If the process is still running after a timeout (10 seconds by default, adjustable with docker stop -t), Docker sends SIGKILL to force termination.

"Understanding the difference between stop and kill is critical—stop gives your application a chance to shut down gracefully, while kill forces immediate termination that can corrupt data or leave resources in inconsistent states."

The docker restart command combines stop and start, which is useful for applying configuration changes or recovering from application errors. However, relying heavily on manual restarts often points to underlying application issues that should be fixed. Modern container management embraces automated recovery through restart policies, which define how Docker responds when a container exits. The --restart flag accepts several values: no (never restart automatically; the default), on-failure (restart only if the container exits with a non-zero status, optionally capped, as in on-failure:5), always (restart regardless of exit status), and unless-stopped (like always, except that a container you stopped manually stays stopped even after the Docker daemon restarts).
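The differences between the policies are easiest to see as a decision function. This is a simplified model written from Docker's documented behavior, not Docker's own code: it ignores, for example, that a policy only takes effect once a container has started successfully and that Docker waits an increasing delay between restart attempts.

```python
def should_restart(policy: str, exit_code: int, manually_stopped: bool = False,
                   daemon_restarted: bool = False, restart_count: int = 0) -> bool:
    """Simplified model of Docker restart policies.

    policy: "no", "always", "unless-stopped", or "on-failure[:max-retries]".
    """
    name, _, max_retries = policy.partition(":")
    if name not in ("no", "always", "unless-stopped", "on-failure"):
        raise ValueError(f"unknown restart policy: {policy!r}")
    if manually_stopped:
        # A manual `docker stop` suspends the policy; after a daemon restart,
        # only "always" brings the container back.
        return daemon_restarted and name == "always"
    if name == "no":
        return False
    if name == "on-failure":
        if exit_code == 0:
            return False
        return not max_retries or restart_count < int(max_retries)
    return True  # "always" and "unless-stopped"
```

The last two test-style cases below capture the one practical difference between always and unless-stopped: what happens to a manually stopped container when the daemon comes back.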

Inspecting and Debugging Container Operations

The docker logs command retrieves output from container processes, essential for troubleshooting and monitoring. By default, Docker captures stdout and stderr from the main process, storing these logs until the container is removed. The --follow flag streams logs in real-time, similar to tail -f, while --tail limits output to the most recent lines. For production systems, configuring log rotation and forwarding logs to external systems prevents disk space exhaustion and enables centralized log analysis. Understanding log drivers—json-file, syslog, journald, gelf, fluentd, and others—allows you to integrate Docker logging with your existing monitoring infrastructure.
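Log rotation for the default json-file driver is controlled by log options. As a sketch, the snippet below builds a daemon-wide configuration for `/etc/docker/daemon.json`; the 10m and 3-file values are arbitrary examples. The settings apply only to containers created after the daemon is restarted, and the same options can be set per container with `--log-opt max-size=10m --log-opt max-file=3`.

```python
import json


def daemon_log_config(max_size: str = "10m", max_file: int = 3,
                      driver: str = "json-file") -> dict:
    """Build a daemon.json fragment that enables log rotation."""
    return {
        "log-driver": driver,
        # Docker expects log-opts values as strings, even numeric ones.
        "log-opts": {"max-size": max_size, "max-file": str(max_file)},
    }


print(json.dumps(daemon_log_config(), indent=2))
```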

The docker inspect command returns comprehensive JSON-formatted information about containers, including network settings, volume mounts, environment variables, resource limits, and health check configurations. This detailed view proves invaluable when debugging connectivity issues or verifying that containers are configured as intended. Using JSON query tools like jq or Docker's built-in --format flag extracts specific information without parsing the entire output manually. For example, retrieving just the IP address or specific environment variables makes scripting and automation much more manageable.
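On the command line, `docker inspect --format '{{range .NetworkSettings.Networks}}{{.IPAddress}}{{end}}' web` prints a container's IP addresses. When you would rather post-process the JSON in a script, a sketch like the following works on the parsed output; the sample is a heavily trimmed, invented `docker inspect web` result, and `web` is a hypothetical container name.

```python
import json

# Trimmed, illustrative `docker inspect web` output (a JSON array); real output is much longer.
INSPECT_OUTPUT = """[{
  "Name": "/web",
  "Config": {"Env": ["PATH=/usr/local/bin:/usr/bin", "APP_ENV=production"]},
  "NetworkSettings": {"Networks": {"backend": {"IPAddress": "172.18.0.5"}}}
}]"""


def ip_addresses(container: dict) -> dict:
    """Map each attached network name to the container's IP address on it."""
    return {name: net["IPAddress"]
            for name, net in container["NetworkSettings"]["Networks"].items()}


def env_vars(container: dict) -> dict:
    """Turn Config.Env's KEY=VALUE strings into a dict."""
    return dict(item.split("=", 1) for item in container["Config"]["Env"])


container = json.loads(INSPECT_OUTPUT)[0]
print(ip_addresses(container), env_vars(container)["APP_ENV"])
```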

Executing commands inside running containers with docker exec provides direct access for debugging and administration. The -i flag keeps standard input open and -t allocates a pseudo-terminal; together (-it) they give you an interactive shell for exploring the container's filesystem, checking process status, or manually testing application components. This capability is powerful but should be used judiciously: regularly needing to exec into containers often signals configuration problems or missing observability tools. For production environments, consider building debugging tools into your images or using specialized debugging containers that share network and process namespaces with target containers.
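A debugging container can join a target's namespaces with `--network container:<name>` and `--pid container:<name>`, so its tools see the same interfaces, ports, and processes without modifying the target image. The helper below only builds that command line; `busybox` is an assumed choice of tool image.

```python
def debug_sidecar_argv(target: str, image: str = "busybox") -> list[str]:
    """Build a `docker run` command for a throwaway debugging container."""
    return [
        "docker", "run", "--rm", "-it",
        "--network", f"container:{target}",  # share the target's network stack
        "--pid", f"container:{target}",      # share the target's process table
        image, "sh",
    ]


print(" ".join(debug_sidecar_argv("web")))
```

Running it is a matter of `subprocess.run(debug_sidecar_argv("web"))`, where `web` is a hypothetical container name; `--rm` ensures the debugging container disappears when you exit the shell.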

Resource Management and Constraints

Controlling resource allocation prevents individual containers from monopolizing system resources and affecting other workloads. Docker provides granular controls for CPU, memory, process count, and block-device I/O. The --memory flag sets a hard cap on the memory a container can use; if the container exceeds it, the kernel's OOM killer terminates processes inside the container, which usually stops the container. Setting memory limits too low causes frequent OOM (out of memory) kills, while setting them too high defeats the purpose of resource isolation. Finding the right balance requires monitoring actual memory usage patterns and adjusting limits accordingly.

Essential Resource Management Commands

- docker run --memory="512m" – Limits container memory to 512 megabytes, preventing memory exhaustion

- docker run --cpus="1.5" – Caps the container at 1.5 CPUs' worth of processor time, which it may spread across any available cores

- docker run --memory-swap="1g" – Sets total memory plus swap space, controlling virtual memory usage

- docker run --cpu-shares="512" – Assigns relative CPU priority when system resources are constrained

- docker run --pids-limit="100" – Restricts maximum process count, preventing fork bombs and runaway processes

- docker update --memory="1g" container_name – Modifies memory limits on running containers without restart

- docker stats – Displays real-time resource usage statistics for all running containers

- docker run --device-read-bps /dev/sda:1mb – Limits read throughput from specific block devices
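The --memory-swap entry in the list above is easy to misread, because its value is memory and swap combined. Docker's documented rules are: a value larger than --memory allows the difference as swap; a value equal to --memory allows no swap; -1 allows unlimited swap; and if it is unset, the container may use as much swap as its --memory value (when the host has swap enabled). A small sketch of that arithmetic, assuming single-letter binary size suffixes only:

```python
UNITS = {"b": 1, "k": 1024, "m": 1024**2, "g": 1024**3}


def parse_size(text: str) -> int:
    """Parse sizes like '512m' or '1g' (binary multiples) into bytes.

    Only single-letter suffixes are handled in this sketch.
    """
    text = text.strip().lower()
    if text[-1] in UNITS:
        return int(float(text[:-1]) * UNITS[text[-1]])
    return int(text)


def swap_allowance(memory: str, memory_swap=None):
    """Bytes of swap a container may use; None means unlimited (--memory-swap=-1)."""
    mem = parse_size(memory)
    if memory_swap is None or memory_swap == "0":
        return mem  # unset: swap equal to --memory, if the host has swap enabled
    if memory_swap == "-1":
        return None  # unlimited swap, bounded only by the host
    total = parse_size(memory_swap)
    if total < mem:
        raise ValueError("--memory-swap must be at least --memory")
    return total - mem


print(swap_allowance("512m", "1g") // 1024**2, "MiB of swap")
```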

CPU management differs from memory in that, by default, Docker applies no hard CPU cap: every container gets the same relative weight (1024 shares). CPU shares are relative weights that only matter under contention: a container with 1024 shares receives twice the CPU time of a container with 512 shares when both are competing for CPU. The --cpus flag sets a hard limit on how much CPU time, measured in cores, a container can use. For workloads with predictable CPU requirements, hard limits ensure consistent performance, while shares work better for variable workloads that benefit from burst capacity when it is available.
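To make the arithmetic concrete: under full contention each container receives CPU in proportion to its shares, while --cpus translates into a CFS quota per scheduling period (Docker documents --cpus=1.5 as equivalent to --cpu-period=100000 --cpu-quota=150000). The sketch deliberately ignores that a container cannot use more cores than it has runnable threads, and that idle capacity is redistributed to whoever needs it.

```python
def contended_cpu(shares: dict[str, int], cores: float) -> dict[str, float]:
    """CPU (in cores) each container receives when all of them are busy at once."""
    total = sum(shares.values())
    return {name: cores * weight / total for name, weight in shares.items()}


def cpus_to_quota(cpus: float, period_us: int = 100_000) -> int:
    """CFS quota (microseconds per period) equivalent to a --cpus value."""
    return round(cpus * period_us)


# Hypothetical containers on a 3-core host, both saturating the CPU.
print(contended_cpu({"api": 1024, "batch": 512}, cores=3))
print(cpus_to_quota(1.5))
```

Here `api` gets 2 cores and `batch` gets 1 while both are busy; if `batch` goes idle, `api` may use all 3 because shares are not caps.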

Networking Configuration and Management

Container networking represents one of the most complex aspects of Docker management, yet understanding it is essential for building functional multi-container applications. Docker creates isolated network environments for containers, controlling how they communicate with each other and the outside world. By default, Docker includes several network drivers—bridge, host, overlay, macvlan, and none—each serving different use cases and providing varying levels of isolation and performance. The bridge network driver creates a private internal network on the host, allowing containers to communicate with each other while providing NAT (Network Address Translation) for external connectivity.

Creating custom bridge networks offers significant advantages over the default bridge network. Containers on custom networks can resolve each other by name through Docker's embedded DNS server, eliminating the need for manual IP address management or link flags. Network isolation improves security by ensuring that only containers explicitly connected to a network can communicate with each other. Multiple networks allow you to segment application tiers—frontend, backend, database—controlling traffic flow and reducing attack surface. Creating a network is straightforward with docker network create, and containers join networks either at creation time with the --network flag or afterward with docker network connect.
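Segmentation on user-defined networks follows one rule: two containers can reach each other directly only if they share at least one network. The sketch models a hypothetical three-tier layout you might build with `docker network create frontend`, `docker network create backend`, `docker network create data`, and `docker network connect data api`; it ignores traffic that goes through published host ports.

```python
# Hypothetical layout: user-defined network name -> containers attached to it.
NETWORKS = {
    "frontend": {"web"},
    "backend": {"web", "api"},
    "data": {"api", "db"},
}


def can_talk(a: str, b: str, networks: dict = NETWORKS) -> bool:
    """True if containers a and b share at least one network."""
    return any(a in members and b in members for members in networks.values())


for pair in [("web", "api"), ("api", "db"), ("web", "db")]:
    print(pair, can_talk(*pair))
```

Because `web` and `db` share no network, a compromised web container has no direct route to the database; it must go through `api`.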

"Proper network segmentation is not just a security best practice—it's fundamental to building scalable, maintainable container architectures where services communicate through well-defined interfaces rather than unrestricted access."

Port Mapping and External Access

Exposing container services to the host or external networks requires port mapping, which forwards traffic from host ports to container ports. The -p flag specifies these mappings in the format host_port:container_port. For example, -p 8080:80 forwards traffic from the host's port 8080 to the container's port 80. Omitting the host port causes Docker to assign a random available port, which avoids conflicts but means you must look up the assigned port (for example with docker port). Publishing all exposed ports with -P maps every port declared in the image's EXPOSE instructions to random host ports.
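The -p value has a few more forms than host_port:container_port: an optional leading host IP, an optional protocol suffix, and a bare container port. A small parser sketch for those IPv4 forms (bracketed IPv6 addresses are out of scope here):

```python
def parse_publish(spec: str) -> dict:
    """Split a -p/--publish value of the form [ip:][host_port:]container_port[/protocol]."""
    ports, _, protocol = spec.partition("/")
    parts = ports.split(":")
    if len(parts) == 1:
        parts = ["", ""] + parts      # container port only
    elif len(parts) == 2:
        parts = [""] + parts          # host_port:container_port
    elif len(parts) != 3:
        raise ValueError(f"unsupported publish spec: {spec!r}")
    ip, host_port, container_port = parts
    return {
        "ip": ip or None,                 # None: bind on all host interfaces
        "host_port": host_port or None,   # None: Docker picks a free host port
        "container_port": container_port,
        "protocol": protocol or "tcp",
    }


for spec in ("8080:80", "127.0.0.1:8080:80/udp", "80"):
    print(spec, parse_publish(spec))
```

Binding to 127.0.0.1 keeps a service reachable only from the host itself, a common choice for admin interfaces placed behind a local reverse proxy.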

Understanding the distinction between EXPOSE and publish is important. The EXPOSE instruction in Dockerfiles documents which ports the container listens on but doesn't actually publish them—it serves as metadata for documentation and as input to the -P flag. Publishing ports with -p or -P creates actual port forwarding rules, making services accessible. For production environments, explicitly specifying port mappings provides predictability and simplifies firewall configuration and load balancer setup.

Advanced Network Configurations

The host network driver removes network isolation, placing containers directly on the host's network stack. This eliminates the performance overhead of network address translation and provides maximum throughput, but sacrifices isolation and portability. Containers using host networking can bind to any host interface and, with sufficient capabilities, observe the host's network traffic, which has security implications. This mode suits high-performance scenarios where network latency is critical and containers run in trusted environments. However, port conflicts become possible since containers share the host's port space, requiring careful coordination.

Overlay networks enable communication between containers running on different Docker hosts, which is essential for distributed applications and container orchestration platforms. These networks use VXLAN encapsulation to create virtual networks spanning multiple hosts, making containers on different machines appear to be on the same local network. In current Docker releases, overlay networks are created through Swarm mode, which handles coordination with its built-in distributed store; the older setup based on an external key-value store is legacy. The hosts' firewalls must allow Swarm and overlay traffic: TCP 2377 for cluster management, TCP and UDP 7946 for node communication, and UDP 4789 for VXLAN data. Understanding overlay networking becomes crucial when scaling beyond single-host deployments or implementing high-availability architectures.

| Network Driver | Use Case | Isolation Level | Performance Impact |
|---|---|---|---|
| Bridge | Single-host container communication | High (separate network namespace) | Low (minimal NAT overhead) |
| Host | Maximum performance requirements | None (shares host network) | None (direct host network access) |
| Overlay | Multi-host container communication | High (encrypted tunnel option) | Medium (VXLAN encapsulation) |
| Macvlan | Legacy applications requiring direct network presence | Medium (separate MAC addresses) | Low (minimal overhead) |
| None | Complete network isolation | Complete (no network access) | None (no networking) |

Macvlan networks assign each container a unique MAC address, making containers appear as physical devices on the network. This driver suits scenarios where applications expect to be directly on the network, such as legacy applications or network monitoring tools. However, macvlan typically requires promiscuous mode on the host network interface, which many cloud providers and network environments don't support. Address planning also becomes your responsibility: Docker assigns container IPs from the subnet you configure, but it doesn't coordinate with your network's DHCP server, so reserve a dedicated range (for example with the --ip-range option of docker network create) to avoid conflicts.

Volume Management and Data Persistence

Containers are ephemeral by design—when removed, all data stored in the container's writable layer disappears. This characteristic aligns with the stateless service model but poses challenges for applications that need to persist data across container lifecycles. Docker volumes solve this problem by providing persistent storage that exists independently of containers. Volumes outlive containers, allowing data to survive container removal, updates, and restarts. They also enable data sharing between containers and offer better performance than bind mounts for many workloads, especially on Windows and macOS where Docker runs in a virtual machine.

Three primary methods exist for mounting data into containers: volumes, bind mounts, and tmpfs mounts. Volumes are managed by Docker and stored in a dedicated area of the host filesystem that non-Docker processes should not modify; Docker handles their lifecycle, permissions, and portability. Bind mounts map host directories or files directly into containers, giving full access to host filesystem paths. While flexible, bind mounts couple containers tightly to specific host layouts, reducing portability. Tmpfs mounts keep data in host memory rather than on disk, which is useful for sensitive information or temporary data that shouldn't persist or be written to disk.

Creating and Managing Volumes

Creating named volumes with docker volume create gives you explicit control over volume lifecycle and configuration. Named volumes can be created before containers start, configured with specific drivers or options, and reused across multiple containers. Anonymous volumes, created automatically when you specify a mount point without a name, serve temporary purposes but are harder to track and manage. Always prefer named volumes for production data that needs to persist beyond container lifecycles.

"The single biggest mistake in container data management is storing persistent data inside containers rather than using volumes—this leads to data loss, difficult backups, and inability to update containers without losing state."

Mounting volumes into containers requires the -v or --mount flag. The newer --mount syntax offers more explicit configuration with key-value pairs, making complex mounts more readable. For example, --mount type=volume,source=my-volume,target=/data clearly specifies the mount type, volume name, and container path. The older -v syntax uses a colon-separated format like -v my-volume:/data, which is more concise but less explicit about mount types and options.
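The correspondence between the two syntaxes is mechanical for the common cases. This sketch translates a -v value into a --mount value, assuming Linux-style paths: an absolute source path means a bind mount, any other source is a volume name, and options other than ro/rw (such as SELinux z/Z labels) are not handled.

```python
def volume_flag_to_mount(spec: str) -> str:
    """Translate a -v/--volume value into an equivalent --mount value."""
    parts = spec.split(":")
    options = []
    if parts[-1] in ("ro", "rw"):
        if parts.pop() == "ro":
            options.append("readonly")
    if len(parts) == 1:
        fields = ["type=volume", f"target={parts[0]}"]  # anonymous volume
    else:
        source, target = parts
        kind = "bind" if source.startswith("/") else "volume"
        fields = [f"type={kind}", f"source={source}", f"target={target}"]
    return ",".join(fields + options)


for spec in ("my-volume:/data", "/srv/config:/etc/app:ro", "/data"):
    print(f"-v {spec}  ->  --mount {volume_flag_to_mount(spec)}")
```

One behavioral difference is not captured by the translation: with -v, a missing bind-mount source directory is created on the host, whereas --mount reports an error.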

Volume Drivers and External Storage

Docker's plugin architecture allows volume drivers to integrate external storage systems (network file systems, cloud storage services, distributed storage platforms) as Docker volumes. The default local driver stores volumes on the host filesystem, which suits single-host deployments and development environments. For production clusters, volume drivers can provide storage that is not tied to a single host: file-based backends such as NFS can be shared by containers on several hosts at once, while block-storage backends generally attach a volume to one host at a time. Drivers exist for backends including NFS, GlusterFS, Ceph, Amazon EBS, Azure Disk, and Google Persistent Disk.

Choosing the right volume driver depends on your infrastructure, performance requirements, and availability needs. Network-attached storage provides shared access but may introduce latency and become a single point of failure. Cloud provider block storage offers high performance and reliability but ties you to specific cloud platforms. Distributed storage systems provide redundancy and scalability but add complexity and operational overhead. Evaluating these trade-offs based on your specific workload characteristics ensures optimal performance and reliability.

Backup and Recovery Strategies

Backing up volume data protects against accidental deletion, corruption, and disaster scenarios. Since volumes exist as directories on the host filesystem (by default under /var/lib/docker/volumes/), traditional backup tools can capture volume data. However, accessing these directories directly requires root privileges and can capture inconsistent data if containers are writing during the backup. A safer approach is to run the backup from a throwaway container that mounts the volume read-only, so the backup process cannot modify the data. For a consistent snapshot, stop or quiesce the containers that write to the volume first, or use the application's own dump tool (for example, a database's export utility).
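The backup-container pattern fits in a single docker run invocation. The helper below builds it: the volume is mounted read-only at /source, a host directory mounted at /backup receives a compressed tar archive, and `alpine` is an assumed image choice (its BusyBox tar supports the flags used). The volume name and host path in the example are hypothetical.

```python
from pathlib import Path


def backup_argv(volume: str, backup_dir: str, image: str = "alpine") -> list[str]:
    """Command that archives a named volume into <backup_dir>/<volume>.tar.gz."""
    return [
        "docker", "run", "--rm",
        "-v", f"{volume}:/source:ro",                     # the volume, mounted read-only
        "-v", f"{Path(backup_dir).resolve()}:/backup",     # host directory for the archive
        image, "tar", "czf", f"/backup/{volume}.tar.gz", "-C", "/source", ".",
    ]


print(" ".join(backup_argv("pgdata", "/var/backups/docker")))
```

Archiving with `-C /source .` stores paths relative to the volume root, so the same archive can be unpacked into a fresh volume during a restore.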

Volume Management Commands

- 🗂️ docker volume create my-volume – Creates a named volume for persistent data storage

- 🗂️ docker volume ls – Lists all volumes on the system, showing names and drivers

- 🗂️ docker volume inspect my-volume – Displays detailed volume information including mount point and options

- 🗂️ docker volume rm my-volume – Removes a volume permanently, deleting all contained data

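- 🗂️ docker volume prune – Removes unused volumes, freeing disk space (since Docker Engine 23.0, only anonymous volumes by default; add --all to include unused named volumes)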

Restoring from backups involves creating new volumes and copying data back. Using containers for restore operations maintains consistency with backup procedures and avoids direct filesystem manipulation. Testing restore procedures regularly ensures that backups are valid and that your recovery process works correctly. Many organizations discover backup problems only when attempting recovery, making regular restore testing a critical operational practice.
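Restore testing can be automated. As a minimal sketch, the function below extracts a .tar.gz backup into a scratch directory and compares SHA-256 digests of every file against the original data. It assumes the archive stores paths relative to the volume root and that the original has not changed since the backup was taken.

```python
import hashlib
import tarfile
import tempfile
from pathlib import Path


def digest_tree(root: Path) -> dict:
    """Map every file's path (relative to root) to its SHA-256 digest."""
    return {
        str(p.relative_to(root)): hashlib.sha256(p.read_bytes()).hexdigest()
        for p in sorted(root.rglob("*")) if p.is_file()
    }


def verify_restore(archive: Path, original: Path) -> bool:
    """Extract a backup into a scratch directory and compare it file by file."""
    with tempfile.TemporaryDirectory() as scratch:
        with tarfile.open(archive, "r:gz") as tar:
            # Use the safe "data" extraction filter where this Python version supports it.
            kwargs = {"filter": "data"} if hasattr(tarfile, "data_filter") else {}
            tar.extractall(scratch, **kwargs)
        return digest_tree(Path(scratch)) == digest_tree(original)
```

Running such a check on a schedule, against a copy of the data taken at backup time, turns "we have backups" into "we have backups we know we can restore".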

Security Best Practices and Hardening

Security in container environments requires attention at multiple layers—image security, runtime security, network security, and host security. Containers share the host kernel, meaning kernel vulnerabilities can potentially affect all containers on a host. This shared kernel model differs fundamentally from virtual machines, which provide stronger isolation through separate kernels. Understanding these security implications shapes how you build, configure, and operate containerized applications. Defense in depth remains the guiding principle—implementing security controls at multiple layers ensures that a breach at one level doesn't compromise the entire system.

Running containers as non-root users significantly reduces security risks. Unless the image or the docker run command specifies another user, container processes run as root, which, combined with a container escape vulnerability, could grant attackers root access to the host system. Creating dedicated users in your Dockerfiles and using the USER instruction to switch to these users before starting application processes limits the damage potential from compromised containers. Some images require root for initialization but can drop privileges before starting the main application, providing a good balance between functionality and security.

Image Security and Vulnerability Management

Container images form the foundation of your security posture. Using official images from trusted registries reduces the risk of malicious code or backdoors. However, even official images contain vulnerabilities, and new ones are discovered constantly. Regularly scanning images for known vulnerabilities helps identify problems before deployment. Tools like Docker Scout (the successor to the deprecated docker scan command), Trivy, Clair, and Anchore analyze images against vulnerability databases and report issues by severity level.
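Trivy can gate a pipeline on its own with `trivy image --exit-code 1 --severity CRITICAL <image>`. When you need custom logic (allow-lists, per-team thresholds), a script can read its JSON report instead. The sketch below assumes the report layout Trivy emits with `--format json` (a `Results` list whose entries may carry a `Vulnerabilities` list); the sample report and CVE IDs are placeholders.

```python
import json


def blocking_findings(report: dict, fail_on=("CRITICAL",)) -> list[str]:
    """IDs of vulnerabilities whose severity should fail the build."""
    found = []
    for result in report.get("Results") or []:
        for vuln in result.get("Vulnerabilities") or []:  # key may be absent or null
            if vuln.get("Severity") in fail_on:
                found.append(vuln["VulnerabilityID"])
    return found


# Trimmed, illustrative report with placeholder IDs.
SAMPLE_REPORT = json.loads("""{"Results": [
  {"Target": "app (alpine 3.19)", "Vulnerabilities": [
    {"VulnerabilityID": "CVE-0000-0001", "Severity": "CRITICAL"},
    {"VulnerabilityID": "CVE-0000-0002", "Severity": "LOW"}]},
  {"Target": "requirements.txt", "Vulnerabilities": null}]}""")

print(blocking_findings(SAMPLE_REPORT))
```

In CI, the script would end with `sys.exit(1 if blocking_findings(report) else 0)` so that a non-empty result fails the build.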

"Security scanning should be integrated into your CI/CD pipeline, failing builds that contain critical vulnerabilities rather than discovering security issues in production environments where remediation is more difficult and costly."

Minimizing image size reduces attack surface by including fewer packages and libraries. Smaller images contain fewer potential vulnerabilities and reduce the code available to attackers. Using minimal base images like Alpine Linux, distroless images, or scratch images (for static binaries) dramatically reduces image size and vulnerability count. Multi-stage builds enable you to compile applications in full-featured builder images while copying only the necessary artifacts to minimal runtime images, achieving both convenience during development and security in production.

Runtime Security Controls

Docker provides several mechanisms for constraining container capabilities and system access. Linux capabilities divide root privileges into distinct units, allowing you to grant containers only the specific privileges they need. By default, Docker drops many capabilities, but you can further restrict containers with the --cap-drop flag or grant specific capabilities with --cap-add. Understanding which capabilities your applications require takes investigation but significantly improves security by following the principle of least privilege.

Security profiles using AppArmor or SELinux provide mandatory access control, defining exactly what resources containers can access, while seccomp profiles restrict which system calls a container may make. These profiles are enforced by the kernel, so their restrictions hold even if an application is compromised. Docker applies a default seccomp profile and, on hosts with AppArmor, a default AppArmor profile; both provide reasonable security for most workloads, and custom profiles allow fine-tuning for specific application requirements. Creating custom profiles requires understanding which files, capabilities, and system calls your application actually uses, but the security benefits justify this investment in high-security environments.

Read-only root filesystems prevent containers from modifying their filesystem, mitigating certain attack vectors. The --read-only flag makes the entire container filesystem read-only, though applications that need to write temporary data require tmpfs mounts for directories like /tmp. This approach forces explicit declaration of writable locations, making it clear where data persistence occurs and preventing attackers from installing malware or modifying system binaries within containers.
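Several of these runtime controls combine naturally in one docker run invocation. The sketch below assembles them: all capabilities dropped, a read-only root filesystem with a tmpfs at /tmp, `--security-opt no-new-privileges`, and a non-root user. The UID 10001 is a hypothetical value that must match a user your image can run as, and capabilities should be added back with --cap-add only when the application demonstrably needs them.

```python
def hardened_run_argv(image: str, name: str, uid: int = 10001,
                      extra_caps: tuple = ()) -> list[str]:
    """Build a `docker run` command that applies several hardening flags."""
    argv = [
        "docker", "run", "-d", "--name", name,
        "--user", f"{uid}:{uid}",                # non-root UID:GID (assumed to suit the image)
        "--read-only",                           # immutable root filesystem
        "--tmpfs", "/tmp",                       # explicit writable scratch space
        "--cap-drop", "ALL",                     # start from no capabilities at all
        "--security-opt", "no-new-privileges",   # block setuid-style privilege escalation
    ]
    for cap in extra_caps:
        argv += ["--cap-add", cap]               # add back only what is proven necessary
    return argv + [image]


print(" ".join(hardened_run_argv("myapp:1.0", "api")))
```

Starting from `--cap-drop ALL` and adding back makes every granted privilege an explicit, reviewable decision, which is the principle of least privilege applied literally.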

Network Security and Isolation

Restricting network access limits the potential impact of compromised containers. Using custom networks to segment application tiers ensures that frontend containers can't directly access database containers, forcing communication through designated API layers. This network segmentation provides defense in depth—even if attackers compromise a web server container, they can't directly access backend systems. Implementing network policies (supported by orchestration platforms like Kubernetes) further refines these controls, specifying exactly which containers can communicate and on which ports.

Avoiding privileged containers is critical for maintaining security boundaries. The --privileged flag grants containers nearly unlimited access to host resources, effectively disabling most security features. Privileged containers can load kernel modules, access all devices, and manipulate the host system in ways that break container isolation. While some specialized use cases require privileged access (like running Docker-in-Docker or certain network tools), these scenarios should be carefully evaluated and alternative approaches considered whenever possible.

Monitoring and Performance Optimization

Effective container management requires continuous visibility into container behavior, resource consumption, and performance characteristics. Without proper monitoring, issues remain invisible until they cause user-facing problems or system failures. Docker provides built-in tools for basic monitoring, while third-party solutions offer comprehensive observability platforms with advanced analytics, alerting, and visualization capabilities. Establishing monitoring early in your container journey prevents operational blind spots and enables proactive problem resolution rather than reactive firefighting.

The docker stats command provides real-time metrics for running containers, displaying CPU percentage, memory usage, network I/O, and block I/O. This command offers quick insights during troubleshooting but lacks historical data and alerting capabilities. For production environments, collecting metrics continuously and storing them in time-series databases enables trend analysis, capacity planning, and automated alerting. Prometheus has become the de facto standard for container metrics, offering powerful query capabilities and integration with visualization tools like Grafana.
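For quick checks between full monitoring setups, `docker stats --no-stream --format '{{json .}}'` prints one JSON snapshot per running container. The sketch below flags containers over CPU or memory thresholds; the thresholds are arbitrary examples, the sample rows are invented, and it assumes percentages are formatted like `41.25%` as in recent Docker releases. Note that CPU percentages can exceed 100% on multi-core hosts.

```python
import json


def over_threshold(stats_output: str, mem_pct: float = 80.0, cpu_pct: float = 90.0) -> list[str]:
    """Names of containers whose memory or CPU percentage exceeds a threshold."""
    flagged = []
    for line in stats_output.splitlines():
        if not line.strip():
            continue
        row = json.loads(line)
        mem = float(row["MemPerc"].rstrip("%"))
        cpu = float(row["CPUPerc"].rstrip("%"))
        if mem > mem_pct or cpu > cpu_pct:
            flagged.append(row["Name"])
    return flagged


SAMPLE_STATS = "\n".join([
    '{"Name":"web","CPUPerc":"3.10%","MemPerc":"41.25%","MemUsage":"211MiB / 512MiB"}',
    '{"Name":"worker","CPUPerc":"97.50%","MemPerc":"12.00%","MemUsage":"61MiB / 512MiB"}',
    '{"Name":"cache","CPUPerc":"0.40%","MemPerc":"88.90%","MemUsage":"455MiB / 512MiB"}',
])

print(over_threshold(SAMPLE_STATS))
```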

Resource Utilization Analysis

Understanding resource utilization patterns helps optimize container configurations and identify performance bottlenecks. CPU usage patterns reveal whether containers are CPU-bound or waiting on I/O operations. Consistently high CPU usage might indicate inefficient code, insufficient resources, or the need for horizontal scaling. Memory usage should be monitored for trends—gradually increasing memory consumption often signals memory leaks that will eventually cause OOM kills. Network and disk I/O metrics identify bandwidth constraints or storage performance issues affecting application responsiveness.
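"Gradually increasing memory" can be made measurable by fitting a least-squares slope to evenly spaced memory samples. In the sketch below, the 60-second sampling interval and the 10 MiB/hour alert threshold are arbitrary example values, not recommendations.

```python
def slope(samples: list[float], interval_s: float = 60.0) -> float:
    """Least-squares slope of evenly spaced samples, in units per second."""
    n = len(samples)
    if n < 2:
        raise ValueError("need at least two samples")
    xs = [i * interval_s for i in range(n)]
    mean_x = sum(xs) / n
    mean_y = sum(samples) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, samples))
    var = sum((x - mean_x) ** 2 for x in xs)
    return cov / var


def looks_like_leak(mem_mib: list[float], interval_s: float = 60.0,
                    min_growth_mib_per_hour: float = 10.0) -> bool:
    """Flag sustained growth above a (hypothetical) threshold."""
    return slope(mem_mib, interval_s) * 3600 > min_growth_mib_per_hour


growing = [200 + 0.5 * i for i in range(30)]  # +0.5 MiB per minute = 30 MiB/hour
print(round(slope(growing) * 3600, 1), "MiB/hour")
```

A regression over many samples is less sensitive to a single spike than comparing first and last readings, which is why it suits leak detection.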

"Performance optimization should be driven by metrics, not assumptions—measure first, identify bottlenecks, then optimize the components that actually impact your application's performance rather than prematurely optimizing based on theoretical concerns."

Setting appropriate resource limits based on actual usage prevents resource contention while avoiding over-provisioning. Start with generous limits and monitor actual consumption over time, then gradually tighten limits to match observed patterns, leaving headroom for traffic spikes and growth. In orchestrated environments, keep in mind that schedulers place containers according to their declared reservations (requests, in Kubernetes terms), not their actual usage: overly generous reservations can leave containers unschedulable even when the cluster has spare capacity in practice, while limits set too tight cause CPU throttling or OOM kills at runtime.
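One simple way to turn observations into a limit is peak usage plus a headroom factor, rounded up to a tidy size. The 25% headroom and 64 MiB rounding in this sketch are illustrative assumptions; workloads with rare but large spikes may need a longer observation window or a bigger margin.

```python
import math


def suggest_memory_limit_mib(observed_mib: list[float], headroom: float = 0.25,
                             round_to: int = 64) -> int:
    """Peak observed usage plus headroom, rounded up to a multiple of `round_to` MiB."""
    target = max(observed_mib) * (1 + headroom)
    return math.ceil(target / round_to) * round_to


print(suggest_memory_limit_mib([300, 350, 410]))
```

The result could then be applied with `docker update --memory 576m <container>`; if the container was started with --memory-swap, that value may need updating in the same command.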

Log Management and Analysis

Centralized log management transforms individual container logs into actionable insights. Forwarding logs to centralized systems like Elasticsearch, Splunk, or cloud-based logging services enables searching across all containers, correlating events, and identifying patterns that wouldn't be visible in individual container logs. Structured logging, where applications output JSON-formatted logs with consistent fields, dramatically improves searchability and enables automated analysis. Including correlation IDs in logs allows tracing requests across multiple containers, essential for debugging distributed applications.

Log retention policies balance storage costs against troubleshooting needs. Keeping detailed logs indefinitely quickly becomes expensive, while aggressive deletion might remove evidence needed for debugging rare issues. Tiered retention strategies keep recent logs immediately accessible while archiving older logs to cheaper storage or reducing their detail level. Compliance requirements often dictate minimum retention periods, making log management both a technical and regulatory concern.

Health Checks and Automated Recovery

Health checks enable Docker to detect when containers are running but not functioning correctly. A container might have running processes but be unable to serve requests due to deadlocks, resource exhaustion, or external dependency failures. Defining a health check in a Dockerfile (the HEALTHCHECK instruction) or in a docker-compose file makes Docker mark such containers as unhealthy. Orchestrators such as Docker Swarm then replace unhealthy containers automatically, improving availability without manual intervention; standalone Docker only reports the status, because restart policies react to container exits rather than to failed health checks. Health checks should verify that the application can perform its core functions, not just that the process is running: checking that a web server responds to requests is more valuable than verifying the process exists.
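To make a check exercise the application rather than just the process, the health check command can issue a real request. The Python sketch below requests a URL and exits 0 for a 2xx response and 1 otherwise, which are the exit codes the HEALTHCHECK instruction treats as healthy and unhealthy. The script name, port, and `/health` path are assumptions for illustration.

```python
import http.client
import sys
import urllib.error
import urllib.request


def check_health(url: str, timeout: float = 2.0) -> int:
    """Return 0 if `url` answers with a 2xx status within `timeout`, else 1."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return 0 if 200 <= resp.status < 300 else 1
    except (urllib.error.URLError, http.client.HTTPException, OSError):
        # Covers refused connections, timeouts, and non-2xx responses (HTTPError).
        return 1


if __name__ == "__main__" and len(sys.argv) == 2:
    # Usage: python healthcheck.py http://localhost:8080/health
    sys.exit(check_health(sys.argv[1]))
```

It could be wired up with something like `HEALTHCHECK --interval=30s --timeout=3s --retries=3 CMD ["python", "/healthcheck.py", "http://localhost:8080/health"]`, assuming the image contains a Python interpreter and the script is copied to `/healthcheck.py`.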

Designing effective health checks requires balancing responsiveness and reliability. Checks that run too frequently consume resources and might trigger false positives during temporary slowdowns. Checks that run too infrequently delay detection of problems. The interval, timeout, and failure threshold settings control this balance. Starting with conservative values and adjusting based on observed behavior prevents both excessive restarts and delayed problem detection. Health check endpoints should be lightweight, avoiding expensive operations that might themselves cause performance problems.
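How the failure threshold interacts with transient errors is easiest to see in a simplified model of Docker's health status: a container starts in `starting`, any successful check makes it `healthy` and resets the failure count, and `retries` consecutive failures make it `unhealthy`. The sketch below deliberately ignores the start period and per-check timeouts, so it approximates rather than reproduces the daemon's behavior. One consequence it makes visible is that a hard failure is flagged only after roughly `retries × interval`.

```python
def health_statuses(results: list[bool], retries: int = 3) -> list[str]:
    """Status after each check in a simplified model of Docker health checks.

    Start period and timeouts are intentionally not modeled.
    """
    status, failure_streak, history = "starting", 0, []
    for ok in results:
        if ok:
            status, failure_streak = "healthy", 0  # one success resets the streak
        else:
            failure_streak += 1
            if failure_streak >= retries:
                status = "unhealthy"
        history.append(status)
    return history
```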

Multi-Container Applications and Orchestration

Real-world applications rarely consist of single containers. Modern applications comprise multiple services—web servers, application servers, databases, caches, message queues—each running in dedicated containers. Managing these multi-container applications requires orchestration tools that handle deployment, networking, scaling, and failure recovery across all components. Docker Compose simplifies development and testing by defining multi-container applications in declarative YAML files, while production environments typically require more sophisticated orchestration platforms like Docker Swarm or Kubernetes.

Docker Compose allows you to define entire application stacks in a single docker-compose.yml file, specifying services, networks, volumes, and their relationships. This declarative approach makes complex applications reproducible—running docker-compose up starts the entire application stack with proper networking and dependencies. Compose handles creating networks, mounting volumes, and starting containers in the correct order based on dependency declarations. For development environments, this capability dramatically reduces setup complexity and ensures consistency across team members' environments.
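Ordering services by their dependency declarations is a topological sort. The sketch below uses Python's `graphlib` (3.9+) on a hypothetical four-service stack to produce an order in which every dependency comes before the services that need it, and to reject cyclic declarations. It models the idea, not Compose's internal implementation.

```python
from graphlib import TopologicalSorter

# Hypothetical stack: each service maps to the services it depends on.
services = {
    "web": {"app"},
    "app": {"db", "cache"},
    "db": set(),
    "cache": set(),
}


def startup_order(depends_on: dict[str, set[str]]) -> list[str]:
    """Return an order where every dependency precedes its dependents.

    Raises graphlib.CycleError if the declarations form a cycle.
    """
    return list(TopologicalSorter(depends_on).static_order())


if __name__ == "__main__":
    print(startup_order(services))
```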

Service Dependencies and Startup Order

Multi-container applications often have startup dependencies: application servers need databases to be ready before starting, and web servers need application servers available. Docker Compose's depends_on option controls startup order, ensuring that a service's dependencies are started before the service itself. However, "started" doesn't mean "ready": a database container might be running but not yet accepting connections. Robust applications implement retry logic and connection pooling to handle these timing issues gracefully, rather than relying solely on startup order.
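On the application side, retry logic can be as simple as retrying a TCP connection with exponential backoff. This sketch assumes the dependency is reachable by host name and port; the attempt count and delays are illustrative defaults, and the injectable `sleep` parameter exists only to make the function testable. A successful connection shows that something is listening, not that the service is fully ready, so applications should still handle errors on first use.

```python
import socket
import time


def wait_for_port(host: str, port: int, attempts: int = 10, base_delay: float = 0.5,
                  max_delay: float = 8.0, sleep=time.sleep) -> bool:
    """Retry a TCP connection with exponential backoff.

    Returns True once a connection succeeds, False after `attempts` failures.
    """
    delay = base_delay
    for attempt in range(1, attempts + 1):
        try:
            with socket.create_connection((host, port), timeout=2.0):
                return True
        except OSError:
            if attempt == attempts:
                return False
            sleep(delay)
            delay = min(delay * 2, max_delay)  # double the wait, up to a cap
    return False
```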

Health checks become even more important in multi-container environments. Compose can wait for health checks to pass before starting dependent services using the depends_on extended syntax with service_healthy conditions. This ensures that dependencies are not just running but actually functional before dependent services start. Implementing proper health checks throughout your application stack significantly improves reliability and reduces startup-related failures.
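The difference between "started" and "healthy" can be captured in a small model of the long-form `depends_on` conditions. The `ServiceState` class and helper functions below are hypothetical and cover only `service_started` and `service_healthy`, not Compose's other conditions.

```python
from dataclasses import dataclass


@dataclass
class ServiceState:
    started: bool = False
    health: str = "none"  # 'starting', 'healthy', 'unhealthy', or 'none' (no check)


def condition_met(condition: str, state: ServiceState) -> bool:
    if condition == "service_started":
        return state.started
    if condition == "service_healthy":
        return state.started and state.health == "healthy"
    raise ValueError(f"unsupported condition: {condition}")


def can_start(deps: dict[str, str], states: dict[str, ServiceState]) -> bool:
    """deps maps each dependency name to its depends_on condition."""
    return all(
        condition_met(cond, states.get(name, ServiceState()))
        for name, cond in deps.items()
    )
```

In a Compose file, the healthy case corresponds to listing the database under the service's `depends_on` with `condition: service_healthy`, which requires the database service to define a health check.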

Scaling and Load Distribution

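Docker Compose supports scaling services by running multiple instances of the same container. The docker-compose up --scale web=3 command starts three instances of the web service. Compose does not load-balance traffic itself: other containers on the same network that look up the service name receive the addresses of all instances from Docker's embedded DNS, and a fixed published host port can be bound by only one instance, so scaled services typically publish no fixed host port (or a port range) and sit behind a reverse proxy. Compose's scaling capabilities are therefore limited compared to production orchestration platforms. For development and testing, Compose scaling provides a quick way to verify that applications handle multiple instances correctly and identify any state management or session handling issues.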

Production orchestration platforms like Kubernetes provide sophisticated scaling capabilities, including automatic scaling based on CPU usage, memory consumption, or custom metrics. These platforms handle service discovery, load balancing, rolling updates, and failure recovery automatically. Transitioning from Compose to Kubernetes or other orchestration platforms requires understanding their distinct concepts and capabilities, but the investment pays dividends in operational efficiency and application reliability.
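For metric-based autoscaling, Kubernetes' Horizontal Pod Autoscaler documents its core rule as `desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue)`, skipping changes when the ratio is within a tolerance (0.1 by default). The sketch below implements just that rule plus min/max clamping. The min/max defaults are hypothetical, and the real controller also accounts for pod readiness, missing metrics, and stabilization windows.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     tolerance: float = 0.1, min_replicas: int = 1,
                     max_replicas: int = 10) -> int:
    """Core HPA scaling rule with tolerance and min/max clamping."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to target: no change
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))
```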

Configuration Management

Managing configuration across multiple containers and environments presents challenges. Hard-coding configuration in images reduces flexibility and requires rebuilding images for configuration changes. Environment variables provide runtime configuration but become unwieldy for complex applications with many settings. Docker secrets and configs offer secure, manageable approaches for sensitive and non-sensitive configuration data respectively. In Swarm mode, secrets are encrypted at rest and in transit, while configs provide a convenient way to inject configuration files into containers without embedding them in images.

External configuration management systems like Consul, etcd, or cloud provider parameter stores centralize configuration and enable dynamic updates without container restarts. Applications that support configuration reloading can adapt to changes without downtime, improving operational flexibility. Choosing between environment variables, Docker secrets/configs, and external configuration systems depends on your security requirements, operational complexity, and application capabilities.
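Configuration reloading can be approximated with a file that the application re-reads when it changes. The sketch below polls a JSON file and compares its contents; it is a simple stand-in for the watch mechanisms that external stores provide, and the class name and file format are hypothetical. New contents are parsed before being stored, so a malformed file raises an error and leaves the previous configuration in place.

```python
import json
from pathlib import Path


class ReloadingConfig:
    """Re-read a JSON config file whenever its contents change."""

    def __init__(self, path: str):
        self.path = Path(path)
        self._raw: str | None = None
        self.data: dict = {}
        self.reload_if_changed()

    def reload_if_changed(self) -> bool:
        raw = self.path.read_text()
        if raw == self._raw:
            return False
        self.data = json.loads(raw)  # raises on bad JSON before _raw is updated
        self._raw = raw
        return True
```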

Production Deployment Patterns

Deploying containers to production requires careful planning and adherence to proven patterns that ensure reliability, security, and maintainability. Development practices that work well on local machines often fail in production environments where scale, security, and operational requirements differ dramatically. Understanding production deployment patterns helps you avoid common pitfalls and build systems that meet enterprise requirements. These patterns have evolved through years of collective experience across organizations running containers at scale.

Immutable infrastructure treats containers as disposable units that are never modified after creation. Rather than updating running containers, you deploy new container versions and discard old ones. This approach eliminates configuration drift, simplifies rollback procedures, and makes deployments more predictable. All configuration and application code is baked into images or provided through external configuration systems, ensuring that every container instance is identical and reproducible. Embracing immutability requires shifting mindset from traditional server management where systems are updated in place to treating infrastructure as code that's versioned and deployed atomically.

Rolling Updates and Zero-Downtime Deployments

Rolling updates deploy new container versions gradually, replacing old containers with new ones incrementally while maintaining service availability. This pattern prevents the "all or nothing" risk of replacing all containers simultaneously. If problems arise during rollout, only a portion of traffic is affected, and rollback can occur before all instances are updated. Implementing rolling updates requires load balancing across container instances and health checks to verify that new containers are functioning correctly before routing traffic to them.
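The control flow of a rolling update with automatic rollback fits in a few lines. In this sketch, `versions` lists the version each instance runs, instances are replaced `batch_size` at a time, and a failed health check on any new instance restores every instance to its original version. The `is_healthy` callback stands in for real health checks, and traffic shifting between batches is only indicated by a comment.

```python
from typing import Callable


def rolling_update(versions: list[str], new_version: str, batch_size: int,
                   is_healthy: Callable[[str], bool]) -> tuple[bool, list[str]]:
    """Return (succeeded, resulting versions)."""
    original = list(versions)
    current = list(versions)
    for start in range(0, len(current), batch_size):
        end = min(start + batch_size, len(current))
        for i in range(start, end):
            current[i] = new_version
        if not all(is_healthy(current[i]) for i in range(start, end)):
            return False, original  # roll every instance back
        # A real system would shift traffic to this batch and wait before continuing.
    return True, current
```

Docker Swarm exposes comparable settings through `docker service update`, for example `--update-parallelism` for the batch size and `--update-failure-action rollback`.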

"Zero-downtime deployments aren't just about keeping services running—they're about maintaining user experience during updates, which requires careful attention to backward compatibility, database migrations, and API versioning."

Blue-green deployments maintain two complete environments—blue (current production) and green (new version). Traffic initially routes to blue while green is deployed and tested. Once validation completes, traffic switches to green, making it the new production environment. Blue remains available for quick rollback if issues appear. This pattern provides maximum safety and instant rollback capability but requires double the infrastructure resources during deployment. For critical systems where downtime is unacceptable, this resource cost is justified by the reduced risk and faster recovery.

Database Migrations and State Management

Handling database schema changes during container deployments requires careful coordination. Application code and database schema must remain compatible during rolling updates when both old and new application versions run simultaneously. Forward-compatible migrations—changes that work with both old and new code—enable safe rolling updates. This often requires deploying changes in multiple phases: first adding new columns or tables while maintaining old ones, then deploying application changes to use new schema, finally removing old schema in a subsequent deployment.
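The phased approach can be demonstrated with SQLite from Python's standard library. The sketch walks through the expand phase: a nullable `display_name` column is added alongside the existing `name` column, old code keeps inserting only `name`, and new code writes both and reads `COALESCE(display_name, name)` so rows from old instances still display correctly. Table and column names are hypothetical, and the contract phase is left as a comment.

```python
import sqlite3


def create_schema(conn: sqlite3.Connection) -> None:
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")


def expand(conn: sqlite3.Connection) -> None:
    # Phase 1 (expand): add a nullable column; nothing is removed, so old code keeps working.
    conn.execute("ALTER TABLE users ADD COLUMN display_name TEXT")


def old_insert(conn: sqlite3.Connection, name: str) -> None:
    # Old application version: knows only the `name` column.
    conn.execute("INSERT INTO users (name) VALUES (?)", (name,))


def new_insert(conn: sqlite3.Connection, name: str, display_name: str) -> None:
    # Phase 2: new application version writes both columns during the transition.
    conn.execute(
        "INSERT INTO users (name, display_name) VALUES (?, ?)", (name, display_name)
    )


def new_read_all(conn: sqlite3.Connection) -> list[str]:
    # Fall back to `name` for rows written by old instances still in service.
    rows = conn.execute("SELECT COALESCE(display_name, name) FROM users ORDER BY id")
    return [row[0] for row in rows]


# Phase 3 (contract), in a later deployment once no old code is running:
# backfill display_name for old rows, stop writing `name`, then drop it.

if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    create_schema(conn)
    expand(conn)
    old_insert(conn, "alice")          # an old instance during the rolling update
    new_insert(conn, "bob", "Bob B.")  # a new instance
    print(new_read_all(conn))          # ['alice', 'Bob B.']
```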

Separating stateful and stateless components simplifies container management. Stateless containers can be created, destroyed, and scaled freely without data loss concerns. Stateful components like databases require more careful handling, with data persistence through volumes, backup strategies, and potentially specialized orchestration. Many organizations run stateful services outside container environments initially, gradually containerizing them as they gain experience and confidence with container data management practices.

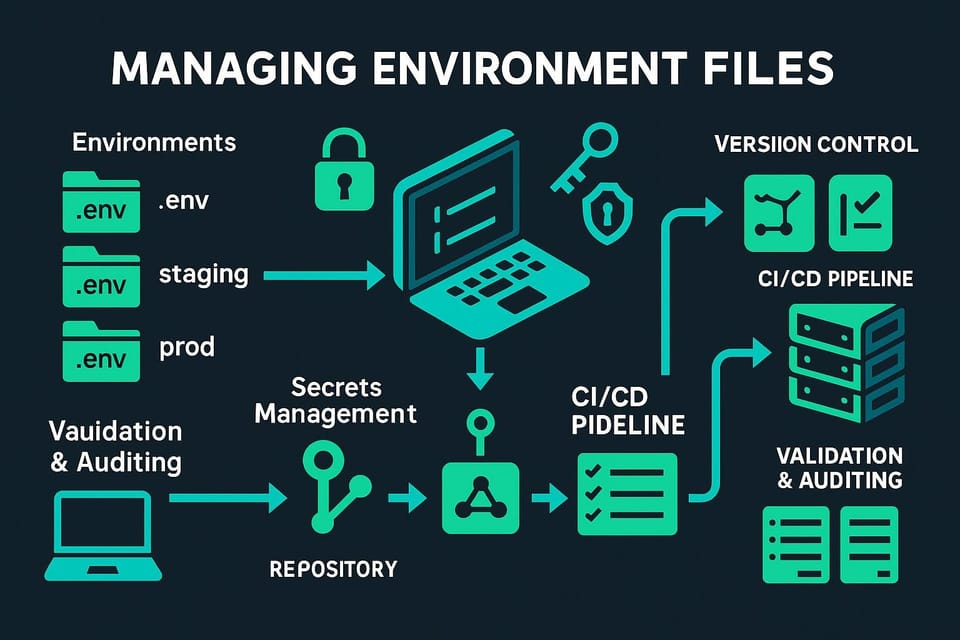

Continuous Integration and Deployment

Automating container builds, testing, and deployment through CI/CD pipelines ensures consistency and reduces human error. Pipelines typically include building images, running tests, scanning for vulnerabilities, pushing to registries, and deploying to environments. Each stage provides quality gates, preventing problematic changes from reaching production. Automated pipelines also create audit trails, documenting exactly what was deployed, when, and by whom—essential for compliance and troubleshooting.
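The quality-gate idea reduces to running stages in order, stopping at the first failure, and recording each outcome. In this sketch the stage names, the `actor` field, and the audit record format are hypothetical; real CI systems provide their own pipeline definitions and audit logs.

```python
from datetime import datetime, timezone
from typing import Callable


def run_pipeline(stages: list[tuple[str, Callable[[], bool]]], actor: str,
                 audit_log: list[dict]) -> bool:
    """Run stages in order; the first failing stage stops the pipeline.

    Each outcome is appended to audit_log with who ran it and when.
    """
    for name, stage in stages:
        ok = stage()
        audit_log.append({
            "stage": name,
            "ok": ok,
            "actor": actor,
            "at": datetime.now(timezone.utc).isoformat(),
        })
        if not ok:
            return False
    return True
```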

Image tagging strategies impact deployment practices significantly. Using latest tags in production is dangerous because it's ambiguous—you can't tell which version is actually deployed. Semantic versioning or git commit hashes provide clear version identification. Some organizations use immutable tags that can never be overwritten, ensuring that pulling a specific tag always retrieves the exact same image. Others use date-based tags or build numbers, providing chronological ordering that simplifies understanding deployment history.
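A sketch of a tagging convention that avoids ambiguity: each build gets a semantic-version tag and a short-commit-hash tag, and a deploy-time check rejects untagged references (which implicitly mean `latest`) and explicit `latest` tags while accepting immutable `@sha256:` digest references. The `sha-` prefix is a hypothetical convention, and the version check accepts only plain `MAJOR.MINOR.PATCH` strings.

```python
import re

SEMVER = re.compile(r"^\d+\.\d+\.\d+$")


def build_tags(repo: str, version: str, commit_sha: str) -> list[str]:
    """Tags for one build: the semantic version plus a short commit hash."""
    if not SEMVER.match(version):
        raise ValueError(f"not a MAJOR.MINOR.PATCH version: {version!r}")
    return [f"{repo}:{version}", f"{repo}:sha-{commit_sha[:7]}"]


def is_deployable(image_ref: str) -> bool:
    """Reject references without a tag or with the mutable 'latest' tag."""
    if "@sha256:" in image_ref:
        return True                          # digest references are immutable
    name = image_ref.rsplit("/", 1)[-1]      # ignore any registry host:port prefix
    if ":" not in name:
        return False                         # no tag means an implicit ':latest'
    return name.rsplit(":", 1)[1] != "latest"
```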

Troubleshooting Common Container Issues

Even with careful planning and implementation, container environments present unique troubleshooting challenges. The layered nature of container systems—images, containers, networks, volumes, orchestration—means problems can originate at multiple levels. Developing systematic troubleshooting approaches helps identify issues quickly and prevents wasted effort investigating the wrong layer. Understanding common failure modes and their symptoms accelerates problem resolution and reduces mean time to recovery.

Container startup failures often result from misconfiguration, missing dependencies, or permission issues. When containers exit immediately after starting, examining logs with docker logs usually reveals the cause. Common culprits include incorrect environment variables, missing volume mounts, network connectivity problems, or application crashes during initialization. Using docker inspect to verify container configuration helps identify mismatches between expected and actual settings. For containers that won't start at all, checking image integrity and ensuring the image exists locally or in accessible registries resolves many issues.
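The fields that `docker inspect` reports for a stopped container often narrow down the cause before reading any logs. This sketch summarizes a few of them (`State.ExitCode`, `State.OOMKilled`, `State.Error`, and `RestartCount`) from the JSON array that `docker inspect <container>` prints. The hint table follows common shell conventions, where 126 and 127 indicate a non-executable or missing command and 128+N means the process was killed by signal N; the wording of the hints is illustrative.

```python
import json

# Conventional meanings; 128+N means the process died from signal N.
EXIT_HINTS = {
    0: "exited normally; check that the main process is meant to keep running",
    1: "application error; read docker logs",
    126: "command found but not executable (permissions?)",
    127: "command not found in the image (typo or missing binary?)",
    137: "killed by SIGKILL (128+9); check OOMKilled or docker kill",
    143: "terminated by SIGTERM (128+15), e.g. docker stop",
}


def diagnose(inspect_json: str) -> list[str]:
    """Summarize likely causes from `docker inspect <container>` output."""
    container = json.loads(inspect_json)[0]  # docker inspect prints a JSON array
    state = container["State"]
    findings = []
    if state.get("OOMKilled"):
        findings.append("container was OOM-killed; raise the memory limit or fix a leak")
    code = state.get("ExitCode")
    if not state.get("Running") and code in EXIT_HINTS:
        findings.append(f"exit code {code}: {EXIT_HINTS[code]}")
    if state.get("Error"):
        findings.append(f"daemon error: {state['Error']}")
    if container.get("RestartCount", 0) > 0:
        findings.append(f"restarted {container['RestartCount']} times")
    return findings
```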

Network Connectivity Problems

Network issues manifest as connection timeouts, name resolution failures, or inability to reach external services. Verifying basic connectivity with tools like ping, curl, or telnet helps isolate whether problems are network-related or application-specific. Checking that containers are attached to the correct networks and that network configuration matches expectations resolves many connectivity issues. For inter-container communication problems, verifying that both containers are on the same network and that firewall rules or security groups allow the necessary traffic identifies most issues.
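When an image ships Python but not ping, curl, or telnet, a small probe can still separate DNS failures from refused connections and silent timeouts, which point to different layers. The sketch below is meant to run inside the container (for example via `docker exec`); the target host and port are assumptions about your setup.

```python
import socket


def probe(host: str, port: int, timeout: float = 3.0) -> str:
    """Classify a TCP connection attempt, roughly what `telnet host port` shows."""
    try:
        addr = socket.getaddrinfo(host, port, type=socket.SOCK_STREAM)[0][4]
    except socket.gaierror:
        return "dns-failure"  # the name did not resolve
    try:
        with socket.create_connection(addr[:2], timeout=timeout):
            return "ok"
    except ConnectionRefusedError:
        return "refused"      # host reachable, but nothing listening on that port
    except socket.timeout:
        return "timeout"      # packets dropped: firewall, wrong network, or host down
    except OSError as exc:
        return f"error: {exc}"
```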

DNS resolution problems are particularly common in containerized environments. Docker's embedded DNS server handles name resolution for containers on custom networks, but misconfiguration or conflicts with host DNS settings can cause failures. Testing DNS resolution with nslookup or dig from within containers helps diagnose these issues. Ensuring that containers use Docker's DNS server (typically 127.0.0.11) rather than external DNS servers resolves many name resolution problems in custom networks.
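A quick way to confirm which resolver a container uses is to inspect its `/etc/resolv.conf`. On user-defined networks Docker writes its embedded DNS address there, so this sketch parses the file and checks for `127.0.0.11`. The helper names are hypothetical.

```python
DOCKER_EMBEDDED_DNS = "127.0.0.11"


def nameservers(resolv_conf: str) -> list[str]:
    """Extract nameserver addresses from resolv.conf text (comments are skipped)."""
    servers = []
    for line in resolv_conf.splitlines():
        parts = line.split()
        if len(parts) >= 2 and parts[0] == "nameserver":
            servers.append(parts[1])
    return servers


def uses_embedded_dns(path: str = "/etc/resolv.conf") -> bool:
    with open(path) as f:
        return DOCKER_EMBEDDED_DNS in nameservers(f.read())
```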

Performance Degradation

Performance problems in container environments stem from resource constraints, inefficient application code, or infrastructure limitations. Monitoring resource usage with docker stats reveals whether containers are hitting CPU, memory, or I/O limits. Containers that consistently max out CPU allocation might need more resources or code optimization. Memory pressure causes frequent restarts due to OOM kills—increasing memory limits or fixing memory leaks resolves these issues. Disk I/O problems often result from inefficient storage drivers or volume configurations—testing different storage drivers or using volumes instead of bind mounts sometimes improves performance significantly.
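A container can be throttled even when its average CPU usage looks moderate, because the kernel enforces the CPU quota within each short scheduling period. On hosts using cgroup v2, the container's `cpu.stat` file (usually visible inside the container at `/sys/fs/cgroup/cpu.stat`) reports `nr_periods` and `nr_throttled`; the sketch below parses that format and computes the throttled fraction. The file path is an assumption that depends on the host's cgroup setup.

```python
def parse_cpu_stat(text: str) -> dict[str, int]:
    """Parse cgroup v2 cpu.stat ('key value' per line) into integers."""
    stats = {}
    for line in text.splitlines():
        key, _, value = line.partition(" ")
        if value.strip().isdigit():
            stats[key] = int(value)
    return stats


def throttled_ratio(stats: dict[str, int]) -> float:
    """Fraction of scheduling periods in which the cgroup was throttled."""
    periods = stats.get("nr_periods", 0)
    return stats.get("nr_throttled", 0) / periods if periods else 0.0
```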

Network performance issues might result from NAT overhead, inefficient routing, or bandwidth limitations. Using host networking eliminates NAT overhead but sacrifices isolation. Monitoring network traffic patterns helps identify whether network performance is actually the bottleneck or whether problems originate elsewhere. Many perceived network problems actually result from application inefficiencies like N+1 query problems or excessive API calls that would exist regardless of containerization.

Image and Registry Problems

Image pull failures prevent containers from starting and often result from registry authentication issues, network problems, or missing images. Verifying registry credentials and ensuring that the Docker daemon can reach the registry resolves authentication and connectivity problems. For private registries, ensuring that authentication tokens haven't expired and that network policies allow registry access prevents many issues. Public registries like Docker Hub rate-limit pulls, with lower limits for anonymous pulls than for authenticated ones, so busy hosts or CI runners can hit pull failures; authenticating pulls, implementing local registry caches, or using alternative registries mitigates these problems.

Image size and layer caching affect build times and storage consumption. Optimizing Dockerfiles to maximize layer reuse and minimize image size improves build performance and reduces storage requirements. Understanding how layer caching works helps structure Dockerfiles for optimal caching: Docker reuses a cached layer only if its instruction is unchanged, the files it copies (for COPY and ADD) have the same contents, and every earlier layer was also reused, so one changed layer invalidates all layers after it. Placing frequently changing content like application code after stable layers like base images and dependencies maximizes cache effectiveness.
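The reason ordering matters can be shown with a simplified model of the build cache: each layer's key is derived from the previous layer's key, its instruction, and a digest of any files it copies, so one changed key changes every key after it. The sketch compares two builds and counts how many leading layers could be reused. It illustrates the principle and is not BuildKit's actual cache algorithm; the instructions and file digests in the test are hypothetical.

```python
import hashlib


def layer_keys(steps: list[tuple[str, str]]) -> list[str]:
    """Chain a cache key per step: hash(previous key, instruction, input digest).

    Each step pairs an instruction with a digest of the files it copies
    (empty for steps such as RUN that read no build-context files).
    """
    keys, prev = [], ""
    for instruction, inputs in steps:
        prev = hashlib.sha256(f"{prev}|{instruction}|{inputs}".encode()).hexdigest()
        keys.append(prev)
    return keys


def cached_layers(old: list[tuple[str, str]], new: list[tuple[str, str]]) -> int:
    """Number of leading layers a rebuild could reuse from the old build."""
    count = 0
    for a, b in zip(layer_keys(old), layer_keys(new)):
        if a != b:
            break
        count += 1
    return count
```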

Frequently Asked Questions

What is the difference between stopping and killing a Docker container?

Stopping a container sends a SIGTERM signal to the main process, allowing it to shut down gracefully by closing connections, saving state, and cleaning up resources. Docker waits for a configurable timeout (default 10 seconds) before forcibly terminating the process if it hasn't exited. Killing a container sends SIGKILL immediately, forcing instant termination without any cleanup opportunity. Always prefer stopping containers to prevent data corruption and ensure proper resource cleanup, only using kill when containers are unresponsive to normal shutdown signals.
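Graceful stopping only works if the application actually handles SIGTERM. This sketch converts the signal into a flag the main loop checks, then runs a cleanup step before exiting; `work` and `cleanup` are placeholders for application logic.

```python
import signal
import threading


class GracefulShutdown:
    """Turn SIGTERM (sent by `docker stop`) into a flag the main loop can check."""

    def __init__(self):
        self.stopping = threading.Event()

    def handle(self, signum, frame):
        self.stopping.set()

    def install(self):
        # Must be called from the main thread. SIGKILL can never be caught.
        signal.signal(signal.SIGTERM, self.handle)
        signal.signal(signal.SIGINT, self.handle)


def serve(shutdown: GracefulShutdown, work, cleanup, poll_interval: float = 0.5):
    while not shutdown.stopping.is_set():
        work()
        shutdown.stopping.wait(poll_interval)
    cleanup()  # close connections, flush buffers; must finish within the stop timeout
```

The Python process is PID 1 in the container only when the image uses the exec form of CMD or ENTRYPOINT (for example `CMD ["python", "app.py"]`); with the shell form, `/bin/sh` is PID 1 and may not forward the signal. A PID 1 process without its own SIGTERM handler also ignores the signal, so `docker stop` then waits for the full timeout and falls back to SIGKILL.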

How do I persist data when containers are removed?

Use Docker volumes to store data outside the container's writable layer. Volumes exist independently of containers and survive container removal, updates, and restarts. Create named volumes with docker volume create and mount them into containers using the -v or --mount flags. For databases and other stateful applications, always store data in volumes rather than the container filesystem. Bind mounts can also persist data by mapping host directories into containers, though volumes offer better performance and portability in most scenarios.

Why can't my containers communicate with each other by name?

Containers on the default bridge network cannot resolve each other by name—only by IP address. Create custom bridge networks using docker network create and attach containers to these networks. Docker's embedded DNS server automatically handles name resolution for containers on custom networks, allowing them to communicate using container names or network aliases. This approach is more maintainable than managing IP addresses manually and works seamlessly as containers are created, destroyed, and restarted with potentially different IP addresses.

What are the security implications of running containers as root?

Running containers as root means processes inside the container run as UID 0. Unless user namespace remapping is enabled, that is the same UID as root on the host, restricted mainly by Docker's reduced default capability set and other isolation mechanisms, so vulnerabilities that allow container escape could grant attackers root access to the host system. Create non-root users in your Dockerfiles and use the USER instruction to run application processes with minimal privileges. Some containers require root for initialization but can drop privileges before starting the main application, balancing functionality and security effectively.

How do I handle environment-specific configuration without rebuilding images?

Use environment variables, Docker secrets, or external configuration management systems to inject environment-specific settings at runtime. Environment variables work well for simple configuration and can be set using the -e flag or through compose files. Docker secrets provide secure handling of sensitive data like passwords and API keys; in Swarm mode they are encrypted at rest and in transit. For complex configuration, external systems like Consul or cloud provider parameter stores centralize configuration management and enable updates without container restarts. Never hard-code environment-specific values in images, as this requires rebuilding and redeploying for configuration changes.

What causes containers to restart repeatedly and how do I fix it?

Containers restart repeatedly when they exit with errors and restart policies are configured to restart them on failure. Common causes include application crashes, missing dependencies, configuration errors, or resource constraints. Check container logs with docker logs to identify the specific error causing exits. Verify that all required environment variables, volumes, and network connections are properly configured. Resource limits that are too restrictive can cause OOM kills or CPU throttling that prevents applications from functioning correctly. Adjust restart policies if necessary: using on-failure with a retry limit (for example on-failure:5) instead of always stops endless restart loops when containers have persistent configuration problems.
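Many restart loops come from missing settings that only surface as an exception somewhere deep in startup. Validating required environment variables up front and exiting with one clear message makes the cause obvious in `docker logs`. The variable names are hypothetical, and exit code 78 (`EX_CONFIG` in the BSD `sysexits.h` convention) is just one reasonable choice of distinctive code.

```python
import os
import sys

# Hypothetical settings this service cannot run without.
REQUIRED_VARS = ["DATABASE_URL", "CACHE_HOST"]


def missing_env(required: list[str], environ=os.environ) -> list[str]:
    """Return every required variable that is unset or empty."""
    return [name for name in required if not environ.get(name)]


def check_env_or_exit(required: list[str] = REQUIRED_VARS) -> None:
    missing = missing_env(required)
    if missing:
        # One clear line in docker logs instead of a stack trace on every restart.
        print(f"missing required environment variables: {', '.join(missing)}",
              file=sys.stderr)
        sys.exit(78)  # EX_CONFIG; the specific code is a hypothetical choice
```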