How to Monitor API Health and Metrics

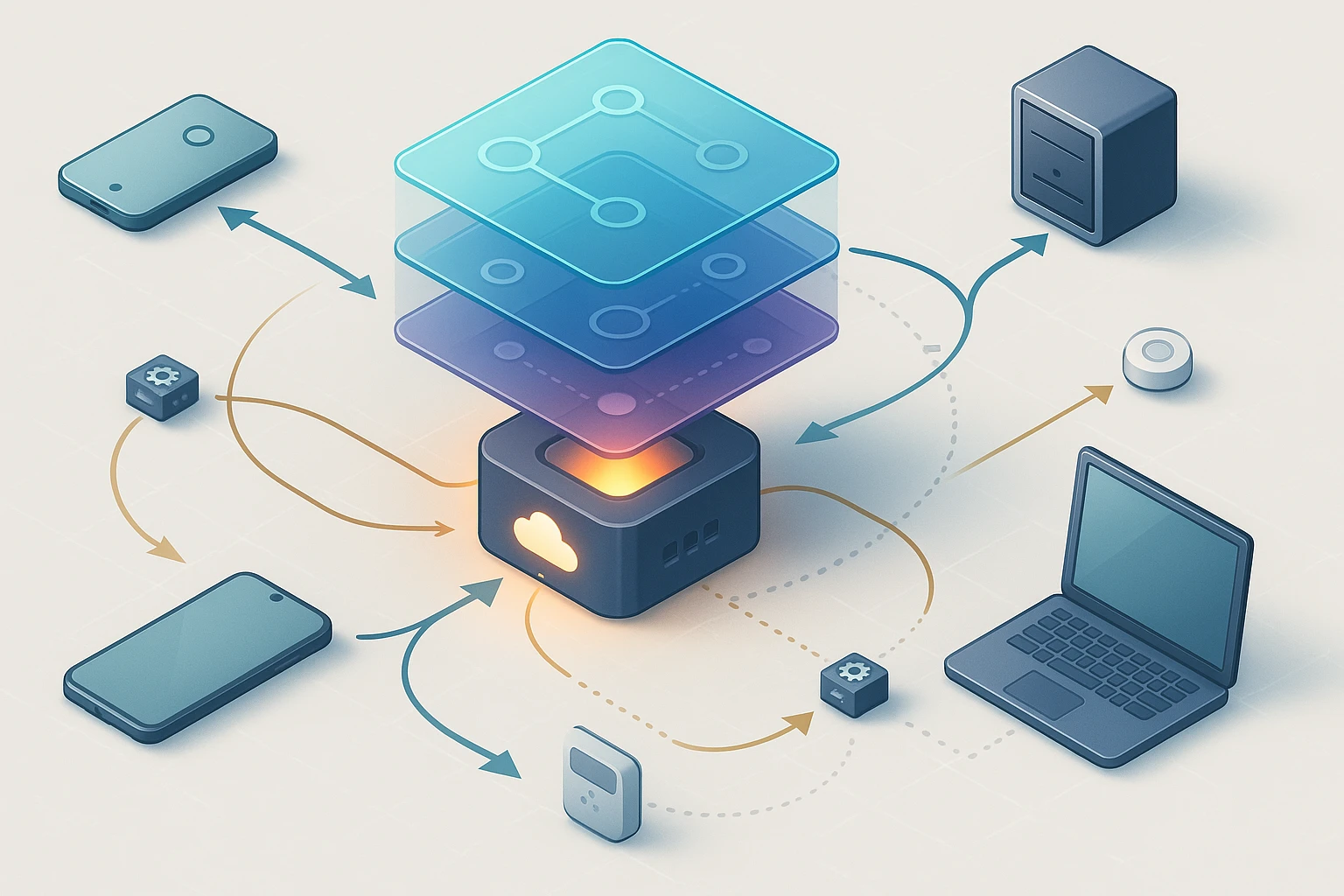

Dashboard showing API health and metrics: uptime, latency, error rate, throughput, status indicators, time series graphs, alert badges, logs, and monitoring tools to detect issues.

Why API Health Monitoring Matters in Modern Digital Infrastructure

In today's interconnected digital ecosystem, APIs serve as the critical bridges connecting applications, services, and data sources. When these bridges falter, even momentarily, the ripple effects can cascade through entire business operations—disrupting customer experiences, halting transactions, and eroding trust. The difference between organizations that thrive and those that struggle often comes down to their ability to detect, diagnose, and resolve API issues before they impact end users. This isn't merely about keeping systems running; it's about maintaining the integrity of digital promises made to customers, partners, and stakeholders.

API health monitoring encompasses the systematic observation and measurement of application programming interfaces to ensure they perform reliably, respond quickly, and remain available when needed. It involves tracking various metrics—from response times and error rates to throughput and resource utilization—while establishing baselines that help distinguish normal behavior from anomalies. This practice draws on perspectives from development teams focused on code quality, operations teams managing infrastructure, security professionals guarding against threats, and business leaders measuring customer satisfaction.

Throughout this exploration, you'll discover practical approaches to implementing comprehensive API monitoring strategies. We'll examine the essential metrics that reveal system health, explore tools and techniques for gathering meaningful data, discuss how to establish effective alerting systems, and provide actionable guidance for interpreting monitoring data to drive continuous improvement. Whether you're building your first monitoring solution or refining an existing approach, these insights will help you create resilient API ecosystems that support business objectives.

Essential Metrics That Reveal API Performance

Understanding which metrics to monitor represents the foundation of effective API health management. Different metrics illuminate different aspects of system behavior, and together they create a comprehensive picture of API wellness. The challenge lies not in collecting every possible data point, but in identifying those measurements that provide actionable insights into system performance and user experience.

Response Time and Latency Measurements

Response time measures the duration between when a request reaches your API and when the complete response returns to the caller. This metric directly impacts user experience—faster responses translate to smoother interactions, while delays create frustration. Breaking down response time into components reveals where delays originate: network transit time, authentication overhead, database query execution, external service calls, and application processing time. By monitoring these segments separately, teams can pinpoint optimization opportunities rather than treating response time as a monolithic measurement.

Latency distribution matters more than average latency. An API might show an average response time of 200 milliseconds, appearing perfectly healthy, while 2% of requests take over 5 seconds, a terrible experience for affected users. Percentile measurements (p50, p95, p99) provide this nuanced view, revealing how the slowest requests perform and helping teams set realistic service level objectives.
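To make the gap between averages and percentiles concrete, here is a minimal sketch using the nearest-rank method. The latency values are hypothetical, and the `percentile` helper is an illustration rather than the exact calculation any particular monitoring tool performs.

```python
import math


def percentile(samples, pct):
    """Nearest-rank percentile: the smallest sample with at least pct% of values at or below it."""
    if not samples:
        raise ValueError("samples must not be empty")
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


# Hypothetical data: 98 fast requests and 2 very slow ones, in milliseconds.
latencies_ms = [100] * 98 + [5200] * 2

summary = {
    "mean": sum(latencies_ms) / len(latencies_ms),
    "p50": percentile(latencies_ms, 50),
    "p95": percentile(latencies_ms, 95),
    "p99": percentile(latencies_ms, 99),
}
print(summary)
```

In this sample the mean (202 ms) and even p95 look healthy; only p99 exposes the slow tail.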

"Monitoring average response times alone creates dangerous blind spots; the worst experiences your users encounter hide in the tail latencies that percentile tracking reveals."

Availability and Uptime Tracking

Availability measures whether your API responds to requests successfully. While seemingly straightforward, this metric requires careful definition. Does a slow response count as available? What about responses with error codes? Most organizations define availability as the percentage of requests that receive successful responses (typically HTTP 2xx status codes) within an acceptable timeframe.
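One way to turn that definition into a number is sketched below. The `RequestRecord` type and the 1000 ms cutoff are assumptions chosen for illustration; each team would substitute its own definition of "acceptable timeframe".

```python
from dataclasses import dataclass


@dataclass
class RequestRecord:
    status: int         # HTTP status code returned to the caller
    duration_ms: float  # total time taken to serve the request


def availability(records, max_duration_ms=1000.0):
    """Percentage of requests that returned 2xx within max_duration_ms."""
    if not records:
        return None  # no traffic: availability is undefined rather than 100%
    good = sum(
        1 for r in records
        if 200 <= r.status < 300 and r.duration_ms <= max_duration_ms
    )
    return 100.0 * good / len(records)


records = [
    RequestRecord(200, 85.0),
    RequestRecord(201, 140.0),
    RequestRecord(200, 2300.0),  # succeeded, but too slow to count as available
    RequestRecord(503, 30.0),    # fast, but failed
]
print(availability(records))  # 50.0
```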

Tracking availability from multiple geographic locations provides insights into regional issues—perhaps your API performs excellently for North American users while European customers experience frequent timeouts due to network routing problems. Similarly, monitoring from different network types (cloud providers, mobile networks, corporate connections) reveals how various user segments experience your service.

Error Rates and Status Code Distribution

Error rates indicate the percentage of requests that fail, but not all errors carry equal weight. Client errors (4xx status codes) often reflect problems with request formatting or authentication, while server errors (5xx codes) signal issues within your infrastructure or application logic. Distinguishing between these categories helps teams prioritize responses: a spike in 5xx errors demands immediate attention, while a rise in 4xx errors might indicate a need for better API documentation or client SDK improvements.

Monitoring specific error types provides even deeper insights. Are 503 Service Unavailable errors occurring because downstream dependencies failed? Do 429 Too Many Requests responses indicate rate limiting is protecting your infrastructure, or that limits need adjustment? Each error pattern tells a story about system behavior and user interactions.
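The distinction between error classes can be sketched as a small classifier. The category names are hypothetical, and treating 429 as its own category is a design choice made here so that rate limiting can be tracked separately from other client errors.

```python
from collections import Counter


def classify(status):
    """Map an HTTP status code to a monitoring category."""
    if status == 429:
        return "rate_limited"
    if 400 <= status < 500:
        return "client_error"
    if 500 <= status < 600:
        return "server_error"
    return "ok"  # 1xx, 2xx and 3xx are treated as non-errors in this sketch


def breakdown(statuses):
    """Count responses per category and compute the overall error rate in percent."""
    counts = Counter(classify(s) for s in statuses)
    errors = len(statuses) - counts["ok"]
    error_rate = 100.0 * errors / len(statuses) if statuses else 0.0
    return dict(counts), error_rate


# Hypothetical sample of 100 responses.
statuses = [200] * 90 + [404] * 4 + [429] * 2 + [500] * 3 + [503] * 1
counts, error_rate = breakdown(statuses)
print(counts, error_rate)
```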

Throughput and Request Volume

Throughput measures how many requests your API processes over time, typically expressed as requests per second or requests per minute. This metric helps teams understand usage patterns, capacity requirements, and growth trends. Sudden throughput changes often signal significant events—a successful marketing campaign driving traffic, a bot attack overwhelming systems, or a major client integration going live.

Comparing actual throughput against capacity limits reveals how much headroom exists before performance degrades. If your infrastructure handles 10,000 requests per second comfortably but current peak usage reaches 9,500 requests per second, you're operating with minimal safety margin. This insight drives capacity planning decisions and infrastructure scaling strategies.

Resource Utilization Metrics

APIs don't operate in isolation—they consume computational resources including CPU cycles, memory, network bandwidth, and storage capacity. Monitoring these resources reveals whether performance issues stem from application inefficiencies or infrastructure constraints. High CPU utilization might indicate computational bottlenecks or inefficient algorithms, while memory pressure could signal memory leaks or excessive caching.

Database connection pool exhaustion, thread pool saturation, and file descriptor limits represent common resource constraints that degrade API performance. Tracking these specialized metrics helps teams identify scaling bottlenecks before they cause outages.

| Metric Category | Key Measurements | Primary Use Case | Typical Threshold |
|---|---|---|---|
| Response Time | p50, p95, p99 latency | User experience optimization | p95 < 500ms |
| Availability | Uptime percentage, success rate | Reliability measurement | > 99.9% |
| Error Rate | 4xx/5xx ratio, specific error codes | Problem detection | < 1% total errors |
| Throughput | Requests per second, daily volume | Capacity planning | Varies by system |
| Resource Usage | CPU, memory, connections | Infrastructure optimization | CPU < 70% |

Implementing Effective Monitoring Infrastructure

Building robust API monitoring requires more than selecting metrics—it demands thoughtful infrastructure that collects, stores, analyzes, and visualizes data reliably. The monitoring system itself must exhibit high availability, since a failed monitoring solution leaves teams blind during critical incidents. Effective implementations balance comprehensiveness with practicality, gathering sufficient detail without overwhelming storage systems or creating analysis paralysis.

Instrumentation and Data Collection Strategies

Instrumentation refers to the code and configurations that capture monitoring data from your API. Several approaches exist, each with distinct advantages. Application-level instrumentation embeds monitoring logic directly within API code, providing deep visibility into internal operations. This approach captures detailed timing information for specific functions, tracks business metrics alongside technical measurements, and enables custom monitoring tailored to application-specific needs.

Middleware-based instrumentation intercepts requests and responses as they flow through your API framework, automatically capturing standard metrics without requiring changes to business logic. This approach reduces development burden and ensures consistent monitoring across endpoints, though it may miss internal processing details that application-level instrumentation would reveal.
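As an illustration of the middleware approach, the sketch below wraps a WSGI application (the standard Python web server interface). `TimingMiddleware`, the `record` callback, and `demo_app` are hypothetical names; a real deployment would send the measurements to a metrics backend instead of a list.

```python
import time


class TimingMiddleware:
    """WSGI middleware that reports method, path, status, and duration for each request."""

    def __init__(self, app, record):
        self.app = app
        self.record = record  # hypothetical sink: callable(method, path, status, duration_ms)

    def __call__(self, environ, start_response):
        started = time.perf_counter()
        captured = {"status": 500}  # reported if the app raises before responding

        def capturing_start_response(status, headers, exc_info=None):
            captured["status"] = int(status.split(" ", 1)[0])
            return start_response(status, headers, exc_info)

        try:
            # Note: this times the app call only, not streaming of the response body.
            return self.app(environ, capturing_start_response)
        finally:
            duration_ms = (time.perf_counter() - started) * 1000
            self.record(
                environ.get("REQUEST_METHOD"),
                environ.get("PATH_INFO"),
                captured["status"],
                duration_ms,
            )


def demo_app(environ, start_response):
    """Stand-in for real business logic; it knows nothing about monitoring."""
    start_response("200 OK", [("Content-Type", "text/plain")])
    return [b"hello"]


observations = []
wrapped = TimingMiddleware(demo_app, lambda *fields: observations.append(fields))
body = wrapped(
    {"REQUEST_METHOD": "GET", "PATH_INFO": "/orders"},
    lambda status, headers, exc_info=None: None,
)
print(observations[0][:3])
```

The handler itself is unchanged, which is the main appeal of this approach: every endpoint behind the wrapper is measured the same way.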

Distributed tracing extends monitoring across multiple services by propagating correlation identifiers through request chains. When a user request triggers calls to authentication services, database queries, cache lookups, and external APIs, distributed tracing connects these operations into a coherent narrative. This capability proves invaluable in microservices architectures where understanding cross-service interactions determines whether performance issues originate locally or in dependencies.
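The core of correlation-ID propagation can be sketched in a few lines. The `X-Correlation-ID` header name is an assumption (teams pick their own, or adopt the W3C Trace Context `traceparent` header), and full tracing systems also record spans and timing, which this sketch omits.

```python
import uuid

CORRELATION_HEADER = "X-Correlation-ID"  # assumed header name, not a standard


def incoming_correlation_id(headers):
    """Reuse the caller's ID when present; otherwise start a new one."""
    for name, value in headers.items():
        if name.lower() == CORRELATION_HEADER.lower() and value:
            return value
    return uuid.uuid4().hex


def outgoing_headers(correlation_id, extra=None):
    """Headers for a downstream call, carrying the same ID forward."""
    headers = dict(extra or {})
    headers[CORRELATION_HEADER] = correlation_id
    return headers


# A request arrives with an ID set by an upstream service...
cid = incoming_correlation_id({"x-correlation-id": "abc123"})
# ...and the same ID is attached to the call this service makes next.
print(outgoing_headers(cid, {"Accept": "application/json"}))
```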

Logging Best Practices for API Monitoring

Logs provide narrative context that metrics alone cannot convey. While metrics answer "what happened," logs explain "why it happened" and "what was the system doing at the time." Effective API logging balances detail against volume—too little information hampers troubleshooting, while excessive logging overwhelms storage systems and obscures important signals.

Structured logging formats (JSON, key-value pairs) enable automated parsing and analysis. Rather than writing free-form text that requires complex regular expressions to extract information, structured logs present data in consistent formats that monitoring tools can query efficiently. Including correlation IDs in log entries connects related operations across services, while severity levels (DEBUG, INFO, WARN, ERROR) help filter logs based on importance.
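A minimal sketch of structured logging with Python's standard `logging` module follows. The field names (`correlation_id`, `endpoint`) and the logger name are assumptions for illustration; the point is that each entry is a single JSON object that tools can parse without regular expressions.

```python
import json
import logging


class JsonFormatter(logging.Formatter):
    """Render each log record as one JSON object per line."""

    def format(self, record):
        entry = {
            "timestamp": self.formatTime(record),
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
            # Fields passed via `extra=` become attributes on the record.
            "correlation_id": getattr(record, "correlation_id", None),
            "endpoint": getattr(record, "endpoint", None),
        }
        return json.dumps(entry)


handler = logging.StreamHandler()
handler.setFormatter(JsonFormatter())
logger = logging.getLogger("api.example")  # hypothetical logger name
logger.addHandler(handler)
logger.setLevel(logging.INFO)
logger.propagate = False  # avoid duplicate output through the root logger

logger.warning(
    "upstream timeout",
    extra={"correlation_id": "req-123", "endpoint": "/orders"},
)
```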

"The logs you need during an outage are the ones you implemented before the outage; retrospective logging wishes don't resolve incidents."

Synthetic Monitoring and Health Checks

Synthetic monitoring proactively tests API functionality by executing scripted requests from various locations at regular intervals. Unlike passive monitoring that observes real user traffic, synthetic monitors verify API behavior even during low-usage periods. This approach detects issues before customers encounter them and validates that critical workflows function correctly.

Health check endpoints provide simple mechanisms for monitoring systems to verify API availability. These specialized endpoints typically perform lightweight operations—checking database connectivity, verifying cache access, confirming authentication service availability—and return status indicators. Load balancers, orchestration platforms, and monitoring tools query health endpoints to determine whether API instances should receive traffic.

Designing effective health checks requires balancing thoroughness against performance impact. A health check that executes complex database queries might accurately reflect system capability but creates unnecessary load when invoked frequently. Lightweight checks that merely return HTTP 200 without verifying dependencies might miss critical failures. The optimal approach validates essential dependencies without imposing significant overhead.
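The balance described above might look like the following sketch: each dependency gets one cheap probe, failures are caught rather than propagated, and slow probes count as unhealthy. The check names, the 200 ms budget, and the response shape are assumptions. The sketch flags slow checks after the fact; it does not interrupt them, so each probe should carry its own timeout.

```python
import time


def run_health_checks(checks, slow_after_ms=200.0):
    """Run each dependency probe; return an HTTP status code and a JSON-ready body."""
    results = {}
    for name, check in checks.items():
        started = time.perf_counter()
        try:
            ok, detail = bool(check()), None
        except Exception as exc:  # a failing dependency must not crash the endpoint
            ok, detail = False, str(exc)
        elapsed_ms = (time.perf_counter() - started) * 1000
        if ok and elapsed_ms > slow_after_ms:
            ok, detail = False, "check exceeded time budget"
        results[name] = {"ok": ok, "detail": detail}
    healthy = all(r["ok"] for r in results.values())
    body = {"status": "ok" if healthy else "unavailable", "checks": results}
    return (200 if healthy else 503), body


def cache_down():
    """Hypothetical probe simulating an unreachable cache."""
    raise ConnectionError("cache unreachable")


status_code, body = run_health_checks({
    "database": lambda: True,  # stand-in for a cheap query such as SELECT 1
    "cache": cache_down,
})
print(status_code, body["checks"]["cache"])
```

Returning 503 when a required dependency fails lets load balancers and orchestrators stop routing traffic to the instance.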

Real User Monitoring Techniques

Real user monitoring (RUM) captures actual user experiences by measuring API performance from client perspectives. This approach reveals issues that synthetic monitoring might miss—geographic variations in network performance, client-specific problems, and usage patterns that stress unexpected API aspects. RUM data helps teams prioritize optimizations based on actual user impact rather than theoretical concerns.

Implementing RUM requires instrumenting client applications to report performance measurements back to monitoring systems. Mobile apps might track API response times and error rates, web applications can use browser APIs to measure network timing, and server-to-server integrations can log detailed performance data. Privacy considerations become paramount when collecting real user data—ensure compliance with regulations and user expectations regarding data collection and retention.

Establishing Meaningful Alerts and Thresholds

Collecting monitoring data provides value only when teams respond to signals effectively. Alerting systems notify relevant personnel when metrics exceed acceptable thresholds, enabling rapid response to degrading conditions before they become full outages. However, poorly configured alerts create more problems than they solve: constant false alarms train teams to ignore notifications, while overly lenient thresholds delay awareness of genuine issues.

Defining Service Level Objectives

Service Level Objectives (SLOs) establish concrete, measurable targets for API performance and reliability. Rather than vague commitments to "high availability" or "fast responses," SLOs specify precise expectations: "99.9% of requests will succeed" or "95% of requests will complete within 300 milliseconds." These objectives guide monitoring configuration, alert threshold selection, and operational priorities.

Effective SLOs balance ambition against reality. Setting objectives too aggressively creates unachievable targets that demoralize teams and waste resources on diminishing returns. Conversely, overly conservative SLOs fail to drive meaningful improvement and may not meet user expectations. The process of defining SLOs encourages conversations between engineering teams, product managers, and business stakeholders about acceptable trade-offs between reliability investments and feature development.

Error budgets complement SLOs by quantifying acceptable failure rates. If your SLO promises 99.9% availability, you have a 0.1% error budget: roughly 43 minutes of downtime in a 30-day month, or about 8.8 hours per year. Teams can "spend" this budget on planned maintenance, risky deployments, or acceptable failures. When error budgets deplete, organizations freeze risky changes and focus on reliability improvements until budgets replenish.
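The arithmetic behind an error budget is simple enough to sketch directly. The function names are hypothetical, and the request-based variant assumes the SLO is measured as a share of successful requests rather than as wall-clock uptime.

```python
def downtime_budget_minutes(slo_percent, period_days):
    """Minutes of downtime permitted by an availability SLO over a period."""
    return (100.0 - slo_percent) / 100.0 * period_days * 24 * 60


def remaining_failures(slo_percent, total_requests, failed_requests):
    """Failed requests still affordable before a request-based SLO is breached."""
    allowed = (100.0 - slo_percent) / 100.0 * total_requests
    return allowed - failed_requests


print(round(downtime_budget_minutes(99.9, 30), 1))        # 43.2 minutes per 30 days
print(round(downtime_budget_minutes(99.9, 365) / 60, 2))  # 8.76 hours per year
print(round(remaining_failures(99.9, 1_000_000, 400)))    # 600 failures left
```

A negative result from `remaining_failures` means the budget is exhausted, which is the signal to pause risky changes.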

Threshold Configuration Strategies

Static thresholds trigger alerts when metrics cross predefined values: CPU utilization exceeds 80%, error rate surpasses 5%, response time exceeds 1 second. This approach works well for metrics with predictable, stable ranges. However, many API metrics exhibit patterns that make static thresholds problematic. Request volume might vary dramatically between weekday business hours and weekend nights. A low-traffic alert tuned for peak periods generates false alarms during quiet periods, while one tuned for quiet periods misses a genuine drop during busy periods.

Dynamic thresholds adapt to expected patterns by comparing current metrics against historical baselines. If request volume typically doubles on Monday mornings, the monitoring system expects this pattern and only alerts when actual volume deviates significantly from the Monday morning baseline. Anomaly detection algorithms identify unusual patterns without requiring manual threshold configuration, though they require sufficient historical data to establish reliable baselines.
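A simple form of baseline comparison is sketched below: the current value is compared against the mean and standard deviation of the same time slot in previous weeks. The sample figures and the three-standard-deviation tolerance are assumptions; production anomaly detection typically uses more robust models.

```python
from statistics import mean, stdev


def is_anomalous(current, history, tolerance=3.0):
    """True when current deviates from the historical baseline by more than tolerance sigmas."""
    if len(history) < 2:
        return False  # not enough data to establish a baseline
    baseline = mean(history)
    spread = stdev(history)
    if spread == 0:
        return current != baseline
    return abs(current - baseline) > tolerance * spread


# Hypothetical requests per second seen at 9:00 on the previous five Mondays.
monday_9am_history = [1900, 2100, 2000, 2050, 1950]

print(is_anomalous(2150, monday_9am_history))  # False: busy, but normal for a Monday
print(is_anomalous(900, monday_9am_history))   # True: far below the Monday baseline
```

Keeping a separate history per time slot is what lets the same rule stay quiet on busy Monday mornings and still notice a drop that a single static threshold would miss.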

"Alert fatigue kills incident response effectiveness faster than any technical failure; every alert must justify interrupting someone's focus or sleep."

Alert Routing and Escalation

Different alerts demand different responses. Critical issues affecting customer-facing functionality require immediate attention from on-call engineers, while warning-level alerts about gradually increasing resource utilization might route to ticketing systems for investigation during business hours. Effective alert routing ensures the right people receive relevant notifications through appropriate channels at suitable times.

Escalation policies define what happens when initial responders don't acknowledge alerts. Perhaps the primary on-call engineer receives notifications via SMS and mobile app, with escalation to a secondary engineer after 5 minutes without acknowledgment, then to an engineering manager after another 5 minutes. These policies prevent alerts from disappearing into ignored notification streams while avoiding unnecessary interruptions when responders actively handle incidents.
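The escalation policy in this example can be expressed as data plus one lookup function. The structure is a sketch: `EscalationStep` and `who_to_notify` are hypothetical names, and the channels for the secondary engineer and the manager are assumptions, since only the primary's channels are specified above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EscalationStep:
    after_minutes: int  # minutes without acknowledgment before this step fires
    notify: str
    channels: tuple


POLICY = [
    EscalationStep(0, "primary on-call", ("sms", "mobile app")),
    EscalationStep(5, "secondary on-call", ("sms", "mobile app")),  # channels assumed
    EscalationStep(10, "engineering manager", ("phone",)),          # channel assumed
]


def who_to_notify(policy, minutes_unacknowledged):
    """Everyone who should have been notified by now for an unacknowledged alert."""
    return [
        step.notify for step in policy
        if step.after_minutes <= minutes_unacknowledged
    ]


print(who_to_notify(POLICY, 0))
print(who_to_notify(POLICY, 7))
print(who_to_notify(POLICY, 12))
```

Once a responder acknowledges the alert, the caller would simply stop consulting the policy, which avoids interrupting people further up the chain.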

Alert Enrichment and Context

Valuable alerts provide sufficient context for responders to understand problems without requiring extensive investigation. Rather than simply stating "Error rate exceeded threshold," enriched alerts might include: current error rate and threshold value, comparison to typical values, affected endpoints or services, recent deployments or configuration changes, links to relevant dashboards and runbooks, and initial troubleshooting suggestions.

Runbook integration connects alerts to documented response procedures. When an alert fires, responders immediately access step-by-step troubleshooting guides specific to that alert type. This approach accelerates incident resolution, especially for less common issues or when less experienced team members respond to incidents.

Visualization and Dashboard Design

Dashboards transform raw monitoring data into visual representations that reveal patterns, trends, and anomalies at a glance. Well-designed dashboards help teams understand system behavior quickly, identify problems efficiently, and communicate status effectively to stakeholders. Poor dashboard design overwhelms viewers with irrelevant information, obscures important signals, or presents data in misleading ways.

Dashboard Hierarchy and Organization

Effective monitoring systems employ multiple dashboard types serving different purposes. Executive dashboards provide high-level overviews of system health and key business metrics, using simple visualizations that non-technical stakeholders can interpret quickly. Operational dashboards offer detailed views that engineers use during incident response, displaying comprehensive metrics with sufficient granularity for troubleshooting. Analytical dashboards support deep investigation of specific issues or performance optimization efforts, providing flexible querying and detailed historical data.

Organizing dashboards by service, functionality, or team creates logical groupings that help users find relevant information quickly. A microservices architecture might include dashboards for each service plus aggregate dashboards showing cross-service dependencies. Customer-facing APIs might have separate dashboards for authentication, data retrieval, and transaction processing.

Choosing Appropriate Visualizations

Different data types benefit from different visualization approaches. Time series graphs effectively display metrics that change over time—response latency, request volume, error rates—allowing viewers to identify trends and correlations. Line graphs work well for continuous metrics, while bar charts suit discrete measurements or comparisons between categories.

Heatmaps reveal distribution patterns that averages obscure. Rather than showing average response time, a heatmap might display how many requests fall into different latency buckets over time, revealing whether most requests complete quickly with occasional slow outliers, or whether response times spread across a wide range. This visualization makes performance variability immediately apparent.
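The data behind such a heatmap is a count per (time window, latency bucket) cell. The sketch below shows one way to compute it; the bucket boundaries, the 60-second window, and the sample data are all assumptions.

```python
from bisect import bisect_left
from collections import Counter

BUCKET_UPPER_BOUNDS_MS = [50, 100, 250, 500, 1000, 5000]  # assumed boundaries


def bucket_label(latency_ms):
    """Label of the first bucket whose upper bound is at or above the latency."""
    idx = bisect_left(BUCKET_UPPER_BOUNDS_MS, latency_ms)
    if idx == len(BUCKET_UPPER_BOUNDS_MS):
        return f">{BUCKET_UPPER_BOUNDS_MS[-1]}"
    return f"<={BUCKET_UPPER_BOUNDS_MS[idx]}"


def heatmap_cells(samples, window_s=60):
    """Count requests per (window start, latency bucket); each count colors one cell."""
    cells = Counter()
    for timestamp_s, latency_ms in samples:
        window_start = int(timestamp_s // window_s) * window_s
        cells[(window_start, bucket_label(latency_ms))] += 1
    return cells


# Hypothetical (timestamp in seconds, latency in ms) pairs.
samples = [(0, 40), (10, 95), (30, 7000), (65, 45), (70, 48), (119, 300)]
cells = heatmap_cells(samples)
for cell, count in sorted(cells.items()):
    print(cell, count)
```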

Status indicators and gauges communicate current state at a glance. Traffic light colors (green, yellow, red) instantly convey whether metrics fall within acceptable ranges, are approaching thresholds, or exceed limits. These simple visualizations prove particularly valuable in executive dashboards and operations centers where quick status assessment matters more than detailed analysis.

| Visualization Type | Best Used For | Advantages | Limitations |
|---|---|---|---|
| Time Series Line Graph | Metrics changing over time | Shows trends, patterns, correlations | Cluttered with too many series |
| Heatmap | Distribution patterns | Reveals variability and outliers | Requires training to interpret |
| Status Indicator | Current health state | Immediate understanding | No historical context |
| Bar Chart | Comparing categories | Easy comparison of discrete values | Poor for continuous data |
| Percentile Graph | Performance distribution | Shows user experience range | More complex than averages |

Avoiding Dashboard Anti-Patterns

Common dashboard mistakes undermine monitoring effectiveness. Vanity metrics that look impressive but don't drive decisions waste valuable screen space—total API calls served might seem important, but rarely influences operational actions. Misleading scales manipulate perception, such as y-axes that don't start at zero, exaggerating minor fluctuations into apparent crises.

Information overload occurs when dashboards display too many metrics simultaneously, forcing viewers to search for relevant data during time-sensitive incidents. Prioritize the most important signals, using drill-down capabilities to access detailed information when needed rather than cramming everything into a single view.

"The best dashboard shows you exactly what you need to know without making you search for it; every additional chart should justify its presence by enabling better decisions."

Monitoring Tools and Platform Selection

Numerous monitoring solutions exist, ranging from open-source projects requiring significant implementation effort to fully managed commercial platforms offering comprehensive capabilities out of the box. Selecting appropriate tools depends on factors including team expertise, infrastructure complexity, budget constraints, integration requirements, and scalability needs. Understanding the monitoring tool landscape helps teams make informed decisions aligned with their specific circumstances.

Open Source Monitoring Solutions

Open source monitoring tools provide flexibility and cost advantages, particularly for organizations with engineering resources to implement and maintain them. Prometheus has become a popular choice for metrics collection and storage, offering a powerful query language, efficient time-series database, and extensive ecosystem of exporters for gathering metrics from various systems. Grafana complements Prometheus by providing sophisticated visualization capabilities, supporting multiple data sources, and offering extensive customization options.

The ELK stack (Elasticsearch, Logstash, Kibana) specializes in log aggregation and analysis. Logstash collects and processes logs from diverse sources, Elasticsearch provides powerful search and indexing capabilities, and Kibana delivers visualization and exploration interfaces. This combination excels at handling large log volumes and enabling complex queries across distributed systems.

Jaeger and Zipkin focus on distributed tracing, helping teams understand request flows through microservices architectures. These tools capture timing information for operations across service boundaries, visualize request paths, and identify performance bottlenecks in complex distributed systems.

Commercial Monitoring Platforms

Commercial monitoring solutions offer integrated capabilities, managed infrastructure, and professional support at the cost of ongoing subscription fees. Datadog provides comprehensive monitoring combining infrastructure metrics, application performance monitoring, log management, and distributed tracing in a unified platform. Its extensive integration library supports monitoring virtually any technology stack with minimal configuration.

New Relic focuses on application performance monitoring with deep visibility into code-level performance. It automatically instruments applications to track transaction performance, identify slow database queries, and pinpoint inefficient code paths. The platform's ability to connect performance data with business outcomes helps teams prioritize optimization efforts.

Splunk excels at log analysis and security monitoring, offering powerful search capabilities and machine learning features for anomaly detection. While often associated with security use cases, Splunk's flexibility makes it suitable for comprehensive API monitoring when log analysis represents a primary requirement.

Specialized API Monitoring Services

Purpose-built API monitoring services like Runscope, Postman Monitors, and APImetrics focus specifically on testing and monitoring API endpoints. These services provide synthetic monitoring from multiple global locations, automated testing of API functionality, and detailed performance analytics. They excel at monitoring external APIs or providing customer-perspective monitoring but may lack the infrastructure-level visibility that general-purpose monitoring platforms offer.

Evaluation Criteria for Tool Selection

Selecting monitoring tools requires evaluating multiple dimensions beyond feature lists. Consider the total cost of ownership including licensing fees, infrastructure requirements, and personnel time for implementation and maintenance. Open source tools may appear free but require significant engineering investment, while commercial platforms charge subscription fees but reduce implementation burden.

Scalability determines whether solutions handle current monitoring needs and future growth. Can the platform ingest metrics from thousands of API instances? Does it maintain query performance when storing months or years of historical data? Scalability limitations create painful migration projects when monitoring systems can't keep pace with growth.

Integration capabilities affect how easily monitoring solutions fit into existing workflows. Does the platform integrate with your incident management system? Can it authenticate against your identity provider? Does it support the programming languages and frameworks your teams use? Seamless integration reduces friction and increases monitoring adoption.

"The best monitoring tool is the one your team actually uses; sophisticated features matter less than usability and integration with existing workflows."

Analyzing Monitoring Data for Insights

Collecting monitoring data represents just the beginning—extracting actionable insights from that data drives meaningful improvements. Effective analysis transforms metrics into understanding, revealing not just that problems occurred but why they happened and how to prevent recurrence. This process combines statistical techniques, domain expertise, and systematic investigation to uncover patterns that inform optimization efforts.

Correlation Analysis and Root Cause Investigation

When API performance degrades, multiple metrics typically change simultaneously. Response times increase while throughput decreases and error rates climb. Correlation analysis helps distinguish symptoms from causes by identifying which metric changes preceded others. Perhaps CPU utilization spiked first, followed by increased response times, then rising error rates—suggesting that CPU exhaustion triggered the cascade of problems.

Examining metric changes in context reveals root causes that isolated metrics miss. A sudden increase in database query latency might correlate with a deployment that introduced inefficient queries, a database maintenance operation, or a marketing campaign that changed usage patterns. Cross-referencing monitoring data with deployment logs, configuration changes, and external events constructs narratives explaining system behavior.

Trend Analysis and Capacity Planning

Historical monitoring data reveals growth trends that inform capacity planning. Analyzing request volume over months or years shows seasonal patterns, growth rates, and usage changes. Growth compounds: at 5% per month, traffic rises about 34% in six months and about 80% in a year, so infrastructure with comfortable headroom today might suffice for six months but require expansion within a year. Proactive capacity planning based on trend analysis prevents performance degradation and outages caused by exceeding infrastructure limits.
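Assuming steady compound growth, the time until traffic reaches capacity can be estimated with a logarithm. The function name and the sample figures below are hypothetical, and real traffic rarely grows this smoothly, so the result is a planning estimate rather than a forecast.

```python
import math


def months_until_capacity(current_peak, capacity, monthly_growth):
    """Whole months until peak traffic reaches capacity at a constant growth rate."""
    if current_peak >= capacity:
        return 0
    if monthly_growth <= 0:
        return None  # traffic is flat or shrinking, so capacity is never reached
    months = math.log(capacity / current_peak) / math.log(1 + monthly_growth)
    return math.ceil(months)


# Hypothetical figures: peak of 6,000 requests/s against a 10,000 requests/s limit.
print(months_until_capacity(6000, 10000, 0.05))  # 11
# With a peak of 9,500 requests/s, the same growth leaves far less time.
print(months_until_capacity(9500, 10000, 0.05))  # 2
```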

Performance trends indicate whether optimizations succeed or technical debt accumulates. Gradually increasing response times despite stable traffic suggest that system efficiency degrades over time—perhaps due to growing databases, accumulating cached data, or code changes that introduce inefficiencies. Detecting these gradual degradations enables intervention before they impact users significantly.

Anomaly Detection and Pattern Recognition

Automated anomaly detection identifies unusual patterns without requiring manual threshold configuration. Machine learning algorithms establish baselines from historical data, then flag metrics that deviate significantly from expected values. This approach proves particularly valuable for metrics with complex patterns that make static thresholds impractical.

Pattern recognition reveals relationships between events. Perhaps API performance always degrades on the first Monday of each month—investigation might discover automated monthly reports that generate heavy database load. Recognizing these patterns enables proactive responses, such as scheduling resource-intensive operations during low-traffic periods or optimizing problematic queries.

Business Impact Analysis

Connecting technical metrics to business outcomes demonstrates monitoring value and guides prioritization. Rather than merely tracking error rates, correlate errors with failed transactions, lost revenue, or customer complaints. When technical teams can quantify that reducing p99 latency from 2 seconds to 500 milliseconds would improve conversion rates by 3%, they can justify optimization investments with business impact rather than technical arguments.

Segmenting monitoring data by customer tier, geographic region, or product feature reveals whether performance issues affect all users equally or disproportionately impact specific segments. Perhaps premium customers experience excellent performance while free-tier users encounter frequent timeouts due to resource prioritization. This visibility informs decisions about resource allocation and service differentiation.

Security Monitoring and Threat Detection

API monitoring extends beyond performance and availability to encompass security concerns. APIs represent attractive targets for attackers seeking to extract sensitive data, disrupt services, or exploit vulnerabilities. Security-focused monitoring detects suspicious patterns, identifies potential threats, and provides evidence for investigating security incidents. Integrating security monitoring with performance monitoring creates comprehensive visibility into both operational and security health.

Detecting Suspicious Request Patterns

Unusual request patterns often indicate security threats. A sudden surge in authentication attempts from a single IP address might signal a brute-force attack. Requests with malformed parameters could represent attempts to exploit input validation vulnerabilities. Geographic anomalies—such as a user account suddenly making requests from multiple countries simultaneously—suggest credential compromise.
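Detecting a surge of failed authentication attempts from one address can be sketched with a sliding window per IP. The class name, the limit, and the window length are assumptions; real systems would also expire idle entries and account for shared addresses such as corporate gateways.

```python
from collections import defaultdict, deque


class FailedLoginTracker:
    """Flags an IP once it exceeds max_failures failed logins within window_s seconds."""

    def __init__(self, max_failures=10, window_s=60):
        self.max_failures = max_failures
        self.window_s = window_s
        self._failures = defaultdict(deque)  # ip -> timestamps of recent failures

    def record_failure(self, ip, timestamp_s):
        """Record one failed attempt; return True if the IP now looks suspicious."""
        window = self._failures[ip]
        window.append(timestamp_s)
        # Drop attempts that have fallen out of the sliding window.
        while window and window[0] <= timestamp_s - self.window_s:
            window.popleft()
        return len(window) > self.max_failures


tracker = FailedLoginTracker(max_failures=3, window_s=60)
# 203.0.113.7 is an address from a range reserved for documentation examples.
flags = [tracker.record_failure("203.0.113.7", t) for t in (0, 10, 20, 30)]
print(flags)  # [False, False, False, True]
```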

Rate limiting violations provide early warning of potential abuse. Legitimate users rarely exceed reasonable rate limits, so frequent limit violations warrant investigation. Distinguishing between misconfigured clients and malicious actors requires examining request patterns, user agents, and historical behavior.

Monitoring Authentication and Authorization

Authentication failures deserve careful monitoring. While occasional failed login attempts occur naturally, patterns of failures indicate problems. Multiple failed attempts followed by success might represent successful credential guessing. Failed authentication attempts using valid usernames but incorrect passwords differ from attempts with nonexistent usernames—the former suggests targeted attacks, while the latter might indicate automated scanning.

Authorization failures reveal attempts to access restricted resources. Users repeatedly requesting endpoints they lack permission to access might indicate reconnaissance activities. Monitoring authorization patterns helps identify both security threats and usability issues—perhaps users genuinely need access to resources that current permissions deny.

Data Exfiltration Detection

Monitoring data access patterns helps detect unauthorized data extraction. A user account suddenly downloading thousands of records might indicate compromise. Requests for sensitive endpoints from unusual sources or at unusual times warrant investigation. Combining access monitoring with user behavior analytics establishes baselines for normal activity, making anomalies more apparent.

Response payload sizes provide another signal. If endpoints typically return kilobytes of data but suddenly serve megabytes, something changed—either legitimate usage patterns or potential data exfiltration. Tracking payload sizes alongside access patterns creates multiple signals that together reveal suspicious activity.
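A simple way to turn payload size into a signal is to compare each response against a baseline using a z-score. The function name, the threshold of 3 standard deviations, and the sample sizes below are assumptions for illustration; real baselines would be computed per endpoint from historical data.

```python
import statistics

def is_payload_anomalous(baseline_sizes, observed_size, z_threshold=3.0):
    """Return True if observed_size is far above the baseline mean.

    baseline_sizes needs at least two samples so a standard deviation exists.
    """
    mean = statistics.mean(baseline_sizes)
    stdev = statistics.stdev(baseline_sizes)
    if stdev == 0:
        return observed_size > mean
    return (observed_size - mean) / stdev > z_threshold

# Hypothetical baseline: an endpoint that normally returns about 4 KB.
baseline = [4000, 4200, 3900, 4100, 4300, 3800]
normal = is_payload_anomalous(baseline, 4250)
suspicious = is_payload_anomalous(baseline, 5_000_000)
```

A flagged response is only a prompt for investigation; as noted above, a large payload may reflect a legitimate change in usage.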

"Security monitoring and performance monitoring are complementary perspectives on the same system; neither provides complete visibility alone, but together they reveal comprehensive system health."

Continuous Improvement Through Monitoring Insights

Monitoring systems generate vast quantities of data, but data alone doesn't improve APIs—action based on insights does. Establishing processes that translate monitoring observations into concrete improvements creates virtuous cycles where monitoring informs optimization, optimizations improve metrics, and improved metrics validate efforts. This continuous improvement mindset transforms monitoring from a reactive practice focused on incident response into a proactive discipline driving systematic enhancement.

Post-Incident Reviews and Learning

When incidents occur, monitoring data provides the foundation for understanding what happened, why it happened, and how to prevent recurrence. Post-incident reviews (sometimes called postmortems or retrospectives) systematically examine incidents using monitoring data as evidence. These reviews reconstruct timelines, identify contributing factors, and generate action items for improvement.

Effective post-incident reviews avoid blame, focusing instead on systemic factors that enabled incidents. Perhaps monitoring detected a problem but alerts didn't reach on-call engineers due to notification system failures. Maybe alerts fired but lacked sufficient context for responders to diagnose issues quickly. Each incident reveals opportunities to strengthen monitoring, alerting, or response processes.

Performance Optimization Cycles

Monitoring data guides performance optimization by identifying bottlenecks and measuring improvement. Before optimization efforts, establish baseline metrics capturing current performance. After implementing changes, compare new metrics against baselines to validate that optimizations achieved intended effects. This data-driven approach prevents wasted effort on optimizations that don't meaningfully improve user experience.

Prioritizing optimization efforts based on monitoring insights ensures resources focus on changes with maximum impact. Perhaps 80% of slow requests originate from a single endpoint—optimizing that endpoint delivers more value than broad improvements across all endpoints. Monitoring data quantifies where problems exist and which improvements matter most.
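To find where slow requests concentrate, you can rank endpoints by their share of requests above a latency threshold. This is a sketch under assumed inputs: the `endpoint` and `latency_ms` field names, the 1000 ms threshold, and the sample data are all hypothetical.

```python
from collections import Counter

def slow_request_share(requests, threshold_ms):
    """Rank endpoints by their share of all requests slower than threshold_ms."""
    slow = Counter(r["endpoint"] for r in requests if r["latency_ms"] > threshold_ms)
    total = sum(slow.values())
    if total == 0:
        return []
    return [(endpoint, count / total) for endpoint, count in slow.most_common()]

# Hypothetical traffic: most slow requests come from /search.
requests = (
    [{"endpoint": "/search", "latency_ms": 2500}] * 8
    + [{"endpoint": "/orders", "latency_ms": 1800}] * 1
    + [{"endpoint": "/users", "latency_ms": 1500}] * 1
    + [{"endpoint": "/users", "latency_ms": 90}] * 40
)
ranking = slow_request_share(requests, threshold_ms=1000)
```

Here the first entry of `ranking` identifies the endpoint responsible for the largest share of slow requests, which is the natural first optimization target.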

Capacity Planning and Resource Optimization

Monitoring data informs decisions about infrastructure capacity and resource allocation. Tracking resource utilization over time reveals whether current infrastructure operates efficiently or wastes resources. Perhaps API servers consistently use only 30% of available CPU—you might reduce costs by downsizing instances. Conversely, if utilization regularly exceeds 80%, adding capacity before performance degrades prevents future incidents.
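The utilization thresholds mentioned above can be expressed as a small rule. The 30% and 80% figures come from the example in the text; the function name and the rule that "regularly" means at least 20% of samples are assumptions you would tune for your own environment.

```python
def capacity_recommendation(cpu_samples, low=30.0, high=80.0, high_fraction=0.2):
    """Suggest a capacity action from CPU utilization samples (percent)."""
    over_high = sum(1 for s in cpu_samples if s > high) / len(cpu_samples)
    if over_high >= high_fraction:
        return "add capacity"
    if max(cpu_samples) <= low:
        # Utilization never rises above the low-water mark.
        return "consider downsizing"
    return "no change"

idle = capacity_recommendation([22, 25, 28, 30, 27])
busy = capacity_recommendation([60, 85, 90, 70, 88])
steady = capacity_recommendation([40, 55, 82, 60, 50, 45, 58, 61, 49, 52])
```

A single brief spike above 80% does not trigger a recommendation here, which keeps the rule from reacting to noise.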

Understanding usage patterns enables cost optimization through scheduling and auto-scaling. If traffic drops 70% overnight, automatically reducing capacity during low-traffic periods cuts costs without impacting user experience. Monitoring data provides the usage patterns that make intelligent auto-scaling possible.

Measuring Success and Demonstrating Value

Monitoring systems themselves require justification—demonstrating that monitoring investments deliver value encourages continued support and resources. Quantifying monitoring benefits in concrete terms builds this case. Perhaps monitoring detected issues before customers reported them in 90% of incidents, significantly reducing customer impact. Maybe mean time to resolution decreased 40% after implementing distributed tracing, directly attributable to improved debugging capabilities.

Tracking reliability metrics over time shows whether monitoring-informed improvements actually enhance system stability. If SLO compliance improves from 99.5% to 99.95% over six months while incident frequency falls, that trend is strong evidence that monitoring-informed work contributes to operational excellence. These success metrics justify monitoring investments and encourage continued improvement efforts.
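To make availability percentages like 99.5% and 99.95% concrete, it can help to convert them into minutes of unavailability. The sketch below assumes a 30-day period and treats the percentage as time-based availability; the function name is illustrative.

```python
def allowed_downtime_minutes(availability_percent, period_days=30):
    """Minutes of unavailability implied by an availability percentage."""
    total_minutes = period_days * 24 * 60
    return total_minutes * (100 - availability_percent) / 100

before = allowed_downtime_minutes(99.5)   # 216 minutes in 30 days
after = allowed_downtime_minutes(99.95)   # roughly 21.6 minutes in 30 days
```

Under these assumptions, the improvement corresponds to about a tenfold reduction in unavailable time, which is often easier to communicate to stakeholders than the percentages themselves.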

Monitoring in Different Architectural Contexts

API monitoring strategies must adapt to architectural patterns and deployment models. Monolithic applications, microservices architectures, serverless functions, and edge computing environments each present unique monitoring challenges and opportunities. Understanding how architectural choices influence monitoring requirements helps teams implement appropriate strategies for their specific contexts.

Monolithic Application Monitoring

Monitoring monolithic APIs offers relative simplicity—a single application serves all endpoints, metrics aggregate naturally, and request tracing remains straightforward. Instrumentation focuses on the application itself, its database connections, and external service dependencies. This centralized structure simplifies correlation analysis since all operations occur within a single process or small cluster of identical processes.

However, monolithic architectures can obscure internal bottlenecks. While external metrics show overall API performance, identifying which internal components cause problems requires detailed application-level instrumentation. Profiling tools, detailed logging, and code-level performance monitoring become essential for optimization.

Microservices Architecture Monitoring

Microservices distribute functionality across multiple independent services, dramatically increasing monitoring complexity. A single user request might traverse authentication services, user profile services, recommendation engines, and transaction processors—each potentially running on different infrastructure with different performance characteristics. Understanding system behavior requires monitoring each service individually while also tracking cross-service interactions.

Distributed tracing becomes essential in microservices environments. Trace identifiers propagate through service calls, connecting operations across service boundaries into coherent request flows. This visibility reveals whether slow response times originate in specific services or result from cascading delays across multiple services.
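The propagation idea can be sketched with plain functions standing in for services. Everything here is hypothetical: the `X-Trace-Id` header name, the service functions, and the in-memory `spans` list that stands in for a tracing backend. Real deployments typically rely on a tracing library and a standard header format such as W3C Trace Context.

```python
import uuid

TRACE_HEADER = "X-Trace-Id"  # hypothetical header name chosen for this sketch
spans = []  # stands in for a tracing backend

def with_trace(headers):
    """Reuse the incoming trace ID, or start a new trace at the edge."""
    trace_id = headers.get(TRACE_HEADER) or uuid.uuid4().hex
    return {**headers, TRACE_HEADER: trace_id}

def profile_service(headers):
    headers = with_trace(headers)
    spans.append((headers[TRACE_HEADER], "profile"))
    return {"name": "example"}

def auth_service(headers):
    headers = with_trace(headers)
    spans.append((headers[TRACE_HEADER], "auth"))
    return profile_service(headers)  # forward the same headers downstream

def gateway(headers):
    headers = with_trace(headers)
    spans.append((headers[TRACE_HEADER], "gateway"))
    return auth_service(headers)

gateway({})  # a request arriving with no trace ID starts a new trace
```

Because every service forwards the header it received, all three recorded spans share one trace ID and can be stitched into a single request flow.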

Service mesh technologies like Istio or Linkerd provide infrastructure-level monitoring capabilities, automatically capturing metrics for service-to-service communication without requiring application changes. These platforms offer consistent monitoring across heterogeneous services, though they add infrastructure complexity and operational overhead.

Serverless and Function-Based Monitoring

Serverless architectures introduce unique monitoring challenges. Functions execute ephemerally, making traditional monitoring approaches difficult. Cold start latencies—delays when functions initialize after periods of inactivity—significantly impact user experience but occur unpredictably. Monitoring must account for these characteristics while adapting to environments where infrastructure details remain largely hidden.

Cloud provider monitoring services offer specialized capabilities for serverless functions. Amazon CloudWatch, Azure Monitor, and Google Cloud Monitoring provide metrics specific to function execution, such as invocation counts, duration, and error rates; cold start behavior can often be derived from initialization timings that the platform reports in logs or extended metrics. Third-party tools like Thundra or Epsagon add distributed tracing and detailed performance analysis tailored to serverless architectures.

Edge Computing and CDN Monitoring

APIs delivered through content delivery networks or edge computing platforms require monitoring at multiple layers. Edge locations provide low-latency responses by serving content geographically close to users, but this distribution complicates monitoring. Performance might vary significantly across edge locations due to regional infrastructure differences, network conditions, or localized issues.

Monitoring edge-deployed APIs requires collecting metrics from each edge location while aggregating data for overall health assessment. Understanding geographic performance variations helps identify regional issues and optimize content distribution. Some CDN providers offer built-in monitoring capabilities, while others require custom instrumentation to achieve comprehensive visibility.

Cost Considerations and Monitoring Efficiency

Comprehensive monitoring generates significant data volumes, potentially creating substantial costs for storage, processing, and analysis. Organizations must balance monitoring thoroughness against resource constraints, implementing strategies that maximize insight while controlling expenses. Understanding cost drivers and optimization opportunities helps teams maintain effective monitoring without unsustainable budgets.

Data Retention and Storage Strategies

Monitoring data accumulates rapidly—high-traffic APIs generate millions of data points daily. Storing this data indefinitely becomes prohibitively expensive, yet historical data provides valuable context for trend analysis and incident investigation. Implementing tiered retention policies balances these concerns by retaining recent data at full granularity while downsampling or aggregating older data.

Perhaps detailed metrics retain 1-minute granularity for 7 days, then downsample to 5-minute granularity for 30 days, and finally aggregate to hourly granularity for long-term storage. This approach preserves recent detail for incident response while maintaining long-term trends for capacity planning at reduced storage costs.
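The downsampling step in such a policy can be sketched as averaging points into fixed-width time buckets. The function name and the `(timestamp, value)` tuple format are assumptions; most time-series databases provide this natively, so this only illustrates the idea.

```python
from collections import defaultdict

def downsample(points, bucket_seconds):
    """Average (timestamp, value) points into buckets keyed by bucket start time."""
    buckets = defaultdict(list)
    for timestamp, value in points:
        buckets[timestamp - timestamp % bucket_seconds].append(value)
    return [(start, sum(vals) / len(vals)) for start, vals in sorted(buckets.items())]

# Ten 1-minute points (timestamps in seconds) reduced to two 5-minute points.
one_minute = [(60 * i, float(i)) for i in range(10)]
five_minute = downsample(one_minute, bucket_seconds=300)
```

Averaging hides short spikes, so a retention policy may also want to keep a per-bucket maximum or a high percentile alongside the mean.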

Sampling and Selective Monitoring

Monitoring every request in high-volume APIs generates overwhelming data volumes. Sampling techniques monitor representative subsets rather than complete populations. Perhaps you trace 1% of requests in detail while collecting basic metrics for all requests. This approach dramatically reduces monitoring overhead while keeping aggregate performance statistics representative, provided the sample is drawn uniformly and traffic volume is high; rare failures can still fall outside the sample.

Adaptive sampling adjusts sampling rates based on conditions. During normal operations, low sampling rates suffice. When errors increase or performance degrades, sampling rates automatically increase to capture more detail about problematic requests. This dynamic approach optimizes the trade-off between monitoring costs and diagnostic capability.
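A minimal sketch of adaptive sampling follows. The linear ramp, the 1% base rate, and the 5% error threshold are assumptions chosen for illustration; tracing systems implement their own policies.

```python
import random

def sampling_rate(error_rate, base_rate=0.01, max_rate=1.0, error_threshold=0.05):
    """Scale the trace sampling rate from base_rate up to max_rate as errors rise."""
    if error_rate >= error_threshold:
        return max_rate
    # Linear ramp between base_rate (no errors) and max_rate (at the threshold).
    return base_rate + (max_rate - base_rate) * error_rate / error_threshold

def should_sample(rate, rng=random.random):
    """Decide whether to trace this request; rng returns a float in [0, 1)."""
    return rng() < rate

healthy = sampling_rate(0.0)      # stays at the base rate
degraded = sampling_rate(0.025)   # partway up the ramp
incident = sampling_rate(0.10)    # every request is traced
```

Passing `rng` as a parameter keeps the decision testable; production code would simply use the default.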

Monitoring System Efficiency

Monitoring infrastructure itself consumes resources—monitoring agents use CPU and memory, metric collection generates network traffic, and analysis systems require computational capacity. Inefficient monitoring can significantly impact application performance, creating the ironic situation where monitoring systems degrade the very performance they're meant to observe.

Asynchronous metric collection minimizes impact on request processing. Rather than synchronously recording metrics during request handling, applications buffer metrics and transmit them asynchronously. This approach prevents monitoring overhead from directly increasing response times, though it introduces slight delays in metric availability.
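The buffering pattern described here can be sketched with a bounded queue and a background thread. The class name, the `sink` callable, and the choice to drop metrics when the buffer is full are assumptions for this example; metrics client libraries usually handle this internally.

```python
import queue
import threading

class AsyncMetricRecorder:
    """Buffers metrics in memory and ships them from a background thread."""

    def __init__(self, sink, max_buffer=10_000):
        self._queue = queue.Queue(maxsize=max_buffer)
        self._sink = sink  # callable that transmits one metric, e.g. over the network
        self._worker = threading.Thread(target=self._drain, daemon=True)
        self._worker.start()

    def record(self, name, value):
        """Called on the request path: never blocks; drops the metric if full."""
        try:
            self._queue.put_nowait((name, value))
            return True
        except queue.Full:
            return False

    def _drain(self):
        while True:
            item = self._queue.get()
            if item is None:  # sentinel from close()
                break
            self._sink(item)

    def close(self):
        """Flush everything queued so far, then stop the worker."""
        self._queue.put(None)
        self._worker.join()

received = []
recorder = AsyncMetricRecorder(sink=received.append)
for i in range(100):
    recorder.record("latency_ms", i)
recorder.close()
```

Dropping metrics when the buffer fills trades completeness for a guarantee that request handling is never delayed by the monitoring path.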

"Monitoring systems should be invisible to users; if monitoring noticeably degrades performance, it defeats its own purpose."

Compliance and Regulatory Considerations

API monitoring intersects with various compliance requirements and regulatory frameworks. Organizations handling sensitive data must ensure monitoring practices align with privacy regulations, security standards, and industry-specific requirements. Understanding these obligations helps teams implement monitoring that provides necessary visibility while respecting legal and ethical boundaries.

Privacy and Data Protection

Monitoring often captures sensitive information—user identifiers, request parameters, response payloads. Privacy regulations like GDPR and CCPA impose restrictions on collecting, storing, and processing personal data. Monitoring implementations must carefully consider what data they capture and how long they retain it.

Techniques like data masking and tokenization allow monitoring without exposing sensitive information. Rather than logging actual credit card numbers, mask all but the last four digits. Replace personally identifiable information with tokens that preserve analytical value without revealing actual data. These approaches maintain monitoring effectiveness while respecting privacy requirements.
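As an illustration of masking, the sketch below replaces all but the last four digits of card-like numbers in a log line. The regular expression is a simplified assumption that matches 13 to 16 digits with optional spaces or hyphens; it is not a complete card-number detector.

```python
import re

# Simplified pattern: 13-16 digits, optionally separated by spaces or hyphens.
CARD_PATTERN = re.compile(r"\b(?:\d[ -]?){12,15}\d\b")

def mask_card_numbers(text):
    """Replace card-like numbers with asterisks, keeping the last four digits."""
    def _mask(match):
        digits = re.sub(r"\D", "", match.group())
        return "*" * (len(digits) - 4) + digits[-4:]
    return CARD_PATTERN.sub(_mask, text)

# 4111 1111 1111 1111 is a widely used test card number, not a real account.
masked = mask_card_numbers("payment failed card=4111 1111 1111 1111 order=12345")
```

Applying the mask before log lines leave the application keeps raw numbers out of downstream monitoring storage entirely.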

Audit Logging and Compliance Reporting

Many regulatory frameworks require detailed audit trails documenting system access and data operations. API monitoring can fulfill these requirements by capturing comprehensive logs of authentication events, authorization decisions, and data access patterns. Ensuring monitoring data meets audit requirements can avoid the need to implement a separate audit logging system.

Compliance reporting often requires demonstrating system reliability and security controls. Monitoring data provides evidence for these reports—availability metrics demonstrate uptime commitments, security monitoring shows threat detection capabilities, and incident response times validate operational procedures. Structuring monitoring data to support compliance reporting reduces the burden of regulatory obligations.

Data Retention and Right to Deletion

Privacy regulations grant individuals rights to request deletion of their personal data. When monitoring systems capture user information, they must support these deletion requests. Implementing monitoring architectures that separate personal identifiers from operational metrics simplifies compliance—perhaps user IDs are hashed or tokenized, allowing deletion of the mapping between tokens and actual identities without losing operational monitoring data.
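The separation described above can be sketched as a small mapping from user IDs to random tokens, where monitoring data stores only the token. The class and method names are hypothetical, and random tokens are used here instead of plain hashes because a hash of a known ID can be recomputed. Whether a given design satisfies a particular regulation is a legal question this sketch does not answer.

```python
import secrets

class PseudonymVault:
    """Maps user IDs to random tokens; monitoring data stores only the token."""

    def __init__(self):
        self._token_by_user = {}

    def token_for(self, user_id):
        """Return the stable token for a user, creating one on first use."""
        if user_id not in self._token_by_user:
            self._token_by_user[user_id] = secrets.token_hex(16)
        return self._token_by_user[user_id]

    def forget(self, user_id):
        """Delete the mapping; existing metrics keep a token that no longer links back."""
        return self._token_by_user.pop(user_id, None) is not None

vault = PseudonymVault()
metrics = []
token = vault.token_for("user-42")
metrics.append({"user": token, "latency_ms": 120})
deleted = vault.forget("user-42")
```

After `forget`, the latency record is still available for operational analysis, but nothing in the vault connects its token to `user-42`.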

Building Monitoring Culture and Best Practices

Technical monitoring capabilities matter less than organizational commitment to using monitoring effectively. Building a culture where teams value monitoring, respond to signals promptly, and continuously improve based on insights determines whether monitoring investments deliver returns. This cultural dimension often determines success more than tool selection or technical implementation.

Shared Responsibility and Ownership

Effective monitoring requires clear ownership. Who maintains dashboards? Who responds to alerts? Who investigates performance degradations? Without explicit ownership, monitoring systems decay—dashboards become outdated, alerts go unacknowledged, and insights remain unacted upon. Assigning ownership creates accountability and ensures monitoring receives ongoing attention.

Shared responsibility models distribute monitoring duties across teams. Development teams own monitoring for services they build, operations teams maintain infrastructure monitoring, and security teams manage threat detection. This distribution aligns monitoring responsibilities with domain expertise while requiring coordination to maintain comprehensive visibility.

Documentation and Knowledge Sharing

Monitoring systems accumulate institutional knowledge—what metrics matter, what thresholds indicate problems, how to interpret specific patterns. Documenting this knowledge through runbooks, dashboard annotations, and alert descriptions prevents knowledge loss when team members change. New team members can quickly understand monitoring systems through comprehensive documentation rather than relying solely on tribal knowledge.

Regular knowledge sharing sessions where teams review interesting monitoring insights, discuss recent incidents, or demonstrate new monitoring capabilities spread expertise and maintain engagement. These sessions transform monitoring from a routine operational task into an opportunity for learning and improvement.

Continuous Learning and Adaptation

Monitoring requirements evolve as systems change, usage patterns shift, and organizational priorities adjust. Regularly reviewing monitoring effectiveness ensures systems remain relevant. Perhaps certain alerts never trigger and should be removed, while new failure modes require additional monitoring. Quarterly monitoring reviews provide opportunities to refine approaches based on accumulated experience.

Experimenting with new monitoring techniques and tools keeps practices current. The monitoring landscape evolves rapidly: approaches like eBPF-based monitoring, AI-driven anomaly detection, or chaos engineering integration offer capabilities that were not widely available a few years ago. Organizations that experiment with emerging techniques position themselves to adopt valuable innovations early.

"Monitoring excellence comes not from perfect initial implementation but from continuous refinement based on real-world experience and changing needs."

Future Trends in API Monitoring

The monitoring landscape continues evolving as new technologies emerge and operational practices mature. Understanding emerging trends helps organizations prepare for future capabilities and challenges. While predicting the future remains inherently uncertain, several clear directions shape how API monitoring will likely develop.

AI and Machine Learning Integration

Artificial intelligence and machine learning increasingly augment monitoring capabilities. Automated anomaly detection algorithms identify unusual patterns without manual threshold configuration. Predictive analytics forecast potential issues before they occur, enabling proactive intervention. Natural language interfaces allow engineers to query monitoring data conversationally rather than learning complex query languages.

AI-driven root cause analysis automatically correlates metric changes, log patterns, and system events to suggest probable causes for incidents. While these systems won't replace human expertise, they accelerate investigation by highlighting relevant information and eliminating obvious non-causes.

Observability as a Unified Discipline

The term "observability" increasingly encompasses monitoring, logging, and tracing as complementary perspectives on system behavior. Rather than treating these as separate practices with distinct tools, unified observability platforms integrate metrics, logs, and traces into cohesive analysis environments. This integration enables more powerful investigation workflows where engineers seamlessly move between metrics showing what happened, logs explaining why, and traces revealing how requests flowed through systems.

Shift-Left Monitoring and Development Integration

Monitoring has traditionally focused on production environments, but it increasingly extends into development and testing phases. Developers run monitoring tools against local development environments, identifying performance issues before code reaches production. Continuous integration pipelines include performance testing with monitoring validation, catching regressions early in development cycles.

This "shift-left" approach reduces the cost of addressing issues by finding them when fixes remain cheap and easy. Monitoring becomes part of the development process rather than an operational afterthought.

Edge and IoT Monitoring Challenges

As computing moves closer to users through edge computing and IoT devices, monitoring must adapt to highly distributed, resource-constrained environments. Traditional monitoring approaches designed for data center deployments don't translate directly to devices with limited bandwidth, storage, and processing power. Lightweight monitoring agents, intelligent data aggregation, and selective metric transmission become essential for monitoring at scale in edge environments.

---

Frequently Asked Questions

What metrics should I prioritize when starting API monitoring?

Begin with the golden signals: latency (response time), traffic (request volume), errors (failure rate), and saturation (resource utilization). These four metrics provide fundamental visibility into API health. Specifically track p95 and p99 latency percentiles rather than just averages, monitor both 4xx and 5xx error rates separately, and measure CPU and memory utilization of your API infrastructure. Once these basics are established, expand to business-specific metrics relevant to your API's purpose.
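A short sketch shows why percentiles matter more than averages. It uses a nearest-rank percentile and made-up latency samples; the helper name and data are assumptions for illustration.

```python
import statistics

def percentile(values, pct):
    """Nearest-rank percentile for an integer pct between 1 and 100."""
    ordered = sorted(values)
    rank = max(1, (pct * len(ordered) + 99) // 100)  # ceiling of pct% of n
    return ordered[rank - 1]

# Hypothetical latencies in ms: most requests are fast, a few are very slow.
latencies = [100] * 90 + [800] * 8 + [2000, 5000]

mean_ms = statistics.mean(latencies)
p95_ms = percentile(latencies, 95)
p99_ms = percentile(latencies, 99)
```

In this sample the mean is 224 ms, which looks healthy, while p95 is 800 ms and p99 is 2000 ms, exposing the slow tail that a meaningful share of users actually experience.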

How do I reduce alert fatigue while maintaining effective monitoring?

Alert fatigue stems from too many notifications, especially false alarms. Address this by implementing dynamic thresholds that adapt to expected patterns rather than static values, consolidating related alerts into single notifications, establishing clear severity levels with appropriate routing, and ruthlessly eliminating alerts that don't require immediate action. Every alert should answer "yes" to: Does this require human intervention now? Can this person do something about it? Is this more important than what they're currently doing?

What's the difference between monitoring and observability?

Monitoring traditionally involves collecting predefined metrics and setting thresholds to detect known failure modes. Observability emphasizes the ability to understand system behavior by examining outputs, particularly for debugging unknown problems. Monitoring asks "is this specific thing broken?" while observability asks "why is the system behaving this way?" In practice, effective API health management requires both: monitoring for known issues and observability capabilities for investigating novel problems.

How long should I retain monitoring data?

Retention requirements vary by use case. Keep high-resolution metrics (1-minute granularity) for 7-30 days to support incident investigation and recent performance analysis. Retain downsampled metrics (5-15 minute granularity) for 90 days to 1 year for trend analysis and capacity planning. Store long-term aggregated data (hourly or daily) indefinitely for historical comparison and compliance requirements. Logs typically need shorter retention—30-90 days for operational logs, though audit logs may require years of retention for compliance.

Should I build custom monitoring solutions or use commercial platforms?

This decision depends on your team's capabilities, budget, and requirements. Commercial platforms offer faster implementation, professional support, and integrated capabilities, making them ideal when time-to-value matters and budget permits ongoing costs. Custom solutions using open-source tools provide flexibility and cost advantages for teams with engineering resources to implement and maintain them. Many organizations adopt hybrid approaches—using commercial platforms for core monitoring while building custom solutions for specialized needs. Consider total cost of ownership including engineering time, not just licensing fees.

How do I monitor APIs that I don't control (third-party dependencies)?

Monitor third-party APIs from your perspective by tracking response times, error rates, and availability as your application experiences them. Implement synthetic monitoring that regularly tests critical third-party endpoints from your infrastructure. Track dependency health as part of your overall system monitoring, setting alerts for degraded third-party performance that impacts your service. Some third-party providers offer status pages or monitoring APIs—integrate these into your monitoring systems. Implement circuit breakers and fallback mechanisms that your monitoring can validate, ensuring your application degrades gracefully when dependencies fail.
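The circuit breaker mentioned above can be sketched in a few lines. The class name, the threshold of 3 failures, and the 30-second reset period are assumptions; production systems usually rely on an established resilience library rather than hand-rolled logic. The `state` property is what a monitoring system could export as a metric.

```python
import time

class CircuitBreaker:
    """Stops calling a failing dependency for a cooldown period."""

    def __init__(self, failure_threshold=3, reset_seconds=30.0, clock=time.monotonic):
        self.failure_threshold = failure_threshold
        self.reset_seconds = reset_seconds
        self._clock = clock
        self._failures = 0
        self._opened_at = None

    @property
    def state(self):
        if self._opened_at is None:
            return "closed"
        if self._clock() - self._opened_at >= self.reset_seconds:
            return "half-open"  # cooldown elapsed: allow one trial call
        return "open"

    def call(self, func, fallback):
        """Run func unless the circuit is open; use fallback on failure or when open."""
        if self.state == "open":
            return fallback()
        try:
            result = func()
        except Exception:
            self._failures += 1
            # Open on reaching the threshold, or reopen after a failed trial call.
            if self._failures >= self.failure_threshold or self._opened_at is not None:
                self._opened_at = self._clock()
            return fallback()
        self._failures = 0
        self._opened_at = None
        return result
```

A typical use wraps each third-party request, for example `breaker.call(fetch_rates, lambda: cached_rates)`, where both names are placeholders for your own client call and fallback value. Alerting on time spent in the open state gives a direct view of how often the dependency is degrading your service.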