How to Set Up Continuous Testing in DevOps

Diagram showing continuous testing in DevOps: automated unit, integration, and E2E tests in CI/CD pipeline; test environments, test data management, monitoring, and feedback loops.

In today's fast-paced software development landscape, the ability to deliver quality applications at speed has become a critical competitive advantage. Organizations that fail to implement robust testing practices throughout their development pipeline face increased risks of production failures, security vulnerabilities, and dissatisfied users. The traditional approach of testing only at the end of development cycles simply cannot keep pace with the demands of modern software delivery, where updates and new features are deployed multiple times per day rather than once per quarter.

Continuous testing represents a fundamental shift in how quality assurance integrates with software development and operations. Rather than treating testing as a separate phase that happens after development, continuous testing embeds quality checks throughout the entire DevOps pipeline. This approach ensures that every code change is automatically validated against multiple quality criteria, from unit tests to security scans to performance benchmarks. By catching issues earlier in the development process, teams can reduce costs, accelerate delivery, and maintain higher standards of quality.

Throughout this comprehensive guide, you'll discover the practical steps needed to establish continuous testing within your DevOps environment. We'll explore the essential tools and technologies that power automated testing, examine best practices for designing effective test strategies, and provide actionable frameworks for implementing continuous testing regardless of your current development maturity level. Whether you're just beginning your DevOps journey or looking to optimize an existing pipeline, you'll find concrete guidance for building a testing infrastructure that supports rapid, reliable software delivery.

Understanding the Foundation of Continuous Testing

Before diving into implementation details, it's essential to grasp what continuous testing truly means in the context of DevOps. Continuous testing goes far beyond simply automating existing manual tests. It represents a comprehensive approach to quality assurance that integrates testing activities into every stage of the software delivery lifecycle. From the moment a developer commits code to a repository until that code runs in production, continuous testing provides ongoing feedback about the quality, security, and performance of the application.

The philosophy behind continuous testing aligns perfectly with DevOps principles of collaboration, automation, and rapid feedback. Traditional testing models created bottlenecks where development teams would "throw code over the wall" to QA teams, who would then spend days or weeks validating functionality. This sequential approach introduced delays, created finger-pointing when issues arose, and ultimately slowed down the entire delivery process. Continuous testing eliminates these silos by making quality everyone's responsibility and providing immediate feedback on every change.

"The shift to continuous testing isn't just about running tests more frequently—it's about fundamentally rethinking how we approach quality in software development."

At its core, continuous testing relies on several key principles that differentiate it from traditional testing approaches. First, testing must be automated and repeatable, ensuring that the same tests can be executed consistently across different environments and at any time. Second, tests should provide fast feedback, ideally within minutes of a code change, so developers can address issues while the context is still fresh. Third, testing must be comprehensive, covering not just functional requirements but also security, performance, accessibility, and other quality attributes. Finally, continuous testing should be integrated into the pipeline, not bolted on as an afterthought.

The business value of continuous testing extends beyond technical benefits. Organizations that implement it successfully tend to spend less on defects. A bug caught minutes after a commit is fixed by the developer who just wrote the code. The same bug found in production may also require a hotfix, a rollback, and customer support. These teams also reach market faster, because automated testing removes bottlenecks that previously delayed releases. Perhaps most importantly, continuous testing lets teams deploy with confidence, knowing that multiple layers of automated checks have validated their changes before they reach customers.

The Testing Pyramid and Continuous Testing Strategy

One of the most important concepts in continuous testing is the testing pyramid, which provides a framework for thinking about the right balance of different test types. The pyramid suggests that you should have many fast, focused unit tests at the base, fewer integration tests in the middle, and even fewer end-to-end tests at the top. This distribution ensures that you catch most issues early with fast-running tests, while still maintaining confidence through higher-level tests that validate complete user workflows.

| Test Level | Purpose | Execution Speed | Coverage Scope | Recommended Proportion or Frequency |
|---|---|---|---|---|
| Unit Tests | Validate individual functions and methods in isolation | Milliseconds to seconds | Single function or class | 70-80% of total tests |
| Integration Tests | Verify interactions between components and services | Seconds to minutes | Multiple components or services | 15-20% of total tests |
| End-to-End Tests | Validate complete user workflows through the entire system | Minutes to hours | Entire application stack | 5-10% of total tests |
| Performance Tests | Assess system behavior under load and stress conditions | Minutes to hours | System-wide performance characteristics | Executed selectively based on changes |
| Security Tests | Identify vulnerabilities and security weaknesses | Minutes to hours | Application and infrastructure security | Continuous scanning with targeted deep tests |
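
As a rough illustration, you can check the proportions in the table against your suite's actual composition. The sketch below assumes you already have per-level counts, for example from your test runner's collection step. The ranges come from the table and are heuristics, not a standard.

```python
# Recommended share of each pyramid level (heuristic ranges from the table).
RECOMMENDED = {
    "unit": (0.70, 0.80),
    "integration": (0.15, 0.20),
    "e2e": (0.05, 0.10),
}


def pyramid_report(counts: dict[str, int]) -> dict[str, tuple[float, bool]]:
    """Return each level's share of the suite and whether it falls in range."""
    total = sum(counts.get(level, 0) for level in RECOMMENDED)
    if total == 0:
        raise ValueError("no tests counted")
    report = {}
    for level, (low, high) in RECOMMENDED.items():
        share = counts.get(level, 0) / total
        report[level] = (round(share, 3), low <= share <= high)
    return report


if __name__ == "__main__":
    # A hypothetical suite that leans too heavily on end-to-end tests.
    print(pyramid_report({"unit": 600, "integration": 200, "e2e": 200}))
```

Treat an out-of-range level as a prompt for discussion, not an automatic failure. A service that is mostly glue code may legitimately carry more integration tests.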

Applying the testing pyramid to continuous testing means strategically placing different test types at appropriate stages in your pipeline. Unit tests should run on every commit, providing immediate feedback to developers. Integration tests might run on every pull request or merge to the main branch. End-to-end tests could execute on deployment to staging environments. This layered approach ensures comprehensive coverage while maintaining fast feedback cycles for the most common changes.

Building Your Continuous Testing Infrastructure

Establishing the technical foundation for continuous testing requires careful selection and configuration of tools that will support your testing strategy. The infrastructure you build must be reliable, scalable, and integrated seamlessly with your existing DevOps toolchain. While the specific tools may vary based on your technology stack and organizational requirements, certain categories of tools are essential for any continuous testing implementation.

Essential Tool Categories for Continuous Testing

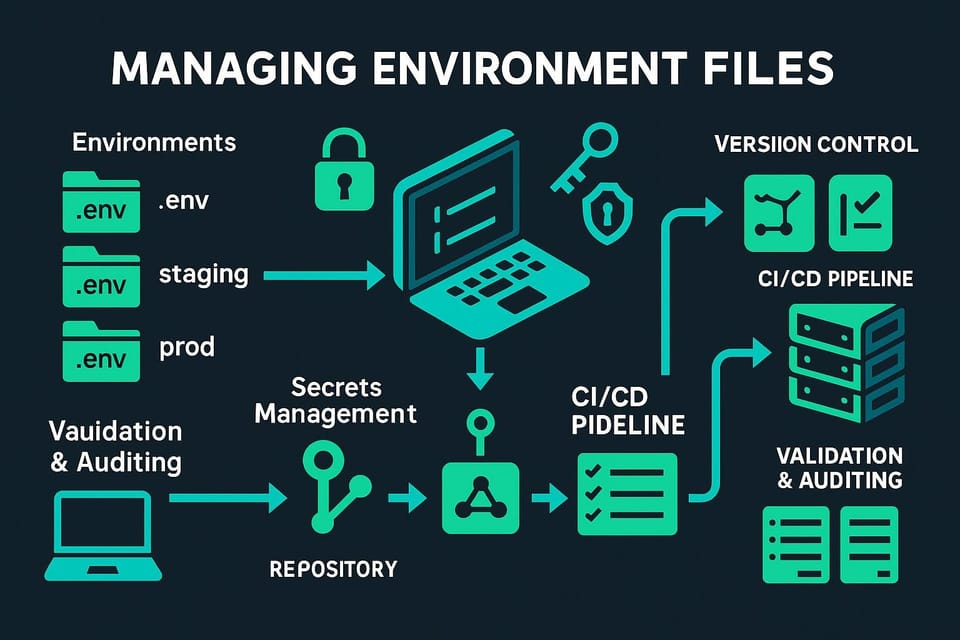

Version Control and CI/CD Platforms: Your continuous testing infrastructure begins with version control systems like Git, which track all code changes and trigger automated processes. Modern CI/CD platforms such as Jenkins, GitLab CI, GitHub Actions, CircleCI, or Azure DevOps serve as the orchestration layer that coordinates testing activities. These platforms detect code changes, provision testing environments, execute test suites, and report results back to development teams. Choosing a CI/CD platform that integrates well with your existing tools and supports your team's workflow is crucial for smooth operations.

Test Automation Frameworks: Different types of tests require different frameworks and tools. For unit testing, you'll use language-specific frameworks like JUnit for Java, pytest for Python, Jest for JavaScript, or NUnit for .NET. Integration testing might leverage tools like Postman or REST Assured for API testing, or Testcontainers for testing with real dependencies in isolated containers. End-to-end testing often employs browser automation tools such as Selenium, Playwright, or Cypress for web applications, or Appium for mobile applications. The key is selecting frameworks that align with your technology stack and provide the capabilities your tests require.
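
A minimal pytest-style unit test might look like the example below. `apply_discount` is a hypothetical function standing in for production code. By default, pytest collects functions whose names start with `test_` and reports plain `assert` failures, so this example needs no imports.

```python
def apply_discount(price: float, percent: float) -> float:
    """Hypothetical production function: apply a percentage discount."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)


def test_apply_discount_reduces_price_by_percentage():
    assert apply_discount(200.0, 25) == 150.0


def test_apply_discount_with_zero_percent_returns_original_price():
    assert apply_discount(99.99, 0) == 99.99


def test_apply_discount_rejects_percent_above_100():
    # In a real suite, `with pytest.raises(ValueError):` is the idiomatic form.
    try:
        apply_discount(10.0, 150)
    except ValueError:
        return
    raise AssertionError("expected ValueError")
```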

"The best testing infrastructure is the one that developers actually use—focus on making testing easy and fast, not on accumulating every possible tool."

Test Data Management: Effective continuous testing requires consistent, realistic test data that doesn't compromise security or privacy. Tools for test data management help you generate synthetic data, anonymize production data for testing purposes, or maintain reference datasets that tests can use reliably. Solutions like Delphix, Tonic.ai, or custom data generation scripts ensure that your tests have the data they need without creating dependencies on production systems or violating data privacy regulations.

Environment Management: Continuous testing demands the ability to quickly provision and tear down testing environments. Containerization technologies like Docker enable you to package applications with their dependencies, ensuring consistency across different testing stages. Container orchestration platforms such as Kubernetes can manage multiple testing environments simultaneously. Infrastructure-as-Code tools like Terraform or CloudFormation allow you to define testing environments as code, making them reproducible and version-controlled.

Configuring Your Pipeline for Continuous Testing

Once you've selected your tools, the next step involves configuring your CI/CD pipeline to execute tests at appropriate stages. A well-designed pipeline balances thoroughness with speed, ensuring that fast tests run early and provide quick feedback, while more comprehensive tests run later in the pipeline. The pipeline should also include mechanisms for parallel execution, allowing multiple test suites to run simultaneously and reducing overall execution time.

A typical continuous testing pipeline might include the following stages:

- 🔍 Code Commit Stage: When developers commit code, the pipeline immediately triggers static code analysis and linting to catch obvious issues like syntax errors, code style violations, or common security vulnerabilities. These checks run in seconds and prevent clearly problematic code from progressing further.

- ⚡ Build and Unit Test Stage: The pipeline compiles the code (if necessary) and executes the full suite of unit tests. Since unit tests should be fast and numerous, this stage typically completes within a few minutes. Any failures at this stage indicate that the code change has broken existing functionality at the component level.

- 🔗 Integration Test Stage: After successful unit testing, the pipeline deploys the application to a test environment and runs integration tests that verify interactions between components, database operations, API contracts, and external service integrations. This stage might take 10-30 minutes depending on the complexity of your application.

- 🌐 End-to-End Test Stage: For changes that pass integration testing, the pipeline executes a subset of critical end-to-end tests that validate key user workflows through the entire application stack. These tests ensure that the application functions correctly from the user's perspective.

- 🛡️ Security and Performance Test Stage: Depending on the nature of the code change, the pipeline may trigger security scans, dependency vulnerability checks, or performance tests. These tests might not run on every commit but could be scheduled regularly or triggered for specific types of changes.
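
You can model this stage ordering as a fail-fast runner. Each stage runs only if every earlier stage passed, so cheap checks gate expensive ones. The stage names mirror the list above. The callables are hypothetical placeholders for whatever commands your CI platform actually runs.

```python
from typing import Callable

Stage = tuple[str, Callable[[], bool]]


def run_pipeline(stages: list[Stage]) -> tuple[bool, list[str]]:
    """Run stages in order, stopping at the first failure.

    Returns (overall_success, names_of_stages_that_ran).
    """
    ran = []
    for name, run_stage in stages:
        ran.append(name)
        if not run_stage():
            print(f"Stage '{name}' failed; skipping remaining stages")
            return False, ran
    return True, ran


if __name__ == "__main__":
    # Placeholder stages; in practice each would invoke a linter, test runner, etc.
    pipeline = [
        ("static-analysis", lambda: True),
        ("build-and-unit-tests", lambda: True),
        ("integration-tests", lambda: False),  # simulate a failing stage
        ("end-to-end-tests", lambda: True),
    ]
    print(run_pipeline(pipeline))
```

CI platforms express the same idea declaratively through job dependencies. Read this as a model of the behavior rather than a replacement for your pipeline configuration.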

The pipeline configuration should also include clear failure handling and notification mechanisms. When tests fail, the pipeline should immediately notify the relevant developers through their preferred channels—whether that's Slack, email, or integration with project management tools. The notification should include enough context for developers to quickly understand what failed and why, ideally linking directly to test results, logs, and the specific code changes that triggered the failure.
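
A notification is most useful when it carries the context described above. The sketch below builds a message from a failure record. The `FailureReport` fields and URLs are hypothetical. Slack incoming webhooks accept a JSON body with a `text` field, so `post_to_webhook` assumes that payload shape. Other channels will need a different payload.

```python
import json
import urllib.request
from dataclasses import dataclass


@dataclass
class FailureReport:
    test_name: str
    error_message: str
    commit_sha: str
    author: str
    log_url: str   # hypothetical link to the CI job log
    diff_url: str  # hypothetical link to the triggering change


def build_failure_message(failure: FailureReport) -> dict:
    """Build a webhook payload with enough context to start debugging."""
    text = (
        f"Test failed: {failure.test_name}\n"
        f"Error: {failure.error_message}\n"
        f"Commit {failure.commit_sha[:8]} by {failure.author}\n"
        f"Logs: {failure.log_url}\n"
        f"Change: {failure.diff_url}"
    )
    return {"text": text}


def post_to_webhook(webhook_url: str, payload: dict) -> int:
    """POST the payload as JSON and return the HTTP status code."""
    request = urllib.request.Request(
        webhook_url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=10) as response:
        return response.status
```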

Implementing Test Automation Best Practices

Having the right tools and infrastructure is only part of the equation—how you write and maintain your automated tests determines whether your continuous testing implementation succeeds or becomes a burden. Poorly designed tests can be brittle, slow, and difficult to maintain, ultimately undermining confidence in the testing process. Following established best practices ensures that your automated tests provide reliable feedback while remaining maintainable as your application evolves.

Writing Effective Automated Tests

Effective automated tests share several common characteristics that make them valuable assets rather than maintenance liabilities. First, tests should be independent and isolated, meaning each test can run in any order without depending on the state created by other tests. This independence enables parallel execution and makes it easier to diagnose failures, since you don't need to trace through multiple tests to understand why one failed.
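
One way to keep tests independent is to build fresh state inside each test. The alternative, a shared module-level object, is one that earlier tests may have mutated. pytest fixtures are the idiomatic tool for this. The sketch below uses a plain factory function (`make_cart`, hypothetical) so it runs without pytest installed, and the tests pass in any order.

```python
class ShoppingCart:
    """Hypothetical class under test."""

    def __init__(self):
        self.items: dict[str, int] = {}

    def add(self, sku: str, quantity: int = 1) -> None:
        self.items[sku] = self.items.get(sku, 0) + quantity

    def total_quantity(self) -> int:
        return sum(self.items.values())


def make_cart() -> ShoppingCart:
    # Each call returns a brand-new cart, so no test sees another test's changes.
    return ShoppingCart()


def test_new_cart_is_empty():
    cart = make_cart()
    assert cart.total_quantity() == 0


def test_adding_same_sku_twice_accumulates_quantity():
    cart = make_cart()
    cart.add("SKU-1")
    cart.add("SKU-1", 2)
    assert cart.total_quantity() == 3
```

With pytest, you would usually write a `@pytest.fixture` named `cart` that tests receive as a parameter. Fixtures default to function scope, so pytest creates a new cart for every test.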

Tests should also be deterministic and reliable, producing the same result every time they run against the same code. Flaky tests—those that sometimes pass and sometimes fail without any code changes—erode confidence in your testing suite and waste developer time investigating false positives. Common causes of flakiness include timing issues, dependencies on external services, improper test isolation, and reliance on random data. Addressing these issues requires techniques like using test doubles (mocks, stubs, fakes) for external dependencies, implementing proper wait strategies instead of hard-coded delays, and using fixed seeds for random data generation.
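
Two of these techniques are small enough to show directly. The first polls for a condition with a timeout instead of sleeping for a fixed time. The second seeds random data so you can reproduce a failing run. `wait_until` is a hypothetical helper, not part of any particular framework. Browser tools such as Playwright ship their own waiting mechanisms.

```python
import random
import time
from typing import Callable


def wait_until(condition: Callable[[], bool], timeout: float = 5.0, interval: float = 0.05) -> None:
    """Poll `condition` until it returns True or `timeout` seconds elapse."""
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        if condition():
            return
        time.sleep(interval)
    # One last check so a condition that became true at the deadline is not missed.
    if not condition():
        raise TimeoutError(f"condition not met within {timeout} s")


def random_order_ids(count: int, seed: int = 1234) -> list[int]:
    """Generate 'random' IDs from a fixed seed so every run sees the same data."""
    rng = random.Random(seed)  # private generator; does not touch global random state
    return [rng.randint(1, 10_000) for _ in range(count)]


if __name__ == "__main__":
    started = time.monotonic()
    # Simulates an asynchronous job that finishes after roughly 0.2 s.
    wait_until(lambda: time.monotonic() - started > 0.2, timeout=2.0)
    print(random_order_ids(3) == random_order_ids(3))
```

Unlike a hard-coded `sleep(2)`, the polling wait returns as soon as the condition holds. It only fails after the full timeout, so it is both faster and less timing-sensitive.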

"A test that fails intermittently is worse than no test at all—it trains your team to ignore test failures, which defeats the entire purpose of automated testing."

Another critical principle is keeping tests focused and readable. Each test should verify one specific behavior or requirement, making it immediately clear what functionality broke when the test fails. Test names should clearly describe what they're testing, following patterns like "should_return_error_when_invalid_email_provided" rather than generic names like "test1" or "testUserCreation". The test code itself should read almost like documentation, making it easy for any team member to understand what's being tested and why.
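
For instance, tests for a hypothetical `validate_email` function might each check one behavior and say so in their names. The validation rule here is deliberately simplistic and for illustration only. It is not a complete email check.

```python
import re
from typing import Optional

# Deliberately simple pattern for illustration; real email validation is more involved.
_EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def validate_email(address: str) -> Optional[str]:
    """Return an error message, or None if the address looks valid."""
    if not address:
        return "email is required"
    if not _EMAIL_PATTERN.match(address):
        return "email is invalid"
    return None


def test_should_return_error_when_email_is_empty():
    assert validate_email("") == "email is required"


def test_should_return_error_when_invalid_email_provided():
    assert validate_email("not-an-email") == "email is invalid"


def test_should_accept_well_formed_email():
    assert validate_email("dev@example.com") is None
```

If `test_should_return_error_when_invalid_email_provided` fails in CI, the name alone tells the reader which behavior regressed.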

Maintaining Test Suites Over Time

As your application grows and evolves, your test suite will naturally expand. Without active maintenance, test suites can become slow, redundant, or outdated. Regularly reviewing and refactoring your tests ensures they continue to provide value without becoming a burden. This includes removing tests that no longer serve a purpose, consolidating redundant tests that verify the same behavior, and updating tests to reflect changes in application behavior or architecture.

Test execution time is a critical factor in continuous testing effectiveness. If your test suite takes hours to run, developers won't get timely feedback, and the pipeline becomes a bottleneck rather than an enabler. Strategies for managing test execution time include:

- 📊 Profiling and optimizing slow tests: Identify tests that take disproportionately long to execute and investigate whether they can be optimized, perhaps by reducing setup complexity or using test doubles instead of real dependencies.

- ⚖️ Implementing test categorization: Tag tests by their execution speed and importance, allowing you to run different test subsets at different pipeline stages. Critical fast tests run on every commit, while comprehensive but slower tests run nightly or on specific branches.

- 🔄 Leveraging parallel execution: Configure your CI/CD platform to run tests in parallel across multiple workers, dramatically reducing total execution time for large test suites.

- 🎯 Using smart test selection: Advanced CI/CD systems can analyze code changes and determine which tests are most likely to be affected, running only relevant tests for each commit rather than the entire suite.
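
The last two strategies can be sketched together. Test selection here relies on a hand-maintained mapping from source modules to the test files that cover them. That mapping is an assumption; selection tools typically derive it from coverage data or dependency graphs. Sharding then splits the selected tests across parallel workers using a stable hash, so each test always lands on the same worker.

```python
import zlib

# Hypothetical mapping from source modules to the test files that exercise them.
TEST_MAP = {
    "app/billing.py": ["tests/test_billing.py", "tests/test_invoices.py"],
    "app/users.py": ["tests/test_users.py"],
    "app/utils.py": ["tests/test_billing.py", "tests/test_users.py"],
}
ALL_TESTS = sorted({t for tests in TEST_MAP.values() for t in tests})


def select_tests(changed_files: list[str]) -> list[str]:
    """Pick tests affected by the change; fall back to everything if unsure."""
    selected = set()
    for path in changed_files:
        if path.startswith("tests/"):
            selected.add(path)  # a changed test file always runs
        elif path in TEST_MAP:
            selected.update(TEST_MAP[path])
        else:
            return ALL_TESTS  # unknown file: run the full suite to stay safe
    return sorted(selected)


def shard(tests: list[str], shard_index: int, shard_count: int) -> list[str]:
    """Return the tests assigned to one worker.

    crc32 is used instead of hash() because Python randomizes str hashes per
    process, which would give each worker a different assignment.
    """
    return [t for t in tests if zlib.crc32(t.encode()) % shard_count == shard_index]
```

Hash-based sharding is simple but can produce uneven shards. Many CI setups instead balance shards using historical test durations.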

Documentation and knowledge sharing are also essential for sustainable test maintenance. Your team should maintain clear documentation about testing standards, patterns to follow, and common pitfalls to avoid. Code reviews should include scrutiny of test code, not just production code, ensuring that tests meet quality standards. Regular team discussions about testing challenges and solutions help spread knowledge and improve overall testing practices.

Integrating Different Types of Testing

While unit and integration tests form the foundation of continuous testing, a comprehensive strategy must incorporate additional testing types that address different quality attributes. Each testing type serves a specific purpose and requires different tools, techniques, and integration points within your DevOps pipeline.

Security Testing Integration

Security testing in continuous delivery environments—often called DevSecOps—embeds security checks throughout the development pipeline rather than treating security as a final gate before release. This approach catches vulnerabilities early when they're cheaper and easier to fix. Security testing includes multiple components: static application security testing (SAST) analyzes source code for security vulnerabilities, dynamic application security testing (DAST) tests running applications for vulnerabilities, and software composition analysis (SCA) identifies security issues in third-party dependencies.

Tools like SonarQube, Checkmarx, or Snyk can integrate directly into your CI/CD pipeline, automatically scanning code on every commit or pull request. These tools should be configured to fail the build when critical security vulnerabilities are detected, preventing insecure code from progressing through the pipeline. However, it's important to tune security tools appropriately—overly aggressive settings that flag every minor issue can lead to alert fatigue, where developers begin ignoring security warnings.
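
Failing the build on critical findings usually comes down to a small gate step after the scanner runs. The report format below is hypothetical, since each real tool has its own JSON schema. The thresholds are illustrative: zero critical findings and a small tolerance for high-severity ones. Lower severities are counted but not blocking, which is one way to limit the alert fatigue described above.

```python
import json
from collections import Counter

# Maximum findings allowed per severity before the build fails (illustrative values).
THRESHOLDS = {"critical": 0, "high": 3}


def evaluate_scan(report_json: str, thresholds: dict[str, int] = THRESHOLDS) -> tuple[bool, Counter]:
    """Count findings by severity and decide whether the build may proceed.

    Assumes a hypothetical report shaped like:
    {"findings": [{"id": "...", "severity": "high"}, ...]}
    """
    findings = json.loads(report_json).get("findings", [])
    counts = Counter(f["severity"].lower() for f in findings)
    passed = all(counts[sev] <= limit for sev, limit in thresholds.items())
    return passed, counts


def gate_exit_code(report_json: str) -> int:
    """Exit code for the CI step: 0 lets the pipeline continue, 1 fails it."""
    passed, counts = evaluate_scan(report_json)
    for severity, count in sorted(counts.items()):
        print(f"{severity}: {count}")
    return 0 if passed else 1
```

In a pipeline step, you would read the scanner's output file and call `sys.exit(gate_exit_code(report_text))`. Most CI platforms treat a non-zero exit status as a failed step.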

Performance and Load Testing

Performance testing validates that your application meets response time, throughput, and resource utilization requirements under various load conditions. Unlike functional tests that verify what the application does, performance tests verify how well it does it. Integrating performance testing into continuous delivery requires careful planning, since performance tests typically require more resources and time than functional tests.

"Performance testing isn't just about finding the breaking point—it's about understanding how your system behaves under realistic conditions and catching performance degradations before they reach production."

Performance testing strategies in continuous delivery often include several approaches. Smoke performance tests run on every build, executing a small number of virtual users through critical workflows to catch obvious performance regressions. These lightweight tests complete quickly enough to fit within the standard pipeline. More comprehensive load tests that simulate realistic user volumes might run nightly or on-demand, providing deeper insights into system behavior under stress. Tools like JMeter, Gatling, or k6 can automate performance testing, while application performance monitoring (APM) solutions like New Relic or Datadog provide ongoing performance visibility in production.
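
A smoke performance check can be as small as timing a critical operation a few dozen times and comparing a high percentile against a budget. This sketch is not a substitute for JMeter, Gatling, or k6:

- `checkout_total` is a hypothetical stand-in for a critical code path.
- The 50 ms budget is arbitrary.
- Timings on shared CI runners are noisy, so budgets should be generous and compared against a trend rather than a single run.

```python
import math
import time
from typing import Callable


def percentile(samples: list[float], pct: float) -> float:
    """Nearest-rank percentile (pct in 0-100) of the samples."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def smoke_latency_check(
    operation: Callable[[], object], runs: int = 50, p95_budget_s: float = 0.050
) -> tuple[bool, float]:
    """Time `operation` repeatedly and compare its p95 latency to a budget."""
    durations = []
    for _ in range(runs):
        start = time.perf_counter()
        operation()
        durations.append(time.perf_counter() - start)
    p95 = percentile(durations, 95)
    return p95 <= p95_budget_s, p95


def checkout_total() -> float:
    # Hypothetical critical path under test.
    return sum(i * 0.99 for i in range(1_000))
```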

Accessibility and Usability Testing

Ensuring your application is accessible to users with disabilities is a legal requirement in many jurisdictions, but it is also a quality attribute that benefits all users. Automated accessibility testing tools like axe-core, Pa11y, or Lighthouse can integrate into your CI/CD pipeline, scanning your application for common accessibility violations such as missing alt text, poor color contrast, or improper heading hierarchy. While automated tools can't catch all accessibility issues, they provide a baseline level of compliance checking that complements manual accessibility testing.
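
To make the idea concrete, here is one such rule, images without an `alt` attribute, implemented with Python's standard `html.parser`. It only illustrates what an automated check does. axe-core, Pa11y, and Lighthouse cover many more rules and evaluate the rendered page rather than raw markup.

```python
from html.parser import HTMLParser


class MissingAltChecker(HTMLParser):
    """Collect <img> tags that have no alt attribute at all.

    An empty alt="" is allowed, since that is how decorative images are marked.
    """

    def __init__(self):
        super().__init__()
        self.violations: list[str] = []

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "img" and "alt" not in attributes:
            src = attributes.get("src", "<no src>")
            line, _ = self.getpos()
            self.violations.append(f"line {line}: <img src={src!r}> is missing alt text")


def find_missing_alt(html: str) -> list[str]:
    checker = MissingAltChecker()
    checker.feed(html)
    checker.close()
    return checker.violations
```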

Usability testing, which evaluates how easily users can accomplish tasks in your application, is more challenging to automate but can still be incorporated into continuous testing through synthetic monitoring and user journey testing. These tests simulate real user workflows and measure completion rates, error rates, and task completion times, alerting teams when usability metrics degrade.

| Testing Type | Key Tools | Pipeline Integration Point | Execution Frequency | Primary Purpose |
|---|---|---|---|---|
| Static Security Analysis | SonarQube, Checkmarx, Fortify | Pre-commit or commit stage | Every commit | Identify code-level security vulnerabilities |
| Dependency Scanning | Snyk, WhiteSource, OWASP Dependency-Check | Build stage | Every build | Detect vulnerable third-party libraries |
| Dynamic Security Testing | OWASP ZAP, Burp Suite, Acunetix | Staging deployment | Daily or on-demand | Find runtime security vulnerabilities |
| Performance Testing | JMeter, Gatling, k6, Locust | Staging deployment | Nightly or on-demand | Validate performance requirements |
| Accessibility Testing | axe-core, Pa11y, Lighthouse | Integration test stage | Every merge to main branch | Ensure accessibility compliance |

Creating a Test Data Strategy

Test data represents one of the most challenging aspects of continuous testing, yet it's fundamental to test reliability and effectiveness. Tests need realistic data that reflects production scenarios, but using actual production data raises security, privacy, and compliance concerns. Additionally, tests require data in specific states to validate different scenarios, and managing this data across multiple testing environments and parallel test executions adds complexity.

A comprehensive test data strategy addresses several key concerns. First, it defines where test data comes from—whether generated synthetically, copied from production with appropriate anonymization, or maintained as reference datasets. Second, it establishes how test data is provisioned to testing environments, ensuring tests have access to the data they need without creating dependencies that slow down execution. Third, it addresses data cleanup and reset, ensuring that tests leave environments in a clean state for subsequent tests.

Approaches to Test Data Management

Synthetic Data Generation: Creating artificial data that resembles real data offers several advantages. Synthetic data doesn't contain sensitive information, can be generated on-demand, and can be tailored to specific test scenarios. Libraries like Faker (available in multiple languages), Bogus, or custom data generators can create realistic names, addresses, email addresses, and other common data types. For domain-specific data, you might need to develop custom generators that understand your business rules and data relationships.
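
Where Faker isn't available or isn't wanted, a seeded generator built on the standard library provides the two properties that matter for tests. The data contains no real personal information, and the output is reproducible. The name lists are illustrative. The `example.test` domain is a deliberate choice: `.test` is a top-level domain reserved for testing, so generated addresses can never reach a real inbox.

```python
import random
from dataclasses import dataclass

FIRST_NAMES = ["Ana", "Bo", "Chen", "Dara", "Eli", "Fatima", "Goran", "Hana"]
LAST_NAMES = ["Ito", "Jones", "Khan", "Lopez", "Meyer", "Nowak", "Okafor", "Perez"]


@dataclass(frozen=True)
class SyntheticUser:
    user_id: int
    name: str
    email: str
    age: int


def generate_users(count: int, seed: int = 42) -> list[SyntheticUser]:
    """Generate reproducible fake users; the same seed always yields the same list."""
    rng = random.Random(seed)
    users = []
    for user_id in range(1, count + 1):
        first, last = rng.choice(FIRST_NAMES), rng.choice(LAST_NAMES)
        users.append(
            SyntheticUser(
                user_id=user_id,
                name=f"{first} {last}",
                # Including user_id keeps emails unique even when names repeat.
                email=f"{first}.{last}.{user_id}@example.test".lower(),
                age=rng.randint(18, 90),
            )
        )
    return users
```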

Production Data Anonymization: When synthetic data isn't sufficient—perhaps because you need to test with realistic data distributions or complex relationships—anonymizing production data provides an alternative. Data masking techniques replace sensitive information with realistic but fake values, while preserving data structure and relationships. Tools like Delphix, Tonic.ai, or custom anonymization scripts can automate this process, but you must ensure that anonymization is truly irreversible and complies with data privacy regulations like GDPR or CCPA.
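
A common masking building block is deterministic pseudonymization. The same real value always maps to the same token, so joins and foreign-key relationships survive, but the token can't be reversed without the secret key. Keyed hashing is pseudonymization, though, and GDPR still treats pseudonymized data as personal data while the key exists. Use it as one step in an anonymization process, not as a compliance guarantee. Key handling is simplified here for illustration.

```python
import hashlib
import hmac


def pseudonymize(value: str, key: bytes, prefix: str = "user") -> str:
    """Map a sensitive value to a stable, non-reversible token using HMAC-SHA256.

    Values are trimmed and lower-cased first so trivially different spellings
    map to the same token. 16 hex chars (64 bits) is ample for test datasets.
    """
    normalized = value.strip().lower().encode("utf-8")
    digest = hmac.new(key, normalized, hashlib.sha256).hexdigest()
    return f"{prefix}_{digest[:16]}"


def mask_rows(
    rows: list[dict], key: bytes, sensitive_fields: tuple[str, ...] = ("email", "name")
) -> list[dict]:
    """Return copies of rows with sensitive fields replaced by tokens."""
    masked = []
    for row in rows:
        copy = dict(row)  # never mutate the source rows
        for field in sensitive_fields:
            if copy.get(field) is not None:
                copy[field] = pseudonymize(str(copy[field]), key, prefix=field)
        masked.append(copy)
    return masked
```

In practice, the key would come from a secrets manager and never be stored alongside the masked data.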

"The best test data strategy is one that gives your tests the data they need to be effective while protecting privacy and making it easy for developers to run tests anywhere."

Test Data as Code: Treating test data as code—storing it in version control alongside your tests—provides several benefits. Data becomes versioned, reviewable, and reproducible. Changes to test data go through the same review process as code changes. This approach works well for reference datasets that don't change frequently, such as lookup tables, configuration data, or small sets of test entities. However, it's less suitable for large datasets or data that needs to vary across test runs.

Managing Test Data in Continuous Testing Pipelines

In continuous testing environments, test data management must be automated and integrated into the pipeline. Before tests execute, the pipeline should provision fresh test data, ensuring that each test run starts with a known, consistent state. After tests complete, the pipeline should clean up test data, preventing accumulation of stale data in testing environments.

Containerization technologies offer elegant solutions for test data management. You can create Docker images that include pre-populated databases with test data, allowing tests to quickly spin up isolated database instances. Database migration tools like Flyway or Liquibase can automatically set up database schemas and seed data as part of test initialization. For more complex scenarios, tools like Testcontainers provide APIs for programmatically managing containerized dependencies, including databases with specific data states.
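
Migration tools like Flyway apply versioned scripts in order and record which ones have already run. The sketch below models that idea with SQLite from Python's standard library, so a test can build a fresh, seeded schema in memory. It is not Flyway or Liquibase, and the migration contents are hypothetical.

```python
import sqlite3

# Hypothetical versioned migrations, applied in ascending version order.
MIGRATIONS = {
    1: "CREATE TABLE users (id INTEGER PRIMARY KEY, email TEXT NOT NULL UNIQUE)",
    2: "ALTER TABLE users ADD COLUMN plan TEXT NOT NULL DEFAULT 'free'",
    3: "INSERT INTO users (email, plan) VALUES ('seed@example.test', 'pro')",  # seed data
}


def migrate(conn: sqlite3.Connection) -> list[int]:
    """Apply pending migrations and return the versions applied in this call.

    Expects a connection opened with isolation_level=None, so each migration
    and its history row are committed (or rolled back) together explicitly.
    """
    conn.execute("CREATE TABLE IF NOT EXISTS schema_history (version INTEGER PRIMARY KEY)")
    applied = {row[0] for row in conn.execute("SELECT version FROM schema_history")}
    newly_applied = []
    for version in sorted(MIGRATIONS):
        if version in applied:
            continue
        conn.execute("BEGIN")
        try:
            conn.execute(MIGRATIONS[version])
            conn.execute("INSERT INTO schema_history (version) VALUES (?)", (version,))
            conn.execute("COMMIT")
        except Exception:
            conn.execute("ROLLBACK")
            raise
        newly_applied.append(version)
    return newly_applied
```

Running `migrate` a second time applies nothing, which is what makes it safe to call at the start of every test session.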

Test data management also intersects with test isolation. When tests run in parallel, they must not interfere with each other's data. Strategies for achieving this include giving each test its own isolated data scope (perhaps by using unique identifiers or separate database schemas), implementing database rollback mechanisms that undo changes after each test, or using copy-on-write techniques that allow tests to share read-only data while maintaining separate writable copies.
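
The rollback approach can be sketched with SQLite. Each test runs inside a transaction that is always rolled back, so whatever the test inserts disappears before the next test starts. The `isolated_transaction` helper and the `orders` table are hypothetical. Django's `TestCase` applies the same idea automatically by wrapping each test in a transaction.

```python
import sqlite3
from contextlib import contextmanager


@contextmanager
def isolated_transaction(conn: sqlite3.Connection):
    """Run a block in a transaction that is always rolled back.

    Expects a connection opened with isolation_level=None, so transactions
    are controlled explicitly with BEGIN/ROLLBACK.
    """
    conn.execute("BEGIN")
    try:
        yield conn
    finally:
        conn.execute("ROLLBACK")  # runs even if the test body raises


def make_shared_db() -> sqlite3.Connection:
    conn = sqlite3.connect(":memory:", isolation_level=None)
    conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer TEXT, total REAL)")
    conn.execute("INSERT INTO orders (customer, total) VALUES ('reference-customer', 10.0)")
    return conn


def count_orders(conn: sqlite3.Connection) -> int:
    return conn.execute("SELECT COUNT(*) FROM orders").fetchone()[0]
```

A test would wrap its body in `with isolated_transaction(db) as conn:`. Writes are visible inside the block and gone afterwards, while the shared reference row survives.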

Monitoring and Analyzing Test Results

Collecting test results is only valuable if you can effectively analyze them and act on insights they provide. In continuous testing environments where hundreds or thousands of tests run multiple times per day, manual review of test results becomes impractical. You need automated systems for aggregating, analyzing, and reporting test results, along with clear processes for responding to failures and tracking quality trends over time.

Test Reporting and Visualization

Modern CI/CD platforms provide built-in test reporting capabilities, but you may want to aggregate results across multiple projects or provide custom visualizations for stakeholders. Tools like Allure, ReportPortal, or custom dashboards built with Grafana can consolidate test results from various sources, providing a unified view of testing status across your organization. These dashboards should answer key questions at a glance: What's our current test pass rate? Which tests are failing most frequently? How has test execution time trended over the past month?

Effective test reporting goes beyond simple pass/fail metrics. It should provide context that helps teams understand quality trends and make informed decisions. Useful metrics include test coverage (what percentage of code is exercised by tests), test effectiveness (how often tests catch real bugs), test stability (how often tests produce inconsistent results), and test execution efficiency (how long tests take to run and whether this is improving or degrading over time).
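
Test stability in particular can be computed from run history. One common signal is a test that both passed and failed on the same commit. Because the code didn't change, the inconsistency points to flakiness rather than a regression. The sketch below assumes your CI stores (test, commit, outcome) records.

```python
from collections import defaultdict

Run = tuple[str, str, bool]  # (test name, commit SHA, passed?)


def find_flaky_tests(runs: list[Run]) -> dict[str, float]:
    """Return flaky tests with their pass rate across all recorded runs.

    A test is flagged when it produced both outcomes for the same commit.
    """
    outcomes_by_commit = defaultdict(set)      # (test, commit) -> {True, False}
    pass_counts = defaultdict(lambda: [0, 0])  # test -> [passes, total runs]
    for test, commit, passed in runs:
        outcomes_by_commit[(test, commit)].add(passed)
        pass_counts[test][0] += int(passed)
        pass_counts[test][1] += 1

    flaky = {test for (test, _), outcomes in outcomes_by_commit.items() if len(outcomes) == 2}
    return {test: pass_counts[test][0] / pass_counts[test][1] for test in sorted(flaky)}
```

A test that failed on one commit and passed on the next is not flagged, because that pattern is what a real regression followed by a fix looks like.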

Handling Test Failures

When tests fail, your process should ensure that failures are investigated promptly and that the root cause is addressed. This requires clear ownership—someone must be responsible for investigating each failure. In some organizations, the developer who made the code change that caused the failure investigates. In others, a rotating role reviews all failures each day. Whatever approach you choose, failures shouldn't languish unaddressed, as this erodes confidence in the testing process.

"The way your team responds to test failures reveals whether you truly value automated testing—if failures are routinely ignored or bypassed, your testing investment is wasted."

Not all test failures indicate bugs in production code. Sometimes tests themselves have bugs, or they make incorrect assumptions about system behavior. When this happens, the test needs to be fixed or removed. Other times, tests fail due to environmental issues—network problems, resource constraints, or configuration errors. These failures require infrastructure improvements rather than code changes. Distinguishing between these different failure types is crucial for directing attention appropriately.

Test failure patterns can reveal systemic issues. If certain tests fail frequently, they might be flaky and need to be rewritten or removed. If failures cluster in particular areas of the codebase, those areas might need refactoring or additional testing. If test execution time suddenly increases, it might indicate performance regressions or infrastructure problems. Regularly reviewing these patterns helps you continuously improve your testing approach.

Quality Gates and Release Decisions

Quality gates define the criteria that must be met before code can progress to the next stage of the pipeline. A typical quality gate might require that all unit tests pass, code coverage exceeds a certain threshold, no critical security vulnerabilities exist, and no critical bugs remain open. Quality gates should be enforced automatically by the pipeline, preventing code that doesn't meet quality standards from advancing.

However, quality gates must be balanced with pragmatism. Overly strict gates can block necessary hotfixes or prevent deployment of valuable features due to minor issues. Most organizations implement different quality gates at different stages—stricter requirements for production deployments than for development environment deployments, for example. Some also provide override mechanisms that allow authorized personnel to bypass gates in exceptional circumstances, with appropriate logging and review.
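
A gate with stricter thresholds for production than for development, plus an audited override, might be modeled as follows. The metric names, per-stage thresholds, and the choice to check open critical bugs only before production are all hypothetical examples, not recommendations.

```python
import logging
from dataclasses import dataclass
from typing import Optional

log = logging.getLogger("quality_gate")  # route this to your audit sink


@dataclass
class BuildMetrics:
    unit_tests_failed: int
    coverage_percent: float
    critical_vulnerabilities: int
    open_critical_bugs: int


# Stricter requirements the closer a build gets to production (illustrative values).
MIN_COVERAGE_BY_STAGE = {"dev": 60.0, "staging": 75.0, "production": 80.0}


def check_gate(metrics: BuildMetrics, stage: str) -> list[str]:
    """Return the list of gate violations (empty means the gate passes)."""
    violations = []
    if metrics.unit_tests_failed:
        violations.append(f"{metrics.unit_tests_failed} unit test(s) failing")
    min_cov = MIN_COVERAGE_BY_STAGE[stage]
    if metrics.coverage_percent < min_cov:
        violations.append(f"coverage {metrics.coverage_percent}% below {min_cov}%")
    if metrics.critical_vulnerabilities:
        violations.append(f"{metrics.critical_vulnerabilities} critical vulnerability(ies)")
    if stage == "production" and metrics.open_critical_bugs:
        violations.append(f"{metrics.open_critical_bugs} open critical bug(s)")
    return violations


def may_promote(
    metrics: BuildMetrics, stage: str, override_by: Optional[str] = None, reason: str = ""
) -> bool:
    """Decide promotion; an override is allowed but always logged for review."""
    violations = check_gate(metrics, stage)
    if not violations:
        return True
    if override_by and reason:
        log.warning("Gate overridden for %s by %s (%s): %s",
                    stage, override_by, reason, "; ".join(violations))
        return True
    log.info("Gate blocked promotion to %s: %s", stage, "; ".join(violations))
    return False
```

Requiring both an approver and a reason before an override takes effect keeps the escape hatch available for hotfixes while leaving a trail for later review.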

Release decisions should be informed by testing results but not solely determined by them. Automated tests provide confidence, but they can't catch every possible issue. Combining automated testing with other practices—like feature flags that allow gradual rollout, canary deployments that expose changes to a small percentage of users first, and comprehensive monitoring that quickly detects production issues—creates a more robust approach to quality assurance than relying on testing alone.
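
Canary releases and percentage-based feature flags usually rely on stable bucketing. Each user is hashed into one of 100 buckets, and the feature is on for users whose bucket falls below the rollout percentage. Because the hash is deterministic, a given user stays in the same group as the percentage grows. The flag name and user IDs are hypothetical. Feature-flag services implement their own variants of this scheme.

```python
import hashlib


def rollout_bucket(flag_name: str, user_id: str) -> int:
    """Map a user to a stable bucket in 0..99 for a given flag."""
    # Including the flag name means different flags sample different users.
    digest = hashlib.sha256(f"{flag_name}:{user_id}".encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % 100


def is_enabled(flag_name: str, user_id: str, rollout_percent: int) -> bool:
    """True if the user falls inside the current rollout percentage."""
    return rollout_bucket(flag_name, user_id) < rollout_percent
```

Raising `rollout_percent` from 10 to 50 keeps the original 10% enabled and adds more users. Dropping it back to 0 turns the feature off for everyone, which is what makes gradual rollout and quick rollback predictable.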

Scaling Continuous Testing Across Teams

As organizations grow, continuous testing practices that worked for a small team may not scale effectively. Multiple teams working on different components or services need coordinated testing strategies that prevent conflicts while maintaining autonomy. Shared infrastructure must support concurrent test execution from many teams without becoming a bottleneck. Testing standards and practices need consistency across the organization while allowing for technology-specific variations.

Establishing Testing Standards and Governance

Organizational testing standards provide guidelines that ensure consistency and quality across teams. These standards might define minimum code coverage thresholds, required test types for different changes, naming conventions for tests, or patterns for structuring test code. Standards should be documented clearly and made easily accessible to all team members, perhaps through an internal wiki or documentation site.

However, standards shouldn't be so rigid that they stifle innovation or prevent teams from adopting better practices. The goal is to establish a baseline of quality while allowing teams to exceed it. Regular reviews of testing standards ensure they remain relevant as technologies and practices evolve. Feedback from teams helps identify standards that aren't working or areas where additional guidance would be valuable.

Governance mechanisms ensure that teams actually follow established standards. This might include automated policy enforcement through tools that check compliance with testing requirements, periodic audits of testing practices across teams, or incorporating testing quality into team performance metrics. The key is making compliance easier than non-compliance—if following standards requires extra effort or slows down development, teams will find workarounds.
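Automated policy enforcement can begin as a small script that CI runs on every change. The minimal sketch below assumes a `src/` and `tests/` layout in which `src/pkg/module.py` is tested by `tests/pkg/test_module.py`, plus a 75% coverage baseline. Both the convention and the threshold are illustrative assumptions, and `check_policy` is a hypothetical name.

```python
from pathlib import PurePosixPath

MIN_COVERAGE = 0.75  # hypothetical organizational baseline

def expected_test_path(src: str) -> str:
    """Map src/pkg/module.py to tests/pkg/test_module.py (assumed naming convention)."""
    rel = PurePosixPath(src).relative_to("src")
    return str(PurePosixPath("tests") / rel.parent / f"test_{rel.name}")

def check_policy(changed_files, repo_files, coverage):
    """Return a list of policy violations for a change; an empty list means compliant."""
    violations = []
    for f in changed_files:
        # Only Python sources under src/ need a matching test; package markers are exempt.
        if f.startswith("src/") and f.endswith(".py") and not f.endswith("__init__.py"):
            test = expected_test_path(f)
            if test not in repo_files:
                violations.append(f"{f}: missing {test}")
    if coverage < MIN_COVERAGE:
        violations.append(f"coverage {coverage:.1%} below {MIN_COVERAGE:.0%}")
    return violations
```

Because the check runs automatically and prints exactly what is missing, the compliant path is also the easiest one, which is the point made above.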

Managing Shared Testing Infrastructure

Shared testing infrastructure—CI/CD systems, test environments, test data repositories—must be reliable, performant, and fair to all teams. Infrastructure capacity should be planned based on projected usage, with monitoring to detect when resources become constrained. Resource allocation policies prevent any single team from monopolizing shared infrastructure, perhaps by implementing quotas or priority systems that ensure fair access.
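A per-team quota combined with round-robin admission is one simple way to keep a shared runner pool fair. The following is a minimal in-memory sketch. `QuotaScheduler` and its parameters are hypothetical, and a real CI system would enforce the same idea through its own concurrency or runner-group settings.

```python
from collections import deque

class QuotaScheduler:
    """Admit CI jobs to a shared runner pool without letting one team monopolize it.

    The pool runs at most `capacity` jobs; each team runs at most `team_quota`.
    Teams with waiting jobs are served round-robin, one job per team per round.
    """

    def __init__(self, capacity: int, team_quota: int):
        self.capacity = capacity
        self.team_quota = team_quota
        self.running: dict[str, int] = {}
        self.queues: dict[str, deque] = {}
        self.order: deque = deque()  # teams that currently have waiting jobs

    def submit(self, team: str, job: str) -> None:
        self.queues.setdefault(team, deque()).append(job)
        if team not in self.order:
            self.order.append(team)

    def schedule(self) -> list[tuple[str, str]]:
        """Start as many queued jobs as pool capacity and team quotas allow."""
        started = []
        total = sum(self.running.values())
        progress = True
        while total < self.capacity and progress:
            progress = False
            for _ in range(len(self.order)):
                team = self.order.popleft()
                queue = self.queues[team]
                if self.running.get(team, 0) < self.team_quota and total < self.capacity:
                    started.append((team, queue.popleft()))
                    self.running[team] = self.running.get(team, 0) + 1
                    total += 1
                    progress = True
                if queue:  # keep the team in rotation while it still has work
                    self.order.append(team)
        return started

    def finish(self, team: str) -> None:
        self.running[team] -= 1
```

In this sketch a team that submits many jobs gets no more than its quota at once, so a second team's single job starts immediately instead of waiting behind the backlog.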

Infrastructure-as-Code practices make it easier to scale testing infrastructure. Teams can provision their own testing environments from templates, reducing dependencies on central infrastructure teams. Container orchestration platforms can dynamically scale testing capacity based on demand. Cloud-based CI/CD services can add capacity on demand, but their usage-based pricing means that cost must be monitored and managed.

"Scaling testing isn't just about adding more infrastructure—it's about creating systems and processes that allow teams to move independently while maintaining quality standards."

Fostering a Testing Culture

Technical practices and tools enable continuous testing, but culture determines whether it succeeds. A strong testing culture values quality, treats testing as everyone's responsibility, and celebrates improvements in testing practices. Leaders model this culture by prioritizing testing work, allocating time for test maintenance, and recognizing team members who contribute to testing excellence.

Education and skill development support testing culture. Teams need training on testing tools, techniques, and best practices. Lunch-and-learn sessions where teams share testing innovations, internal conferences focused on quality practices, or bringing in external experts for workshops all contribute to building testing expertise across the organization. Pairing junior developers with experienced testers helps transfer knowledge and builds testing skills.

Communities of practice around testing bring together people interested in testing topics across team boundaries. These communities share knowledge, solve common problems, and drive improvements in testing practices organization-wide. They might maintain shared testing libraries, develop internal testing tools, or create training materials. By connecting people passionate about testing, communities of practice accelerate the spread of better practices and innovations.

Overcoming Common Continuous Testing Challenges

Even with careful planning and implementation, organizations encounter challenges when establishing continuous testing. Recognizing these common obstacles and having strategies to address them increases your chances of success. Many challenges stem from organizational dynamics rather than technical issues, requiring change management and communication as much as technical solutions.

Addressing Resistance to Testing

Developers sometimes resist writing tests, viewing them as extra work that slows down feature development. This resistance often stems from past experiences with poorly designed tests that were difficult to maintain or provided little value. Overcoming this resistance requires demonstrating the value that good tests provide—catching bugs early, enabling confident refactoring, documenting expected behavior, and ultimately accelerating development by reducing debugging time.

Making testing easier reduces resistance. Providing good examples, templates, and helper libraries lowers the barrier to writing tests. Pairing developers who are confident testers with colleagues who find testing harder helps build skills and confidence. Celebrating when tests catch bugs before they reach production reinforces the value of testing. Most importantly, ensuring that the testing infrastructure is reliable and fast prevents frustration that leads to testing abandonment.

Managing Test Maintenance Burden

As test suites grow, maintenance can become overwhelming. Tests break due to application changes, become outdated as requirements evolve, or accumulate technical debt just like production code. Without active management, test maintenance consumes increasing amounts of time, leading teams to disable or delete tests rather than fix them—undermining the entire testing investment.

Managing test maintenance starts with treating test code with the same care as production code: reviewing it, refactoring it, and documenting it. Regular test suite reviews identify tests that no longer provide value and can be removed. Investing in test infrastructure that makes tests easier to write and maintain, such as shared test utilities, page object models for UI tests, or API client libraries, pays dividends over time by reducing per-test maintenance costs.
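A test data builder is one kind of shared test utility that limits maintenance. Each test states only the fields it cares about, and the builder supplies sensible defaults for everything else. In the sketch below, `Order` is a hypothetical domain object standing in for your application code, and `OrderBuilder` is an illustrative helper.

```python
from dataclasses import dataclass, replace

@dataclass(frozen=True)
class Order:
    """Hypothetical domain object standing in for application code."""
    customer_id: str
    items: tuple = ()        # (sku, quantity, unit_price_cents) triples
    currency: str = "USD"
    status: str = "pending"

    def total(self) -> int:
        return sum(qty * unit_price for _, qty, unit_price in self.items)

class OrderBuilder:
    """Shared test-data builder: tests override only what they need."""

    def __init__(self):
        self._order = Order(customer_id="cust-1", items=(("widget", 1, 500),))

    def with_items(self, *items):
        self._order = replace(self._order, items=tuple(items))
        return self

    def with_status(self, status):
        self._order = replace(self._order, status=status)
        return self

    def build(self) -> Order:
        return self._order
```

If `Order` later gains a required field, only the builder's default changes, and the tests that use it do not need editing.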

Balancing Speed and Thoroughness

Continuous testing creates tension between the desire for fast feedback and the need for comprehensive testing. Running every possible test on every code change would take too long, but running only minimal tests might miss important issues. Finding the right balance requires thoughtful test categorization and pipeline design.

Risk-based testing approaches help optimize this balance. Critical functionality that affects many users or handles sensitive operations receives more thorough testing than edge cases or rarely-used features. Code changes in high-risk areas trigger more extensive testing than changes in well-tested, stable areas. Combining automated testing with other quality practices—like code review, pair programming, and production monitoring—provides defense in depth that doesn't rely solely on testing to catch every issue.
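One way to implement risk-based selection is a mapping from code paths to the test suites they require, so that a change runs the union of suites for every file it touches. The paths, suite names, and `select_suites` helper below are hypothetical. Unmatched files fall back to a moderate default rather than to the bare minimum.

```python
import fnmatch

# Hypothetical risk map: glob pattern -> test suites required when matching files change.
# High-risk areas (payments, auth) pull in broader suites than low-risk ones (reports).
RISK_RULES = [
    ("src/payments/*", {"unit", "integration", "e2e-checkout", "security"}),
    ("src/auth/*", {"unit", "integration", "security"}),
    ("src/reports/*", {"unit"}),
]
DEFAULT_SUITES = {"unit", "integration"}

def select_suites(changed_files):
    """Union of suites required by every changed file; the first matching rule wins."""
    suites = set()
    for path in changed_files:
        for pattern, rule_suites in RISK_RULES:
            if fnmatch.fnmatchcase(path, pattern):  # "*" also matches "/" here
                suites |= rule_suites
                break
        else:
            suites |= DEFAULT_SUITES
    return suites
```

Full suites can still run on a schedule, for example nightly, to catch anything the path-based selection misses.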

Measuring Success and Continuous Improvement

Establishing continuous testing is not a one-time project but an ongoing journey of improvement. Measuring the effectiveness of your testing practices helps you understand what's working, identify areas for improvement, and demonstrate value to stakeholders. However, choosing the right metrics is crucial—poorly chosen metrics can drive counterproductive behaviors, while good metrics illuminate opportunities for improvement.

Key Metrics for Continuous Testing

Several categories of metrics provide insights into testing effectiveness. Quality metrics measure the outcomes of testing, such as defect escape rate (the share of all defects that are first found in production rather than by testing), mean time to detection (how long a defect exists before it is found), and the number of customer-reported issues. These metrics indicate whether your testing is actually preventing quality problems.
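Both quality metrics reduce to simple arithmetic once defects are recorded consistently. The minimal sketch below assumes that each defect record notes where it was found, and for detection time that "introduced" means the commit time of the offending change. That definition is an assumption, and teams use others.

```python
from datetime import datetime, timedelta
from statistics import mean

def defect_escape_rate(found_before_release: int, found_in_production: int) -> float:
    """Share of all defects that were first found in production."""
    total = found_before_release + found_in_production
    return found_in_production / total if total else 0.0

def mean_time_to_detection(defects) -> timedelta:
    """Average gap between when defects were introduced and when they were detected.

    `defects` is an iterable of (introduced_at, detected_at) datetime pairs.
    """
    gaps = [(detected - introduced).total_seconds() for introduced, detected in defects]
    return timedelta(seconds=mean(gaps))
```

For example, 45 defects caught before release and 5 found in production give an escape rate of 0.1 (10%).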

Efficiency metrics assess how well your testing process operates. Test execution time, test pass rate, and time spent on test maintenance reveal whether your testing infrastructure is supporting or hindering development. Build success rate and time to feedback indicate how smoothly the pipeline operates. These metrics help identify bottlenecks and inefficiencies in your testing process.
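Build success rate and time to feedback can be computed directly from pipeline run records. The sketch below assumes a hypothetical `PipelineRun` record holding queue wait and execution time in seconds. It counts queue wait as part of feedback time because developers experience the whole delay.

```python
from dataclasses import dataclass
from statistics import median

@dataclass
class PipelineRun:
    """Hypothetical record exported from a CI system."""
    succeeded: bool
    queued_s: float    # seconds waiting for a runner
    duration_s: float  # seconds from start of execution to result

def efficiency_summary(runs):
    """Build success rate and median time to feedback (queue wait + execution)."""
    success_rate = sum(r.succeeded for r in runs) / len(runs)
    feedback = median(r.queued_s + r.duration_s for r in runs)
    return {"build_success_rate": success_rate, "median_feedback_s": feedback}
```

The median is used rather than the mean because pipeline durations tend to have long tails, and a few very slow runs would otherwise dominate the figure. Tracking a high percentile alongside it shows how bad the worst cases get.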

Coverage metrics show how much of your application is exercised by tests. Code coverage (what percentage of code lines or branches are executed during testing) provides one view, though it's important not to fixate on coverage percentages at the expense of test quality. Requirement coverage (whether all requirements have corresponding tests) and risk coverage (whether high-risk areas receive adequate testing) often provide more meaningful insights than raw code coverage numbers.
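Requirement and risk coverage can be computed from traceability links between tests and requirements. The data shapes below, with requirement IDs mapped to a risk level and test names mapped to the requirement IDs they verify, are assumptions about how such links are recorded. `requirement_coverage` is a hypothetical helper.

```python
def requirement_coverage(requirements, test_links):
    """Return (coverage ratio, uncovered requirement IDs, uncovered high-risk IDs).

    `requirements` maps requirement ID -> risk level ("high" or "low").
    `test_links` maps test name -> set of requirement IDs that test verifies.
    """
    covered = set().union(*test_links.values()) if test_links else set()
    uncovered = sorted(r for r in requirements if r not in covered)
    high_risk_gaps = [r for r in uncovered if requirements[r] == "high"]
    ratio = 1 - len(uncovered) / len(requirements) if requirements else 1.0
    return ratio, uncovered, high_risk_gaps
```

The list of high-risk gaps is often more actionable than the ratio itself, because it names exactly which important requirements have no test at all.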

Implementing Feedback Loops

Metrics are only valuable if they lead to action. Regular reviews of testing metrics—perhaps in team retrospectives or dedicated quality review sessions—help teams identify improvement opportunities. When metrics indicate problems, teams should investigate root causes and implement corrective actions, then monitor metrics to verify that improvements are effective.

Feedback from developers about the testing process is equally important. Regular surveys or discussions about testing pain points reveal issues that metrics might not capture, such as unclear error messages, difficult-to-debug test failures, or gaps in testing documentation. Acting on this feedback demonstrates that the organization values testing and is committed to continuous improvement.

External feedback from customers and production monitoring also informs testing improvements. When production issues occur, post-incident reviews should ask whether better testing could have caught the issue and what changes to testing practices would prevent similar problems in the future. This learning loop ensures that your testing evolves to address real-world challenges rather than theoretical concerns.

Frequently Asked Questions

What is the difference between continuous testing and traditional testing approaches?

Traditional testing typically occurs as a separate phase after development is complete, often creating bottlenecks and delays in the release process. Continuous testing integrates quality checks throughout the entire development pipeline, providing immediate feedback on every code change. This shift-left approach catches issues earlier when they're cheaper to fix, enables faster release cycles, and makes quality everyone's responsibility rather than just the QA team's concern. Continuous testing relies heavily on automation and is tightly integrated with CI/CD pipelines, whereas traditional testing often involves significant manual effort and happens in distinct phases.

How much test automation is enough for effective continuous testing?

There's no universal percentage, as the right amount of automation depends on your application type, risk profile, and release frequency. However, successful continuous testing implementations typically automate the majority of regression testing—the repetitive tests that verify existing functionality still works after changes. A common guideline is to automate tests that run frequently, are time-consuming to execute manually, or test critical functionality. Start by automating your most important user workflows and expand from there. Remember that 100% automation isn't the goal—some exploratory testing and usability evaluation will always require human judgment. Focus on automating tests that provide the best return on investment in terms of defect detection and feedback speed.
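The "runs frequently, slow to do by hand" guideline can be turned into a rough break-even estimate. This is a simplified model under stated assumptions: all three inputs are team estimates, and the model ignores the value of faster feedback, so it understates the case for automation. `automation_break_even` is a hypothetical helper.

```python
import math

def automation_break_even(manual_minutes: float, automate_hours: float,
                          maintain_minutes_per_run: float = 0.0):
    """Executions after which automating a test has cost less effort than running it manually.

    Returns None when per-run maintenance eats the entire manual saving.
    """
    saving_per_run = manual_minutes - maintain_minutes_per_run
    if saving_per_run <= 0:
        return None  # never pays back on effort alone
    return math.ceil(automate_hours * 60 / saving_per_run)
```

Under these assumptions, a 30-minute manual check that takes 8 hours to automate and about 2 minutes of upkeep per run pays back after 18 runs. A pipeline that runs it on every merge may reach that within days.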

What should I do when tests become flaky and unreliable?

Flaky tests—those that sometimes pass and sometimes fail without code changes—are a serious problem that undermines confidence in your testing suite. First, identify which tests are flaky by tracking test result history and flagging tests that fail intermittently. Then investigate root causes, which commonly include timing issues (tests that don't properly wait for asynchronous operations), dependencies on external services, improper test isolation, or race conditions in parallel test execution. Fix flaky tests by implementing proper wait strategies, using test doubles to eliminate external dependencies, ensuring each test properly sets up and tears down its required state, and adding appropriate synchronization for parallel operations. If a test remains flaky despite multiple fix attempts, consider whether it's testing something important enough to justify the maintenance burden—sometimes the best solution is to remove the test and cover the functionality differently.
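Two parts of that advice lend themselves to a short sketch: detecting flakiness from result history, and replacing fixed sleeps with a polling wait. Both helpers are hypothetical. The detection assumes that CI history can be exported as (test, commit, passed) tuples, and it treats mixed outcomes on the same commit as the flakiness signal.

```python
import time
from collections import defaultdict

def find_flaky_tests(results):
    """Flag tests that both passed and failed on the same commit.

    `results` is an iterable of (test_name, commit_sha, passed) tuples.
    The code did not change between those runs, so mixed outcomes point
    to flakiness rather than a regression. Consistent failures are not flagged.
    """
    outcomes = defaultdict(set)  # (test, commit) -> set of observed outcomes
    for test, commit, passed in results:
        outcomes[(test, commit)].add(passed)
    flaky = defaultdict(int)  # test -> number of commits with mixed outcomes
    for (test, _commit), seen in outcomes.items():
        if len(seen) == 2:
            flaky[test] += 1
    # Most frequently flaky first, so the worst offenders get fixed first.
    return dict(sorted(flaky.items(), key=lambda kv: (-kv[1], kv[0])))

def wait_until(condition, timeout_s=5.0, interval_s=0.05):
    """Poll `condition` until it is truthy or the timeout expires.

    Replaces a fixed sleep, which is either too short (flaky) or too long (slow).
    """
    deadline = time.monotonic() + timeout_s
    while time.monotonic() < deadline:
        if condition():
            return True
        time.sleep(interval_s)
    return bool(condition())
```

`wait_until` returns as soon as the asynchronous operation completes. It fails only after a generous timeout, so the timeout can be large without slowing the normal case.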

How do I convince management to invest in continuous testing infrastructure and practices?

Frame the investment in terms of business outcomes rather than technical practices. Emphasize how continuous testing reduces the cost of defects by catching issues early in development, when they are typically far cheaper to fix than bugs found in production. Highlight how automated testing accelerates time-to-market by removing testing bottlenecks that delay releases. Present data on how quality issues impact customer satisfaction and revenue. If possible, quantify the time currently spent on manual testing and show how automation could redirect that effort toward more valuable work. Start with a pilot project that demonstrates value in a limited scope, then use those results to justify broader investment. Many organizations report that the investment pays for itself through reduced debugging time, fewer production incidents, and faster release cycles, but the pilot's own measured numbers will make that case more convincingly than general claims.

What's the best way to get started with continuous testing if we currently have minimal automation?

Start small and build incrementally rather than trying to automate everything at once. Begin by setting up a basic CI/CD pipeline if you don't have one, then add simple smoke tests that verify critical functionality. Focus first on automating tests for your most important user workflows or the areas of code that change most frequently. As you build experience and confidence, gradually expand your test coverage. Invest time in building solid test infrastructure—good test frameworks, helper utilities, and clear patterns—even if this means writing fewer tests initially, as this foundation will accelerate future test development. Ensure your early automated tests are reliable and provide clear value, as early successes build momentum and organizational support for expanding testing efforts. Consider bringing in external expertise through training or consulting to accelerate your learning curve and avoid common pitfalls.

How do I handle testing for microservices architectures where multiple services must work together?

Testing microservices requires a multi-layered approach that balances testing services in isolation with testing their interactions. Each service should have comprehensive unit tests and integration tests that verify its functionality independently, using test doubles for dependencies on other services. Contract testing tools like Pact or Spring Cloud Contract help ensure that services maintain compatible interfaces, catching breaking changes before they cause integration failures. End-to-end tests that span multiple services should focus on critical business workflows rather than trying to test every possible interaction. Service virtualization or containerized test environments allow you to test against realistic versions of dependent services without requiring the full production environment. The key is finding the right balance—too much emphasis on end-to-end testing creates slow, brittle tests, while testing only in isolation misses integration issues.
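The core idea of consumer-driven contract testing can be shown without any framework. The consumer records the fields and types it relies on, and the provider verifies its real responses against that record before deploying. The sketch below is a hand-rolled illustration of the idea, not the API of Pact or Spring Cloud Contract. `ORDER_CONTRACT` and `verify_contract` are hypothetical.

```python
# Consumer-side contract: the fields this consumer reads and the types it expects.
# A hand-rolled illustration, not Pact's or Spring Cloud Contract's API.
ORDER_CONTRACT = {
    "id": str,
    "status": str,
    "total_cents": int,
}

def verify_contract(response: dict, contract: dict) -> list[str]:
    """Return mismatches between a provider response and a consumer contract.

    Extra fields in the response are allowed, because providers may add fields.
    Missing or retyped fields are breaking changes for this consumer.
    """
    problems = []
    for field, expected_type in contract.items():
        if field not in response:
            problems.append(f"missing field '{field}'")
        elif not isinstance(response[field], expected_type):
            actual = type(response[field]).__name__
            problems.append(f"'{field}' is {actual}, expected {expected_type.__name__}")
    return problems
```

Running this check in the provider's pipeline catches a breaking change, such as renaming `status` or turning `total_cents` into a string, before it reaches an integration environment. Dedicated tools add contract versioning and sharing between teams on top of the same idea.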