How to Set Up a Kubernetes Cluster for Production

Setting up a production-grade cluster is one of the most consequential decisions your organization will make in its cloud-native journey. The gap between a hastily configured environment and a properly architected system is the gap between seamless operations and failures that affect business continuity, customer satisfaction, and ultimately your bottom line. Production environments demand attention to detail, thorough planning, and adherence to practices that have been refined across many deployments in diverse industries.

Kubernetes is a distributed system that manages containerized applications across multiple nodes, providing high availability, automatic scaling, and self-healing. It serves as the backbone for modern microservices architectures, supplying the reliability and flexibility that contemporary applications require. Running it in production involves numerous technical considerations, from initial architecture decisions through security hardening, networking configuration, storage provisioning, and ongoing operational maintenance.

Throughout this comprehensive guide, you'll discover the essential steps, proven strategies, and critical considerations necessary to build a robust production environment. We'll explore infrastructure requirements, security implementations, networking configurations, monitoring solutions, and operational best practices that collectively ensure your deployment meets enterprise-grade standards. Whether you're migrating existing workloads or building new cloud-native applications, this resource provides the detailed knowledge required to establish a foundation that supports your organization's growth and innovation goals.

Infrastructure Planning and Architecture Decisions

Before provisioning any resources, establishing a solid architectural foundation determines the long-term success of your deployment. The infrastructure planning phase requires careful evaluation of your organization's specific requirements, growth projections, compliance obligations, and operational capabilities. These decisions cascade through every subsequent configuration choice, making this initial planning phase absolutely critical to project success.

Choosing Your Deployment Model

Organizations face several fundamental choices regarding how they deploy and manage their orchestration platform. Managed services from cloud providers offer reduced operational overhead, automatic upgrades, and integrated cloud-native features, making them attractive for teams seeking to minimize infrastructure management burden. These solutions typically provide built-in high availability, automated backups, and seamless integration with other cloud services, though they may involve higher costs and potential vendor lock-in considerations.

Alternatively, self-managed deployments provide maximum flexibility and control over every aspect of your environment. This approach allows customization of networking configurations, storage backends, security policies, and upgrade schedules according to your precise requirements. However, this flexibility comes with increased operational responsibility, requiring dedicated expertise for installation, maintenance, security patching, and troubleshooting. Organizations must honestly assess their internal capabilities and resource availability when making this critical decision.

The architecture decisions you make during the planning phase will impact your operations for years to come. Rushing this stage to accelerate deployment timelines inevitably leads to technical debt and operational challenges that become increasingly difficult to remediate.

Sizing Your Control Plane

The control plane serves as the brain of your orchestration system, managing the desired state of all resources and coordinating activities across your entire cluster. For production environments, a highly available control plane with multiple control plane nodes distributed across availability zones provides resilience against infrastructure failures. This configuration typically involves three or five control plane nodes running behind a load balancer. An odd count is preferred because the etcd datastore needs a majority of its members to stay reachable, so a fourth or sixth node adds cost without tolerating any additional failure.
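The preference for three or five nodes follows from majority quorum arithmetic. The sketch below assumes etcd members run on the control plane nodes (a stacked topology, which is an assumption here and not something the text specifies) and shows how many member failures each cluster size can absorb.

```python
def quorum(members: int) -> int:
    """Smallest majority of an etcd cluster with `members` voting members."""
    return members // 2 + 1


def fault_tolerance(members: int) -> int:
    """Number of members that can fail while a majority is still reachable."""
    return members - quorum(members)


for n in (1, 2, 3, 4, 5):
    print(f"{n} members: quorum={quorum(n)}, tolerated failures={fault_tolerance(n)}")
```

Four members tolerate the same single failure as three, which is why even sizes are rarely chosen.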

Resource allocation for control plane nodes depends on cluster scale and workload characteristics. Small to medium deployments might function adequately with 2-4 CPU cores and 8-16 GB RAM per control plane node, while large-scale environments supporting thousands of nodes and tens of thousands of pods require substantially more resources. The etcd datastore, which maintains cluster state, is sensitive to disk write latency and network latency, so it benefits particularly from fast SSD storage and low-latency networking.

| Cluster Size | Control Plane Nodes | CPU per Node | Memory per Node | Storage Type |
|---|---|---|---|---|
| Small (1-50 nodes) | 3 | 2-4 cores | 8-16 GB | SSD |
| Medium (51-200 nodes) | 3 | 4-8 cores | 16-32 GB | NVMe SSD |
| Large (201-500 nodes) | 5 | 8-16 cores | 32-64 GB | NVMe SSD |
| Enterprise (500+ nodes) | 5+ | 16+ cores | 64+ GB | High-performance NVMe |

Worker Node Configuration

Worker nodes host your actual application workloads, and their configuration directly impacts application performance and resource efficiency. Node heterogeneity allows matching specific workload requirements with appropriate hardware configurations. Compute-intensive applications benefit from CPU-optimized instances, memory-intensive workloads require RAM-optimized configurations, and storage-heavy applications need instances with enhanced I/O capabilities.

Implementing node pools or node groups enables logical separation of different workload types while simplifying management and scaling operations. You might maintain separate pools for production applications, development workloads, batch processing jobs, and GPU-accelerated machine learning tasks. This segregation improves resource utilization, simplifies capacity planning, and enables targeted scaling policies that respond to specific workload demands.
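As a rough illustration of steering a workload to a particular pool, the sketch below builds a Pod manifest that uses a `nodeSelector` and, optionally, a toleration. The `pool` label key, the matching taint, and the image name are hypothetical; managed services usually attach their own pool labels, so check what your nodes actually carry. The output is JSON, which `kubectl apply -f` accepts alongside YAML.

```python
import json


def pod_for_pool(name: str, image: str, pool: str, tainted: bool = False) -> dict:
    """Build a Pod manifest restricted to nodes labelled pool=<pool>."""
    spec = {
        "containers": [{"name": name, "image": image}],
        # nodeSelector limits scheduling to nodes carrying this label.
        "nodeSelector": {"pool": pool},
    }
    if tainted:
        # If the pool's nodes are tainted (hypothetical taint pool=<pool>:NoSchedule),
        # the pod must tolerate the taint to be scheduled there.
        spec["tolerations"] = [
            {"key": "pool", "operator": "Equal", "value": pool, "effect": "NoSchedule"}
        ]
    return {"apiVersion": "v1", "kind": "Pod", "metadata": {"name": name}, "spec": spec}


manifest = pod_for_pool("trainer", "registry.example.com/ml/trainer:1.0", "gpu", tainted=True)
print(json.dumps(manifest, indent=2))
```

Tainting a specialized pool and requiring a toleration keeps general workloads off expensive nodes, while the selector keeps the specialized workload on them.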

Security Hardening and Access Control

Security represents a fundamental requirement for production environments, not an optional enhancement to be addressed later. A comprehensive security strategy encompasses multiple layers, from infrastructure hardening through network policies, access controls, secrets management, and runtime security monitoring. Each layer contributes to defense-in-depth, ensuring that compromise of any single component doesn't result in complete system breach.

Authentication and Authorization Framework

Implementing robust authentication mechanisms ensures that only authorized users and services can interact with your orchestration platform. Role-Based Access Control (RBAC) provides granular permissions management, allowing you to define precisely what actions different users and service accounts can perform. Production environments should never rely on default administrative credentials or overly permissive access policies that grant unnecessary privileges.

Integrating with existing identity providers through OpenID Connect (OIDC) or LDAP centralizes authentication management and enables consistent policy enforcement across your infrastructure. This integration allows leveraging existing user directories, multi-factor authentication requirements, and audit logging capabilities. Service accounts for application components should follow the principle of least privilege, receiving only the specific permissions required for their designated functions.
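A least-privilege grant for a service account might look like the following sketch, which builds a namespaced Role and RoleBinding as Python dictionaries and prints them as JSON for `kubectl apply -f`. The namespace, service account, and resource names are hypothetical placeholders.

```python
import json

NAMESPACE = "payments"           # hypothetical namespace
SERVICE_ACCOUNT = "orders-api"   # hypothetical service account

# Role: read-only access to ConfigMaps in one namespace, nothing else.
role = {
    "apiVersion": "rbac.authorization.k8s.io/v1",
    "kind": "Role",
    "metadata": {"name": "configmap-reader", "namespace": NAMESPACE},
    "rules": [
        {
            "apiGroups": [""],  # "" is the core API group
            "resources": ["configmaps"],
            "verbs": ["get", "list", "watch"],
        }
    ],
}

# RoleBinding: attach the Role to the service account.
role_binding = {
    "apiVersion": "rbac.authorization.k8s.io/v1",
    "kind": "RoleBinding",
    "metadata": {"name": "orders-api-configmap-reader", "namespace": NAMESPACE},
    "subjects": [
        {"kind": "ServiceAccount", "name": SERVICE_ACCOUNT, "namespace": NAMESPACE}
    ],
    "roleRef": {
        "apiGroup": "rbac.authorization.k8s.io",
        "kind": "Role",
        "name": role["metadata"]["name"],
    },
}

print(json.dumps({"apiVersion": "v1", "kind": "List", "items": [role, role_binding]}, indent=2))
```

Using a namespaced Role instead of a ClusterRole, and listing explicit verbs instead of `*`, keeps the grant as narrow as the workload needs.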

Security cannot be an afterthought in production deployments. Every day that passes with inadequate security controls increases the risk of breaches that could compromise sensitive data, disrupt operations, and damage your organization's reputation.

Network Security Policies

By default, Kubernetes allows unrestricted communication between all pods within a cluster, creating potential security vulnerabilities. Network policies implement microsegmentation, defining exactly which pods can communicate with each other and what external resources they can access. These policies function as a distributed firewall, enforcing security boundaries at the network layer. They take effect only when the cluster's network plugin implements them, so confirm that your CNI plugin supports network policy enforcement.

Production environments should implement a default-deny approach, explicitly allowing only necessary communications rather than attempting to block known-bad traffic patterns. This strategy requires more upfront planning but provides significantly stronger security posture. Policies should segregate different application tiers, isolate multi-tenant workloads, and restrict egress traffic to prevent data exfiltration and limit the impact of compromised containers.
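The default-deny approach can be sketched as two NetworkPolicy manifests: one that blocks all traffic for every pod in a namespace, and one that re-opens a single path. The namespace, `app` label values, and port below are hypothetical.

```python
import json


def default_deny(namespace: str) -> dict:
    """Deny all ingress and egress for every pod in the namespace."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "default-deny-all", "namespace": namespace},
        "spec": {
            "podSelector": {},  # empty selector matches every pod in the namespace
            "policyTypes": ["Ingress", "Egress"],  # no rules listed, so nothing is allowed
        },
    }


def allow_ingress(namespace: str, to_app: str, from_app: str, port: int) -> dict:
    """Allow TCP traffic from pods labelled app=<from_app> to app=<to_app>."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": f"allow-{from_app}-to-{to_app}", "namespace": namespace},
        "spec": {
            "podSelector": {"matchLabels": {"app": to_app}},
            "policyTypes": ["Ingress"],
            "ingress": [
                {
                    "from": [{"podSelector": {"matchLabels": {"app": from_app}}}],
                    "ports": [{"protocol": "TCP", "port": port}],
                }
            ],
        },
    }


policies = [default_deny("shop"), allow_ingress("shop", "api", "frontend", 8080)]
print(json.dumps({"apiVersion": "v1", "kind": "List", "items": policies}, indent=2))
```

Because the deny policy also covers egress, pods in the namespace lose DNS resolution until an egress rule for the cluster DNS service is added, which is a common first surprise with this pattern.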

- 🔒 Implement Pod Security Standards to restrict privileged containers, host namespace access, and dangerous capabilities that could enable container escape

- 🔐 Enable audit logging to maintain comprehensive records of all API requests, facilitating security investigations and compliance reporting

- 🛡️ Deploy admission controllers to enforce security policies, validate configurations, and prevent deployment of non-compliant resources

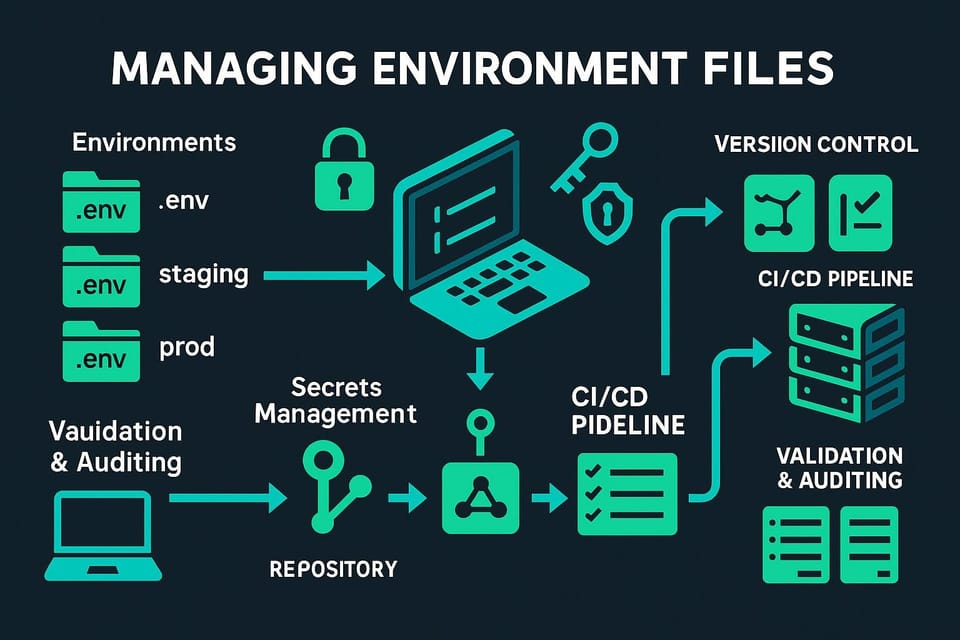

- 🔑 Utilize secrets management solutions like HashiCorp Vault or cloud-native services to protect sensitive credentials and encryption keys

- 🚨 Implement runtime security monitoring to detect anomalous behavior, unauthorized access attempts, and potential security incidents
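For the Pod Security Standards item in the list above, one common mechanism is the built-in Pod Security Admission controller, which reads labels on a namespace. The sketch below builds such a namespace; the namespace name is hypothetical, and applying the same level to enforce, audit, and warn is one possible choice among several.

```python
import json

VALID_LEVELS = {"privileged", "baseline", "restricted"}


def namespace_with_pod_security(name: str, level: str = "restricted") -> dict:
    """Build a Namespace whose pods are checked against a Pod Security Standard."""
    if level not in VALID_LEVELS:
        raise ValueError(f"unknown Pod Security Standard level: {level}")
    return {
        "apiVersion": "v1",
        "kind": "Namespace",
        "metadata": {
            "name": name,
            "labels": {
                # enforce rejects violating pods; audit and warn only report them.
                "pod-security.kubernetes.io/enforce": level,
                "pod-security.kubernetes.io/audit": level,
                "pod-security.kubernetes.io/warn": level,
            },
        },
    }


ns = namespace_with_pod_security("payments")
print(json.dumps(ns, indent=2))
```

A gentler rollout sets `audit` and `warn` to the target level first and raises `enforce` only after existing workloads pass.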

Image Security and Supply Chain Protection

Container images represent a critical attack surface, as vulnerabilities in base images or application dependencies can expose your entire environment. Implementing image scanning as part of your continuous integration pipeline identifies known vulnerabilities before images reach production. This scanning should occur at multiple stages: during development, before pushing to registries, and continuously after deployment as new vulnerabilities are discovered.

Maintaining a private container registry with strict access controls prevents unauthorized image modifications and ensures that only approved, scanned images can be deployed. Image signing and verification through tools like Notary or Sigstore provides cryptographic assurance that images haven't been tampered with between build and deployment. These measures collectively protect against supply chain attacks that have become increasingly prevalent in modern software ecosystems.
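Part of this control can be expressed as a simple pre-deployment check on image references. The sketch below flags images that come from outside an approved registry or that are referenced by a mutable tag instead of a digest. The registry name is hypothetical, and the check does not verify signatures, which would still require a tool such as the ones named above.

```python
import re

# Hypothetical allowlist of registry prefixes approved for production.
APPROVED_REGISTRIES = ("registry.example.com/",)

# A digest-pinned reference ends with @sha256:<64 hex characters>.
_DIGEST = re.compile(r"@sha256:[0-9a-f]{64}$")


def image_violations(image: str) -> list[str]:
    """Return the policy problems found in one image reference."""
    problems = []
    if not image.startswith(APPROVED_REGISTRIES):
        problems.append("image is not from an approved registry")
    if not _DIGEST.search(image):
        problems.append("image is not pinned by sha256 digest")
    return problems


for ref in (
    "registry.example.com/shop/api@sha256:" + "a" * 64,
    "docker.io/library/nginx:latest",
):
    print(ref, "->", image_violations(ref) or "ok")
```

Pinning by digest matters because a tag can be repointed after scanning, while a digest identifies exactly the content that was scanned.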

Networking Configuration and Service Mesh

Networking forms the connective tissue of your orchestration platform, enabling communication between application components, external clients, and backend services. Production deployments require carefully planned networking architectures that balance performance, security, reliability, and operational complexity. The networking decisions you make impact everything from application latency to security policy enforcement and troubleshooting capabilities.

Container Network Interface Selection

The Container Network Interface (CNI) plugin determines how pods receive IP addresses and communicate across your infrastructure. Different CNI implementations offer varying feature sets, performance characteristics, and operational complexity. Calico provides robust network policy enforcement and scales well to large deployments, making it popular for security-conscious organizations. Cilium leverages eBPF technology for high-performance networking and advanced observability features.

For cloud deployments, native CNI plugins from cloud providers often integrate seamlessly with platform networking features, simplifying configuration and providing optimized performance. AWS VPC CNI, Azure CNI, and Google Cloud's native networking leverage the underlying cloud infrastructure for pod networking, enabling features like security group integration and direct pod-to-service communication without additional network hops.

Ingress and Load Balancing Strategy

Exposing applications to external traffic requires careful consideration of ingress controllers, load balancing strategies, and traffic management policies. Ingress controllers like NGINX, Traefik, or cloud-native solutions provide HTTP/HTTPS routing, SSL termination, and traffic management capabilities. Production environments benefit from deploying ingress controllers as highly available services, typically running multiple replicas across different nodes.

Implementing multiple ingress classes allows segregating different types of traffic, such as public-facing applications, internal services, and administrative interfaces. This separation improves security by enabling different security policies, SSL certificate management, and access controls for each traffic category. Load balancer services distribute traffic across application replicas, with cloud providers typically offering integration with their native load balancing services for seamless scalability and high availability.
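To make the ingress class separation concrete, the sketch below builds two Ingress manifests that route through different classes. The class names (`public`, `internal`), hostnames, service names, and TLS secret names are all hypothetical and must match whatever ingress controllers you actually deploy.

```python
import json


def ingress(name: str, host: str, service: str, port: int,
            ingress_class: str, tls_secret: str) -> dict:
    """Build an Ingress that terminates TLS and routes a host to one Service."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        "metadata": {"name": name},
        "spec": {
            # Selects which ingress controller handles this resource.
            "ingressClassName": ingress_class,
            "tls": [{"hosts": [host], "secretName": tls_secret}],
            "rules": [
                {
                    "host": host,
                    "http": {
                        "paths": [
                            {
                                "path": "/",
                                "pathType": "Prefix",
                                "backend": {
                                    "service": {"name": service, "port": {"number": port}}
                                },
                            }
                        ]
                    },
                }
            ],
        },
    }


public = ingress("shop", "shop.example.com", "shop-web", 80, "public", "shop-tls")
internal = ingress("admin", "admin.internal.example.com", "admin-ui", 80, "internal", "admin-tls")
print(json.dumps({"apiVersion": "v1", "kind": "List", "items": [public, internal]}, indent=2))
```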

Network performance directly impacts application user experience. Investing time in proper network architecture and optimization pays dividends through improved application responsiveness and reduced operational challenges.

Service Mesh Implementation

As microservices architectures grow in complexity, service mesh technologies like Istio, Linkerd, or Consul provide advanced traffic management, security, and observability features. Service meshes implement mutual TLS authentication between services, sophisticated traffic routing policies, circuit breaking, and detailed telemetry collection without requiring application code changes.

However, service meshes introduce additional operational complexity and resource overhead. Organizations should carefully evaluate whether their architecture complexity justifies the operational burden of maintaining a service mesh. Smaller deployments with straightforward service communication patterns might achieve their requirements through simpler solutions like ingress controllers and network policies, reserving service mesh adoption for when architecture complexity demands its advanced capabilities.

Storage Architecture and Data Persistence

Stateful applications require persistent storage that survives pod restarts, node failures, and cluster maintenance operations. Production storage architecture must address performance requirements, data durability, backup and recovery procedures, and cost optimization. The ephemeral nature of containers makes proper storage configuration absolutely critical for applications that maintain state or process valuable data.

Storage Class Configuration

Storage classes define different storage tiers with varying performance characteristics, availability guarantees, and cost profiles. Production environments typically implement multiple storage classes to match diverse application requirements. High-performance databases might utilize SSD-backed storage with high IOPS provisioning, while archival applications can leverage cost-effective HDD storage with lower performance guarantees.

Cloud providers offer various storage types optimized for different use cases. Block storage provides traditional disk-like volumes suitable for databases and applications requiring direct filesystem access. Object storage offers scalable, cost-effective storage for unstructured data like media files, backups, and logs. File storage enables shared access across multiple pods, supporting applications that require concurrent read/write access to shared filesystems.
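Applications request storage from a class through a PersistentVolumeClaim. The sketch below contrasts a block-style claim for a database with a shared claim for file storage. The storage class names (`premium-ssd`, `shared-files`) are hypothetical and must correspond to StorageClass objects defined in your cluster.

```python
import json


def pvc(name: str, storage_class: str, size: str, shared: bool = False) -> dict:
    """Build a PersistentVolumeClaim against a named storage class."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            # ReadWriteOnce suits block volumes mounted by a single node;
            # ReadWriteMany needs a backend that supports shared access.
            "accessModes": ["ReadWriteMany" if shared else "ReadWriteOnce"],
            "storageClassName": storage_class,
            "resources": {"requests": {"storage": size}},
        },
    }


database_claim = pvc("orders-db-data", "premium-ssd", "100Gi")
shared_claim = pvc("media-uploads", "shared-files", "500Gi", shared=True)
print(json.dumps({"apiVersion": "v1", "kind": "List", "items": [database_claim, shared_claim]}, indent=2))
```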

| Storage Type | Use Cases | Performance | Availability | Cost |
|---|---|---|---|---|
| Premium SSD | Databases, high-performance apps | Very High | 99.9% | High |
| Standard SSD | General purpose applications | High | 99.5% | Medium |
| Standard HDD | Development, testing, archives | Medium | 99% | Low |
| Shared File Storage | Shared content, legacy apps | Medium-High | 99.9% | Medium-High |

Backup and Disaster Recovery

No production environment is complete without comprehensive backup and disaster recovery capabilities. Volume snapshots provide point-in-time copies of persistent volumes, enabling recovery from data corruption, accidental deletion, or application errors. Automated snapshot schedules ensure regular backups without manual intervention, while retention policies manage storage costs by removing obsolete snapshots.
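A retention policy is essentially a rule for choosing which snapshots to delete. The sketch below is one simple, hypothetical policy (age-based, with a floor on how many recent snapshots are always kept) written in plain Python; it is not tied to any particular snapshot controller or backup tool.

```python
from datetime import datetime, timedelta


def snapshots_to_delete(created_at: list[datetime], now: datetime,
                        keep_days: int, keep_min: int = 1) -> list[datetime]:
    """Return timestamps of snapshots older than keep_days, newest first.

    The newest `keep_min` snapshots are never returned, so a stalled backup
    job cannot cause the last good snapshot to be pruned.
    """
    newest_first = sorted(created_at, reverse=True)
    protected = set(newest_first[:keep_min])
    cutoff = now - timedelta(days=keep_days)
    return [ts for ts in newest_first if ts < cutoff and ts not in protected]


now = datetime(2024, 1, 31)
snapshots = [now - timedelta(days=d) for d in (1, 5, 10, 40)]
for ts in snapshots_to_delete(snapshots, now, keep_days=7):
    print("delete snapshot from", ts.date())
```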

Beyond volume snapshots, cluster-level backups protect your orchestration configuration, custom resources, and application definitions. Tools like Velero enable backing up entire namespaces or specific resources, supporting disaster recovery scenarios where you need to rebuild entire environments. Testing recovery procedures regularly ensures that backups function correctly and that your team understands recovery processes before emergencies occur.

Data loss in production can be catastrophic. Backup strategies must be tested regularly, as untested backups provide false confidence that evaporates when disaster strikes and recovery fails.

Observability and Monitoring Infrastructure

Comprehensive observability enables understanding system behavior, identifying performance bottlenecks, detecting anomalies, and troubleshooting issues before they impact users. Production environments require monitoring at multiple levels: infrastructure health, application performance, resource utilization, and business metrics. The insights gained from proper observability directly translate to improved reliability and faster incident resolution.

Metrics Collection and Visualization

Prometheus has become the de facto standard for metrics collection in orchestration environments, providing powerful querying capabilities and extensive ecosystem integration. Deploying Prometheus with proper retention policies, high availability configuration, and remote storage integration ensures reliable metrics collection even during infrastructure issues. Complementing Prometheus with Grafana provides rich visualization capabilities, enabling creation of dashboards that surface critical metrics and trends.

Production monitoring should track both infrastructure and application metrics. Infrastructure metrics include node CPU and memory utilization, disk I/O, network throughput, and pod resource consumption. Application metrics encompass request rates, error rates, response times, and business-specific indicators. Implementing Service Level Indicators (SLIs) and Service Level Objectives (SLOs) provides objective measures of service quality and helps prioritize engineering efforts.
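An SLO becomes actionable once it is converted into an error budget. The sketch below shows the arithmetic for a time-based budget and a request-based budget; the 99.9% target and the request counts are illustrative values, not recommendations.

```python
def error_budget_minutes(slo: float, window_days: int = 30) -> float:
    """Minutes of allowed unavailability for an availability SLO over a window."""
    if not 0 < slo < 1:
        raise ValueError("slo must be a fraction between 0 and 1, e.g. 0.999")
    return (1 - slo) * window_days * 24 * 60


def budget_remaining(slo: float, total_requests: int, failed_requests: int) -> float:
    """Fraction of the request-based error budget still unspent.

    A negative result means the budget has been overspent.
    """
    if total_requests <= 0:
        raise ValueError("total_requests must be positive")
    allowed_failures = (1 - slo) * total_requests
    return 1 - failed_requests / allowed_failures


print(f"30-day budget at 99.9%: {error_budget_minutes(0.999):.1f} minutes")
print(f"budget remaining: {budget_remaining(0.999, 1_000_000, 250):.0%}")
```

A 99.9% target over 30 days leaves roughly 43 minutes of downtime, which gives teams a concrete number to weigh against risky releases.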

- 📊 Implement distributed tracing with tools like Jaeger or Zipkin to understand request flows through microservices architectures

- 📝 Centralize log aggregation using solutions like ELK Stack or Loki to facilitate troubleshooting and security investigations

- 🚨 Configure intelligent alerting that notifies teams of genuine issues while minimizing alert fatigue from false positives

- 📈 Track resource efficiency metrics to identify optimization opportunities and control infrastructure costs

- 🔍 Monitor control plane health to detect issues with core components before they impact application availability

Logging Strategy

Centralized logging aggregates logs from all containers, nodes, and infrastructure components into searchable repositories that facilitate troubleshooting and compliance reporting. Structured logging in JSON format enables more powerful queries and analysis compared to unstructured text logs. Applications should emit logs at appropriate verbosity levels, providing detailed information for debugging while avoiding excessive log volume that increases storage costs and complicates analysis.
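A minimal way to emit structured logs from a Python service is a custom formatter on the standard `logging` module, as sketched below. The field names (`ts`, `level`, `logger`, `message`) and the logger name are arbitrary choices for illustration; align them with whatever schema your log pipeline expects.

```python
import json
import logging
import sys


class JsonFormatter(logging.Formatter):
    """Render each log record as a single JSON object per line."""

    def format(self, record: logging.LogRecord) -> str:
        entry = {
            "ts": self.formatTime(record, "%Y-%m-%dT%H:%M:%S%z"),
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        if record.exc_info:
            entry["exception"] = self.formatException(record.exc_info)
        return json.dumps(entry)


# Containers should log to stdout/stderr so the node's log agent can collect them.
handler = logging.StreamHandler(sys.stdout)
handler.setFormatter(JsonFormatter())
logger = logging.getLogger("orders")
logger.addHandler(handler)
logger.setLevel(logging.INFO)  # verbosity is set per environment

logger.info("order %s accepted", "A-1001")
logger.debug("suppressed at INFO level")
```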

Implementing log retention policies balances troubleshooting needs against storage costs. Recent logs might be retained in hot storage for quick access, while older logs archive to cheaper cold storage. Compliance requirements often dictate minimum retention periods for audit logs and security-relevant events. Log shipping should be resilient to temporary network issues, buffering logs locally when central aggregation services are unavailable.

Deployment Automation and CI/CD Integration

Manual deployments don't scale and introduce human error that causes outages and inconsistencies. Production environments require automated deployment pipelines that ensure consistent, repeatable, and auditable application releases. Continuous Integration and Continuous Deployment practices accelerate development velocity while improving deployment reliability through automated testing and validation.

GitOps Workflow Implementation

GitOps treats Git repositories as the single source of truth for infrastructure and application configurations. Changes to desired state occur through Git commits, with automated systems continuously reconciling actual cluster state with the declared desired state. This approach provides complete audit trails, enables easy rollbacks, and facilitates disaster recovery by maintaining all configurations in version control.
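The reconciliation idea can be illustrated with a small diff between desired and actual state. This is a conceptual sketch only: it is not how Flux or ArgoCD are implemented, and the resource keys and specs are hypothetical.

```python
def plan_reconcile(desired: dict[str, dict], actual: dict[str, dict]) -> dict[str, list[str]]:
    """Compare desired state (from Git) with actual state (from the cluster).

    Both arguments map a resource key such as "Deployment/api" to its spec.
    """
    return {
        "create": sorted(desired.keys() - actual.keys()),
        "update": sorted(k for k in desired.keys() & actual.keys() if desired[k] != actual[k]),
        "delete": sorted(actual.keys() - desired.keys()),  # pruning of drifted resources
    }


desired = {
    "Deployment/api": {"replicas": 3, "image": "api:1.5"},
    "Service/api": {"port": 80},
}
actual = {
    "Deployment/api": {"replicas": 3, "image": "api:1.4"},
    "ConfigMap/legacy": {"data": {}},
}
print(plan_reconcile(desired, actual))
```

Running such a comparison continuously, instead of once per deployment, is what lets a GitOps controller also revert manual changes made directly against the cluster.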

Tools like Flux or ArgoCD implement GitOps workflows, monitoring Git repositories for changes and automatically applying updates to clusters. These tools support progressive delivery strategies like canary deployments and blue-green deployments, enabling safer rollouts that minimize blast radius when issues occur. Separating application code repositories from configuration repositories allows different teams to manage their respective concerns while maintaining clear ownership boundaries.

Automation eliminates the variability and errors inherent in manual processes. Every manual deployment represents an opportunity for mistakes that could cause outages or security vulnerabilities.

Progressive Delivery Strategies

Simply replacing all running instances with new versions creates risk, as bugs or performance issues immediately impact all users. Progressive delivery techniques gradually roll out changes, enabling detection and mitigation of issues before they affect the entire user base. Canary deployments route a small percentage of traffic to new versions, monitoring key metrics before gradually increasing traffic allocation.
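The promote-or-roll-back decision at each canary step can be sketched as a small function. The traffic schedule, the use of error rate as the only metric, and the tolerance value are all hypothetical; real progressive delivery tools typically evaluate several metrics over a time window.

```python
# Hypothetical traffic schedule, in percent of requests sent to the new version.
CANARY_STEPS = (5, 25, 50, 100)


def next_canary_weight(current: int, canary_error_rate: float,
                       baseline_error_rate: float, tolerance: float = 0.005) -> int:
    """Return the next traffic weight for the canary; 0 means roll back."""
    if canary_error_rate > baseline_error_rate + tolerance:
        return 0  # canary is measurably worse than the stable version
    for step in CANARY_STEPS:
        if step > current:
            return step
    return 100  # already fully promoted


print(next_canary_weight(5, canary_error_rate=0.010, baseline_error_rate=0.010))
print(next_canary_weight(25, canary_error_rate=0.050, baseline_error_rate=0.010))
```

Comparing against the baseline's current error rate, and not a fixed threshold, avoids rolling back a healthy canary during an unrelated incident that affects both versions.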

Blue-green deployments maintain two complete environments, routing traffic to one while preparing the other. This approach enables instant rollbacks by simply redirecting traffic back to the previous environment. Feature flags provide even more granular control, enabling activation of new functionality for specific user segments independent of deployment processes. These techniques collectively reduce deployment risk and enable faster iteration cycles.

High Availability and Disaster Recovery

Production systems must remain operational despite infrastructure failures, maintenance activities, and unexpected disasters. High availability architecture eliminates single points of failure through redundancy and automated failover mechanisms. Disaster recovery planning ensures business continuity even in catastrophic scenarios like complete datacenter loss or major security breaches.

Multi-Zone and Multi-Region Architecture

Distributing infrastructure across multiple availability zones within a region protects against zone-level failures like power outages or network issues. Zone-aware scheduling ensures that application replicas spread across zones, maintaining availability when individual zones experience problems. Critical applications should specify pod anti-affinity rules that prevent all replicas from running on nodes in the same zone.
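A zone-level anti-affinity rule can be sketched as the `affinity` block of a pod template. The `app` label is a hypothetical selector; `topology.kubernetes.io/zone` is the well-known node label for zones.

```python
import json

ZONE_KEY = "topology.kubernetes.io/zone"


def zone_anti_affinity(app: str, required: bool = False) -> dict:
    """Build a pod `affinity` block that keeps replicas of `app` in different zones."""
    term = {"labelSelector": {"matchLabels": {"app": app}}, "topologyKey": ZONE_KEY}
    if required:
        # Hard rule: a pod stays Pending if no zone without a replica is available.
        rules = {"requiredDuringSchedulingIgnoredDuringExecution": [term]}
    else:
        # Soft rule: the scheduler prefers a different zone but will still place the pod.
        rules = {
            "preferredDuringSchedulingIgnoredDuringExecution": [
                {"weight": 100, "podAffinityTerm": term}
            ]
        }
    return {"podAntiAffinity": rules}


print(json.dumps(zone_anti_affinity("api"), indent=2))
```

The hard form caps the replica count at the number of zones, so the soft form (or topology spread constraints, a related scheduling feature) is often the more practical choice for deployments with many replicas.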

For truly critical systems, multi-region deployments provide protection against regional disasters and enable serving users from geographically distributed locations to minimize latency. Multi-region architecture introduces significant complexity around data replication, cross-region networking, and traffic management. Organizations must carefully evaluate whether their availability requirements justify this complexity and cost.

Automated Recovery and Self-Healing

The orchestration platform's self-healing capabilities automatically restart failed containers, reschedule pods from failed nodes, and replace unhealthy instances. However, production environments benefit from additional automation that handles application-specific recovery scenarios. Liveness probes detect when applications enter unrecoverable states, triggering automatic restarts. Readiness probes prevent routing traffic to instances that aren't ready to handle requests.
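Probes are declared per container. The sketch below builds a container spec with HTTP liveness and readiness probes; the endpoint paths (`/healthz`, `/ready`), the image, and the timing values are hypothetical and should reflect how your application actually reports health and how long it takes to start.

```python
import json


def container_with_probes(name: str, image: str, port: int) -> dict:
    """Build a container spec with separate liveness and readiness checks."""
    return {
        "name": name,
        "image": image,
        "ports": [{"containerPort": port}],
        # Liveness: repeated failures cause the kubelet to restart the container.
        "livenessProbe": {
            "httpGet": {"path": "/healthz", "port": port},
            "initialDelaySeconds": 10,
            "periodSeconds": 10,
            "failureThreshold": 3,
        },
        # Readiness: failures remove the pod from Service endpoints without restarting it.
        "readinessProbe": {
            "httpGet": {"path": "/ready", "port": port},
            "periodSeconds": 5,
            "failureThreshold": 2,
        },
    }


container = container_with_probes("api", "registry.example.com/shop/api:1.4.2", 8080)
print(json.dumps(container, indent=2))
```

Keeping the liveness check cheap and free of downstream dependencies avoids restart loops when a database or another service is the component that is actually failing.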

Implementing Pod Disruption Budgets ensures that voluntary disruptions like node drains or cluster upgrades maintain minimum availability levels. These budgets specify the minimum number or percentage of pods that must remain available during disruptions, preventing maintenance operations from causing outages. Careful tuning of these budgets balances availability requirements against operational flexibility for maintenance activities.
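A Pod Disruption Budget and the headroom it leaves for maintenance can be sketched as follows. The `app` label and the replica numbers are hypothetical; the helper only illustrates the arithmetic for an integer `minAvailable`.

```python
import json


def pod_disruption_budget(app: str, min_available) -> dict:
    """Build a PodDisruptionBudget; min_available may be an int or a string like "50%"."""
    return {
        "apiVersion": "policy/v1",
        "kind": "PodDisruptionBudget",
        "metadata": {"name": f"{app}-pdb"},
        "spec": {
            "minAvailable": min_available,
            "selector": {"matchLabels": {"app": app}},
        },
    }


def allowed_disruptions(healthy_pods: int, min_available: int) -> int:
    """How many pods a drain may evict right now without violating the budget."""
    return max(0, healthy_pods - min_available)


pdb = pod_disruption_budget("api", 2)
print(json.dumps(pdb, indent=2))
print("evictions allowed with 3 healthy pods:", allowed_disruptions(3, 2))
```

Setting `minAvailable` equal to the replica count leaves zero allowed disruptions and blocks node drains entirely, which is the most common way these budgets stall cluster upgrades.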

Cost Optimization and Resource Management

Cloud infrastructure costs can escalate quickly without proper resource management and optimization practices. Production environments require balancing performance and availability requirements against cost constraints. Implementing resource quotas, right-sizing workloads, and leveraging cost-effective infrastructure options reduces expenses without compromising reliability or performance.

Resource Requests and Limits

Properly configuring resource requests and limits for every container ensures efficient resource utilization and prevents individual workloads from monopolizing cluster resources. Requests specify the minimum resources guaranteed to containers, influencing scheduling decisions and ensuring adequate resources for normal operation. Limits cap maximum resource consumption, preventing runaway processes from impacting other workloads.

Production environments should establish resource requests based on actual application requirements rather than guesswork. Monitoring actual resource consumption over time reveals patterns that inform accurate request values. Setting CPU limits too low causes throttling and performance degradation, and setting memory limits too low gets containers killed when they exceed the limit, while excessively high requests reserve capacity that sits idle and increases costs. Vertical Pod Autoscaling can automatically adjust requests and limits based on observed usage patterns, simplifying ongoing optimization efforts.
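One way to turn observed usage into request and limit values is sketched below. The specific rule (request at the 95th percentile, limit at peak plus 20% headroom) is a hypothetical starting heuristic, not a recommendation from this guide, and the samples are made-up memory readings in MiB.

```python
import math


def percentile(samples: list[int], pct: int) -> int:
    """Nearest-rank percentile of a non-empty list of samples."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def suggest_memory(samples_mib: list[int], headroom_pct: int = 120) -> dict:
    """Suggest a container `resources` block from observed memory usage in MiB."""
    request = percentile(samples_mib, 95)
    limit = math.ceil(max(samples_mib) * headroom_pct / 100)
    return {
        "requests": {"memory": f"{request}Mi"},
        "limits": {"memory": f"{limit}Mi"},
    }


observed = list(range(100, 300, 10))  # 20 hypothetical samples: 100, 110, ..., 290 MiB
print(suggest_memory(observed))
```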

- 💰 Implement cluster autoscaling to automatically adjust node counts based on workload demands, reducing costs during low-utilization periods

- 🎯 Use spot instances or preemptible VMs for fault-tolerant workloads that can tolerate interruptions, achieving significant cost savings

- 📊 Monitor resource utilization to identify over-provisioned workloads and right-size resource allocations

- 🏷️ Implement cost allocation tags to track spending by team, project, or environment for better financial accountability

- ⚡ Schedule non-critical workloads during off-peak hours to leverage lower-cost infrastructure options

Cluster Efficiency and Bin Packing

The scheduler scores candidate nodes for every pod, but its default scoring favors spreading load across less-utilized nodes rather than packing pods tightly. Tighter bin packing requires changing the scheduler's resource scoring strategy (for example, the `MostAllocated` strategy of the NodeResourcesFit plugin), which managed services may not expose. Priority classes enable specifying relative importance of different workloads, allowing the scheduler to preempt lower-priority pods when higher-priority workloads need resources.

Implementing node affinity and pod affinity rules influences scheduling decisions to achieve specific placement goals. These rules can colocate related services to minimize network latency, spread replicas for availability, or restrict workloads to specific node types. Careful use of these features improves both resource efficiency and application performance, though overly complex rules can constrain scheduling flexibility and reduce overall cluster utilization.

Security Compliance and Governance

Organizations in regulated industries face specific compliance requirements around data protection, access controls, audit logging, and operational procedures. Meeting these requirements requires implementing appropriate technical controls, maintaining comprehensive documentation, and establishing governance processes that ensure ongoing compliance as environments evolve.

Policy Enforcement and Validation

Policy engines like Open Policy Agent (OPA) or Kyverno enable defining and enforcing organizational policies as code. These policies can validate resource configurations, enforce naming conventions, require specific labels or annotations, and prevent deployment of non-compliant resources. Implementing policies as code ensures consistent enforcement across all environments and provides clear documentation of organizational requirements.

Policies should be tested in non-production environments before enforcement in production to avoid disrupting legitimate workloads. Audit mode allows logging policy violations without blocking resource creation, enabling identification of non-compliant resources that need remediation. Gradually transitioning from audit to enforcement mode minimizes disruption while improving compliance posture.
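The difference between audit and enforcement mode can be shown with a toy policy check in plain Python. This is only an illustration of the concept: it does not use OPA's Rego language or Kyverno's policy syntax, and the required label names are a hypothetical organizational policy.

```python
# Hypothetical organizational policy: every resource must carry these labels.
REQUIRED_LABELS = ("team", "environment")


def evaluate(manifest: dict, mode: str = "audit") -> tuple[bool, list[str]]:
    """Return (admitted, violations) for one manifest.

    In audit mode violations are reported but the resource is admitted;
    in enforce mode any violation blocks admission.
    """
    if mode not in ("audit", "enforce"):
        raise ValueError("mode must be 'audit' or 'enforce'")
    labels = manifest.get("metadata", {}).get("labels", {})
    violations = [f"missing required label: {key}" for key in REQUIRED_LABELS if key not in labels]
    admitted = not violations or mode == "audit"
    return admitted, violations


deployment = {"kind": "Deployment", "metadata": {"name": "api", "labels": {"team": "shop"}}}
print(evaluate(deployment, mode="audit"))
print(evaluate(deployment, mode="enforce"))
```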

Compliance is not a one-time achievement but an ongoing process. Regular audits, continuous monitoring, and adaptation to evolving requirements ensure that your environment maintains its compliance posture over time.

Audit Logging and Compliance Reporting

Comprehensive audit logging records all API requests, including who made the request, what action was attempted, and whether it succeeded or failed. These logs provide critical evidence for security investigations, compliance audits, and troubleshooting operational issues. Audit logs must be protected from tampering and retained according to regulatory requirements, which typically means shipping them off the cluster to write-once or otherwise tamper-evident storage.

Regular compliance reporting demonstrates adherence to regulatory requirements and organizational policies. Automated tools can scan configurations, analyze audit logs, and generate reports highlighting compliance status and identifying issues requiring remediation. These reports provide evidence for auditors and help prioritize security and compliance improvement efforts.

Operational Excellence and Maintenance

Launching a production cluster represents the beginning of an ongoing operational journey, not a final destination. Maintaining reliability, security, and performance requires continuous attention to upgrades, capacity management, incident response, and operational improvements. Establishing strong operational practices ensures that your environment remains healthy and continues meeting evolving business requirements.

Upgrade Strategy and Version Management

Regular upgrades ensure access to new features, performance improvements, and security patches. However, upgrades introduce risk of breaking changes or unexpected behavior. Staged upgrade rollouts apply updates first to non-production environments, then to less critical production workloads, and finally to mission-critical systems after validation. This approach identifies issues before they impact critical services.

Maintaining version compatibility between control plane components, worker nodes, and application dependencies requires careful planning. Kubernetes permits the kubelet on worker nodes to lag the API server by a limited number of minor versions (three as of v1.28, two in earlier releases) but never to run ahead of it, so upgrade the control plane first and the worker nodes afterward. Testing applications against new versions in staging environments before production upgrades prevents compatibility issues from causing outages.
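A pre-upgrade check for version skew between the API server and node kubelets can be sketched as below. The allowed skew depends on the Kubernetes release; the default of 3 used here is an assumption that matches the policy documented for v1.28 and later (earlier releases allowed 2), so confirm it against the version skew policy for your release. The sketch also assumes both versions share the same major version.

```python
# Assumption: kubelet may be up to 3 minor versions older than the API server
# (the policy for Kubernetes v1.28+); earlier releases allowed 2.
MAX_KUBELET_SKEW = 3


def minor(version: str) -> int:
    """Extract the minor version from a string such as 'v1.29.3'."""
    return int(version.lstrip("v").split(".")[1])


def kubelet_skew_ok(apiserver: str, kubelet: str, max_skew: int = MAX_KUBELET_SKEW) -> bool:
    """True if the kubelet is not newer than the API server and within the allowed skew."""
    skew = minor(apiserver) - minor(kubelet)
    return 0 <= skew <= max_skew


for node_version in ("v1.30.1", "v1.28.5", "v1.26.9", "v1.31.0"):
    print(node_version, "ok" if kubelet_skew_ok("v1.30.1", node_version) else "out of policy")
```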

Capacity Planning and Growth Management

Proactive capacity planning ensures that infrastructure scales ahead of demand rather than scrambling to add capacity during incidents. Monitoring growth trends in resource consumption, pod counts, and storage utilization enables forecasting future requirements. Establishing capacity buffers prevents resource exhaustion during traffic spikes or unexpected growth.
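Forecasting from growth trends can start with something as simple as a straight-line fit. The sketch below estimates how many days remain before usage reaches capacity, assuming one sample per day and roughly linear growth, which are both simplifying assumptions; the numbers are made up.

```python
from typing import Optional


def days_until_exhaustion(daily_usage: list[float], capacity: float) -> Optional[float]:
    """Estimate days from the last sample until usage reaches capacity.

    Fits a least-squares line through one-sample-per-day usage values.
    Returns None when usage is flat or shrinking.
    """
    n = len(daily_usage)
    if n < 2:
        raise ValueError("need at least two samples to fit a trend")
    mean_x = (n - 1) / 2
    mean_y = sum(daily_usage) / n
    numerator = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(daily_usage))
    denominator = sum((x - mean_x) ** 2 for x in range(n))
    slope = numerator / denominator
    if slope <= 0:
        return None
    intercept = mean_y - slope * mean_x
    return max(0.0, (capacity - intercept) / slope - (n - 1))


usage_cores = [100, 110, 120, 130, 140]  # hypothetical requested CPU cores per day
print(days_until_exhaustion(usage_cores, capacity=200))
```

Comparing the result with the lead time needed to add nodes or raise cloud quotas indicates whether the capacity buffer is large enough.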

Regular capacity reviews examine utilization patterns, identify optimization opportunities, and plan infrastructure expansions. These reviews should consider both immediate needs and longer-term growth projections based on business plans. Automating capacity additions through cluster autoscaling and storage expansion policies reduces manual intervention and improves responsiveness to changing demands.

Incident Response and Post-Mortem Analysis

Despite best efforts, incidents will occur. Effective incident response processes minimize impact through rapid detection, clear escalation paths, and coordinated remediation efforts. On-call rotations ensure that qualified personnel are available to respond to issues at any time. Runbooks document common issues and their resolutions, enabling faster response even when the original implementers aren't available.

Conducting blameless post-mortems after significant incidents identifies root causes and systemic improvements rather than assigning fault to individuals. These analyses should result in concrete action items that prevent recurrence, whether through automation, improved monitoring, documentation updates, or architectural changes. Sharing post-mortem findings across the organization spreads knowledge and improves overall system reliability.

Frequently Asked Questions

What is the minimum number of nodes required for a production cluster?

A production-ready deployment should include at least three control plane nodes for high availability and a minimum of three worker nodes to ensure workload redundancy. This configuration provides resilience against individual node failures while supporting basic availability requirements. Larger deployments require additional nodes based on workload demands and availability requirements.
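The three-node recommendation follows from majority quorum: the control plane's datastore (etcd in a typical Kubernetes deployment) stays writable only while more than half of its members are reachable. The arithmetic can be sketched as follows; the function names are illustrative.

```python
def quorum(members: int) -> int:
    """Smallest number of members that forms a majority."""
    return members // 2 + 1


def failures_tolerated(members: int) -> int:
    """How many members can fail while a majority remains."""
    return members - quorum(members)


if __name__ == "__main__":
    for size in (1, 2, 3, 4, 5):
        print(size, quorum(size), failures_tolerated(size))
```

One or two members tolerate no failures, three tolerate one, and four still tolerate only one. This is why odd member counts are preferred and why three is the practical minimum for high availability.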

How often should production clusters be upgraded?

Organizations should plan to upgrade production environments at least quarterly to maintain security posture and access to important bug fixes. Critical security patches may require more immediate application. However, upgrade frequency should balance currency against stability, with thorough testing in non-production environments before production upgrades.

What is the difference between managed and self-hosted deployments?

Managed services from cloud providers handle control plane operations, upgrades, and infrastructure management, reducing operational burden but potentially increasing costs and creating vendor dependencies. Self-hosted deployments provide maximum flexibility and control but require dedicated expertise for installation, maintenance, and troubleshooting. The choice depends on organizational capabilities, requirements, and resources.

How can I reduce infrastructure costs without compromising reliability?

Cost optimization strategies include right-sizing resource requests based on actual consumption, implementing cluster autoscaling to match capacity to demand, using spot instances for fault-tolerant workloads, and establishing resource quotas to prevent waste. Regular utilization reviews identify optimization opportunities while monitoring ensures that cost reductions don't impact performance or availability.
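Right-sizing can be approximated by taking a high percentile of observed usage and adding headroom. The following is a rough sketch, assuming CPU usage samples in millicores have already been exported from your metrics system; the 95th percentile and 15% headroom are illustrative defaults rather than recommendations, and the function names are hypothetical.

```python
from typing import Iterable


def ceil_div(a: int, b: int) -> int:
    """Integer division rounded up, avoiding floating-point error."""
    return -(-a // b)


def recommend_request(usage_millicores: Iterable[int],
                      percentile_pct: int = 95,
                      headroom_pct: int = 15) -> int:
    """Suggest a CPU request in millicores.

    Uses the nearest-rank percentile of observed usage, then adds
    headroom_pct percent on top, rounding up.
    """
    ordered = sorted(usage_millicores)
    if not ordered:
        raise ValueError("no usage samples")
    # Nearest rank: smallest value with at least percentile_pct of samples at or below it.
    rank = max(1, ceil_div(percentile_pct * len(ordered), 100))
    observed = ordered[rank - 1]
    return ceil_div(observed * (100 + headroom_pct), 100)


if __name__ == "__main__":
    # Hypothetical samples: 10m, 20m, ..., 200m.
    print(recommend_request(range(10, 210, 10)))
```

Using a percentile rather than the maximum keeps one-off spikes from inflating the request, while the headroom absorbs normal variation.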

What monitoring tools are essential for production environments?

Essential monitoring includes metrics collection through Prometheus, visualization with Grafana, centralized logging via ELK Stack or similar solutions, distributed tracing for microservices, and alerting systems that notify teams of issues. Additionally, implementing health checks, resource monitoring, and security scanning provides comprehensive observability across your environment.

How do I ensure data persistence and backup in containerized environments?

Data persistence requires properly configured persistent volumes backed by reliable storage systems. Implement automated snapshot schedules for volume backups, maintain cluster-level backups of configurations and resources, and regularly test recovery procedures. Retention policies should balance recovery needs against storage costs while meeting compliance requirements.
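A retention policy can be expressed as a small pruning rule: delete snapshots older than a cutoff, but always keep a minimum number of the newest ones so that a stalled backup job never leaves you with nothing to restore. This is a minimal sketch; the function name, the 30-day window, and the minimum of three are assumptions for illustration.

```python
from datetime import datetime, timedelta
from typing import Iterable, List


def snapshots_to_delete(taken_at: Iterable[datetime], now: datetime,
                        keep_days: int = 30, keep_min: int = 3) -> List[datetime]:
    """Return timestamps of snapshots older than keep_days,
    never including the keep_min most recent snapshots.
    """
    newest_first = sorted(taken_at, reverse=True)
    cutoff = now - timedelta(days=keep_days)
    protected = set(newest_first[:keep_min])
    return [t for t in newest_first if t < cutoff and t not in protected]


if __name__ == "__main__":
    # Hypothetical snapshot ages in days.
    now = datetime(2024, 6, 30)
    snapshots = [now - timedelta(days=d) for d in (1, 10, 40, 50, 60)]
    for stale in snapshots_to_delete(snapshots, now):
        print(stale.date())
```

The function only decides what is eligible for deletion. Actually removing snapshots depends on your storage system and is deliberately left out.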

What security measures are critical for production deployments?

Critical security measures include implementing RBAC for access control, deploying network policies for microsegmentation, scanning container images for vulnerabilities, using secrets management solutions for sensitive data, enabling audit logging, and implementing pod security standards. Regular security assessments and penetration testing validate the effectiveness of security controls.

How should I handle multi-tenancy in production clusters?

Multi-tenancy requires strong isolation through namespaces, resource quotas, network policies, and RBAC. Consider separate clusters for tenants with strict isolation requirements or significantly different security profiles. Implement monitoring and alerting per tenant to track resource consumption and identify issues. Policy enforcement ensures tenants cannot interfere with each other's workloads.
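Per-tenant resource quotas are usually declared as a ResourceQuota object in each tenant namespace. The sketch below builds such a manifest as a Python dictionary and prints it as JSON, which can be applied in the same way as YAML. The helper name, the "team-a" namespace, and the limits are hypothetical values for illustration.

```python
import json


def resource_quota(namespace: str, cpu: str, memory: str, pods: int) -> dict:
    """Build a ResourceQuota manifest capping total requests and pod count
    for a single namespace.
    """
    return {
        "apiVersion": "v1",
        "kind": "ResourceQuota",
        "metadata": {"name": f"{namespace}-quota", "namespace": namespace},
        "spec": {
            "hard": {
                "requests.cpu": cpu,
                "requests.memory": memory,
                "pods": str(pods),
            }
        },
    }


if __name__ == "__main__":
    # "team-a" and these limits are placeholders, not recommendations.
    print(json.dumps(resource_quota("team-a", cpu="8", memory="16Gi", pods=40), indent=2))
```

Generating quotas from a single function keeps tenant limits consistent and makes them easy to review in version control.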