How to Use Helm Charts for Kubernetes Applications

Helm chart overview: Chart.yaml, values.yaml and templates produce Kubernetes manifests; helm install creates versioned releases to deploy, configure and update containerized apps.


Managing Kubernetes applications can quickly become overwhelming as your infrastructure grows. Every deployment requires multiple YAML files, configuration management across environments, and careful coordination of resources. When you multiply this complexity across dozens or hundreds of applications, the operational burden becomes unsustainable, leading to errors, inconsistencies, and countless hours spent on repetitive tasks.

Helm charts provide a packaging format that transforms how teams deploy and manage Kubernetes applications. Think of Helm as the package manager for Kubernetes—similar to how apt works for Ubuntu or brew for macOS—bundling all necessary resources into a single, versioned artifact. This approach brings templating, dependency management, and repeatable deployments to your container orchestration workflow.

Throughout this guide, you'll discover practical techniques for creating, customizing, and deploying Helm charts in production environments. We'll explore the architecture behind Helm, walk through real-world implementation scenarios, examine best practices for chart development, and address common challenges teams face when adopting this technology. Whether you're deploying a simple web application or orchestrating complex microservices architectures, you'll gain actionable knowledge to streamline your Kubernetes operations.

Understanding the Helm Architecture and Core Concepts

Helm operates as a client-side tool that interacts directly with the Kubernetes API server. Unlike its predecessor (Helm 2), which required a server-side component called Tiller, Helm 3 simplified the architecture by eliminating this dependency. This change improved security, reduced operational complexity, and aligned better with Kubernetes' native role-based access control mechanisms.

The fundamental building block of Helm is the chart—a collection of files that describe a related set of Kubernetes resources. Charts follow a specific directory structure that includes templates, default values, metadata, and optional dependencies. When you install a chart, Helm processes these templates, merges them with user-provided values, and submits the resulting manifests to Kubernetes.

A Helm release represents a specific installation of a chart in your cluster. You can install the same chart multiple times, creating separate releases with different configurations. Each release maintains its own history, allowing you to track changes, perform rollbacks, and manage the lifecycle independently. This separation enables powerful workflows like running multiple versions of an application simultaneously for testing or gradual migrations.

"The shift from manually managing YAML files to using Helm charts reduced our deployment time from hours to minutes while eliminating configuration drift across environments."

Helm repositories function as chart storage and distribution mechanisms. Public catalogs like Artifact Hub index thousands of community-maintained charts hosted in many different repositories, while private repositories let organizations share internal charts securely. The repository system supports versioning, dependency resolution, and authentication, creating an ecosystem similar to language-specific package managers.

Key Components That Power Helm Functionality

The Helm client binary provides the command-line interface for all operations. It reads chart files, renders templates using the Go templating engine, and communicates with Kubernetes to create or modify resources. The client stores release information as Kubernetes secrets or configmaps in the same namespace as the release, enabling multiple team members to manage releases without a centralized state server.

Chart templates leverage Go's text/template package with additional functions from the Sprig library. This combination provides powerful capabilities for conditional logic, iteration, string manipulation, and data transformation. Templates access values through a structured hierarchy, allowing chart authors to expose configuration options while maintaining sensible defaults.

| Component | Purpose | Key Features |
|---|---|---|
| Chart | Package format for Kubernetes resources | Templates, values, metadata, dependencies |
| Release | Instance of a chart running in cluster | Version history, rollback capability, lifecycle management |
| Repository | Storage and distribution for charts | Versioning, indexing, authentication |
| Values | Configuration parameters for charts | Hierarchical structure, environment-specific overrides |
| Templates | Kubernetes manifest generators | Go templating, conditional logic, helper functions |

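The values system implements a multi-layered configuration approach. Charts include a values.yaml file with defaults, which users can override with separate values files (-f or --values) and with individual --set flags on the command line. Helm does not read values from environment variables directly; to use one, expand it in the shell, for example --set image.tag=$TAG. Helm merges these sources in a predictable order: chart defaults first, then values files in the order given, then --set overrides. This gives users fine-grained control over configuration while keeping charts portable across environments.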
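A minimal sketch of that layering, assuming a hypothetical values-prod.yaml passed with -f and hypothetical podAnnotations and extraArgs keys. Maps are merged key by key, scalars and lists from the later source replace the earlier value outright, and setting a key to null removes the default.

```yaml
# values.yaml (chart defaults)
replicaCount: 1
image:
  repository: nginx
  tag: "1.25"
podAnnotations:
  team: web
extraArgs:
  - --log-level=info
---
# values-prod.yaml (hypothetical override file passed with -f)
replicaCount: 3
image:
  tag: "1.26"
podAnnotations: null      # null removes the default key from the merged result
extraArgs:
  - --log-level=warn
---
# Effective values after merging (shown as comments):
# replicaCount: 3            scalar replaced
# image:
#   repository: nginx        kept: maps merge key by key
#   tag: "1.26"              overridden
# extraArgs:
#   - --log-level=warn       lists are replaced, not appended
# podAnnotations is gone entirely
```

After installing with helm install myrelease ./myapp -f values-prod.yaml, running helm get values myrelease --all prints the computed values, which is a quick way to confirm how the layers combined.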

Installing and Configuring Your Helm Environment

Getting started with Helm requires minimal setup. The single-binary architecture means installation involves downloading the appropriate executable for your operating system and adding it to your system path. Most package managers include Helm in their repositories, simplifying installation on Linux, macOS, and Windows systems.

For Linux systems, you can install Helm using the official installation script or through package managers. The script method installs the latest stable release and works across distributions. Debian and Ubuntu users can use apt after adding the community-maintained Helm package repository, and Fedora provides Helm in its official dnf repositories. macOS users typically install through Homebrew, which handles updates alongside other development tools.

```bash
# Using the official installation script
curl https://raw.githubusercontent.com/helm/helm/main/scripts/get-helm-3 | bash

# Using Homebrew on macOS
brew install helm

# Using Chocolatey on Windows
choco install kubernetes-helm
```

After installation, run helm version to confirm the client is installed; it prints the Helm client version. In Helm 3 this command does not contact the cluster, so confirm connectivity with a command that queries the API server, such as helm list. Helm uses the same kubeconfig file as kubectl, so if kubectl works, Helm should work without additional configuration.

Configuring Repository Access and Chart Discovery

Helm 3 ships with no repositories configured, so you must add one explicitly before installing charts from it. Adding a repository involves providing a name and URL, after which Helm can search and install charts from that source. For discovery, Artifact Hub aggregates charts from many publishers' repositories; it is a search catalog rather than a repository you add, and helm search hub queries it from the command line.

```bash
# Add the Bitnami repository
helm repo add bitnami https://charts.bitnami.com/bitnami

# Add the old stable repository (deprecated and archived; no longer updated)
helm repo add stable https://charts.helm.sh/stable

# Update repository indexes
helm repo update

# Search for charts
helm search repo nginx
```

"Proper repository management transforms Helm from a deployment tool into a complete application lifecycle platform, enabling teams to share and reuse solutions across projects."

For enterprise environments, setting up private chart repositories ensures security and control over internal applications. Several options exist, including ChartMuseum (a lightweight open-source solution), cloud provider services like AWS S3 or Google Cloud Storage, and integrated solutions within artifact managers like Artifactory or Nexus. Private repositories support authentication, access control, and audit logging.

Repository credentials can be stored in Helm's configuration or passed per-command. For automated systems, consider using service accounts or workload identity mechanisms rather than long-lived credentials. Many CI/CD platforms provide secure credential injection, allowing pipelines to authenticate to private repositories without exposing sensitive information in configuration files.

Essential Environment Configuration Options

Helm respects several environment variables that modify its behavior. HELM_CACHE_HOME determines where Helm stores cached chart archives, while HELM_CONFIG_HOME specifies the configuration directory. For multi-tenant systems or shared development machines, setting these variables prevents conflicts between users or projects.

The HELM_DRIVER variable controls how Helm stores release information. The default "secret" driver stores release data as Kubernetes secrets, while "configmap" uses configmaps instead. Both object types are capped at roughly 1 MiB, so switching to configmaps does not help with very large releases, and configmaps are usually less tightly restricted by RBAC than secrets. For releases that outgrow that limit, Helm also offers an "sql" driver backed by PostgreSQL.

- 🔧 KUBECONFIG - Specifies which Kubernetes cluster Helm connects to, supporting multi-cluster workflows

- 🔧 HELM_NAMESPACE - Sets the default namespace for operations, reducing repetitive flag usage

- 🔧 HELM_DEBUG - Enables detailed logging for troubleshooting template rendering and API interactions

- 🔧 HELM_MAX_HISTORY - Limits stored release revisions to prevent unbounded growth in large clusters

- 🔧 HELM_REPOSITORY_CONFIG - Points to the repository configuration file for custom setups

Namespace configuration deserves special attention when working with Helm. While you can specify namespaces per-command with the --namespace flag, establishing conventions early prevents mistakes. Some teams dedicate namespaces to environments (development, staging, production), while others organize by application or team. Helm honors Kubernetes namespace isolation, so RBAC policies can restrict which users can deploy to specific namespaces.

Creating Your First Helm Chart from Scratch

Building custom charts gives you complete control over application deployment while leveraging Helm's powerful features. The helm create command generates a starter chart with a conventional directory structure and example files. This scaffold provides a foundation you can modify to match your application's requirements.

```bash
# Create a new chart
helm create myapp
```

```text
# Resulting directory structure (abridged; the scaffold also includes files
# such as templates/NOTES.txt, hpa.yaml, serviceaccount.yaml and a tests/ directory)
myapp/
├── Chart.yaml          # Chart metadata
├── values.yaml         # Default configuration values
├── charts/             # Chart dependencies
├── templates/          # Kubernetes manifest templates
│   ├── deployment.yaml
│   ├── service.yaml
│   ├── ingress.yaml
│   └── _helpers.tpl    # Template helper functions
└── .helmignore         # Files to exclude from packaging
```

The Chart.yaml file contains metadata about your chart, including name, version, description, and maintainer information. The version field follows semantic versioning and should be incremented whenever the chart changes, while appVersion tracks the version of the application being deployed. Keeping the two version numbers separate lets you release chart improvements independently of application updates.
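For reference, a Chart.yaml along these lines might look like the sketch below. The description, version numbers, and maintainer entry are illustrative placeholders rather than required values; apiVersion v2 is the format Helm 3 charts use.

```yaml
# Chart.yaml (illustrative values)
apiVersion: v2
name: myapp
description: A Helm chart for deploying myapp on Kubernetes
type: application
# Chart version (SemVer 2): bump it on every change to the chart itself
version: 0.1.0
# Version of the application being deployed; quoted so YAML keeps it a string
appVersion: "1.16.0"
maintainers:
  - name: Platform Team          # hypothetical maintainer
    email: platform@example.com
```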

Structuring Templates for Flexibility and Reusability

Templates transform static YAML files into dynamic, configurable manifests. The templating syntax uses double curly braces to inject values, call functions, and implement logic. The .Values object provides access to configuration parameters, while .Release contains information about the current release, and .Chart exposes chart metadata.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: {{ include "myapp.fullname" . }}
  labels:
    {{- include "myapp.labels" . | nindent 4 }}
spec:
  replicas: {{ .Values.replicaCount }}
  selector:
    matchLabels:
      {{- include "myapp.selectorLabels" . | nindent 6 }}
  template:
    metadata:
      labels:
        {{- include "myapp.selectorLabels" . | nindent 8 }}
    spec:
      containers:
        - name: {{ .Chart.Name }}
          image: "{{ .Values.image.repository }}:{{ .Values.image.tag | default .Chart.AppVersion }}"
          imagePullPolicy: {{ .Values.image.pullPolicy }}
          ports:
            - containerPort: {{ .Values.service.port }}
```

"Template helpers dramatically improve maintainability by centralizing common patterns, reducing duplication, and making charts easier to understand and modify."

The _helpers.tpl file defines named templates that encapsulate reusable logic. Common helpers generate consistent labels, construct resource names, and handle conditional configurations. Using helpers instead of duplicating template code across files makes charts more maintainable and reduces the risk of inconsistencies.
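Templates throughout this guide call the myapp.fullname and myapp.name helpers. A sketch of how they might be defined in _helpers.tpl, modeled on the scaffold that helm create generates; nameOverride and fullnameOverride are the scaffold's conventional values keys and are assumed to be optional here.

```yaml
{{/*
Expand the name of the chart, allowing an override from values.
*/}}
{{- define "myapp.name" -}}
{{- default .Chart.Name .Values.nameOverride | trunc 63 | trimSuffix "-" }}
{{- end }}

{{/*
Create a fully qualified app name. Truncated to 63 characters because
some Kubernetes name fields are limited to that length.
*/}}
{{- define "myapp.fullname" -}}
{{- if .Values.fullnameOverride }}
{{- .Values.fullnameOverride | trunc 63 | trimSuffix "-" }}
{{- else }}
{{- $name := default .Chart.Name .Values.nameOverride }}
{{- if contains $name .Release.Name }}
{{- .Release.Name | trunc 63 | trimSuffix "-" }}
{{- else }}
{{- printf "%s-%s" .Release.Name $name | trunc 63 | trimSuffix "-" }}
{{- end }}
{{- end }}
{{- end }}
```

With a release named myrelease, the fullname renders as myrelease-myapp; if the release name already contains the chart name (for example myapp-prod), it is used as-is to avoid names like myapp-prod-myapp.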

Conditional logic in templates enables environment-specific configurations. The if statement evaluates expressions and includes content only when conditions are met. This capability allows a single chart to support multiple deployment scenarios, such as enabling ingress only in production or configuring different resource limits based on environment.

```yaml
{{- if .Values.ingress.enabled }}
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: {{ include "myapp.fullname" . }}
  annotations:
    {{- range $key, $value := .Values.ingress.annotations }}
    {{ $key }}: {{ $value | quote }}
    {{- end }}
spec:
  rules:
    {{- range .Values.ingress.hosts }}
    - host: {{ .host | quote }}
      http:
        paths:
          {{- range .paths }}
          - path: {{ .path }}
            pathType: {{ .pathType }}
            backend:
              service:
                name: {{ include "myapp.fullname" $ }}
                port:
                  number: {{ $.Values.service.port }}
          {{- end }}
    {{- end }}
{{- end }}
```

Defining Comprehensive Values for Configuration Management

The values.yaml file establishes default configuration for your chart. Well-structured values use hierarchical organization to group related settings, making the configuration intuitive and discoverable. Comments explaining each value's purpose and acceptable options help users customize the chart without reading template code.

| Configuration Category | Common Values | Purpose |
|---|---|---|
| Image Settings | repository, tag, pullPolicy, pullSecrets | Control container image source and update behavior |
| Replica Configuration | replicaCount, autoscaling parameters | Manage application scaling and availability |
| Service Definition | type, port, targetPort, annotations | Configure network access to application |
| Resource Limits | requests, limits for CPU and memory | Ensure proper resource allocation and prevent overconsumption |
| Security Context | runAsUser, fsGroup, capabilities | Apply security policies and privilege restrictions |
| Ingress Settings | enabled, hosts, tls, annotations | Configure external access and routing rules |

Validation within templates prevents invalid configurations from reaching Kubernetes. The required function enforces mandatory values, failing chart installation if critical parameters are missing. The fail function enables custom validation logic, checking value ranges, format compliance, or logical consistency between related settings.
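A sketch of both functions in use. The first excerpt makes image.repository mandatory inside the deployment template; the second is a hypothetical cross-field check that could sit at the top of the scaffold's templates/hpa.yaml, using the autoscaling keys from the values example in this section.

```yaml
# templates/deployment.yaml (container excerpt): required aborts rendering with the message
          image: "{{ required "image.repository is required" .Values.image.repository }}:{{ .Values.image.tag | default .Chart.AppVersion }}"

# templates/hpa.yaml (top of file): fail enforces a relationship between two values
{{- if and .Values.autoscaling.enabled (gt (.Values.autoscaling.minReplicas | int) (.Values.autoscaling.maxReplicas | int)) }}
{{- fail "autoscaling.minReplicas must not be greater than autoscaling.maxReplicas" }}
{{- end }}
```

Both checks run during rendering, so helm template, helm lint, and helm install all surface the message before anything reaches the cluster.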

```yaml
# Example values.yaml with documentation
replicaCount: 1

image:
  # Container image repository
  repository: nginx
  # Image pull policy (Always, IfNotPresent, Never)
  pullPolicy: IfNotPresent
  # Overrides the image tag (defaults to chart appVersion)
  tag: ""

service:
  # Service type (ClusterIP, NodePort, LoadBalancer)
  type: ClusterIP
  # Service port
  port: 80

resources:
  # Resource limits prevent overconsumption
  limits:
    cpu: 100m
    memory: 128Mi
  # Resource requests ensure minimum allocation
  requests:
    cpu: 100m
    memory: 128Mi

autoscaling:
  enabled: false
  minReplicas: 1
  maxReplicas: 10
  targetCPUUtilizationPercentage: 80
```

Testing your chart during development catches issues before deployment. The helm template command renders templates locally without connecting to Kubernetes, allowing quick iteration. The helm lint command validates chart structure and identifies common problems. For comprehensive testing, helm install --dry-run --debug renders templates and shows what would be sent to Kubernetes without actually creating resources.

Deploying Applications with Helm Releases

Installing a chart creates a release in your Kubernetes cluster. The helm install command requires a release name and chart reference, with optional values overrides to customize the deployment. Helm processes templates, merges configurations, and submits resulting manifests to Kubernetes in a coordinated sequence.

```bash
# Install a chart from a repository
helm install myrelease bitnami/nginx

# Install with custom values
helm install myrelease bitnami/nginx \
  --set replicaCount=3 \
  --set service.type=LoadBalancer

# Install with a values file
helm install myrelease bitnami/nginx \
  --values production-values.yaml \
  --namespace production \
  --create-namespace

# Install from a local chart directory
helm install myrelease ./myapp

# Install with a generated release name (pass only the chart)
helm install ./myapp --generate-name
```

The --set flag provides a quick way to override individual values from the command line. For complex configurations, values files offer better organization and version control. You can specify multiple values files, with later files taking precedence, enabling a base configuration with environment-specific overlays; values passed with --set override anything set in the files.
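It helps to read --set paths as a shorthand for a values file: dots create nested keys and [n] indexes into a list. The second mapping below targets the myapp chart built earlier, whose ingress template reads ingress.hosts; chart-example.local is a placeholder host.

```yaml
# --set replicaCount=3 --set service.type=LoadBalancer
# is equivalent to passing a values file containing:
replicaCount: 3
service:
  type: LoadBalancer
---
# --set ingress.enabled=true --set ingress.hosts[0].host=chart-example.local
# corresponds to:
ingress:
  enabled: true
  hosts:
    - host: chart-example.local
```

Note that --set infers types, so 3 becomes an integer and true a boolean; use --set-string when a value such as a numeric-looking tag must stay a string.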

"Release management through Helm transformed our deployment process from error-prone manual steps to reliable, repeatable operations that anyone on the team can execute confidently."

Managing Release Lifecycle and Updates

Upgrading releases applies changes to running applications while maintaining continuity. The helm upgrade command accepts the same value override options as install, computing differences between the current and desired state. Helm creates a new revision for each upgrade, enabling rollback if problems occur.

```bash
# Upgrade a release with new values
helm upgrade myrelease bitnami/nginx \
  --set image.tag=1.21.0 \
  --reuse-values

# Upgrade or install (useful in automation)
helm upgrade --install myrelease bitnami/nginx \
  --values production-values.yaml

# Upgrade with wait for readiness
helm upgrade myrelease bitnami/nginx \
  --wait \
  --timeout 5m

# Force resource updates through a replacement strategy (recreates resources; use with care)
helm upgrade myrelease bitnami/nginx \
  --force
```

The --reuse-values flag carries over the values from the previous release and merges in only the changes you specify. Without it, once you pass any --set or --values overrides, Helm starts from the chart's defaults and applies only the values given in that command; if you pass no overrides at all, Helm reuses the previous release's values. Understanding this behavior prevents accidental configuration loss during upgrades.
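To make the difference concrete, here is a sketch of two revisions of the local myapp chart, shown as the user-supplied values Helm stores for the second revision (roughly what helm get values myrelease reports). The commands in the comments are illustrative.

```yaml
# Revision 1: helm install myrelease ./myapp --set replicaCount=3
# Revision 2: helm upgrade myrelease ./myapp --set image.tag=1.21.0 --reuse-values
# User-supplied values stored for revision 2:
replicaCount: 3
image:
  tag: 1.21.0
# Without --reuse-values, revision 2 would store only image.tag,
# and replicaCount would fall back to the chart default of 1.
```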

Rollback functionality provides a safety net when upgrades cause issues. Each release revision stores the complete configuration and manifest set, allowing instant reversion to any previous state. The helm rollback command specifies the release name and optionally a revision number, defaulting to the previous revision if omitted.

- ✅ helm list - Shows all releases in the current namespace with status and revision information

- ✅ helm status - Displays detailed information about a specific release including resource status

- ✅ helm history - Lists all revisions for a release with timestamps and descriptions

- ✅ helm get values - Retrieves the values used for a specific release revision

- ✅ helm get manifest - Shows the Kubernetes manifests generated for a release

Advanced Deployment Strategies and Techniques

The --wait flag makes Helm monitor resource readiness before returning, ensuring the deployment completes successfully. This behavior proves valuable in CI/CD pipelines where subsequent steps depend on application availability. The --timeout parameter sets a maximum wait duration, preventing indefinite hangs when issues occur.

Atomic deployments combine deployment and automatic recovery on failure. With --atomic, a failed upgrade is rolled back to the previous revision and a failed install is uninstalled; --wait is enabled automatically, so the operation counts as failed if resources are not ready within the timeout. This approach maintains cluster stability in automated environments by preventing partial deployments from remaining in a broken state.

```bash
# Atomic deployment with automatic rollback
helm upgrade myrelease bitnami/nginx \
  --atomic \
  --timeout 10m \
  --values production-values.yaml

# Deployment with hooks and wait
helm install myrelease ./myapp \
  --wait \
  --wait-for-jobs \
  --timeout 15m

# Test release after installation
helm test myrelease
```

Helm hooks enable executing actions at specific points in the release lifecycle. Common hook types include pre-install, post-install, pre-upgrade, and post-upgrade. Hooks typically run as Kubernetes jobs or pods, performing tasks like database migrations, cache warming, or validation checks. The hook weight annotation controls execution order when multiple hooks exist for the same lifecycle event.
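A hook is an ordinary template carrying Helm's hook annotations. The sketch below runs a migration Job before installs and upgrades; the /app/migrate entrypoint is hypothetical, and reusing the application image is an assumption about how migrations are packaged.

```yaml
# templates/db-migrate-job.yaml
apiVersion: batch/v1
kind: Job
metadata:
  name: {{ include "myapp.fullname" . }}-db-migrate
  annotations:
    "helm.sh/hook": pre-install,pre-upgrade
    # Lower weights run first when several hooks share an event
    "helm.sh/hook-weight": "-5"
    # Delete the previous Job before creating a new one, and clean up on success
    "helm.sh/hook-delete-policy": before-hook-creation,hook-succeeded
spec:
  backoffLimit: 1
  template:
    spec:
      restartPolicy: Never
      containers:
        - name: migrate
          image: "{{ .Values.image.repository }}:{{ .Values.image.tag | default .Chart.AppVersion }}"
          command: ["/app/migrate"]   # hypothetical migration entrypoint
```

Because pre-install hooks run before the chart's regular resources exist, the Job should not depend on ConfigMaps or Secrets that the same chart creates as non-hook resources.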

"Implementing pre-upgrade hooks for database migrations eliminated deployment failures caused by schema incompatibilities, reducing our incident rate by over 60 percent."

Cleanup strategies determine how Helm handles resources during uninstallation. By default, Helm deletes all resources it created, but you can configure resources to persist using the helm.sh/resource-policy: keep annotation. This capability protects critical resources like persistent volumes or secrets that should survive application removal.
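For example, a PersistentVolumeClaim can opt out of deletion with the annotation below. The claim name suffix and storage size are illustrative.

```yaml
# templates/pvc.yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: {{ include "myapp.fullname" . }}-data
  annotations:
    # Helm skips deleting this resource on uninstall, upgrade, or rollback
    "helm.sh/resource-policy": keep
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 10Gi
```

The kept resource becomes orphaned: Helm no longer tracks it after uninstall, so remove it with kubectl once the data is no longer needed.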

Working with Chart Dependencies and Complex Applications

Real-world applications often require multiple components working together. Helm dependencies allow charts to declare relationships with other charts, automatically managing installation and configuration of required services. A web application chart might depend on database and cache charts, with Helm coordinating the complete stack deployment.

Dependencies are declared in Chart.yaml using the dependencies section. Each dependency specifies a chart name, version, and repository. The helm dependency update command downloads dependency charts into the charts/ directory, where Helm includes them during installation. Version constraints support semantic versioning ranges, allowing flexible compatibility specifications.

```yaml
# Chart.yaml with dependencies
apiVersion: v2
name: myapp
version: 1.0.0
appVersion: "2.0"
dependencies:
  - name: postgresql
    version: "11.x.x"
    repository: https://charts.bitnami.com/bitnami
    condition: postgresql.enabled
  - name: redis
    version: "^16.0.0"
    repository: https://charts.bitnami.com/bitnami
    condition: redis.enabled
    tags:
      - cache
```

Configuring and Customizing Dependency Behavior

Conditions and tags provide control over which dependencies get installed. Conditions reference values that enable or disable specific dependencies, while tags group related dependencies for collective management. This flexibility supports different deployment scenarios, such as using an external database in production while including a database chart for development environments.

```yaml
# values.yaml with dependency configuration
postgresql:
  enabled: true
  auth:
    username: myapp
    password: changeme   # placeholder only; supply real credentials at deploy time
    database: myappdb
  primary:
    persistence:
      size: 10Gi

redis:
  enabled: true
  auth:
    enabled: true
    password: changeme   # placeholder only; supply real credentials at deploy time
  master:
    persistence:
      size: 5Gi
```

Dependency values get namespaced under the dependency name in your chart's values. This namespacing prevents conflicts and maintains clear configuration boundaries. You can pass configuration to dependencies through your values file, allowing users to customize the entire stack through a single configuration interface.
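To see namespacing and the condition and tag rules together, consider a hypothetical production override file layered on the dependency values above.

```yaml
# values-prod.yaml (hypothetical production overrides)
postgresql:
  enabled: false      # condition postgresql.enabled is false: the bundled PostgreSQL is skipped
redis:
  master:
    persistence:
      size: 20Gi      # namespaced under "redis", so only the redis subchart sees it
tags:
  cache: false        # ignored for redis: redis.enabled is still true from values.yaml,
                      # and a condition that is set in values always overrides tags
```

To let the cache tag control Redis, remove redis.enabled from the values so that the condition path no longer resolves.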

Aliases enable using the same chart multiple times with different configurations. When you need multiple instances of a dependency—such as separate databases for different application components—aliases provide unique names while referencing the same chart. Each aliased dependency gets its own configuration section in values.

```yaml
# Multiple instances using aliases
dependencies:
  - name: postgresql
    version: "11.x.x"
    repository: https://charts.bitnami.com/bitnami
    alias: primarydb
  - name: postgresql
    version: "11.x.x"
    repository: https://charts.bitnami.com/bitnami
    alias: analyticsdb
```

```yaml
# Corresponding values
primarydb:
  auth:
    database: mainapp
  primary:
    persistence:
      size: 20Gi

analyticsdb:
  auth:
    database: analytics
  primary:
    persistence:
      size: 50Gi
```

Managing Subcharts and Parent-Child Relationships

Subcharts can access values from parent charts through the global section. Global values provide a mechanism for sharing configuration across the entire chart hierarchy, useful for common settings like image registries, environment identifiers, or shared credentials. Subcharts can also export values to parent charts, enabling dynamic configuration based on subchart behavior.
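A sketch of global values flowing into a subchart. The registry host, the environment key, and the myservice subchart are hypothetical; the point is that anything under global in the parent is visible to every subchart as .Values.global.

```yaml
# Parent chart values.yaml
global:
  imageRegistry: registry.example.com   # hypothetical private registry
  environment: production
---
# charts/myservice/templates/configmap.yaml (hypothetical subchart)
apiVersion: v1
kind: ConfigMap
metadata:
  name: {{ .Release.Name }}-myservice-settings
data:
  IMAGE_REGISTRY: {{ .Values.global.imageRegistry | quote }}
  ENVIRONMENT: {{ .Values.global.environment | quote }}
```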

"Chart dependencies transformed our microservices deployment from managing 30 separate Helm releases to a single cohesive application chart, dramatically simplifying operations and reducing deployment time."

The import-values field enables pulling specific values from subcharts into the parent chart's values. This capability supports advanced scenarios where parent charts need to react to subchart configuration or expose selected subchart settings to users. Import specifications can rename values during import, maintaining clean value hierarchies.
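Both import forms are sketched below for a hypothetical metrics subchart vendored in charts/metrics (so no repository field is needed). The string form imports a map the subchart publishes under exports; the child/parent form copies an arbitrary subchart value path to a renamed key in the parent.

```yaml
# charts/metrics/values.yaml (hypothetical subchart)
exports:
  data:
    monitoring:
      port: 9090
      path: /metrics
service:
  port: 9090
---
# Parent Chart.yaml
dependencies:
  - name: metrics
    version: "1.0.0"
    import-values:
      # Exports form: the contents of exports.data are merged into the parent's
      # top-level values, so the parent sees .Values.monitoring.port
      - data
      # Child/parent form: the subchart's .Values.service becomes .Values.metricsService
      - child: service
        parent: metricsService
```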

Dependency management commands help maintain chart consistency. The helm dependency list command shows all declared dependencies with their status, indicating whether they're present in the charts/ directory. The helm dependency update command resolves the newest versions matching the constraints in Chart.yaml, downloads them into charts/, and records the exact versions in Chart.lock, while helm dependency build rebuilds charts/ from the versions already pinned in Chart.lock, which keeps repeated builds reproducible.

Implementing Advanced Templating Techniques

Mastering Helm's templating capabilities unlocks sophisticated configuration patterns and reusable components. Beyond basic value substitution, templates support complex logic, data manipulation, and dynamic resource generation. These advanced techniques enable creating highly flexible charts that adapt to diverse deployment requirements without sacrificing maintainability.

The range action iterates over lists and maps, generating repeated template sections. This capability proves invaluable for creating multiple similar resources or processing collections of configuration data. Within a range block, the dot refers to the current element, so use $ to reach the root context, for example $.Values or include "myapp.fullname" $.

```yaml
{{- range .Values.extraConfigMaps }}
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: {{ include "myapp.fullname" $ }}-{{ .name }}
data:
  {{- range $key, $value := .data }}
  {{ $key }}: {{ $value | quote }}
  {{- end }}
{{- end }}
```

```yaml
# Corresponding values
extraConfigMaps:
  - name: app-config
    data:
      APP_ENV: production
      LOG_LEVEL: info
  - name: feature-flags
    data:
      FEATURE_X: "true"
      FEATURE_Y: "false"
```

Leveraging Template Functions and Pipelines

Template functions transform data during rendering. Helm includes dozens of functions for string manipulation, type conversion, encoding, cryptographic operations, and more. Functions chain together using the pipe operator, creating readable data transformation pipelines. The Sprig library provides additional functions beyond Go's standard template functions.

- 🔄 default - Provides fallback values when variables are undefined or empty

- 🔄 quote - Wraps strings in double quotes for YAML compatibility

- 🔄 nindent - Adds newline and indentation for proper YAML formatting

- 🔄 toYaml - Converts complex structures to YAML format

- 🔄 include - Renders named templates and allows piping their output
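Several of these functions usually appear together in a pipeline. In the sketch below, logLevel is a hypothetical values key: default supplies a fallback and quote keeps the result a YAML string, while with skips the block when no resources are set, toYaml serializes the map, and nindent 12 starts it on a new line at the indentation the container spec needs.

```yaml
# templates/deployment.yaml (container excerpt)
          env:
            - name: LOG_LEVEL
              value: {{ .Values.logLevel | default "info" | quote }}
          {{- with .Values.resources }}
          resources:
            {{- toYaml . | nindent 12 }}
          {{- end }}
```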

The with action modifies template scope, simplifying access to nested values. Within a with block, the dot represents the specified value rather than the root context. This scoping reduces repetitive path traversal and improves template readability, especially when working with deeply nested configuration structures.

```yaml
{{- with .Values.securityContext }}
{{- /* Container-level securityContext: fsGroup is a pod-level field and belongs in the pod's securityContext */}}
securityContext:
  runAsNonRoot: {{ .runAsNonRoot }}
  runAsUser: {{ .runAsUser }}
  {{- if .capabilities }}
  capabilities:
    drop:
      {{- range .capabilities.drop }}
      - {{ . }}
      {{- end }}
  {{- end }}
{{- end }}
```

Creating Reusable Template Helpers and Functions

Named templates defined in _helpers.tpl encapsulate reusable logic. The define action creates a named template, while include renders it at the call site. Unlike the template action, include allows piping the output through additional functions, enabling flexible composition and formatting.

```
{{/*
Generate standard labels
*/}}
{{- define "myapp.labels" -}}
helm.sh/chart: {{ include "myapp.chart" . }}
{{ include "myapp.selectorLabels" . }}
{{- if .Chart.AppVersion }}
app.kubernetes.io/version: {{ .Chart.AppVersion | quote }}
{{- end }}
app.kubernetes.io/managed-by: {{ .Release.Service }}
{{- end }}

{{/*
Selector labels
*/}}
{{- define "myapp.selectorLabels" -}}
app.kubernetes.io/name: {{ include "myapp.name" . }}
app.kubernetes.io/instance: {{ .Release.Name }}
{{- end }}

{{/*
Create chart name and version
*/}}
{{- define "myapp.chart" -}}
{{- printf "%s-%s" .Chart.Name .Chart.Version | replace "+" "_" | trunc 63 | trimSuffix "-" }}
{{- end }}
```

The tpl function evaluates strings as templates, enabling dynamic template generation. This advanced technique supports scenarios where template logic itself needs to be configurable, such as allowing users to provide custom label patterns or annotation templates. The function requires careful use to avoid security issues from untrusted input.
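A sketch of user-configurable annotation templates, assuming a hypothetical extraAnnotations values key. Values files are not rendered as templates, so the strings stay literal until tpl evaluates them; passing $ as the context gives them access to .Release and .Values from inside the range loop.

```yaml
# values.yaml (hypothetical key): strings that are themselves templates
extraAnnotations:
  app.example.com/owner: "platform"
  app.example.com/release-ref: "{{ .Release.Name }}-{{ .Release.Namespace }}"
---
# templates/deployment.yaml (metadata excerpt)
metadata:
  name: {{ include "myapp.fullname" . }}
  annotations:
    {{- range $key, $value := .Values.extraAnnotations }}
    {{ $key }}: {{ tpl $value $ | quote }}
    {{- end }}
```

For a release named myrelease in the default namespace, the second annotation would render as "myrelease-default".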

Template debugging becomes crucial as complexity increases. The --debug flag shows rendered templates alongside error messages, helping identify issues. The fail function can add custom validation messages, while strategic use of comments and formatting makes templates more maintainable. Consider extracting complex logic into named templates with clear documentation.

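```
{{/*
Validate replica count
*/}}
{{- if lt (.Values.replicaCount | int) 1 }}
{{- fail "replicaCount must be at least 1" }}
{{- end }}

{{/*
Validate memory limits against requests.
Quantities are normalized to Mi; only integer values with Mi or Gi suffixes are handled.
*/}}
{{- $resources := .Values.resources | default (dict) }}
{{- $limits := $resources.limits | default (dict) }}
{{- $requests := $resources.requests | default (dict) }}
{{- if and $limits.memory $requests.memory }}
{{- $lim := toString $limits.memory }}
{{- $req := toString $requests.memory }}
{{- $limMi := ternary (mul (trimSuffix "Gi" $lim | int) 1024) (trimSuffix "Mi" $lim | int) (hasSuffix "Gi" $lim) }}
{{- $reqMi := ternary (mul (trimSuffix "Gi" $req | int) 1024) (trimSuffix "Mi" $req | int) (hasSuffix "Gi" $req) }}
{{- if lt $limMi $reqMi }}
{{- fail "Memory limits must be greater than or equal to requests" }}
{{- end }}
{{- end }}
```

Securing Helm Deployments and Managing Secrets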

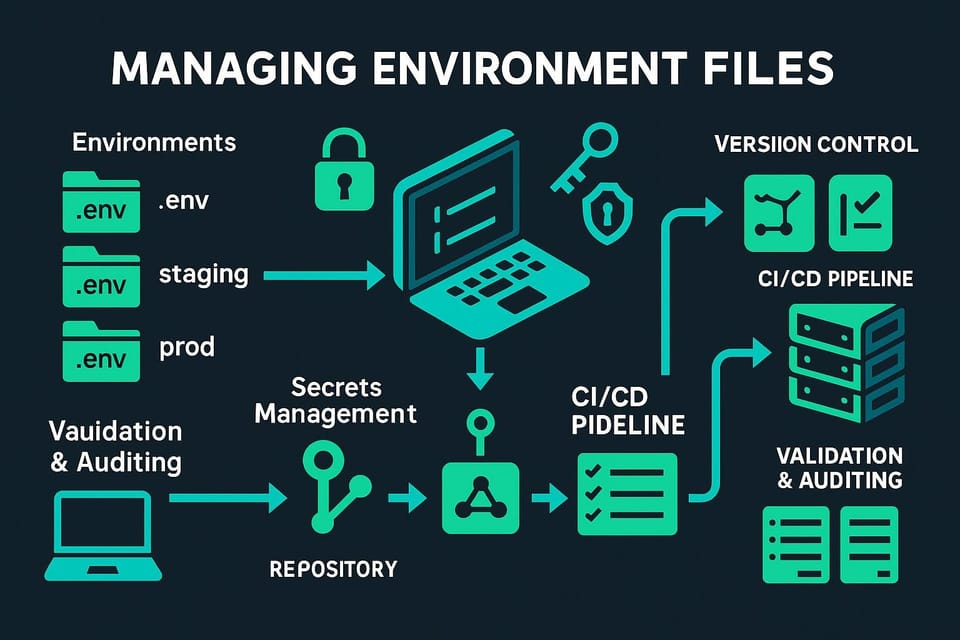

Security considerations permeate every aspect of Helm usage, from chart development through production deployment. Kubernetes secrets provide the foundation for sensitive data management, but Helm's values system requires careful handling to prevent credential exposure. Several patterns and tools address these challenges while maintaining Helm's usability advantages.

Never commit sensitive values to version control in plain text. Values files containing passwords, API keys, or certificates should either use placeholders that get replaced during deployment or leverage external secret management systems. Many teams maintain separate values files for sensitive data, storing them in secure vaults and injecting them only during deployment.

Integrating External Secret Management Solutions

The Helm Secrets plugin extends Helm with transparent encryption for values files. Using Mozilla SOPS for encryption, the plugin supports various key management systems including AWS KMS, Google Cloud KMS, Azure Key Vault, and PGP. Encrypted values files can safely reside in version control, with decryption happening automatically during Helm operations.

```bash
# Install the Helm Secrets plugin
helm plugin install https://github.com/jkroepke/helm-secrets

# Create an encrypted values file
helm secrets enc values-prod.yaml

# Install using encrypted values
helm secrets install myrelease ./myapp \
  -f values-prod.yaml

# View decrypted values
helm secrets view values-prod.yaml
```

Secret operators such as External Secrets Operator and Sealed Secrets provide alternative approaches. External Secrets Operator synchronizes Kubernetes secrets from external stores such as cloud secret managers or HashiCorp Vault, while Sealed Secrets lets you commit encrypted SealedSecret resources that a controller in the cluster decrypts into regular secrets. In both cases, charts reference secrets by name instead of carrying credentials in values, and the operator handles creating and updating the actual secret.
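On the chart side, referencing an operator-managed secret can be as simple as the sketch below. The existingSecret key, the myapp-credentials name, and the password key inside the secret are assumptions; required makes the install fail fast if the name is missing.

```yaml
# values.yaml: name of a Secret created outside the chart (hypothetical key and name)
existingSecret: myapp-credentials
---
# templates/deployment.yaml (container excerpt)
          env:
            - name: DB_PASSWORD
              valueFrom:
                secretKeyRef:
                  name: {{ required "existingSecret must name a pre-created Secret" .Values.existingSecret }}
                  key: password
```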

"Adopting External Secrets Operator eliminated hardcoded credentials from our charts and values files, reducing security incidents while simplifying secret rotation across environments."

Implementing Least Privilege and Access Controls

Kubernetes RBAC determines what actions Helm can perform in your cluster. Create service accounts with minimal necessary permissions for automated Helm operations. Avoid using cluster-admin privileges in production; instead, grant specific permissions for the namespaces and resource types your charts manage. This principle limits the impact of compromised credentials or misconfigured deployments.
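Periodically reviewing the deployer's Role for wildcards helps keep least privilege from eroding over time. The sketch below is a hypothetical audit helper, not part of Helm or kubectl. It takes a Role's rules already parsed into Python lists and flags two things: wildcard entries, and the RBAC verbs (`escalate`, `bind`, `impersonate`) that allow privilege escalation.

```python
from typing import Any

# RBAC verbs that let a subject grant itself or others more access.
ESCALATION_VERBS = {"escalate", "bind", "impersonate"}


def audit_rules(rules: list[dict[str, Any]]) -> list[str]:
    """Return human-readable findings for rules that look broader than least privilege."""
    findings = []
    for index, rule in enumerate(rules):
        for field in ("apiGroups", "resources", "verbs"):
            if "*" in rule.get(field, []):
                findings.append(f"rule {index}: wildcard in {field}")
        risky = ESCALATION_VERBS.intersection(rule.get("verbs", []))
        if risky:
            findings.append(f"rule {index}: escalation verbs {sorted(risky)}")
    return findings


scoped = [{"apiGroups": ["apps"], "resources": ["deployments"], "verbs": ["get", "list", "patch"]}]
too_broad = [{"apiGroups": ["*"], "resources": ["*"], "verbs": ["*"]}]
print(audit_rules(scoped))
print(audit_rules(too_broad))
```

Applied to the rules in the Role manifest that follows, this check reports nothing, because every rule names explicit API groups, resources, and verbs.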

```yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: helm-deployer
  namespace: production
---
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: helm-deployer
  namespace: production
rules:
  - apiGroups: ["apps"]
    resources: ["deployments", "statefulsets", "daemonsets"]
    verbs: ["get", "list", "watch", "create", "update", "patch", "delete"]
  # Core resources; Helm 3 also keeps its release records as Secrets in this namespace
  - apiGroups: [""]
    resources: ["services", "configmaps", "secrets"]
    verbs: ["get", "list", "watch", "create", "update", "patch", "delete"]
  - apiGroups: ["networking.k8s.io"]
    resources: ["ingresses"]
    verbs: ["get", "list", "watch", "create", "update", "patch", "delete"]
  # Add rules for every other kind the chart renders (for example, poddisruptionbudgets in the policy group)
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: helm-deployer
  namespace: production
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: helm-deployer
subjects:
  - kind: ServiceAccount
    name: helm-deployer
    namespace: production
```

Chart signing and verification protect against tampering and confirm chart authenticity. Helm supports signing charts with PGP keys and verifying those signatures before installation. Organizations distributing internal charts should sign them so that unauthorized modifications are detected. The helm package --sign command writes a provenance (.prov) file next to the packaged chart, and helm install --verify checks that file before deployment.

Security contexts in chart templates enforce pod-level and container-level security policies. Configure non-root users, read-only root filesystems, dropped capabilities, and privilege escalation prevention. These settings follow defense-in-depth principles, limiting the impact of potential container breakouts or application vulnerabilities.

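```yaml
# Pod spec fragment (for example, spec.template.spec in a Deployment template)
securityContext:            # pod-level settings
  runAsNonRoot: true
  runAsUser: 1000
  fsGroup: 1000
  seccompProfile:
    type: RuntimeDefault
containers:
  - name: {{ .Chart.Name }}
    securityContext:        # container-level settings
      allowPrivilegeEscalation: false
      readOnlyRootFilesystem: true
      capabilities:
        drop:
          - ALL
    volumeMounts:
      # A read-only root filesystem needs a writable scratch volume for /tmp
      - name: tmp
        mountPath: /tmp
volumes:
  - name: tmp
    emptyDir: {}
```

Scanning Charts for Vulnerabilities and Compliance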

Static analysis tools identify security issues and policy violations in charts before deployment. Tools like Checkov, Kubesec, and Datree scan Kubernetes manifests for misconfigurations, missing security controls, and violations of organizational policies. Integrate these tools into CI/CD pipelines to catch issues early in the development cycle.
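To make the idea concrete, here is a deliberately tiny policy check in the spirit of those tools. It is a hypothetical helper, not how Checkov or Kubesec are implemented. It assumes a rendered pod spec (for example from `helm template` output) has already been parsed into Python dicts, and it tests only the hardening settings from the security context example above.

```python
from typing import Any


def check_pod_security(pod_spec: dict[str, Any]) -> list[str]:
    """List the hardening settings (from the example above) that each container is missing."""
    problems = []
    pod_ctx = pod_spec.get("securityContext") or {}
    for container in pod_spec.get("containers", []):
        name = container.get("name", "<unnamed>")
        ctx = container.get("securityContext") or {}
        # A container-level runAsNonRoot overrides the pod-level one.
        if not ctx.get("runAsNonRoot", pod_ctx.get("runAsNonRoot", False)):
            problems.append(f"{name}: runAsNonRoot is not true")
        # This check treats an unset allowPrivilegeEscalation as allowed.
        if ctx.get("allowPrivilegeEscalation", True):
            problems.append(f"{name}: allowPrivilegeEscalation is not false")
        if not ctx.get("readOnlyRootFilesystem", False):
            problems.append(f"{name}: readOnlyRootFilesystem is not true")
        if "ALL" not in (ctx.get("capabilities") or {}).get("drop", []):
            problems.append(f"{name}: capabilities do not drop ALL")
    return problems


hardened = {
    "securityContext": {"runAsNonRoot": True, "runAsUser": 1000},
    "containers": [{
        "name": "myapp",
        "securityContext": {
            "allowPrivilegeEscalation": False,
            "readOnlyRootFilesystem": True,
            "capabilities": {"drop": ["ALL"]},
        },
    }],
}
print(check_pod_security(hardened))
print(check_pod_security({"containers": [{"name": "legacy"}]}))
```

The hardened spec passes cleanly, while a container with no security context fails all four checks. Production scanners apply far larger rule sets, but the pattern is the same: render the chart, parse the manifests, and evaluate rules against them in CI.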

Container image scanning complements chart security by identifying vulnerabilities in application images. While Helm manages deployment configuration, the images themselves represent a significant attack surface. Tools like Trivy, Clair, or cloud provider scanning services analyze image layers for known vulnerabilities, outdated packages, and security issues.

Optimizing Helm for Production Environments

Production deployments demand reliability, performance, and operational excellence. Helm charts require careful tuning and additional considerations beyond basic functionality to meet production requirements. Resource management, monitoring integration, disaster recovery, and automation all play crucial roles in successful production Helm usage.

Resource requests and limits prevent resource contention and keep applications stable. Charts should include sensible defaults that work for typical deployments while allowing customization for specific requirements. The scheduler places pods only on nodes with enough unreserved capacity to cover their requests, so requests effectively reserve a minimum allocation. Limits cap consumption: a container that exceeds its CPU limit is throttled, while one that exceeds its memory limit is terminated (OOM-killed). Properly configured resources let the Kubernetes scheduler make good placement decisions and keep the cluster healthy.
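A limits-versus-requests check, like the memory validation template shown earlier, first has to convert Kubernetes quantity strings into numbers, because "1Gi" and "512Mi" cannot be compared as text. The following minimal Python sketch shows that conversion. The function names are hypothetical, and it handles only the plain suffix forms used in this guide, not every corner of the quantity grammar.

```python
from decimal import Decimal

# Suffixes from the Kubernetes quantity format: binary (Ki, Mi, ...) and decimal (m, k, M, ...).
SUFFIXES = {
    "Ki": Decimal(2) ** 10, "Mi": Decimal(2) ** 20, "Gi": Decimal(2) ** 30,
    "Ti": Decimal(2) ** 40, "Pi": Decimal(2) ** 50, "Ei": Decimal(2) ** 60,
    "m": Decimal("0.001"), "k": Decimal(10) ** 3, "M": Decimal(10) ** 6,
    "G": Decimal(10) ** 9, "T": Decimal(10) ** 12, "P": Decimal(10) ** 15, "E": Decimal(10) ** 18,
}


def parse_quantity(text: str) -> Decimal:
    """Convert a quantity such as "512Mi" or "250m" to bytes or cores."""
    text = str(text).strip()
    # Try two-letter suffixes first so "Mi" is not read as "M" followed by "i".
    for suffix in sorted(SUFFIXES, key=len, reverse=True):
        if text.endswith(suffix):
            return Decimal(text[: -len(suffix)]) * SUFFIXES[suffix]
    return Decimal(text)  # plain number, e.g. "2" cores or "1e3"


def check_resources(resources: dict) -> list[str]:
    """Report every limit that is lower than the matching request."""
    requests = resources.get("requests", {})
    limits = resources.get("limits", {})
    return [
        f"{name} limit {limit} is below request {requests[name]}"
        for name, limit in limits.items()
        if name in requests and parse_quantity(limit) < parse_quantity(requests[name])
    ]


print(check_resources({"requests": {"memory": "1Gi", "cpu": "250m"},
                       "limits": {"memory": "512Mi", "cpu": "500m"}}))
```

Using `Decimal` instead of floats keeps millicore values such as "250m" exact.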

```yaml
# values.yaml: defaults for typical deployments
resources:
  requests:
    memory: "256Mi"
    cpu: "250m"
  limits:
    memory: "512Mi"
    cpu: "500m"
```

```yaml
# values-prod.yaml: production values with increased resources
resources:
  requests:
    memory: "1Gi"
    cpu: "1000m"
  limits:
    memory: "2Gi"
    cpu: "2000m"
```

Implementing High Availability and Resilience

Pod disruption budgets maintain application availability during voluntary disruptions like node drains or cluster upgrades. Charts should include PDB templates that specify minimum available replicas or maximum unavailable replicas. This protection ensures Kubernetes respects availability requirements when performing maintenance operations.
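The arithmetic behind a PDB is simple. For an integer minAvailable, the number of pods that may be voluntarily evicted at a given moment is the number of healthy pods minus minAvailable, floored at zero. The hypothetical helper below works through that rule (percentage values, which Kubernetes also accepts, are left out). It also shows why the template that follows renders a PDB only when replicaCount is greater than 1.

```python
def allowed_disruptions(healthy_pods: int, min_available: int) -> int:
    """Voluntary evictions permitted right now by a PDB with an integer minAvailable."""
    return max(healthy_pods - min_available, 0)


for replicas in (1, 2, 3):
    # minAvailable defaults to 1 in the template that follows.
    print(f"replicas={replicas}: {allowed_disruptions(replicas, 1)} eviction(s) allowed")
```

With a single replica the result is 0, so a node drain would wait indefinitely for an eviction that can never be allowed. The template's replicaCount guard avoids exactly that case.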

```yaml
{{- /* Skip the PDB for single-replica releases: minAvailable 1 with one pod would block node drains */}}
{{- if gt (.Values.replicaCount | int) 1 }}
apiVersion: policy/v1
kind: PodDisruptionBudget
metadata:
  name: {{ include "myapp.fullname" . }}
spec:
  {{- /* Assumes values.yaml defines a podDisruptionBudget map (it may be empty) */}}
  minAvailable: {{ .Values.podDisruptionBudget.minAvailable | default 1 }}
  selector:
    matchLabels:
      {{- include "myapp.selectorLabels" . | nindent 6 }}
{{- end }}
```

Affinity and anti-affinity rules control pod placement across nodes and zones. Anti-affinity prevents multiple replicas from running on the same node, protecting against node failures. Topology spread constraints provide more flexible distribution controls, ensuring even spread across failure domains. These configurations improve resilience without requiring application changes.
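Topology spread constraints express "even" through a maxSkew field. Skew is the difference between the number of matching pods in a topology domain (such as a zone) and the smallest count in any eligible domain. The sketch below is a simplified, hypothetical model of how the scheduler applies a `whenUnsatisfiable: DoNotSchedule` constraint when placing one more replica. It ignores node filtering, minDomains, and the other inputs the real scheduler considers.

```python
def zones_for_next_replica(pods_per_zone: dict[str, int], max_skew: int = 1) -> list[str]:
    """Zones where adding one replica keeps the skew within max_skew (simplified model)."""
    global_min = min(pods_per_zone.values())
    return sorted(
        zone
        for zone, count in pods_per_zone.items()
        # Skew if the new pod lands here: this zone's count plus one, minus the global minimum.
        if count + 1 - global_min <= max_skew
    )


print(zones_for_next_replica({"zone-a": 2, "zone-b": 1, "zone-c": 1}))
```

With maxSkew 1, zone-a already holds one more replica than the others, so the next pod may only land in zone-b or zone-c.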

Health checks enable Kubernetes to detect and recover from application failures automatically. Liveness probes trigger container restarts when applications become unresponsive, while readiness probes remove unhealthy pods from service endpoints. Startup probes handle applications with slow initialization, preventing premature failure detection. Charts should include configurable probe definitions with reasonable defaults.
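For a startup probe, the time an application gets to finish starting is failureThreshold × periodSeconds, plus any initialDelaySeconds. Kubernetes enables the liveness and readiness probes only after the startup probe succeeds. The hypothetical helper below turns a measured worst-case startup time into a failureThreshold value for a chart's defaults.

```python
import math


def startup_failure_threshold(worst_case_startup_s: float, period_s: int = 10) -> int:
    """Smallest failureThreshold such that failureThreshold * period_s covers the slowest start."""
    if period_s <= 0:
        raise ValueError("periodSeconds must be positive")
    return max(1, math.ceil(worst_case_startup_s / period_s))


# An application that can take up to 5 minutes to start, probed every 10 seconds:
print(startup_failure_threshold(300, period_s=10))
```

For that application, a startupProbe with `periodSeconds: 10` and `failureThreshold: 30` allows the full five minutes. The liveness probe can then keep its short, aggressive defaults, because it does not start until startup has finished.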

```yaml
{{- /* Container-level fragment; assumes values.yaml defines livenessProbe and readinessProbe maps (they may be empty) */}}
livenessProbe:
  httpGet:
    path: {{ .Values.livenessProbe.path | default "/healthz" }}
    port: {{ .Values.service.port }}
  initialDelaySeconds: {{ .Values.livenessProbe.initialDelaySeconds | default 30 }}
  periodSeconds: {{ .Values.livenessProbe.periodSeconds | default 10 }}
  timeoutSeconds: {{ .Values.livenessProbe.timeoutSeconds | default 5 }}
  failureThreshold: {{ .Values.livenessProbe.failureThreshold | default 3 }}
readinessProbe:
  httpGet:
    path: {{ .Values.readinessProbe.path | default "/ready" }}
    port: {{ .Values.service.port }}
  initialDelaySeconds: {{ .Values.readinessProbe.initialDelaySeconds | default 10 }}
  periodSeconds: {{ .Values.readinessProbe.periodSeconds | default 5 }}
  timeoutSeconds: {{ .Values.readinessProbe.timeoutSeconds | default 3 }}
  failureThreshold: {{ .Values.readinessProbe.failureThreshold | default 3 }}
```

Integrating Observability and Monitoring

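Monitoring integration starts with consistent labels, which let monitoring systems discover workloads and scrape their metrics automatically. Charts should also include annotations that configure Prometheus scraping: whether to scrape, which port, and which metrics path. The prometheus.io/* annotations are a convention rather than a built-in Prometheus feature. They take effect only when the Prometheus scrape configuration contains relabeling rules that read them. Clusters running the Prometheus Operator use ServiceMonitor resources instead, which also carry settings such as the scrape interval. This declarative approach eliminates manual monitoring configuration for each deployment.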
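Where the prometheus.io/* convention is used, relabeling rules in the Prometheus configuration decide what gets scraped. The hypothetical function below models a common form of those rules for a single pod. It skips the pod unless `prometheus.io/scrape` is "true", takes the port and path from the annotations when they are non-empty, and defaults the path to /metrics. It illustrates the convention and is not Prometheus code.

```python
from typing import Optional


def scrape_url(pod_ip: str, annotations: dict[str, str]) -> Optional[str]:
    """URL a conventional prometheus.io/* relabeling setup would scrape for this pod, or None."""
    if annotations.get("prometheus.io/scrape") != "true":
        return None
    # Empty annotation values (e.g. an unset .Values.metrics.path) fall back to the defaults.
    port = annotations.get("prometheus.io/port") or None
    path = annotations.get("prometheus.io/path") or "/metrics"
    address = f"{pod_ip}:{port}" if port else pod_ip
    return f"http://{address}{path}"


print(scrape_url("10.0.0.5", {"prometheus.io/scrape": "true",
                              "prometheus.io/port": "9090",
                              "prometheus.io/path": ""}))
```

The empty-path case matters for the template that follows. If `.Values.metrics.path` is unset, the annotation renders as an empty string, and typical relabeling rules then leave the default path in place.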

"Standardizing monitoring annotations across all our Helm charts reduced the time to achieve full observability for new services from days to minutes, dramatically improving our operational capabilities."

Service mesh integration enhances observability and traffic management. When deploying to service mesh environments like Istio or Linkerd, charts should include the appropriate annotations and labels. The mesh automatically adds metrics and traffic control to applications, providing deep insight without code changes. Distributed tracing is the exception: the application must still propagate trace headers from incoming to outgoing requests.

```yaml
# Pod template annotations (spec.template.metadata.annotations in the Deployment template)
annotations:
  prometheus.io/scrape: "true"
  prometheus.io/port: "{{ .Values.metrics.port }}"
  prometheus.io/path: "{{ .Values.metrics.path }}"
  {{- if .Values.serviceMesh.enabled }}
  # Istio-specific; Linkerd uses the linkerd.io/inject: enabled annotation instead
  sidecar.istio.io/inject: "true"
  {{- end }}
```

```yaml
# ServiceMonitor for Prometheus Operator (a separate template file)
{{- if .Values.metrics.serviceMonitor.enabled }}
apiVersion: monitoring.coreos.com/v1
kind: ServiceMonitor
metadata:
  name: {{ include "myapp.fullname" . }}
spec:
  selector:
    matchLabels:
      {{- include "myapp.selectorLabels" . | nindent 6 }}
  endpoints:
    # Refers to a named port on the Service this monitor selects
    - port: metrics
      interval: {{ .Values.metrics.serviceMonitor.interval | default "30s" }}
      path: {{ .Values.metrics.path | default "/metrics" }}
{{- end }}
```

Automating Deployment Workflows

CI/CD integration automates chart testing and deployment. Popular platforms like GitLab CI, GitHub Actions, Jenkins, and ArgoCD all support Helm operations. Pipelines should include stages for linting, testing, security scanning, and progressive deployment across environments. This automation ensures consistency and reduces manual errors.

```yaml
# GitHub Actions workflow example
name: Deploy Application

on:
  push:
    branches: [main]

jobs:
  deploy:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v3

      - name: Install Helm
        uses: azure/setup-helm@v3
        with:
          version: '3.12.0'

      - name: Lint Chart
        run: helm lint ./charts/myapp

      - name: Run Chart Tests
        run: |
          # helm unittest comes from the helm-unittest plugin, which must be installed first
          helm plugin install https://github.com/helm-unittest/helm-unittest.git
          helm template myapp ./charts/myapp --values ./charts/myapp/values-test.yaml
          helm unittest ./charts/myapp

      - name: Configure Kubernetes
        uses: azure/k8s-set-context@v3
        with:
          kubeconfig: ${{ secrets.KUBE_CONFIG }}

      - name: Deploy to Production
        run: |
          helm upgrade --install myapp ./charts/myapp \
            --namespace production \
            --values ./charts/myapp/values-prod.yaml \
            --wait \
            --timeout 10m \
            --atomic
```

GitOps approaches use Git as the source of truth for cluster state. Tools like ArgoCD and Flux continuously monitor Git repositories and automatically apply changes to clusters. This pattern provides audit trails, rollback capabilities, and declarative infrastructure management. Helm integrates naturally with GitOps, serving as the templating and packaging layer while the GitOps operator handles deployment.

Progressive delivery strategies minimize deployment risk through gradual rollouts. Canary deployments route small percentages of traffic to new versions, monitoring metrics before full rollout. Blue-green deployments maintain parallel environments, switching traffic only after validation. Flagger and Argo Rollouts extend Kubernetes with automated progressive delivery capabilities that work seamlessly with Helm.
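The core loop of an automated canary can be modeled in a few lines. The sketch below is a simplified, hypothetical model, not Flagger or Argo Rollouts code. Its parameter names echo Flagger's stepWeight, maxWeight, and threshold analysis settings, and `healthy` stands in for the metric checks (error rate, latency) such tools run at each step.

```python
from typing import Callable


def run_canary(step_weight: int, max_weight: int, threshold: int,
               healthy: Callable[[int], bool]) -> tuple[str, list[int]]:
    """Shift traffic to the canary in steps; roll back after `threshold` failed checks."""
    weight, failed_checks, history = 0, 0, []
    while weight < max_weight:
        weight = min(weight + step_weight, max_weight)  # route more traffic to the canary
        history.append(weight)
        # Re-check at the same weight until the metrics pass or the failure budget is spent.
        while not healthy(weight):
            failed_checks += 1
            if failed_checks >= threshold:
                return "rolled back", history
    return "promoted", history


print(run_canary(10, 50, 3, lambda weight: True))
print(run_canary(10, 50, 3, lambda weight: weight <= 30))
```

In the second run, the simulated metrics degrade once the canary receives more than 30% of traffic. The canary is rolled back at 40% after three failed checks, so most users never see the faulty version.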

Troubleshooting Common Helm Issues

Even well-designed charts encounter issues during development and deployment. Systematic troubleshooting approaches combined with understanding common failure patterns enable quick problem resolution. Helm provides several debugging tools and techniques that help identify and fix issues efficiently.

Template rendering errors typically surface during installation or upgrade attempts. The error messages usually identify the template and line causing the problem. Use helm template to render templates locally and examine the output for malformed YAML or unexpected values. Adding the --debug flag enables verbose output. When rendering produces invalid YAML, it also prints the rendered manifests anyway, so you can see exactly what the template emitted.
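`helm template` prefixes every rendered document with a `# Source:` comment naming the template file that produced it, so a bad document can be traced back to its template. The hypothetical helper below uses only the standard library (no YAML parser). It splits the output on document separators and lists the top-level kind each template emitted, flagging documents that have none. A missing kind is a common symptom of a mis-indented include or a broken conditional. It is a rough sketch and would mis-split a block scalar that contains a bare `---` line.

```python
SOURCE_PREFIX = "# Source: "


def split_documents(rendered: str) -> list[str]:
    """Split multi-document YAML text on '---' separator lines, dropping empty documents."""
    documents, current = [], []
    for line in rendered.splitlines():
        if line.strip() == "---":
            documents.append("\n".join(current))
            current = []
        else:
            current.append(line)
    documents.append("\n".join(current))
    return [doc for doc in documents if doc.strip()]


def kinds_by_source(rendered: str) -> dict[str, list[str]]:
    """Map each template path to the top-level kinds of the documents it rendered."""
    result: dict[str, list[str]] = {}
    for doc in split_documents(rendered):
        source, kind = "<unknown>", "<missing kind>"
        for line in doc.splitlines():
            if line.startswith(SOURCE_PREFIX):
                source = line[len(SOURCE_PREFIX):].strip()
            elif line.startswith("kind:"):  # only unindented, top-level kind lines
                kind = line[len("kind:"):].strip()
        result.setdefault(source, []).append(kind)
    return result


# In practice, pass the captured output of: helm template myrelease ./myapp
sample = """---
# Source: myapp/templates/service.yaml
apiVersion: v1
kind: Service
---
# Source: myapp/templates/pdb.yaml
  minAvailable: 1
"""
print(kinds_by_source(sample))
```

Here the PDB template is flagged with `<missing kind>`, which points straight at the template to inspect.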

```bash
# Render templates locally for inspection
helm template myrelease ./myapp --debug

# Test with specific values
helm template myrelease ./myapp \
  --values test-values.yaml \
  --debug

# Output to file for detailed analysis
helm template myrelease ./myapp > output.yaml

# Validate rendered output
kubectl apply --dry-run=client -f output.yaml
```

Diagnosing Release and Resource Issues

Failed releases require investigating both Helm state and Kubernetes resources. The helm status command shows the release's status, current revision, last deployment time, and notes. For more detail, examine individual resources with kubectl. Look for pod failures, image pull errors, configuration issues, or resource constraints that prevent a successful deployment.

- ⚠️ Image pull failures - Verify image names, tags, and registry authentication credentials

- ⚠️ Resource constraints - Check for insufficient CPU, memory, or storage preventing pod scheduling

- ⚠️ Configuration errors - Validate configmaps, secrets, and environment variables contain expected values

- ⚠️ Network issues - Confirm service definitions, ingress rules, and network policies allow required traffic

- ⚠️ Permission problems - Verify RBAC roles, service accounts, and security contexts have necessary permissions

Upgrade failures often result from incompatible changes or resource conflicts. The --force flag updates resources through a replacement strategy instead of a patch, which can get past some patch conflicts. Changes to immutable fields, such as a Deployment's label selector, may still require deleting and recreating the resource. The --cleanup-on-fail flag deletes new resources created during a failed upgrade, preventing partial deployments from blocking subsequent attempts.

```bash
# Diagnose failed release
helm status myrelease
helm get manifest myrelease
helm get values myrelease

# Check Kubernetes resources
kubectl get pods -l app.kubernetes.io/instance=myrelease
kubectl describe pod <pod-name>
kubectl logs <pod-name>

# View release history
helm history myrelease

# Rollback to previous version
helm rollback myrelease

# Rollback to specific revision
helm rollback myrelease 3
```

Resolving Value and Configuration Problems

Value precedence issues cause confusion when overrides don't apply as expected. Helm merges values from multiple sources in a specific order: chart defaults, parent chart values, user-supplied values files (in order), and finally command-line set values. Understanding this hierarchy helps diagnose why certain values aren't taking effect.
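The layering can be modeled with a small recursive merge. The sketch below is a simplified, hypothetical model of Helm's behavior, not its implementation. Maps merge key by key. Scalars and lists from a higher-precedence layer replace the lower layer outright, since Helm does not merge lists. An explicit null removes a default key. Subchart scoping, where a parent supplies a subchart's values under the subchart's name, is left out.

```python
import copy
from typing import Any


def merge_values(base: dict[str, Any], override: dict[str, Any]) -> dict[str, Any]:
    """Return a copy of base with override layered on top (simplified Helm semantics)."""
    result = copy.deepcopy(base)
    for key, value in override.items():
        if value is None:
            result.pop(key, None)  # an explicit null deletes the key
        elif isinstance(value, dict) and isinstance(result.get(key), dict):
            result[key] = merge_values(result[key], value)  # maps merge recursively
        else:
            result[key] = copy.deepcopy(value)  # scalars and lists replace wholesale
    return result


def effective_values(*layers: dict[str, Any]) -> dict[str, Any]:
    """Apply layers from lowest to highest precedence."""
    merged: dict[str, Any] = {}
    for layer in layers:
        merged = merge_values(merged, layer)
    return merged


chart_defaults = {
    "replicaCount": 1,
    "image": {"repository": "myapp", "tag": "1.0.0"},
    "ingress": {"enabled": False, "hosts": ["myapp.local"]},
}
values_prod = {"replicaCount": 3, "ingress": {"enabled": True, "hosts": ["myapp.example.com"]}}
set_flags = {"image": {"tag": "1.1.0"}}  # equivalent of --set image.tag=1.1.0

print(effective_values(chart_defaults, values_prod, set_flags))
```

The result keeps `image.repository` from the chart defaults, takes `replicaCount` and the whole `ingress.hosts` list from the production file, and takes `image.tag` from the command line. When an override seems to be ignored, checking which layer last set the key usually explains it.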

The helm get values command shows the user-supplied values for a release. Add the --all flag to see the full computed values, including chart defaults. Compare this output against your values files and command-line arguments to find where values differ from expectations.

"Establishing a clear debugging workflow that starts with template rendering, moves through value inspection, and ends with resource examination reduced our average troubleshooting time from hours to minutes."

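Dependency issues arise when subchart versions conflict or required charts are missing. Run helm dependency list to verify that all dependencies are present and at the expected versions. The helm dependency update command resolves the version ranges in Chart.yaml, downloads the matching charts into the charts/ directory, and rewrites Chart.lock, which often fixes version mismatches or missing charts. The helm dependency build command instead reconstructs charts/ from the versions already pinned in Chart.lock.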

```bash
# Check dependency status
helm dependency list ./myapp

# Update dependencies (resolves ranges and rewrites Chart.lock)
helm dependency update ./myapp

# Rebuild charts/ from the versions pinned in Chart.lock
helm dependency build ./myapp

# Verify dependency configuration
cat ./myapp/Chart.yaml

# Check dependency values (requires the bitnami repository to have been added with helm repo add)
helm show values bitnami/postgresql
```

Handling Release State and Storage Issues

Corrupted release state occasionally prevents normal Helm operations. Release information is stored as Kubernetes secrets or configmaps in the release namespace. In extreme cases, manually inspecting or modifying these storage objects can resolve stuck releases, though this approach should be a last resort after exhausting standard troubleshooting steps.

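Release records are limited in size because Kubernetes caps Secret and ConfigMap data at 1 MiB, and each record contains the compressed chart, its values, and the rendered manifest. Large charts, or charts with extensive values or bundled files, can exceed this limit; symptoms include installation failures with cryptic error messages about the secret's size. Switching to the configmap storage driver does not help, because ConfigMaps share the same limit. Instead, shrink the chart by excluding unnecessary files with .helmignore and removing unneeded templates, split large applications into multiple charts, or use Helm's SQL storage driver, which stores releases in PostgreSQL.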
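To see what is consuming the size budget, it helps to know how Helm 3 encodes a release record. The release object is serialized to JSON, gzip-compressed, and base64-encoded, and kubectl then displays Secret data with a second layer of base64. The sketch below decodes that format and estimates a release's stored size. The function names are hypothetical. The input is assumed to be the output of `kubectl get secret sh.helm.release.v1.myrelease.v1 -o jsonpath='{.data.release}'`.

```python
import base64
import gzip
import json
from typing import Any

GZIP_MAGIC = b"\x1f\x8b"


def decode_release(field: str, from_kubectl: bool = True) -> dict[str, Any]:
    """Decode the 'release' data field of a Helm 3 release Secret into a dict."""
    payload = base64.b64decode(field)
    if from_kubectl:
        # kubectl shows Secret data base64-encoded, on top of Helm's own base64 layer.
        payload = base64.b64decode(payload)
    if payload[:2] == GZIP_MAGIC:
        payload = gzip.decompress(payload)
    return json.loads(payload)


def stored_size(release: dict[str, Any]) -> int:
    """Approximate bytes Helm stores for a release: base64(gzip(JSON))."""
    return len(base64.b64encode(gzip.compress(json.dumps(release).encode())))


# Round trip with a tiny stand-in release (real records also carry the chart, values, and manifest).
release = {"name": "myrelease", "version": 1, "info": {"status": "deployed"}}
helm_encoded = base64.b64encode(gzip.compress(json.dumps(release).encode()))
kubectl_field = base64.b64encode(helm_encoded).decode()
print(decode_release(kubectl_field)["info"]["status"])
print(stored_size(release) < 1024 * 1024)
```

Decoding a real record and measuring its fields, such as the chart's bundled files versus the rendered manifest, shows which part to trim first.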

```bash
# List release secrets (add -n <namespace> to target a specific namespace)
kubectl get secrets -l owner=helm

# Examine release secret
kubectl get secret sh.helm.release.v1.myrelease.v1 -o yaml

# Switch to configmap storage driver
# Helm only sees releases stored by the active driver, so choose it before the first install
export HELM_DRIVER=configmap

# Uninstall stuck release without running its hooks
helm uninstall myrelease --no-hooks

# Force delete release resources
# "all" covers common workload kinds only; configmaps, secrets, ingresses, and PVCs need separate commands
kubectl delete all -l app.kubernetes.io/instance=myrelease
```

Namespace issues occur when releases are installed in unexpected namespaces or when namespace-related RBAC restrictions prevent operations. Always specify namespaces explicitly with the --namespace flag to avoid confusion. Remember that Helm stores release information in the same namespace as the release, so deleting the namespace also deletes the release history.

Frequently Asked Questions

What is the difference between Helm 2 and Helm 3?

Helm 3 removed the server-side Tiller component that Helm 2 required, eliminating a significant security concern and a source of operational complexity. Helm 2 stored release information as ConfigMaps in Tiller's namespace. Helm 3 stores it as Secrets by default, in the release's own namespace, which improves security and lets standard Kubernetes RBAC govern access. Helm 3 also introduced three-way strategic merge patches for upgrades and improved CRD support through the crds/ directory. It also moved chart dependencies from a separate requirements.yaml file into the dependencies field of Chart.yaml. The architecture change means Helm 3 operates purely as a client-side tool, making it simpler to install and more secure by default. Migrating from Helm 2 to Helm 3 requires the helm-2to3 plugin to convert releases and clean up Tiller components.

How do I manage secrets securely in Helm charts?

Several approaches exist for secure secret management with Helm. The Helm Secrets plugin encrypts values files using Mozilla SOPS with support for various key management systems including AWS KMS, Google Cloud KMS, and Azure Key Vault. External secret operators like External Secrets Operator synchronize secrets from external vaults into Kubernetes, allowing charts to reference secrets without containing sensitive data. For simpler scenarios, maintain separate encrypted values files outside version control and inject them only during deployment. Never commit plain-text secrets to Git repositories, and consider implementing secret scanning tools in your CI/CD pipeline to prevent accidental exposure. Some teams use sealed secrets, which encrypt secrets in a way that only the cluster can decrypt, enabling safe storage in Git.

Can I use Helm with GitOps workflows?

Helm integrates well with GitOps tools like ArgoCD and Flux, which use Helm charts as their templating and packaging mechanism while adding continuous deployment capabilities. In GitOps workflows, Helm charts and values files reside in Git repositories, which serve as the source of truth for cluster state. The GitOps operator monitors these repositories and automatically applies changes to clusters. The two tools use Helm differently. Flux's helm-controller performs real Helm installs and upgrades. ArgoCD renders charts with helm template and applies the resulting manifests itself, so ArgoCD-managed applications do not appear in helm list. This approach provides audit trails, enables rollbacks through Git history, and supports multiple environments through branch or directory structures. ArgoCD offers a web UI for visualizing applications and their sync status, while Flux provides a more Kubernetes-native approach using custom resources. Both tools support Helm dependencies and values overrides.

How do I test Helm charts before deploying to production?

Comprehensive chart testing involves multiple stages. Start with helm lint to catch structural issues and best-practice violations. Use helm template to render templates locally and verify that the generated manifests match expectations. The --dry-run flag performs server-side validation without creating resources, catching issues that client-side rendering might miss. Consider using the helm-unittest plugin to unit test templates with various value combinations. Deploy to non-production environments that mirror production configuration, and run integration tests against the deployed applications. Implement test hooks, run with helm test after installation, that verify applications start correctly and respond to health checks. Tools like Kubeconform or Kubeval validate Kubernetes manifests against API schemas, catching version compatibility issues. Finally, incorporate security scanning tools like Checkov or Datree to identify misconfigurations and policy violations before production deployment.

What are the best practices for structuring large Helm charts?

Large charts benefit from modular organization and clear separation of concerns. Break complex applications into multiple subcharts rather than creating monolithic charts with hundreds of templates. Use the charts/ directory for dependencies and maintain clear boundaries between components. Implement comprehensive helper templates in _helpers.tpl to avoid duplication and ensure consistency. Organize values hierarchically with clear documentation for each parameter, including acceptable values and defaults. Consider creating a library chart for shared templates and helpers that multiple application charts can use. Maintain separate values files for different environments rather than embedding environment-specific logic in templates. Use consistent naming conventions across all charts in your organization. Document chart architecture, customization points, and upgrade procedures in README files. Implement validation logic to catch configuration errors early. Finally, version charts semantically and maintain a changelog documenting breaking changes and new features between versions.

How do I handle database migrations with Helm?

Database migrations with Helm typically use hooks to run migration jobs at appropriate points in the release lifecycle. Create a Kubernetes Job template with a helm.sh/hook: pre-install,pre-upgrade annotation so migrations run on the first installation as well as before every upgrade. Helm waits for a hook Job to run to completion before continuing, so migrations finish before application pods are updated. Set helm.sh/hook-weight to control execution order when multiple hooks exist. Use helm.sh/hook-delete-policy: before-hook-creation to clean up previous migration jobs automatically. The migration job should use the same database credentials as the application, typically mounted from secrets. During a pre-install hook, however, Secrets defined as regular resources in the same chart do not exist yet. Implement idempotent migrations that can run multiple times safely, since the Job may retry failed pods up to its backoffLimit and a failed upgrade may be run again. Consider using migration tools like Flyway or Liquibase that track applied migrations and skip already-executed changes. For complex scenarios, separate migration charts from application charts, deploying migrations as a distinct release that the application chart depends on. Always test migration procedures in non-production environments and maintain rollback plans for failed migrations.
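Hook weights are strings containing integers, and Helm runs the hooks for a given event in ascending weight order. The hypothetical helper below models that ordering for a list of hook manifests already parsed into dicts. Breaking ties by name is a simplification of Helm's actual ordering.

```python
from typing import Any


def hook_order(hooks: list[dict[str, Any]], event: str) -> list[str]:
    """Names of the hooks registered for one lifecycle event, lowest weight first."""
    selected = []
    for hook in hooks:
        metadata = hook.get("metadata", {})
        annotations = metadata.get("annotations", {})
        events = [e.strip() for e in annotations.get("helm.sh/hook", "").split(",")]
        if event in events:
            # Weights are written as strings; an unset weight counts as "0".
            weight = int(annotations.get("helm.sh/hook-weight", "0"))
            selected.append((weight, metadata["name"]))
    return [name for _, name in sorted(selected)]


hooks = [
    {"metadata": {"name": "db-migrate", "annotations": {
        "helm.sh/hook": "pre-install,pre-upgrade", "helm.sh/hook-weight": "0"}}},
    {"metadata": {"name": "db-wait", "annotations": {
        "helm.sh/hook": "pre-upgrade", "helm.sh/hook-weight": "-5"}}},
    {"metadata": {"name": "smoke-test", "annotations": {"helm.sh/hook": "test"}}},
]
print(hook_order(hooks, "pre-upgrade"))
print(hook_order(hooks, "pre-install"))
```

On upgrade, the hypothetical `db-wait` Job (weight -5) runs before `db-migrate` (weight 0). On first install, only `db-migrate` is registered for that event.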