Using grep and awk for Text Processing in Linux

Learn how to use grep and awk to search, filter, and transform text files in Linux. Step-by-step examples and practical tips for Linux and DevOps beginners.

Short introduction

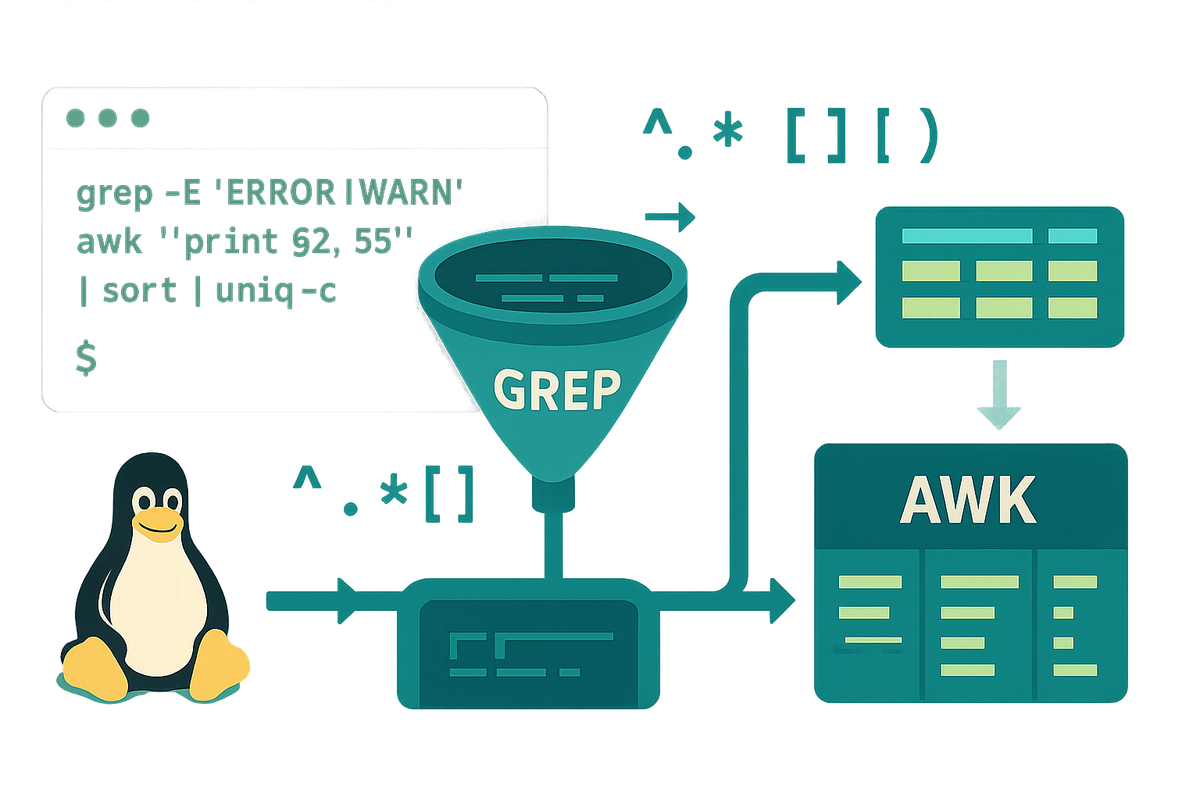

Text processing is one of the most common tasks on the Linux command line. Two small but powerful tools—grep and awk—let you search, filter, and transform text quickly without writing a full program. This tutorial walks through practical examples and explanations to help beginners use grep and awk together effectively.

Why grep and awk?

grep and awk serve complementary roles. grep is ideal for fast pattern matching and filtering lines; awk is a lightweight programming language designed for field-aware processing and reporting. Together they form a simple, expressive toolkit for many tasks: log analysis, CSV processing, extracting columns, and producing summaries.

Example: find lines that mention “error” in a log file

# find error lines quickly

grep -i "error" /var/log/syslog

Explanation: -i makes the match case-insensitive. grep returns full lines that contain the pattern.

Searching Text with grep

grep prints every input line that matches a pattern, which can be a plain string or a regular expression. Useful options include -i (ignore case), -v (invert match), -n (show line numbers), and -r (recursive; GNU grep's -R does the same but also follows symbolic links). Use grep when you want to locate lines that match a pattern or narrow down input before further processing.
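
To try these options without touching real files, the sketch below builds a small throwaway file and runs -i, -v, and -n against it. The file contents and variable names are made up for illustration.

```shell
# Hypothetical sample file; its contents are invented for this sketch.
tmp=$(mktemp)
printf '%s\n' 'Error: disk full' '# a comment' 'all good' 'error: retry' > "$tmp"

# -i matches "error" in any letter case; -c counts the matching lines
ci_count=$(grep -ic 'error' "$tmp")

# -v keeps only the lines that do NOT start with '#'
non_comments=$(grep -v '^#' "$tmp")

# -n prefixes each matching line with its line number
numbered=$(grep -n 'good' "$tmp")

echo "case-insensitive matches: $ci_count"
echo "$non_comments"
echo "$numbered"
rm -f "$tmp"
```

Single quotes around the patterns keep the shell from interpreting characters such as ^ or #.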

Simple usage:

# plain substring match

grep "TODO" notes.txt

# show line numbers for quick reference

grep -n "TODO" notes.txt

# recursive search in a directory

grep -R --line-number "TODO" ~/projects

Regular expressions:

# basic regex: match lines that start with "ERROR"

grep "^ERROR" app.log

# extended regex with alternation

grep -E "ERROR|WARN" app.log

# use word boundaries (GNU grep supports \< and \>)

grep -E "\<user\>" data.txt
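
A quick way to see what an anchor or an alternation really matches is to count hits on a few known lines. This is a minimal sketch; the sample lines are invented.

```shell
# Hypothetical log lines, invented for this sketch.
tmp=$(mktemp)
printf '%s\n' 'ERROR disk full' 'WARN low memory' 'INFO ok' 'got ERROR later' > "$tmp"

# ^ anchors the match to the start of the line, so "got ERROR later" is skipped
anchored=$(grep -c '^ERROR' "$tmp")

# -E enables alternation with |, matching either word anywhere in the line
either=$(grep -Ec 'ERROR|WARN' "$tmp")

echo "anchored=$anchored either=$either"
rm -f "$tmp"
```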

Piping and counting:

# count matches

grep -c "failed" access.log

# count failures per IP, most frequent first (assumes the IP is the first space-separated field)

grep "failed" access.log | cut -d' ' -f1 | sort | uniq -c | sort -nr | head

Explanation: grep filters lines; combine with cut/sort/uniq for quick summaries. Use -P for Perl-compatible regex if available for advanced patterns.
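
The same pipeline can be checked on a few lines of made-up input. The sketch assumes, as above, that the IP address is the first space-separated field; the sample data is hypothetical.

```shell
# Hypothetical access-log lines, invented for this sketch.
tmp=$(mktemp)
printf '%s\n' \
  '10.0.0.1 login failed' \
  '10.0.0.2 login ok' \
  '10.0.0.1 login failed' \
  '10.0.0.3 login failed' > "$tmp"

# total number of lines that contain "failed"
total=$(grep -c 'failed' "$tmp")

# failures per IP, highest count first; keep only the top entry
top=$(grep 'failed' "$tmp" | cut -d' ' -f1 | sort | uniq -c | sort -nr | head -n 1)

# uniq -c pads the count with spaces, so let awk pick out the IP
top_ip=$(echo "$top" | awk '{print $2}')

echo "total failures: $total, worst offender: $top_ip"
rm -f "$tmp"
```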

Processing Columns with awk

awk treats each input line as a record split into fields, accessible as $1, $2, ..., with built-in variables like NF (number of fields) and NR (record number). It's great for column-aware tasks: selecting columns, aggregations, conditional formatting.
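
As a small illustration of records and fields, the sketch below prints NR, NF, and the last field ($NF) for two made-up input lines.

```shell
# Two hypothetical records with different numbers of fields.
summary=$(printf '%s\n' 'alice 30 admin' 'bob 25' |
  awk '{print NR ": " NF " fields, last=" $NF}')

echo "$summary"
```

Because NF holds the number of fields in the current record, $NF always refers to the last field, however many there are.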

Field selection:

# print 1st and 3rd columns (default FS is whitespace)

awk '{print $1, $3}' data.txt

# for CSV with comma separator

awk -F, '{print $2}' file.csv

Filtering and conditions:

# print lines where 3rd column > 100

awk '$3 > 100 {print $0}' metrics.txt

# combine condition and formatted output

awk -F: '$7 ~ /bash/ {printf "%-15s %s\n", $1, $7}' /etc/passwd
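
Conditions can compare numbers or match regular expressions against a single field. The following sketch shows both on invented metrics data; the three-column layout (metric, host, value) is an assumption made for the example.

```shell
# Hypothetical metrics: metric name, host, value.
tmp=$(mktemp)
printf '%s\n' 'cpu host1 150' 'cpu host2 80' 'mem host1 300' > "$tmp"

# numeric comparison on the third field
high=$(awk '$3 > 100 {print $2, $3}' "$tmp")

# regex match on the first field, with left-aligned formatted output
mem=$(awk '$1 ~ /^mem/ {printf "%-6s %s\n", $2, $3}' "$tmp")

echo "$high"
echo "$mem"
rm -f "$tmp"
```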

Built-in patterns and actions:

# print header then compute totals

awk 'BEGIN {print "User\tCount"} {counts[$1]++} END {for (u in counts) print u"\t"counts[u]}' data.txt

Explanation: BEGIN runs before input; END runs after. Arrays let you aggregate counts. Use OFS to set output field separator: awk 'BEGIN{OFS=","} {print $1,$2}'.
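
Arrays can hold sums as well as counts. The sketch below totals a numeric column per user and writes comma-separated output via OFS; the input lines are made up.

```shell
# Hypothetical input: user name and a numeric value per line.
totals=$(printf '%s\n' 'alice 10' 'bob 5' 'alice 7' |
  awk 'BEGIN {OFS=","} {total[$1] += $2} END {for (u in total) print u, total[u]}' |
  sort)

echo "$totals"
```

The final sort is there because awk does not guarantee any particular order when looping over an array with for (u in total).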

Combining grep and awk (Pipelines)

Common workflows use grep to filter lines and awk to parse fields or produce summaries. This keeps each tool focused on what it does best and results in readable, maintainable commands.

Example: find error messages and extract timestamp and message

# assume log format: YYYY-MM-DD HH:MM:SS LEVEL message...

grep "ERROR" app.log | awk '{print $1" "$2": "$4" "$5" "$6}'
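
One thing to watch for: grep matches the word anywhere in the line, while awk can test the level field on its own. The sketch below compares the two on invented lines that follow the assumed format (date, time, level, message); the timestamps and messages are hypothetical.

```shell
# Hypothetical log lines in the assumed "date time LEVEL message..." layout.
tmp=$(mktemp)
printf '%s\n' \
  '2024-01-01 10:00:00 INFO service started' \
  '2024-01-01 10:05:00 ERROR connection timed out' \
  '2024-01-01 10:06:00 WARN previous ERROR cleared' > "$tmp"

# grep matches "ERROR" anywhere, so the WARN line is included too
piped=$(grep 'ERROR' "$tmp" | awk '{print $1, $2 ": " $4, $5, $6}')

# awk alone can require that the level field (3rd) is exactly ERROR
by_field=$(awk '$3 == "ERROR" {print $1, $2 ": " $4, $5, $6}' "$tmp")

echo "$piped"
echo "---"
echo "$by_field"
rm -f "$tmp"
```

When the pattern could also appear inside the message text, filtering on the field in awk avoids false positives.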

Example: find users with failed logins and count attempts

# find lines with "Failed password", extract username, count

grep "Failed password" /var/log/auth.log | awk '{print $9}' | sort | uniq -c | sort -nr

Using awk first can be better when you need to restructure lines before pattern matching:

# blank out date, time, and level (fields 1-3), then grep inside the remaining message text

awk '{$1=$2=$3=""; print}' app.log | grep -i "timeout"

Practical pipeline with explanation:

# get top 10 IPs by request count from an access log

awk '{print $1}' access.log | sort | uniq -c | sort -nr | head -n 10

Explanation: awk extracts the IP (first field), sort groups identical IPs together so that uniq -c can count them, sort -nr orders the counts numerically from highest to lowest, and head -n 10 keeps the ten most frequent.
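
The pipeline can be tried on a handful of made-up requests first. This sketch assumes the IP is the first field and keeps only the top two entries; the log lines are hypothetical.

```shell
# Hypothetical access-log lines: IP, method, path.
tmp=$(mktemp)
printf '%s\n' \
  '10.0.0.1 GET /' \
  '10.0.0.2 GET /a' \
  '10.0.0.1 GET /b' \
  '10.0.0.3 GET /' \
  '10.0.0.1 GET /c' \
  '10.0.0.2 GET /d' > "$tmp"

# same pipeline as above, limited to the top 2;
# the last awk strips the padding that uniq -c adds before the count
top2=$(awk '{print $1}' "$tmp" | sort | uniq -c | sort -nr | head -n 2 |
  awk '{print $1, $2}')

echo "$top2"
rm -f "$tmp"
```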

Commands table

Below is a compact reference of common options and commands for grep and awk.

| Command | Description | Example |
|---|---|---|
| grep -i PATTERN file | Case-insensitive search | grep -i "error" log.txt |
| grep -n PATTERN file | Show line numbers | grep -n "TODO" notes.md |
| grep -v PATTERN file | Invert match (exclude) | grep -v "^#" config.cfg |
| grep -E PATTERN file | Extended regex | grep -E "WARN\|ERROR" app.log |
| grep -R PATTERN dir | Recursive search | grep -R "FIXME" ~/project |
| awk '{print $1,$2}' file | Print first two fields (whitespace FS) | awk '{print $1,$2}' data.txt |
| awk -F, '{print $2}' file.csv | Set input FS to comma | awk -F, '{print $2}' file.csv |
| awk 'BEGIN{...} {...} END{...}' file | Use BEGIN/END blocks | awk 'BEGIN{OFS=","} {print $1,$3} END{print "done"}' file |
| awk '$3 > 100 {print $0}' file | Conditional processing | awk '$3 > 100 {print $0}' metrics.txt |
| awk '{counts[$1]++} END{for (i in counts) print i, counts[i]}' file | Aggregation using arrays | awk '{counts[$1]++} END{for (i in counts) print counts[i], i}' access.log |

Explanation: The table shows commands, brief description, and a short example. For more advanced needs, consult man grep and man awk.

Common Pitfalls

- Relying on default field separators: awk splits on whitespace by default; for CSVs set the separator explicitly with -F',' and, for quoted fields that contain commas, use FPAT in gawk.

- Grep matching unintended substrings: use word boundaries or anchors (^, $) to avoid partial matches, or prefer -w to match whole words.

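- Not quoting patterns: the shell can expand or alter unquoted patterns (especially those containing *, ?, or $). Always quote regex patterns, preferably in single quotes so that $ and backslashes reach grep unchanged, e.g., grep -E 'foo|bar' file or grep -F 'a*b' file.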
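
The pitfalls above are easy to reproduce on a few test lines. The sketch below contrasts substring and whole-word matching, regex and fixed-string matching, and shows an explicit comma separator in awk; all sample data is invented.

```shell
# Hypothetical sample lines, invented for this sketch.
tmp=$(mktemp)
printf '%s\n' 'user logged in' 'superuser logged in' 'a*b' 'aab' > "$tmp"

# a plain pattern also matches inside "superuser"
substr=$(grep -c 'user' "$tmp")

# -w only accepts "user" as a whole word
whole=$(grep -cw 'user' "$tmp")

# as a regex, a*b means "zero or more a, then b", so "aab" matches too
regex=$(grep -c 'a*b' "$tmp")

# -F treats the pattern as a literal string
literal=$(grep -cF 'a*b' "$tmp")

# explicit comma separator for simple CSV (no quoted fields); NR > 1 skips the header
csv_name=$(printf '%s\n' 'id,name' '1,alice' | awk -F',' 'NR > 1 {print $2}')

echo "substr=$substr whole=$whole regex=$regex literal=$literal csv_name=$csv_name"
rm -f "$tmp"
```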

Next Steps

- Practice with real logs: try extracting and summarizing data from /var/log/syslog or an application log.

- Learn regular expressions: invest time in regex basics; they multiply the power of grep and awk.

- Explore awk features: study gawk extensions (FPAT, time functions) and write small awk scripts for repeated tasks.

This tutorial covered practical uses of grep for searching and awk for transforming text, plus how to combine them in pipelines. With these basics you can start automating many routine text-processing tasks on Linux.
