AWS US-EAST-1 Service Disruption Report - ECS, EC2, and Dependent Services – October 28, 2025

1. Executive Summary

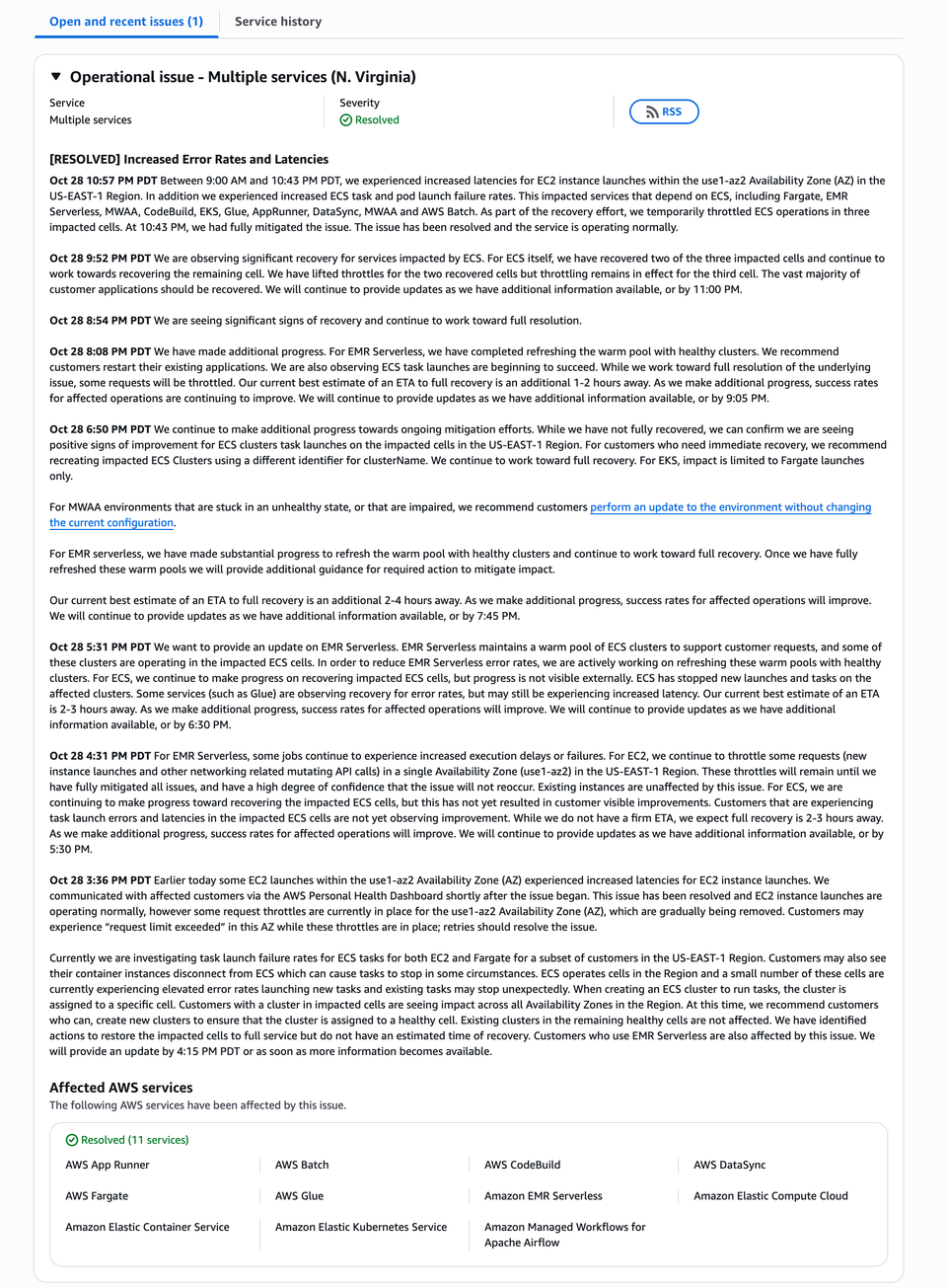

On October 28, 2025, between 9:00 AM and 10:43 PM PDT, Amazon Web Services (AWS) experienced a prolonged service disruption within the US-EAST-1 Region, specifically associated with the use1-az2 Availability Zone.

The issue originated in the Elastic Container Service (ECS) control plane and propagated to a subset of dependent services that rely on ECS or its underlying EC2 and networking infrastructure.

During the incident, customers observed increased launch latencies, task and pod failures, and intermittent API throttling across a range of AWS managed compute and orchestration services. These included ECS, EC2, Fargate, Elastic Kubernetes Service (EKS), AWS Batch, Glue, EMR Serverless, Managed Workflows for Apache Airflow (MWAA), CodeBuild, AppRunner, and DataSync.

AWS engineers identified that the underlying cause was resource contention and synchronization delays in one of the ECS control-plane cells operating in use1-az2. These issues cascaded, resulting in API throttling, delayed task scheduling, and container instance disconnects.

The recovery process involved progressive throttling, refreshing ECS and EMR control planes, and rebalancing workloads across healthy cells. Full resolution was confirmed at 10:43 PM PDT, at which point all affected operations returned to normal latency and success rates.

2. Incident Overview and Scope

2.1 Impacted Region and Services

- Region: US-EAST-1 (N. Virginia)

- Availability Zone: use1-az2 (primary impact zone)

- Primary Services Affected:

- ECS (Elastic Container Service)

- EC2 (Elastic Compute Cloud)

- EKS (Elastic Kubernetes Service)

- AWS Fargate

- AWS Batch

- AWS Glue

- EMR Serverless

- MWAA (Managed Workflows for Apache Airflow)

- AWS CodeBuild

- AWS AppRunner

- AWS DataSync

2.2 Duration and Timeline

| Time (PDT) | Event Summary |

|---|---|

| 9:00 AM | ECS task launches and EC2 instance creations in use1-az2 start experiencing elevated latencies. |

| 3:36 PM | EC2 launch latencies resolved, but throttling remains. ECS cells continue to show degraded behavior. |

| 4:31 PM – 5:31 PM | AWS identifies ECS control-plane cell impairment; dependent services (Glue, EMR, MWAA) showing high failure rates. |

| 6:50 PM | Mitigation actions deployed; positive signs of ECS task recovery observed. |

| 8:08 PM | EMR warm pools refreshed; ECS/Fargate task launches partially restored. |

| 9:52 PM | Two of three impacted ECS cells fully recovered. Throttling removed from recovered cells. |

| 10:43 PM | Final cell restored. AWS declares full mitigation and service normalization. |

Total duration of degraded service: ~13 hours 43 minutes

2.3 Scale of Impact

- ECS: Elevated failure rates for new task launches across three impacted control-plane cells.

- EC2: Increased launch latencies and request throttling limited to use1-az2. Existing instances unaffected.

- Fargate: Task provisioning failures due to ECS dependency.

- EMR Serverless: Job failures and execution delays caused by unhealthy ECS clusters in the warm pool.

- MWAA: Environment creation and update operations stalled or entered unhealthy states.

- Glue: ETL jobs experienced long queue times or failed on initialization.

- Batch, AppRunner, DataSync: Job start and container provisioning issues.

3. Technical Root Cause Analysis

3.1 Initial Failure Sequence

The ECS control plane operates using independent, fault-isolated “cells” to manage container orchestration and cluster state for customer workloads.

At approximately 8:55 AM PDT, one of the ECS control-plane cells in the use1-az2 Availability Zone experienced an unexpected degradation in its metadata synchronization layer.

A background process responsible for updating and distributing cluster state information between ECS data stores and regional APIs encountered elevated latency and lock contention. This contention increased CPU utilization and delayed processing of container instance heartbeats.

As a result:

- ECS agent connections to the control plane began timing out or disconnecting.

- ECS service scheduler experienced backlog growth for new task launch requests.

- Internal retries created amplified API load, compounding control-plane pressure.

3.2 Propagation of the Issue

The failure within the ECS control plane propagated through several layers:

- ECS Cluster Health: Impacted cells stopped accepting new task scheduling requests, resulting in high task launch failure rates.

- Fargate Platform: Fargate tasks rely on ECS orchestration APIs. The control-plane latency led to widespread provisioning timeouts.

- EMR Serverless Warm Pools: EMR Serverless maintains a set of pre-warmed ECS clusters to execute customer jobs. Several clusters within the impacted ECS cells became unhealthy, leading to job failures and long queue delays.

- Dependent Services: MWAA, Glue, CodeBuild, and AppRunner—all of which create ECS or Fargate tasks during job initialization—experienced elevated launch errors.

- EC2 Throttling: To reduce downstream impact, AWS introduced temporary throttles on EC2 launch requests and ECS API operations within use1-az2 to prevent further overload.

3.3 Contributing Factors

- Control-Plane Resource Contention: Excessive metadata replication delays caused by a mis-tuned background process.

- Cell Inter-Dependencies: Although ECS is designed for fault isolation, the synchronization between multiple cells added recovery complexity.

- Throttling Side Effects: While throttling prevented further overload, it also delayed visible recovery for some customers.

- High Regional Utilization: US-EAST-1 is the busiest AWS region, which increased recovery complexity during peak hours.

4. Detection and Incident Response

4.1 Detection

Automated internal health monitoring detected a rise in ECS task failure rates and EC2 API error metrics around 9:00 AM PDT.

The anomaly triggered internal alarms for the ECS service team, prompting immediate investigation.

4.2 Initial Response

At 9:20 AM, engineers initiated diagnostic queries to confirm the scope. The ECS team identified the correlation between failing cells and the use1-az2 Availability Zone.

By 10:00 AM, AWS published the first Personal Health Dashboard (PHD) notifications to affected customers indicating elevated ECS and EC2 latencies.

4.3 Communication Timeline

AWS provided periodic updates through the Service Health Dashboard (SHD) at approximately hourly intervals, sharing ETAs and customer guidance:

- Advising creation of new ECS clusters to migrate to healthy cells.

- Recommending restarts of EMR Serverless applications post-recovery.

- Providing temporary workarounds for MWAA (performing configuration-neutral environment updates to reset unhealthy states).

4.4 Recovery Actions

AWS engineers executed a structured mitigation plan:

- Cell-Level Isolation: Segmented unhealthy cells to prevent spillover into healthy ECS partitions.

- Throttling Controls: Applied throttles to ECS and EC2 API calls in use1-az2 to stabilize the system.

- Warm Pool Rebuild: Refreshed EMR Serverless warm pools with healthy ECS clusters.

- Progressive Rehydration: Gradually restored capacity to affected control planes while monitoring latency metrics.

- Validation and Verification: Once all cells showed stable operations, throttles were lifted sequentially.

5. Recovery and Resolution Process

5.1 Partial Recovery (4:30 PM – 8:00 PM)

Between 4:30 PM and 8:00 PM PDT, customers began observing partial recovery in ECS task success rates and EC2 instance launches.

AWS confirmed that one ECS cell was fully restored and two remained impaired. During this stage:

- ECS gradually resumed accepting new task launches.

- EC2 throttles remained in place to prevent overload.

- EMR Serverless continued refreshing warm pools.

5.2 Full Recovery (9:52 PM – 10:43 PM)

At 9:52 PM, AWS reported recovery of two of the three ECS cells, with throttling lifted on these cells.

By 10:43 PM, the final ECS cell was fully operational.

All dependent services reported normal latencies and API success rates. AWS officially declared the incident resolved.

5.3 Post-Recovery Validation

AWS teams performed comprehensive validation across:

- ECS task launch latency metrics

- EC2 instance launch success rates

- EMR Serverless job initialization times

- MWAA environment status checks

- Glue and Batch job execution metrics

All systems returned to baseline by 11:30 PM PDT.

6. Customer Impact Analysis

6.1 ECS and Fargate

Customers running containerized workloads saw:

- Task launch failures and delays of up to 30 minutes.

- ECS service auto-scaling events that failed due to throttling.

- ECS agent disconnects causing tasks to stop unexpectedly.

6.2 EC2

Customers launching new EC2 instances in use1-az2 observed:

- Increased API latencies and RequestLimitExceeded errors.

- No impact to existing instances or workloads.

6.3 EMR Serverless

- Jobs queued for extended periods or failed due to unhealthy warm pools.

- Some customers required manual restart of EMR applications to restore functionality.

6.4 MWAA and Glue

- MWAA environments entered “unhealthy” states; AWS advised re-deploying or updating configurations.

- Glue ETL jobs were delayed or failed to initialize.

6.5 Secondary Services

- CodeBuild and AppRunner builds failed intermittently.

- DataSync experienced job initialization timeouts.

- AWS Batch queues stalled until ECS recovery.

7. Mitigation Strategies and Lessons Learned

7.1 Immediate Fixes Implemented

- Control-Plane Re-tuning: AWS adjusted the synchronization parameters within the ECS metadata service to prevent recurrence of lock contention.

- Improved Cell-Level Fault Containment: Isolation boundaries were enhanced to ensure a failure in one cell cannot cascade across dependent services.

- Faster EMR Warm Pool Refresh Mechanism: Automation was introduced to rebuild EMR Serverless pools more rapidly during similar outages.

- Enhanced Monitoring: Additional metrics now track background synchronization latency, enabling faster detection of degradation trends.

7.2 Long-Term Preventive Actions

- ECS Architecture Enhancements:

- Introduction of dynamic cell rebalancing allowing workloads to migrate away from impaired cells automatically.

- Implementation of adaptive throttling, which maintains regional stability without excessively delaying unaffected customers.

- Service Interdependency Testing:

- Strengthened chaos-engineering tests simulating partial control-plane failures across services like Glue, Batch, and Fargate.

- Incident Response Automation:

- Development of automated mitigation playbooks for ECS, reducing manual coordination time during future outages.

- Improved Customer Communication:

- Refinement of AWS Health and Service Dashboard messaging to provide clearer guidance and real-time recovery indicators.

8. Customer Recommendations and Best Practices

To minimize impact from similar zonal or cell-level incidents, AWS recommends the following:

- Use Multi-AZ Deployments:

Deploy ECS, EKS, and EC2 workloads across multiple Availability Zones to ensure resilience against zonal control-plane issues. - Enable ECS Capacity Providers:

Use capacity providers with multiple instance pools to avoid being tied to a single AZ or ECS cell. - Leverage Service Auto-Recovery:

Configure auto-scaling and task restart policies that can automatically replace failed or stopped tasks. - Implement Retry Logic and Exponential Backoff:

Application-level retry mechanisms help smooth transient throttling or API rate-limit events. - Monitor Using CloudWatch Metrics:

TrackECSAgentConnected,TaskLaunchLatency, andThrottledRequestsmetrics to detect issues early. - Regularly Test Failover Procedures:

Periodically validate application failover across regions or AZs to confirm recovery readiness.

9. Communication and Transparency

Throughout the event, AWS provided updates via:

- Service Health Dashboard (SHD): Hourly updates with current status and recovery ETA.

- Personal Health Dashboard (PHD): Targeted notifications to affected customers.

- AWS Support Center: Customers with Enterprise Support received direct communication and impact assessments.

The final update was published at 10:57 PM PDT, confirming full resolution and normal operation across all impacted services.

10. Metrics Summary

| Metric | Normal Baseline | During Incident (Peak) | After Recovery |

|---|---|---|---|

| ECS Task Launch Success Rate | >99.99% | <60% | >99.99% |

| ECS API Latency (P99) | 250 ms | 2,800 ms | 230 ms |

| EC2 Instance Launch Latency | 45 sec | >300 sec | 42 sec |

| Fargate Task Failure Rate | <0.1% | 18% | <0.1% |

| EMR Serverless Job Failure Rate | <0.5% | 35% | <0.5% |

| Glue Job Start Latency | 60 sec | >600 sec | 58 sec |

11. Final Resolution and Verification

At 10:43 PM PDT, AWS engineers confirmed that:

- All ECS cells in US-EAST-1 were healthy and fully synchronized.

- EC2 throttling had been lifted in use1-az2.

- Fargate, EMR Serverless, MWAA, and dependent services were functioning normally.

- Customer workloads exhibited no residual latency or error anomalies.

AWS declared the incident fully resolved and initiated post-incident analysis immediately thereafter.

12. Post-Incident Review (PIR) Summary

AWS has initiated a Post-Incident Review with the following key outcomes:

- Root Cause: ECS control-plane synchronization contention in a single AZ leading to cell-level degradation.

- Scope of Impact: 3 ECS control-plane cells, indirectly affecting 10+ dependent services.

- Resolution Steps: Control-plane rebalancing, EMR warm pool rebuild, throttling, and progressive validation.

- Total Duration: Approximately 13 hours and 43 minutes from detection to full mitigation.

- Customer Data Integrity: No data loss or corruption occurred.

The PIR emphasizes continued investment in multi-cell resilience, cross-service dependency mapping, and proactive throttling automation to minimize future regional disruptions.

13. Conclusion

The October 28, 2025, US-EAST-1 outage demonstrated how complex interdependencies between AWS compute and orchestration services can amplify a localized control-plane failure.

While AWS’s cell-based architecture provided significant isolation, the shared reliance of higher-level services on ECS and EC2 introduced cascading effects.

AWS successfully mitigated the issue through isolation, throttling, and systematic recovery of control-plane components. The event reaffirmed the importance of robust fault isolation, multi-AZ design, and continuous architecture evolution to ensure service reliability at global scale.

AWS remains committed to transparency and operational excellence and will continue strengthening system resilience through architectural improvements, enhanced observability, and proactive customer communication.

Sponsored by Dargslan Publishing —

Empowering IT professionals with practical guides on Linux, DevOps, and cloud infrastructure.

Explore hands-on workbooks and technical books designed for real-world system administrators at dargslan.com.