Cloudflare Global Network Outage: Full Analysis, Recovery Progress, and Technical Breakdown

Cloudflare Global Network Outage: Full Analysis, Recovery Progress, and Technical Breakdown

Cloudflare has been battling a significant global service disruption throughout the day, affecting millions of websites, applications, APIs, and Zero Trust authentication workflows. The incident has triggered cascading errors across web traffic, internal Cloudflare systems, and WARP connectivity—particularly in London, where access was temporarily disabled to support remediation.

As of 13:35 UTC, Cloudflare reports that major components such as Access and WARP have been restored to pre-incident levels, and engineers are actively working to restore remaining application services.

This article breaks down the full timeline, the technical implications of the outage, what has been restored so far, and what this incident reveals about the fragility and scale of Cloudflare’s global network.

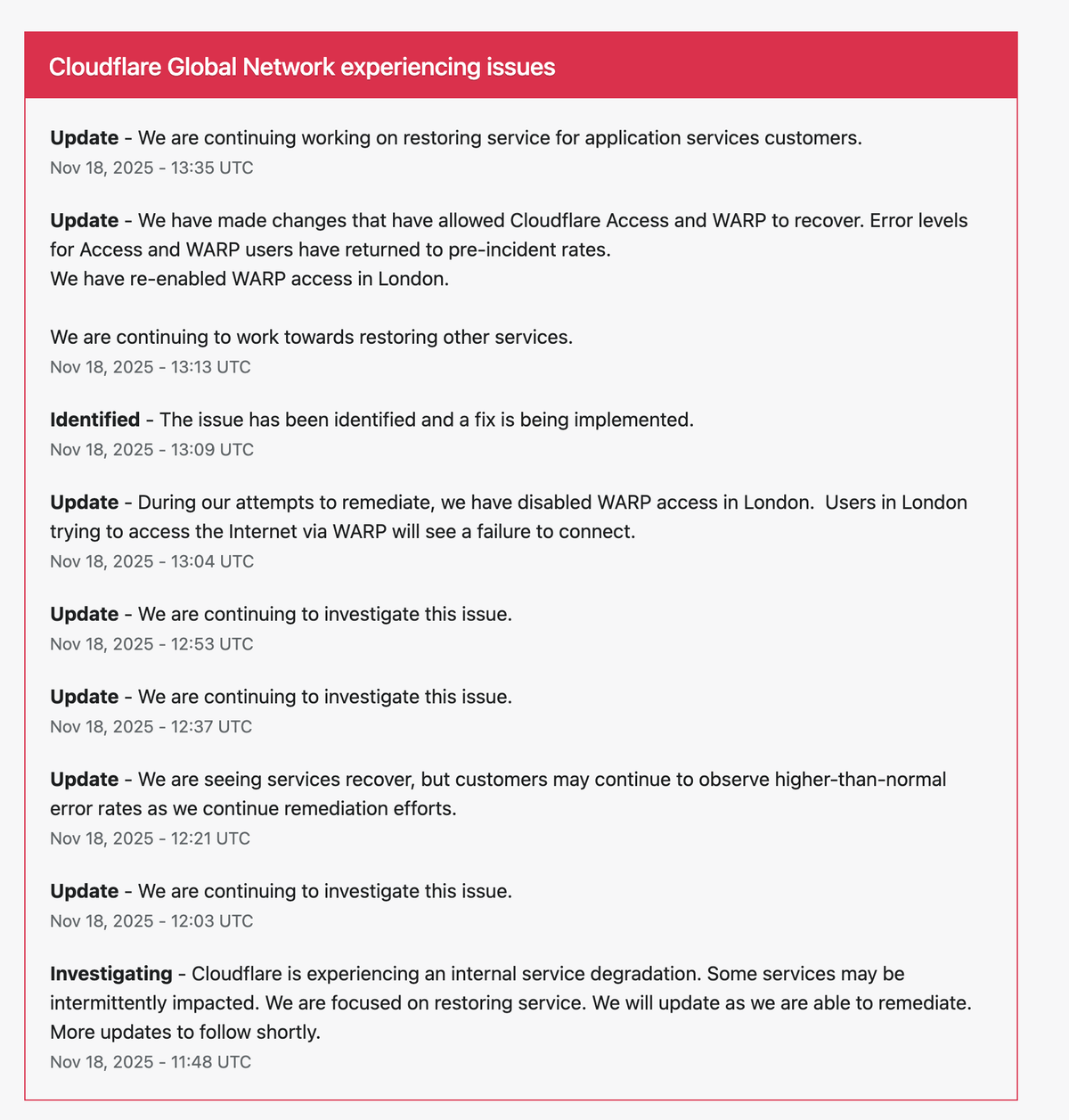

🕒 Full Timeline of Cloudflare Updates (11:48–13:35 UTC)

Below is a detailed, chronological breakdown of the official updates released during the outage.

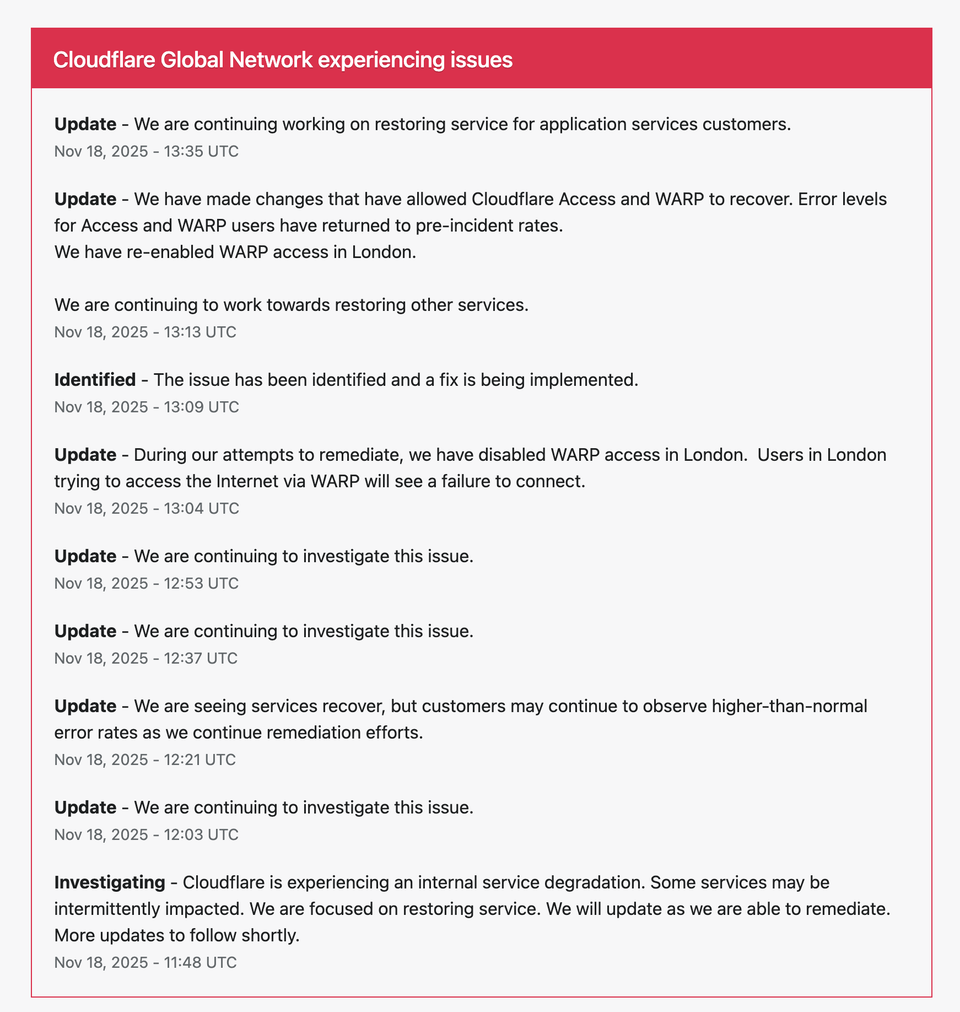

13:35 UTC — Working to Restore Application Service Customers

“We are continuing working on restoring service for application services customers.”

This indicates Cloudflare is now deep into recovery mode. Application services—such as Workers, Pages, web routing, and API delivery—are among the final components to be fully restored.

This confirms a broad system impact, not just isolated subsystems.

13:13 UTC — Access & WARP Fully Restored, WARP Re-enabled in London

“We have made changes that have allowed Cloudflare Access and WARP to recover. Error levels for Access and WARP users have returned to pre-incident rates.

We have re-enabled WARP access in London.

We are continuing to work towards restoring other services.”

This was a major step forward. Cloudflare’s Access (Zero Trust identity gateway) and WARP (secure connectivity/VPN) returned to normal error rates. London’s WARP access had been disabled earlier, meaning Cloudflare intentionally isolated the London PoP (Point of Presence) during the incident to stabilize the system.

Re-enabling WARP indicates:

- London infrastructure was stabilized

- Routing paths were validated

- Global Anycast propagation became consistent again

13:09 UTC — Issue Identified, Fix Being Implemented

“The issue has been identified and a fix is being implemented.”

This was the critical turning point. After nearly 90 minutes of continuous investigation, Cloudflare identified the root cause. Although no public technical explanation has been released yet, transitioning from investigate to fix means the root problem was likely internal and systemic.

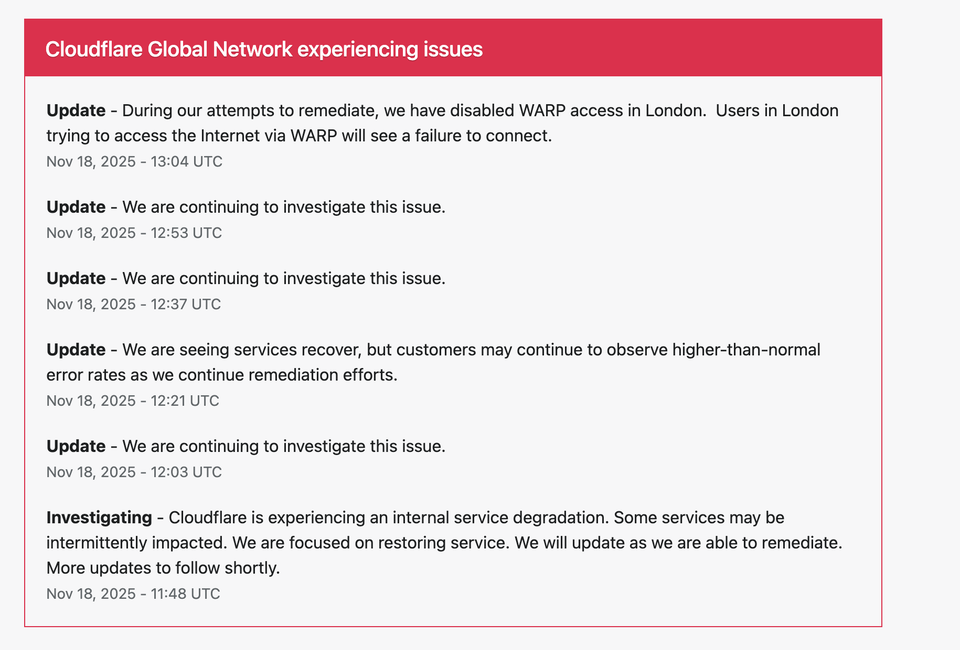

13:04 UTC — WARP Disabled in London (Temporary)

“We have disabled WARP access in London. Users in London trying to access the Internet via WARP will see a failure to connect.”

Cloudflare took an extraordinary step:

temporarily shutting down WARP in a major metropolitan region.

This suggests:

- London routing or PoP connectivity was unstable

- Traffic needed to be rerouted to prevent further cascading failures

- Cloudflare isolated London to protect global traffic flow

This step is extremely rare and highlights the severity of the instability.

12:53 UTC — Continued Investigation

“We are continuing to investigate this issue.”

At this point, no improvement was reported. The fix was still unknown.

12:37 UTC — Continued Investigation

“We are continuing to investigate this issue.”

At these timestamps, Cloudflare was still diagnosing the problem. The lack of additional information indicated a complex, multi-layered failure.

12:21 UTC — Partial Recovery Noted

“We are seeing services recover, but customers may continue to observe higher-than-normal error rates as we continue remediation efforts.”

This marked the first sign of improvement. Some global PoPs began stabilizing.

12:03 UTC — Continued Investigation

“We are continuing to investigate this issue.”

No change. Cloudflare engineers were still tracing the fault.

11:48 UTC — Initial Incident Report (Internal Service Degradation)

“Cloudflare is experiencing an internal service degradation. Some services may be intermittently impacted.”

This confirmed the earliest stage of the outage. The issue was classified as internal, ruling out:

- regional internet provider failures

- upstream backbone outages

- third-party routing incidents

Internal service degradation generally means problems within Cloudflare’s own core systems.

🌐 Global Impact: What Broke, and Why It Mattered

The outage affected multiple core Cloudflare services at once:

1. CDN & Edge Routing

- Widespread HTTP 500 errors

- Slow or failed website loading

- Multiple PoPs experiencing inconsistent behavior

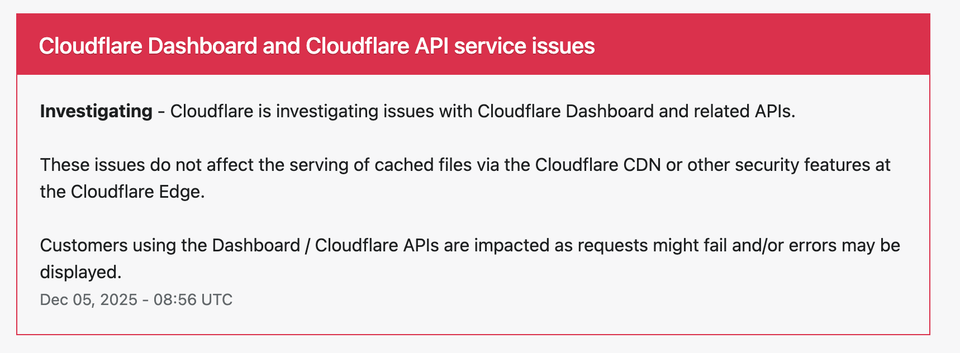

2. Cloudflare Dashboard

- Login errors

- Missing analytics

- Unable to modify DNS or firewall settings

3. Cloudflare API

- Automation workflows failing

- API calls timing out

- CI/CD deployments blocked

4. Cloudflare Access (Zero Trust)

- Users unable to authenticate

- Internal services unreachable

5. Cloudflare WARP

- Fully disabled in London

- Degradation elsewhere

6. Workers & Pages

- Deployment failures

- Scripts not executing correctly

7. DNS Services

- Intermittent resolution delays

Because Cloudflare sits in front of a massive portion of the world’s web traffic, the effects were instant and widespread.

🧠 What Might Have Caused the Outage?

Cloudflare has not yet provided a root-cause analysis, but the symptoms strongly suggest one of the following scenarios.

1. Global Configuration Push Gone Wrong (Most Likely)

Cloudflare regularly pushes live configuration updates across:

- WAF (Web Application Firewall)

- Firewall rules

- Caching logic

- Routing tables

- Reverse proxy logic

A faulty global configuration push could instantly break:

- request processing

- routing behavior

- WARP tunnels

- Access authentication

- internal API services

This would explain:

- widespread 500 errors

- WARP/Access failures

- dashboard outages

- regional issues like London

Cloudflare has had similar outages in the past (e.g., July 2020 WAF rule crash).

2. Internal Microservice Failure

Cloudflare depends on dozens of internal microservices:

- routing orchestration

- internal API gateways

- configuration registries

- authentication pipelines

If one of them fails, it can cascade.

3. Anycast/BGP Instability

Cloudflare’s network uses Anycast BGP routing so all PoPs share the same IPs.

If:

- route announcements become unstable

- some PoPs drop or defer traffic

- WARP routes diverge

…then global traffic becomes inconsistent.

Disabling London WARP fits this theory.

4. Overload or Cascading System Failure

If a central internal system becomes overloaded, it cascades across:

- DNS

- CDN

- proxy servers

- API endpoints

This is less likely, but still plausible.

💼 How Businesses and End Users Were Affected

E-commerce

- checkout failures

- slow product loads

- broken payment workflows

SaaS companies

- broken dashboards

- login failures

- admin interface outages

Financial services

- delayed API responses

- authentication issues

Remote teams (Zero Trust users)

- Access blocked

- private networks unreachable

Consumers

- WARP/VPN offline

- unstable browsing

Because Cloudflare sits between the customer and the web, its outages immediately impact user experience.

🔧 Where Recovery Stands Now

As of the latest update (13:35 UTC):

Recovered

✔ Cloudflare Access

✔ Cloudflare WARP

✔ WARP London restored

✔ Zero Trust authentication

✔ Error levels returned to normal for Access/WARP

Improving

⬆ Application services

⬆ Workers & Pages

⬆ API performance

Still being restored

🔧 Some application-level components

🔧 Analytics and dashboard stability

🔧 Regional traffic routing

Cloudflare engineers are now in the stabilization phase, which typically includes:

- verifying data center health

- rolling back faulty configs

- rebalancing traffic

- re-enabling automated systems

📌 Conclusion

Cloudflare’s global network outage was one of the more severe incidents in recent years, impacting key products simultaneously and requiring emergency isolation actions—such as disabling WARP in London—to maintain global stability.

The situation has now significantly improved:

- the root issue has been identified

- a fix is being deployed

- Access and WARP have fully recovered

- London WARP is re-enabled

- engineers are working to restore remaining services

The incident underscores how deeply the modern internet depends on Cloudflare, and how internal infrastructure failures can instantly ripple across global digital services.

A full post-incident report is expected from Cloudflare once the system is fully stabilized.