Cloudflare Outage Worsens: Widespread 500 Errors, Dashboard and API Failing as Investigation Continues


Cloudflare’s global network outage has escalated: the company has confirmed that widespread HTTP 500 errors, Dashboard failures, and API outages are now affecting a significant portion of its customer base. The disruption, which began earlier today, is impacting millions of users, businesses, and online services worldwide.

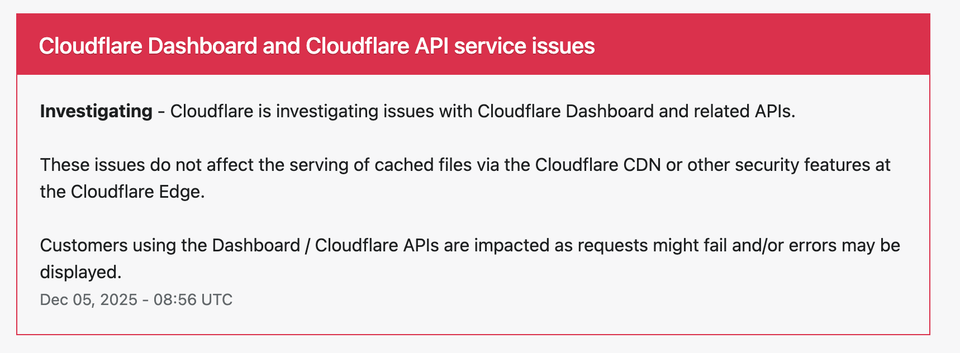

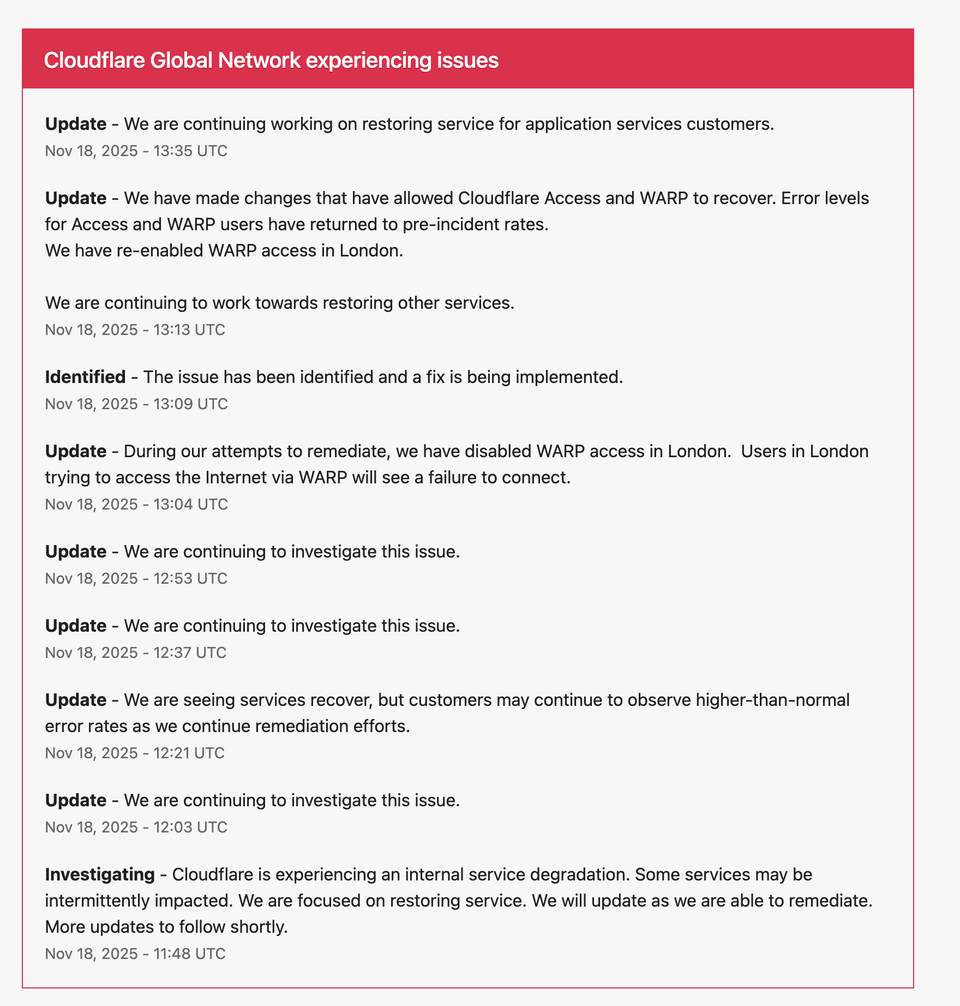

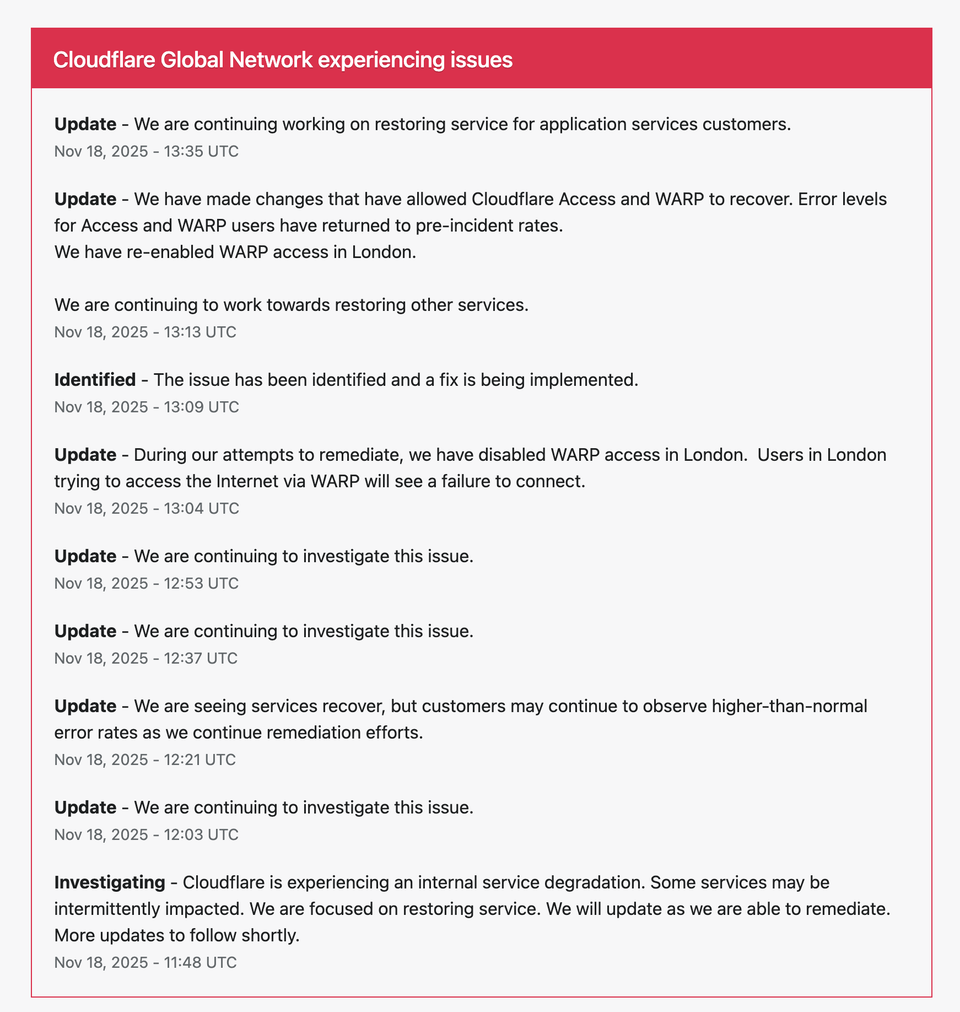

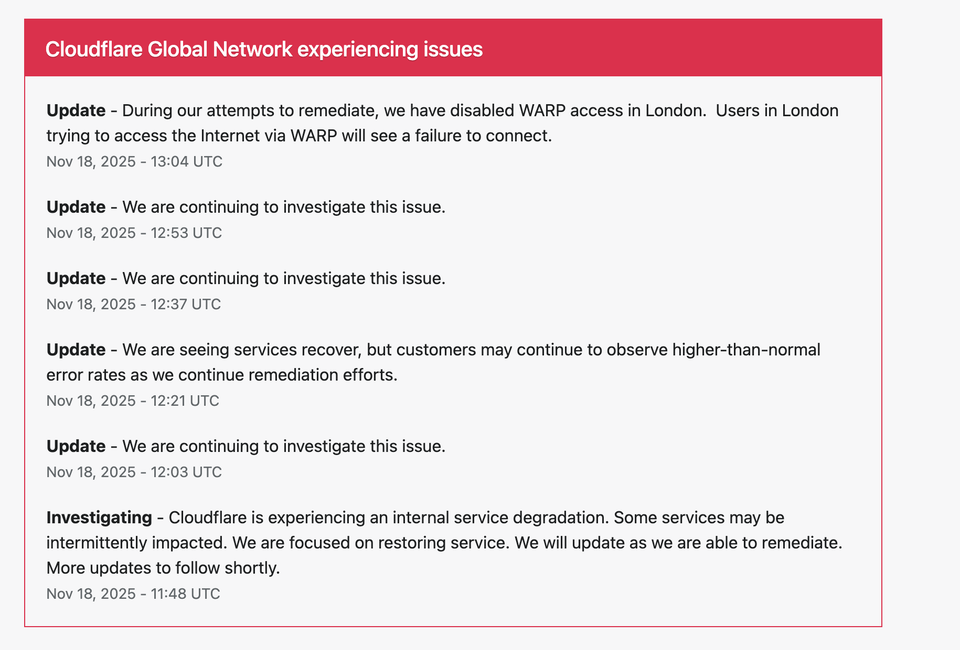

At 12:03 UTC, Cloudflare issued a new update:

“We are continuing to investigate this issue.”

Fifteen minutes earlier, at 11:48 UTC, a more detailed message stated:

“Widespread 500 errors, Cloudflare Dashboard and API also failing. We are working to understand the full impact and mitigate this problem.”

These messages indicate the situation is still ongoing and may be more severe than initially understood.

What Is Known So Far: A Multi-Layer Failure

Cloudflare has acknowledged failures across multiple critical components:

✔ Widespread HTTP 500 Errors

An HTTP 500 (Internal Server Error) response means a server failed to process a request it received. At this scale, the failures are resulting in:

- Websites not loading

- Backend applications failing

- API requests failing or timing out

- Authentication and login issues

- E-commerce systems breaking during checkout

500 errors at this scale usually point to a failure in a core system rather than a regional outage.
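For site operators trying to confirm whether the 500s their visitors see are passing through Cloudflare’s edge, response headers give a quick hint: responses proxied by Cloudflare normally carry a `CF-Ray` header and a `Server: cloudflare` header. The Python sketch below classifies a status code and header set on that assumption. A 5xx that passes through Cloudflare may still have been generated by the origin server, so this only shows the request went through Cloudflare, not where it failed. `classify_response` and `probe` are hypothetical helper names.

```python
import urllib.error
import urllib.request
from typing import Mapping


def classify_response(status: int, headers: Mapping[str, str]) -> str:
    """Roughly label an HTTP response seen during an incident.

    Assumption: a CF-Ray header, or a Server header equal to "cloudflare",
    means the response passed through Cloudflare's edge.
    """
    # HTTP header names are case-insensitive, so normalise them first.
    lowered = {name.lower(): value for name, value in headers.items()}
    through_cloudflare = (
        "cf-ray" in lowered or lowered.get("server", "").lower() == "cloudflare"
    )
    if status < 500:
        return "non-5xx"
    if through_cloudflare:
        # Could come from Cloudflare itself or from the origin behind it.
        return "5xx-through-cloudflare"
    return "5xx-not-through-cloudflare"


def probe(url: str, timeout: float = 10.0) -> str:
    """Fetch a URL once and classify the result (makes a real network call)."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return classify_response(resp.status, dict(resp.headers))
    except urllib.error.HTTPError as err:
        # urlopen raises HTTPError for 4xx/5xx; status and headers are still available.
        return classify_response(err.code, dict(err.headers))
```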

✔ Cloudflare Dashboard Outage

Users cannot:

- Log into the Cloudflare Dashboard

- Update DNS settings

- Modify firewall rules

- Access Zero Trust controls

- Manage Workers or Pages deployments

This limits businesses’ ability to respond to or mitigate the outage on their own.

✔ Cloudflare API Failure

APIs are critical for:

- Automated deployments

- Bot management updates

- DNS automation

- Security system integration

- SaaS platforms that rely on Cloudflare’s backend

When the API is down, many enterprise systems become partially or fully unusable.
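Automation that calls a failing API should back off rather than retry in a tight loop, which only adds load to an already struggling service. Below is a minimal Python sketch of capped exponential backoff with full jitter; `call_with_backoff` and `TransientAPIError` are hypothetical names, and `call` stands in for whatever client function your tooling uses to reach the Cloudflare API.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class TransientAPIError(Exception):
    """Hypothetical error type for a retryable API failure, such as an HTTP 5xx."""


def call_with_backoff(
    call: Callable[[], T],
    max_attempts: int = 5,
    base_delay: float = 1.0,
    max_delay: float = 60.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Retry `call` on TransientAPIError using capped exponential backoff with full jitter."""
    for attempt in range(max_attempts):
        try:
            return call()
        except TransientAPIError:
            if attempt == max_attempts - 1:
                raise  # Out of attempts: surface the last error to the caller.
            # The delay ceiling doubles each attempt (base, 2*base, 4*base, ...) up to max_delay.
            ceiling = min(max_delay, base_delay * (2 ** attempt))
            # Full jitter spreads retries out so many clients don't retry in lockstep.
            sleep(random.uniform(0, ceiling))
    raise RuntimeError("max_attempts must be at least 1")
```

Injecting `sleep` keeps the helper easy to test and lets callers substitute a logged or otherwise instrumented delay.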

Why This Outage Is More Serious Than Most Cloudflare Incidents

Cloudflare outages are not uncommon, but full-stack failures involving:

- CDN

- DNS

- API

- Dashboard

- Reverse proxy

- Firewall

…are rare and usually point to a large internal failure.

The company has not yet publicly identified the root cause. Based on historical patterns, incidents of this scale have typically been caused by one of the following:

1️⃣ BGP or global routing failure

A bad update to Cloudflare’s global routing configuration, such as a faulty BGP announcement, could cut multiple data centers off from the rest of the internet at once.

2️⃣ System-wide configuration push gone wrong

Cloudflare can push configuration changes to its entire global network within minutes.

A faulty rule could take down:

- WAF

- Rate limiting

- Workers

- Cache

- Load balancing
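A common way to limit the blast radius of a bad rule is to roll it out in stages and check health between them. The Python sketch below illustrates that general technique; it is not Cloudflare’s actual deployment process, and `staged_rollout`, `apply`, and `error_rate` are hypothetical names standing in for real deployment and monitoring systems.

```python
from typing import Callable, List, Sequence


def staged_rollout(
    datacenters: Sequence[str],
    stages: Sequence[float],
    apply: Callable[[str], None],
    error_rate: Callable[[List[str]], float],
    max_error_rate: float = 0.01,
) -> List[str]:
    """Push a config change in widening stages; stop widening if errors exceed a threshold.

    Returns the data centers that received the change, so they can be rolled back.
    """
    deployed: List[str] = []
    for fraction in stages:
        # How many data centers should have the change once this stage completes.
        target = max(1, round(len(datacenters) * fraction))
        for dc in datacenters[len(deployed):target]:
            apply(dc)
            deployed.append(dc)
        # Health gate: halt before the next, larger stage if the change looks harmful.
        if error_rate(deployed) > max_error_rate:
            break
    return deployed
```

With stages of 1%, 10%, and 100%, a rule that immediately drives up errors stops after the first stage instead of reaching every data center, and the returned list tells operators which locations need a rollback.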

3️⃣ Outage in a core internal service

If Cloudflare’s internal authentication, storage, routing, or caching service fails, the entire platform becomes unstable.
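One way to see why a single core service can destabilize a whole platform is to walk its dependency graph. The Python sketch below finds every service that directly or transitively depends on a failed one. The service names and dependencies in `SERVICES` are made up for illustration and do not describe Cloudflare’s real architecture.

```python
from collections import deque
from typing import Dict, List, Set


def blast_radius(depends_on: Dict[str, List[str]], failed: str) -> Set[str]:
    """Return every service that directly or transitively depends on `failed`."""
    # Invert the graph: for each service, record which services depend on it.
    dependents: Dict[str, List[str]] = {}
    for service, deps in depends_on.items():
        for dep in deps:
            dependents.setdefault(dep, []).append(service)

    # Breadth-first search outward from the failed service.
    impacted: Set[str] = set()
    queue = deque([failed])
    while queue:
        current = queue.popleft()
        for svc in dependents.get(current, []):
            if svc not in impacted:
                impacted.add(svc)
                queue.append(svc)
    return impacted


# Hypothetical service graph: each key lists the services it depends on.
SERVICES = {
    "dashboard": ["api", "auth"],
    "api": ["auth", "config-store"],
    "proxy": ["config-store"],
    "waf": ["config-store"],
    "workers": ["proxy"],
    "auth": [],
}

print(sorted(blast_radius(SERVICES, "config-store")))
# ['api', 'dashboard', 'proxy', 'waf', 'workers']
```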

4️⃣ Massive DDoS or attack-related overload

Cloudflare absorbs some of the world’s largest attacks.

If a mitigation layer breaks, edge servers can quickly become overloaded.

Impact on Businesses and the Global Internet

Because Cloudflare sits at the center of the global web, the consequences are significant:

Businesses Affected

- Online stores unable to process payments

- Apps unable to authenticate users

- APIs returning 500 errors

- Admin dashboards inaccessible

- Enterprises unable to modify DNS or security rules

Users Affected

- Websites not loading

- Login issues across dozens of services

- Mobile apps failing to sync

- Slow browsing or complete timeouts

Developers Affected

- Deployment pipelines failing

- Worker scripts broken

- Zero Trust login failures

- DNS changes unable to propagate

This type of outage impacts both the front-end and the administrative back-end of thousands of systems.
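Teams whose deployments depend on Cloudflare can pause pipelines during an incident instead of letting them fail halfway. The sketch below assumes that Cloudflare’s status page at cloudflarestatus.com exposes the standard Atlassian Statuspage `/api/v2/status.json` endpoint, whose `status.indicator` field is `none`, `minor`, `major`, or `critical`; verify that before wiring it into CI. `should_deploy` and `fetch_status` are hypothetical helpers.

```python
import json
import urllib.request

# Assumption: the standard Statuspage summary endpoint; confirm the URL before use.
STATUS_URL = "https://www.cloudflarestatus.com/api/v2/status.json"

# Statuspage indicator values, from least to most severe.
SEVERITY = {"none": 0, "minor": 1, "major": 2, "critical": 3}


def should_deploy(status_json: str, max_indicator: str = "minor") -> bool:
    """Return False if the reported incident level is worse than `max_indicator`."""
    indicator = json.loads(status_json)["status"]["indicator"]
    # Unknown indicators are treated as the worst case, so the gate fails closed.
    return SEVERITY.get(indicator, max(SEVERITY.values())) <= SEVERITY[max_indicator]


def fetch_status(url: str = STATUS_URL, timeout: float = 10.0) -> str:
    """Download the status document (makes a real network call)."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return resp.read().decode("utf-8")
```

If fetching the status page itself fails during a large incident, treating that as "do not deploy" keeps the gate failing closed.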

Scheduled Maintenance in Santiago (SCL) — Unrelated Event?

Cloudflare also reported:

“Scheduled maintenance in SCL (Santiago) datacenter between 12:00 and 15:00 UTC.”

However, Cloudflare was already reporting widespread errors at 11:48 UTC, before this maintenance window opened, and the outage affects all Cloudflare regions, making it unlikely that the Chilean datacenter maintenance is related.

Still, simultaneous events can complicate internal triage efforts.

What Happens Next?

Cloudflare’s team is actively investigating the core issue. Typically, for major incidents:

- Engineers isolate the failing component (routing, caching, API, etc.)

- They roll back recent changes

- Traffic is redistributed across healthy nodes

- Internal systems restart and propagate globally

- A public postmortem is published once resolved
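The "traffic is redistributed across healthy nodes" step can be sketched as renormalizing traffic weights over the nodes that pass health checks. This is a simplified Python illustration with hypothetical data center names and weights; it is not how Cloudflare’s load balancing actually works.

```python
from typing import Dict


def redistribute(weights: Dict[str, float], healthy: Dict[str, bool]) -> Dict[str, float]:
    """Drop unhealthy nodes and rescale the rest so their shares sum to 1."""
    # Nodes missing from the health map are treated as unhealthy.
    live = {node: w for node, w in weights.items() if healthy.get(node, False)}
    total = sum(live.values())
    if total == 0:
        raise RuntimeError("no healthy nodes with positive weight")
    # Healthy nodes keep their relative weights; only the total is renormalized.
    return {node: w / total for node, w in live.items()}


# Hypothetical example: SCL fails health checks, so its traffic moves to FRA and LHR.
shares = redistribute(
    {"fra": 2.0, "lhr": 1.0, "scl": 1.0},
    {"fra": True, "lhr": True, "scl": False},
)
print(shares)  # fra gets 2/3 of traffic, lhr gets 1/3
```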

Given the scale of the failure, recovery may take anywhere from minutes to several hours, depending on the underlying cause.

Conclusion: A Developing Situation With Growing Impact

The Cloudflare outage has expanded significantly, affecting nearly every layer of Cloudflare’s global network, from website delivery to administrative access. With widespread 500 errors, Dashboard unavailability, and API failure, the incident is now one of the most disruptive Cloudflare issues in recent years.

Cloudflare has not yet published a root cause, but investigations are ongoing, and more updates are expected shortly.

Until then, businesses and users should expect intermittent failures across any service relying on Cloudflare’s infrastructure.