How to Test APIs for Performance and Security

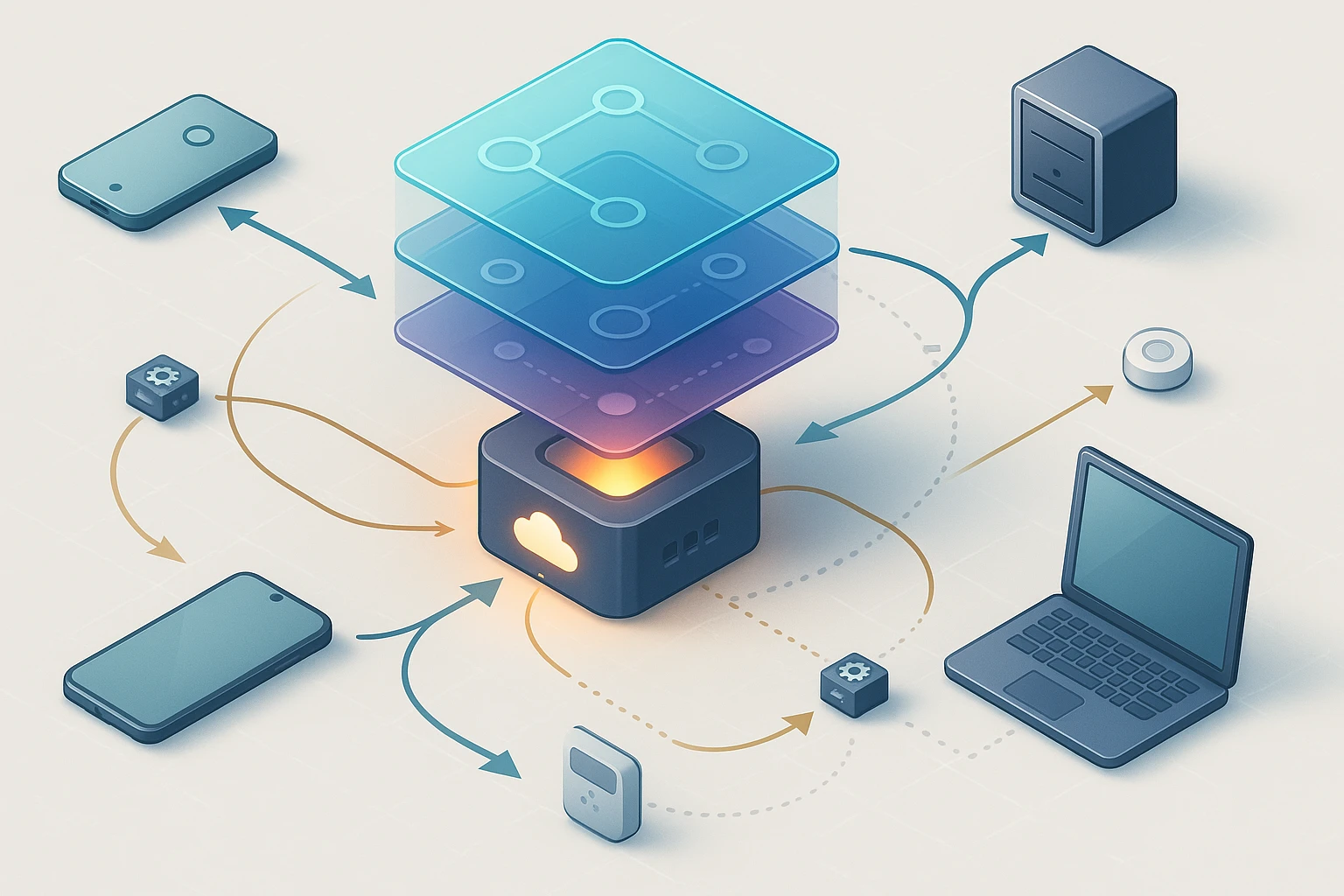

Illustration of an API testing workflow showing load testing, security scanning, request tracing, response validation, rate-limiting checks, metrics dashboards, logs, and CI alerts.


In today's interconnected digital landscape, Application Programming Interfaces (APIs) serve as the backbone of modern software architecture, carrying communication between systems, applications, and services. When these pathways perform poorly or become vulnerable to attack, the consequences can ripple through entire organizations, affecting everything from user experience to data integrity. As businesses rely more heavily on API-driven ecosystems to deliver value, stay competitive, and protect sensitive information, the cost of getting either dimension wrong keeps rising.

Testing these interfaces encompasses a comprehensive evaluation process that examines both how quickly and reliably they respond under various conditions, and how well they withstand potential attacks or unauthorized access attempts. This dual focus represents not just a technical necessity but a strategic imperative, as organizations must balance the demand for speed and functionality with the non-negotiable requirement for robust protection. The challenge lies in implementing methodologies that address both dimensions without compromise, creating systems that are simultaneously fast and fortified.

Throughout this exploration, you'll discover practical approaches to evaluating API behavior under stress, identifying vulnerabilities before they become exploits, and implementing testing frameworks that integrate seamlessly into development workflows. We'll examine specific tools, techniques, and best practices that professional teams use to ensure their interfaces meet demanding standards, along with real-world considerations that influence testing strategies. Whether you're establishing a testing program from scratch or refining existing processes, the insights ahead will equip you with actionable knowledge to elevate your API quality assurance.

Understanding Performance Testing Fundamentals

Performance testing for APIs differs fundamentally from traditional application testing because it focuses on the invisible transactions happening behind the scenes rather than user interface interactions. These tests measure how an interface handles requests under various conditions, examining metrics like response time, throughput, and resource utilization. The goal extends beyond simply confirming that an endpoint returns correct data; it encompasses understanding how that endpoint behaves when subjected to realistic and extreme loads.

Key Performance Metrics That Matter

When evaluating API performance, certain metrics provide critical insights into system health and user experience. Response time measures the duration between sending a request and receiving a complete response, typically broken down into network latency, processing time, and data transfer time. This metric directly impacts user satisfaction, as delays accumulate across multiple API calls within a single user action.

Throughput quantifies how many requests an API can process within a specific timeframe, usually expressed as requests per second. This measurement reveals capacity limits and helps teams understand scaling requirements. A closely related metric is concurrency: the number of simultaneous users or connections the system can sustain without degradation.
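Throughput, concurrency, and response time are linked by Little's Law. In a steady state, the average number of requests in the system equals throughput multiplied by the average time each request spends there. The sketch below applies this to sizing a closed-model load test. It assumes every virtual user waits a fixed think time between requests. The function names and the numbers are illustrative, not benchmarks.

```python
def required_virtual_users(target_rps: float, avg_response_s: float,
                           think_time_s: float = 0.0) -> float:
    """Little's Law (N = X * R) for a closed workload: each virtual user
    spends avg_response_s waiting on the API plus think_time_s pausing,
    so sustaining target_rps needs this many concurrent users."""
    if min(target_rps, avg_response_s, think_time_s) < 0:
        raise ValueError("inputs must be non-negative")
    return target_rps * (avg_response_s + think_time_s)


def in_flight_requests(target_rps: float, avg_response_s: float) -> float:
    """Average number of requests being processed by the API at once."""
    return target_rps * avg_response_s


# Illustrative figures: 500 req/s, 250 ms responses, 4.75 s think time.
print(required_virtual_users(500, 0.25, 4.75))
print(in_flight_requests(500, 0.25))
```

With these figures the test needs about 2,500 virtual users, yet only about 125 requests are inside the API at any moment. Keeping the two numbers distinct helps avoid under- or over-driving the system.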

Error rate tracking identifies the percentage of requests that fail or return unexpected results under load. Even a small increase in errors during peak usage can signal underlying issues with resource management, database connections, or third-party dependencies. Resource utilization metrics—including CPU usage, memory consumption, and network bandwidth—provide visibility into infrastructure efficiency and help identify bottlenecks.

| Metric Category | Measurement Focus | Typical Threshold | Business Impact |
|---|---|---|---|
| Response Time | End-to-end request completion | < 200 ms for critical endpoints | User satisfaction, conversion rates |
| Throughput | Requests processed per second | Varies by application scale | System capacity, scalability planning |
| Error Rate | Failed requests percentage | < 0.1% under normal load | Reliability, data integrity |
| CPU Utilization | Processor load percentage | < 70% sustained usage | Infrastructure costs, performance headroom |
| Memory Consumption | RAM usage patterns | No memory leaks, stable baseline | System stability, crash prevention |
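The numeric targets in the table can be encoded as an automated pass/fail check. In this minimal sketch, the `RunSummary` fields and the `check_thresholds` helper are hypothetical. Applying the response-time target to the 95th percentile is also an assumption. The limits themselves come from the table and should be tuned per application. The memory row describes a trend rather than a single number, so it is left out.

```python
from dataclasses import dataclass


@dataclass
class RunSummary:
    p95_ms: float       # 95th percentile response time of a critical endpoint
    error_rate: float   # failed requests / total requests, 0.0 to 1.0
    cpu_percent: float  # sustained CPU utilization during the run


# Upper limits from the table; measured values must stay strictly below them.
THRESHOLDS = {"p95_ms": 200.0, "error_rate": 0.001, "cpu_percent": 70.0}


def check_thresholds(run: RunSummary, limits: dict[str, float] = THRESHOLDS) -> list[str]:
    """Return one message per violated limit; an empty list means the run passed."""
    violations = []
    for metric, limit in limits.items():
        value = getattr(run, metric)
        if value >= limit:
            violations.append(f"{metric}={value} violates limit < {limit}")
    return violations


run = RunSummary(p95_ms=180.0, error_rate=0.002, cpu_percent=65.0)
for problem in check_thresholds(run):
    print("FAIL:", problem)
```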

Types of Performance Testing Approaches

Different testing methodologies reveal distinct aspects of API behavior. Load testing simulates expected user volumes to verify the system performs adequately under normal conditions. This baseline testing establishes performance benchmarks and validates that the API meets service level agreements during typical usage patterns.

Stress testing pushes the system beyond normal operational capacity to identify breaking points and understand failure modes. By gradually increasing load until the system fails, teams discover maximum capacity and observe how gracefully the application degrades. This information proves invaluable for capacity planning and incident response preparation.

Spike testing examines how the API handles sudden, dramatic increases in traffic—scenarios common during marketing campaigns, viral content, or coordinated events. These tests reveal whether auto-scaling mechanisms respond quickly enough and whether the system recovers properly after the spike subsides.

Soak testing, also called endurance testing, runs sustained load over extended periods to uncover issues like memory leaks, resource exhaustion, or gradual performance degradation. Problems that only manifest after hours or days of operation often escape detection in shorter test cycles.
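The four approaches differ mainly in the shape of the load over time. The minimal sketch below expresses those shapes as a function from elapsed seconds to target concurrent users. The durations, the 10% stress step, and the 10x spike are assumptions chosen for illustration, not recommended values.

```python
def target_users(profile: str, t: int, base: int = 100) -> int:
    """Target concurrent virtual users at second t for each test type.

    `base` is the expected (normal) number of concurrent users; all
    durations and multipliers are illustrative assumptions.
    """
    if profile == "load":
        # Ramp linearly to the expected volume over 60 s, then hold it.
        return min(base, base * t // 60)
    if profile == "stress":
        # Add 10% of base every 30 s with no ceiling, until the system breaks.
        return base + (base // 10) * (t // 30)
    if profile == "spike":
        # Normal traffic with a 60 s burst at 10x starting at t = 120 s.
        return base * 10 if 120 <= t < 180 else base
    if profile == "soak":
        # Constant expected load; the value lies in running it for hours.
        return base
    raise ValueError(f"unknown profile: {profile}")


print([target_users("spike", t) for t in range(0, 240, 60)])
```

A load generator would poll a function like this each second and start or stop virtual users to match. k6's `stages` option and Locust's `LoadTestShape` class express the same idea in their own configuration.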

"The difference between a system that works under test conditions and one that thrives in production often comes down to understanding behavior under sustained, realistic load patterns that mirror actual user behavior rather than idealized scenarios."

Establishing Performance Baselines

Before conducting meaningful performance tests, establishing baseline metrics provides a reference point for comparison. These baselines should reflect the API's behavior under controlled conditions with minimal load, capturing response times, resource usage, and throughput when the system operates without stress.

Baseline establishment requires running tests multiple times to account for environmental variability, then calculating average values and acceptable deviation ranges. This statistical approach helps distinguish between genuine performance degradation and normal fluctuation. Teams should document infrastructure configuration, dependencies, and test conditions to ensure reproducibility.
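The statistical approach can be as simple as a mean and standard deviation over repeated runs. This minimal sketch uses Python's `statistics` module. The three-standard-deviation tolerance and the sample numbers are assumptions. Teams with heavily skewed latency data may prefer percentile-based bands.

```python
import statistics


def baseline(samples_ms: list[float]) -> tuple[float, float]:
    """Mean and sample standard deviation of repeated baseline runs."""
    if len(samples_ms) < 2:
        raise ValueError("need at least two runs to estimate variability")
    return statistics.mean(samples_ms), statistics.stdev(samples_ms)


def is_regression(current_ms: float, samples_ms: list[float], k: float = 3.0) -> bool:
    """Flag a run slower than mean + k * stdev of the baseline runs."""
    mean, stdev = baseline(samples_ms)
    return current_ms > mean + k * stdev


# p95 latency (ms) from five runs under identical, documented conditions.
runs = [120.0, 118.0, 122.0, 121.0, 119.0]
print(baseline(runs))
print(is_regression(123.0, runs), is_regression(130.0, runs))
```

Here a 123 ms run falls within normal fluctuation, while 130 ms exceeds the tolerance band (about 124.7 ms) and would be investigated.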

Regular baseline updates become necessary as the application evolves. New features, code changes, and infrastructure modifications all impact performance characteristics. Comparing current performance against outdated baselines leads to misguided conclusions and missed optimization opportunities.

Implementing Effective Performance Testing

Translating performance testing theory into practice requires careful planning, appropriate tooling, and systematic execution. The implementation process begins with defining clear objectives based on business requirements and user expectations, then selecting tools and methodologies that align with those goals.

Choosing the Right Testing Tools

The performance testing ecosystem offers numerous tools, each with distinct strengths and ideal use cases. Apache JMeter remains a popular open-source option, providing comprehensive functionality for HTTP/HTTPS testing, database queries, and various protocols. Its GUI facilitates test plan creation, while command-line execution supports CI/CD integration.

Gatling appeals to teams preferring code-based test definitions, using Scala or Java to create scenarios. Its high-performance engine handles massive concurrent user simulations efficiently, and its detailed HTML reports provide excellent visualization of results. The tool particularly excels at identifying performance degradation patterns across complex user journeys.

k6 offers a modern, developer-friendly approach using JavaScript for test scripting. Its cloud execution option simplifies distributed load generation, while local execution keeps testing costs minimal. The tool integrates naturally with modern DevOps workflows and can stream real-time metrics to various monitoring platforms.

Cloud-based services such as BlazeMeter and LoadRunner Cloud reduce infrastructure management concerns and enable testing from multiple geographic locations. Artillery, an open-source tool, can likewise distribute load across cloud infrastructure. These platforms often include advanced features like test recording, collaborative test management, and integration with APM tools.

- 🎯 JMeter - Comprehensive open-source solution with extensive protocol support and plugin ecosystem

- 🚀 Gatling - High-performance testing with code-based scenarios and excellent reporting

- ⚡ k6 - Modern JavaScript-based tool with cloud execution and real-time metrics

- ☁️ Cloud platforms - Managed services offering distributed testing and geographic diversity

- 🔧 Locust - Python-based framework enabling custom test logic and distributed execution

Designing Realistic Test Scenarios

Effective performance tests mirror actual user behavior rather than simply hammering endpoints with uniform requests. Analyzing production traffic patterns reveals how real users interact with the API—which endpoints receive the most traffic, what sequences of calls typically occur, and how request rates vary throughout the day.

Test scenarios should incorporate think time—realistic pauses between requests that simulate users reading information, making decisions, or performing other actions. Without think time, tests generate unrealistically intense traffic that doesn't reflect genuine usage patterns. Variable think time, using random distributions within reasonable ranges, creates more authentic simulation.
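A virtual user that follows the production traffic mix and pauses between calls can be planned in a few lines. In this sketch, the endpoint names and weights in `ENDPOINT_WEIGHTS` are hypothetical stand-ins for figures taken from real traffic analysis. Think time is drawn uniformly between 1 and 5 seconds.

```python
import random

# Hypothetical mix derived from production logs: endpoint -> share of calls.
ENDPOINT_WEIGHTS = {"GET /products": 0.6, "GET /products/{id}": 0.3, "POST /cart": 0.1}


def session_plan(n_requests: int, rng: random.Random,
                 think_min_s: float = 1.0, think_max_s: float = 5.0) -> list[tuple[str, float]]:
    """Plan one virtual user's session: which endpoint to call next and how
    long to pause afterwards (uniformly random think time)."""
    endpoints = list(ENDPOINT_WEIGHTS)
    weights = list(ENDPOINT_WEIGHTS.values())
    plan = []
    for _ in range(n_requests):
        endpoint = rng.choices(endpoints, weights=weights, k=1)[0]
        think = rng.uniform(think_min_s, think_max_s)
        plan.append((endpoint, think))
    return plan


rng = random.Random(42)  # seeded so the plan is reproducible
for endpoint, think in session_plan(5, rng):
    print(f"{endpoint:<22} then wait {think:.2f}s")
```

Load tools offer the same controls natively. In Locust, for example, `wait_time = between(1, 5)` on a user class supplies the think time, and `@task(weight)` sets the endpoint mix.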

Request payload diversity matters significantly. Tests using identical data for every request may not expose performance issues related to data processing complexity, database query optimization, or caching effectiveness. Incorporating varied, realistic data ensures comprehensive evaluation.

Authentication and session management require special attention. Many APIs require tokens, cookies, or other session identifiers that must be obtained, maintained, and refreshed during testing. Properly simulating these authentication flows ensures tests accurately represent production behavior and identify authentication-related bottlenecks.
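Long-running tests fail in unrealistic ways if every virtual user logs in once and then carries an expired token, or logs in before every request. The sketch below is a per-user token cache that refreshes shortly before expiry. `fetch_token` stands in for a hypothetical login call, and the 30-second refresh margin is an assumption. An injectable clock makes the behavior testable without waiting.

```python
import time
from typing import Callable, Optional


class TokenCache:
    """Reuse one access token per virtual user and refresh it shortly
    before it expires. `fetch_token` is a hypothetical login call that
    returns (token, lifetime_seconds)."""

    def __init__(self, fetch_token: Callable[[], tuple[str, float]],
                 refresh_margin_s: float = 30.0,
                 clock: Callable[[], float] = time.monotonic):
        self._fetch = fetch_token
        self._margin = refresh_margin_s
        self._clock = clock
        self._token: Optional[str] = None
        self._expires_at = 0.0

    def get(self) -> str:
        now = self._clock()
        if self._token is None or now >= self._expires_at - self._margin:
            token, lifetime = self._fetch()
            self._token, self._expires_at = token, now + lifetime
        return self._token


# Demo with a fake clock and a fake login that issues 5-minute tokens.
fake_now = [0.0]
issued: list[str] = []


def fake_fetch() -> tuple[str, float]:
    issued.append(f"tok-{len(issued)}")
    return issued[-1], 300.0


cache = TokenCache(fake_fetch, clock=lambda: fake_now[0])
print(cache.get())   # first call logs in
fake_now[0] = 200.0
print(cache.get())   # still valid, so the token is reused
fake_now[0] = 280.0
print(cache.get())   # inside the 30 s margin, so it refreshes
```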

"Performance testing that ignores the nuances of real user behavior—the pauses, the varied data, the authentication complexities—produces metrics that look impressive in reports but fail to predict actual production performance under genuine load conditions."

Executing Tests and Analyzing Results

Test execution requires controlled environments that minimize external variables. Dedicated testing infrastructure, isolated from development and production systems, ensures consistent conditions. Network configuration, including bandwidth limitations and latency simulation, should reflect the environments where actual users operate.

Gradual load ramping prevents sudden traffic spikes that might trigger rate limiting or cause unrealistic failures. Starting with minimal load and incrementally increasing concurrent users allows the system to warm up—initializing caches, establishing database connections, and reaching steady-state operation before measurement begins.

During execution, real-time monitoring provides immediate visibility into system behavior. Watching response time distributions, error rates, and resource utilization as load increases helps identify the precise point where performance degrades. This live feedback enables quick iteration when unexpected issues emerge.

Result analysis extends beyond simple pass/fail determinations. Examining percentile distributions reveals how the slowest requests perform. The 95th and 99th percentile response times often matter more than averages, because they capture the tail latency that a real share of users encounters, while a handful of very slow requests barely moves the mean. Correlation analysis between load levels and response times identifies capacity limits and scaling characteristics.
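A small worked example shows why the tail matters. This sketch computes percentiles with the nearest-rank method over 100 synthetic latencies that include a slow tail. Nearest-rank is one of several common definitions, and tools that interpolate may report slightly different values.

```python
import math


def percentile(samples_ms: list[float], p: float) -> float:
    """Nearest-rank percentile: the smallest sample such that at least
    p percent of all samples are less than or equal to it."""
    if not samples_ms or not 0 < p <= 100:
        raise ValueError("need samples and 0 < p <= 100")
    ordered = sorted(samples_ms)
    rank = math.ceil(p * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]


# 100 synthetic requests: 94 fast ones and a slow tail of 6.
latencies = [50.0] * 94 + [400.0, 600.0, 800.0, 900.0, 1200.0, 1500.0]
mean = sum(latencies) / len(latencies)
print(f"mean={mean:.0f} ms  p95={percentile(latencies, 95):.0f} ms  "
      f"p99={percentile(latencies, 99):.0f} ms")
```

The mean here is 101 ms, which looks healthy, while the 95th percentile is 400 ms and the 99th is 1,200 ms.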

Comparing results across test runs highlights trends and validates that changes improve rather than degrade performance. Version control for test scripts and results enables historical analysis, showing how performance evolves as the application develops.

Security Testing Foundations

While performance testing ensures APIs respond quickly and handle load effectively, security testing verifies they resist attacks and protect sensitive data. These complementary disciplines require different mindsets—performance testing asks "how fast and how much," while security testing asks "what could go wrong and how can it be exploited."

Common API Security Vulnerabilities

The Open Worldwide Application Security Project (OWASP) maintains the OWASP API Security Top 10, a list of API-specific security risks that provides essential guidance for testing priorities. Broken object level authorization (BOLA) tops this list. It occurs when APIs fail to verify that users can only access resources they own. An attacker might manipulate object identifiers in requests to access other users' data.
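A BOLA check comes down to having each test user request objects that belong to someone else and expecting a refusal. In this sketch, `ORDERS`, `fake_get_order`, and the status codes are hypothetical stand-ins for a real endpoint that fetches an order by ID. In practice, `get_order` would issue HTTP requests with each user's credentials.

```python
from typing import Callable

# Hypothetical fixtures: order ID -> owning user.
ORDERS = {"order-a": "alice", "order-b": "bob"}


def fake_get_order(user: str, order_id: str) -> int:
    """Stand-in for an authenticated request that fetches one order and
    returns the HTTP status. This toy version enforces ownership."""
    owner = ORDERS.get(order_id)
    if owner is None:
        return 404
    return 200 if owner == user else 403


def find_bola(get_order: Callable[[str, str], int], users: list[str]) -> list[tuple[str, str]]:
    """Request every object as every user who does not own it and
    report each (user, object) pair that was served successfully."""
    leaks = []
    for user in users:
        for order_id, owner in ORDERS.items():
            if owner != user and get_order(user, order_id) == 200:
                leaks.append((user, order_id))
    return leaks


print(find_bola(fake_get_order, ["alice", "bob"]))              # ownership enforced
print(find_bola(lambda user, order_id: 200, ["alice", "bob"]))  # always-200 stub
```

Treating any non-200 status as a pass accommodates APIs that answer 404 instead of 403, which avoids revealing which IDs exist.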

Broken authentication encompasses various weaknesses in how APIs verify user identity, including weak password requirements, missing rate limiting on authentication endpoints, and improper token validation. These vulnerabilities enable account takeover attacks and unauthorized access.

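Excessive data exposure happens when APIs return more information than necessary and rely on clients to filter the data appropriately. Attackers can exploit this to gather sensitive information that should remain hidden, even from authenticated users. The 2023 edition of the OWASP API Security Top 10 folds this risk, together with mass assignment, into a category called broken object property level authorization.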
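Response inspection for excessive data exposure can start with an allowlist of the fields an endpoint is meant to return. The allowlist and the sample response below are hypothetical. This version checks only top-level keys, so nested objects would need a recursive walk.

```python
# Hypothetical allowlist of fields a public user-profile response may contain.
ALLOWED_USER_FIELDS = {"id", "display_name", "avatar_url"}


def unexpected_fields(payload: dict, allowed: set[str]) -> set[str]:
    """Return top-level fields present in a response but not in the allowlist."""
    return set(payload) - allowed


response = {"id": 7, "display_name": "sam", "avatar_url": "/a/7.png",
            "email": "sam@example.com", "password_hash": "redacted"}
print(sorted(unexpected_fields(response, ALLOWED_USER_FIELDS)))
```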

Injection attacks—including SQL injection, NoSQL injection, and command injection—occur when APIs fail to properly validate and sanitize input data. Malicious input can manipulate backend queries or commands, potentially exposing or modifying data.

Security misconfiguration represents a broad category encompassing improperly configured CORS policies, verbose error messages revealing system details, missing security headers, and outdated dependencies with known vulnerabilities. These issues often result from oversight rather than coding errors.

| Vulnerability Type | Attack Vector | Potential Impact | Testing Approach |
|---|---|---|---|
| Broken Object Level Authorization | Manipulating resource identifiers | Unauthorized data access | Parameter fuzzing, privilege escalation tests |
| Broken Authentication | Credential stuffing, token manipulation | Account takeover | Authentication flow analysis, token validation |
| Excessive Data Exposure | Analyzing API responses | Information disclosure | Response inspection, data minimization checks |
| Injection Vulnerabilities | Malicious input payloads | Data breach, system compromise | Input fuzzing, payload injection |
| Security Misconfiguration | Exploiting default settings | Varies with the misconfiguration | Configuration audits, header analysis |

Authentication and Authorization Testing

Verifying authentication mechanisms requires testing multiple scenarios beyond successful login. Attempting access without credentials should consistently return appropriate error codes rather than exposing system information. Testing with expired, malformed, or manipulated tokens reveals whether validation logic operates correctly.
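Negative authentication cases lend themselves to a table-driven test. To keep the sketch self-contained, it uses a toy HMAC-signed token of the form `user:expiry:signature` rather than a real format such as JWT. `SECRET`, `sign`, and `verify` are all hypothetical. Against a real API, each case would be sent as a request, and the check would expect a 401 with no internal details in the body.

```python
import hashlib
import hmac

SECRET = b"test-only-secret"  # hypothetical signing key for this sketch


def _signature(user: str, exp: str) -> str:
    return hmac.new(SECRET, f"{user}:{exp}".encode(), hashlib.sha256).hexdigest()


def sign(user: str, exp: int) -> str:
    """Issue a toy token 'user:expiry:signature' (not JWT)."""
    return f"{user}:{exp}:{_signature(user, str(exp))}"


def verify(token: str, now: int) -> str:
    """Return the user for a valid token; raise ValueError otherwise."""
    parts = token.split(":")
    if len(parts) != 3 or not parts[1].isdecimal():
        raise ValueError("malformed token")
    user, exp, sig = parts
    # Constant-time comparison of the presented and expected signatures.
    if not hmac.compare_digest(sig.encode(), _signature(user, exp).encode()):
        raise ValueError("bad signature")
    if now >= int(exp):
        raise ValueError("expired token")
    return user


def rejected(token: str, now: int) -> bool:
    try:
        verify(token, now)
    except ValueError:
        return True
    return False


good = sign("alice", exp=1_000)
cases = {
    "valid": (good, 500),
    "expired": (good, 1_000),
    "tampered user": (good.replace("alice", "admin", 1), 500),
    "malformed": ("not-a-token", 500),
    "empty": ("", 500),
}
for name, (token, now) in cases.items():
    print(f"{name:<14} rejected={rejected(token, now)}")
```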

Authorization testing examines whether authenticated users can only perform actions and access resources appropriate to their roles. This involves attempting operations with insufficient privileges, accessing other users' resources, and testing boundary conditions where permission levels change.

Session management testing evaluates how the API handles tokens or session identifiers. Tests should verify that tokens expire appropriately, that refresh mechanisms function securely, and that session termination properly invalidates credentials. Token predictability analysis ensures identifiers cannot be guessed or enumerated.

"Authorization testing reveals the difference between authenticating who you are and authorizing what you can do—a distinction that attackers exploit relentlessly when APIs verify identity but fail to properly check permissions for every single operation."

Input Validation and Injection Testing

Comprehensive input validation testing subjects every parameter to malicious and malformed input. This includes extremely long strings, special characters, null bytes, and various encoding schemes. APIs should reject invalid input gracefully without revealing internal system details through error messages.
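A fuzz list of malformed values can be replayed against every parameter. This sketch targets a hypothetical `validate_username` function with an assumed rule of 3 to 32 ASCII letters, digits, underscores, or hyphens. It checks that each hostile value is rejected with a generic message.

```python
import re

# Assumed rule: 3 to 32 ASCII letters, digits, underscores, or hyphens.
USERNAME_RE = re.compile(r"[A-Za-z0-9_-]{3,32}")


def validate_username(value: object) -> tuple[int, str]:
    """Return (HTTP status, message); the error message stays generic."""
    if not isinstance(value, str) or USERNAME_RE.fullmatch(value) is None:
        return 400, "invalid username"
    return 200, "ok"


FUZZ_INPUTS = [
    "",                             # empty
    "a" * 10_000,                   # extremely long
    "bob\x00admin",                 # embedded null byte
    "' OR '1'='1",                  # SQL metacharacters
    "<script>alert(1)</script>",    # markup
    "%2e%2e%2f",                    # URL-encoded ../
    "\uff42\uff4f\uff42",           # full-width Unicode lookalike of "bob"
    "bob\n",                        # trailing newline
    None,                           # wrong JSON type
]

for payload in FUZZ_INPUTS:
    status, message = validate_username(payload)
    # Every hostile value must be rejected without echoing internals.
    assert (status, message) == (400, "invalid username"), repr(payload)
print("all fuzz inputs rejected")
```

Using `fullmatch` with an explicit ASCII character class, rather than `\w` with anchors, avoids two common gaps: Unicode letters slipping through and a trailing newline passing a `$` check.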

SQL injection testing attempts to manipulate database queries through user-controlled input. Even when using parameterized queries or ORMs, configuration errors or dynamic query construction can introduce vulnerabilities. Testing involves submitting SQL syntax in parameters and observing whether the API behavior changes in ways that indicate query manipulation.
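The behavioral signal described above can be demonstrated end to end with Python's built-in `sqlite3` module. The table and the two lookup functions are hypothetical. The probe value should match no user, so a query that suddenly returns every row shows that the input changed the query's logic.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT, secret TEXT)")
conn.executemany("INSERT INTO users (name, secret) VALUES (?, ?)",
                 [("alice", "a-secret"), ("bob", "b-secret")])


def find_user_unsafe(name: str) -> list:
    # Vulnerable: user input is spliced directly into the SQL text.
    return conn.execute(f"SELECT name FROM users WHERE name = '{name}'").fetchall()


def find_user_safe(name: str) -> list:
    # Parameterized: the driver passes `name` as data, never as SQL.
    return conn.execute("SELECT name FROM users WHERE name = ?", (name,)).fetchall()


probe = "x' OR '1'='1"
print("unsafe:", find_user_unsafe(probe))  # the condition is now always true
print("safe:  ", find_user_safe(probe))    # no user has this literal name
```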

NoSQL injection targets document databases and key-value stores using different syntax but similar principles. Testing requires understanding the specific database technology and crafting payloads appropriate to that system.
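For MongoDB-style document stores, a common payload replaces a string with an operator object such as `{"$ne": null}`, so a login filter matches any password. This sketch of a hypothetical handler shows the defensive check a test should confirm: values that must be strings are rejected when they arrive as objects. No database is contacted.

```python
import json

PAYLOADS = [
    '{"username": "admin", "password": {"$ne": null}}',
    '{"username": {"$gt": ""}, "password": {"$gt": ""}}',
    '{"username": "admin", "password": {"$regex": ".*"}}',
]


def build_login_filter(body: str) -> dict:
    """Build a MongoDB-style query filter from a JSON login body
    (hypothetical handler; no database is contacted). Mirrors the classic
    vulnerable shape; real systems compare password hashes instead."""
    data = json.loads(body)  # invalid JSON raises JSONDecodeError, a ValueError
    if not isinstance(data, dict):
        raise ValueError("body must be a JSON object")
    username, password = data.get("username"), data.get("password")
    # The defense under test: operator objects never reach the query.
    if not isinstance(username, str) or not isinstance(password, str):
        raise ValueError("username and password must be strings")
    return {"username": username, "password": password}


for body in PAYLOADS:
    try:
        build_login_filter(body)
        print("ACCEPTED (vulnerable):", body)
    except ValueError:
        print("rejected:", body)
```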

Command injection testing attempts to execute system commands through vulnerable input fields. APIs that interact with the operating system, process files, or invoke external programs require particular scrutiny, as successful command injection can completely compromise the server.
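The safest pattern avoids the shell entirely and validates arguments against an allowlist. In this sketch, `thumbnail_argv` and the ImageMagick-style `convert` command line are hypothetical. The function only builds the argument list. That list would then be passed to `subprocess.run` without `shell=True`, so characters such as `;`, `|`, and `$(...)` are never interpreted. Nothing is executed here.

```python
import re

# Assumed allowlist for uploaded image names.
SAFE_NAME = re.compile(r"[A-Za-z0-9._-]{1,64}")


def thumbnail_argv(filename: str) -> list[str]:
    """Build the argument list for a hypothetical thumbnail command.

    The list would be passed to subprocess.run(argv) with the default
    shell=False, so the filename is one argument and is never parsed by
    a shell. Nothing is executed here.
    """
    # Reject anything outside the allowlist, plus a leading "-" that the
    # program could mistake for an option.
    if SAFE_NAME.fullmatch(filename) is None or filename.startswith("-"):
        raise ValueError("rejected filename")
    return ["convert", filename, "-resize", "128x128", f"thumb_{filename}"]


for probe in ["a.png; rm -rf /", "$(id).png", "a.png | nc host 1", "`id`", "-help", ""]:
    try:
        thumbnail_argv(probe)
        print("ACCEPTED:", repr(probe))
    except ValueError:
        print("rejected:", repr(probe))
```

When a shell truly cannot be avoided, `shlex.quote` escapes a single argument for POSIX shells. An argument list is still the simpler guarantee.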

Implementing Comprehensive Security Testing

Translating security awareness into effective testing requires systematic approaches, specialized tools, and integration into development workflows. Security testing shouldn't be a one-time audit but rather an ongoing process that evolves with the application.

Security Testing Tools and Frameworks

OWASP ZAP (Zed Attack Proxy) provides an accessible entry point for security testing, offering both automated scanning and manual testing capabilities. Its intercepting proxy functionality allows testers to modify requests in real-time, exploring how the API responds to unexpected input. The tool's active scanner automatically tests for common vulnerabilities, while passive scanning identifies issues without sending potentially harmful requests.

Burp Suite represents the professional standard for web application and API security testing. Its comprehensive toolkit includes advanced scanning, request manipulation, and vulnerability exploitation capabilities. The Repeater and Intruder tools facilitate systematic testing of parameters and authentication mechanisms.

Postman, while primarily known for API development, includes security testing features through its collection runner and scripting capabilities. Teams can create security test collections that verify authentication, check for common vulnerabilities, and validate security headers.

Specialized tools like sqlmap focus on specific vulnerability types, in this case SQL injection. These targeted tools often provide deeper analysis and exploitation capabilities than general-purpose scanners.

Static analysis tools examine API code without executing it, identifying potential vulnerabilities in source code. SonarQube, Checkmarx, and language-specific linters detect security anti-patterns, hardcoded secrets, and dangerous function usage.

Automated Security Scanning Integration

Integrating security scanning into CI/CD pipelines ensures continuous evaluation as code changes. Automated scans should run against every build, failing the pipeline when critical vulnerabilities are detected. This shift-left approach catches security issues early when they're less expensive to fix.

Dynamic Application Security Testing (DAST) tools analyze running applications, making actual requests and observing responses. These tools excel at finding runtime vulnerabilities that static analysis might miss, including configuration issues and authentication problems.

API specification testing validates that implementations match their OpenAPI or similar specifications, ensuring documented security requirements are actually enforced. Tools can automatically generate test cases from specifications, verifying that authentication requirements, parameter validation, and response schemas match documentation.
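One specification check needs no running server: listing every operation the OpenAPI document leaves open. In OpenAPI 3, the following rules apply:

- A top-level `security` list applies to all operations.
- An operation-level `security` list overrides the top-level one.
- An empty list removes authentication.
- An empty requirement object `{}` makes authentication optional.

This sketch takes an already-parsed spec; loading YAML would need a parser such as PyYAML. The example paths are hypothetical.

```python
# OpenAPI operation keys; other path-item keys (parameters, summary) are skipped.
HTTP_METHODS = {"get", "put", "post", "delete", "options", "head", "patch", "trace"}


def unauthenticated_operations(spec: dict) -> list[str]:
    """List operations whose effective security requirement is empty or optional."""
    global_security = spec.get("security", [])
    findings = []
    for path, item in spec.get("paths", {}).items():
        for method, operation in item.items():
            if method not in HTTP_METHODS:
                continue
            effective = operation.get("security", global_security)
            if not effective:
                findings.append(f"{method.upper()} {path}: no authentication")
            elif any(requirement == {} for requirement in effective):
                findings.append(f"{method.upper()} {path}: authentication optional")
    return findings


spec = {
    "openapi": "3.0.3",
    "security": [{"bearerAuth": []}],
    "paths": {
        "/health": {"get": {"security": []}},
        "/orders": {"get": {}, "post": {}},
        "/profile": {"get": {"security": [{}, {"bearerAuth": []}]}},
        "/admin/users": {"delete": {"security": []}},
    },
}
for finding in unauthenticated_operations(spec):
    print(finding)
```

The output is a review list, not a verdict. A public health check is expected, while an unauthenticated DELETE is worth investigating. The spec only states intent, so a DAST run still has to confirm that the server enforces it.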

Container and dependency scanning identifies known vulnerabilities in third-party libraries and base images. Tools like Snyk, Trivy (from Aqua Security), and Clair match components against databases of known CVEs (Common Vulnerabilities and Exposures) and alert teams when vulnerable components are detected.

"Automation handles the repetitive security checks that humans might skip under deadline pressure, but automated tools cannot replace the creativity and contextual understanding that manual security testing brings to discovering novel vulnerabilities specific to your business logic."

Manual Security Testing Techniques

While automated tools provide excellent coverage of known vulnerability patterns, manual testing uncovers business logic flaws and complex attack chains that tools cannot detect. This testing requires understanding both the application's intended functionality and creative thinking about how that functionality might be abused.

Business logic testing examines workflows and processes for flaws in their design. For example, an e-commerce API might properly validate individual operations but fail to prevent users from applying multiple discount codes, manipulating order totals through race conditions, or exploiting refund processes.

Parameter pollution testing submits multiple values for the same parameter or uses different encoding schemes to bypass validation. APIs might handle the first instance of a parameter correctly but process subsequent instances without validation, or different application layers might interpret parameters differently.
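Python's standard `urllib.parse.parse_qs` makes the ambiguity visible: it keeps every value of a repeated parameter, and each layer then decides which one counts. The first-versus-last split below is a hypothetical illustration of two layers disagreeing. It is followed by a strict parser that rejects duplicates outright.

```python
from urllib.parse import parse_qs

query = "role=user&role=admin"
values = parse_qs(query)["role"]  # parse_qs keeps every value, in order

# Two hypothetical layers reading the "same" parameter differently.
validator_sees = values[0]   # e.g. a gateway that validates the first value
handler_uses = values[-1]    # e.g. a framework that keeps the last value
print(values, validator_sees, handler_uses)


def parse_strict(query: str) -> dict[str, str]:
    """Reject any query string that repeats a parameter name."""
    parsed = parse_qs(query, keep_blank_values=True)
    duplicates = sorted(name for name, vals in parsed.items() if len(vals) > 1)
    if duplicates:
        raise ValueError(f"duplicate parameters: {duplicates}")
    return {name: vals[0] for name, vals in parsed.items()}
```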

Rate limiting verification ensures APIs properly restrict request frequency to prevent abuse. Testing involves making rapid successive requests and observing whether the API enforces documented limits. Effective rate limiting should typically apply per user or API key rather than globally. Throttled requests should receive a 429 Too Many Requests status with a Retry-After header indicating when to try again.
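The checks in this paragraph translate directly into a test harness. To keep the sketch runnable without a server, `FixedWindowLimiter` is a toy in-process limiter standing in for the API. `send(api_key, now)` is an assumed callable that returns a status code and headers; a real harness would issue HTTP requests instead.

```python
from dataclasses import dataclass, field
from typing import Callable

Send = Callable[[str, float], tuple[int, dict]]


@dataclass
class FixedWindowLimiter:
    """Toy stand-in for the API: `limit` requests per key per window."""
    limit: int
    window_s: int
    counts: dict = field(default_factory=dict)

    def handle(self, api_key: str, now: float) -> tuple[int, dict]:
        bucket = (api_key, int(now // self.window_s))
        self.counts[bucket] = self.counts.get(bucket, 0) + 1
        if self.counts[bucket] > self.limit:
            # Seconds until the current window ends.
            retry_after = self.window_s - int(now % self.window_s)
            return 429, {"Retry-After": str(retry_after)}
        return 200, {}


def check_rate_limit(send: Send, limit: int, now: float = 0.0) -> list[str]:
    """Send limit + 5 requests for one key, then one for a second key,
    and report any deviation from the documented behavior."""
    problems = []
    responses = [send("key-1", now) for _ in range(limit + 5)]
    if any(status != 200 for status, _ in responses[:limit]):
        problems.append("throttled before the documented limit")
    for status, headers in responses[limit:]:
        if status != 429:
            problems.append("request over the limit was not throttled")
            break
        if "Retry-After" not in headers:
            problems.append("429 response missing Retry-After")
            break
    if send("key-2", now)[0] != 200:
        problems.append("limit appears to be global, not per key")
    return problems


print(check_rate_limit(FixedWindowLimiter(limit=10, window_s=60).handle, limit=10))
```

An empty list means every check passed. Pointing the harness at a limiter that shares one counter across all keys makes the per-key check fail, which is exactly the global-limit mistake described above.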

Error handling analysis examines how APIs respond to unexpected conditions. Error messages should provide enough information for legitimate debugging without revealing sensitive system details. Stack traces, database error messages, and file paths in error responses help attackers understand system architecture.
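Error bodies collected during fuzzing can be scanned automatically for the details listed above. The patterns below are heuristic assumptions covering a few common formats: Python tracebacks, Java stack frames, some SQL error strings, and Unix paths. They will miss other stacks and are meant to flag responses for human review.

```python
import re

# Heuristic signatures of internals leaking into error bodies (assumed, not exhaustive).
LEAK_PATTERNS = {
    "python traceback": re.compile(r"Traceback \(most recent call last\)"),
    "java stack frame": re.compile(r"\bat [\w$.]+\([\w$]+\.java:\d+\)"),
    "sql error": re.compile(
        r"SQLSTATE|syntax error at or near|ORA-\d{5}|SQLITE_ERROR"
        r"|You have an error in your SQL syntax",
        re.IGNORECASE,
    ),
    "unix path": re.compile(r"(?:/home/|/var/www/|/usr/lib/)\S+"),
}


def leaks(body: str) -> list[str]:
    """Return the names of leak patterns found in an error response body."""
    return [name for name, pattern in LEAK_PATTERNS.items() if pattern.search(body)]


print(leaks('{"error": "invalid request"}'))
print(leaks('ERROR: syntax error at or near "OR"'))
```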

Penetration Testing and Red Team Exercises

Comprehensive security assessment includes penetration testing—authorized attempts to exploit vulnerabilities in controlled environments. Penetration testers adopt an attacker's mindset, chaining multiple smaller issues into significant compromises and exploring attack vectors that automated tools miss.

These exercises should follow a defined scope and rules of engagement, clearly specifying which systems can be tested, what techniques are permitted, and how to handle discovered vulnerabilities. Documentation of findings should include detailed reproduction steps, potential impact assessment, and remediation recommendations.

Red team exercises take this further, simulating realistic attack scenarios without advance notice to some team members. These exercises test not just technical security controls but also incident detection and response capabilities.

Integrating Testing into Development Workflows

Effective API testing doesn't exist in isolation but integrates seamlessly into development processes. This integration ensures testing happens consistently, results inform decision-making, and quality gates prevent vulnerable or poorly performing code from reaching production.

Shift-Left Testing Philosophy

The shift-left approach moves testing earlier in the development lifecycle, catching issues when they're easier and cheaper to fix. Developers run basic performance and security tests locally before committing code, receiving immediate feedback about potential problems.

Unit tests can include performance assertions, verifying that individual functions complete within acceptable timeframes. While these tests don't replace comprehensive performance testing, they prevent obvious performance regressions from entering the codebase.
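A performance assertion can live in an ordinary unit test. In this sketch, `build_index` is a hypothetical function under test. The 0.5-second budget is an assumption deliberately set far above the expected time, so the test catches order-of-magnitude regressions without flaking on a busy CI runner. Taking the best of three runs further reduces noise.

```python
import time
import unittest


def build_index(records: list[dict]) -> dict:
    """Hypothetical function under test: index records by their id."""
    return {record["id"]: record for record in records}


class BuildIndexPerformanceTest(unittest.TestCase):
    BUDGET_S = 0.5  # assumed budget, far above the expected runtime

    def test_indexes_100k_records_within_budget(self):
        records = [{"id": i, "name": f"item-{i}"} for i in range(100_000)]
        # Best of three runs, so one slow run on a noisy machine does not fail the test.
        best = min(self._elapsed(build_index, records) for _ in range(3))
        self.assertLess(best, self.BUDGET_S)

    @staticmethod
    def _elapsed(fn, arg) -> float:
        start = time.perf_counter()
        fn(arg)
        return time.perf_counter() - start
```

Running `python -m unittest` picks this test up alongside the rest of the suite.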

Security unit tests verify that security controls function correctly in isolation. Testing authentication functions, authorization logic, and input validation routines independently ensures these critical components work correctly before integration.

Continuous Testing in CI/CD Pipelines

CI/CD integration makes testing automatic and consistent. Every code commit triggers a testing pipeline that includes functional tests, performance checks, and security scans. This automation removes the temptation to skip testing under time pressure and ensures consistent quality standards.

Pipeline stages should progress from fast, focused tests to slower, comprehensive evaluations. Initial stages run unit tests and static analysis within minutes, providing quick feedback. Later stages execute integration tests, API contract validation, and security scans. Final stages might include performance testing against staging environments.

Quality gates enforce minimum standards, preventing deployment when critical issues are detected. These gates might require that all tests pass, that code coverage exceeds thresholds, that no high-severity security vulnerabilities exist, and that performance metrics meet defined SLAs.
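At its core, a quality gate is a function from pipeline results to a list of failures, with the CI step exiting non-zero when the list is not empty. The field names, the 80% coverage floor, and the 300 ms p95 SLA below are hypothetical settings; each team chooses its own.

```python
from dataclasses import dataclass


@dataclass
class PipelineResults:
    failed_tests: int
    coverage_percent: float
    high_severity_vulns: int
    p95_ms: float  # from the performance stage against staging


# Hypothetical gate settings; each team chooses its own.
MIN_COVERAGE_PERCENT = 80.0
P95_SLA_MS = 300.0


def gate(results: PipelineResults) -> list[str]:
    """Return the reasons to block deployment; empty means the gate passes."""
    failures = []
    if results.failed_tests:
        failures.append(f"failed tests: {results.failed_tests}")
    if results.coverage_percent < MIN_COVERAGE_PERCENT:
        failures.append(f"coverage {results.coverage_percent}% below {MIN_COVERAGE_PERCENT}%")
    if results.high_severity_vulns:
        failures.append(f"high-severity vulnerabilities: {results.high_severity_vulns}")
    if results.p95_ms > P95_SLA_MS:
        failures.append(f"p95 {results.p95_ms} ms above SLA of {P95_SLA_MS} ms")
    return failures


def exit_code(failures: list[str]) -> int:
    """Print each failure and return a process exit status for the CI step."""
    for failure in failures:
        print("GATE FAILED:", failure)
    return 1 if failures else 0


print(exit_code(gate(PipelineResults(0, 84.2, 1, 250.0))))
```

A pipeline script would end with `sys.exit(exit_code(gate(results)))`. Most CI systems mark a stage as failed on a non-zero exit status.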

"The most sophisticated testing tools and methodologies deliver minimal value when they run infrequently or only after problems reach production; continuous integration transforms testing from an occasional checkpoint into a constant guardian of quality."

Test Environment Management

Reliable testing requires stable, representative environments. Test environments should mirror production architecture, including load balancers, caching layers, and database configurations. Differences between test and production environments often explain why issues escape detection.

Data management presents particular challenges. Test databases need realistic data volumes and distributions to accurately represent production behavior, but must not contain actual customer data due to privacy concerns. Synthetic data generation and production data anonymization provide solutions, though each approach has tradeoffs.
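One common technique here is keyed pseudonymization. It replaces identifiers with an HMAC, so the same customer maps to the same fake value across tables. This preserves joins and distributions without exposing the real address. The key, the lower-casing normalization, the output format, and the `.test` domain (reserved for testing) are assumptions in this sketch.

```python
import hashlib
import hmac

# Hypothetical key; keep it in a secrets manager, never in the test environment.
PSEUDONYM_KEY = b"replace-with-a-managed-secret"


def pseudonymize_email(email: str) -> str:
    """Map an email to a stable, keyed pseudonym. Normalizing first (an
    assumption) means 'Ana@Example.com' and 'ana@example.com' get the same
    value, so joins across tables still line up."""
    normalized = email.strip().lower().encode()
    digest = hmac.new(PSEUDONYM_KEY, normalized, hashlib.sha256).hexdigest()
    return f"user_{digest[:16]}@example.test"


rows = [
    {"order_id": 1, "email": "Ana@Example.com"},
    {"order_id": 2, "email": "ana@example.com"},
    {"order_id": 3, "email": "li@example.org"},
]
masked = [{**row, "email": pseudonymize_email(row["email"])} for row in rows]
for row in masked:
    print(row)
```

This approach has a tradeoff. Anyone holding the key can re-link pseudonyms by hashing candidate addresses. Under regulations such as GDPR, the result therefore counts as pseudonymized rather than anonymized data, and the key must stay out of the test environment.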

Infrastructure as Code (IaC) ensures environment consistency by defining infrastructure through version-controlled configuration files. Tools like Terraform, CloudFormation, and Ansible create reproducible environments, eliminating configuration drift between test instances.

Containerization simplifies environment management by packaging applications with their dependencies. Docker containers ensure that tests run in identical environments regardless of where they execute, while Kubernetes orchestration facilitates scaling test infrastructure.

Monitoring and Observability

Testing provides snapshots of API behavior, but production monitoring delivers continuous visibility. Comprehensive observability includes metrics, logs, and traces that reveal how APIs perform under actual usage conditions.

Application Performance Monitoring (APM) tools track response times, error rates, and throughput in production. These metrics validate that performance test predictions match reality and alert teams when degradation occurs. Tools like New Relic, Datadog, and Dynatrace provide detailed transaction tracing that identifies bottlenecks.

Security monitoring detects attack attempts and suspicious patterns. Web Application Firewalls (WAFs) block common attacks while logging attempted exploits. Security Information and Event Management (SIEM) systems aggregate security logs, correlating events to identify sophisticated attacks.

Synthetic monitoring simulates user interactions from various locations, providing early warning when APIs become slow or unavailable. These active checks complement passive monitoring by detecting issues before they affect actual users.

Advanced Testing Considerations

As APIs grow more complex and critical to business operations, testing strategies must evolve to address sophisticated scenarios and emerging challenges. Advanced testing techniques handle edge cases, complex integrations, and specialized security concerns.

Microservices and Distributed System Testing

Microservices architectures complicate testing by distributing functionality across multiple independent services. Testing individual services in isolation misses integration issues, while testing the entire system becomes complex and slow.

Contract testing addresses this challenge by verifying that services communicate according to agreed-upon interfaces. Tools like Pact enable consumer-driven contract testing, where services define expectations for their dependencies. These contracts are validated independently, catching integration issues without requiring full system deployment.
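The core idea of consumer-driven contracts fits in a few lines: the consumer records the fields and types it actually relies on, and the provider's responses are checked against that record. The contract format and `verify_contract` below are hypothetical. They are much simpler than Pact, which also covers requests, provider states, and broker workflows.

```python
# Consumer-side expectation (hypothetical format, not Pact's): the fields the
# consumer reads from an order response and their JSON types.
ORDER_CONTRACT = {"id": str, "status": str, "total_cents": int, "items": list}


def verify_contract(response: dict, contract: dict[str, type]) -> list[str]:
    """Check a provider response against a consumer contract. Extra fields
    are fine; a missing field or a different type breaks the consumer."""
    problems = []
    for name, expected in contract.items():
        if name not in response:
            problems.append(f"missing field: {name}")
        elif type(response[name]) is not expected:  # exact match, so True is not an int
            problems.append(f"{name}: expected {expected.__name__}, "
                            f"got {type(response[name]).__name__}")
    return problems


provider_response = {"id": "o-1", "status": "paid", "total_cents": "1999",
                     "items": [], "currency": "EUR"}
print(verify_contract(provider_response, ORDER_CONTRACT))
```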

Chaos engineering intentionally introduces failures to verify system resilience. By randomly terminating services, introducing network latency, or causing resource exhaustion, teams discover how systems behave under adverse conditions. Tools like Chaos Monkey and Gremlin facilitate controlled chaos experiments.
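Chaos Monkey and Gremlin inject failures at the infrastructure level, but the same idea can be tried in-process first. This sketch is not how those tools work. `with_faults` is a hypothetical wrapper that adds random latency and raises a simulated connection error at a configurable rate. The experiment then confirms that the calling code falls back gracefully instead of crashing.

```python
import random
import time
from typing import Callable


class InjectedFault(ConnectionError):
    """Simulated dependency failure raised by the fault-injection wrapper."""


def with_faults(call: Callable[[], str], rng: random.Random,
                error_rate: float = 0.2, max_delay_s: float = 0.01) -> Callable[[], str]:
    """Wrap a dependency call so each invocation gets random extra latency
    and fails with probability error_rate."""
    def wrapped() -> str:
        time.sleep(rng.uniform(0, max_delay_s))  # injected latency
        if rng.random() < error_rate:
            raise InjectedFault("injected dependency failure")
        return call()
    return wrapped


def get_price_with_fallback(fetch: Callable[[], str]) -> str:
    """Code under test: must degrade gracefully when the dependency fails."""
    try:
        return fetch()
    except ConnectionError:
        return "price-unavailable"


rng = random.Random(7)  # seeded so the experiment is repeatable
flaky_price = with_faults(lambda: "19.99", rng, error_rate=0.5)
outcomes = [get_price_with_fallback(flaky_price) for _ in range(50)]
print(outcomes.count("price-unavailable"), "of 50 calls used the fallback")
```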

Service mesh technologies like Istio provide built-in observability and security features. They enable testing traffic routing, circuit breakers, and mutual TLS without modifying application code.

API Versioning and Backward Compatibility

APIs evolve over time, requiring careful management of changes to avoid breaking existing clients. Testing must verify that new versions maintain backward compatibility or that breaking changes are properly communicated and managed.

Compatibility testing runs existing test suites against new API versions, verifying that previously working functionality remains operational. This regression testing catches unintended breaking changes before they affect production clients.
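A first-pass compatibility check can diff the response schemas of two versions. This sketch assumes flat schemas expressed as field-to-type maps, a simplification of OpenAPI or JSON Schema documents. It treats removed fields and changed types as breaking, while added fields pass.

```python
def breaking_changes(old: dict[str, str], new: dict[str, str]) -> list[str]:
    """Compare two flat response schemas (field -> type name). Removing a
    field or changing its type breaks existing clients; adding fields does not."""
    problems = []
    for field_name, old_type in old.items():
        if field_name not in new:
            problems.append(f"removed: {field_name}")
        elif new[field_name] != old_type:
            problems.append(f"type changed: {field_name} {old_type} -> {new[field_name]}")
    return problems


v1 = {"id": "string", "amount": "integer", "status": "string"}
v2 = {"id": "string", "amount": "number", "state": "string", "currency": "string"}
print(breaking_changes(v1, v2))
```

Request-side changes need the mirror-image rule: a newly required request field breaks old clients, even though an added response field does not.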

Deprecation testing ensures that deprecated endpoints continue functioning during transition periods while new endpoints work correctly. Teams must test both old and new implementations simultaneously, verifying that migration paths function as documented.

Third-Party Integration Testing

APIs frequently depend on external services—payment processors, authentication providers, mapping services, and more. Testing these integrations presents challenges because external services are outside your control and may charge for API calls.

Service virtualization creates simulated versions of external dependencies, enabling testing without actually calling third-party APIs. These virtual services can simulate various response scenarios, including errors and edge cases that would be difficult to trigger with real services.
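A virtual service can be as small as a class that returns canned responses for a chosen scenario. The interface, scenarios, and status codes here are hypothetical, not any real payment provider's API. Dedicated service virtualization tools add request matching, recording, and network-level stubbing on top of the same idea.

```python
from dataclasses import dataclass


@dataclass
class ChargeResult:
    status: int
    body: dict


class VirtualPaymentProvider:
    """Stand-in for a third-party payment API (hypothetical interface).
    Each test picks a scenario, so declines and outages that the real
    provider cannot produce on demand become deterministic."""

    SCENARIOS = {
        "approved": ChargeResult(200, {"state": "approved"}),
        "declined": ChargeResult(402, {"state": "declined", "reason": "insufficient_funds"}),
        "rate_limited": ChargeResult(429, {"error": "too_many_requests"}),
        "outage": ChargeResult(503, {"error": "service_unavailable"}),
    }

    def __init__(self, scenario: str = "approved"):
        self.scenario = scenario
        self.calls: list[dict] = []  # recorded requests, for assertions

    def charge(self, amount_cents: int, token: str) -> ChargeResult:
        self.calls.append({"amount_cents": amount_cents, "token": token})
        return self.SCENARIOS[self.scenario]


def checkout(provider: VirtualPaymentProvider, amount_cents: int) -> str:
    """Code under test: maps provider outcomes to order states."""
    result = provider.charge(amount_cents, token="tok_test")
    if result.status == 200:
        return "paid"
    if result.status == 402:
        return "payment_failed"
    return "retry_later"


for scenario in VirtualPaymentProvider.SCENARIOS:
    print(scenario, "->", checkout(VirtualPaymentProvider(scenario), 1999))
```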

Contract verification ensures that your understanding of third-party APIs matches their actual behavior. Recording real API interactions and replaying them in tests catches changes in external service behavior that might break your application.

Compliance and Regulatory Testing

APIs handling sensitive data must comply with regulations like GDPR, HIPAA, PCI DSS, and others. Testing must verify that security controls meet regulatory requirements and that data handling practices align with privacy policies.

Data privacy testing verifies that personal information is properly protected, that users can exercise their rights (access, deletion, portability), and that consent mechanisms function correctly. Automated tests can verify encryption, access controls, and audit logging.

Audit trail testing ensures that all data access and modifications are logged with enough detail for compliance audits. Tests should verify that tampering with the logs is prevented or at least detectable, and that each entry contains the required information.
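One common way to make tampering detectable is a hash chain. Each entry stores a hash of its own content combined with the previous entry's hash, so altering any earlier entry breaks every link after it. The sketch below uses SHA-256 from Python's standard library. The entry layout and the `REQUIRED_FIELDS` schema are assumptions for illustration.

```python
import hashlib
import json

GENESIS = "0" * 64
REQUIRED_FIELDS = ("actor", "action", "resource", "timestamp")  # assumed audit schema


def entry_hash(prev_hash, record):
    """Chain each entry to its predecessor: sha256(previous hash + canonical JSON)."""
    canonical = json.dumps(record, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256((prev_hash + canonical).encode()).hexdigest()


def append(log, record):
    prev = log[-1]["hash"] if log else GENESIS
    log.append({"record": record, "hash": entry_hash(prev, record)})


def first_invalid_entry(log):
    """Return the index of the first altered or incomplete entry, or None if intact."""
    prev = GENESIS
    for i, entry in enumerate(log):
        incomplete = any(f not in entry["record"] for f in REQUIRED_FIELDS)
        if incomplete or entry["hash"] != entry_hash(prev, entry["record"]):
            return i
        prev = entry["hash"]
    return None


if __name__ == "__main__":
    log = []
    append(log, {"actor": "u1", "action": "read", "resource": "patient/9", "timestamp": "2024-05-01T10:00:00Z"})
    append(log, {"actor": "u1", "action": "update", "resource": "patient/9", "timestamp": "2024-05-01T10:05:00Z"})
    print(first_invalid_entry(log))  # None: chain intact
    log[0]["record"]["action"] = "list"  # simulate tampering
    print(first_invalid_entry(log))  # 0
```

A chain only proves internal consistency. Anyone able to rewrite the whole log can recompute every hash, so the latest hash should also be recorded somewhere the log writer cannot modify.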

Building a Comprehensive Testing Strategy

Effective API testing requires more than individual techniques—it demands a cohesive strategy that balances thoroughness with efficiency, automation with manual expertise, and prevention with detection.

Risk-Based Testing Prioritization

Not all APIs carry equal risk or importance. Critical endpoints handling financial transactions, personal data, or authentication deserve more intensive testing than informational endpoints with limited impact. Risk-based prioritization focuses testing resources where they provide maximum value.

Risk assessment considers multiple factors: the sensitivity of data handled, the potential business impact of failures, the complexity of implementation, and the likelihood of attack. High-risk APIs receive comprehensive testing including manual security reviews, extensive performance testing, and frequent regression testing.

Attack surface analysis identifies which parts of the API are exposed to potential attackers. Public endpoints accessible without authentication face higher risk than internal APIs behind multiple security layers. Testing intensity should reflect this exposure.

Documentation and Knowledge Sharing

Testing knowledge must be captured and shared across teams. Comprehensive documentation includes test strategies, test case libraries, tool configurations, and lessons learned from past issues.

API documentation should explicitly describe security expectations, performance requirements, and error handling behavior. This documentation guides both testing and development, ensuring shared understanding of requirements.

Runbooks document testing procedures, making them repeatable and accessible to team members. These guides should include setup instructions, execution steps, and result interpretation guidance.

Metrics and Continuous Improvement

Measuring testing effectiveness enables continuous improvement. Key metrics include defect detection rates, the percentage of issues found in testing versus production, test coverage percentages, and mean time to detect and resolve issues.

Retrospective analysis of production incidents reveals testing gaps. When issues escape to production, teams should examine why existing tests didn't catch them and implement new tests to prevent recurrence.

Testing efficiency metrics help optimize resource allocation. Track how long different test types take to execute, how often they find issues, and their cost-benefit ratio. This data informs decisions about where to invest testing effort.
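The metrics in the preceding paragraphs reduce to simple arithmetic over defect and test-run records. This is a minimal sketch. The `Defect` fields are assumptions, since teams differ on whether detection time starts when a change is merged or when it reaches users. Yield is measured as issues found per hour of suite runtime, one of several possible cost proxies.

```python
from dataclasses import dataclass
from datetime import datetime
from statistics import mean


@dataclass
class Defect:
    found_in: str         # "testing" or "production"
    introduced: datetime  # when the faulty change shipped (or was merged)
    detected: datetime
    resolved: datetime


def hours(delta):
    return delta.total_seconds() / 3600


def defect_metrics(defects):
    """Share of defects caught before production, plus mean time to detect/resolve."""
    caught = sum(1 for d in defects if d.found_in == "testing")
    return {
        "caught_in_testing_pct": 100 * caught / len(defects),
        "mttd_hours": mean(hours(d.detected - d.introduced) for d in defects),
        "mttr_hours": mean(hours(d.resolved - d.detected) for d in defects),
    }


def rank_by_yield(suites):
    """suites maps name -> (runtime_minutes, issues_found) over the same period.

    Returns (name, issues found per hour of runtime), highest yield first.
    """
    yields = [(name, found * 60 / minutes) for name, (minutes, found) in suites.items()]
    return sorted(yields, key=lambda item: item[1], reverse=True)
```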

"Testing maturity isn't measured by the sophistication of your tools or the comprehensiveness of your test suites, but by how effectively testing prevents production issues while enabling rapid, confident deployment of new functionality."

Team Skills and Training

Effective testing requires diverse skills spanning development, security, and operations. Teams need training in security principles, performance optimization, testing tools, and the specific technologies their APIs use.

Cross-functional collaboration breaks down silos between development, testing, security, and operations teams. Developers should understand security testing, security specialists should learn performance implications, and everyone should appreciate operational concerns.

Staying current with evolving threats and testing techniques requires ongoing learning. Security vulnerabilities evolve, new attack vectors emerge, and testing tools advance. Regular training, conference attendance, and community participation keep skills sharp.

Real-World Testing Scenarios and Solutions

Theoretical knowledge becomes valuable when applied to practical challenges. Examining common scenarios helps teams recognize similar situations in their own work and adapt proven solutions.

Handling Rate Limiting and Throttling

Testing APIs with rate limiting requires special consideration. Aggressive testing can trigger rate limits, causing test failures that don't represent actual issues. Solutions include using multiple API keys to distribute load, implementing retry logic that backs off exponentially and honors the Retry-After header, and coordinating with API providers to temporarily raise limits for testing.
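Retry logic of the kind described above might look like the following sketch. It honors a `Retry-After` value given in seconds and otherwise waits an exponentially growing, randomly jittered delay. The `send` callable and its `(status, headers)` return shape are assumptions. `Retry-After` may also carry an HTTP date, which this sketch does not parse and simply treats as absent.

```python
import random
import time


def send_with_backoff(send, max_attempts=5, base_delay=0.5, max_delay=30.0,
                      sleep=time.sleep, rng=random.random):
    """Call send() until it returns something other than 429, or attempts run out.

    send() returns (status_code, headers). A Retry-After given in seconds is
    honored; otherwise the wait is exponential backoff with full jitter.
    """
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(max_attempts):
        status, headers = send()
        if status != 429 or attempt == max_attempts - 1:
            return status, headers
        retry_after = headers.get("Retry-After", "")
        if retry_after.isdigit():
            delay = float(retry_after)
        else:
            # Full jitter: a random wait between 0 and the capped exponential delay.
            delay = rng() * min(max_delay, base_delay * 2 ** attempt)
        sleep(delay)
```

Injecting `sleep` and `rng` lets tests confirm the waiting behavior without actually pausing.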

Validating a rate-limiting implementation requires confirming that limits are enforced correctly. Error responses should also include headers indicating when requests can resume, typically HTTP 429 Too Many Requests with a Retry-After header. Tests should verify both that legitimate usage stays within limits and that excessive requests are properly rejected.
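Both halves of that check fit in one test. First, send exactly the allowed number of requests and expect success. Then send one more and expect a 429 with a usable `Retry-After`. The `FixedWindowLimiter` below is a hypothetical in-process stand-in for the API under test, so the example runs on its own. Against a real service, `send` would issue HTTP requests with a dedicated test key.

```python
import math
import time


class FixedWindowLimiter:
    """Toy system under test: at most `limit` requests per `window` seconds per client."""

    def __init__(self, limit, window, clock=time.monotonic):
        self.limit, self.window, self.clock = limit, window, clock
        self.windows = {}  # client -> (window_start, requests_in_window)

    def handle(self, client):
        now = self.clock()
        start, count = self.windows.get(client, (now, 0))
        if now - start >= self.window:
            start, count = now, 0  # a new window begins
        if count >= self.limit:
            return 429, {"Retry-After": str(math.ceil(start + self.window - now))}
        self.windows[client] = (start, count + 1)
        return 200, {}


def rate_limit_problems(send, limit):
    """Send `limit` requests that should succeed, then one that should be rejected."""
    problems = []
    for i in range(1, limit + 1):
        status, _ = send()
        if status != 200:
            problems.append(f"request {i} (within limit) got {status}")
    status, headers = send()
    if status != 429:
        problems.append(f"request {limit + 1} got {status}, expected 429")
    elif not headers.get("Retry-After", "").isdigit():
        problems.append("429 response lacks a numeric Retry-After header")
    return problems


if __name__ == "__main__":
    limiter = FixedWindowLimiter(limit=5, window=60, clock=lambda: 0.0)  # frozen clock
    print(rate_limit_problems(lambda: limiter.handle("client-a"), limit=5))  # []
```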

Testing Asynchronous and Event-Driven APIs

APIs that process requests asynchronously or communicate through events present unique testing challenges. An immediate response (often 202 Accepted) confirms only that the request was received. The actual processing happens later and can fail without any obvious indication to the caller.

Testing asynchronous operations requires polling for completion, subscribing to webhooks or events, or examining message queues to verify processing. Performance testing must account for the delay between request and completion, measuring end-to-end duration rather than just initial response time.
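Polling with a timeout, while timing from submission rather than from the first poll, might be sketched as follows. The `submit` and `get_status` callables, the job-ID flow, and the status names in `TERMINAL_STATES` are assumptions about the API. The injectable clock and sleep exist so the function can be tested without real waiting.

```python
import time

TERMINAL_STATES = {"succeeded", "failed"}  # assumed status values of the job API


def run_job_end_to_end(submit, get_status, timeout=30.0, interval=0.5,
                       clock=time.monotonic, sleep=time.sleep):
    """Submit an async job, poll until it finishes, and time the whole round trip.

    submit() returns a job ID (e.g. from a 202 Accepted response);
    get_status(job_id) returns the job's current state.
    """
    started = clock()
    job_id = submit()
    accepted_s = clock() - started
    while True:
        status = get_status(job_id)
        elapsed = clock() - started
        if status in TERMINAL_STATES:
            return {"status": status, "accepted_s": accepted_s, "end_to_end_s": elapsed}
        if elapsed >= timeout:
            raise TimeoutError(f"job {job_id} still {status!r} after {elapsed:.1f}s")
        sleep(interval)
```

Recording both `accepted_s` and `end_to_end_s` makes visible how much latency a fast initial response can hide.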

Event-driven architectures require testing message delivery, ordering, and idempotency. Tests should verify that events are published correctly and that subscribers receive them reliably. They should also verify that duplicate deliveries are processed idempotently, so a redelivered message has no additional effect.
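Two of those checks are easy to express directly. One is a consumer that ignores redelivered messages. The other is a scan of received sequence numbers for gaps or reordering. The sketch assumes each event carries a unique `id` and a per-stream `seq` starting at 1. The deposit example is hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class DepositConsumer:
    """Hypothetical subscriber that must cope with at-least-once delivery."""
    balance: int = 0
    processed_ids: set = field(default_factory=set)

    def handle(self, event):
        """Apply a deposit event once; return False for a redelivered duplicate."""
        if event["id"] in self.processed_ids:
            return False
        self.balance += event["amount"]
        self.processed_ids.add(event["id"])
        return True


def ordering_problems(sequence_numbers):
    """Flag gaps and out-of-order arrivals, assuming per-stream sequence numbers start at 1."""
    problems, expected = [], 1
    for seq in sequence_numbers:
        if seq < expected:
            problems.append(f"late or duplicate event {seq}")
        elif seq > expected:
            problems.append(f"gap: expected {expected}, got {seq}")
        expected = max(expected, seq + 1)
    return problems


if __name__ == "__main__":
    consumer = DepositConsumer()
    deliveries = [{"id": "e1", "amount": 100}, {"id": "e2", "amount": 50}, {"id": "e1", "amount": 100}]
    print([consumer.handle(e) for e in deliveries], consumer.balance)  # [True, True, False] 150
    print(ordering_problems([1, 3, 2]))  # ['gap: expected 2, got 3', 'late or duplicate event 2']
```

In production, the set of processed IDs would need durable storage updated atomically with the side effect. An in-memory set only illustrates the test.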

GraphQL-Specific Testing Considerations

GraphQL APIs introduce unique security and performance concerns. Query complexity attacks can overwhelm servers by requesting deeply nested data structures. Testing should include complexity analysis, verifying that queries exceeding defined thresholds are rejected.
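A depth limit is the simplest complexity check to test. The sketch below measures the deepest nesting of selection sets by scanning braces while skipping string literals and comments. `MAX_DEPTH` is an assumed limit. The scan does not expand fragments, which can hide depth. A production server should therefore enforce limits on the parsed query, and many GraphQL libraries provide depth or cost validation. Tests should then send queries just under and just over the threshold.

```python
MAX_DEPTH = 4  # assumed limit; pick one that fits your schema


def max_selection_depth(query):
    """Deepest nesting of selection sets ({ ... }) in a GraphQL document.

    Skips string literals and # comments. Fragments are not expanded, so a
    production check should run on the parsed document instead.
    """
    depth = deepest = 0
    in_string = in_comment = False
    prev = ""
    for ch in query:
        if in_comment:
            in_comment = ch != "\n"
        elif in_string:
            in_string = not (ch == '"' and prev != "\\")
        elif ch == "#":
            in_comment = True
        elif ch == '"':
            in_string = True
        elif ch == "{":
            depth += 1
            deepest = max(deepest, depth)
        elif ch == "}":
            depth -= 1
        prev = ch
    return deepest


NESTED = """
query {
  user(id: "1") {
    friends {
      friends {
        friends { name }
      }
    }
  }
}
"""

if __name__ == "__main__":
    depth = max_selection_depth(NESTED)
    print(depth, "rejected" if depth > MAX_DEPTH else "accepted")  # 5 rejected
```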

Authorization in GraphQL requires field-level enforcement, as clients can request arbitrary combinations of data. Testing must verify that authorization checks apply to every field, not just top-level queries.
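Field-level coverage can be made systematic by probing every (role, field) pair rather than hand-picking cases. In this sketch, `FIELD_POLICY`, the roles, and `execute` are hypothetical. In a real test, `execute` would send a query selecting only that field while authenticated as that role, and report whether the field's data came back. The `top_level_only` function simulates a common bug in which only the caller's login is checked.

```python
from itertools import product

# Hypothetical field-level policy: which roles may read each (type, field).
FIELD_POLICY = {
    ("User", "name"): {"user", "admin"},
    ("User", "email"): {"admin"},
    ("User", "salary"): {"admin"},
    ("Order", "total"): {"user", "admin"},
    ("Order", "card_last4"): {"admin"},
}
ROLES = ["anonymous", "user", "admin"]


def authorization_gaps(execute, policy=FIELD_POLICY, roles=ROLES):
    """Probe every (role, field) pair and report mismatches with the policy.

    execute(role, type_name, field_name) should run a query selecting just that
    field as that role and return True if the field's data came back.
    """
    gaps = []
    for role, (type_name, field_name) in product(roles, policy):
        expected = role in policy[(type_name, field_name)]
        actual = execute(role, type_name, field_name)
        if actual != expected:
            verdict = "can read" if actual else "is wrongly denied"
            gaps.append(f"{role} {verdict} {type_name}.{field_name}")
    return gaps


def top_level_only(role, type_name, field_name):
    # Simulated bug: only checks that the caller is logged in, never the field.
    return role != "anonymous"


if __name__ == "__main__":
    for gap in authorization_gaps(top_level_only):
        print(gap)
```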

Performance testing GraphQL APIs should include diverse query patterns, as performance varies dramatically based on which fields are requested and how deeply they are nested. Caching also works differently than in REST. Most GraphQL traffic is sent as POST requests to a single endpoint, so URL-based HTTP caching rarely applies, and application-level caching needs its own tests.

Mobile and IoT API Testing

APIs serving mobile applications and IoT devices face constraints around network reliability, bandwidth, and battery consumption. Testing should simulate poor network conditions, including high latency, packet loss, and intermittent connectivity.
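At the operating-system level, tools such as Linux `tc` with the `netem` queueing discipline can add delay and packet loss to real traffic. For client-side tests, a lighter option is to wrap the request function itself. The `DegradedNetwork` class below is a hypothetical sketch that injects random latency and simulated dropped connections. It uses a seeded generator so failures are reproducible.

```python
import random
import time


class DegradedNetwork:
    """Wrap a request function to simulate a poor mobile connection.

    Each call first waits a latency drawn uniformly from [min_latency, max_latency]
    seconds, then fails with probability loss_rate. A seeded generator keeps
    the sequence of delays and failures reproducible between runs.
    """

    def __init__(self, send, min_latency=0.2, max_latency=2.0, loss_rate=0.1,
                 seed=None, sleep=time.sleep):
        self.send = send
        self.min_latency, self.max_latency = min_latency, max_latency
        self.loss_rate = loss_rate
        self.rng = random.Random(seed)
        self.sleep = sleep

    def __call__(self, *args, **kwargs):
        self.sleep(self.rng.uniform(self.min_latency, self.max_latency))
        if self.rng.random() < self.loss_rate:
            raise ConnectionError("simulated dropped connection")
        return self.send(*args, **kwargs)


if __name__ == "__main__":
    flaky = DegradedNetwork(lambda: "ok", loss_rate=0.3, seed=42, sleep=lambda s: None)
    dropped = 0
    for _ in range(1000):
        try:
            flaky()
        except ConnectionError:
            dropped += 1
    print(f"{dropped} of 1000 calls dropped")
```

Pointing a client's retry or offline-queue logic at such a wrapper shows whether it recovers from intermittent connectivity. Packet loss is approximated here as whole-request failures.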

Payload size optimization becomes critical for mobile and IoT scenarios. Tests should verify that APIs support compression, that responses don't include unnecessary data, and that pagination works correctly for large datasets.
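Both checks are straightforward to automate. The sketch measures how much gzip would shrink a JSON payload, then walks a cursor-paginated endpoint to confirm every record appears exactly once. The `fetch_page(cursor) -> (items, next_cursor)` contract and the in-memory endpoint are assumptions for illustration. Against a real API, you would also confirm that the server actually returns `Content-Encoding: gzip` when the client sends `Accept-Encoding: gzip`.

```python
import gzip
import json


def gzip_savings(payload):
    """Fraction of bytes gzip saves on the JSON encoding of `payload`."""
    raw = json.dumps(payload).encode()
    return 1 - len(gzip.compress(raw)) / len(raw)


def pagination_problems(fetch_page, expected_total, max_pages=10_000):
    """Walk a cursor-paginated endpoint and check each item appears exactly once.

    fetch_page(cursor) returns (items, next_cursor); next_cursor is None on the
    last page. Items are assumed to carry a unique "id".
    """
    seen, problems, cursor = set(), [], None
    for _ in range(max_pages):
        items, cursor = fetch_page(cursor)
        for item in items:
            if item["id"] in seen:
                problems.append(f"duplicate id {item['id']}")
            seen.add(item["id"])
        if cursor is None:
            break
    else:
        problems.append(f"no last page after {max_pages} requests")
    if len(seen) != expected_total:
        problems.append(f"saw {len(seen)} unique items, expected {expected_total}")
    return problems


# Hypothetical in-memory endpoint: 25 records served 10 per page.
RECORDS = [{"id": i, "status": "active"} for i in range(1, 26)]


def fetch_page(cursor, page_size=10):
    start = cursor or 0
    end = start + page_size
    return RECORDS[start:end], (end if end < len(RECORDS) else None)


if __name__ == "__main__":
    print(pagination_problems(fetch_page, expected_total=len(RECORDS)))  # []
    print(f"gzip saves {gzip_savings(RECORDS):.0%} on this payload")
```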

Device diversity creates testing challenges, as different devices have varying capabilities and behavior. Testing should cover representative device types, operating system versions, and network conditions.

Frequently Asked Questions

How often should performance and security testing be conducted?

Testing frequency depends on deployment cadence and risk tolerance. At minimum, comprehensive testing should occur before major releases, but modern practices favor continuous testing integrated into CI/CD pipelines. Performance regression tests should run with every significant code change, while security scans can execute daily or with each commit. Quarterly or semiannual penetration testing by external specialists provides additional assurance. High-risk APIs or those handling sensitive data warrant more frequent comprehensive testing.

What's the difference between performance testing and load testing?

Performance testing is the broader category encompassing various types of testing that evaluate how systems behave under different conditions. Load testing specifically examines performance under expected normal and peak usage levels. Other performance testing types include stress testing (pushing beyond capacity), spike testing (sudden traffic increases), soak testing (sustained load over time), and scalability testing (how performance changes as resources scale). Each type reveals different aspects of system behavior and capacity.
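The differences between these types are easiest to see as load shapes. The sketch below expresses each as a list of `(duration_seconds, target_virtual_users)` stages, in the spirit of the staged ramps many load-testing tools accept. The durations, multipliers, and helper names are illustrative assumptions. Scalability testing varies resources rather than the load curve, so it is not shown.

```python
# Each profile is a list of (duration_seconds, target_virtual_users) stages.
# The generator ramps linearly from the previous target to each stage's target,
# so a stage whose target equals the previous one is a steady hold.


def load_profile(expected_peak):
    """Ramp to expected peak, hold it, ramp down."""
    return [(300, expected_peak), (1800, expected_peak), (300, 0)]


def stress_profile(expected_peak, steps=5, increment=0.5):
    """Step past expected peak in fixed increments (default +50%) to find the breaking point."""
    stages = [(300, expected_peak)]
    for i in range(1, steps + 1):
        target = int(expected_peak * (1 + increment * i))
        stages += [(60, target), (240, target)]  # ramp, then hold
    return stages + [(300, 0)]


def spike_profile(baseline, multiplier=10):
    """Jump from baseline to a sudden burst and back."""
    spike = baseline * multiplier
    return [(300, baseline), (10, spike), (120, spike), (10, baseline), (300, baseline)]


def soak_profile(typical, hours=8):
    """Hold typical load for hours to expose leaks and slow degradation."""
    return [(300, typical), (hours * 3600, typical), (300, 0)]


def users_at(stages, t):
    """Target virtual users t seconds into the run, interpolating within a stage."""
    previous, elapsed = 0, 0
    for duration, target in stages:
        if t <= elapsed + duration:
            return previous + (target - previous) * (t - elapsed) / duration
        previous, elapsed = target, elapsed + duration
    return previous
```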

Can automated security testing replace manual penetration testing?

Automated tools excel at finding known vulnerability patterns and performing repetitive checks, but they cannot replace human creativity and contextual understanding. Manual testing discovers business logic flaws, complex attack chains, and novel vulnerabilities that automated tools miss. The most effective approach combines automated scanning for broad coverage with periodic manual testing for depth. Automation handles continuous monitoring and regression prevention, while manual testing provides comprehensive assessment of high-risk areas.

How do you test APIs that depend on third-party services?

Third-party dependencies complicate testing, but several strategies help. Service virtualization creates simulated versions of external APIs for testing without actual calls. Contract testing verifies that your integration matches the third-party API's behavior. Recording real interactions and replaying them in tests provides realistic scenarios. When live testing is unavoidable, use the sandbox environments that many providers offer. Implement circuit breakers and fallback mechanisms, then test these resilience patterns to ensure graceful degradation when dependencies fail.
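Testing graceful degradation means forcing the dependency to fail and asserting on what the caller sees. The sketch uses a deliberately minimal, single-threaded circuit breaker, written for illustration rather than taken from any particular library. A test would check two behaviors. After `threshold` consecutive failures, the breaker stops calling the dependency and serves the fallback. After `reset_after` seconds, it lets a trial call through.

```python
import time


class CircuitBreaker:
    """Minimal breaker: opens after `threshold` consecutive failures, then
    short-circuits to the fallback until `reset_after` seconds have passed,
    when one trial call is allowed through (half-open)."""

    def __init__(self, threshold=3, reset_after=30.0, clock=time.monotonic):
        self.threshold, self.reset_after, self.clock = threshold, reset_after, clock
        self.failures = 0
        self.opened_at = None

    def call(self, operation, fallback):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_after:
                return fallback()  # open: don't touch the dependency
            self.opened_at = None  # half-open: allow one trial call
            self.failures = self.threshold - 1  # a single failure re-opens
        try:
            result = operation()
        except Exception:  # any dependency error counts as a failure here
            self.failures += 1
            if self.failures >= self.threshold:
                self.opened_at = self.clock()
            return fallback()
        self.failures = 0
        return result
```

A test drives the breaker with an injected clock and a dependency that always raises. It can then assert that the dependency stops being called once the breaker opens and that cached or default data is served instead.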

What metrics indicate that API performance testing is effective?

Effective performance testing manifests in several ways: production performance matches test predictions, capacity planning accurately forecasts resource needs, performance regressions are caught before deployment, and performance-related incidents decrease over time. Specific metrics include the percentage of performance issues found in testing versus production, the accuracy of load projections, test environment fidelity to production, and the time required to identify performance bottlenecks. Regular comparison of test results with production monitoring data validates testing effectiveness.

How do you balance security with performance in API testing?

Security and performance aren't inherently opposed but require thoughtful implementation. Some security measures like encryption and authentication add overhead, making performance testing of secured endpoints essential. Test both dimensions together rather than separately—performance tests should use realistic authentication, while security tests should consider performance implications of controls. Optimize security implementations without compromising protection, using techniques like connection pooling, caching, and efficient algorithms. Monitor both metrics in production to catch tradeoffs early.
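One way to keep an eye on security overhead is to run the same scenario under two configurations and compare tail latency. For instance, you might measure before and after enabling a new control in a test environment. This is a minimal sketch using Python's `statistics.quantiles`. The function names and the latency budget are assumptions.

```python
from statistics import quantiles


def p95(samples_ms):
    """95th percentile: the last of the 19 cut points quantiles() returns for n=20."""
    return quantiles(samples_ms, n=20)[-1]


def auth_overhead(baseline_ms, authenticated_ms, budget_ms):
    """Compare p95 latency of one scenario run without and with the security control.

    Returns (overhead_ms, within_budget); budget_ms is an assumed team target.
    """
    overhead = p95(authenticated_ms) - p95(baseline_ms)
    return overhead, overhead <= budget_ms
```

Comparing percentiles rather than averages keeps occasional slow token validations or TLS handshakes from being hidden by the many fast requests.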