How to Use map() and filter() Functions

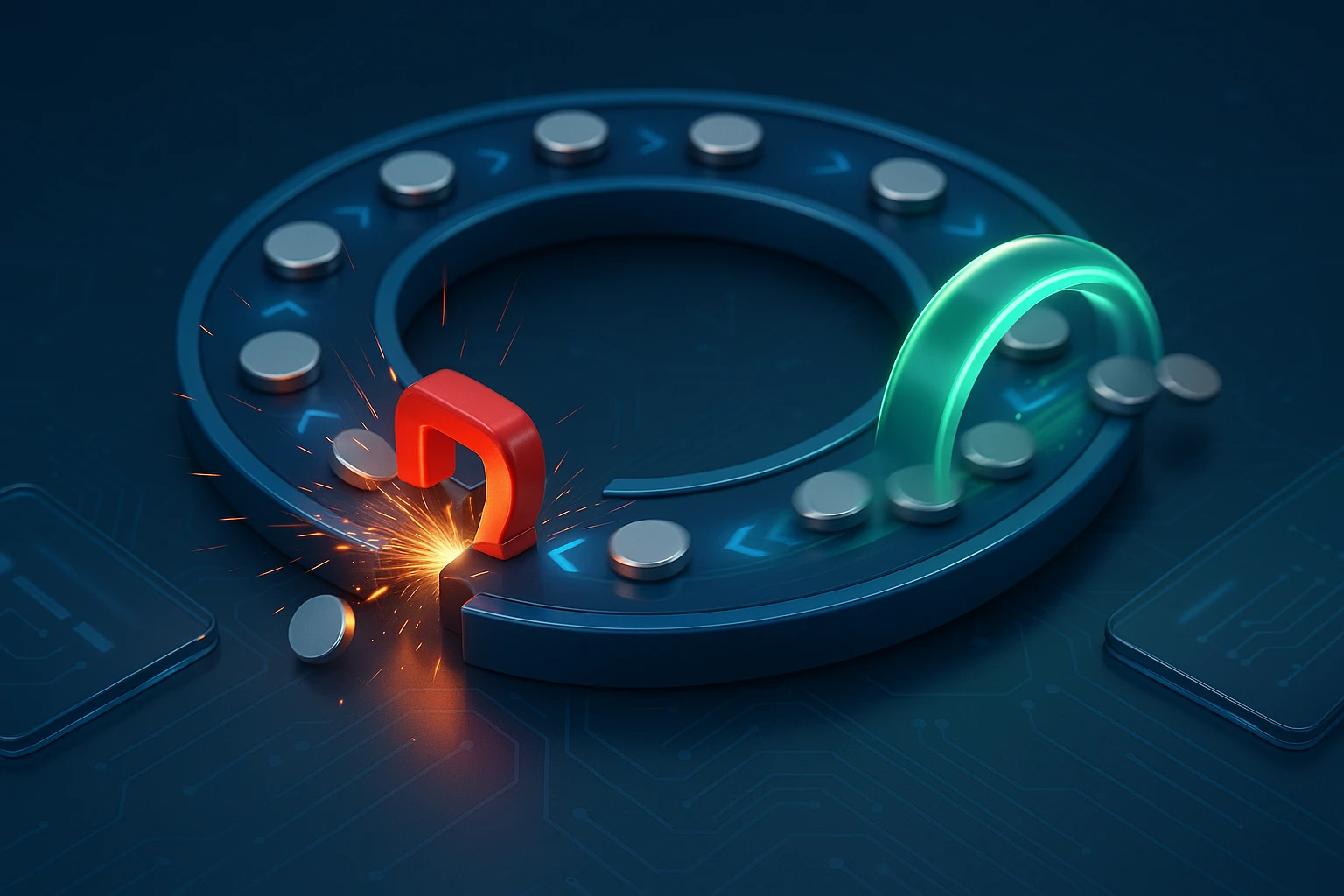

Diagram showing map() applying a function to each item in a list to produce a transformed list, and filter() selecting items by predicate, with arrows, sample arrays, and notes.

Every developer faces the challenge of processing collections of data efficiently and elegantly. Whether you're transforming user inputs, cleaning datasets, or preparing information for display, the way you handle arrays can make the difference between clunky, hard-to-maintain code and clean, professional solutions. The map() and filter() functions represent fundamental tools that separate novice programmers from those who write production-ready code.

These two methods belong to the family of higher-order functions—operations that take other functions as arguments and apply them systematically across data structures. They provide declarative approaches to data transformation and selection, allowing you to express what you want to achieve rather than how to achieve it through manual loops. Both functions return new arrays without modifying the original data, embracing immutability principles that prevent unexpected side effects in your applications.

Throughout this exploration, you'll discover practical implementations, performance considerations, real-world use cases, and best practices that professionals rely on daily. You'll learn when to use each function, how to combine them effectively, and which common pitfalls to avoid. By understanding these powerful array methods deeply, you'll write more maintainable code, reduce bugs, and communicate your intentions more clearly to other developers reading your work.

Understanding the Core Mechanics of map()

The map() function creates a new array by applying a transformation function to every element in an existing array. Think of it as a production line where each item passes through the same modification process. The original array remains untouched, while a completely new array emerges with transformed values. This method accepts a callback function that receives three parameters: the current element, the index of that element, and the entire array being traversed.

"The beauty of map() lies in its predictability—one input element always produces exactly one output element, maintaining the array's length while transforming its contents."

When you invoke map(), JavaScript iterates through each element sequentially, executes your callback function, and collects the returned values into a new array. The callback function must return a value for each iteration; otherwise, undefined will appear in the resulting array at those positions. This characteristic makes map() perfect for scenarios where you need to convert data formats, extract specific properties from objects, or perform calculations on numeric collections.
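
One detail worth seeing in code is what "the original array remains untouched" means in practice. The sketch below uses made-up sample data (`original`, `copy`, and `detached` are illustrative names only) and suggests that map() builds a new array but does not clone the elements inside it, so objects are still shared unless the callback returns fresh ones.

```javascript
// Hypothetical sample data, for illustration only.
const original = [{ id: 1, tags: ['a'] }, { id: 2, tags: ['b'] }];

// map() with an identity callback builds a new array...
const copy = original.map(item => item);

console.log(copy !== original);       // true: a different array object
console.log(copy[0] === original[0]); // true: the very same element object

// ...so mutating an element through the copy is visible in the original.
copy[0].tags.push('changed');
console.log(original[0].tags); // ['a', 'changed']

// Returning a fresh object (and a fresh nested array) avoids the sharing.
const detached = original.map(item => ({ ...item, tags: [...item.tags] }));
detached[1].tags.push('only in detached');
console.log(original[1].tags); // ['b']
```

The same applies to filter(): the array is new, while the elements it holds are the original objects.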

Basic Syntax and Implementation Patterns

The fundamental structure follows a consistent pattern that becomes second nature with practice. You call the method on an array, pass a function that describes the transformation, and receive a new array with modified elements. The callback can be an arrow function, a traditional function expression, or a reference to a named function defined elsewhere in your code.

const numbers = [1, 2, 3, 4, 5];

const doubled = numbers.map(num => num * 2);

// Result: [2, 4, 6, 8, 10]

const users = [

{ name: 'Alice', age: 28 },

{ name: 'Bob', age: 34 },

{ name: 'Charlie', age: 22 }

];

const names = users.map(user => user.name);

// Result: ['Alice', 'Bob', 'Charlie']

const agesInMonths = users.map(user => user.age * 12);

// Result: [336, 408, 264]

These examples demonstrate the versatility of map() across different data types. With primitive values like numbers, you can perform arithmetic operations. With objects, you can extract properties, create derived values, or even restructure entire objects into new shapes. The key principle remains consistent: transform each element individually and collect the results.

Advanced Transformation Techniques

Beyond simple property extraction and arithmetic, map() enables sophisticated data manipulation. You can use the index parameter to create position-dependent transformations, access the original array to implement context-aware logic, or chain multiple map() calls to perform sequential transformations. Destructuring within the callback function parameters provides elegant syntax for working with complex objects.

const products = [

{ name: 'Laptop', price: 999, category: 'Electronics' },

{ name: 'Coffee Maker', price: 79, category: 'Appliances' },

{ name: 'Desk Chair', price: 199, category: 'Furniture' }

];

const enrichedProducts = products.map((product, index) => ({

id: index + 1,

displayName: `${product.name} (${product.category})`,

priceWithTax: product.price * 1.2,

originalPrice: product.price

}));

const coordinates = [

[10, 20],

[30, 40],

[50, 60]

];

const points = coordinates.map(([x, y]) => ({

x: x,

y: y,

distance: Math.sqrt(x * x + y * y)

}));

"When restructuring data with map(), always consider whether you're creating unnecessary object references or if you can optimize by reusing existing structures."
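
The examples above use the element and the index. As a small sketch of the remaining techniques mentioned (the third `array` argument and chained map() calls), the snippet below works on a made-up `scores` array; all names are illustrative.

```javascript
// Hypothetical sample values.
const scores = [20, 30, 50];

// The third callback argument is the array map() was called on.
const shares = scores.map((score, index, all) => {
  // Recomputed for every element; acceptable for a tiny demo array.
  const total = all.reduce((sum, s) => sum + s, 0);
  return { position: index + 1, share: score / total };
});
console.log(shares[0]); // { position: 1, share: 0.2 }

// Chained map() calls: each step receives the previous step's output.
const labels = scores
  .map(score => score / 100)
  .map(fraction => `${Math.round(fraction * 100)}%`);
console.log(labels); // ['20%', '30%', '50%']
```

For larger arrays, computing the total once before calling map() would avoid the repeated reduce().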

Mastering the filter() Function

While map() transforms every element, filter() selectively includes elements based on conditions. This function creates a new array containing only the elements that pass a test implemented by the provided callback function. The resulting array can be shorter than or the same length as the original, but never longer. Each element either makes it into the new array or gets excluded based on whether the callback returns a truthy or falsy value.

The callback function in filter() receives the same three parameters as map(): the current element, its index, and the complete array. However, instead of returning a transformed value, your callback should return a boolean or a value that JavaScript can coerce to true or false. Elements for which the callback returns truthy values appear in the result array in the same order they appeared in the original.

Implementing Selection Logic

Filter operations excel at scenarios where you need to narrow down datasets based on criteria. Whether you're filtering search results, removing invalid entries, or segmenting data by categories, filter() provides a clean, declarative approach. The function name itself documents your intention—readers immediately understand you're selecting a subset rather than transforming values.

const ages = [12, 18, 25, 30, 15, 40, 17];

const adults = ages.filter(age => age >= 18);

// Result: [18, 25, 30, 40]

const products = [

{ name: 'Laptop', inStock: true, price: 999 },

{ name: 'Mouse', inStock: false, price: 25 },

{ name: 'Keyboard', inStock: true, price: 79 },

{ name: 'Monitor', inStock: true, price: 299 }

];

const availableProducts = products.filter(product => product.inStock);

const premiumProducts = products.filter(product => product.price > 100);

const affordableInStock = products.filter(product =>

product.inStock && product.price < 100

);

Notice how filter() naturally handles multiple conditions through logical operators. You can combine AND (&&) and OR (||) operations within your callback to implement complex selection logic. For readability in production code, consider extracting complex conditions into named functions that clearly express the filtering criteria.
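
One way to extract conditions into named functions might look like the sketch below. The `catalogue` data and the helper names `isInStock` and `isUnder` are hypothetical, chosen only to mirror the product shape used above.

```javascript
// Hypothetical catalogue, mirroring the product shape used above.
const catalogue = [
  { name: 'Laptop', inStock: true, price: 999 },
  { name: 'Mouse', inStock: false, price: 25 },
  { name: 'Keyboard', inStock: true, price: 79 }
];

// A plain named predicate.
const isInStock = product => product.inStock;

// A predicate factory: calling isUnder(100) returns a predicate.
const isUnder = limit => product => product.price < limit;

const affordable = catalogue
  .filter(isInStock)
  .filter(isUnder(100));

console.log(affordable.map(p => p.name)); // ['Keyboard']
```

Named predicates can also be unit tested on their own, without building an array around them.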

Working with Complex Filtering Scenarios

Real-world applications often require filtering based on relationships between elements or external data sources. You might need to remove duplicates, find elements matching patterns, or exclude items based on their position relative to other elements. The index and array parameters become valuable in these situations, enabling context-aware filtering logic.

const numbers = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10];

const evenIndexElements = numbers.filter((num, index) => index % 2 === 0);

// Result: [1, 3, 5, 7, 9]

const words = ['apple', 'banana', 'apple', 'cherry', 'banana', 'date'];

const uniqueWords = words.filter((word, index, array) =>

array.indexOf(word) === index

);

// Result: ['apple', 'banana', 'cherry', 'date']

const transactions = [

{ id: 1, amount: 100, category: 'food' },

{ id: 2, amount: 50, category: 'transport' },

{ id: 3, amount: 200, category: 'food' },

{ id: 4, amount: 75, category: 'entertainment' }

];

const excludedCategories = ['transport', 'entertainment'];

const filteredTransactions = transactions.filter(

transaction => !excludedCategories.includes(transaction.category)

);

"The power of filter() becomes evident when you realize it lets you express complex selection criteria in a single, readable statement rather than nested conditional blocks."

Combining map() and filter() Effectively

The true power emerges when you chain these functions together, creating data processing pipelines that transform and filter in sequence. This approach mirrors functional programming paradigms where data flows through a series of transformations. Each method returns a new array, making chaining natural and intuitive. The order of operations matters significantly—filtering before mapping can improve performance by reducing the number of transformations, while mapping before filtering might be necessary when your filter criteria depend on transformed values.

const orders = [

{ id: 1, items: 3, total: 150, status: 'completed' },

{ id: 2, items: 1, total: 45, status: 'pending' },

{ id: 3, items: 5, total: 320, status: 'completed' },

{ id: 4, items: 2, total: 80, status: 'cancelled' },

{ id: 5, items: 4, total: 210, status: 'completed' }

];

const completedHighValueOrders = orders

.filter(order => order.status === 'completed')

.filter(order => order.total > 100)

.map(order => ({

orderId: order.id,

revenue: order.total,

averageItemPrice: order.total / order.items

}));

const userInputs = [' hello ', '', ' world ', null, 'javascript', undefined];

const cleanedInputs = userInputs

.filter(input => input != null)

.map(input => input.trim())

.filter(input => input.length > 0)

.map(input => input.toLowerCase());

These pipelines read almost like natural language descriptions of the operations. You can clearly see the sequence of transformations and selections, making the code self-documenting. Each step operates on the result of the previous step, building complexity through composition rather than nested loops and conditional statements.

| Scenario | Recommended Order | Reasoning |

|---|---|---|

| Simple property extraction from subset | filter() → map() | Reduces number of transformations by eliminating unwanted elements first |

| Filtering based on calculated values | map() → filter() | Transformation must occur before the filter criteria can be evaluated |

| Multiple independent filters | filter() → filter() → map() | Each filter reduces dataset size, making subsequent operations faster |

| Complex object restructuring with validation | filter() → map() → filter() | Remove invalid entries, transform, then filter based on transformed properties |

| Data enrichment with external lookup | map() → filter() | Enrichment might add properties needed for filtering decisions |
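
The "filtering based on calculated values" row can be sketched as follows. The `lineItems` data and the threshold of 100 are assumptions made for illustration; the point is only that the value being tested does not exist until map() has run.

```javascript
// Hypothetical order lines.
const lineItems = [
  { sku: 'A', unitPrice: 40, quantity: 3 },
  { sku: 'B', unitPrice: 15, quantity: 2 },
  { sku: 'C', unitPrice: 60, quantity: 2 }
];

const largeLines = lineItems
  // Step 1: compute the derived value.
  .map(item => ({ sku: item.sku, lineTotal: item.unitPrice * item.quantity }))
  // Step 2: filter on the value that step 1 produced.
  .filter(line => line.lineTotal >= 100);

console.log(largeLines);
// [{ sku: 'A', lineTotal: 120 }, { sku: 'C', lineTotal: 120 }]
```

Filtering first would require repeating the multiplication inside the predicate, so map() → filter() keeps the calculation in one place here.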

Performance Considerations and Optimization Strategies

While map() and filter() provide elegant syntax, understanding their performance characteristics helps you write efficient code at scale. Each method iterates through the entire array once, creating a new array in memory. Chaining multiple operations means multiple complete iterations and multiple intermediate arrays. For small datasets, this overhead remains negligible, but with thousands or millions of elements, optimization becomes crucial.

Memory and Iteration Efficiency

Every call to map() or filter() allocates a new array, copying references or values from the original. Chaining five methods creates five arrays, four of which are intermediates that you immediately discard. For performance-critical code processing large datasets, consider using a single reduce() operation or a traditional loop that combines transformation and filtering in one pass. However, premature optimization often sacrifices readability, so measure before optimizing.

// Less efficient with large datasets - multiple iterations

const result1 = largeArray

.filter(item => item.active)

.map(item => item.value)

.filter(value => value > 100)

.map(value => value * 2);

// More efficient - single iteration

const result2 = largeArray.reduce((acc, item) => {

if (item.active && item.value > 100) {

acc.push(item.value * 2);

}

return acc;

}, []);

// Using for loop - most efficient for pure performance

const result3 = [];

for (let i = 0; i < largeArray.length; i++) {

const item = largeArray[i];

if (item.active && item.value > 100) {

result3.push(item.value * 2);

}

}

"Choose readability first, performance second, but always know where the performance costs lie so you can optimize intelligently when metrics demand it."

Avoiding Common Performance Pitfalls

Certain patterns create unnecessary work or memory pressure. Calling expensive functions inside callbacks means executing them for every element. Creating new objects with spread operators or Object.assign() in map() callbacks allocates one object per element, which can add garbage collection pressure on large arrays. Micro-optimizations such as caching array.length, on the other hand, rarely make a measurable difference in modern engines. Being aware of these patterns helps you write code that's both clean and efficient.

- 🎯 Cache expensive calculations outside the callback when possible, especially API calls or complex computations that don't depend on the current element

- 🎯 Avoid nested map() or filter() calls that create quadratic time complexity; flatten your data structure or use more efficient algorithms

- 🎯 Consider lazy evaluation libraries like lodash for chained operations on large datasets, which can optimize multiple operations into single passes

- 🎯 Use early returns in filter callbacks with complex conditions to avoid evaluating unnecessary expressions once the result is determined

- 🎯 Profile your actual use cases with realistic data volumes before optimizing, as premature optimization based on assumptions often targets the wrong bottlenecks
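
As a rough sketch of the first two points, a Set built once outside the callback can replace a lookup that would otherwise scan an array for every element. The data below is hypothetical, and whether the difference matters depends on your data sizes.

```javascript
// Hypothetical sample data.
const transactions = [
  { id: 1, category: 'food' },
  { id: 2, category: 'transport' },
  { id: 3, category: 'food' },
  { id: 4, category: 'entertainment' }
];

// Built once, outside the callback; Set.prototype.has avoids scanning a list per element.
const excluded = new Set(['transport', 'entertainment']);
const kept = transactions.filter(t => !excluded.has(t.category));
console.log(kept.map(t => t.id)); // [1, 3]

// De-duplication without indexOf inside filter (which rescans the array per element).
// A Set keeps the first occurrence of each value, in insertion order.
const words = ['apple', 'banana', 'apple', 'cherry', 'banana', 'date'];
const uniqueWords = [...new Set(words)];
console.log(uniqueWords); // ['apple', 'banana', 'cherry', 'date']
```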

Real-World Application Patterns

Professional codebases use map() and filter() in consistent patterns that solve recurring problems. Recognizing these patterns helps you identify opportunities to apply these methods and understand others' code more quickly. From data normalization to UI rendering, these functions appear throughout modern JavaScript applications.

Data Transformation for API Integration

APIs often return data in formats that don't match your application's internal models. Transforming external data structures into internal representations is a perfect use case for map(). You might need to rename properties, combine fields, parse dates, or compute derived values. Filtering removes records that don't meet validation criteria or fall outside relevant date ranges.

const apiResponse = {

users: [

{ user_id: 1, first_name: 'John', last_name: 'Doe', created_at: '2023-01-15', is_active: true },

{ user_id: 2, first_name: 'Jane', last_name: 'Smith', created_at: '2023-03-22', is_active: false },

{ user_id: 3, first_name: 'Bob', last_name: 'Johnson', created_at: '2023-06-10', is_active: true }

]

};

const normalizedUsers = apiResponse.users

.filter(user => user.is_active)

.map(user => ({

id: user.user_id,

fullName: `${user.first_name} ${user.last_name}`,

firstName: user.first_name,

lastName: user.last_name,

createdDate: new Date(user.created_at),

status: 'active'

}));

const recentActiveUsers = normalizedUsers.filter(user => {

const threeMonthsAgo = new Date();

threeMonthsAgo.setMonth(threeMonthsAgo.getMonth() - 3);

return user.createdDate > threeMonthsAgo;

});

Preparing Data for User Interfaces

UI components often require data in specific formats. Dropdown options need value-label pairs, tables need flattened data structures, and charts need numeric arrays. Map() transforms your domain models into view models optimized for rendering. Filter() removes items that shouldn't appear in the current view based on user permissions, search queries, or selected filters.

const products = [

{ id: 1, name: 'Laptop', price: 999, category: 'Electronics', inStock: true },

{ id: 2, name: 'Mouse', price: 25, category: 'Electronics', inStock: false },

{ id: 3, name: 'Desk', price: 299, category: 'Furniture', inStock: true }

];

// Dropdown options

const categoryOptions = products

.map(p => p.category)

.filter((category, index, array) => array.indexOf(category) === index)

.map(category => ({

value: category.toLowerCase(),

label: category

}));

// Table rows

const tableData = products

.filter(p => p.inStock)

.map(p => ({

id: p.id,

name: p.name,

price: `$${p.price.toFixed(2)}`,

category: p.category,

actions: ['edit', 'delete']

}));

// Chart data

const chartData = products

.filter(p => p.category === 'Electronics')

.map(p => ({

x: p.name,

y: p.price

}));

| Use Case | Primary Method | Common Pattern | Key Consideration |

|---|---|---|---|

| Form dropdown population | map() | Extract id and display name into value-label objects | Handle null values and provide default options |

| Search result filtering | filter() | Match query against multiple fields with case-insensitive comparison | Debounce input to avoid filtering on every keystroke |

| Data grid rendering | map() + filter() | Filter by active filters, map to row format with computed columns | Memoize results to avoid recalculating on every render |

| Permission-based UI | filter() | Remove menu items or actions user cannot access | Centralize permission logic rather than duplicating in filters |

| Batch processing results | map() | Transform array of promises or async results into consistent format | Handle errors individually to prevent one failure breaking entire batch |
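
The "search result filtering" row could be sketched like this. The `people` records and the `search` helper are hypothetical; debouncing is left out because it belongs to the input handler rather than to the filter itself.

```javascript
// Hypothetical records to search through.
const people = [
  { name: 'Ada Lovelace', email: 'ada@example.com' },
  { name: 'Alan Turing', email: 'alan@example.com' },
  { name: 'Grace Hopper', email: 'grace@example.com' }
];

// Case-insensitive match of the query against several fields.
const search = (records, query) => {
  const needle = query.trim().toLowerCase(); // normalized once, not per record
  if (needle === '') return records;         // an empty query matches everything
  return records.filter(record =>
    [record.name, record.email].some(field =>
      field.toLowerCase().includes(needle)
    )
  );
};

console.log(search(people, 'ALAN').map(p => p.name));  // ['Alan Turing']
console.log(search(people, ' hop ').map(p => p.name)); // ['Grace Hopper']
```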

Form Validation and Data Cleaning

User input arrives in unpredictable formats requiring cleaning and validation. Filter() removes empty entries, invalid formats, or duplicate submissions. Map() normalizes formats, trims whitespace, converts types, and standardizes casing. Combining these operations creates robust input processing pipelines that handle edge cases gracefully.

const formInputs = [

' john@example.com ',

'JANE@EXAMPLE.COM',

'',

'invalid-email',

'bob@example.com',

' john@example.com '

];

const emailPattern = /^[^\s@]+@[^\s@]+\.[^\s@]+$/;

const cleanedEmails = formInputs

.map(email => email.trim())

.filter(email => email.length > 0)

.map(email => email.toLowerCase())

.filter(email => emailPattern.test(email))

.filter((email, index, array) => array.indexOf(email) === index);

// Result: ['john@example.com', 'jane@example.com', 'bob@example.com']

const phoneInputs = [

'(555) 123-4567',

'555-123-4567',

'5551234567',

'555.123.4567'

];

const normalizedPhones = phoneInputs

.map(phone => phone.replace(/[^\d]/g, ''))

.filter(phone => phone.length === 10)

.map(phone => `(${phone.slice(0,3)}) ${phone.slice(3,6)}-${phone.slice(6)}`);

"Data cleaning pipelines built with map() and filter() serve as documentation of your validation rules, making requirements explicit and testable."

Error Handling and Edge Cases

Production code must handle unexpected inputs gracefully. Map() and filter() do not catch errors: if a callback throws, iteration stops at that element, the error propagates to the caller, and no result array is returned. Understanding how these methods behave with edge cases prevents runtime failures and data corruption. Defensive programming within callbacks ensures robustness across diverse input scenarios.
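
A small sketch of how a throwing callback behaves, and of one defensive alternative, is shown below. The `visited`, `caught`, and `parsed` names are illustrative, and wrapping each element in a result object is just one possible convention.

```javascript
// Track which elements the callback actually saw.
const visited = [];
let caught = null;

try {
  [1, 2, 3, 4].map(n => {
    visited.push(n);
    if (n === 2) throw new Error('bad element');
    return n * 2;
  });
} catch (error) {
  caught = error.message;
}

console.log(visited); // [1, 2]: elements 3 and 4 were never processed
console.log(caught);  // 'bad element'

// Defensive variant: handle failures per element so one bad value
// does not abort the whole transformation.
const parsed = ['1', 'x', '3'].map(text => {
  try {
    return { ok: true, value: JSON.parse(text) };
  } catch (error) {
    return { ok: false, error: error.message };
  }
});

const validValues = parsed.filter(r => r.ok).map(r => r.value);
console.log(validValues); // [1, 3]
```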

Handling Null and Undefined Values

Arrays containing null or undefined elements require careful handling. Attempting to access properties on these values throws errors. Filter() can remove them before transformation, or map() callbacks can include null checks. The optional chaining operator (?.) provides elegant syntax for safe property access, returning undefined instead of throwing when encountering null or undefined.

const data = [

{ name: 'Alice', age: 28 },

null,

{ name: 'Bob', age: null },

undefined,

{ name: 'Charlie' },

{ name: 'David', age: 35 }

];

// Safe filtering and mapping

const validAges = data

.filter(item => item != null)

.filter(item => item.age != null)

.map(item => item.age);

// Using optional chaining

const names = data

.filter(item => item?.name)

.map(item => item.name.toUpperCase());

// Providing defaults

const usersWithAge = data

.filter(item => item != null)

.map(item => ({

name: item.name || 'Unknown',

age: item.age ?? 0,

isAdult: (item.age ?? 0) >= 18

}));

Managing Asynchronous Operations

Map() and filter() operate synchronously, but callbacks might need to perform asynchronous operations like API calls or database queries. Simply using async callbacks doesn't work as expected—map() returns immediately with an array of Promises rather than resolved values. You must use Promise.all() to wait for all operations to complete, or consider alternative patterns like for...of loops with await.

const userIds = [1, 2, 3, 4, 5];

// Wrong approach - returns array of Promises

const wrongResult = userIds.map(async id => {

const response = await fetch(`/api/users/${id}`);

return response.json();

});

// Correct approach - wait for all promises

const correctResult = await Promise.all(

userIds.map(async id => {

const response = await fetch(`/api/users/${id}`);

return response.json();

})

);

// With error handling

const safeResult = await Promise.all(

userIds.map(async id => {

try {

const response = await fetch(`/api/users/${id}`);

if (!response.ok) throw new Error(`HTTP ${response.status}`);

return await response.json();

} catch (error) {

console.error(`Failed to fetch user ${id}:`, error);

return null;

}

})

).then(results => results.filter(user => user !== null));

Validating Callback Return Values

Map() expects callbacks to return values, but forgetting a return statement results in undefined elements. Filter() coerces return values to boolean, which can cause unexpected behavior with truthy/falsy values. Being explicit about return values and understanding JavaScript's type coercion prevents subtle bugs that manifest only with specific data combinations.

const numbers = [1, 2, 3, 4, 5];

// Bug: missing return statement

const buggyDoubled = numbers.map(num => {

num * 2; // Missing return!

});

// Result: [undefined, undefined, undefined, undefined, undefined]

// Bug: implicit truthy/falsy filtering

const values = [0, 1, 2, '', 'hello', false, true, null];

const buggyFilter = values.filter(val => val);

// Result: [1, 2, 'hello', true] - removes 0, '', false, null

// Correct: explicit conditions

const explicitFilter = values.filter(val => val !== null && val !== undefined);

// Result: [0, 1, 2, '', 'hello', false, true]

// Correct: explicit boolean return

const isPositive = numbers.filter(num => num > 0);

"Explicit is better than implicit: always return values explicitly in map() and use clear boolean expressions in filter() to document your intentions."
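
A closely related way to lose a return value is returning an object literal from an arrow function without parentheses. The sketch below uses a made-up `ids` array to show the difference.

```javascript
const ids = [1, 2, 3];

// The braces are parsed as a function body, and `id: id` as a labeled
// statement, so the callback returns nothing.
const broken = ids.map(id => { id: id });
console.log(broken); // [undefined, undefined, undefined]

// Wrapping the literal in parentheses makes it an expression that is returned.
const fixed = ids.map(id => ({ id: id }));
console.log(fixed); // [{ id: 1 }, { id: 2 }, { id: 3 }]
```

Linters can usually flag the first form, which is easier than spotting it by eye.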

Testing and Debugging Strategies

Testing code that uses map() and filter() focuses on verifying that transformations and selections produce the expected outputs for various inputs. Unit tests should cover normal cases, edge cases, empty arrays, and error conditions. The pure nature of these functions (the same input always produces the same output, provided the callbacks themselves have no side effects) makes them highly testable without complex mocking or setup.

Writing Effective Unit Tests

Structure tests around input-output pairs, testing both the final result and intermediate steps in chains. Separate complex callback functions into named functions that you can test independently. This approach provides better error messages when tests fail and makes it easier to identify which transformation step introduced a bug.

// Function to test

const processOrders = (orders) => {

return orders

.filter(order => order.status === 'completed')

.map(order => ({

id: order.id,

revenue: order.total * 0.9 // 10% discount

}))

.filter(order => order.revenue > 50);

};

// Test cases

describe('processOrders', () => {

test('filters completed orders only', () => {

const input = [

{ id: 1, status: 'completed', total: 100 },

{ id: 2, status: 'pending', total: 200 }

];

const result = processOrders(input);

expect(result).toHaveLength(1);

expect(result[0].id).toBe(1);

});

test('applies discount correctly', () => {

const input = [

{ id: 1, status: 'completed', total: 100 }

];

const result = processOrders(input);

expect(result[0].revenue).toBe(90);

});

test('filters low revenue orders', () => {

const input = [

{ id: 1, status: 'completed', total: 50 },

{ id: 2, status: 'completed', total: 100 }

];

const result = processOrders(input);

expect(result).toHaveLength(1);

expect(result[0].id).toBe(2);

});

test('handles empty array', () => {

const result = processOrders([]);

expect(result).toEqual([]);

});

});

Debugging Complex Chains

Long chains of map() and filter() can be difficult to debug when results don't match expectations. Breaking chains into intermediate variables lets you inspect data at each step. Console logging within callbacks reveals what values each element receives. Using debugger statements or breakpoints in browser developer tools allows stepping through iterations one element at a time.

// Difficult to debug

const result = data

.filter(x => x.active)

.map(x => x.value * 2)

.filter(x => x > 100)

.map(x => x.toString());

// Easier to debug with intermediate steps

const activeItems = data.filter(x => x.active);

console.log('Active items:', activeItems);

const doubledValues = activeItems.map(x => x.value * 2);

console.log('Doubled values:', doubledValues);

const highValues = doubledValues.filter(x => x > 100);

console.log('High values:', highValues);

const stringValues = highValues.map(x => x.toString());

console.log('Final result:', stringValues);

// Debugging within callbacks

const debugResult = data

.filter(x => {

console.log('Filtering:', x);

return x.active;

})

.map(x => {

const doubled = x.value * 2;

console.log(`Mapping ${x.value} to ${doubled}`);

return doubled;

});

Alternative Approaches and When to Use Them

While map() and filter() excel in many scenarios, other approaches sometimes provide better solutions. Understanding alternatives helps you choose the right tool for each situation. Traditional loops offer more control and better performance for complex logic. Reduce() combines mapping and filtering in single iterations. Library functions provide optimized implementations for specific use cases.

Using reduce() for Combined Operations

The reduce() method can perform mapping and filtering simultaneously, iterating once instead of multiple times. This approach improves performance with large datasets and reduces intermediate array allocations. However, reduce() requires more complex logic and can be harder to read, so use it judiciously when performance measurements justify the readability trade-off.

const orders = [

{ id: 1, status: 'completed', total: 150 },

{ id: 2, status: 'pending', total: 200 },

{ id: 3, status: 'completed', total: 75 },

{ id: 4, status: 'cancelled', total: 300 }

];

// Using map and filter (two iterations)

const result1 = orders

.filter(order => order.status === 'completed')

.map(order => order.total);

// Using reduce (one iteration)

const result2 = orders.reduce((acc, order) => {

if (order.status === 'completed') {

acc.push(order.total);

}

return acc;

}, []);

// Complex transformation with reduce

const summary = orders.reduce((acc, order) => {

if (order.status === 'completed') {

acc.completedOrders.push({

id: order.id,

revenue: order.total * 0.9

});

acc.totalRevenue += order.total * 0.9;

}

return acc;

}, { completedOrders: [], totalRevenue: 0 });

Traditional Loops for Complex Logic

When logic becomes sufficiently complex—requiring multiple conditions, early exits, or state management across iterations—traditional for or while loops often provide clearer code. They allow break and continue statements, multiple nested conditions, and imperative state updates that would be awkward to express functionally. Don't force functional patterns where imperative code communicates intent more clearly.

// Complex logic better suited to traditional loop

const processTransactions = (transactions, accountBalances) => {

const results = [];

for (let i = 0; i < transactions.length; i++) {

const transaction = transactions[i];

// Skip if account not found (a balance of 0 still counts as a known account)

if (!(transaction.accountId in accountBalances)) {

continue;

}

// Check balance before processing

if (accountBalances[transaction.accountId] < transaction.amount) {

results.push({

...transaction,

status: 'insufficient_funds'

});

continue;

}

// Update balance and record

accountBalances[transaction.accountId] -= transaction.amount;

results.push({

...transaction,

status: 'completed',

newBalance: accountBalances[transaction.accountId]

});

// Stop if we've processed enough

if (results.length >= 100) {

break;

}

}

return results;

};

"Choose the approach that makes your code's intent clearest to future readers: sometimes that's functional composition, sometimes it's imperative loops."

Best Practices and Professional Guidelines

Professional developers follow consistent patterns when using map() and filter() to maintain code quality across teams. These practices balance readability, performance, and maintainability. They emerge from years of production experience and help teams avoid common pitfalls while keeping codebases clean and understandable.

Code Organization and Readability

Extract complex callback logic into named functions with descriptive names. This practice documents the transformation or filter criteria, makes code reusable, and enables independent testing. When chains grow beyond three or four operations, consider breaking them into intermediate variables with meaningful names. Future developers—including yourself—will appreciate the clarity when debugging or extending functionality.

// Poor: complex inline logic

const result = users

.filter(u => u.age >= 18 && u.verified && !u.suspended && u.lastLogin > Date.now() - 86400000)

.map(u => ({ ...u, displayName: `${u.firstName} ${u.lastName}`.trim(), initials: `${u.firstName[0]}${u.lastName[0]}`.toUpperCase() }));

// Better: named functions

const isActiveAdult = (user) => {

const dayInMs = 86400000;

const yesterday = Date.now() - dayInMs;

return user.age >= 18

&& user.verified

&& !user.suspended

&& user.lastLogin > yesterday;

};

const formatUserForDisplay = (user) => ({

...user,

displayName: `${user.firstName} ${user.lastName}`.trim(),

initials: `${user.firstName[0]}${user.lastName[0]}`.toUpperCase()

});

const result = users

.filter(isActiveAdult)

.map(formatUserForDisplay);

Performance and Scalability Considerations

Measure performance with realistic data volumes before optimizing. Small arrays (hundreds of elements) rarely justify optimization at the cost of readability. Large arrays (thousands or millions) might benefit from reduce(), traditional loops, or streaming approaches. Consider lazy evaluation libraries for very large datasets where you might not need all results. Always profile actual bottlenecks rather than optimizing based on assumptions.

- 💡 Prefer filter before map to reduce the number of transformations performed on elements that will be excluded anyway

- 💡 Cache computed values outside callbacks when they don't depend on individual elements, avoiding redundant calculations

- 💡 Use early returns in filter callbacks with complex conditions to avoid evaluating unnecessary expressions

- 💡 Consider pagination or virtualization for UI rendering of large filtered/mapped datasets rather than processing everything upfront

- 💡 Leverage memoization in React or similar frameworks to avoid recalculating map/filter results on every render
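The first two tips in the list above can be sketched roughly as follows. The sample `orders` array, the `taxRate` parameter, and the function name `summarizeCompletedOrders` are invented for illustration and are not part of any real API.

```javascript
// Hypothetical sample data, invented for this sketch
const orders = [
  { id: 1, total: 120, status: 'completed' },
  { id: 2, total: 40, status: 'cancelled' },
  { id: 3, total: 300, status: 'completed' },
];

const summarizeCompletedOrders = (list, taxRate) => {
  // Computed once, outside the callbacks, because it does not depend on any element
  const multiplier = 1 + taxRate;

  return list
    // Filter first so the map callback only runs for orders that are kept
    .filter(order => order.status === 'completed')
    .map(order => ({ id: order.id, totalWithTax: order.total * multiplier }));
};

const summary = summarizeCompletedOrders(orders, 0.25);
console.log(summary);
```

With this ordering the map callback runs twice instead of three times. On a three-element array the saving is irrelevant, but the same shape scales to larger inputs.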

Type Safety and Documentation

Using TypeScript or JSDoc comments provides type safety and documentation for map() and filter() operations. Type annotations make transformations explicit, catch errors at compile time, and improve IDE autocomplete. Document complex transformations with comments explaining why the logic exists, not just what it does. Future maintainers need context about business rules and edge cases.

/**

* Filters orders to high-value completed transactions and maps each to its net revenue

* @param {Array<{id: number, status: string, total: number}>} orders - Order objects to process

* @param {number} threshold - Minimum order value to include

* @returns {Array<{orderId: number, revenue: number}>} Processed orders

*/

const getHighValueOrders = (orders, threshold) => {

return orders

.filter(order => order.status === 'completed')

.filter(order => order.total >= threshold)

.map(order => ({

orderId: order.id,

revenue: order.total * 0.9 // Net revenue after deducting a 10% processing fee

}));

};

// TypeScript version

interface Order {

id: number;

status: 'pending' | 'completed' | 'cancelled';

total: number;

}

interface ProcessedOrder {

orderId: number;

revenue: number;

}

const getHighValueOrders = (

orders: Order[],

threshold: number

): ProcessedOrder[] => {

return orders

.filter(order => order.status === 'completed')

.filter(order => order.total >= threshold)

.map(order => ({

orderId: order.id,

revenue: order.total * 0.9

}));

};

Framework-Specific Patterns

Modern JavaScript frameworks like React, Vue, and Angular frequently use map() and filter() for rendering lists and managing state. Understanding framework-specific patterns and best practices ensures you write performant, idiomatic code that integrates smoothly with framework lifecycles and optimization strategies.

React Component Rendering

React components commonly map arrays to JSX elements for rendering lists. Each rendered element needs a key prop that is unique among its siblings and stable across renders, so that React's reconciliation algorithm can match items between updates. Filtering and mapping data before rendering keeps components focused on presentation logic. Memoizing expensive map/filter operations with useMemo skips the recalculation when the inputs have not changed, which can noticeably improve performance when the arrays are large or the callbacks are costly.

import React, { useMemo, useState } from 'react';

const ProductList = ({ products }) => {

const [searchTerm, setSearchTerm] = useState('');

const [category, setCategory] = useState('all');

// Memoize filtered and mapped products

const displayProducts = useMemo(() => {

return products

.filter(product => {

const matchesSearch = product.name

.toLowerCase()

.includes(searchTerm.toLowerCase());

const matchesCategory = category === 'all' ||

product.category === category;

return matchesSearch && matchesCategory;

})

.map(product => ({

...product,

displayPrice: `$${product.price.toFixed(2)}`,

isOnSale: product.discount > 0

}));

}, [products, searchTerm, category]);

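// Element names, option values, and the product.id key below are assumed

return (

<div>

<input

value={searchTerm}

onChange={e => setSearchTerm(e.target.value)}

placeholder="Search products..."

/>

<select value={category} onChange={e => setCategory(e.target.value)}>

<option value="all">All Categories</option>

<option value="electronics">Electronics</option>

<option value="clothing">Clothing</option>

</select>

<ul>

{displayProducts.map(product => (

<li key={product.id}>

<span>{product.name}</span>

<span>{product.displayPrice}</span>

{product.isOnSale && <span>On Sale!</span>}

</li>

))}

</ul>

</div>

);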

};

State Management Patterns

Redux, Vuex, and similar state management libraries often use map() and filter() in reducers and selectors. These functions help maintain immutability—a core principle in state management—by creating new arrays rather than mutating existing ones. Selectors that derive data from state typically combine filtering and mapping to prepare data for specific components.

// Redux reducer with immutable updates

const todosReducer = (state = [], action) => {

switch (action.type) {

case 'TOGGLE_TODO':

return state.map(todo =>

todo.id === action.id

? { ...todo, completed: !todo.completed }

: todo

);

case 'DELETE_COMPLETED':

return state.filter(todo => !todo.completed);

case 'UPDATE_TODO':

return state.map(todo =>

todo.id === action.id

? { ...todo, ...action.updates }

: todo

);

default:

return state;

}

};

// Selector with filtering and mapping

const selectActiveTodos = (state) => {

return state.todos

.filter(todo => !todo.completed)

.map(todo => ({

...todo,

displayText: todo.text.substring(0, 50)

}));

};

// Memoized selector for performance

import { createSelector } from 'reselect';

const selectTodos = state => state.todos;

const selectFilter = state => state.filter;

const selectFilteredTodos = createSelector(

[selectTodos, selectFilter],

(todos, filter) => {

return todos

.filter(todo => {

switch (filter) {

case 'active': return !todo.completed;

case 'completed': return todo.completed;

default: return true;

}

})

.map(todo => ({

...todo,

displayDate: new Date(todo.createdAt).toLocaleDateString()

}));

}

);

What is the main difference between map() and filter()?

The map() method transforms every element in an array and always returns a new array of the same length with the transformed values. The filter() method selectively includes elements based on a condition and returns a new array of the same length or shorter, containing only the elements that passed the test. In short, map() is for transformation and filter() is for selection.
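A small side-by-side sketch makes the length difference visible. The `prices` array is made-up sample data.

```javascript
const prices = [5, 12, 8, 20];

// map(): one output per input, so the result always has 4 elements
const doubled = prices.map(price => price * 2);

// filter(): only elements passing the test survive, so the result can shrink
const overTen = prices.filter(price => price > 10);

console.log(doubled); // [10, 24, 16, 40]
console.log(overTen); // [12, 20]
console.log(prices);  // [5, 12, 8, 20] (the original is unchanged)
```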

Can I modify the original array inside map() or filter()?

While technically possible, modifying the original array inside these callbacks defeats their purpose and creates confusing side effects. Both methods are meant to be used with pure callbacks that leave the input data untouched. If you need to modify the original array, use forEach() or a traditional loop instead, which makes your intent explicit.
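As a rough illustration of the two styles, the sketch below contrasts a non-mutating map() with an explicit in-place update through forEach(). The `users` and `drafts` arrays are hypothetical sample data.

```javascript
const users = [
  { name: 'Ada', active: false },
  { name: 'Linus', active: false },
];

// Non-mutating: map() builds new objects, so the originals keep active: false
const activated = users.map(user => ({ ...user, active: true }));

// Intentional in-place mutation, made explicit with forEach()
const drafts = [{ title: 'a' }, { title: 'b' }];
drafts.forEach(draft => {
  draft.title = draft.title.toUpperCase();
});

console.log(users[0].active);     // false
console.log(activated[0].active); // true
console.log(drafts[1].title);     // 'B'
```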

How do I handle errors in map() or filter() callbacks?

Wrap callback logic in try-catch blocks to handle errors gracefully. For individual element failures, return a default value or null from map(), then filter out failed elements. For critical operations where any failure should stop processing, let errors propagate and handle them outside the map/filter chain with try-catch around the entire operation.
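Both strategies might look something like this. The helper name `safeParse` and the `rawRecords` input are assumptions made for the sketch, and JSON.parse simply stands in for any operation that can throw.

```javascript
// Hypothetical input: one entry is not valid JSON
const rawRecords = ['{"id": 1}', 'not json', '{"id": 2}'];

// Per-element recovery: a failed parse becomes null instead of throwing
const safeParse = (text) => {
  try {
    return JSON.parse(text);
  } catch (error) {
    return null;
  }
};

// Map to parsed values (or null), then filter the failures out
const parsed = rawRecords.map(safeParse).filter(record => record !== null);
console.log(parsed); // [{ id: 1 }, { id: 2 }]

// Fail-fast: let the error propagate and catch it around the whole chain
let strictResult;
try {
  strictResult = rawRecords.map(text => JSON.parse(text));
} catch (error) {
  strictResult = [];
  console.error('Parsing aborted:', error.message);
}
```

Using null as the failure marker only works when null is never a legitimate result. If it can be, a dedicated sentinel value or a `{ ok, value }` wrapper object is a safer choice.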

When should I use reduce() instead of map() and filter()?

Use reduce() when you need to combine filtering and mapping in a single iteration for performance reasons with large datasets, or when you're building a complex result like an object or accumulating values. For simple transformations or selections, map() and filter() provide better readability. Always profile before optimizing, as readability usually matters more than micro-optimizations.
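To make the trade-off concrete, here is a minimal sketch of the same selection written both ways, plus a reduce() call that builds an object. The `products` array is invented sample data.

```javascript
const products = [
  { name: 'Laptop', price: 1000, inStock: true },
  { name: 'Phone', price: 500, inStock: false },
  { name: 'Tablet', price: 300, inStock: true },
];

// Two passes: readable, but allocates one intermediate array
const namesChained = products
  .filter(product => product.inStock)
  .map(product => product.name);

// One pass: reduce() filters and transforms in the same iteration
const namesReduced = products.reduce((names, product) => {
  if (product.inStock) {
    names.push(product.name);
  }
  return names;
}, []);

// reduce() can also build a non-array result, such as a lookup object
const priceByName = products.reduce((lookup, product) => {
  lookup[product.name] = product.price;
  return lookup;
}, {});

console.log(namesChained); // ['Laptop', 'Tablet']
console.log(namesReduced); // ['Laptop', 'Tablet']
console.log(priceByName.Phone); // 500
```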

Why do my async callbacks in map() return promises instead of resolved values?

Map() executes synchronously and immediately returns an array of whatever your callback returns—if your callback is async, it returns promises. You must use Promise.all() to wait for all promises to resolve. Alternatively, use a for...of loop with await for sequential async operations, or consider libraries like p-map for controlled concurrency.
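The parallel and sequential approaches can be sketched as follows. `fetchUser` is a hypothetical stand-in for a real network request, and both loader function names are made up for this example.

```javascript
// Hypothetical async lookup standing in for a real network request
const fetchUser = async (id) => ({ id, name: `User ${id}` });

// map() yields an array of pending promises; Promise.all() waits for all of them
const loadUsersInParallel = (ids) => Promise.all(ids.map(id => fetchUser(id)));

// Sequential alternative: each request starts only after the previous one finishes
const loadUsersSequentially = async (ids) => {
  const users = [];
  for (const id of ids) {
    users.push(await fetchUser(id));
  }
  return users;
};

loadUsersInParallel([1, 2, 3]).then(users => {
  console.log(users.map(user => user.name)); // ['User 1', 'User 2', 'User 3']
});
```

Promise.all() rejects as soon as any single promise rejects, so a per-call try-catch or Promise.allSettled() is worth considering when partial results are acceptable.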

How can I improve performance when chaining multiple map() and filter() operations?

Filter before mapping to reduce transformations on elements you'll discard. For very large datasets, consider combining operations into a single reduce() call or traditional loop. Use memoization in frameworks like React to avoid recalculating on every render. Profile your actual use case before optimizing—premature optimization often sacrifices readability for negligible gains.