What Is a Python Generator?

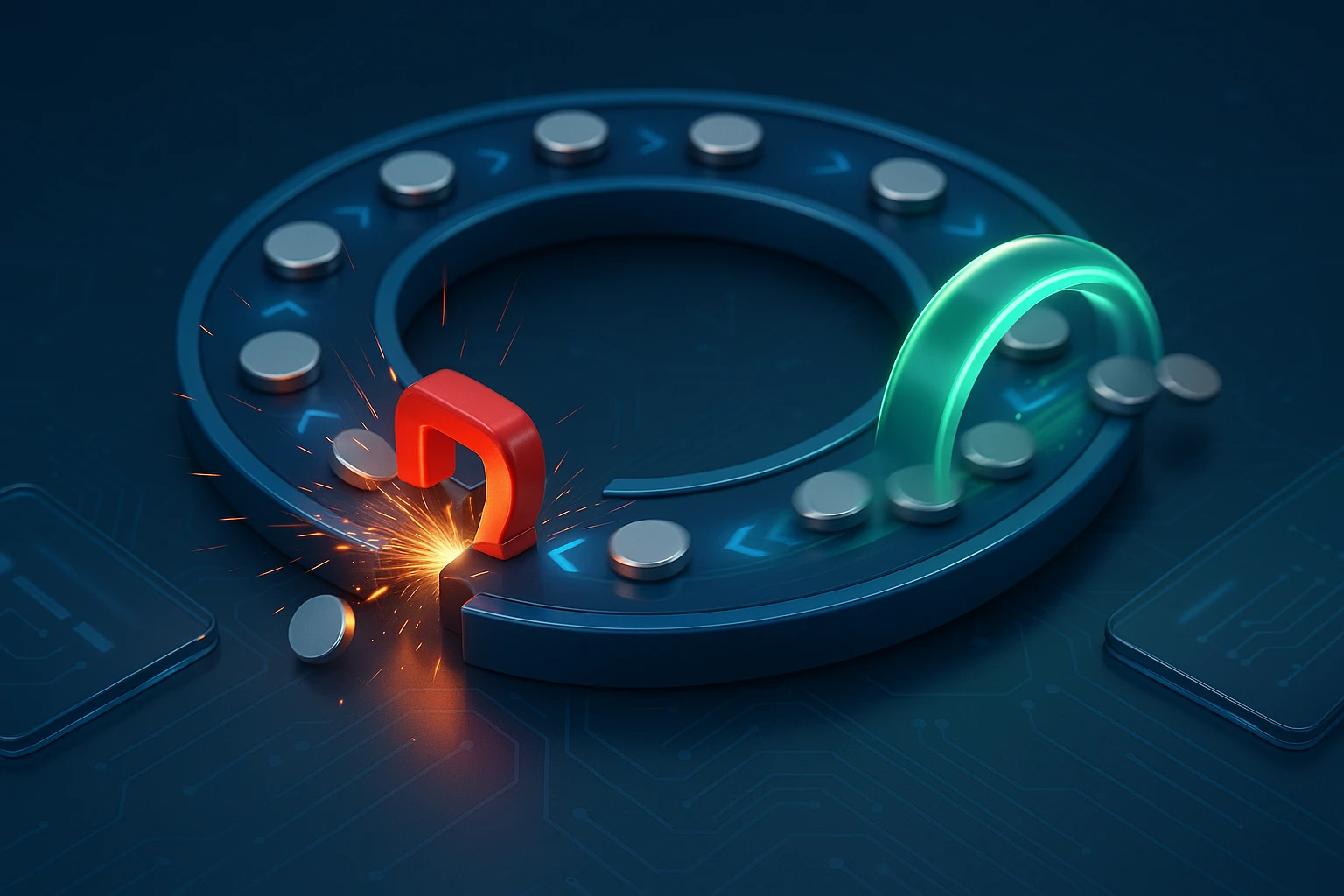

Diagram of a Python generator: a function yielding values lazily, the iterator protocol with next() advancing its state, and memory-efficient streaming in for loops and generator expressions.

Understanding Python Generators: A Fundamental Tool for Modern Development

In the world of programming, efficiency isn't just a buzzword—it's the difference between applications that scale gracefully and those that collapse under their own weight. When you're processing millions of records, streaming data from external sources, or building pipelines that transform information on the fly, traditional approaches can quickly consume all available memory and bring your system to its knees. This is where one of Python's most elegant features comes into play, offering a solution that's both powerful and surprisingly intuitive.

A generator in Python is an iterator produced by a special kind of function, a generator function, that lets you iterate over a sequence of values lazily: values are produced one at a time, only when requested, rather than all at once. Unlike regular functions that compute a complete result and terminate, generators preserve their state between requests, yielding values incrementally while consuming minimal memory. This difference transforms how we approach data processing, letting developers work with datasets that would otherwise be too large to hold in memory.

Throughout this exploration, you'll discover how generators work under the hood, why they're essential for memory-efficient programming, and how to leverage them in real-world scenarios. We'll examine the syntax that makes generators possible, compare them with alternative approaches, explore practical use cases from simple iterations to complex data pipelines, and provide you with actionable patterns you can implement immediately. Whether you're optimizing existing code or designing new systems, understanding generators will fundamentally change how you think about data processing in Python.

The Mechanics Behind Generator Functions

At the heart of every generator lies the yield keyword, which fundamentally distinguishes generator functions from their conventional counterparts. When a function contains yield, Python automatically transforms it into a generator function. Upon calling this function, instead of executing its body immediately, Python returns a generator object—a special iterator that controls the function's execution.

The execution model of generators introduces a pause-and-resume mechanism that's unique in its elegance. When you call next() on a generator object or iterate over it in a loop, the function executes until it encounters a yield statement. At that moment, the function's state is frozen: all local variables, the instruction pointer, and the internal evaluation stack are preserved. The yielded value is returned to the caller, and the function essentially goes to sleep. When the next value is requested, the function wakes up exactly where it left off, continuing execution immediately after the yield statement.
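The pause-and-resume cycle is easy to observe with a few print calls. This is a minimal sketch using a hypothetical `countdown` function; the comments note what each `next()` call prints.

```python
def countdown(n):
    """Yield n, n-1, ..., 1, printing whenever execution resumes."""
    print("Starting countdown")
    while n > 0:
        yield n                          # execution pauses here; n survives until resumed
        print(f"Resumed after yielding {n}")
        n -= 1
    print("Countdown finished")


gen = countdown(3)   # nothing is printed yet: the body has not started
print(next(gen))     # prints "Starting countdown", then 3
print(next(gen))     # prints "Resumed after yielding 3", then 2
```

Note that the first line of the body only runs on the first `next()` call, not when `countdown(3)` is called.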

"The beauty of generators lies not in what they do, but in what they don't do—they never create data structures you don't immediately need."

This state preservation mechanism is what makes generators memory-efficient. Consider a function that generates a sequence of one million numbers. A traditional approach would create a list containing all million numbers in memory simultaneously, consuming significant resources. A generator, however, maintains only the current state—a few variables tracking where it is in the sequence—producing each number on demand and immediately discarding it once the caller has processed it.

Basic Syntax and Structure

Creating a generator function requires minimal syntactic changes from regular functions. Here's the fundamental pattern:

- Function definition uses the standard def keyword, indistinguishable from regular functions at first glance

- Yield statements replace or supplement return statements, producing values without terminating the function

- Multiple yields can exist within the same function, each producing a value in sequence

- Return statements can still be used to signal completion; the returned value is attached to the resulting StopIteration exception as its value attribute

- Generator expressions provide a compact syntax similar to list comprehensions but with parentheses

The generator object itself implements the iterator protocol, meaning it has both __iter__() and __next__() methods. This makes generators compatible with any Python construct that expects an iterator, including for loops, list comprehensions, and functions like sum(), max(), or any().
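The syntax rules and the iterator protocol above fit into one short sketch. The `setup_steps` generator is a hypothetical example with several yields and a final `return`; it shows that a generator is its own iterator and where the return value ends up.

```python
import types


def setup_steps():
    """Hypothetical generator with several yields and a final return value."""
    yield "load defaults"
    yield "apply overrides"
    yield "validate"
    return "config ready"      # becomes the .value of the StopIteration


gen = setup_steps()
print(isinstance(gen, types.GeneratorType))  # True
print(iter(gen) is gen)                      # True: a generator is its own iterator
print(next(gen), next(gen), next(gen))       # load defaults apply overrides validate

try:
    next(gen)                  # the body now runs into the return statement
except StopIteration as exc:
    result = exc.value
print(result)                  # config ready

# for loops and built-ins such as sum() drive the same protocol
for step in setup_steps():
    print("step:", step)
print(sum(x * x for x in range(5)))          # 30 (a generator expression)
```

A `for` loop catches the final StopIteration silently, so the return value is only visible when you call `next()` yourself (or use `yield from`).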

State Management and Lifecycle

Understanding the lifecycle of a generator is crucial for effective usage. When first created, a generator has not started: none of its code has executed yet. The first call to next() runs the body from the beginning until the first yield, where the generator becomes suspended. Subsequent calls resume from the last yield point. When the function completes (either by reaching the end or hitting a return statement), the generator raises StopIteration, signaling exhaustion.

| Generator State | Description | Behavior |
|---|---|---|
| Created | Generator object instantiated but not started | No code has executed; waiting for first next() call |
| Running | Actively executing code between yields | Processing logic, calculations, or I/O operations |
| Suspended | Paused at a yield statement | State preserved, waiting for next() to resume |
| Closed | Completed execution or explicitly closed | Raises StopIteration on any further next() calls |
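The standard library can report these states directly: `inspect.getgeneratorstate()` returns one of the strings `GEN_CREATED`, `GEN_RUNNING`, `GEN_SUSPENDED`, or `GEN_CLOSED`. The generators below are hypothetical examples; the running state can only be seen from inside the body, so `report_state` looks itself up through a module-level name.

```python
import inspect


def numbers():
    yield 1
    yield 2


def report_state():
    # While this body executes, the generator object is in the GEN_RUNNING state
    yield inspect.getgeneratorstate(running_gen)


gen = numbers()
states = [inspect.getgeneratorstate(gen)]       # GEN_CREATED: no code has run yet
next(gen)                                       # runs up to the first yield
states.append(inspect.getgeneratorstate(gen))   # GEN_SUSPENDED
gen.close()                                     # terminate early
states.append(inspect.getgeneratorstate(gen))   # GEN_CLOSED
print(states)

running_gen = report_state()
print(next(running_gen))                        # GEN_RUNNING

try:
    next(gen)                                   # a closed generator just raises StopIteration
except StopIteration:
    print("closed generator is exhausted")
```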

Generators also support additional methods beyond next(). The send() method allows you to pass values back into the generator, which the yield expression receives. The throw() method lets you raise exceptions inside the generator, and close() terminates it prematurely. These advanced features enable sophisticated patterns like coroutines and bidirectional communication channels.

Memory Efficiency: The Primary Advantage

The most compelling reason to use generators is their exceptional memory efficiency, particularly when dealing with large datasets or infinite sequences. Traditional approaches that materialize entire collections in memory can quickly become impractical or impossible as data volumes grow. Generators solve this by producing values on-the-fly, maintaining only the minimal state necessary to continue the sequence.

Consider reading a large file. A naive approach might use readlines() to load the entire file into a list of strings, consuming memory proportional to the file size. With a generator that yields one line at a time, memory usage stays roughly constant regardless of file size (bounded by the longest line). This isn't just a theoretical optimization: it's the difference between successfully processing gigabyte-sized logs and running out of memory.
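A minimal sketch of the line-at-a-time approach. The `read_lines` name and the sample log contents are illustrative assumptions; a small temporary file stands in for a large log so the example runs anywhere.

```python
import tempfile
from pathlib import Path


def read_lines(path):
    """Yield lines from a text file one at a time, without trailing newlines."""
    with open(path, encoding="utf-8") as handle:
        for line in handle:            # the file object reads lazily, line by line
            yield line.rstrip("\n")


# Demo: a tiny temporary file stands in for a multi-gigabyte log
with tempfile.TemporaryDirectory() as tmp:
    log_path = Path(tmp) / "app.log"
    log_path.write_text("INFO start\nERROR disk full\nINFO done\n", encoding="utf-8")
    errors = [line for line in read_lines(log_path) if line.startswith("ERROR")]

print(errors)  # ['ERROR disk full']
```

Only the matching lines are kept; every other line is discarded as soon as it has been checked. Because the `with` block lives inside the generator, the file is closed once iteration finishes.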

"When you stop thinking about data as something to collect and start thinking about it as something to flow through, generators become not just useful but essential."

Comparing Memory Footprints

The memory savings become dramatic when working with large sequences. In 64-bit CPython, a list of one million integers needs about 8 megabytes just for its internal array of references (8 bytes per element), and each int object it points to adds roughly another 28 bytes, so the real total is closer to 36 MB. The equivalent generator keeps only the current counter and loop state, a small fixed-size object (a few hundred bytes at most) regardless of sequence length. The gap grows linearly with data size, which is why generators matter so much for large-scale data processing.

| Approach | Memory for 1M Items | Memory for 1B Items | Scalability |
|---|---|---|---|
| List materialization | ~8 MB of references (plus the objects) | ~8 GB of references (plus the objects) | Linear growth, eventually exhausts memory |
| Generator approach | A few hundred bytes | A few hundred bytes | Constant memory, independent of sequence length |
| Generator with buffering | ~80 KB of references (10K-item buffer) | ~80 KB of references (10K-item buffer) | Bounded memory, better throughput |
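You can check these orders of magnitude with `sys.getsizeof`. Treat this as a rough measurement sketch: exact byte counts differ between CPython versions, and `getsizeof` reports only the object itself (for the generator, not the small `range` iterator it references), so no exact numbers are asserted here.

```python
import sys

n = 1_000_000
as_list = list(range(n))
as_gen = (i for i in range(n))

list_bytes = sys.getsizeof(as_list)                 # the list's array of references only
int_bytes = sum(sys.getsizeof(i) for i in as_list)  # the int objects those references point to
gen_bytes = sys.getsizeof(as_gen)                   # the generator object, independent of n

print(f"list object:      {list_bytes:>12,} bytes")
print(f"int objects:      {int_bytes:>12,} bytes")
print(f"generator object: {gen_bytes:>12,} bytes")
```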

Lazy Evaluation Benefits

Beyond memory savings, lazy evaluation through generators offers computational efficiency. If you're searching for the first item matching a condition in a large dataset, a generator stops producing values the moment you find what you need. A list-based approach would compute all values upfront, wasting CPU cycles on calculations you'll never use.
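Here is a small sketch of that early exit. `is_match` is a hypothetical stand-in for an expensive check, instrumented to count how often it runs.

```python
calls = 0


def is_match(x):
    """Stand-in for an expensive check; counts how many times it runs."""
    global calls
    calls += 1
    return x * x > 50


# next() stops at the first match: only 9 checks instead of one million.
# The second argument (None) is returned if nothing matches.
first = next((x for x in range(1_000_000) if is_match(x)), None)
print(first, calls)   # 8 9
```

The equivalent list comprehension, `[x for x in range(1_000_000) if is_match(x)][0]`, would run the check a million times before picking the first element.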

This lazy behavior compounds when chaining operations. Multiple generators can be composed together, each transforming data as it flows through. The entire pipeline remains lazy—no intermediate lists are created, and computation only occurs when the final consumer requests values. This creates elegant, memory-efficient data processing chains that rival specialized streaming frameworks.

Practical Implementation Patterns

Moving from theory to practice, generators shine in numerous real-world scenarios. Understanding common patterns helps you recognize opportunities to apply generators effectively and avoid reinventing solutions that the Python community has already refined.

🔄 Infinite Sequences

Generators excel at representing infinite sequences that would be impossible to store in memory. Counter generators, repeating patterns, or mathematical sequences like Fibonacci numbers become trivial to implement. These infinite generators can be combined with functions like itertools.islice() to take finite portions when needed, or used directly in loops that break based on conditions.
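A minimal infinite Fibonacci generator, consumed both with `itertools.islice()` and with a loop that breaks on a condition:

```python
from itertools import islice


def fibonacci():
    """Yield Fibonacci numbers forever: 0, 1, 1, 2, 3, 5, ..."""
    a, b = 0, 1
    while True:
        yield a
        a, b = b, a + b


first_ten = list(islice(fibonacci(), 10))
print(first_ten)  # [0, 1, 1, 2, 3, 5, 8, 13, 21, 34]

# Alternatively, stop based on a condition inside the loop
for value in fibonacci():
    if value > 100:
        break
    last_small = value
print(last_small)  # 89
```

Never call `list()` directly on an infinite generator; always bound it first.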

📁 File Processing

Reading files line-by-line is perhaps the most common generator use case. Python's built-in file objects are not generators themselves, but they are lazy iterators: looping over one yields a single line at a time. Wrapping that loop in a generator function extends the pattern to CSV files, JSON streams, log files, or any line-oriented data format, so you can process files of arbitrary size with roughly constant memory usage.
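The same idea applied to CSV data, as a sketch. The `parse_orders` name and the `customer`/`amount` columns are hypothetical; `csv.DictReader` itself reads rows lazily, and `io.StringIO` stands in for a real file opened with `open(path, newline="")`.

```python
import csv
import io


def parse_orders(lines):
    """Yield (customer, amount) pairs from CSV text, one row at a time.

    `lines` can be an open file or any iterable of strings.
    """
    for row in csv.DictReader(lines):   # DictReader also reads lazily
        yield row["customer"], float(row["amount"])


sample = io.StringIO("customer,amount\nalice,12.50\nbob,7.25\nalice,3.00\n")
total = sum(amount for _, amount in parse_orders(sample))
print(total)  # 22.75
```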

🔗 Pipeline Construction

Generators compose beautifully into processing pipelines. Each stage of the pipeline is a generator that takes an iterable input and yields transformed output. This functional approach creates clear, maintainable code where each transformation is isolated and testable. Pipelines can include filtering, mapping, aggregation, or any operation that processes items sequentially.
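A three-stage pipeline might look like this sketch. The stage names and the sample input are illustrative; each stage accepts any iterable and can be tested on its own.

```python
def clean_records(lines):
    """Stage 1: strip whitespace and skip blank lines."""
    for line in lines:
        line = line.strip()
        if line:
            yield line


def parse_numbers(records):
    """Stage 2: convert each record to an int, skipping non-numeric ones."""
    for record in records:
        try:
            value = int(record)
        except ValueError:
            continue                  # skip records that are not integers
        yield value


def only_even(numbers):
    """Stage 3: keep even values."""
    for n in numbers:
        if n % 2 == 0:
            yield n


raw = ["10\n", "  \n", "abc\n", "7\n", "-4\n", "22\n"]
pipeline = only_even(parse_numbers(clean_records(raw)))  # nothing has run yet
print(list(pipeline))  # [10, -4, 22]
```

Each item travels through all three stages before the next one is read, so no intermediate lists are built.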

"The power of generators isn't in any single use case—it's in their composability, the way they let you build complex data flows from simple, understandable pieces."

🌐 API Pagination

When consuming paginated APIs that return data in chunks, generators provide an elegant abstraction. The generator handles the pagination logic—making requests, extracting data, and requesting the next page—while yielding individual items. Consumers iterate naturally without worrying about page boundaries or request management.
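A sketch of that abstraction. The API here is entirely hypothetical: `fetch_page` reads from an in-memory dictionary instead of making HTTP requests, and the `items`/`next_page` field names are assumptions, not any real service's format.

```python
# Hypothetical in-memory stand-in for a paginated HTTP API; a real client
# would request each page over the network and parse the response body.
FAKE_PAGES = {
    1: {"items": ["a", "b"], "next_page": 2},
    2: {"items": ["c", "d"], "next_page": 3},
    3: {"items": ["e"], "next_page": None},
}
requested_pages = []  # records which pages were actually fetched


def fetch_page(page):
    """Hypothetical API call returning one page of results."""
    requested_pages.append(page)
    return FAKE_PAGES[page]


def iter_items(start_page=1):
    """Yield individual items, fetching the next page only when needed."""
    page = start_page
    while page is not None:
        response = fetch_page(page)
        for item in response["items"]:
            yield item
        page = response["next_page"]


items = iter_items()
print(next(items), requested_pages)  # a [1]  (only the first page was fetched)
print(list(items))                   # ['b', 'c', 'd', 'e']
```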

⚡ Performance Optimization

In performance-critical code, generators can reduce overhead by eliminating intermediate data structures. When processing data through multiple transformations, a generator pipeline avoids creating temporary lists between stages. This reduces memory allocations, garbage collection pressure, and cache misses, potentially improving performance significantly.

Generator Expressions: Concise Syntax

For simple cases, generator expressions provide a compact alternative to full generator functions. Syntactically similar to list comprehensions but using parentheses instead of brackets, generator expressions create generators in a single line. This conciseness makes them ideal for simple transformations, filtering, or anywhere you'd use a list comprehension but want lazy evaluation.

The syntax follows the pattern: (expression for item in iterable if condition). The expression is evaluated for each item that passes the optional condition, yielding results one at a time. Generator expressions can be nested, though readability suffers beyond simple cases. They're particularly useful as arguments to functions that consume iterables, where the parentheses can be omitted if they're the only argument.
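A few generator expressions illustrating the syntax and the parentheses rule (the word list is arbitrary sample data):

```python
words = ["generator", "yield", "iterator", "lazy", "pipeline"]

# Parenthesized generator expression with a filter clause
long_words = (w.upper() for w in words if len(w) > 5)
print(next(long_words))   # GENERATOR

# As the sole argument to a function, the extra parentheses can be dropped
total_chars = sum(len(w) for w in words)
print(total_chars)        # 34

# With more than one argument, the parentheses are required
print(sorted((w for w in words if "e" in w), key=len))
# ['yield', 'iterator', 'pipeline', 'generator']
```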

Performance-wise, a generator expression is compiled into an anonymous generator function behind the scenes, so it runs at roughly the same speed as an equivalent hand-written generator function; its advantage is brevity, not raw speed. However, generator expressions are limited to a single expression: they can't contain complex logic or multiple statements, or maintain additional state beyond the iteration variables. For anything beyond simple transformations, full generator functions provide the necessary flexibility.

Advanced Patterns and Techniques

Beyond basic iteration, generators support sophisticated patterns that enable complex behaviors. These advanced techniques unlock capabilities that make generators suitable for concurrent programming, event handling, and cooperative multitasking scenarios.

Bidirectional Communication with send()

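The send() method transforms generators from simple iterators into coroutines capable of receiving values. When you call generator.send(value), that value becomes the result of the paused yield expression inside the generator, and send() returns the next value the generator yields. A fresh generator must first be advanced to its first yield with next() (or send(None)); sending any other value to an unstarted generator raises TypeError. This enables a ping-pong communication pattern where the generator and its caller exchange information back and forth. Historically, this capability laid the groundwork for Python's async/await syntax, whose coroutines are built on the same suspend-and-resume machinery as generators.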
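A classic illustration is a running average that receives numbers through `send()`. The `running_average` name is hypothetical; note the priming `next()` call before the first `send()`.

```python
def running_average():
    """Coroutine-style generator: receives numbers via send(), yields the mean so far."""
    total = 0.0
    count = 0
    average = None
    while True:
        value = yield average   # pauses here; send(value) resumes with value
        total += value
        count += 1
        average = total / count


avg = running_average()
next(avg)              # prime it: run to the first yield (returns None)
print(avg.send(10))    # 10.0
print(avg.send(20))    # 15.0
print(avg.send(30))    # 20.0
```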

Exception Handling with throw()

Generators can receive exceptions from the outside via the throw() method, which raises the specified exception at the point where the generator is paused. This allows external code to signal error conditions into the generator, which can catch and handle them or allow them to propagate. Combined with try/finally blocks inside generators, this enables robust resource cleanup even when generators are terminated abnormally.
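The sketch below shows both halves: `throw()` delivers a `ValueError` that the generator handles and recovers from, and `close()` raises `GeneratorExit` at the paused yield so the `finally` block runs. The `guarded_worker` name and the reset-to-100 recovery are purely illustrative.

```python
log = []


def guarded_worker():
    """Yield work items; recover from ValueError and always run cleanup."""
    try:
        item = 0
        while True:
            try:
                yield item
                item += 1
            except ValueError as exc:
                log.append(f"recovered from: {exc}")
                item = 100          # illustrative recovery: jump to a new range
    finally:
        log.append("cleanup ran")


worker = guarded_worker()
print(next(worker))                           # 0
print(next(worker))                           # 1
print(worker.throw(ValueError("bad input")))  # 100: handled inside, runs to the next yield
worker.close()                                # GeneratorExit inside; the finally block runs
print(log)   # ['recovered from: bad input', 'cleanup ran']
```

`GeneratorExit` derives from `BaseException`, so the `except ValueError` clause does not swallow it and the generator closes cleanly.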

"Advanced generator patterns blur the line between data structures and control flow, creating abstractions that are simultaneously iterators, state machines, and communication channels."

Delegation with yield from

The yield from syntax allows a generator to delegate part of its operations to another generator. This isn't just syntactic sugar—it establishes a transparent bidirectional channel between the delegating generator and its subgenerator. Values, exceptions, and return values all flow through this channel, enabling clean composition of generators without manual forwarding logic.
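A sketch of that transparent channel. Both generators are hypothetical: `send()` calls on the outer `delegator` reach `accumulator` directly, and the subgenerator's `return` value becomes the value of the `yield from` expression.

```python
def accumulator():
    """Subgenerator: yields a running total; returns it when sent None."""
    total = 0
    while True:
        value = yield total
        if value is None:
            return total            # becomes the value of the `yield from` expression
        total += value


def delegator():
    """Delegating generator: yield from forwards next(), send() and throw()."""
    result = yield from accumulator()
    yield f"final: {result}"


d = delegator()
print(next(d))        # 0 (comes straight from accumulator)
print(d.send(5))      # 5 (send() is forwarded to accumulator)
print(d.send(7))      # 12
final = d.send(None)  # accumulator returns 12; delegator resumes
print(final)          # final: 12
```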

Common Pitfalls and Best Practices

Despite their elegance, generators introduce subtleties that can surprise developers unfamiliar with their behavior. Understanding common pitfalls helps you avoid debugging sessions and write more robust code.

Single-use nature: Generators are exhausted after one complete iteration. Unlike lists that can be iterated multiple times, once a generator raises StopIteration, it's done forever. Attempting to iterate again yields nothing. If you need multiple passes over data, either recreate the generator each time or materialize it into a list when necessary.

Debugging challenges: The lazy evaluation that makes generators efficient also makes them harder to debug. Values don't exist until requested, so you can't easily inspect the "contents" of a generator. When debugging, consider materializing a small portion with list(islice(generator, 10)) to examine the first few values without exhausting the entire generator; keep in mind that those values are consumed, so the generator resumes after them.
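Both pitfalls in a short sketch: a second pass over an exhausted generator yields nothing, and values peeked with `islice()` can be put back in front with `itertools.chain()` so nothing is lost. The `squares` generator is just sample code.

```python
from itertools import chain, islice


def squares(n):
    """Yield 0, 1, 4, ... for the first n integers."""
    for i in range(n):
        yield i * i


gen = squares(5)
print(list(gen))   # [0, 1, 4, 9, 16]
print(list(gen))   # [] because the generator is already exhausted
gen = squares(5)   # calling the function again gives a fresh generator

# Debugging: peek at a few values, then stitch them back in front
big = squares(1_000_000)
preview = list(islice(big, 3))   # consumes the first three values
print(preview)                   # [0, 1, 4]
big = chain(preview, big)        # re-attach them so no data is lost
print(list(islice(big, 5)))      # [0, 1, 4, 9, 16]
```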

State side effects: Because generators maintain state between calls, they can create unexpected behaviors if that state includes mutable objects or external resources. Be cautious about generators that modify global state, maintain open file handles, or depend on external conditions that might change between yields.

"The hardest bugs in generator code come from forgetting they're stateful—they remember everything that happened before, and that memory can surprise you."

Performance misconceptions: While generators save memory, they're not always faster. The overhead of function calls and state management can make generators slower than list operations for small datasets that fit comfortably in memory. Profile before optimizing, and remember that generators optimize for memory first, speed second.

Integration with Python's Ecosystem

Generators integrate seamlessly with Python's standard library and third-party ecosystem. The itertools module provides a rich collection of lazy iterator building blocks for efficient iteration patterns. Functions like chain(), cycle(), repeat(), and combinations() return iterators that behave just like generators and compose with your own generator functions to create powerful data processing pipelines.

The functools module complements generators with tools like reduce() that consume iterables efficiently. The operator module provides function versions of Python's operators, useful in generator expressions and functional pipelines. Together, these modules enable a functional programming style where generators serve as the primary data abstraction.
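A short sketch mixing a hypothetical `evens()` generator with the itertools functions named above, plus `functools.reduce()` and `operator.mul` consuming a lazy slice:

```python
import operator
from functools import reduce
from itertools import chain, combinations, cycle, islice, repeat


def evens():
    """Hypothetical infinite generator of even numbers: 0, 2, 4, ..."""
    n = 0
    while True:
        yield n
        n += 2


print(list(islice(evens(), 4)))                 # [0, 2, 4, 6]
print(list(chain([1, 3], islice(evens(), 2))))  # [1, 3, 0, 2]
print(list(islice(cycle("AB"), 5)))             # ['A', 'B', 'A', 'B', 'A']
print(list(repeat("x", 3)))                     # ['x', 'x', 'x']
print(list(combinations("abc", 2)))             # [('a', 'b'), ('a', 'c'), ('b', 'c')]

# reduce() consumes any iterable, including a lazy slice of an infinite generator
product = reduce(operator.mul, islice(evens(), 1, 4))  # 2 * 4 * 6
print(product)                                  # 48
```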

Modern Python frameworks increasingly embrace generators for streaming responses, asynchronous iteration, and data pipelines. Web frameworks use generators to stream HTTP responses without buffering entire payloads. Data processing libraries like Pandas can iterate over large datasets in chunks using generator-like interfaces. Understanding generators makes you more effective with these tools.

When to Choose Generators Over Alternatives

Choosing between generators and alternatives depends on your specific requirements. Generators excel when memory efficiency matters, when processing large or infinite sequences, or when building data pipelines. They're ideal for one-pass algorithms where you process each item once and move on.

Lists remain appropriate for small collections that you'll access multiple times, when you need random access to elements, or when you'll modify the collection. Lists support indexing, slicing, and in-place modifications that generators cannot provide. If your dataset fits comfortably in memory and you need these capabilities, lists are the simpler choice.

Iterators provide a middle ground—they're one-pass like generators but can be implemented as classes with full object-oriented features. Choose custom iterator classes when you need complex state management, multiple methods, or integration with class hierarchies. Generators are essentially lightweight iterators optimized for the common case of sequential value production.
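To make the comparison concrete, here is the same countdown written both ways. The names are illustrative; the class keeps its state in explicit attributes and can offer extra methods, while the generator lets Python manage the state.

```python
class Countdown:
    """Class-based iterator: state lives in explicit attributes."""

    def __init__(self, start):
        self.current = start

    def __iter__(self):
        return self

    def __next__(self):
        if self.current <= 0:
            raise StopIteration
        value = self.current
        self.current -= 1
        return value

    def remaining(self):
        """An extra method, which a plain generator cannot offer directly."""
        return max(self.current, 0)


def countdown(start):
    """Generator equivalent: Python keeps track of the state for us."""
    while start > 0:
        yield start
        start -= 1


it = Countdown(3)
print(next(it), it.remaining())  # 3 2
print(list(it))                  # [2, 1]
print(list(countdown(3)))        # [3, 2, 1]
```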

Real-World Applications

In production systems, generators solve concrete problems across diverse domains. Data engineering pipelines use generators to process streaming data from Kafka or other message queues, transforming millions of events per second with constant memory usage. The generator pattern allows each processing stage to operate independently, improving maintainability and enabling parallel processing when appropriate.

Web scraping applications leverage generators to crawl websites efficiently, yielding discovered URLs or extracted data without storing entire site structures in memory. Combined with asynchronous I/O, generator-based crawlers can handle thousands of concurrent requests while maintaining clean, readable code.

Scientific computing uses generators for processing large datasets that don't fit in memory—genomic sequences, astronomical observations, or sensor data. By streaming data through analysis pipelines, researchers can work with datasets that would otherwise require specialized big data infrastructure.

Machine learning workflows employ generators for batch processing during training. Instead of loading entire training sets into memory, generators yield batches of data on demand, enabling training on datasets larger than available RAM. This pattern is so common that frameworks like TensorFlow and PyTorch provide generator-compatible data loading APIs.

Performance Considerations and Optimization

While generators optimize memory usage, their performance characteristics deserve attention. The function call overhead and state management introduce small per-iteration costs. For tight inner loops processing millions of simple items, this overhead can accumulate. In such cases, consider batching—having generators yield lists of items rather than individual items, reducing the per-item overhead while maintaining memory benefits.
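A minimal batching helper, written as a sketch. Python 3.12 added `itertools.batched()`, which yields tuples; this hand-written `batched` yields lists and also works on older versions.

```python
from itertools import islice


def batched(iterable, size):
    """Yield lists of up to `size` items taken from any iterable."""
    if size < 1:
        raise ValueError("size must be at least 1")
    iterator = iter(iterable)
    while True:
        batch = list(islice(iterator, size))  # at most `size` items in memory
        if not batch:
            return
        yield batch


print(list(batched(range(7), 3)))  # [[0, 1, 2], [3, 4, 5], [6]]
```

The consumer then pays the per-iteration generator overhead once per batch rather than once per item, while memory stays bounded by the batch size.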

Generator expressions and equivalent generator functions perform about the same, since a generator expression is compiled into an anonymous generator function. When the logic fits in a single readable expression, prefer a generator expression for brevity. For complex logic, the readability and maintainability of a named generator function win.

Chaining multiple generators creates a pipeline where each value passes through all stages. While elegant, long chains can accumulate overhead. Profile your pipelines, and consider consolidating stages when performance becomes critical. Sometimes a single generator doing multiple transformations outperforms a chain of simple generators.

Memory access patterns matter too. Generators that yield data with good locality of reference (accessing nearby memory addresses) can benefit from CPU caching, partially offsetting their overhead. Conversely, generators that jump around memory unpredictably may suffer cache misses, reducing performance below equivalent list-based code.

Testing and Documentation

Testing generator code requires different approaches than testing regular functions. Since generators are lazy, you can't simply compare their "output" to expected values—you must iterate through them. Testing frameworks should consume generator output, typically by converting to lists for comparison, or by verifying that each yielded value matches expectations.
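A sketch of such tests, written as plain pytest-style functions (pytest would discover the `test_` functions automatically; the `__main__` block also runs them without it). The Fibonacci generator under test is sample code.

```python
from itertools import islice


def fibonacci():
    """Infinite generator under test."""
    a, b = 0, 1
    while True:
        yield a
        a, b = b, a + b


def test_first_values():
    # Bound the infinite generator before materializing it for comparison
    assert list(islice(fibonacci(), 6)) == [0, 1, 1, 2, 3, 5]


def test_fresh_generator_starts_over():
    gen = fibonacci()
    assert next(gen) == 0
    assert next(gen) == 1
    assert next(fibonacci()) == 0    # a new call starts from the beginning


def test_finite_generator_stops():
    gen = (x for x in [1])
    assert next(gen) == 1
    try:
        next(gen)
    except StopIteration:
        pass
    else:
        raise AssertionError("expected StopIteration")


if __name__ == "__main__":
    for test in (test_first_values, test_fresh_generator_starts_over,
                 test_finite_generator_stops):
        test()
    print("all generator tests passed")
```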

Documenting generators should clearly indicate their lazy nature and single-use behavior. Specify what each generator yields, any exceptions it might raise, and whether it can be infinite. If the generator maintains state or has side effects, document these explicitly. Good documentation prevents consumers from making incorrect assumptions about generator behavior.

When writing docstrings for generator functions, consider including examples that show typical usage patterns. Demonstrate how to consume the generator, how to limit infinite generators, and how to handle exceptions. These examples serve as both documentation and informal tests of your generator's interface.

Frequently Asked Questions

Can generators be used multiple times?

No, generators are single-use iterators. Once exhausted, they cannot be reset or reused. If you need to iterate multiple times, you must either call the generator function again to create a new generator instance, or materialize the results into a list if the data fits in memory. This single-use nature is fundamental to how generators maintain state efficiently.

What's the difference between yield and return?

The yield keyword produces a value and suspends the function, preserving its state for later resumption. The return keyword terminates the function completely, optionally providing a value that becomes the StopIteration exception's value. A function with yield is a generator function; without yield, it's a regular function. You can use both in the same generator function—yield for producing values, return to signal completion with a final value.

Are generators slower than lists?

For small datasets, generators can be slightly slower due to function call overhead and state management. However, for large datasets, generators are often faster because they avoid the upfront cost of creating and populating a list. The real advantage is memory efficiency—generators can process datasets that would exhaust memory if materialized as lists. Profile your specific use case to determine which approach is optimal.

How do I debug generator code?

Debugging generators requires different techniques than regular functions. Use list(itertools.islice(generator, n)) to materialize and inspect the first n values without exhausting the rest of the generator; note that the inspected values are consumed. Add print statements or logging inside the generator to observe execution flow. Debuggers can step through generator code, but remember that execution only advances when values are requested. For complex generators, consider writing unit tests that verify yielded values match expectations.

Can generators be pickled or serialized?

Generator objects generally cannot be pickled or serialized because they contain execution state that's difficult to capture. Generator functions themselves can be pickled if they're defined at module level, but active generator instances with partially executed state typically cannot. If you need to serialize iteration state, consider implementing a custom iterator class with explicit state variables that can be pickled, or redesign your approach to avoid needing serialization.
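A quick check of this in CPython: pickling a partially executed generator raises `TypeError`. For a finite generator, one simple workaround is to pickle the values it has left rather than the generator itself. The `numbers` generator is sample code.

```python
import pickle


def numbers():
    """Small generator used only for this illustration."""
    yield 1
    yield 2
    yield 3


gen = numbers()
next(gen)                      # partially executed: paused after yielding 1

try:
    pickle.dumps(gen)
    pickled = True
except TypeError as exc:       # CPython refuses to pickle generator objects
    pickled = False
    print("cannot pickle:", exc)

# Workaround for a finite generator: pickle the values it has left
remaining = pickle.loads(pickle.dumps(list(gen)))
print(remaining)               # [2, 3]
```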

Sponsor message — This article is made possible by Dargslan.com, a publisher of practical, no-fluff IT & developer workbooks.
